DreamGym: Scalable LLM Experience Synthesis

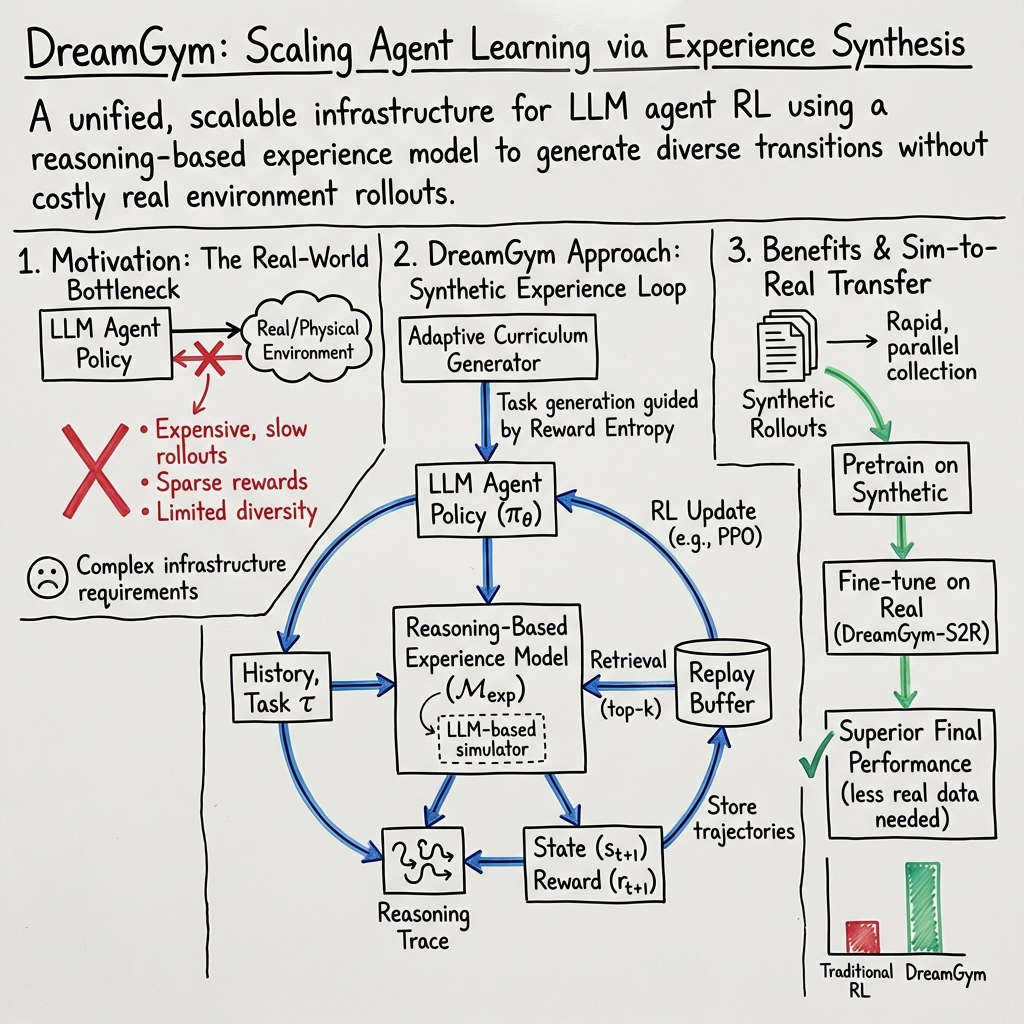

- DreamGym Framework is a unified infrastructure that enables reinforcement learning using LLM-generated synthetic experiences, reducing reliance on costly real-environment rollouts.

- It employs a reasoning-based experience model to create diverse, high-quality state transitions with detailed chain-of-thought feedback.

- Adaptive curriculum task generation and an evolving replay buffer drive sample efficiency, policy generalization, and effective sim-to-real transfer.

DreamGym Framework refers to a unified, scalable infrastructure for LLM agent reinforcement learning (RL) via experience synthesis, introduced in "Scaling Agent Learning via Experience Synthesis" (Chen et al., 5 Nov 2025). Unlike traditional RL frameworks that interact with physical or simulated environments, DreamGym employs a reasoning-based experience model to generate diverse, high-quality state transitions and feedback, so that RL agents can be trained without costly real-environment rollouts. DreamGym further augments agent learning with adaptive curriculum-based task generation and an experience replay buffer that co-evolves with the agent and the experience model.

1. Foundations and Motivation

DreamGym addresses the primary obstacles to RL for autonomous LLM agents: expensive and slow environment rollouts, reward sparsity, limited task diversity, unreliable feedback signals, and complex infrastructure requirements. Standard RL algorithms (PPO, GRPO, etc.) struggle in domains such as complex web interaction or knowledge-grounded reasoning, where real-environment rollouts are prohibitively expensive and reward signals are sparse or weakly defined. DreamGym makes experience collection scalable in two ways. It abstracts environment dynamics into meta-representational (textual) state transitions, and it synthesizes trajectories with LLM-powered stepwise reasoning. The resulting training regime gives RL agents dense, informative feedback and curriculum-adaptive task pools, improving both sample efficiency and generalization.

2. Architectural Components and Workflow

DreamGym comprises three tightly coupled modules:

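- Reasoning-Based Experience Model ($\mathcal{M}_{\text{exp}}$): an LLM-based simulator that abstracts the environment as discrete textual states. At each agent step, $\mathcal{M}_{\text{exp}}$ outputs a state transition and a detailed reasoning trace. Each output is conditioned on the interaction history, the current task $\tau$, and the top-$k$ set of similar transitions retrieved from the replay buffer.

  - Transition generation:

    $$(R_t,\ s_{t+1},\ r_{t+1}) \sim \mathcal{M}_{\text{exp}}\big(\cdot \mid H_t,\ \mathcal{D}_k,\ \tau\big), \qquad H_t = \{(s_i, a_i)\}_{i=0}^{t}, \quad \mathcal{D}_k = \{d_j\}_{j=1}^{k}$$

    where $R_t$ denotes the chain-of-thought reasoning trace, $H_t$ is the interaction history, and $d_j$ are the retrieved demonstration context snippets.

  - Supervised fine-tuning objective aligning $\mathcal{M}_{\text{exp}}$ with expert trajectories. It trains the model to produce the reasoning trace first and then the next state and reward:

    $$\mathcal{L}_{\text{SFT}} = \mathbb{E}\Big[-\log \mathcal{M}_{\text{exp}}\big(R_t^{*} \mid H_t, \mathcal{D}_k, \tau\big) - \log \mathcal{M}_{\text{exp}}\big(s_{t+1}, r_{t+1} \mid R_t^{*}, H_t, \mathcal{D}_k, \tau\big)\Big]$$

    where the expectation is over expert transitions and $R_t^{*}$ is the reference reasoning trace paired with each transition.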

- Experience Replay Buffer: a buffer seeded with offline demonstrations and continuously updated with synthetic and agent-generated trajectories. Top-$k$ retrieval from the buffer grounds the experience model's predictions in factual context, mitigates hallucination, and increases the diversity of learning experiences.

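- Adaptive Curriculum Task Generator: a task generation engine guided by reward entropy. It favors tasks of intermediate difficulty, meaning tasks the agent sometimes solves and sometimes fails, which are the most informative for learning. It also injects challenging variations of those tasks into the task pool.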
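The reward-entropy criterion can be illustrated with a minimal Python sketch under stated assumptions:

- Each task has a small group of rollouts with binary (0/1) success rewards.
- "Reward entropy" is read as the binary entropy of each task's empirical success rate. The paper's exact definition may differ.
- The names `reward_entropy` and `select_curriculum_tasks` are hypothetical.

Generating new task variations, which DreamGym does with an LLM, is not shown.

```python
import math


def reward_entropy(rewards: list[float]) -> float:
    """Binary entropy (bits) of the empirical success rate over one task's rollout group.

    Assumes every reward is 0 (failure) or 1 (success).
    """
    if not rewards:
        return 0.0
    p = sum(rewards) / len(rewards)
    if p in (0.0, 1.0):
        return 0.0  # always solved or always failed: no learning signal
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))


def select_curriculum_tasks(task_rewards: dict[str, list[float]], top_m: int) -> list[str]:
    """Hypothetical selection rule: keep the top_m tasks with the highest reward entropy."""
    return sorted(task_rewards, key=lambda t: reward_entropy(task_rewards[t]), reverse=True)[:top_m]


# Toy rollout outcomes per task (1 = success, 0 = failure).
rollouts = {
    "buy-red-shoes": [1, 1, 1, 1],       # always solved: too easy
    "find-return-policy": [1, 0, 1, 0],  # solved half the time: most informative
    "compare-3-laptops": [0, 0, 0, 0],   # never solved: too hard
    "book-flight": [1, 0, 0, 0],         # occasionally solved
}
print(select_curriculum_tasks(rollouts, top_m=2))  # ['find-return-policy', 'book-flight']
```

Tasks the agent always solves or always fails score zero and drop out. A task solved half the time scores the maximum of 1 bit. Any criterion that peaks at a 50% success rate would rank these four tasks the same way; reward variance is one example.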

In the resulting training loop, the agent policy acts on synthetic tasks produced by the task generator. The experience model emits the resulting state transitions and rewards. Both the agent and experience model parameters are then updated using data from the replay buffer.

3. Meta-Representational State Space and Reasoning

DreamGym eschews pixel-level, physics-based simulation in favor of a meta-representational, discrete state space for agent interactions. States are compact but expressive textual encodings of the relevant information (e.g., HTML DOM fragments for web tasks, tool outputs for knowledge tasks). Each stepwise transition is justified by LLM chain-of-thought reasoning conditioned on the context and on similar trajectories. This improves factual correctness and reduces error propagation across steps. The design yields learning signals that are richer and more stable than the noisy or sparse rewards of standard RL environments. The experience model architecture thus supports curriculum learning, cross-task generalization, and efficient policy transfer.
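Here is a minimal sketch of how an experience-model prompt might be assembled from three inputs: the task, the interaction history, and the top-k most similar buffered transitions. Three parts are illustrative assumptions rather than DreamGym's actual design:

- the `Transition` record;
- the bag-of-words cosine similarity used for retrieval;
- the prompt layout.

A real system would more likely use learned embeddings for retrieval.

```python
import math
from collections import Counter
from dataclasses import dataclass


@dataclass
class Transition:
    """Hypothetical buffer record with textual states (e.g. DOM fragments, tool outputs)."""
    state: str
    action: str
    next_state: str
    reward: float


def bag_of_words(text: str) -> Counter:
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k_similar(buffer: list[Transition], state: str, action: str, k: int) -> list[Transition]:
    """Rank buffered transitions by similarity of (state, action) text to the query."""
    query = bag_of_words(f"{state} {action}")
    ranked = sorted(buffer, key=lambda tr: cosine(query, bag_of_words(f"{tr.state} {tr.action}")), reverse=True)
    return ranked[:k]


def build_prompt(task: str, history: list[tuple[str, str]], state: str, action: str,
                 demos: list[Transition]) -> str:
    """Assemble the conditioning context: task, retrieved demos, history, current step."""
    lines = [f"Task: {task}", "Similar transitions:"]
    for d in demos:
        lines.append(f"  [{d.state}] --{d.action}--> [{d.next_state}] (reward={d.reward})")
    lines.append("History:")
    for s, a in history:
        lines.append(f"  [{s}] --{a}-->")
    lines.append(f"Current state: {state}")
    lines.append(f"Action: {action}")
    lines.append("Reason step by step, then output the next state and reward.")
    return "\n".join(lines)


buffer = [
    Transition("search page query box", "type laptop", "results page 20 laptops", 0.0),
    Transition("cart page 1 item", "click checkout", "payment form", 0.0),
    Transition("results page 20 laptops", "click first result", "product page laptop", 0.0),
]
demos = top_k_similar(buffer, "results page 12 laptops", "click first result", k=1)
prompt = build_prompt("buy a laptop", [("search page query box", "type laptop")],
                      "results page 12 laptops", "click first result", demos)
print(prompt)
```

In this example, the buffered transition from a nearly identical results page is retrieved first. The model therefore sees a directly relevant precedent before it reasons about the next state.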

4. Learning Dynamics, Guarantees, and Sim-to-Real Transfer

DreamGym supports any RL algorithm (PPO, GRPO, etc.) that consumes agent trajectories in the standard format. Training can proceed exclusively on synthetic rollouts, which can be collected rapidly and in parallel.
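Because the agent consumes ordinary trajectories, the RL update does not need to know that rewards were synthesized. As one illustration, GRPO scores each rollout relative to the other rollouts sampled for the same task. The sketch below computes these group-relative advantages for a hypothetical `Trajectory` record. The clipped policy-ratio objective and KL penalty of a full GRPO update are omitted.

```python
from dataclasses import dataclass, field
from statistics import mean, pstdev


@dataclass
class Trajectory:
    """Hypothetical trajectory record: the RL update does not care where rewards came from."""
    task_id: str
    reward: float  # final reward, e.g. emitted by the experience model
    steps: list[tuple[str, str]] = field(default_factory=list)  # (textual state, action) pairs


def group_relative_advantages(group: list[Trajectory], eps: float = 1e-6) -> list[float]:
    """GRPO-style advantages: standardize each reward within its task's rollout group."""
    rewards = [traj.reward for traj in group]
    mu, sigma = mean(rewards), pstdev(rewards)  # population std here; implementations vary
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four synthetic rollouts of one task: two successes, two failures.
group = [Trajectory("find-return-policy", r) for r in (1.0, 0.0, 1.0, 0.0)]
advantages = group_relative_advantages(group)

# A group with identical rewards (zero reward entropy) yields all-zero advantages.
flat = group_relative_advantages([Trajectory("too-easy", 1.0) for _ in range(4)])
print(advantages, flat)
```

The second call shows why avoiding always-solved or never-solved tasks matters under this estimator. A group with identical rewards produces zero advantages and therefore no policy-gradient signal.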

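The framework provides a lower bound on policy improvement that trades off the surrogate gain measured in synthetic rollouts against the experience model's error. The bound assumes that errors in reward estimation and transition modeling are bounded. Under that assumption, the real-environment improvement of a policy update is at least the synthetic surrogate gain minus penalty terms. These penalties grow with the reward error, the transition error, and the policy trust-region metric (the KL divergence between successive policies). Consequently, gains in synthetic rollouts carry over to the real environment only when the experience model is accurate and each policy update stays within its trust region.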

Sim-to-real transfer is realized via “DreamGym-S2R”: agents pretrained solely on synthetic rollouts are then fine-tuned on real-environment interactions. This substantially reduces the amount of real-world data required, while generally matching or exceeding the final performance of RL trained only on real data.

5. Empirical Evaluation and Analysis

DreamGym was evaluated on benchmarks not traditionally RL-ready as well as on RL-ready environments:

- WebArena: realistic multi-hop web navigation with sparse rewards. DreamGym improved over all baselines by more than 30%, making RL feasible on tasks previously intractable because of rollout cost.

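- WebShop, ALFWorld: using only synthetic data, DreamGym performed comparably to classic RL approaches (GRPO, PPO) trained on 80K real interactions, trailing them by about 1 to 6 points. DreamGym-S2R surpassed the real-only GRPO baselines and matched or exceeded the real-only PPO baselines. It did so while using 16x less real data (5K vs. 80K interactions).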

Ablations indicate that removing top-$k$ buffer retrieval, explicit reasoning traces, or adaptive curriculum features leads to marked performance drops, confirming the contribution of each component.

| Algorithm | WebShop | ALFWorld | WebArena |
|---|---|---|---|
| SFT (20K real) | 35.1 | 68.0 | 5.5 |
| GRPO (80K real) | 65.0 | 70.9 | 6.1 |
| DreamGym (0 real) | 63.9 | 66.3 | 9.1 |
| DreamGym-S2R (5K real) | 75.0 | 75.9 | 9.7 |
| PPO (80K real) | 64.2 | 72.9 | 4.8 |
| DreamGym, PPO (0 real) | 58.1 | 70.8 | 10.9 |
| DreamGym-S2R, PPO (5K real) | 63.9 | 73.3 | 10.9 |
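The WebArena and data-efficiency figures quoted in this section can be checked against the table. This snippet only recomputes relative differences from the reported WebArena scores and the stated real-data budgets. It introduces no new results.

```python
# WebArena scores copied from the table above.
webarena = {
    "SFT (20K real)": 5.5,
    "GRPO (80K real)": 6.1,
    "PPO (80K real)": 4.8,
    "DreamGym (0 real)": 9.1,
    "DreamGym, PPO (0 real)": 10.9,
}
baselines = ["SFT (20K real)", "GRPO (80K real)", "PPO (80K real)"]


def relative_gain(new: float, old: float) -> float:
    """Relative improvement of `new` over `old`, in percent."""
    return 100.0 * (new - old) / old


for method in ("DreamGym (0 real)", "DreamGym, PPO (0 real)"):
    gains = {b: round(relative_gain(webarena[method], webarena[b]), 1) for b in baselines}
    print(method, gains)

# Real-interaction budget of real-only RL vs. DreamGym-S2R.
real_data_ratio = 80_000 / 5_000
print(f"DreamGym-S2R uses {real_data_ratio:.0f}x less real data")
```

Every relative gain printed is above 30%. The smallest is DreamGym (0 real) versus GRPO, at about 49%. The real-data ratio between real-only RL (80K) and DreamGym-S2R (5K) is 16x.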

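These results suggest that, for RL agents driven by meta-representational experience synthesis, sample efficiency and policy generalization can exceed what purely real-environment training achieves. Synthetic-only training is already competitive with real-data RL. DreamGym-S2R matches or outperforms real-only RL while using a fraction of the real data.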

6. Extension, Generalization, and Prospective Impact

DreamGym’s experience synthesis framework generalizes across agent backbone architectures (e.g., Llama-3.1-8B, Qwen2.5-7B) and diverse task domains. Cross-domain transfer is achieved without environment-specific tuning, enabled by abstract state representations and curriculum-driven task design. Agents trained with DreamGym demonstrate increased stability, reduced sensitivity to spurious signals, and higher adaptability when fine-tuned in downstream real environments or new domains. Decoupling rollout collection from physical resource constraints makes RL for LLM agents scalable. It plausibly enables similar methodologies in other domains where direct grounded interaction is expensive or impractical.

7. Practical Usage Pseudocode (excerpt from paper)

```
Initialize agent policy π_θ; experience model M_exp; replay buffer D
For iteration in [1, ..., N]:
    Sample seed task set T
    For each task τ in T:
        s_0 ← initial state
        For step t = 0 to T_max:
            a_t ← π_θ(s_t)
            s_{t+1}, r_{t+1} ← M_exp(s_t, a_t, history, top-k D, τ)
            Store (s_t, a_t, s_{t+1}, r_{t+1}) in D
            If terminal: break
    Update π_θ via RL (PPO/GRPO) on newly collected {s, a, s', r}
    Update M_exp and curriculum set based on reward entropy
(Optional: At later stage, transfer π_θ to real environment and fine-tune)
```
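The following minimal, runnable Python sketch follows the same control flow and is intended only to make the loop concrete. Every concrete component is a hypothetical stand-in:

- `toy_experience_model` is a deterministic rule rather than an LLM producing reasoning traces.
- The policy is a tabular softmax rather than an LLM.
- Retrieval simply takes the most recent buffer entries.
- The RL step is REINFORCE with a group-mean baseline, a simplification rather than PPO or GRPO.
- The experience-model and curriculum updates are omitted.

```python
import math
import random
from collections import defaultdict

ACTIONS = ["search", "click", "buy"]
T_MAX = 5


def toy_experience_model(state, action, history, demos, task):
    """Hypothetical stand-in for M_exp: a deterministic rule instead of an LLM with reasoning.

    Returns (next_state, reward, done). `demos` mirrors the top-k conditioning in the
    pseudocode but is ignored by this toy rule; `history` is used to track progress.
    """
    step = len(history)
    target = task["target"]  # action sequence that solves this toy task
    if step < len(target) and action == target[step]:
        done = step + 1 == len(target)
        return f"{state}>{action}", (1.0 if done else 0.0), done
    return f"{state}>fail", 0.0, True


class TabularPolicy:
    """Toy stand-in for pi_theta: a softmax over ACTIONS for each (task name, step) key."""

    def __init__(self):
        self.logits = defaultdict(lambda: [0.0] * len(ACTIONS))

    def probs(self, key):
        exps = [math.exp(x) for x in self.logits[key]]
        total = sum(exps)
        return [e / total for e in exps]

    def act(self, key, rng):
        return rng.choices(range(len(ACTIONS)), weights=self.probs(key))[0]

    def reinforce(self, episode, advantage, lr=0.5):
        # Policy-gradient step: d log softmax(a) / d logits = one_hot(a) - probs.
        for key, a in episode:
            p = self.probs(key)
            for i in range(len(ACTIONS)):
                self.logits[key][i] += lr * advantage * ((1.0 if i == a else 0.0) - p[i])


def train(tasks, iterations=500, group_size=4, seed=0):
    rng = random.Random(seed)
    policy, buffer = TabularPolicy(), []  # buffer plays the role of D
    for _ in range(iterations):
        task = rng.choice(tasks)  # uniform sampling; DreamGym adapts the task set instead
        group = []
        for _ in range(group_size):
            state, history, episode, reward = "start", [], [], 0.0
            for t in range(T_MAX):
                key = (task["name"], t)
                a = policy.act(key, rng)
                demos = buffer[-3:]  # crude stand-in for top-k retrieval
                next_state, reward, done = toy_experience_model(state, ACTIONS[a], history, demos, task)
                buffer.append((state, ACTIONS[a], next_state, reward))  # store in D
                history.append((state, ACTIONS[a]))
                episode.append((key, a))
                state = next_state
                if done:
                    break
            group.append((episode, reward))
        # RL update: REINFORCE with a group-mean baseline (a simplification of GRPO).
        baseline = sum(r for _, r in group) / len(group)
        for episode, r in group:
            policy.reinforce(episode, r - baseline)
    return policy, buffer


def greedy_success(policy, task):
    """Roll out the most likely action at each step and report whether the task is solved."""
    state, history = "start", []
    for t in range(T_MAX):
        p = policy.probs((task["name"], t))
        action = ACTIONS[p.index(max(p))]
        next_state, reward, done = toy_experience_model(state, action, history, [], task)
        history.append((state, action))
        state = next_state
        if done:
            return reward == 1.0
    return False


TASKS = [
    {"name": "browse", "target": ["search", "click"]},
    {"name": "buy-item", "target": ["search", "click", "buy"]},
]
policy, buffer = train(TASKS)
print({task["name"]: greedy_success(policy, task) for task in TASKS})
```

Swapping in an LLM-backed experience model, a real retriever, and a PPO or GRPO trainer would not change the shape of this loop. Rollouts are still generated step by step against the experience model and stored in the buffer before each policy update.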

The DreamGym framework provides a principled basis for sample-efficient reinforcement learning via LLM-driven experience synthesis, curriculum-aligned task generation, and adaptive replay. Its methodological innovations extend RL agent training to settings previously limited by rollout cost, reward sparsity, and complex environment dynamics. They also set new baselines for generalization, sim-to-real transfer, and domain adaptation in RL for autonomous agents (Chen et al., 5 Nov 2025).