Dr. CaBot: AI in Medical Diagnostics

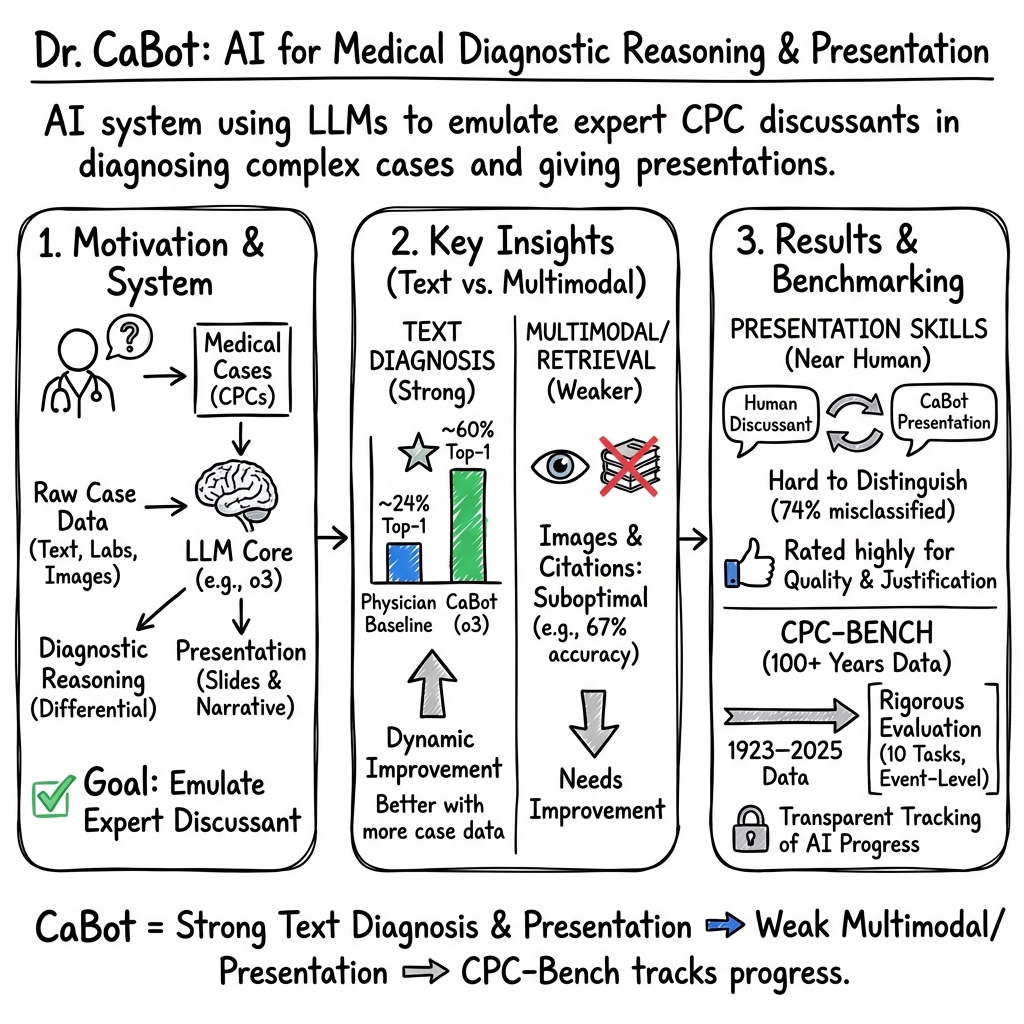

- Dr. CaBot is an AI discussant that emulates expert diagnostic reasoning and clinical presentations in medical case conferences.

- It builds on large language models that synthesize sequential clinical data; its underlying model (o3) achieves top-1 and top-10 diagnostic accuracies of 60% and 84%, respectively.

- Benchmarking via CPC-Bench validates its diagnostic and presentation quality while highlighting areas for improvement in multimodal integration and literature retrieval.

Dr. CaBot is an artificial intelligence discussant engineered to emulate expert diagnostic reasoning and presentation skills in medical case conferences, notably the New England Journal of Medicine Clinicopathological Conferences (CPCs). Built on a large language model (LLM), Dr. CaBot synthesizes case presentations and produces differential diagnoses along with written and slide-based presentations. These outputs are evaluated against physician-validated standards drawn from a century of CPC cases. Its deployment and benchmarking methodology represent a new paradigm for tracking the progress and limitations of medical AI (Buckley et al., 15 Sep 2025).

1. Architecture and Foundational Principles

Dr. CaBot uses leading LLMs, including OpenAI's o3, as its computational core. The system ingests raw case presentations, structured as a sequence of events that each reveal part of the clinical information, and outputs comprehensive written and slide-based discussant presentations. The architecture is designed to model the multifaceted reasoning of human experts: initial hypothesis generation, differential diagnosis, justification of test selection, and evidence-based narrative synthesis. Dr. CaBot's output is evaluated through physician annotation, event-level analysis, and comparison on CPC-Bench, a benchmark of 10 distinct text-based and multimodal diagnostic tasks.
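To make the sequential-event input concrete, here is a minimal sketch of how cumulative model inputs could be assembled so that, at event k, the model sees only events 1 through k. The `CaseEvent` schema, the function name, and the toy case are hypothetical illustrations rather than the system's actual data format, and the LLM call itself is omitted.

```python
from dataclasses import dataclass


@dataclass
class CaseEvent:
    """One step of a case presentation (hypothetical schema, not from the source)."""
    label: str  # e.g. "History", "Laboratory", "Imaging"
    text: str   # clinical information revealed at this step


def cumulative_contexts(events: list[CaseEvent]) -> list[str]:
    """Build one model input per event, each containing every event revealed so far.

    The input for event k holds events 1..k only, so the model never sees
    information that the case presentation has not yet disclosed.
    """
    contexts: list[str] = []
    seen: list[str] = []
    for event in events:
        seen.append(f"[{event.label}] {event.text}")
        contexts.append("\n".join(seen))
    return contexts


# Invented toy case for illustration only.
case = [
    CaseEvent("History", "A 54-year-old woman presents with fever and weight loss."),
    CaseEvent("Laboratory", "The erythrocyte sedimentation rate is elevated."),
    CaseEvent("Imaging", "Chest CT shows bilateral hilar lymphadenopathy."),
]

for k, context in enumerate(cumulative_contexts(case), start=1):
    # A real system would send `context` to the LLM and record its ranked
    # differential; this sketch only prints what the model would see.
    print(f"--- input at event {k} ---")
    print(context)
```

Keeping each input cumulative, rather than sending only the newest event, is what allows per-event accuracy to be compared as information accumulates.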

2. Diagnostic Reasoning and Accuracy

Dr. CaBot's text-based differential diagnosis was evaluated on 377 contemporary CPC cases. The o3 model achieves a top-1 diagnostic accuracy of 60% (95% CI: 55%–65%) and a top-10 accuracy of 84% (95% CI: 80%–87%). For context, 20 internal medicine physicians using electronic searches performed markedly lower: approximately 24% top-1 and 45% top-10 accuracy. Performance also benefits from stepwise diagnostic refinement. As case presentations unfold through sequential events, including history, laboratory, and imaging updates, the model's performance improves, with a 29-percentage-point gain between the first and fifth events. At each diagnostic touchpoint, the mean rank of the correct diagnosis is lower (better) than that of contemporary GPT-4o baselines.
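The mean rank of the correct diagnosis can be tracked per event roughly as sketched below. The function names and toy data are hypothetical, and two choices are assumptions rather than details from the source: a diagnosis missing from the list receives a penalty rank one past the end of the list, and matching is a simple case-insensitive string comparison instead of clinical adjudication.

```python
from statistics import mean


def rank_of_correct(differential: list[str], correct: str) -> int:
    """Return the 1-based rank of the correct diagnosis in a ranked differential.

    Assumed convention: a diagnosis missing from the list gets the penalty
    rank len(differential) + 1. Matching is exact after lowercasing, a
    simplification of how diagnoses would really be adjudicated.
    """
    normalized = [d.strip().lower() for d in differential]
    target = correct.strip().lower()
    if target in normalized:
        return normalized.index(target) + 1
    return len(differential) + 1


def mean_rank_by_event(differentials_by_event: list[list[list[str]]],
                       correct_dx: list[str]) -> list[float]:
    """Mean rank of the correct diagnosis after each event (lower is better).

    differentials_by_event[e][c] is the ranked differential for case c
    after event e; correct_dx[c] is that case's final diagnosis.
    """
    return [
        float(mean(rank_of_correct(diff, dx)
                   for diff, dx in zip(event_diffs, correct_dx)))
        for event_diffs in differentials_by_event
    ]


# Toy data: two cases observed after two events (illustrative only).
correct = ["sarcoidosis", "lymphoma"]
by_event = [
    [["tuberculosis", "lymphoma", "sarcoidosis"], ["tuberculosis", "sarcoidosis"]],
    [["sarcoidosis", "tuberculosis"], ["lymphoma", "tuberculosis"]],
]
print(mean_rank_by_event(by_event, correct))  # → [3.0, 1.0]
```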

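The next-test selection accuracy is 98% (95% CI: 96%–99%), indicating high reliability in recommending appropriate subsequent investigations, a critical skill in clinical reasoning. Diagnostic accuracy is measured with the standard top-$n$ metric, which counts a case as correct when the final diagnosis appears among the model's first $n$ ranked diagnoses:

$$
\text{Top-}n\ \text{accuracy} = \frac{1}{N}\sum_{i=1}^{N} \mathbb{1}\left[ r_i \le n \right]
$$

where $N$ is the number of cases and $r_i$ is the rank of the correct diagnosis in the differential for case $i$ ($r_i = \infty$ if it is absent). The metric is computed at each event to capture diagnostic progress as information accumulates.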
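Top-n accuracy translates directly into code. In this sketch the function name and the rank lists are hypothetical; `None` marks a case whose correct diagnosis does not appear in the differential at all, so it never counts as a hit for any n.

```python
from typing import Optional


def top_n_accuracy(ranks: list[Optional[int]], n: int) -> float:
    """Fraction of cases whose correct diagnosis is ranked within the top n.

    ranks[i] is the 1-based rank of the correct diagnosis for case i,
    or None if the diagnosis is absent from the differential.
    """
    if not ranks:
        raise ValueError("need at least one case")
    hits = sum(1 for r in ranks if r is not None and r <= n)
    return hits / len(ranks)


# Hypothetical ranks for five cases after the first and fifth events.
ranks_event1 = [None, 4, 12, 1, None]
ranks_event5 = [1, 1, 3, 1, 8]

for label, ranks in [("event 1", ranks_event1), ("event 5", ranks_event5)]:
    print(label, "top-1:", top_n_accuracy(ranks, 1),
          "top-10:", top_n_accuracy(ranks, 10))

# Change between events, expressed in percentage points.
gain_pp = 100 * (top_n_accuracy(ranks_event5, 1) - top_n_accuracy(ranks_event1, 1))
print(f"top-1 gain: {gain_pp:.0f} percentage points")
```

Reporting the change in percentage points (an absolute difference) rather than as a relative increase keeps event-to-event gains comparable across metrics with different baselines.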

3. Emulation of Expert Presentation Skills

Dr. CaBot is designed not only to generate differential diagnoses but also to produce written and slide-based clinical presentations that follow the conventions of expert CPC discussants. In blinded pairwise comparisons involving five internal medicine physicians and 27 cases (2023–2025), the source of the differential (human vs. CaBot) was misclassified in 46 of 62 trials (74%); physicians correctly identified the source in only 26% of trials. Physicians also rated CaBot's output more favorably on overall quality, justification of diagnoses, citation accuracy, and learner engagement. These results suggest near-equivalence to human discussants in narrative synthesis and diagnostic justification, and superiority on some quality dimensions.
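The reported rates follow directly from the trial counts. Because each trial is a two-way choice (human vs. CaBot), random guessing would be expected to attribute the source correctly about half the time, so correct attribution in roughly a quarter of trials falls below that level:

$$
\frac{46}{62} \approx 0.742 \;\Rightarrow\; 74\%\ \text{misclassified}, \qquad \frac{62 - 46}{62} = \frac{16}{62} \approx 0.258 \;\Rightarrow\; 26\%\ \text{correctly attributed}
$$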

4. Multimodal and Literature Retrieval Performance

While Dr. CaBot rivals and often exceeds physician performance on text-based reasoning tasks, its literature retrieval and image interpretation are comparatively weaker. On the NEJM Image Challenge and other visual differential diagnosis tasks, advanced models such as o3 and Gemini 2.5 Pro attain approximately 67% accuracy on image-only cases, with even lower accuracy on some radiology images. Without external retrieval mechanisms, Dr. CaBot's citation recall is suboptimal. Retrieval-augmented generation improves literature search performance but does not bring it to parity with text-based diagnostic accuracy.
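One plausible way to score citation recall, assumed here rather than taken from the source, is the fraction of reference citations that the model reproduces. The sketch below uses a crude, hypothetical string normalization for matching; a real pipeline would more likely match on stable identifiers such as DOIs or PubMed IDs. The example citations are placeholders, not real references.

```python
def normalize_citation(ref: str) -> str:
    """Crude matching key: lowercase, keep only letters and digits (hypothetical rule)."""
    return "".join(ch for ch in ref.lower() if ch.isalnum())


def citation_recall(generated: list[str], reference: list[str]) -> float:
    """Fraction of reference citations that also appear among the generated ones."""
    ref_keys = {normalize_citation(r) for r in reference}
    if not ref_keys:
        raise ValueError("reference list is empty")
    gen_keys = {normalize_citation(g) for g in generated}
    return len(ref_keys & gen_keys) / len(ref_keys)


# Placeholder citations (not real references).
reference = ["Author A et al., 2019", "Author B et al., 2020"]
generated = ["author a et al 2019", "Author C et al., 2021"]
print(citation_recall(generated, reference))  # → 0.5
```

In a retrieval-augmented setup, the `generated` list would be grounded in documents fetched from an external index rather than recalled from the model's parameters; the metric itself stays the same.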

A plausible implication is that further advancement in multimodal model integration and retrieval augmentation is necessary to address limitations in image-based and knowledge-intensive subtasks.

5. Benchmarking Methodology: CPC-Bench

CPC-Bench, curated from 7,102 CPCs (1923–2025) and 1,021 Image Challenges (2006–2025), provides a granular benchmark for 10 diagnostic and multimodal tasks. Each task is annotated by physicians at the event level, enabling analysis of performance as each new unit of clinical information is revealed. Benchmarking metrics include top-n accuracy, next-test selection, citation quality, image interpretation, and presentation scoring. Uncertainty is quantified with Clopper-Pearson confidence intervals and bootstrap estimates. CPC-Bench thus provides a rigorous, standardized framework for transparently tracking medical AI progress and comparing it with human performance across text, image, and retrieval domains.
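Both uncertainty methods named above can be sketched with the standard library alone. The exact Clopper-Pearson interval is found here by bisecting the binomial tail probabilities, and the percentile bootstrap resamples per-case 0/1 outcomes. Function names, the resample count, and the seed are illustrative assumptions, not the benchmark's actual code. The example reuses the 46-of-62 count from the blinded comparison purely as input data; the printed intervals are not figures reported in the source.

```python
import math
import random


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p), with terms computed in log space."""
    if k < 0:
        return 0.0
    if k >= n or p <= 0.0:
        return 1.0
    if p >= 1.0:
        return 0.0
    log_p, log_q = math.log(p), math.log1p(-p)
    log_n_fact = math.lgamma(n + 1)
    return sum(
        math.exp(log_n_fact - math.lgamma(i + 1) - math.lgamma(n - i + 1)
                 + i * log_p + (n - i) * log_q)
        for i in range(k + 1)
    )


def _bisect(f, target: float, increasing: bool, iters: int = 100) -> float:
    """Solve f(p) = target on [0, 1] for a monotone f by bisection."""
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = (lo + hi) / 2
        if (f(mid) < target) == increasing:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def clopper_pearson(k: int, n: int, alpha: float = 0.05) -> tuple[float, float]:
    """Exact two-sided Clopper-Pearson interval for a binomial proportion k/n."""
    # Lower bound: p at which P(X >= k) = alpha/2 (increasing in p).
    lower = 0.0 if k == 0 else _bisect(
        lambda p: 1.0 - binom_cdf(k - 1, n, p), alpha / 2, increasing=True)
    # Upper bound: p at which P(X <= k) = alpha/2 (decreasing in p).
    upper = 1.0 if k == n else _bisect(
        lambda p: binom_cdf(k, n, p), alpha / 2, increasing=False)
    return lower, upper


def bootstrap_ci(outcomes: list[int], n_resamples: int = 2000,
                 alpha: float = 0.05, seed: int = 0) -> tuple[float, float]:
    """Percentile bootstrap interval for the mean of per-case 0/1 outcomes."""
    rng = random.Random(seed)
    n = len(outcomes)
    # Resample cases with replacement and record each resample's mean.
    means = sorted(sum(rng.choices(outcomes, k=n)) / n for _ in range(n_resamples))
    lo_idx = int(alpha / 2 * n_resamples)
    hi_idx = int((1 - alpha / 2) * n_resamples) - 1
    return means[lo_idx], means[hi_idx]


# Example input: 46 of 62 trials (used only to exercise the functions).
print(clopper_pearson(46, 62))
print(bootstrap_ci([1] * 46 + [0] * 16))
```

The exact interval is deterministic and never falls outside [0, 1], while the bootstrap generalizes to statistics without a closed-form distribution, which is one reason a benchmark might report both.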

6. Implications for Medical Education and Clinical Decision Support

The findings indicate that LLMs tailored for medical reasoning and presentation can effectively emulate the cognitive and communicative dimensions of expert human discussants in complex clinical conferences. Dr. CaBot's outputs are rated favorably by practicing internists, suggesting a potential role as an educational partner and decision-support tool. However, the observed shortcomings in literature retrieval and image interpretation highlight specific areas for continued technical innovation.

CPC-Bench is positioned as a central resource to facilitate transparent evaluation, improvement, and deployment of medical AI systems, supporting ongoing research into their integration within educational and clinical workflows.

7. Future Directions and Limitations

Research on Dr. CaBot points to the necessity of enhanced multimodal integration, robust evidence retrieval, and iterative model evaluation using CPC-Bench standards. While the model’s strengths in text-based diagnosis and differential presentation are pronounced, future work should focus on improving image-based reasoning and literature search reliability. Continuous public benchmarking and model release via CPC-Bench will allow the research community to monitor, compare, and accelerate advances in medical artificial intelligence.

In summary, Dr. CaBot reflects both the current capabilities and the limitations of LLM-based medical reasoning and presentation: it sets a new standard for differential diagnosis and narrative synthesis while highlighting the need for further research in multimodal and retrieval-augmented AI techniques (Buckley et al., 15 Sep 2025).