Curriculum Generator in Machine Learning

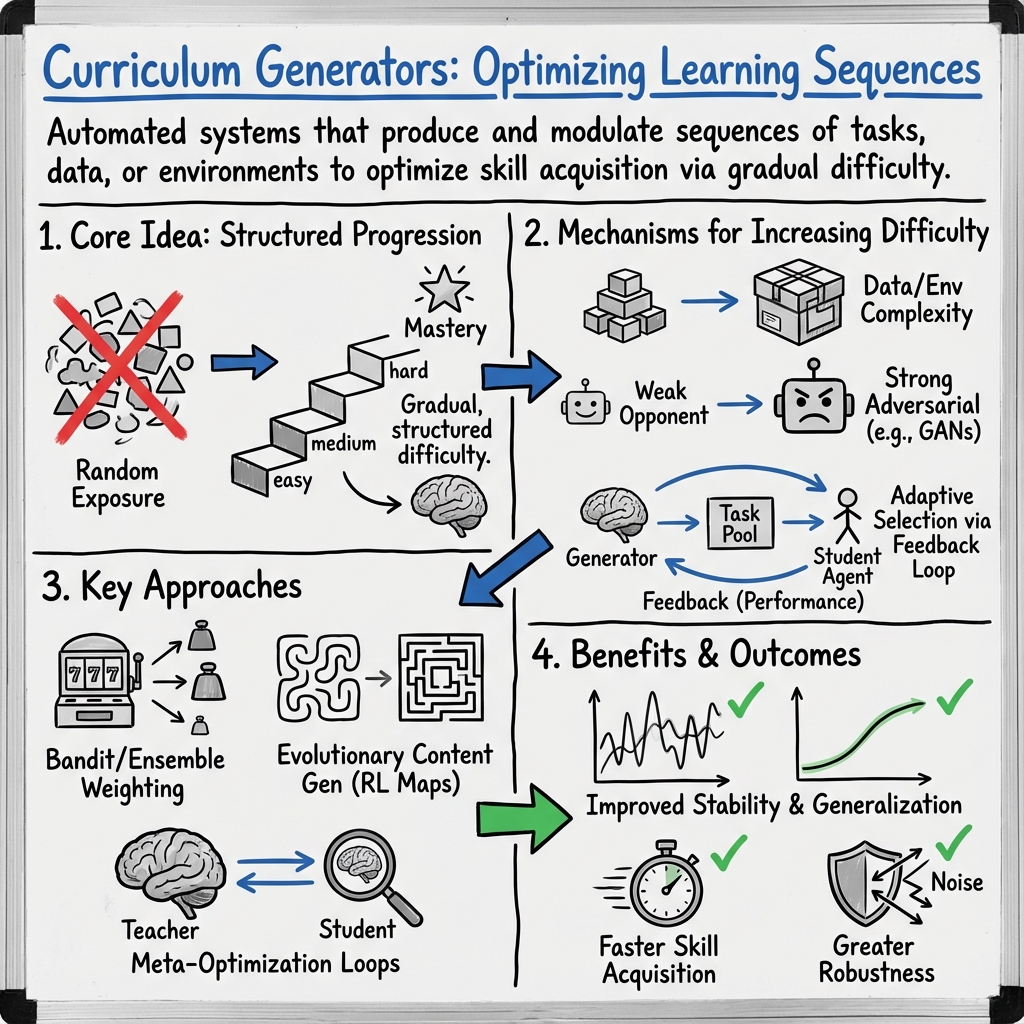

- A curriculum generator is a system that automatically creates structured learning sequences with progressively increasing difficulty to improve skill acquisition.

- It can employ strategies such as λ-scheduling of discriminators in GANs, bandit-driven discriminator ensembles, and meta-optimized teacher-student loops to make training more effective.

- Reported empirical results include improved model stability, better generalization and data efficiency, and faster learning across diverse domains.

A curriculum generator is an algorithmic or model-based system that automatically produces and modulates sequences of learning experiences (tasks, examples, environments, or data) to optimize skill acquisition or model training through a gradual, structured progression of difficulty. In contemporary machine learning and AI research, curriculum generators are central to curriculum learning: rather than exposing a learner to randomly sampled or uniformly difficult problems, the generator organizes the sequence (and sometimes the content) of training exposures to maximize mastery, generalization, or robustness. Application domains include generative adversarial networks, reinforcement learning, continual learning, educational technology, and retrieval-augmented LLM pipelines. The sections below review the core perspectives, methodologies, and empirical outcomes associated with curriculum generator design and deployment.

1. Fundamental Principles and Typologies

A curriculum generator embodies the notion of structured, incremental difficulty common to human learning. In machine learning, this can manifest as:

- Progressive environment or data difficulty: The model is first trained on easy cases and gradually exposed to harder ones by increasing the complexity of either the environment or the samples.

- Modulation of adversarial difficulty: In adversarial models such as GANs, the generator's task is made more challenging over epochs by strengthening the discriminator, e.g., via a weighted ensemble of discriminators drawn from nested function classes in Curriculum GANs (Sharma et al., 2018).

- Adaptive selection over task pools: Using metrics derived from agent or model performance, the generator adaptively samples curriculum items expected to maximize learning speed, coverage, or robustness, as in bandit-driven approaches (Doan et al., 2018) or multi-agent self-play curricula (Du et al., 2022).

- Data ordering or augmentation: The generator controls the order in which samples from a dataset are presented, e.g., via autoencoder-optimized schedules (Sarkar et al., 2021), or controls augmentation, e.g., bottom-k sampling of paraphrases in NLP tasks (Lu et al., 2022).
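The progressive-difficulty idea can be made concrete with a pacing function that controls what fraction of a difficulty-sorted dataset is visible at each training step. The sketch below assumes per-example difficulty scores are already available and uses a linear pacing schedule; the names `linear_pacing` and `curriculum_batch` and the parameters `start_frac` and `ramp_steps` are hypothetical, and this is a generic easy-to-hard scheme rather than the method of any cited paper.

```python
import random
from typing import List, Optional, Sequence, TypeVar

T = TypeVar("T")


def linear_pacing(step: int, start_frac: float, ramp_steps: int) -> float:
    """Fraction of the easy-to-hard sorted data that is visible at `step`.

    Grows linearly from `start_frac` at step 0 to 1.0 at `ramp_steps`.
    """
    if ramp_steps <= 0:
        return 1.0
    return min(1.0, start_frac + (1.0 - start_frac) * step / ramp_steps)


def curriculum_batch(examples: Sequence[T], difficulty: Sequence[float],
                     step: int, batch_size: int, start_frac: float = 0.2,
                     ramp_steps: int = 1000,
                     rng: Optional[random.Random] = None) -> List[T]:
    """Sample a batch uniformly from the easiest `frac` of the data."""
    if rng is None:
        rng = random.Random()
    # Sort indices from easiest to hardest (a training loop would cache this).
    order = sorted(range(len(examples)), key=lambda i: difficulty[i])
    frac = linear_pacing(step, start_frac, ramp_steps)
    # Keep at least one example in the candidate pool.
    pool_size = max(1, int(frac * len(examples)))
    pool = [examples[i] for i in order[:pool_size]]
    return [rng.choice(pool) for _ in range(batch_size)]
```

With ten examples and `start_frac=0.2`, only the two easiest can be drawn at step 0, and the whole dataset is available once `step` reaches `ramp_steps`. A linear ramp is the simplest choice; step-wise or exponential pacing functions slot into the same place.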

Types of curriculum generators can be classified along several axes:

- Explicit (hand-crafted progression rules or heuristics)

- Implicit (emergent curricula via self-play or reward maximization)

- Model-based (using generative, optimization, or reinforcement learning to synthesize curriculum elements)

- Data-driven (using historical trajectories, expert demonstrations, or environment feedback to synthesize training state sequences)

2. Algorithmic Strategies and Methodological Architectures

Curriculum generators employ a diverse array of algorithmic designs:

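- Convex Discriminator Decomposition and λ-Scheduling (Curriculum GANs): The GAN discriminator is represented as a convex combination $D_\lambda(x) = \sum_{i=1}^{K} \lambda_i D_i(x)$, with each $D_i$ drawn from one of the nested function classes $\mathcal{F}_1 \subseteq \mathcal{F}_2 \subseteq \cdots \subseteq \mathcal{F}_K$. The weighting vector $\lambda = (\lambda_1, \dots, \lambda_K)$, with $\lambda_i \ge 0$ and $\sum_i \lambda_i = 1$, follows a curriculum schedule so that the effective discriminator increases in representational power, raising the bar for generator outputs (Sharma et al., 2018).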

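- Bandit-Driven Discriminator Ensembles: Generators are paired with an ensemble of $K$ discriminators of varying capacity, and a Hedge algorithm (the full-information exponential-weights method) or a similar adversarial bandit mechanism dynamically weights their influence according to feedback rewards, smoothing, and measured learning progress (Doan et al., 2018). In Hedge form, discriminator $k$ receives weight

$$\pi_k = \frac{\exp(\eta\, \hat{r}_k)}{\sum_{j=1}^{K} \exp(\eta\, \hat{r}_j)},$$

with the reward estimate $\hat{r}_k$ updated as a moving average of rewards and $\eta > 0$ a learning rate.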

- Evolutionary and Procedural Content Generation: Evolutionary algorithms such as FI-2Pop (feasible-infeasible two-population search) generate feasible and maximally challenging environments or maps for RL agents. The generator iteratively proposes training samples (e.g., maps) that maximize the agent's loss; candidates are transferred to the feasible population only once they satisfy the feasibility constraints, after which evolutionary pressure shifts to maximizing curriculum utility (Green et al., 2019, Howard et al., 2022).

- Autoencoder-Driven Training Strategy Optimization: Discrete orderings of training batches or examples are encoded into a latent space, optimized there with a differentiable performance predictor to maximize downstream performance, and then decoded to form the updated curriculum schedule (Sarkar et al., 2021).

- Meta-Optimization and Teacher-Student Loops: The curriculum is supplied by a teacher model (often itself a generator) that is updated via bi-level optimization, so that curriculum generation directly drives improvements in the student model's downstream performance; this is exemplified in medical data augmentation (Li et al., 2022).

- Paraphrase Similarity and Difficulty Scheduling: In NLP, a paraphrase generator samples variants at various similarity levels (using bottom-k sampling and filtering mechanisms), ranks them into graduated difficulty buckets, and presents examples cyclically to mitigate catastrophic forgetting (Lu et al., 2022).

- Goal Curriculum Generation via Multi-Agent Self-Play or Contextual Bandits: A diverse and escalating goal curriculum is generated automatically, either through multi-agent games in which regret-maximizing teachers (goal generators) face student agents, or through contextual bandit frameworks that modulate task sampling based on student history and feedback (Du et al., 2022, Wang et al., 2023).

- Retrieval-Augmented Generation with Curriculum Learning: CL-RAG builds multi-level curriculum datasets for both the retriever and the generator in RAG-based QA. Training samples are ordered from "easy" (gold or reliably aligned contexts) to "hard" (counterfactual or noisy retrieval) through explicit stratification and stage-wise training (Wang et al., 15 May 2025).
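As an illustration of λ-scheduling, the sketch below builds the convex mixture $D_\lambda(x) = \sum_i \lambda_i D_i(x)$ from discriminator callables ordered from least to most expressive, using a schedule that slides probability mass toward later components as training progresses. The particular schedule (mass split between two adjacent components, advancing linearly with training progress) and the names `lambda_schedule` and `mixed_discriminator` are assumptions for illustration, not the schedule used by Sharma et al. (2018); real discriminators would be trained networks rather than toy functions.

```python
from typing import Callable, List, Sequence


def lambda_schedule(progress: float, k: int) -> List[float]:
    """Convex weights over k nested discriminators at progress in [0, 1].

    Mass sits on one or two adjacent components and slides from index 0
    (weakest) to index k-1 (strongest) as progress goes from 0 to 1, so each
    tail sum sum(lam[i:]) is non-decreasing in progress.
    """
    if k == 1:
        return [1.0]
    progress = min(max(progress, 0.0), 1.0)
    pos = progress * (k - 1)
    lo = min(int(pos), k - 2)   # left component; clamp so lo + 1 is valid
    frac = pos - lo             # share of mass moved to component lo + 1
    lam = [0.0] * k
    lam[lo] = 1.0 - frac
    lam[lo + 1] = frac
    return lam


def mixed_discriminator(discriminators: Sequence[Callable[[float], float]],
                        lam: Sequence[float]) -> Callable[[float], float]:
    """Return x -> sum_i lam[i] * D_i(x), the effective discriminator."""
    def d(x: float) -> float:
        # Skip zero-weight components to avoid needless evaluations.
        return sum(w * di(x) for w, di in zip(lam, discriminators) if w > 0.0)
    return d
```

For example, `lambda_schedule(0.25, 3)` returns `[0.5, 0.5, 0.0]`: early in training the weakest discriminators dominate. The non-decreasing tail sums are one way to realize the "each stage dominates the previous one" property.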
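The bandit-driven ensemble can be sketched with Hedge-style exponential weights: each discriminator keeps a moving-average reward estimate, and its mixture weight is a softmax of those estimates scaled by a learning rate. The reward signal itself (e.g., some measure of the generator's learning progress) is left abstract here. `HedgeEnsemble`, `eta`, and `beta` are hypothetical names, and the exact reward definition and update rule in Doan et al. (2018) may differ.

```python
import math
from typing import List, Sequence


class HedgeEnsemble:
    """Exponential-weights (Hedge-style) mixture over K discriminators."""

    def __init__(self, k: int, eta: float = 1.0, beta: float = 0.9):
        self.eta = eta          # learning rate: larger -> sharper weights
        self.beta = beta        # moving-average factor for reward smoothing
        self.r_hat = [0.0] * k  # smoothed reward estimate per discriminator

    def weights(self) -> List[float]:
        # Softmax of eta * r_hat; subtracting the max keeps exp() stable.
        m = max(self.r_hat)
        z = [math.exp(self.eta * (r - m)) for r in self.r_hat]
        total = sum(z)
        return [v / total for v in z]

    def update(self, rewards: Sequence[float]) -> None:
        # Full-information update: every discriminator's reward is observed.
        self.r_hat = [self.beta * old + (1.0 - self.beta) * new
                      for old, new in zip(self.r_hat, rewards)]
```

Starting from uniform weights, repeatedly calling `update([0.0, 1.0, 0.2])` shifts most of the weight to the second discriminator. The smoothing factor `beta` trades responsiveness against noise in the reward signal.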
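The feasible-infeasible two-population (FI-2Pop) loop can be sketched on a toy problem. Here a "map" is a list of non-negative integers, a map counts as feasible if its tiles sum to at most a budget (a stand-in for a solvability check), and `agent_loss` is a hypothetical placeholder for the RL agent's loss on that map. In the cited work both would come from the game environment and the training agent. Feasible individuals are ranked by the loss they induce, infeasible ones by how far they are from feasibility, and each offspring joins whichever population its own feasibility dictates.

```python
import random
from typing import Callable, List

Map = List[int]  # toy "map": a list of non-negative tile values


def fi2pop(agent_loss: Callable[[Map], float],
           violation: Callable[[Map], float],
           init: List[Map], generations: int, pop_size: int,
           rng: random.Random) -> List[Map]:
    """Minimal FI-2Pop loop; returns the final feasible population.

    violation(m) == 0 means feasible; larger values mean further from feasible.
    """
    feasible = [m for m in init if violation(m) == 0]
    infeasible = [m for m in init if violation(m) > 0]

    def mutate(m: Map) -> Map:
        # Nudge one random tile up or down, keeping values non-negative.
        child = list(m)
        i = rng.randrange(len(child))
        child[i] = max(0, child[i] + rng.choice([-1, 1]))
        return child

    for _gen in range(generations):
        # Feasible parents: maps that maximize the agent's loss (hardest first).
        feasible.sort(key=agent_loss, reverse=True)
        # Infeasible parents: maps closest to satisfying the constraints.
        infeasible.sort(key=violation)
        parents = feasible[: pop_size // 2] + infeasible[: pop_size // 2]
        if not parents:
            break
        for _ in range(pop_size):
            child = mutate(rng.choice(parents))
            # Each child joins the population matching its own feasibility.
            (feasible if violation(child) == 0 else infeasible).append(child)
        # Truncation selection keeps each population at most pop_size long.
        feasible = sorted(feasible, key=agent_loss, reverse=True)[:pop_size]
        infeasible = sorted(infeasible, key=violation)[:pop_size]
    return feasible


# Hypothetical toy problem: a map is feasible if its tiles sum to at most 10,
# and the stand-in "agent loss" is simply the height of the tallest tile.
BUDGET = 10


def violation(m: Map) -> float:
    return float(max(0, sum(m) - BUDGET))


def agent_loss(m: Map) -> float:
    return float(max(m))
```

Because truncation selection keeps the best feasible maps found so far, the hardest feasible map never gets easier from one generation to the next. In a real curriculum the loss would be re-measured as the agent trains, so that ordering can change.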
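The graduated-bucket scheduling described for paraphrase augmentation can be sketched in two steps: split examples into difficulty buckets by a similarity score, then build a training schedule that sweeps the buckets from easy to hard and repeats that sweep, so easier material keeps reappearing. Treating higher similarity to the source sentence as "easier", cutting equal-sized buckets, and reading "cyclic" as repeated easy-to-hard sweeps are all assumptions here, not the exact procedure of Lu et al. (2022); `bucketize` and `cyclic_schedule` are hypothetical names.

```python
from typing import List, Sequence, Tuple


def bucketize(examples: Sequence[str], similarity: Sequence[float],
              n_buckets: int) -> List[List[str]]:
    """Split paraphrases into at most n_buckets buckets, ordered easy -> hard.

    Assumption: a paraphrase closer to its source (higher similarity) is
    easier, so examples are sorted by descending similarity and cut into
    contiguous, roughly equal-sized buckets.
    """
    if not examples:
        return []
    order = sorted(range(len(examples)), key=lambda i: -similarity[i])
    size = -(-len(order) // n_buckets)  # ceiling division
    return [[examples[i] for i in order[s:s + size]]
            for s in range(0, len(order), size)]


def cyclic_schedule(buckets: List[List[str]],
                    cycles: int) -> List[Tuple[int, List[str]]]:
    """Stages of (bucket index, examples): one easy -> hard sweep per cycle."""
    return [(b, bucket) for _ in range(cycles) for b, bucket in enumerate(buckets)]
```

With two cycles over three buckets, the stage order is buckets 0, 1, 2, 0, 1, 2, so the easiest paraphrases are revisited after the hardest ones.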

3. Performance Outcomes and Comparative Assessments

Empirical studies report clear benefits of curriculum generators across domains:

- Stability and Generalization in Generative Models:

Curriculum GANs, by increasing discriminator power gradually, prevent early generator collapse, yield 33.6% lower error in synthetic signal generation, and achieve image synthesis fidelity comparable to more complex progressive-growing approaches (Sharma et al., 2018). Bandit-based adaptive ensembles increase mode coverage and sample diversity, outperforming static mixtures and single-discriminator approaches, as evidenced by lower FID and improved convergence (Doan et al., 2018).

- Efficacy in RL and Procedural Domains:

Evolutionary curation of RL training maps led to higher performance peaks and better sample efficiency, with full networks outperforming randomly mixed map curricula by more than 20% on evaluation metrics (Green et al., 2019). Automated goal curricula in multi-agent and single-agent RL achieve better zero-shot generalization and faster mastery of unseen, out-of-distribution tasks than domain randomization or manually designed curricula (Du et al., 2022, Liang et al., 2024). Curriculum generators using MAP-Elites and mixed terrain representations yield increased coverage, rapid skill acquisition, and alignment between agent capability and environment challenge (Howard et al., 2022).

- Data Efficiency and Effectiveness in NLP and Vision:

Training Sequence Optimization attains 2–3.5% accuracy/AP gains over random data ordering and surpasses previous curriculum algorithms on CIFAR datasets (Sarkar et al., 2021), while curriculum pointer-generator frameworks yield 51% BLEU-4 and 17% ROUGE-L gains over prior reading comprehension baselines (Tay et al., 2019). Paraphrase-based and cyclic curriculum data augmentation improve accuracy and naturalness in few-shot text classification and dialogue systems beyond token-level or non-cyclical approaches (Lu et al., 2022). Retrieval-augmented curricula for LLMs/RAG show up to 4% improvement in EM/F1 scores on open-domain QA by controlling exposure to distractors and progressively shifting from easy to hard contextual support (Wang et al., 15 May 2025).

- Educational Content Generation and Alignment:

Retrieval-augmented curriculum generators tailored to Ugandan and Malaysian secondary school curricula produce lesson plans and MCQs that match or exceed expert human baselines in technical content and curriculum alignment, as measured by adapted LPAP and STS-based metrics (Kloker et al., 2024, Wahid et al., 6 Aug 2025). COGENT’s curriculum decomposition and readability-controlled passage generation consistently achieves higher alignment scores in both expert and LLM-based evaluation, improving both curriculum fidelity and grade appropriateness (Liu et al., 11 Jun 2025).

4. Applications, Generalizations, and Implications

Curriculum generators exhibit broad applicability:

- In adversarial frameworks (GANs), adaptive curricula counteract instability and mode collapse, simplifying network design and facilitating generalization across modalities, including synthetic sequence and medical domains (Sharma et al., 2018, Li et al., 2022).

- In reinforcement and multi-agent learning, curriculum generators facilitate transfer, accelerate exploration in sparse reward environments, and support population-invariant or hierarchical skill learning (Wang et al., 2023).

- In retrieval-augmented LLMs, staged curricular exposure to ground-truth, challenging, and adversarial contexts fosters robustness against noisy retrieval and improves factual fidelity (Wang et al., 15 May 2025, Wahid et al., 6 Aug 2025).

- Curriculum generators built on RAG and code-generation LLMs, as in Eurekaverse and EvoCurr, show that LLM-generated environments or task progressions can yield policies that outperform those trained on human-designed curricula in simulated and real-world robotics and decision-making scenarios (Liang et al., 2024, Cheng et al., 13 Aug 2025).

Automatically generating and evolving curricula makes training adaptive rather than statically defined: the curriculum is incrementally adjusted in response to learner performance, domain requirements, or evolving task distributions.

5. Key Theoretical Constructs and Optimization Frameworks

Curriculum generators frequently operationalize the following mathematical and algorithmic principles:

- Monotonicity and Partial Order of Curriculum Schedules: Each subsequent curriculum component “dominates” the previous (easier) ones, a property often realized through cumulative-sum constraints on mixture weights or explicit monotonic scheduling (Sharma et al., 2018).

- Bandit Optimization: Curriculum selection (for discriminators, goals, or tasks) as a multi-armed or contextual bandit problem, with reward or regret-based adaptation (Doan et al., 2018, Wang et al., 2023).

- Bi-Level Meta-Optimization: Teacher–student formulations with inner- and outer-loop optimization, enabling feedback-driven augmentation policy adaptation (Li et al., 2022).

- Performance Predictors and Latent Space Optimization: Use of learned predictors to differentiate and optimize over discrete curriculum sequences in continuous representation spaces (Sarkar et al., 2021).

- Entropy Regularization and Diversity Coverage: Soft actor-critic or maximum-entropy principles are incorporated to prevent collapse in curriculum or environment space and to ensure broad, diverse exposure (Du et al., 2022).

- Curriculum Evaluation Metrics: Use of STS, LPAP, and other alignment/factuality frameworks for automated, scalable assessment of generated curricular materials (Kloker et al., 2024, Wahid et al., 6 Aug 2025).
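The bi-level teacher-student formulation can be written out in generic form (a standard template, not the exact objective of Li et al., 2022). Here $\phi$ denotes the teacher or augmentation-policy parameters, $\theta$ the student parameters, and $\mathcal{D}_\phi$ the training data the teacher produces. The outer problem tunes $\phi$ for validation performance of the student that the inner problem trains:

```latex
\begin{aligned}
\phi^{*} &= \arg\min_{\phi}\; \mathcal{L}_{\text{val}}\big(\theta^{*}(\phi)\big), \\
\text{s.t.}\quad \theta^{*}(\phi) &= \arg\min_{\theta}\; \mathcal{L}_{\text{train}}\big(\theta;\, \mathcal{D}_{\phi}\big).
\end{aligned}
```

In practice the inner $\arg\min$ is usually approximated by one or a few gradient steps, $\theta' = \theta - \alpha \nabla_{\theta} \mathcal{L}_{\text{train}}(\theta; \mathcal{D}_{\phi})$. The teacher is then updated by differentiating $\mathcal{L}_{\text{val}}(\theta')$ with respect to $\phi$ through that step.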

6. Limitations, Challenges, and Prospective Directions

While empirical successes are apparent, curriculum generators face several challenges:

- Hallucination or failure to ground content appropriately when context retrieval is insufficient or ambiguous, especially in educational and LLM-driven RAG domains (Kloker et al., 2024, Wahid et al., 6 Aug 2025).

- Sensitivity to the granularity and ordering of curriculum levels—premature escalation can lead to model failure or catastrophic forgetting; overly conservative progressions can slow training (Cheng et al., 13 Aug 2025).

- Computational cost remains a bottleneck, particularly where generative LLMs are used to synthesize or evolve curricula at scale; future work on model fine-tuning and enhanced feedback is expected to be critical (Liang et al., 2024).

- Open theoretical questions remain about how optimal curricula depend on learner properties, how curriculum schedules can be standardized, and which evaluation protocols are best (Sarkar et al., 2021).
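The escalation trade-off can be made concrete with a mastery-gated level controller: the learner moves to the next level only after its rolling success rate clears a threshold, and drops back a level if that rate collapses. Raising `promote_at` or `window` gives a more conservative progression, while lowering them escalates sooner. The class name `MasteryGate`, its thresholds, and the window size are hypothetical and not taken from Cheng et al. (2025) or any other cited work.

```python
from collections import deque


class MasteryGate:
    """Move between curriculum levels based on a rolling success rate."""

    def __init__(self, n_levels: int, window: int = 20,
                 promote_at: float = 0.8, demote_at: float = 0.3):
        self.n_levels = n_levels
        self.level = 0
        self.window = window
        self.promote_at = promote_at
        self.demote_at = demote_at
        # Recent outcomes at the current level: 1 = success, 0 = failure.
        self.history = deque(maxlen=window)

    def record(self, success: bool) -> int:
        """Log one episode outcome and return the (possibly updated) level."""
        self.history.append(1 if success else 0)
        if len(self.history) < self.window:
            return self.level  # not enough evidence at this level yet
        rate = sum(self.history) / len(self.history)
        if rate >= self.promote_at and self.level < self.n_levels - 1:
            self.level += 1
            self.history.clear()  # re-measure performance on the new level
        elif rate <= self.demote_at and self.level > 0:
            self.level -= 1
            self.history.clear()
        return self.level
```

Clearing the history after each move forces a full window of fresh evidence before the next change, which damps oscillation between adjacent levels.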

Future research is expected to focus on:

- Adaptive, multi-agent, and multi-modal curriculum generation strategies

- Scalability in low-resource and cross-lingual educational environments

- Seamless integration of curriculum generation with retrieval, augmentation, and feedback-rich training pipelines

- Empirical benchmarks for generalization, robustness, and efficiency across diverse task domains and model architectures

7. Representative Table: Canonical Curriculum Generator Methodologies

| Methodology | Domain(s) | Key Mechanism |
|---|---|---|
| Convex Lambda Scheduling | GANs, Image/Sequence Generation | Weighted nested discriminators |
| Adversarial Bandit Ensemble | GANs, Generative Models | Bandit-based mixture and reward |
| Evolutionary Content Generation | RL, Procedural Generation | Loss-maximizing evolutionary pressure |
| Autoencoder Sequence Optimization | Supervised, Vision | Gradient-based reordering in latent space |
| Meta-Optimized Teaching Loops | Data Augmentation, Medical Vision | Bi-level teacher-student optimization |
| Multi-Agent Self-Play | RL, Goal Generation | Entropic regret-driven adversaries |
| RAG with Curriculum Scheduling | RAG-LLM, QA, EdTech | Multi-level example/context planning |
| Paraphrase Difficulty Scheduling | NLP Classification, Dialogue | Similarity-controlled augmentation |

This summary reflects the current landscape and design space for curriculum generators in contemporary AI and educational technology research, grounded in the published empirical literature cited above and supported by formal optimization frameworks.