Conversational Honeypots

- Conversational honeypots are interactive deception systems that mimic operating system or application behaviors using advanced AI to capture attacker interactions.

- They utilize dynamic orchestration, LLM-driven dialogue management, and cross-modal simulation to prolong attacker dwell time and enhance threat analysis.

- Deployment challenges include managing latency, resource constraints, and consistency in simulating realistic environments while mitigating detection risks.

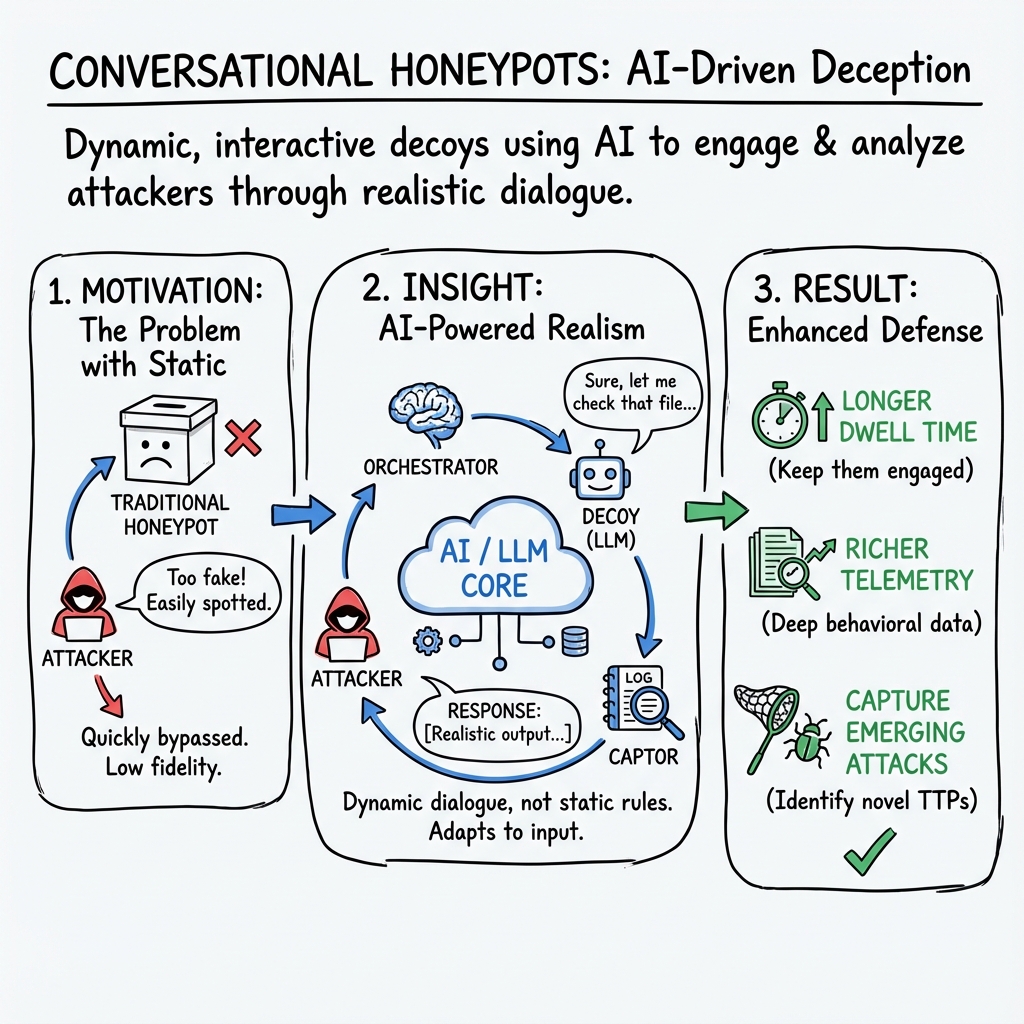

Conversational honeypots are interactive deception systems designed to lure, engage, and analyze malicious actors through realistic dialogue or command-based interfaces. Traditional honeypots expose static services or limited protocol emulation. Conversational honeypots instead use advanced automation, frequently powered by large language models (LLMs), reinforcement learning, or context-aware orchestration, to simulate operating system behavior, application logic, or device-specific communication. The result is significantly longer attacker dwell time, richer behavioral telemetry, and a greater capacity to capture emerging attack techniques.

1. Conceptual Foundations and Evolution

Conversational honeypots represent the convergence of classical honeypot methodologies with advancements in AI-driven interaction and contextual simulation. Early honeypots primarily operated as static decoys offering limited fidelity, often restricted to specific ports or network services. The progression toward behavioral and conversational honeypots is driven by three key developments:

- Dynamic orchestration: Automated deployment of decoys adapted to attacker behavior (Bartwal et al., 2022).

- AI-driven dialogue management: Use of transformer models, reinforcement learning, or LLMs to interpret, generate, and sequence naturalistic responses in real time (Mfogo et al., 2023, Sladić et al., 2023, Wang et al., 4 Jun 2024, Malhotra, 1 Sep 2025).

- Cross-modal deception: Emulation of various interaction layers, including shell terminals, IoT protocols, and natural language chat, expanding coverage and realism (McKee et al., 2023, Mfogo et al., 2023).

This paradigm shift is motivated by the increasing sophistication of adversaries, who can often quickly fingerprint and bypass low- or medium-interaction honeypots.

2. Core Architectures and System Components

Conversational honeypot architectures are organized into several primary components, as seen in recent designs such as HoneyDOC (Fan et al., 9 Feb 2024), HoneyGPT (Wang et al., 4 Jun 2024), LLMHoney (Malhotra, 1 Sep 2025), and AIIPot (Mfogo et al., 2023). A prototypical architecture includes:

| Module | Main Function | Representative Papers |
|---|---|---|
| Decoy | Provisioning/interfacing as OS/device | (Fan et al., 9 Feb 2024, McKee et al., 2023, Malhotra, 1 Sep 2025) |
| Captor | Full-spectrum telemetry collection | (Fan et al., 9 Feb 2024, Bartwal et al., 2022) |
| Orchestrator | Dynamic response, session management | (Fan et al., 9 Feb 2024, Bartwal et al., 2022, Wang et al., 4 Jun 2024) |

Decoy modules may leverage virtual filesystems and LLMs to present realistic stateful shell behaviors (Malhotra, 1 Sep 2025), or emulate device-specific command sets as in IoT honeypots (Mfogo et al., 2023). Captor modules are responsible for high-fidelity logging of attacker inputs, responses, and system interactions, including system call traces and network flows (Fan et al., 9 Feb 2024). Orchestrator modules coordinate decoy provisioning, redirect attacker flows based on session quality, and may autonomously escalate the interaction level (e.g., from low-interaction to high-interaction), often with programmable SDN integration for redirection and stealth (Fan et al., 9 Feb 2024).
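
The way responsibilities are split among the three modules can be illustrated with a minimal Python sketch. Everything in it is hypothetical: the class names, the command-count escalation rule (a stand-in for a real session-quality score), and the placeholder string used in place of a generative backend. It does not reproduce HoneyDOC or any other cited system, and it omits SDN redirection entirely.

```python
import time
from dataclasses import dataclass, field


@dataclass
class Captor:
    """Telemetry sink: records every input, output, and control event."""
    events: list = field(default_factory=list)

    def record(self, session_id: str, kind: str, data: str) -> None:
        self.events.append(
            {"ts": time.time(), "session": session_id, "kind": kind, "data": data}
        )


@dataclass
class Decoy:
    """Answers shell commands; richer handling only at the 'high' level."""
    hostname: str = "web01"

    def respond(self, command: str, level: str) -> str:
        tokens = command.split()
        if not tokens:
            return ""
        if tokens[0] == "hostname":
            return self.hostname
        if tokens[0] == "whoami":
            return "root"
        if level == "high":
            # Placeholder for a generative (e.g. LLM-backed) backend.
            return f"[high-interaction backend handles: {command}]"
        return f"bash: {tokens[0]}: command not found"


@dataclass
class Orchestrator:
    """Routes commands, logs them via the captor, and escalates sessions."""
    decoy: Decoy
    captor: Captor
    escalate_after: int = 3  # hypothetical session-quality threshold
    counts: dict = field(default_factory=dict)
    levels: dict = field(default_factory=dict)  # per-session interaction level

    def handle(self, session_id: str, command: str) -> str:
        self.captor.record(session_id, "input", command)
        self.counts[session_id] = self.counts.get(session_id, 0) + 1
        level = self.levels.setdefault(session_id, "low")
        if level == "low" and self.counts[session_id] > self.escalate_after:
            level = self.levels[session_id] = "high"
            self.captor.record(session_id, "escalate", "low->high")
        output = self.decoy.respond(command, level)
        self.captor.record(session_id, "output", output)
        return output
```

Keeping the interaction level per session means that escalating one engaged attacker does not change what other sessions see.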

3. Machine Learning and LLM-Driven Dialogue Management

Recent advances leverage powerful sequence modeling and LLMs to substantially elevate deception realism. These systems fall into several methodological categories:

- Prompt Engineering and Session Management: State-of-the-art honeypots construct LLM prompts from static settings, the interaction history, and a real-time state register. HoneyGPT (Wang et al., 4 Jun 2024) formalizes this process; schematically, the prompt for the attacker's $t$-th command is assembled as:

$$\mathrm{Prompt}_t = \mathcal{F}\left(P,\; C,\; H_{t-1},\; S_{t-1},\; q_t\right)$$

where $P$ is the honeypot principle, $C$ is the system configuration, $H_{t-1}$ is the interaction history, $S_{t-1}$ is the system state register, and $q_t$ is the attacker's latest command.

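- Hybrid Dictionary/Generative Systems: LLMHoney (Malhotra, 1 Sep 2025) and related designs employ a cached dictionary for common commands and dispatch only novel or complex requests to the LLM backend, balancing latency and authenticity. In outline, a command that has a dictionary entry is answered directly from the cache, while any other command is sent to the LLM together with the current session state.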

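- Reinforcement Learning (RL) and MDP-Based Engagement: AIIPot (Mfogo et al., 2023) structures the conversational interaction as a Markov Decision Process and uses RL to maximize session length and attacker engagement. Schematically, over a session of $T$ turns the response policy $\pi$ is chosen to maximize the expected cumulative reward:

$$\pi^{*} = \arg\max_{\pi}\; \mathbb{E}_{\pi}\left[\sum_{t=1}^{T} r(x_t, y_t)\right], \qquad y_t \sim \pi(\cdot \mid x_t)$$

where $x_t$ is the attacker's input, $y_t$ is the system's response, and $r(x_t, y_t)$ quantifies the conversational reward.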

- Fine-Tuning Open-Source LLMs: LLM Honeypot (Otal et al., 12 Sep 2024) applies supervised fine-tuning (SFT) to models such as Llama3, using datasets derived from real-world attacker logs (e.g., from Cowrie) and Linux command outputs, and relies on LoRA, QLoRA, and Flash Attention for efficiency.
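
Prompt assembly and hybrid cache/LLM dispatch, as described above, can be combined in a short Python sketch. It is an illustration under stated assumptions, not the HoneyGPT or LLMHoney implementation. The `llm` callable stands in for any model backend, the bracketed section markers in the prompt are invented, and `HybridResponder` is a hypothetical name. Only state-independent commands (such as `whoami`) should be placed in the cache, because a cached reply cannot reflect later state changes.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class HybridResponder:
    principle: str                     # honeypot principle / persona rules
    config: str                        # static system configuration
    llm: Callable[[str], str]          # hypothetical backend: prompt -> output
    cache: Dict[str, str] = field(default_factory=dict)  # state-independent replies
    state: Dict[str, str] = field(default_factory=dict)  # system state register
    history: List[Tuple[str, str]] = field(default_factory=list)  # (cmd, output)
    max_history: int = 5               # recent turns kept in the prompt

    def build_prompt(self, command: str) -> str:
        """Assemble principle, config, history, state, and the new command."""
        recent = self.history[-self.max_history:]
        hist = "\n".join(f"$ {c}\n{o}" for c, o in recent)
        state = "\n".join(f"{k}={v}" for k, v in sorted(self.state.items()))
        return (f"{self.principle}\n[CONFIG]\n{self.config}\n"
                f"[HISTORY]\n{hist}\n[STATE]\n{state}\n"
                f"[COMMAND]\n$ {command}")

    def respond(self, command: str) -> str:
        if command in self.cache:
            output = self.cache[command]    # fast path: no model call
        else:
            output = self.llm(self.build_prompt(command))  # slow path
        self.history.append((command, output))
        return output
```

A cache hit returns without any model call, which is where the latency savings come from; every other command pays for one LLM request, but that request carries the current state and recent history.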

4. Evaluation Metrics, Performance, and Model Selection

Conversational honeypot solutions are assessed along several axes:

| Metric | Typical Quantification | Example Source |
|---|---|---|
| Realism/Accuracy | Cosine/Jaro-Winkler similarity | (Malhotra, 1 Sep 2025, Otal et al., 12 Sep 2024) |
| Response Latency | Mean latency per command (s) | (Malhotra, 1 Sep 2025): ~3.0 s for Gemini-2.0 |
| Memory Overhead | ΔMB per session/command | (Malhotra, 1 Sep 2025): ~1–11 MB |
| Hallucination Rate | % outputs inconsistent with state | (Malhotra, 1 Sep 2025): 5.8–12.9% |
| Engagement Length | Mean session/interaction length | (Bartwal et al., 2022): 3148 s (dynamic) vs 102 s (static) |
| Coverage/Flexibility | Number of commands accurately simulated | (Otal et al., 12 Sep 2024): 617 scenarios |
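
The two similarity measures in the first row can be computed as follows when comparing a honeypot's output with a real system's output for the same command. How the cited works set up this comparison is not specified here, so treat the setup as an assumption. In particular, they may apply cosine similarity to learned embeddings, whereas this sketch computes it over whitespace-token counts to stay dependency-free. Jaro-Winkler uses the conventional prefix scale of 0.1 and a maximum prefix length of 4.

```python
import math
from collections import Counter


def jaro(s1: str, s2: str) -> float:
    """Jaro similarity: matching characters within a window, minus transpositions."""
    if s1 == s2:
        return 1.0
    len1, len2 = len(s1), len(s2)
    if len1 == 0 or len2 == 0:
        return 0.0
    window = max(max(len1, len2) // 2 - 1, 0)
    used1, used2 = [False] * len1, [False] * len2
    matches = 0
    for i, ch in enumerate(s1):
        for j in range(max(0, i - window), min(i + window + 1, len2)):
            if not used2[j] and s2[j] == ch:
                used1[i] = used2[j] = True
                matches += 1
                break
    if matches == 0:
        return 0.0
    # Count matched characters that appear in a different order.
    mismatched, k = 0, 0
    for i in range(len1):
        if used1[i]:
            while not used2[k]:
                k += 1
            if s1[i] != s2[k]:
                mismatched += 1
            k += 1
    t = mismatched / 2
    return (matches / len1 + matches / len2 + (matches - t) / matches) / 3


def jaro_winkler(s1: str, s2: str, p: float = 0.1, max_prefix: int = 4) -> float:
    """Boost the Jaro score for strings sharing a common prefix."""
    j = jaro(s1, s2)
    prefix = 0
    for a, b in zip(s1[:max_prefix], s2[:max_prefix]):
        if a != b:
            break
        prefix += 1
    return j + prefix * p * (1 - j)


def cosine_similarity(a: str, b: str) -> float:
    """Cosine of the angle between whitespace-token count vectors."""
    va, vb = Counter(a.split()), Counter(b.split())
    dot = sum(va[tok] * vb[tok] for tok in va)
    na = math.sqrt(sum(v * v for v in va.values()))
    nb = math.sqrt(sum(v * v for v in vb.values()))
    return dot / (na * nb) if na and nb else 0.0
```

Jaro-Winkler is sensitive to small character-level deviations in short outputs such as `id` or `uname -a`. The token-level cosine score, by contrast, ignores word order and is better suited to long listings.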

Selection of LLM backend and prompt strategy reflects a trade-off between output fidelity, response time, and resource consumption. For example, Gemini-2.0 and Phi3:3.8B produced the most reliable results in LLMHoney, but incurred higher computational demand (Malhotra, 1 Sep 2025). HoneyGPT's prompt pruning via an explicit "weaken factor" controls long-term context within LLM token limits (Wang et al., 4 Jun 2024).
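
HoneyGPT's exact pruning rule is not reproduced here. The Python sketch below only illustrates the general idea of decaying older context so that a prompt stays inside a token budget. The weighting `(impact + 1) * weaken ** age`, the greedy selection, the whitespace token count, and the names `HistoryEntry` and `prune_history` are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class HistoryEntry:
    command: str
    output: str
    impact: int  # 0 (information request) .. 4 (e.g. privilege escalation)


def approx_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: count whitespace-separated words."""
    return len(text.split())


def prune_history(history: List[HistoryEntry], budget: int,
                  weaken: float = 0.8) -> List[HistoryEntry]:
    """Keep the highest-weight entries whose total size fits in `budget`.

    Assumed weighting: (impact + 1) * weaken ** age, where age 0 is the
    newest entry. Older, low-impact turns therefore fade out first.
    """
    n = len(history)
    ranked = sorted(
        ((e.impact + 1) * weaken ** (n - 1 - i), i) for i, e in enumerate(history)
    )
    kept, used = set(), 0
    for _, i in reversed(ranked):          # heaviest first; ties favor newer
        cost = approx_tokens(history[i].command) + approx_tokens(history[i].output)
        if used + cost <= budget:
            kept.add(i)
            used += cost
    return [history[i] for i in sorted(kept)]  # restore chronological order
```

For instance, with a budget of four tokens and the history `ls` → `a b c`, `sudo su`, `pwd` → `/root` (impacts 0, 4, 0), the sketch drops the oldest low-impact turn `ls` and keeps `sudo su` and `pwd`.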

5. Security Analytics and Telemetry Collection

Conversational honeypots are valuable not merely for attack detection, but for their capacity to provide fine-grained telemetry and behavioral intelligence:

- Session logs: All attacker interactions, system state changes, and timing data are captured for offline TTP (tactics, techniques, and procedures) analysis (McKee et al., 2023, Otal et al., 12 Sep 2024).

- Impact grading: HoneyGPT assigns an "impact factor" to each command, quantifying its aggressiveness or severity on a scale from 0 (information requests) to 4 (actions such as privilege escalation) (Wang et al., 4 Jun 2024).

- Adaptive environmental morphing: Systems such as HoneyDOC (Fan et al., 9 Feb 2024) and dynamic SOAR-based honeypots (Bartwal et al., 2022) can programmatically escalate or de-escalate the interaction based on detected threats to maximize data quality while maintaining stealth.
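
Impact grading and adaptive interaction levels can be sketched together in Python. HoneyGPT derives its impact factor with the LLM itself. The fixed command-to-impact table below is a hypothetical rule-based stand-in. The escalation policy (escalate when any of the last five commands has impact 3 or more, de-escalate after a run of pure information requests) is likewise an illustrative assumption, not the rule used by HoneyDOC or the SOAR-based systems.

```python
import re

# Hypothetical command -> impact table: 0 = information request,
# 4 = e.g. privilege escalation. Not taken from any cited system.
IMPACT_BY_COMMAND = {
    "whoami": 0, "uname": 0, "ls": 0, "pwd": 0, "cd": 0,
    "cat": 1, "find": 1, "netstat": 1,
    "rm": 2, "kill": 2, "crontab": 2,
    "wget": 3, "curl": 3, "chmod": 3,
    "sudo": 4, "su": 4, "passwd": 4,
}
UNKNOWN_IMPACT = 1  # assumption: unrecognized commands get a low nonzero score


def impact_of(command_line: str) -> int:
    """Highest impact among the commands chained with |, ;, && or ||."""
    scores = []
    for segment in re.split(r"[|;&]+", command_line):
        tokens = segment.split()
        if tokens:
            scores.append(IMPACT_BY_COMMAND.get(tokens[0], UNKNOWN_IMPACT))
    return max(scores, default=0)


def choose_level(impacts, current="low", window=5, escalate_at=3):
    """Pick the interaction level from the most recent impact scores."""
    peak = max(impacts[-window:], default=0)
    if peak >= escalate_at:
        return "high"
    if current == "high" and peak == 0:
        return "low"  # only information requests lately: de-escalate
    return current
```

Scoring a chained command line by its most aggressive segment keeps a download hidden behind `cd /tmp;` from being graded as harmless navigation.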

By logging extended, realistic scenarios, these systems assist defenders in identifying novel attacker pathways and potentially unreported vulnerabilities.

6. Practical Deployment, Limitations, and Challenges

Deployment of conversational honeypots introduces operational trade-offs:

- Maintenance and Fidelity: Realistic emulation requires continual prompt refinement, regular updates to command dictionaries, and careful management of virtual state to prevent inconsistencies (McKee et al., 2023, Otal et al., 12 Sep 2024).

- Latency and Resource Usage: Larger LLMs increase realism at the cost of increased latency (sometimes up to 9 seconds per command for large models) and higher per-session memory requirements (Malhotra, 1 Sep 2025).

- Hallucination and Consistency Management: Even with stateful prompting, LLMs may generate outputs incongruent with simulated file systems. Implementations like LLMHoney address this by grounding LLM prompts with up-to-date state and deferring to cached responses whenever possible (Malhotra, 1 Sep 2025).

- Detection Risks: Skilled adversaries may discover the deceptive nature of the system over extended interaction or by probing for behavioral anomalies (McKee et al., 2023).
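
One way to detect and contain such inconsistencies is to check a model's reply against the virtual filesystem before returning it. The Python sketch below does this only for plain `ls [dir]` commands, and it is not the mechanism described for LLMHoney. The `VFS` contents and function names are hypothetical. The fallback listing uses Python's default sort rather than the locale-aware ordering of real `ls`, and file names containing spaces are not handled. The same per-reply verdicts yield a simple hallucination-rate estimate of the kind reported in the metrics table.

```python
import posixpath
from typing import Optional

# Hypothetical virtual filesystem: directory path -> names it contains.
VFS = {
    "/root": {"notes.txt", "backup"},
    "/root/backup": {"db.sql"},
}


def ls_target(cwd: str, command: str) -> Optional[str]:
    """Directory referenced by a plain `ls [dir]`, or None if not handled."""
    parts = command.split()
    if not parts or parts[0] != "ls" or len(parts) > 2:
        return None
    if len(parts) == 2 and parts[1].startswith("-"):
        return None  # flags such as -la change the output format
    arg = parts[1] if len(parts) == 2 else "."
    return posixpath.normpath(posixpath.join(cwd, arg))


def check_ls(vfs, cwd, command, model_output):
    """Return (verdict, output) for a model's reply to an `ls` command.

    verdict is True (consistent), False (hallucinated; output replaced by a
    listing rendered from state), or None (not verifiable by this check).
    """
    target = ls_target(cwd, command)
    if target is None or target not in vfs:
        return None, model_output
    expected = vfs[target]
    if set(model_output.split()) == expected:
        return True, model_output
    return False, "  ".join(sorted(expected))


def hallucination_rate(verdicts) -> float:
    """Share of verifiable replies that contradicted the simulated state."""
    checked = [v for v in verdicts if v is not None]
    return sum(1 for v in checked if not v) / len(checked) if checked else 0.0
```

Replacing an inconsistent reply with a state-derived listing trades some stylistic variety for consistency, which matters because contradictions between commands are exactly the kind of behavioral anomaly a probing adversary looks for.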

Ongoing research focuses on mitigating these risks via better prompt engineering, multi-level interaction, model selection, and context management.

7. Applications, Impact, and Directions for Future Research

Conversational honeypots have immediate relevance across several domains:

- Enterprise Network Defense: Integration into SOAR or SDN-orchestrated environments (e.g., HoneyDOC) achieves scalable, dynamic deception covering multiple VLANs and attack surface areas (Bartwal et al., 2022, Fan et al., 9 Feb 2024).

- Cloud and SSH Endpoint Security: Fine-tuned LLMs deployed as SSH servers can gather attacker TTPs in realistic Linux environments (Malhotra, 1 Sep 2025, Otal et al., 12 Sep 2024).

- IoT Security: Transformer-based and RL-driven honeypots like AIIPot substantially improve attack session capture rates and dwell time in IoT ecosystems (Mfogo et al., 2023).

- Defensive ML/NLP Systems: Watermarked and honeypot-enhanced models (e.g., HoneyModels, backdoor-resilient PLMs) provide trapdoor-based detection for adversarial machine learning threats (Abdou et al., 2022, Tang et al., 2023).

- Security Analytics: Prolonged, high-depth interactions yield superior threat intelligence for incident response, attacker profiling, and vulnerability research.

Emerging research areas include adaptive prompt compression for context scaling, improved multimodal deception, integration with real-time threat detection pipelines, and fine-grained forensic analysis leveraging the detailed conversational logs unique to this class of honeypots.

In conclusion, conversational honeypots synthesize advanced AI-driven interaction models with deception engineering, enabling dynamic, adaptive, and persistent engagement of sophisticated adversaries. Their architecture, supported by prompt management, stateful simulation, and a hybrid of deterministic and generative response mechanisms, delivers significantly enhanced deception quality, threat intelligence capture, and operational flexibility compared to traditional honeypot systems (Bartwal et al., 2022, McKee et al., 2023, Mfogo et al., 2023, Fan et al., 9 Feb 2024, Wang et al., 4 Jun 2024, Otal et al., 12 Sep 2024, Malhotra, 1 Sep 2025). The field continues to evolve rapidly as model capabilities, deployment practices, and attacker countermeasures coevolve.