- The paper introduces a closed-loop framework combining LLM-driven penetration testing with game-theoretic reasoning to compute Nash equilibria for cybersecurity operations.

- It evaluates Generative Cut-the-Rope (G-CTR) on bug bounty and CTF exercises, reporting up to 245× speedup and over 140× cost reduction relative to traditional manual threat modeling.

- The study shows that strategic digest injection significantly improves success rates and stabilizes agent behavior, setting a new benchmark for autonomous cyber defense.

Cybersecurity AI: Closed-Loop Game-Theoretic Guidance for Autonomous Attack and Defense

Overview

"Cybersecurity AI: A Game-Theoretic AI for Guiding Attack and Defense" (2601.05887) proposes and validates a closed-loop architecture for autonomous cybersecurity operations that fuses agentic LLM-driven penetration testing with a game-theoretic reasoning layer. At its core, the approach centers on Generative Cut-the-Rope (G-CTR): an algorithm that automatically extracts attack graphs from unstructured AI logs, computes Nash equilibria to derive optimal attacker/defender strategies, and injects actionable feedback directly into the planning context of AI agents. This yields strategy-aware agents that execute at machine speed but are systematically anchored to decisive paths and chokepoints.

Architectural and Algorithmic Innovations

The system architecture is a three-phase closed loop:

- Game-Theoretic AI Analysis with G-CTR: Attack graphs are synthesized from raw security logs (i.e., the recorded behavior of an LLM-powered penetration-testing agent) rather than built by human subject-matter experts. These graphs are pruned, normalized, and then analyzed via the Cut-the-Rope (CTR) framework to compute Nash equilibria and the associated path and defense probabilities.

- Strategic Interpretation (Digest Generation): The equilibrium is algorithmically processed into concise digests summarizing optimal paths, bottlenecks, and critical nodes. This digest can be generated by deterministic rules or, more effectively, via LLM-based natural-language synthesis conditioned on the equilibrium data.

- Strategy-Guided Agent Execution: The digest is injected into the system prompt of subsequent LLM-agent planning episodes. Agents thus remain tightly guided by the dynamic game-theoretic context, with feedback updated every n interactions (typically n=5).
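The refresh cadence of the third phase can be illustrated with a minimal sketch. The `GuidedAgent` class, the `rule_based_digest` helper, the `fake_analysis` stand-in, and the prompt layout are hypothetical names chosen for illustration, and the equilibrium numbers are invented; only the refresh interval n=5 comes from the text above.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

REFRESH_EVERY = 5  # n = 5, the typical refresh interval cited above


def rule_based_digest(path_probs: Dict[str, float],
                      defense_probs: Dict[str, float]) -> str:
    """Deterministic digest: name the likeliest path and the top chokepoint."""
    top_path = max(path_probs, key=path_probs.get)
    top_node = max(defense_probs, key=defense_probs.get)
    return (f"Most likely attack path: {top_path} "
            f"(p={path_probs[top_path]:.2f}). "
            f"Key chokepoint: {top_node} "
            f"(defense weight={defense_probs[top_node]:.2f}).")


@dataclass
class GuidedAgent:
    base_prompt: str
    make_digest: Callable[[List[str]], str]
    logs: List[str] = field(default_factory=list)
    digest: str = ""
    refreshes: int = 0

    def system_prompt(self) -> str:
        """Base prompt plus the current digest, if one has been computed."""
        if not self.digest:
            return self.base_prompt
        return f"{self.base_prompt}\n\n[Strategic digest]\n{self.digest}"

    def record(self, log_line: str) -> None:
        """Store one interaction; recompute the digest every n-th interaction."""
        self.logs.append(log_line)
        if len(self.logs) % REFRESH_EVERY == 0:
            self.digest = self.make_digest(self.logs)
            self.refreshes += 1


def fake_analysis(logs: List[str]) -> str:
    # Stand-in for graph extraction plus equilibrium computation.
    # The probabilities below are invented for illustration.
    return rule_based_digest({"web -> db": 0.7, "web -> ssh": 0.3},
                             {"db": 0.6, "ssh": 0.4})


agent = GuidedAgent("You are a penetration-testing agent.", fake_analysis)
for i in range(12):
    agent.record(f"interaction {i}")

print(agent.refreshes)
print(agent.system_prompt())
```

In this sketch the digest is produced by fixed rules; the text notes that an LLM-generated digest conditioned on the same equilibrium data works better in practice, and such a generator could be passed in as `make_digest` instead.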

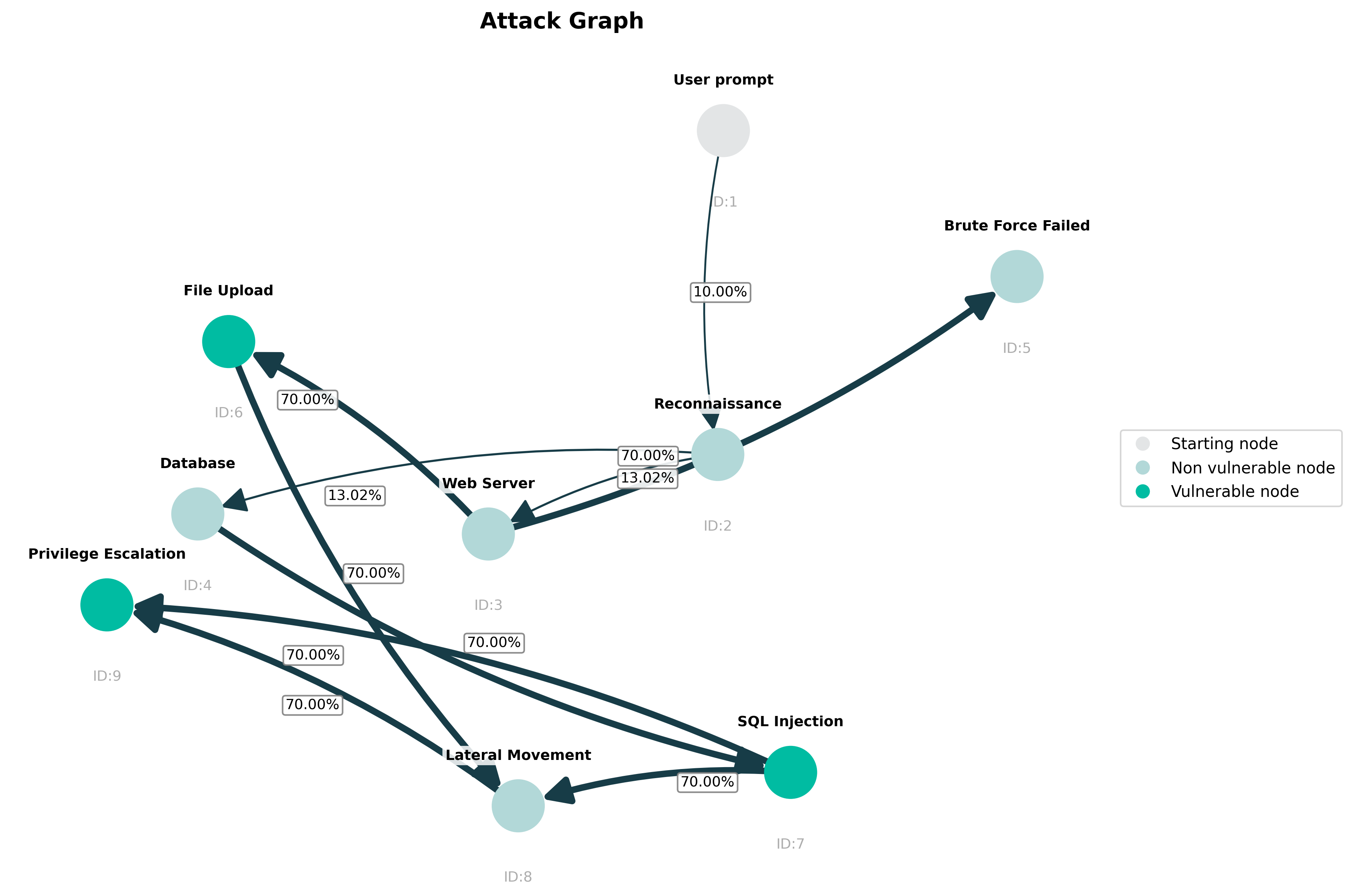

Figure 1: Attack graph example generated via LLM-based extraction, capturing progression from entry point through intermediate and vulnerability nodes with context annotations.

Figure 1 concretizes G-CTR's graph extraction: nodes encode AI-observed artifacts (e.g., services, domains, vulnerabilities) while edges reflect feasible chains discovered during agent operations.
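As a rough illustration of the structure described for Figure 1, an attack graph can be held as an adjacency mapping and its candidate attack chains enumerated. The node labels and the `enumerate_paths` helper below are hypothetical; they are not taken from the paper or from the figure.

```python
from typing import Dict, List

# Hypothetical attack graph: node -> successor nodes. Labels are invented.
ATTACK_GRAPH: Dict[str, List[str]] = {
    "entry": ["web_service", "ssh_service"],
    "web_service": ["sqli_vuln", "admin_panel"],
    "ssh_service": ["weak_credentials"],
    "sqli_vuln": ["database"],
    "admin_panel": ["database"],
    "weak_credentials": ["database"],
    "database": [],
}


def enumerate_paths(graph: Dict[str, List[str]],
                    source: str, target: str) -> List[List[str]]:
    """Return all simple paths from source to target using an iterative DFS."""
    paths: List[List[str]] = []
    stack: List[List[str]] = [[source]]
    while stack:
        path = stack.pop()
        node = path[-1]
        if node == target:
            paths.append(path)
            continue
        for nxt in graph.get(node, []):
            if nxt not in path:  # never revisit a node, so cycles are skipped
                stack.append(path + [nxt])
    return sorted(paths)


paths = enumerate_paths(ATTACK_GRAPH, "entry", "database")
for p in paths:
    print(" -> ".join(p))
```

Enumerated paths of this kind are the attacker's pure strategies in a path-based security game, which is why graph extraction precedes the equilibrium step.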

Empirical Validation and Numerical Results

Quantitative experiments span five real-world bug bounty scenarios plus cyber-range CTFs. Across these, LLM-inferred digests conditioned on Nash equilibrium statistics outperform static rule-based interpretations, yielding higher practical exploit rates and more consistent agent behavior.

Multi-Agent Attack/Defense Orchestration

The closed-loop paradigm naturally supports adversarial "purple" teaming: both attacker and defender agents are coordinated via a shared (or separately inferred) G-CTR context. Five team compositions are compared:

- No guidance (baseline): LLM agent with no strategic overlay.

- Red G-CTR: Only attacker guided.

- Blue G-CTR: Only defender guided.

- Purple G-CTR: Both agents guided with isolated attack graphs.

- Purple G-CTR (merged): Both agents share a single attack graph and context.

Figure 3: Cowsay challenge results—multi-agent attack/defense performance across strategic guidance modes, highlighting superiority of merged purple G-CTR in adversarial competition.

In competitive A/D CTFs (cowsay, pingpong), merged purple G-CTR configurations attain win/loss ratios of 1.8:1 to 3.7:1 over LLM-only and independent-strategy teams. This configuration achieves a qualitatively higher level of agentic collaboration, compressing variance and sustaining high win rates in adversarial, time-limited settings.

Theoretical and Practical Implications

G-CTR demonstrates that generative, LLM-driven extraction of operational context can replace manual threat modeling as the substrate for game-theoretic cybersecurity analysis. Unlike classical approaches that decouple automation (agent execution) from strategic reasoning (static graph-based risk calculation), G-CTR unifies both into a single, self-reinforcing loop. This has consequences for both practice and theory.

From a theoretical perspective, the work substantiates that closed-loop, multi-agent guidance architectures can be formalized with generic security games (in this case, CTR with effort-aware scoring) and solved efficiently at the scale and cadence of LLM-driven security automation.
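To make the idea of solving a security game concrete, the sketch below approximates the equilibrium of a deliberately simplified zero-sum game: the attacker picks one path, the defender inspects one node, and the attacker scores 1 only if the path avoids that node. This is not the CTR model or its effort-aware scoring, and fictitious play is a textbook method swapped in for illustration, not the solver the paper uses. The paths, node labels, and function name are assumptions.

```python
from typing import Dict, List, Tuple

# Hypothetical attack paths (intermediate nodes only); labels are invented.
PATHS: Dict[str, List[str]] = {
    "A": ["web_service", "sqli_vuln"],
    "B": ["web_service", "admin_panel"],
    "C": ["ssh_service", "weak_credentials"],
}
NODES = ["web_service", "sqli_vuln", "admin_panel",
         "ssh_service", "weak_credentials"]

# Attacker payoff: 1 if the chosen path avoids the inspected node, else 0.
PAYOFF = [[0 if node in path else 1 for node in NODES]
          for path in PATHS.values()]


def fictitious_play(payoff: List[List[int]], rounds: int = 20000
                    ) -> Tuple[List[float], List[float], float, float]:
    """Approximate a zero-sum equilibrium by repeated best responses."""
    n_rows, n_cols = len(payoff), len(payoff[0])
    row_counts = [0] * n_rows  # how often the attacker played each path
    col_counts = [0] * n_cols  # how often the defender inspected each node
    for _ in range(rounds):
        # Both players best-respond to the opponent's empirical history.
        row_scores = [sum(payoff[r][c] * col_counts[c] for c in range(n_cols))
                      for r in range(n_rows)]
        col_scores = [sum(payoff[r][c] * row_counts[r] for r in range(n_rows))
                      for c in range(n_cols)]
        row_counts[max(range(n_rows), key=row_scores.__getitem__)] += 1
        col_counts[min(range(n_cols), key=col_scores.__getitem__)] += 1
    attacker = [k / rounds for k in row_counts]
    defender = [k / rounds for k in col_counts]
    # Payoff each empirical mix guarantees; the game value lies in between.
    lower = min(sum(attacker[r] * payoff[r][c] for r in range(n_rows))
                for c in range(n_cols))
    upper = max(sum(defender[c] * payoff[r][c] for c in range(n_cols))
                for r in range(n_rows))
    return attacker, defender, lower, upper


attacker, defender, lower, upper = fictitious_play(PAYOFF)
print("attacker mix:", dict(zip(PATHS, attacker)))
print("defender mix:", dict(zip(NODES, defender)))
print("value bounds:", lower, upper)
```

In this toy game `web_service` sits on two of the three paths, so the defender's mix concentrates on it and on one node of the remaining path, and the attacker's evasion probability settles near one half. The same pattern, where shared nodes attract defensive weight, is what the digests surface as chokepoints.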

Limitations and Prospects for Future Research

Despite substantial advantages, several limitations persist:

- Node granularity and vulnerability labelling remain dependent on the extraction LLM’s semantic fidelity. Hallucination suppression is not absolute, and some complex flows are oversimplified by the fastest models.

- The effort-based scoring mechanism substitutes probabilistic edge weights with normalized heuristics (token counts, message distances, cost proxies). Extensions involving adaptive scoring or richer semantics could further improve practical utility.

- Current integration focuses primarily on LLM planning layers; deeper coupling with external knowledge bases or sensor data could mitigate context drift on longer engagements.
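The second limitation can be made concrete with a small sketch of heuristic edge scoring. The aggregation rule below (min-max normalization of each metric, then an unweighted mean) is an assumption chosen for illustration and is not the paper's formula; the edge labels, metric names, and numbers are likewise invented.

```python
from typing import Dict, List, Tuple

Edge = Tuple[str, str]

# Hypothetical per-edge observations taken from agent logs (values invented).
RAW_METRICS: Dict[Edge, Dict[str, float]] = {
    ("entry", "web_service"): {"tokens": 1000, "messages": 2, "cost_cents": 1},
    ("web_service", "sqli_vuln"): {"tokens": 4000, "messages": 6, "cost_cents": 5},
    ("sqli_vuln", "database"): {"tokens": 7000, "messages": 10, "cost_cents": 9},
}


def min_max(values: List[float]) -> List[float]:
    """Rescale values to [0, 1]; a constant list maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def effort_scores(raw: Dict[Edge, Dict[str, float]]) -> Dict[Edge, float]:
    """Average the normalized metrics of each edge into one effort score."""
    edges = list(raw)
    metrics = sorted({name for stats in raw.values() for name in stats})
    normalized = {m: min_max([raw[e][m] for e in edges]) for m in metrics}
    return {
        e: sum(normalized[m][i] for m in metrics) / len(metrics)
        for i, e in enumerate(edges)
    }


scores = effort_scores(RAW_METRICS)
for edge, score in scores.items():
    print(edge, round(score, 2))
```

Because every metric is rescaled relative to the other edges in the same graph, scores of this kind are only comparable within one engagement, which is one reason adaptive or semantically richer scoring is listed as a possible extension.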

Promising future directions include adversarial robustness exercises versus human red teams, expansion to agent populations with stochastic policy variation for creative attack synthesis, and extensions toward other security game families with more complex utility interactions.

Conclusion

This work delivers a scalable, performant architecture for AI cybersecurity agents that meaningfully integrates LLM-driven automation with rigorous game-theoretic reasoning. Empirically, embedding Nash-equilibrium-guided feedback into security AI agents yields multi-fold improvements in exploit discovery rate, resource efficiency, and tactical consistency. The proposed framework operationalizes principles of optimal play in dynamic, adversarial cyber environments and sets a new standard for closed-loop, autonomous security orchestration.

The framework's reproducibility (via the open-source CAI platform), reported efficiency gains, and results in adversarial competitions position it as a reference implementation for future work in agentic cybersecurity intelligence, laying the groundwork for strategic, accountable, and explainable orchestration of autonomous cyber-operations at scale.