- The paper introduces a predictive framework that adapts inference strategies to meet strict time budgets in safety-critical applications.

- It employs fine-grained response length prediction and analytical execution time estimation to dynamically configure model runtime settings.

- Empirical evaluations show sub-2% MAPE in execution time estimation and improved response performance compared to static baseline approaches.

TimeBill: Time-Budgeted Inference for LLMs

Introduction and Motivation

The deployment of LLMs in time-sensitive applications—robotics, autonomous driving, control systems, and industrial automation—requires not only accurate linguistic responses but also adherence to stringent time budgets for decision-making and safety-critical operations. Standard auto-regressive generation mechanisms in LLMs introduce significant uncertainty in inference latencies, compounded by input-dependent and model-specific response lengths. Existing approaches leveraging offline compression (quantization, pruning) and online optimization (KV cache eviction/quantization) are either insensitive to dynamic time budgets or suboptimal in adapting runtime configurations, failing to guarantee timely, high-quality responses across heterogeneous tasks.

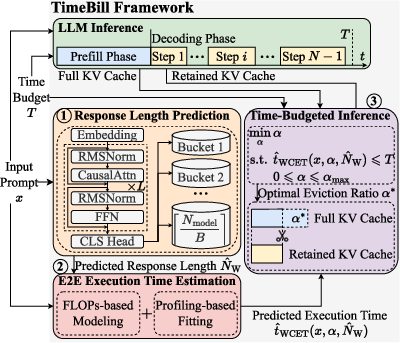

The "TimeBill" framework formalizes and addresses these shortcomings by proposing a predictive, adaptive mechanism that achieves a Pareto-optimal balance between inference latency and response performance. TimeBill introduces fine-grained response length prediction and analytical execution time estimation, enabling principled runtime configuration of KV cache eviction ratios in accordance with user-specified time budgets.

Figure 1: An example of different inference strategies. The vanilla inference may overrun and miss the deadline, resulting in incomplete output. The As-Fast-As-Possible (AFAP) strategy will degrade the response performance, while time-budgeted inference improves the response performance under timing constraints.

Framework Overview

TimeBill maps the time-budgeted inference problem onto a constrained optimization over LLM runtime configurations: maximize the response performance metric $M(\cdot)$ while ensuring that end-to-end inference finishes within the time budget $T$ and produces at most $N_{\max}$ tokens. The framework decomposes inference into a prefill phase and a decoding phase; for each, execution time is characterized as a function of input length, model structure, and KV cache status.
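Written out, the problem above can be sketched as follows. This is our notation, not necessarily the paper's exact formulation: the decision variable is assumed to be the KV cache eviction ratio $\alpha$ (bounded by $\alpha_{\max}$), $\hat{\mathbf{y}}$ is the response generated under $\alpha$, and $N$ is its length in tokens.

```latex
\begin{aligned}
\max_{\alpha}\quad & M(\hat{\mathbf{y}}) \\
\text{s.t.}\quad & t_{\text{e2e}} = t_{\text{prefill-phase}} + \sum_{i=1}^{N} t_{\text{decoding-step},\,i} \le T, \\
& N \le N_{\max}, \qquad 0 \le \alpha \le \alpha_{\max}
\end{aligned}
```

Because $N$ is unknown before decoding finishes, the constraint can only be checked with a predicted length, which is what motivates the response length predictor and execution time estimator.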

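Key challenges addressed: predicting how many tokens the target LLM will generate for a given prompt, estimating the resulting end-to-end execution time, and choosing a runtime configuration that meets the time budget.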

Predictive Mechanisms: Response Length Predictor and Execution Time Estimator

Fine-Grained Response Length Predictor (RLP)

The RLP, built on a small language model (SLM) and formulated as bucketed classification, predicts the response length for a given prompt at finer granularity than prior predictors. Knowledge distillation aligns the predictor with the target LLM's behavior using empirical training pairs $(\mathbf{x}_j, N_j)$, where $\mathbf{x}_j$ is a prompt and $N_j$ is the response length observed from the target LLM; the classification label is the index of the bucket containing $N_j$. Unlike BERT-based proxies, the SLM can process longer prompts and achieves lower MAE and RMSE, especially as the number of buckets (granularity) increases.
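The labeling step for bucketed classification can be sketched as below. The bucket size, the number of buckets, and the choice to map a predicted bucket back to its upper edge are all illustrative assumptions, and the function names are hypothetical; the source does not specify these details.

```python
from typing import Iterable, List, Tuple


def length_to_bucket(n_tokens: int, bucket_size: int, num_buckets: int) -> int:
    """Map an observed response length N_j to a class label (bucket index).

    Lengths beyond the last bucket are clamped into it.
    """
    if n_tokens < 0 or bucket_size <= 0 or num_buckets <= 0:
        raise ValueError("length must be >= 0; bucket_size and num_buckets must be > 0")
    return min(n_tokens // bucket_size, num_buckets - 1)


def bucket_to_length(bucket: int, bucket_size: int) -> int:
    """Turn a predicted bucket back into a token count.

    Uses the bucket's upper edge, a conservative choice for deadline planning.
    """
    return (bucket + 1) * bucket_size


def make_training_labels(
    samples: Iterable[Tuple[str, int]], bucket_size: int, num_buckets: int
) -> List[Tuple[str, int]]:
    """Convert (prompt x_j, response length N_j) pairs into (prompt, bucket label) pairs."""
    return [(x, length_to_bucket(n, bucket_size, num_buckets)) for x, n in samples]


if __name__ == "__main__":
    data = [("Summarize this report.", 130), ("Answer yes or no.", 3), ("Write an essay.", 5000)]
    print(make_training_labels(data, bucket_size=64, num_buckets=16))
```

Shrinking `bucket_size` while raising `num_buckets` gives the finer granularity the text refers to, at the cost of a harder classification problem for the SLM.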

Figure 3: The overview of the proposed fine-grained response length predictor (RLP).

Workload-Guided Execution Time Estimator (ETE)

TimeBill’s ETE analytically models the FLOPs of each component (Norm, CausalAttention, FeedForward, LMHead) and derives execution time as quadratic and linear terms in the input length and the KV cache length. The hardware-dependent coefficients are fitted from profiling data, so execution time can be predicted accurately at runtime. For a pessimistic worst-case execution time (WCET) estimate, the ETE scales the predicted response length by a configurable factor $k$, giving a conservative bound suited to hard real-time settings.
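A minimal sketch of such an estimator follows, assuming prefill time is quadratic in the prompt length $N_x$, one decoding step is linear in the current KV cache length, a fraction $\alpha$ of the prompt's KV cache is evicted after prefill, and the cache then grows by one entry per step. The function names, the synthetic profiling data, and the cap of the pessimistic length at $N_{\max}$ are our assumptions, not the paper's implementation. Fitting uses NumPy's `np.polyfit`.

```python
import math

import numpy as np


def fit_prefill(prompt_lens, times):
    """Least-squares fit of t_prefill(N_x) = a*N_x**2 + b*N_x + c; returns (a, b, c)."""
    a, b, c = np.polyfit(prompt_lens, times, deg=2)
    return float(a), float(b), float(c)


def fit_decode_step(kv_lens, times):
    """Least-squares fit of t_step(L) = p*L + q for one decoding step; returns (p, q)."""
    p, q = np.polyfit(kv_lens, times, deg=1)
    return float(p), float(q)


def estimate_e2e(n_x, n_out, alpha, prefill_coef, step_coef):
    """Estimated end-to-end time for an n_x-token prompt and n_out generated tokens.

    Assumes a fraction alpha of the prompt's KV cache is evicted after prefill,
    and that the cache then grows by one entry per decoding step.
    """
    a, b, c = prefill_coef
    p, q = step_coef
    t_prefill = a * n_x**2 + b * n_x + c
    kv0 = (1.0 - alpha) * n_x  # KV cache length at the first decoding step
    # Closed form of the sum over i = 0..n_out-1 of (p * (kv0 + i) + q)
    t_decode = n_out * (p * kv0 + q) + p * n_out * (n_out - 1) / 2
    return t_prefill + t_decode


def estimate_wcet(n_x, n_pred, k, n_max, alpha, prefill_coef, step_coef):
    """Pessimistic estimate: inflate the predicted length by k, capped at n_max (assumed)."""
    n_worst = min(math.ceil(k * n_pred), n_max)
    return estimate_e2e(n_x, n_worst, alpha, prefill_coef, step_coef)


if __name__ == "__main__":
    # Synthetic "profiling" data; real coefficients would come from measurements.
    lens = np.array([256, 512, 1024, 2048, 4096], dtype=float)
    prefill_coef = fit_prefill(lens, 1e-8 * lens**2 + 5e-5 * lens + 0.02)
    kv = np.array([512, 1024, 2048, 4096], dtype=float)
    step_coef = fit_decode_step(kv, 2e-6 * kv + 0.025)
    print(estimate_e2e(2000, 300, 0.5, prefill_coef, step_coef))
    print(estimate_wcet(2000, 300, 5, 2048, 0.5, prefill_coef, step_coef))
```

The closed-form sum keeps the estimate O(1) per call, which matters if it is evaluated many times while searching over eviction ratios.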

Figure 4: The timeline of TimeBill, where incoming arrows represent inputs (e.g., $\mathbf{x}_1, N_{\mathbf{x}_1}$), and outgoing arrows represent outputs (e.g., $\hat{\mathbf{y}}_1, \alpha_1^*$).

Figure 5: Fitted curves for estimating $\hat{t}_{\text{prefill-phase}}$ and $\hat{t}_{\text{decoding-step}}$.

Figure 6: The performance of estimating $\hat{t}_{\text{e2e}}$ and $\hat{t}_{\text{WCET}}$.

Time-Budgeted Efficient Inference: Optimization and System Deployment

The framework solves for the minimal KV cache eviction ratio $\alpha^*$ that lets each job meet its time budget $T$, subject to completion-rate constraints and an upper bound $\alpha_{\max}$ on the eviction ratio (the maximal allowed degradation). The smallest feasible ratio is preferred because evicting more of the KV cache shortens decoding but harms response quality. The optimization uses the outputs of the RLP and ETE, which run in parallel with the target model's prefill phase through CPU/GPU co-processing. Prompt compression can optionally be applied to further reduce input overhead, helping to avoid deadline violations proactively.
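Selecting $\alpha^*$ can be sketched as a search over candidate ratios. Here `estimate_time` is a hypothetical callable standing in for the ETE's (WCET) time estimate as a function of $\alpha$; we assume it is non-increasing in $\alpha$, which makes binary search over a discrete grid valid. The grid step and the function name are our choices, not the paper's.

```python
from typing import Callable, Optional


def min_eviction_ratio(
    estimate_time: Callable[[float], float],
    budget: float,
    alpha_max: float,
    step: float = 0.01,
) -> Optional[float]:
    """Smallest alpha on a grid over [0, alpha_max] with estimate_time(alpha) <= budget.

    Assumes estimate_time is non-increasing in alpha (evicting more of the KV cache
    never slows decoding), which makes binary search over the grid valid.
    Returns None if even the largest grid value cannot meet the budget.
    """
    n = int(alpha_max / step + 1e-9)  # number of grid steps; guards against float round-off
    grid = [round(i * step, 12) for i in range(n + 1)]
    if estimate_time(grid[-1]) > budget:
        return None  # infeasible even at the largest allowed eviction ratio
    lo, hi = 0, n  # invariant: grid[hi] meets the budget
    while lo < hi:
        mid = (lo + hi) // 2
        if estimate_time(grid[mid]) <= budget:
            hi = mid
        else:
            lo = mid + 1
    return grid[lo]


if __name__ == "__main__":
    # Toy time model: 1 s prefill plus 4 s of decoding that shrinks with the retained cache.
    toy = lambda alpha: 1.0 + 4.0 * (1.0 - alpha)
    print(min_eviction_ratio(toy, budget=3.01, alpha_max=0.9))
```

Returning `None` instead of silently clamping to `alpha_max` leaves the decision about an infeasible job (for example, whether to apply prompt compression) to the caller.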

Empirical Evaluation

Efficacy of RLP and ETE

Evaluation of Qwen2.5-7B-Instruct on LongBench shows that the fine-grained RLP outperforms the ProxyModel and S3 (BERT-based) predictors, with the lowest MAE and RMSE at the highest bucket granularity. The ETE achieves MAPEs below 2% for both prefill and decoding estimation, and its WCET estimates serve as reliable upper bounds, which is crucial for real-time guarantees.
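For reference, the error metrics cited above have the standard definitions sketched below (MAE and RMSE for predicted response lengths, MAPE for predicted execution times). The exact evaluation protocol in the paper may differ; the inputs in the example are made up.

```python
import math
from typing import Sequence


def _check(pred: Sequence[float], true: Sequence[float]) -> None:
    if len(pred) != len(true) or not true:
        raise ValueError("pred and true must be non-empty and of equal length")


def mae(pred: Sequence[float], true: Sequence[float]) -> float:
    """Mean absolute error, e.g. in tokens for response-length prediction."""
    _check(pred, true)
    return sum(abs(p - t) for p, t in zip(pred, true)) / len(true)


def rmse(pred: Sequence[float], true: Sequence[float]) -> float:
    """Root mean squared error; penalises large misses more heavily than MAE."""
    _check(pred, true)
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, true)) / len(true))


def mape(pred: Sequence[float], true: Sequence[float]) -> float:
    """Mean absolute percentage error in percent; true values must be non-zero."""
    _check(pred, true)
    return 100.0 * sum(abs((p - t) / t) for p, t in zip(pred, true)) / len(true)


if __name__ == "__main__":
    print(mae([110, 190], [100, 200]), rmse([110, 190], [100, 200]))
    print(mape([1.01, 1.98, 4.04], [1.0, 2.0, 4.0]))
```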

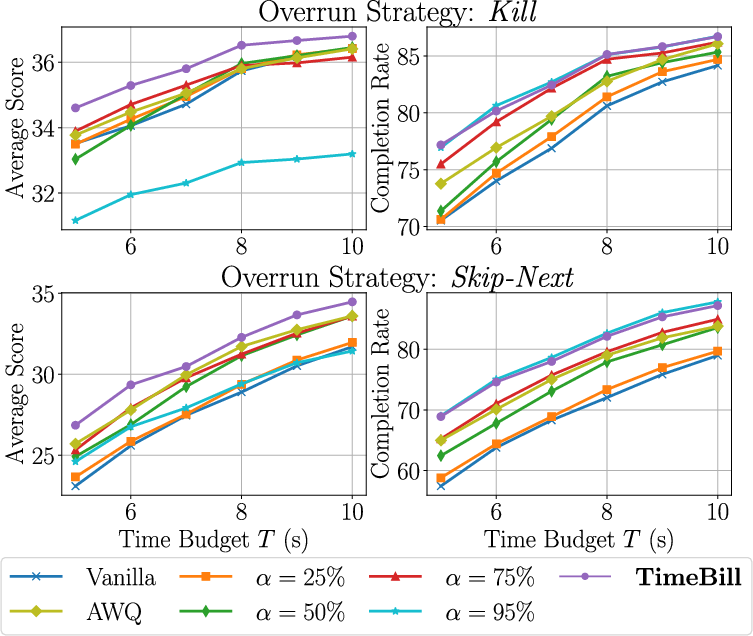

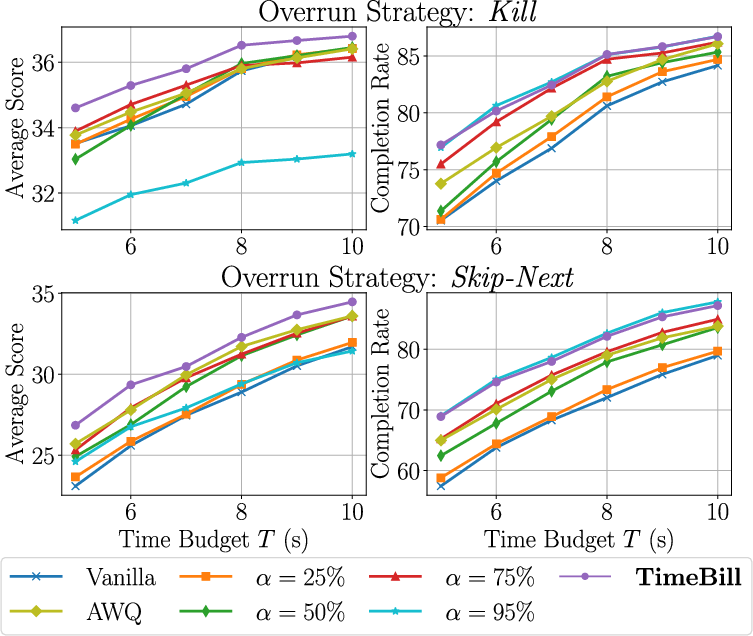

When benchmarked against baselines (vanilla inference, a fixed eviction ratio $\alpha$, and AWQ quantization) under the Kill and Skip-Next overrun strategies, TimeBill attains higher average response scores while maintaining competitive task completion rates, outperforming static and quantized approaches across a range of time budgets.
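The two overrun strategies are named but not defined here. Assuming their usual real-time scheduling meanings, a small simulation clarifies the difference: jobs are released every `period` seconds with a deadline equal to the period; under Kill a job still running at its deadline is aborted, and under Skip-Next an overrunning job runs to completion while every job released in the meantime is skipped. The function name and status labels are hypothetical, and how the paper scores late or skipped jobs is not stated in this summary.

```python
from typing import List


def simulate_overruns(exec_times: List[float], period: float, strategy: str) -> List[str]:
    """Per-job outcome for periodic jobs released every `period` seconds (deadline = period).

    "kill": a job still running at its deadline is aborted (output incomplete).
    "skip-next": an overrunning job runs to completion (late), and every job
    released while it is still running is skipped.
    """
    if strategy not in ("kill", "skip-next"):
        raise ValueError(f"unknown strategy: {strategy}")
    statuses: List[str] = []
    busy_until = 0.0  # time at which the accelerator becomes free
    for i, c in enumerate(exec_times):
        release = i * period
        if strategy == "skip-next" and release < busy_until:
            statuses.append("skipped")
            continue
        if c <= period:
            statuses.append("completed")
            busy_until = release + c
        elif strategy == "kill":
            statuses.append("killed")
            busy_until = release + period
        else:
            statuses.append("late")
            busy_until = release + c
    return statuses


if __name__ == "__main__":
    times = [3, 7, 4, 12, 2]
    print(simulate_overruns(times, 5, "kill"))
    print(simulate_overruns(times, 5, "skip-next"))
```

The sketch shows why overruns are costly under either policy: Kill discards the overrunning job's output, while Skip-Next keeps it but sacrifices the jobs that follow.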

Figure 7: The average scores and completion rates of different approaches under Kill and Skip-Next.

Sensitivity to Pessimistic Factor

Experiments varying the WCET pessimistic factor $k$ over $[1, 8]$ show that it must be tuned carefully. A moderate $k$ (e.g., 5) increases reliability (completion rate) without a substantial loss in average score, whereas excessive conservatism ($k > 5$) leads to an unnecessarily high $\alpha$, harming output fidelity.

Figure 8: The average scores and completion rates with different pessimistic factors k under the overrun strategy Kill, where the time budget T=5 s.

Implications, Applications, and Future Directions

TimeBill’s unified predictive and adaptive approach enables precise control over inference latency when deploying LLMs in time-critical systems. The framework is agnostic to the target LLM architecture, is compatible with offline compression and online quantization methods, and could potentially be extended to ensembles or multi-modal models. For future work, integrating reinforcement learning with online feedback might further optimize response-performance trade-offs under fluctuating load and mixed-criticality constraints. The modeling framework could also be extended to distributed setups or to richer hardware heterogeneity. Methodologically, theoretical bounds on WCET approximation error and joint optimization for multi-instance scheduling remain open problems.

Conclusion

TimeBill presents a formal, robust solution for time-budgeted LLM inference, integrating fine-grained response length prediction, analytical execution time estimation, and adaptive runtime configuration. Empirical results show that it attains higher average response scores than static and quantized baselines while maintaining competitive completion rates under time budgets, highlighting its practical value for AI-driven, safety-critical systems.