- The paper introduces an ambient-blocking optical sensor that segments contact pixels without requiring macroscopic deformation.

- It leverages a precise geometric arrangement and calibration to produce high-contrast images with near-black non-contact regions.

- Experimental results demonstrate robust performance across liquids, ultra-soft solids, and rigid materials for advanced robotic manipulation.

Introduction

The LightTact sensor establishes a new approach to visual-tactile sensing by enabling direct, optics-based detection of contact without requiring measurable macroscopic surface deformation. Unlike conventional vision-based tactile sensors (VBTSs), which infer contact from visible deformation of a soft surface, LightTact employs an ambient-blocking optical configuration that keeps light from both external and internal sources from illuminating non-contact pixels. This architecture yields raw images with near-black non-contact regions and a high-fidelity visual appearance for contact pixels, enabling accurate pixel-level contact segmentation across a wide range of materials (liquids, ultra-soft solids, and rigid objects) under unstructured environmental lighting. LightTact thus enables manipulation and perception tasks that depend on detecting extremely light contact, and it supports direct multimodal reasoning by vision-language models (VLMs).

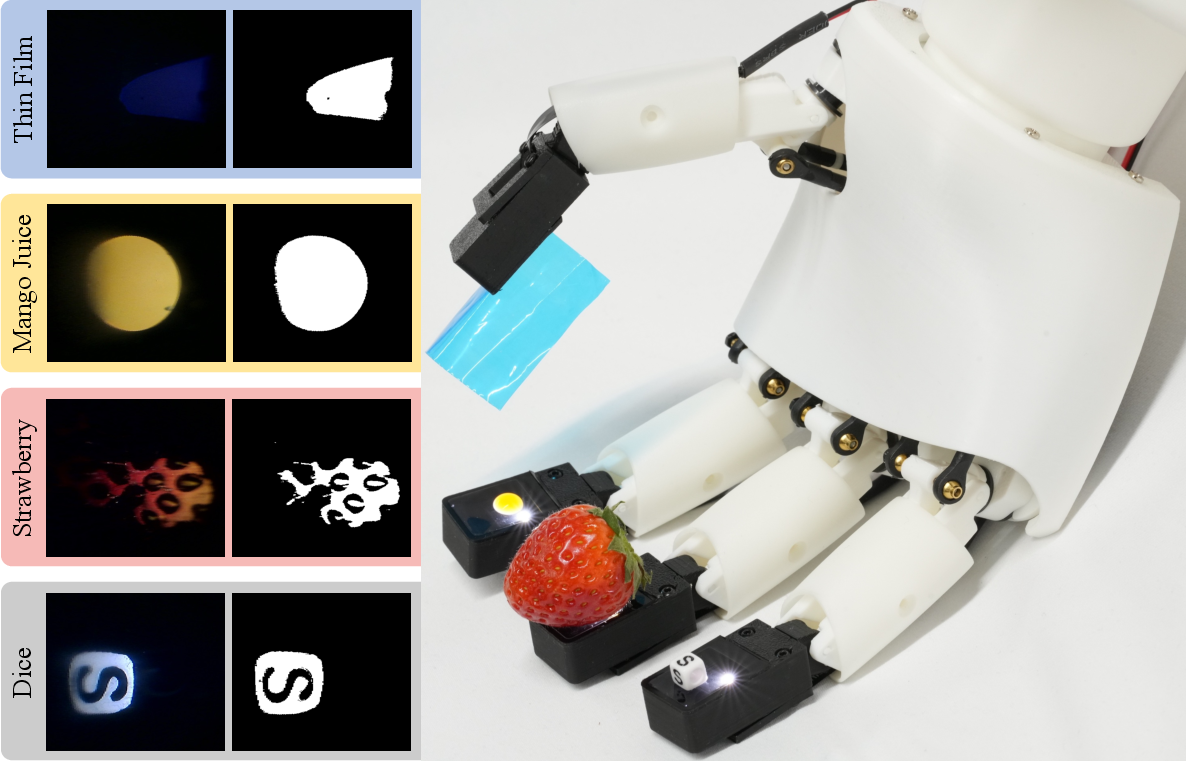

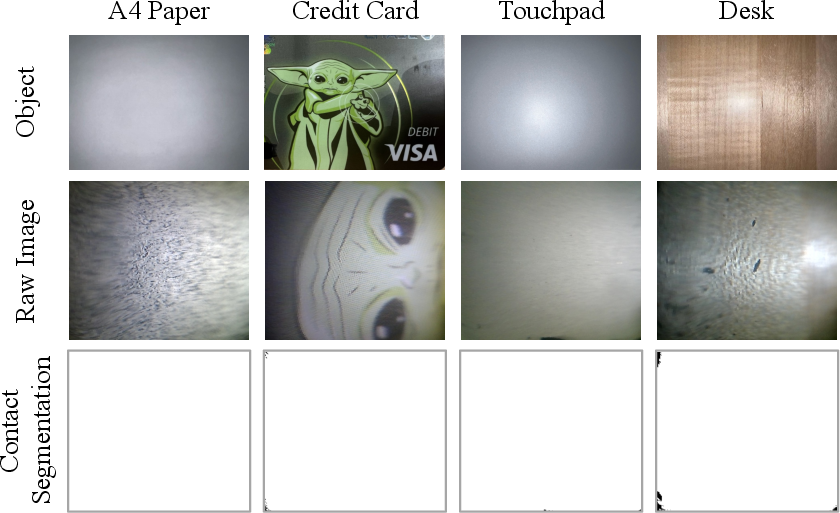

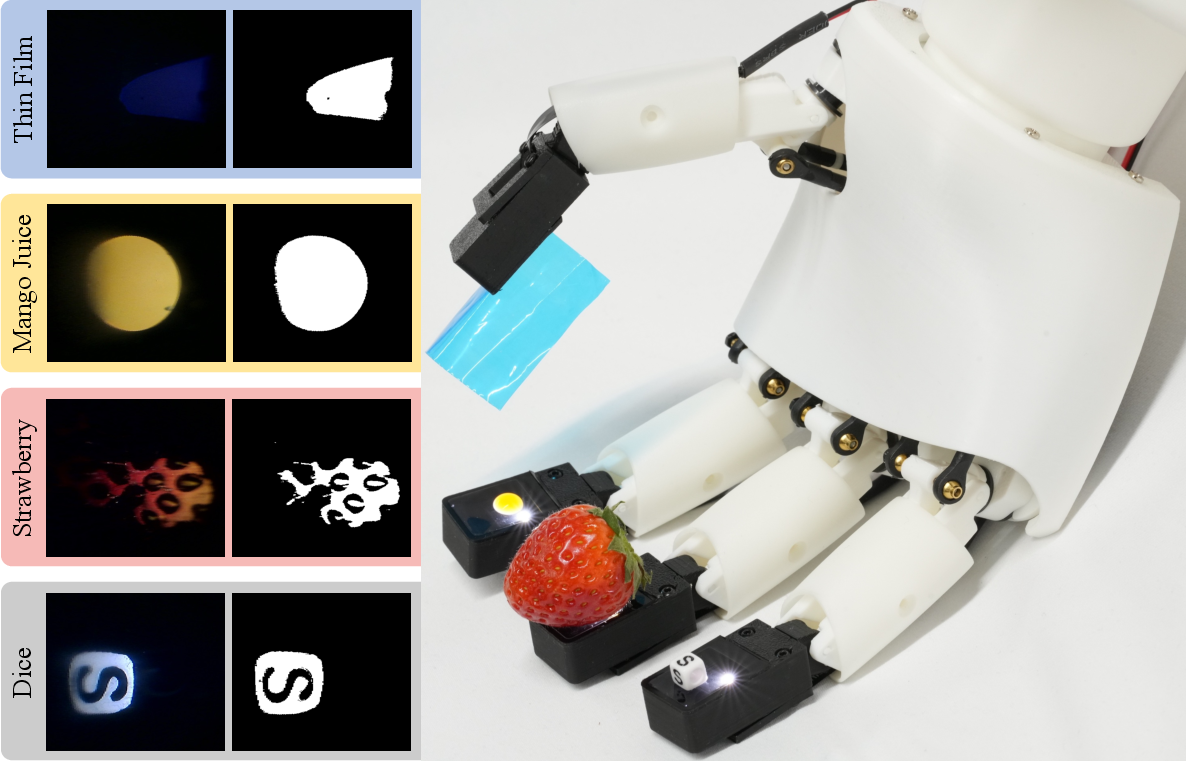

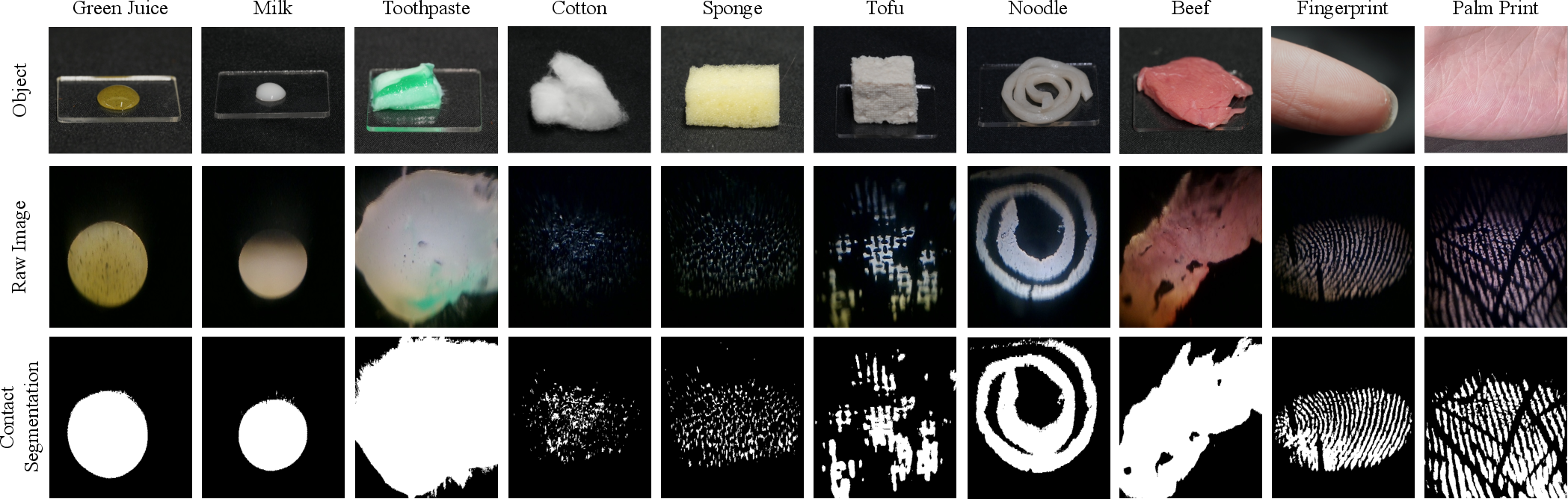

Figure 1: LightTact provides direct, pixel-level contact sensing across liquids, ultra-soft materials, and rigid objects, without requiring a minimum contact force. Its optical design produces high-contrast raw images in which non-contact pixels remain near-black, while contact pixels preserve the natural appearance of the contacting surface. LightTact is fingertip-sized and can be integrated into dexterous hands such as Amazing Hand.

Ambient-Blocking Optical Principle and Sensor Design

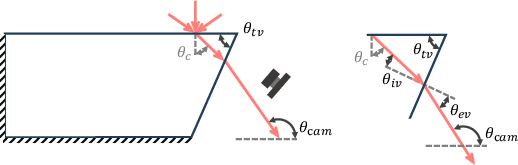

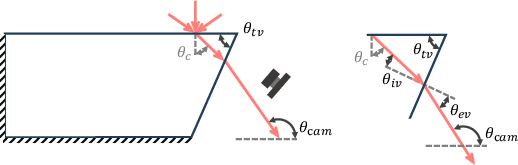

LightTact's optical layout exploits the geometric arrangement of the touching and viewing surfaces of a transparent medium, which is illuminated internally by an LED and viewed by a camera. Because the two surfaces are oriented at a nonparallel wedge angle (θtv), rays entering through non-contact regions undergo total internal reflection (TIR), which blocks both ambient and internal illumination from reaching the camera. Only light scattered diffusely at true contact passes through the viewing surface to the camera, sharply separating contact from non-contact regions in the resulting images. The operating range for θtv is derived analytically so that it satisfies both light isolation and effective transmission of contact-scattered light; the default configuration uses perpendicular surfaces (θtv ≈ 90°).

Figure 2: Optical layout and sensing principle of LightTact, demonstrating (a) component arrangement, (b) external light rejection at non-contact regions via TIR, (c) internal illumination suppression, and (d) diffuse transmission of contact scatter.
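The role of the wedge angle can be illustrated with the textbook prism condition. This is a minimal sketch under stated assumptions, not the paper's derivation: it uses a simplified 2D wedge, a single homogeneous medium surrounded by air, and an assumed refractive index of 1.49 (a common value for acrylic). In that model, ambient rays refracted in through a flat non-contact touching surface lie within the critical angle θc of its normal, so they reach the viewing surface at an incidence angle of at least θtv − θc and cannot exit when θtv > 2θc. The function names are hypothetical, and the upper bound on θtv (needed so that contact-scattered light still exits) is not modeled here.

```python
import math

# Assumed refractive index (typical for acrylic); not a value from the paper.
N_MEDIUM = 1.49


def critical_angle_deg(n_medium: float, n_outside: float = 1.0) -> float:
    """Critical angle in degrees for light travelling from the medium outward."""
    return math.degrees(math.asin(n_outside / n_medium))


def min_incidence_on_viewing_surface_deg(theta_tv_deg: float, n_medium: float) -> float:
    """Smallest incidence angle on the viewing surface for ambient rays.

    In a 2D wedge, a ray refracted in at angle r1 from the touching-surface
    normal meets the viewing surface at theta_tv - r1, and r1 <= theta_c.
    """
    return theta_tv_deg - critical_angle_deg(n_medium)


def ambient_is_blocked(theta_tv_deg: float, n_medium: float) -> bool:
    """True if every ambient ray is totally internally reflected at the viewing surface."""
    theta_c = critical_angle_deg(n_medium)
    return min_incidence_on_viewing_surface_deg(theta_tv_deg, n_medium) > theta_c


if __name__ == "__main__":
    for theta_tv in (60.0, 90.0):
        print(theta_tv, ambient_is_blocked(theta_tv, N_MEDIUM))
```

Under these assumptions the perpendicular default satisfies the condition, while a 60° wedge would let some ambient rays through the viewing surface.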

The structural assembly integrates a soft transparent gel and a rigid acrylic window for compliant interaction and optical stability, encased within a matte-black perimeter shell and auxiliary black gel that functions as a transition layer and light absorber. An obliquely oriented SMD LED and a wide-FOV compact camera are precisely positioned to satisfy the geometric constraints needed for robust light suppression.

Figure 3: Design of LightTact, showing assembled, exploded, and schematic views of the fingertip-scale visual-tactile sensor.

Gel layer fabrication uses dedicated molds and surface treatments to minimize internal reflection and to keep the optical path modular. The black gel and shell geometry block stray light consistently around the perimeter, which supports consistent contact segmentation in practical deployment.

Figure 4: Gel fabrication for LightTact, schematic illustration of casting steps for both transparent and black gel layers.

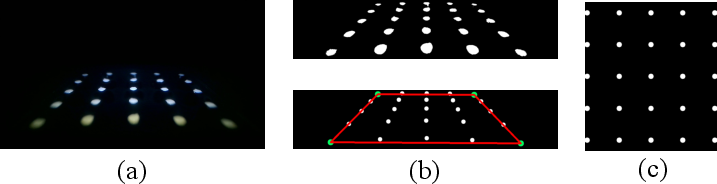

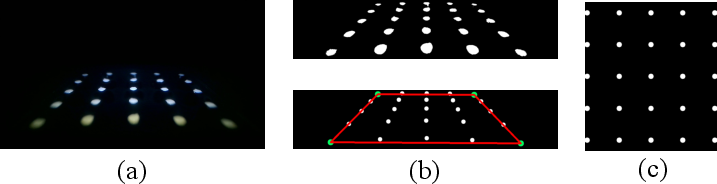

The LightTact segmentation algorithm exploits the inherently high contrast of the sensor output. A 5×5 array calibration tool first enables spatial rectification from camera pixels to the physical touching surface. The method then records a series of reference frames in the non-contact state and infers contact by thresholding per-pixel brightness differences from that reference, subject to multi-channel and color-consistency criteria.
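The reference-frame thresholding step can be sketched with NumPy as follows. This is an illustrative simplification, not the authors' implementation: the threshold values (`min_delta`, `k_sigma`), the `min_channels` rule used as a stand-in for the multi-channel criterion, and the function names are all assumptions, and the color-consistency criterion is omitted because the text does not specify it.

```python
import numpy as np


def build_reference(frames):
    """Per-pixel, per-channel mean and std over non-contact frames of shape (N, H, W, 3)."""
    stack = np.asarray(frames, dtype=np.float32)
    return stack.mean(axis=0), stack.std(axis=0)


def segment_contact(image, ref_mean, ref_std, min_delta=8.0, k_sigma=4.0, min_channels=1):
    """Boolean (H, W) mask of pixels that are brighter than the non-contact reference.

    A channel counts as "bright" when it exceeds the reference mean by more than
    max(min_delta, k_sigma * std). All three parameters are hypothetical defaults.
    """
    diff = np.asarray(image, dtype=np.float32) - ref_mean
    threshold = np.maximum(min_delta, k_sigma * ref_std)
    bright = diff > threshold
    # Stand-in for the multi-channel criterion: require enough bright channels.
    return bright.sum(axis=-1) >= min_channels


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    dark_frames = rng.integers(0, 3, size=(10, 4, 4, 3))  # near-black background
    mean, std = build_reference(dark_frames)
    frame = dark_frames[0].copy()
    frame[1, 2] = [120, 90, 60]  # one synthetic contact pixel
    print(segment_contact(frame, mean, std).astype(int))
```

Because non-contact pixels stay near black, a fixed margin above the reference is enough in this sketch; a sensor with a brighter or noisier background would need a more careful model.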

Figure 5: Camera calibration for LightTact, demonstrating imprint capture, segmentation, and spatial rectification for reliable pixel-contact mapping.
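One way to realize the pixel-to-surface rectification is a planar homography fitted to the 25 imprint centers. The sketch below assumes that model; the source does not state which mapping the authors use, and a curved or refracting optical path could require something richer. It uses the standard direct linear transform (DLT) with NumPy, and `fit_homography` and `rectify` are hypothetical helper names.

```python
import numpy as np


def fit_homography(pixels, surface_pts):
    """Fit a 3x3 homography mapping camera pixels (x, y) to surface points (u, v) via DLT."""
    pixels = np.asarray(pixels, dtype=np.float64)
    surface_pts = np.asarray(surface_pts, dtype=np.float64)
    rows = []
    for (x, y), (u, v) in zip(pixels, surface_pts):
        # Each correspondence gives two linear constraints on the 9 entries of H.
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows))
    h = vt[-1].reshape(3, 3)
    return h / h[2, 2]


def rectify(h, pixels):
    """Map an (N, 2) array of pixel coordinates to surface coordinates."""
    pixels = np.asarray(pixels, dtype=np.float64)
    homog = np.hstack([pixels, np.ones((len(pixels), 1))]) @ h.T
    return homog[:, :2] / homog[:, 2:3]
```

In use, `pixels` would hold the detected centers of the 5×5 imprints and `surface_pts` the known positions of the tool's features on the touching surface; the fitted matrix then maps any segmented contact pixel to physical coordinates.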

Quantitative evaluation under varying LED and ambient illuminance confirms that non-contact regions keep a low mean gray value (<3) with minimal standard deviation, even under extreme ambient exposure above 2000 lux. This indicates that the optical design maintains a consistently dark background.
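The dark-background check described above can be reproduced on any captured frame with a few lines of NumPy. This is a sketch under assumptions: gray value is taken as the plain average of the three channels on an 8-bit scale, which the source does not specify, and the helper names are hypothetical.

```python
import numpy as np


def background_stats(image_rgb, contact_mask=None):
    """Mean and standard deviation of gray values over non-contact pixels."""
    # Assumed gray conversion: unweighted channel average.
    gray = np.asarray(image_rgb, dtype=np.float64).mean(axis=-1)
    if contact_mask is not None:
        gray = gray[~np.asarray(contact_mask, dtype=bool)]
    return float(gray.mean()), float(gray.std())


def background_is_dark(image_rgb, contact_mask=None, max_mean_gray=3.0):
    """True if the mean non-contact gray value is below the reported bound of 3."""
    mean_gray, _ = background_stats(image_rgb, contact_mask)
    return mean_gray < max_mean_gray
```

Passing a contact mask restricts the statistics to non-contact pixels, so bright contact regions do not inflate the background estimate.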

Experimental Validation Across Materials and Manipulation Contexts

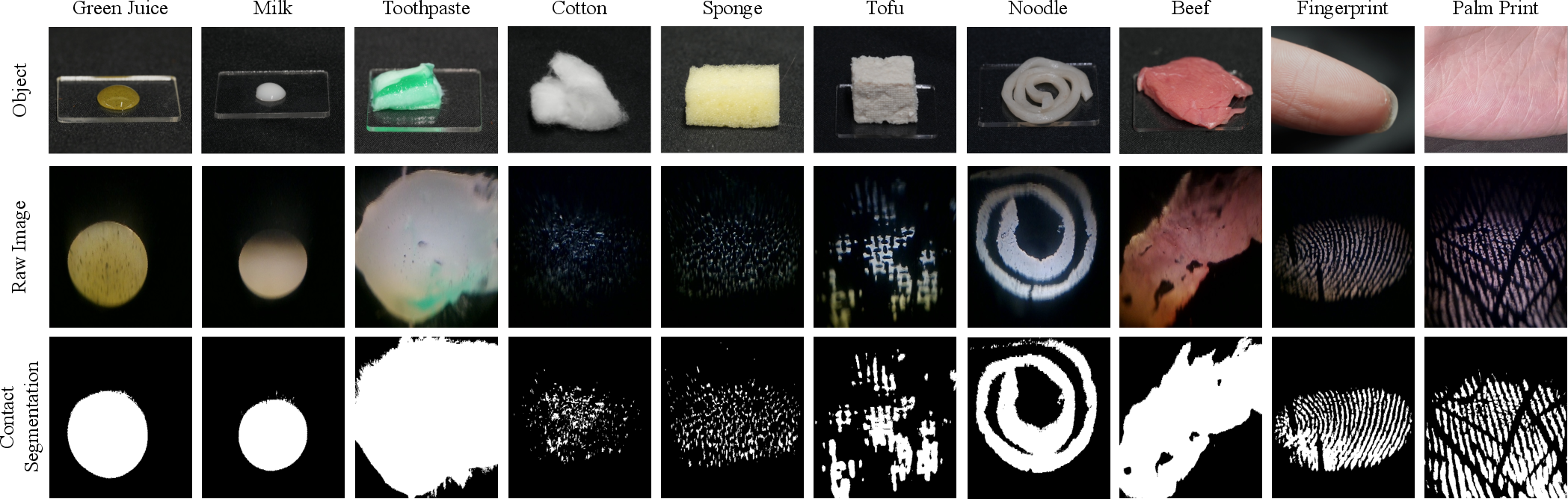

LightTact's performance was systematically benchmarked in scenarios featuring extremely light contact with liquids (e.g., water, milk, juice), semi-liquids (e.g., toothpaste, facial cream), ultra-soft solids (e.g., sponge, cotton, tofu, thin noodles, biological tissues), and rigid bodies with complex surface patterns. In all cases, LightTact provided robust contact segmentation with no minimum force threshold and preserved the native appearance of the contacting surfaces.

Figure 6: LightTact achieves robust contact segmentation with materials inducing negligible surface deformation, including liquids, semi-liquids, and ultra-soft solids.

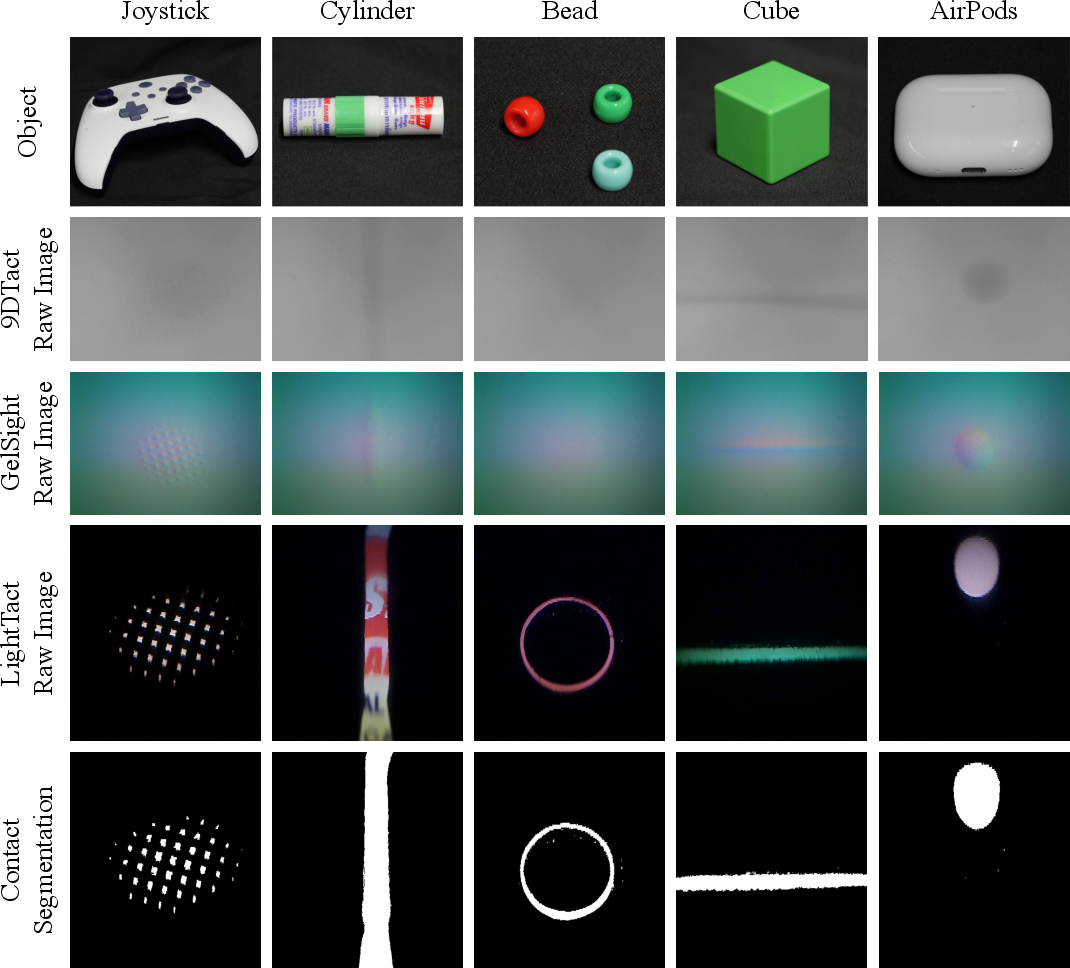

For rigid objects, the sensor maintains visual appearance and segmentation fidelity across both light and firm contacts.

Figure 7: LightTact reliably senses contact with rigid objects, maintaining natural surface appearance regardless of contact force.

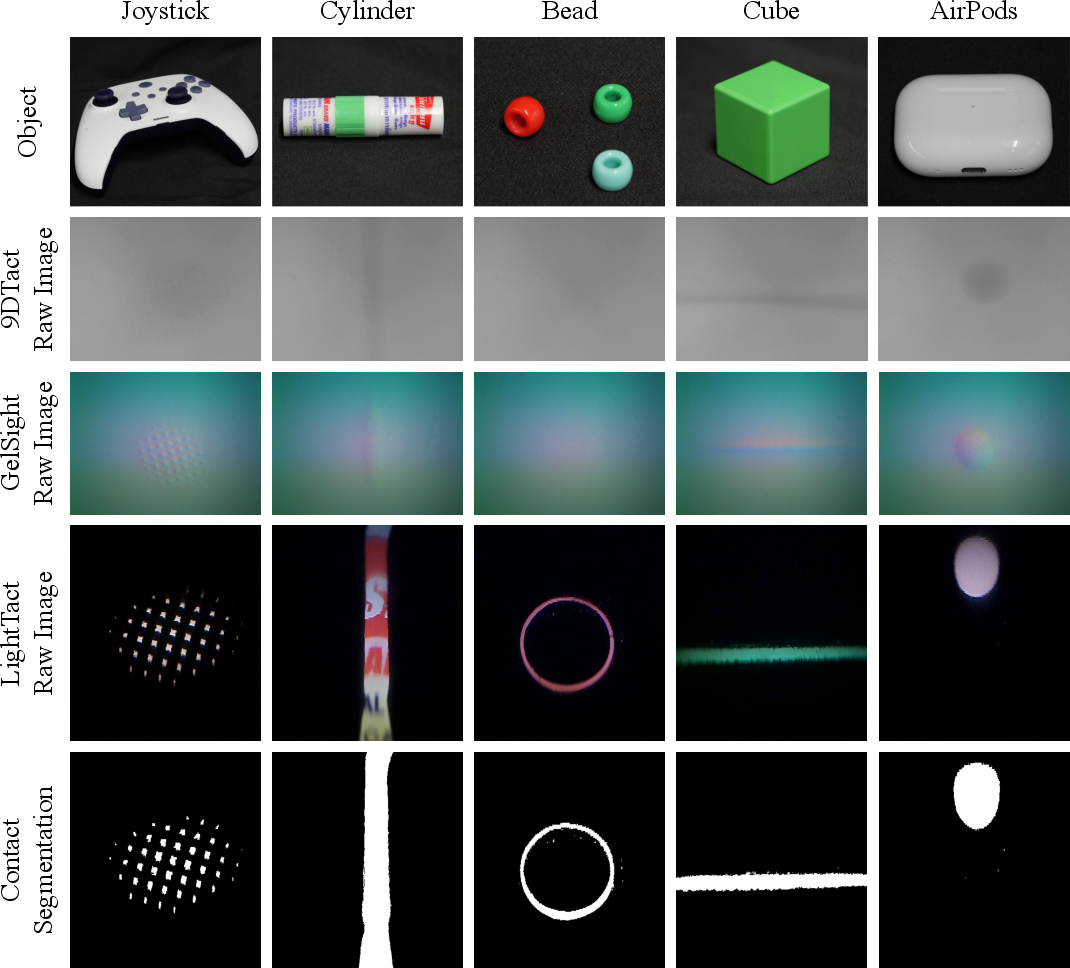

Direct comparison with baseline sensors (force-torque, 9DTact, GelSight-Mini, DelTact) shows that deformation-dependent sensors consistently fail to detect and segment non-rigid and low-force contacts.

Additional robotic manipulation experiments illustrate practical gains. For example, in water spreading, LightTact enables adaptive closed-loop control by segmenting contact with a liquid layer and appropriately regulating vertical position to avoid collision.

Figure 8: LightTact segments contact with water to enable closed-loop robotic spreading without surface collision.
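A closed loop of this kind can be sketched as a clipped proportional controller on the fraction of contact pixels. The target ratio, gain, step limit, and function names below are hypothetical; the source states only that segmented liquid contact is used to regulate vertical position, not how the controller is built.

```python
def contact_ratio(mask):
    """Fraction of pixels flagged as contact in a 2D boolean mask given as a list of rows."""
    total = sum(len(row) for row in mask)
    touching = sum(1 for row in mask for px in row if px)
    return touching / total if total else 0.0


def vertical_step_mm(ratio, target_ratio=0.3, gain_mm=2.0, max_step_mm=0.5):
    """Vertical correction for one control cycle.

    Positive values move the fingertip down toward the liquid, negative values
    retreat upward. All parameter defaults are illustrative assumptions.
    """
    step = gain_mm * (target_ratio - ratio)
    return max(-max_step_mm, min(max_step_mm, step))


if __name__ == "__main__":
    mask = [[True, False], [False, False]]
    print(vertical_step_mm(contact_ratio(mask)))
```

Clipping the step keeps each correction small, which matters when the goal is to stay in the liquid layer without touching the surface beneath it.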

In semi-liquid dipping, LightTact allowed end-effector control based on coverage criteria for gentle cream acquisition, preserving reliable contact segmentation during both approach and withdrawal.

Figure 9: Using LightTact, robots dip facial cream based on segmented contact coverage.

For ultra-thin film interaction, dual LightTact sensors enabled responsive lateral end-effector motion that tracked the film at minimal contact force during human-robot co-manipulation.

Figure 10: LightTact sensors enable responsive lateral end-effector control during ultrathin film touch.

LightTact further supports direct VLM reasoning from raw sensor images, enabling fine-grained manipulation such as inferring resistance values from contact images of resistors for robotic sorting, which achieved 80% task success.

Figure 11: VLM-driven robotic sorting using LightTact images, with the model inferring resistance from color bands synchronized to contact geometry.
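For reference, the arithmetic the VLM has to get right is the standard resistor color code. The sketch below is not part of the paper's pipeline, where the model reads the bands directly from contact images; it only shows how a list of band colors, excluding the tolerance band, maps to a resistance value, which could serve as ground truth when scoring the model's answers. The function name is hypothetical.

```python
# Standard resistor color code: digit value per band color.
DIGITS = {
    "black": 0, "brown": 1, "red": 2, "orange": 3, "yellow": 4,
    "green": 5, "blue": 6, "violet": 7, "gray": 8, "white": 9,
}
# Multiplier band: powers of ten, plus gold and silver for fractional values.
MULTIPLIERS = {**{color: 10.0 ** d for color, d in DIGITS.items()},
               "gold": 0.1, "silver": 0.01}


def resistance_ohms(bands):
    """Resistance in ohms from digit bands followed by one multiplier band.

    Accepts 3 colors (two digits + multiplier) or 4 colors (three digits +
    multiplier); the tolerance band must be left out.
    """
    *digit_bands, multiplier_band = [b.lower() for b in bands]
    if len(digit_bands) not in (2, 3):
        raise ValueError("expected 2 or 3 digit bands plus a multiplier band")
    value = 0
    for band in digit_bands:
        value = value * 10 + DIGITS[band]
    return value * MULTIPLIERS[multiplier_band]


if __name__ == "__main__":
    print(resistance_ohms(["yellow", "violet", "red"]))
```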

Discussion and Implications

LightTact provides reliable, deformation-independent contact sensing over a full spectrum of materials and contact forces, including scenarios where macroscopic deformation is negligible or force-based metrics are sub-threshold. This extends the operational domain of tactile-enabled robotic manipulation into previously inaccessible regimes, particularly for tasks involving liquids, bio-materials, and delicate textures. The approach also facilitates temporal and spatial alignment of multimodal signals for downstream learning and reasoning, improving compatibility with VLMs and enabling direct transfer from naturalistic visual contact images to action selection pipelines. The sensor's intrinsic simplicity and minimal computation requirements suggest scaling potential for high-density deployment in dexterous hands and multi-fingered manipulation.

Theoretically, LightTact sidesteps the fundamental limitation of indentation-dependent VBTSs by relying on geometric optics and surface architecture rather than deformation, pointing towards optical solutions for robust tactile sensing under uncontrolled environmental conditions. Future developments may include integration with distributed tactile arrays, active lighting modulation, and fusion with proprioceptive or force signals for richer multimodal perception. Variations of the wedge angle and shell design, explored in the supplementary analysis, allow adaptation to cluttered or confined spaces without meaningful degradation of light suppression.

Figure 12: Optical analysis of transparent medium under variant wedge angles (θtv), relevant for compact form factor adaptation.

Figure 13: Detailed render views of sensor shell, transparent gel, and black gel, highlighting modular composability and optical isolation features.

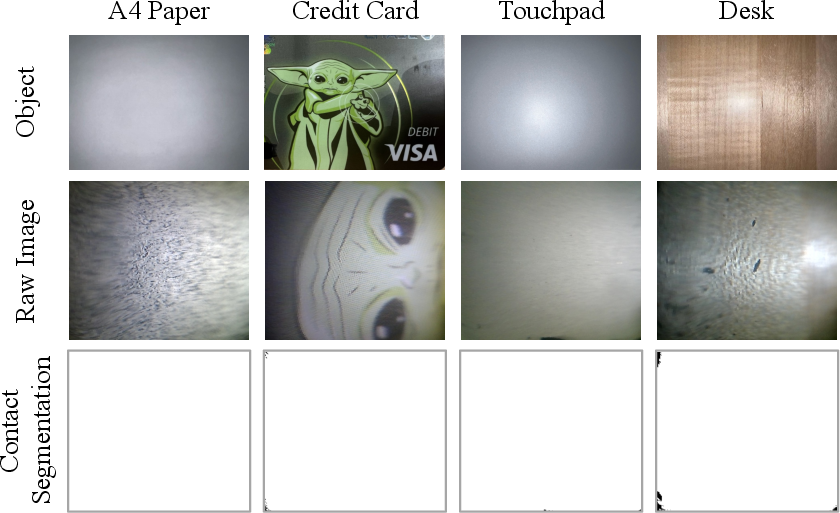

Large-area contact on flat surfaces, often problematic for deformation-based sensors due to weak global signals, is also robustly segmented by LightTact due to its optics-only principle.

Figure 14: LightTact segmentation of broad, flat-surface contacts independent of measurable indentation.

Conclusion

LightTact represents a substantive advance in fingertip-scale visual-tactile sensing through its ambient-blocking optical architecture and deformation independence. The sensor achieves robust, pixel-level contact segmentation for a wide range of material interactions, supports manipulation behaviors driven by light contact, and directly enables reasoning by vision-language models via appearance-preserving outputs. These capabilities argue for the increasingly synergistic design of visual-tactile hardware and multimodal reasoning systems, with impact on emerging paradigms in robotic manipulation, haptic perception, and AI-integrated sensing (2512.20591).