Few-Step Distillation for Text-to-Image Generation: A Practical Guide (2512.13006v1)

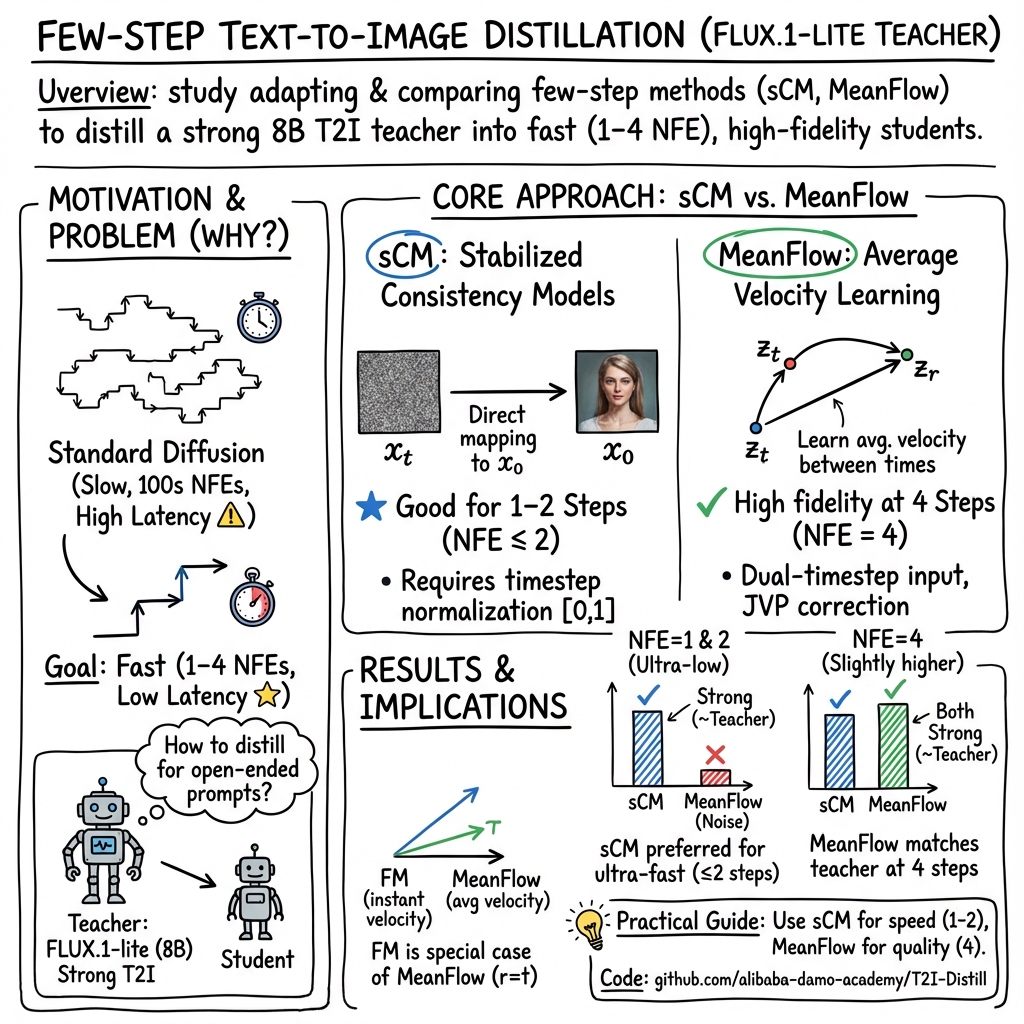

Abstract: Diffusion distillation has dramatically accelerated class-conditional image synthesis, but its applicability to open-ended text-to-image (T2I) generation is still unclear. We present the first systematic study that adapts and compares state-of-the-art distillation techniques on a strong T2I teacher model, FLUX.1-lite. By casting existing methods into a unified framework, we identify the key obstacles that arise when moving from discrete class labels to free-form language prompts. Beyond a thorough methodological analysis, we offer practical guidelines on input scaling, network architecture, and hyperparameters, accompanied by an open-source implementation and pretrained student models. Our findings establish a solid foundation for deploying fast, high-fidelity, and resource-efficient diffusion generators in real-world T2I applications. Code is available on github.com/alibaba-damo-academy/T2I-Distill.

Sponsor

Paper Prompts

Sign up for free to create and run prompts on this paper using GPT-5.

Top Community Prompts

Explain it Like I'm 14

Overview

This paper is about making text-to-image AI faster without losing much quality. Today’s best models can turn words into detailed pictures, but they usually need hundreds of tiny steps to do it, which makes them slow. The authors study how to “distill” a big, slow expert model (called a teacher) into a smaller student that can create good images in just a few steps. They focus on adapting and fairly comparing the latest fast-generation techniques to open-ended text prompts (not just fixed categories), using a strong teacher model called FLUX.1-lite.

Key Objectives

The paper aims to:

- Figure out which few-step methods work best for text-to-image generation and why.

- Adapt newer, promising techniques (originally tested on simpler datasets) to complex text prompts.

- Offer practical tips—like how to set inputs, pick network designs, and tune training settings—plus share open-source code and pretrained student models.

How They Did It (Methods, in simple terms)

Think of diffusion models like artists who start with a canvas full of static noise and gradually “clean” it to reveal a picture. Traditional models do hundreds of clean-up strokes; few-step methods try to do just 1–8 strokes.

The authors compare and adapt three families of fast methods. To keep this friendly, imagine learning shortcuts from a teacher:

- Distribution Distillation (match the final “style” of the teacher):

- DMD/DMDv2: The student learns to make final images that look like the teacher’s, using clever losses (including GAN-style training).

- LADD: The student learns to fool a discriminator in the “latent space” so its results are indistinguishable from the teacher’s, achieving 1–4-step generation.

- Trajectory-based Distillation (learn the path, not just the destination):

- sCM (simplified Consistency Models): A student learns to jump from any noisy state directly toward a clean image in as few steps as possible. Older versions were unstable; sCM adds fixes so training is steadier and scales better.

- IMM (Inductive Moment Matching): Trains from scratch by making sure samples taken at different times end up matching the same target distribution, using a stable measure called MMD.

- MeanFlow: Instead of learning instantaneous speed (“velocity”) at each time, MeanFlow learns average speed between two times. This helps smooth the path the student follows so it can take bigger steps without drifting off-course.

To adapt these ideas for text-to-image:

- Teacher–student setup: The teacher is FLUX.1-lite (an 8-billion-parameter model). The student is a faster model trained to imitate the teacher.

- Timestep normalization: They rescale the model’s “time” input from 0–1000 down to 0–1 to stabilize training. Think of it like making the ruler consistent so the model doesn’t get confused about step sizes.

- sCM adaptation: The student uses the teacher’s guided outputs (like hints about the right direction to clean the noise) to learn stable, few-step updates.

- MeanFlow adaptation:

- Dual-time input: Since MeanFlow needs two times (start r and end t), they add an extra time-encoding branch to process the difference (t−r) and combine it with the main time input.

- Teacher guidance: Instead of learning from noisy, raw targets, the student uses the teacher’s clean “velocity” (a smarter hint about which way to move in the noise) to learn average speed plus a correction term. This reduces randomness and focuses the student on straightening the path.

- Stronger loss: They found using a higher-order error penalty (like punishing mistakes more sharply) improves results for MeanFlow.

- Better guidance mixing (CFG): They blend conditional and unconditional predictions in a controlled way, which helps align images to the text prompt more reliably.

Helpful relationships the paper explains (without retraining):

- Flow Matching and TrigFlow can be converted into each other by changing how time and signals are scaled. This means samplers and models from either side can work together.

- MeanFlow becomes regular Flow Matching when both times (r and t) are the same—then “average speed” equals “instantaneous speed.”

- MeanFlow and sCM are closely related in their training signals; MeanFlow can be seen as a version of sCM where the target network is fully synchronized with the current student.

- IMM can reduce to a consistency-model-style training when certain simplifications are made.

Main Findings

The authors tested on two public benchmarks:

- GenEval: Checks object presence, counts, colors, positions, and attributes in generated images.

- DPG-Bench: Checks global structure, entities, attributes, and relationships.

Key results:

- The “rescaled” teacher (with time range 0–1) performs almost the same as the original FLUX.1-lite, proving the input scaling is safe.

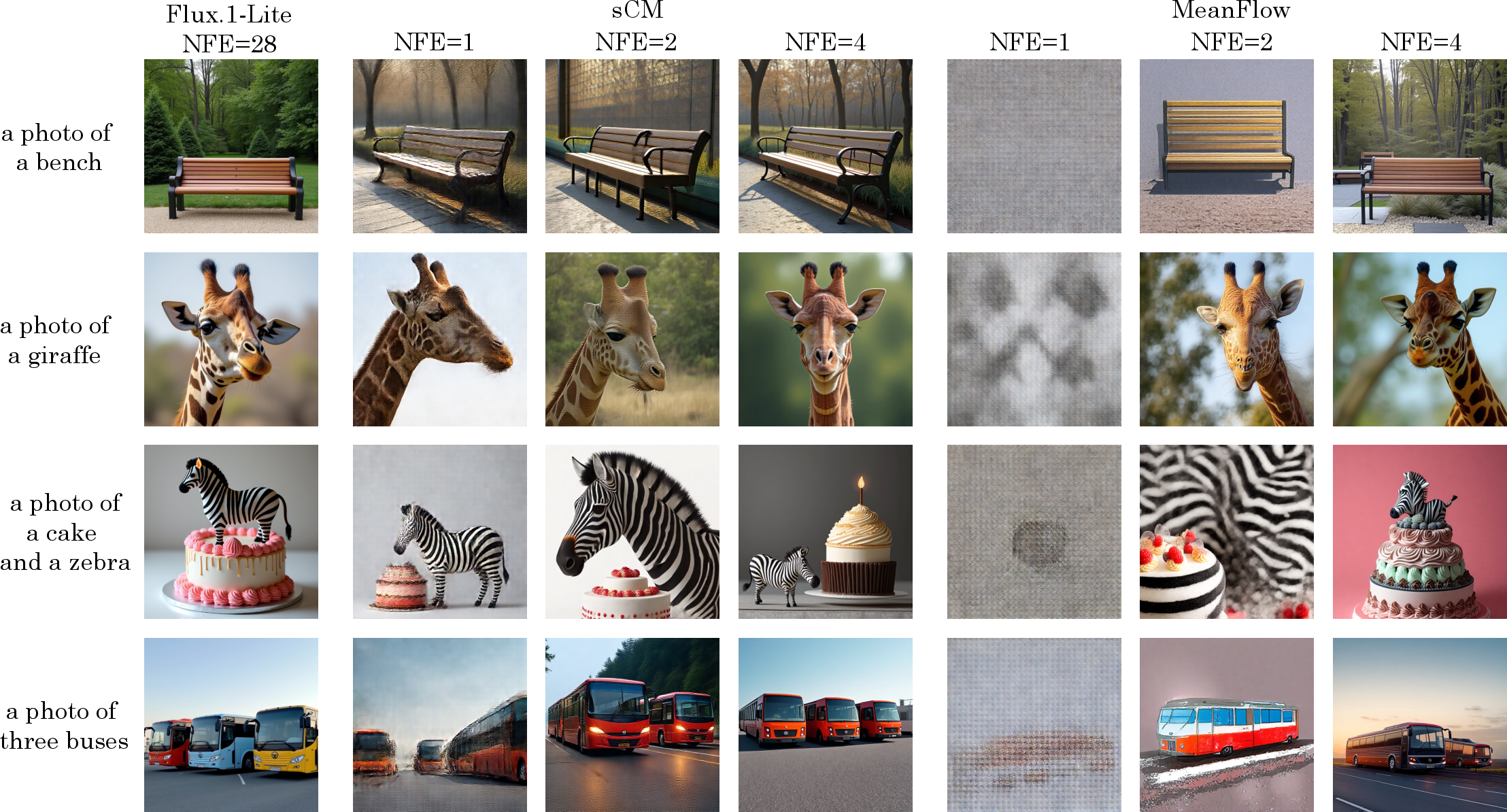

- sCM is very strong in extremely few steps:

- At 2 steps (NFE=2), sCM nearly matches the teacher on GenEval.

- Even at 1 step, sCM still produces meaningful images and decent alignment.

- On DPG-Bench, sCM maintains good structure and alignment at 1–2 steps.

- MeanFlow shines with a little more budget:

- At 4 steps (NFE=4), MeanFlow reaches or nearly matches teacher-level quality across benchmarks and can outperform sCM in fine details.

- At 1–2 steps, MeanFlow struggles—images can collapse into noise at 1 step and remain unstable at 2 steps. It needs at least 4 steps to work well.

- Practical tweaks matter:

- Using the teacher’s clean velocity improves MeanFlow training.

- A higher-order loss (stronger penalty for errors) boosts MeanFlow scores.

- Improved classifier-free guidance (CFG) raises MeanFlow’s text alignment scores.

In short: sCM is best when you need near-real-time generation with 1–2 steps. MeanFlow is great when you can afford about 4 steps and want higher fidelity and sharper details.

Implications and Impact

This work provides a clear roadmap for building fast, high-quality text-to-image systems that are practical in real-world apps:

- Real-time or resource-limited settings: sCM is a strong choice for games, AR, design tools, and any interface needing quick feedback.

- High-quality outputs with low latency: MeanFlow offers excellent detail and alignment at only 4 steps, trimming time and compute versus traditional sampling.

- Interoperability: Knowing how different frameworks convert into each other lets teams mix and match models and samplers without retraining.

- Community benefits: The open-source code and pretrained students make it easier for others to reproduce, compare, and build on these results.

Overall, the paper lays a solid foundation for faster text-to-image generation, turning big, slow models into nimble ones that still obey the prompt and look great—opening the door to more responsive, creative AI tools.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a single, concrete list of what remains missing, uncertain, or unexplored in the paper, framed so future researchers can act on it:

- Generalization across teachers: The study only distills from FLUX.1-lite (8B). It does not assess whether sCM or MeanFlow adaptations generalize to other strong T2I teachers (e.g., SDXL/SD3, Qwen-Image, Imagen). Benchmarking across multiple teachers is needed to validate method robustness.

- Reproducibility constraints: Training uses a proprietary dataset that cannot be released. This prevents independent verification of claims and limits conclusions about generalization. A replication on public datasets (LAION, COCO, PartiPrompts, DrawBench) is necessary.

- Student capacity vs. step-efficiency trade-offs: The student is “identical” to the teacher architecture (MMDiT), but parameter counts, compression, and capacity constraints are not explored. Systematic ablations on student width/depth, parameter sharing, and adapters vs. downstream quality at NFEs 1–8 are missing.

- Missing empirical evaluation of IMM: IMM is analyzed theoretically but not adapted and evaluated for T2I. Concrete experiments are needed to test IMM’s stability, guidance compatibility, and sample quality under open-ended prompts.

- No comparison to DMD/DMDv2 and LADD on the same teacher: Although these paradigms are discussed, the paper does not provide controlled empirical comparisons on FLUX.1-lite. A head-to-head evaluation (quality, speed, stability, training cost) is required.

- Latency and throughput not reported: NFEs are given, but wall-clock time, GPU utilization, energy cost, and memory footprints for training and inference (including JVP overhead) are not measured. Real deployment metrics on consumer GPUs (e.g., A10, 4090), mobile NPUs, or CPUs remain unknown.

- Solver and step-size sensitivity: MeanFlow collapses at NFE=1 and underperforms at NFE=2, but the paper does not test solver variants (adaptive step-size, higher-order integrators, Heun/Runge–Kutta, schedule tuning). A thorough solver sensitivity study could clarify whether collapse is algorithmic or integration-related.

- Guidance scales and scheduling: The Improved CFG variant uses a mixing parameter κ, but the paper does not systematically ablate κ, unconditional/conditional weighting schedules, or prompt-dependent/dynamic CFG scaling. The interaction between guidance scale at training vs. inference is not quantified.

- Evaluation metrics limited to alignment: GenEval and DPG-Bench assess compositional alignment but not photorealism, aesthetics, CLIP score, FID/KID, diversity, or human preference. A broader evaluation suite is needed to understand fidelity and mode coverage.

- Diversity and mode collapse: There is no analysis of sample diversity, uniqueness across seeds, or entropy of outputs under few-step distillation. Measurements of diversity (e.g., LPIPS diversity, coverage of style/content modes) are missing.

- Safety and bias: The impact of distillation on safety (content filters, bias amplification, toxicity, illegal content) is unaddressed. Robustness to adversarial prompts and mitigation retention is unknown.

- Prompt distribution and OOD generalization: The proprietary dataset’s prompt distribution is unspecified. Generalization to long, compositional, multilingual, or rare-object prompts (e.g., TIFA, TIFA-5K, VQAv2-derived prompts) is not evaluated.

- Timestep normalization scope: While normalizing timesteps to [0, 1] stabilizes training, the underlying cause, robustness across architectures, and interaction with different noise schedules or reparameterizations (EDM, sigma-space) remain untested and theoretically unexplained.

- Resolution mismatch effects: The initial distillation at 1024×1024 followed by sCM training at 512 lacks analysis of resolution scaling effects on alignment, detail retention, and artifact prevalence. Experiments at 768/1024/2K/4K are needed.

- Latent-space design choices: The pipeline uses AutoencoderKL (16-channel, 8× downsampling), but the effect of different VAEs (channels, compression rates, reconstruction loss), and whether pixel-space vs. latent-space training changes few-step behavior, is unexamined.

- Training dynamics and convergence: sCM is trained for 3,000 iterations; MeanFlow for 25,000. There is no convergence analysis (learning curves, variance across seeds, stability windows), nor characterization of how longer training affects few-step quality or overfitting.

- JVP computational burden: MeanFlow relies on Jacobian-vector products, but the paper does not quantify their training cost, memory pressure, numerical stability, or how JVP approximations impact sample quality.

- FM–TrigFlow conversion validity in conditional settings: The bidirectional conversion is stated but not empirically validated under text conditioning, CFG, and real T2I prompts. Error bounds, stability regions, and numerical drift from conversions are not characterized.

- sCM–MeanFlow gradient equivalence conditions: The claimed gradient equivalence depends on target-network synchronization (θ−=θ). The effect of EMA decay rates, lag, and stochastic targets on stability and sample quality is not empirically tested.

- Diagnosing MeanFlow’s low-step failure: The “pure noise” outputs at NFE=1 are reported but not analyzed. It is unclear whether the failure stems from curvature misestimation, teacher mismatch, insufficient Jacobian correction, or solver discretization error.

- Hybrid trajectories and stitching: The idea of combining sCM (for first steps) with MeanFlow (later steps) or trajectory-stitching (e.g., T-Stitch) is not examined. Hybrid schedules may eliminate low-step collapse while retaining high-fidelity at NFE=4.

- Adaptive NFEs: The paper does not explore prompt- or content-aware dynamic NFEs (early-exit strategies) that allocate steps based on predicted difficulty, potentially balancing latency and quality in production.

- Inference-time guidance alignment: The specific inference CFG settings used for students (vs. teacher) are not reported, and alignment between training-time target mixing and inference-time guidance is not studied.

- Robustness to compositional challenges: Teacher and students show low scores in counting and position on GenEval; the paper does not investigate targeted remedies (loss reweighting by attribute, curriculum learning, prompt augmentation).

- Public release completeness: While code and pretrained students are mentioned, there is no audit of what is released (exact checkpoints, configs, training logs) and whether reproductions on public data achieve reported metrics.

- Benchmark breadth and fairness: The lack of evaluation on established public suites (e.g., DrawBench, PartiPrompts, TIFA, HPS v2, PickScore) and aesthetic preference studies limits cross-paper comparability.

- Hardware portability: No experiments demonstrate deployment on resource-constrained devices (mobile, embedded). Quantization, pruning, and memory-optimized variants for few-step students are not assessed.

- Negative prompts and control modalities: Support and performance for negative prompts, style controls, ControlNet-like conditioning, or LoRA adapters in few-step students are not tested.

- Uncertainty estimation: The paper does not measure or expose uncertainty/confidence about generated attributes, which could guide adaptive sampling or user feedback loops.

- Theoretical guarantees for trajectory distillation: Formal error bounds for few-step trajectory distillation under open-ended text conditioning are absent; conditions for stability and convergence with CFG are not proven.

- Ethical and licensing considerations: The dataset’s provenance and licensing are unspecified; the implications for model bias, copyright, and downstream usage of distilled students are not discussed.

Glossary

- AdaLN: A conditioning-aware layer normalization variant used in diffusion transformers to modulate activations with timestep embeddings. "AdaLN modulation"

- AutoencoderKL: A variational autoencoder with a KL-divergence regularization term used to encode images into a compact latent space. "via the pretrained AutoencoderKL"

- bfloat16: A 16-bit floating-point format with wider exponent range than FP16, used for mixed-precision training to save memory while maintaining numerical stability. "bfloat16 mixed-precision"

- Classifier-free guidance (CFG): A sampling technique that blends conditional and unconditional model outputs to enhance fidelity and adherence to prompts. "classifier-free guidance"

- Consistency Distillation (CD): A learning approach where a student model is trained to match a teacher’s direct mapping from noisy to clean samples in one step. "Consistency Distillation, CD"

- Consistency Models (CMs): Generative models that learn a single-step mapping from a noisy sample at time t to its clean counterpart. "Consistency Models (CMs) aim to learn a function"

- Consistency Training (CT): Training CMs from scratch (without a teacher) to learn direct denoising mappings across time. "Consistency Training, CT"

- DeepSpeed ZeRO: A distributed training strategy that partitions optimizer states, gradients, and parameters to scale large models efficiently. "DeepSpeed ZeRO"

- Distribution Matching Distillation (DMD): A method that distills multi-step diffusion into a one-step generator by minimizing distribution differences, often with auxiliary losses. "Distribution Matching Distillation (DMD)"

- DMDv2: An improved variant of DMD that replaces costly regression losses with adversarial losses to enhance quality and efficiency. "DMDv2, enhances image quality, training efficiency, and flexibility"

- DPG-Bench: A benchmark that assesses alignment of global structure, entities, attributes, and relations in generated images. "DPG-Bench"

- EDM: Elucidated Diffusion Models, a parameterization of diffusion models focused on stable training and sampling. "parameterized with EDM"

- EMA (Exponential Moving Average): A technique that maintains a slowly-updating copy of model parameters to stabilize training targets. "exponential moving average (EMA)"

- FLUX.1-lite: A large text-to-image diffusion model used as the teacher in distillation experiments. "FLUX.1-lite"

- Flow Matching: A training paradigm that learns the instantaneous velocity field driving a diffusion process. "Flow Matching (Algorithm \ref{alg:flowmatching})"

- GenEval: A benchmark for evaluating text-to-image generation quality across multiple semantic criteria. "GenEval benchmark"

- Hugging Face Diffusers: A library providing implementations of diffusion models and training pipelines. "Hugging Face Diffusers library"

- Inductive Moment Matching (IMM): A from-scratch training method that enforces distributional consistency across time via kernel-based moment matching. "Inductive Moment Matching (IMM)"

- Jacobian correction term: A term involving derivatives of the model output with respect to time that corrects trajectory curvature in MeanFlow objectives. "via the Jacobian correction term"

- Jacobian-vector products (JVPs): Efficient computations of Jacobian times a vector used to obtain derivative terms without forming full Jacobians. "Jacobian-vector products (JVPs)"

- Latent Adversarial Diffusion Distillation (LADD): An adversarial distillation method where a student matches a teacher’s latent distribution by fooling a discriminator. "Latent Adversarial Diffusion Distillation (LADD)"

- Latent diffusion model: A diffusion process applied in a compressed latent space rather than pixel space for efficiency. "latent diffusion model (the teacher model)"

- MeanFlow: A training/distillation framework that learns the average velocity between two timesteps, enabling few-step generation. "MeanFlow takes a distinctly different approach from standard Flow Matching."

- MMD (Maximum Mean Discrepancy): A kernel-based metric used to compare distributions by matching all moments implicitly. "Maximum Mean Discrepancy (MMD)"

- MMDiT architecture: A diffusion transformer architecture variant used in FLUX.1-lite and its distilled students. "MMDiT architecture"

- Network Function Evaluations (NFEs): The number of model calls during sampling; reducing NFEs lowers latency. "Network Function Evaluations (NFEs)"

- sCM (stabilized Continuous-time Consistency Models): A consistency modeling framework with techniques (e.g., TrigFlow, adaptive weighting) to fix continuous-time training instability. "stabilized Continuous-time Consistency Models (sCM)"

- Signal-to-noise ratio (SNR): A measure of signal strength relative to noise; preserved when mapping between Flow Matching and TrigFlow time domains. "signal-to-noise ratio (SNR)"

- Stochastic interpolant: A probabilistic path connecting distributions over time used to define consistency constraints. "stochastic interpolant path"

- stopgrad: An autodiff operation that prevents gradients from flowing through a target, stabilizing regression. "stopgrad(u_tgt)"

- Teacher-student paradigm: A training setup where a student model learns from a fixed, often larger teacher model to achieve efficient generation. "teacher-student paradigm"

- Trajectory-based Distillation: A paradigm where a student is trained to match entire segments of the teacher’s sampling trajectory, not just final outputs. "Trajectory-based Distillation"

- TrigFlow: A trigonometric parameterization of the diffusion process that improves stability and enables conversions with Flow Matching. "TrigFlow formulation"

- TrigFlow reparameterization: Using TrigFlow’s time/state transforms to reinterpret a model within a different sampling framework. "TrigFlow reparameterization of the FLUX.1-lite model"

Practical Applications

Immediate Applications

The following applications can be deployed now using the paper’s open-source codebase, pretrained student models, and the practical recipes provided (e.g., timestep normalization, dual-timestep encoding for MeanFlow, improved CFG, TrigFlow–Flow Matching interoperability).

- Real-time creative co-pilot for designers and marketers

- Sector(s): software, advertising, media, e-commerce

- What: Integrate sCM-based few-step T2I (NFE ≤ 2) into UI tools (Figma/Photoshop plug-ins, web editors) for instant visual ideation from prompts and rapid iteration during creative workflows.

- Tools/Workflows: A Diffusers-backed microservice exposing “draft at NFE=1, refine at NFE=2–4” endpoints; server-side classifier-free guidance presets; prompt history and versioning.

- Dependencies/Assumptions: Access to a FLUX-class teacher or the released student checkpoints; safety filters for brand/compliance; prompt logging for auditability.

- Low-latency content generation for games and XR

- Sector(s): gaming, AR/VR

- What: Use sCM for on-the-fly asset sketches (textures, decals, icons) with NFE=1–2 during gameplay or prototyping; switch to MeanFlow NFE=4 for final-quality assets at scene load.

- Tools/Workflows: Unity/Unreal plug-ins calling Distill-students with step budget controls; caching and progressive refinement pipelines.

- Dependencies/Assumptions: Deterministic seeds for reproducibility; quality gates for visual artifacts; guardrails to prevent generating IP-sensitive assets.

- Instant product mock-ups in e-commerce

- Sector(s): retail, e-commerce

- What: Generate lifestyle images, colorways, or packaging concepts from text prompts with near-real-time latency for A/B testing and listing generation.

- Tools/Workflows: “Try-draft → approve → upscale” pipeline; sCM at NFE=2 for rapid drafts, MeanFlow at NFE=4 for production; batch endpoints for catalog workflows.

- Dependencies/Assumptions: Strong prompt templates; human-in-the-loop curation; watermarking and disclosure policies for synthetic content.

- Cost-optimized inference for cloud providers

- Sector(s): software/cloud, energy

- What: Replace 28-NFE teacher inference with 2–4 NFE students to cut GPU-hours and emissions while maintaining alignment (GenEval/DPG-Bench).

- Tools/Workflows: Autoscaling based on step budgets; routing: sCM for sub-200ms SLAs, MeanFlow for quality-priority SLAs; token-bucket rate limiting.

- Dependencies/Assumptions: Accurate demand prediction; robust observability for quality drift; periodic re-distillation if teacher updates.

- Progressive generation UX for messaging and social platforms

- Sector(s): social media, consumer apps

- What: Show “instant preview” at NFE=1 (sCM), then refine to NFE=2–4 while the user reviews or edits the prompt.

- Tools/Workflows: Client-side prefetch; server-side multistep refinement; prompt editing with live feedback and safety scanning.

- Dependencies/Assumptions: Efficient caching; moderation API in the loop; device capability detection for on-device vs. edge inference.

- Academic baselines and reproducible experiments

- Sector(s): academia

- What: Use the unified framework and codebase to benchmark sCM vs. MeanFlow for T2I, replicate GenEval/DPG-Bench results, test TrigFlow–Flow Matching conversions without retraining.

- Tools/Workflows: Diffusers training scripts, DeepSpeed ZeRO, JVP utilities; standardized evaluation harnesses; ablation notebooks.

- Dependencies/Assumptions: GPU access (training used 32 Nvidia H20 GPUs); dataset licenses; careful hyperparameter transfer across teachers.

- Internal rapid prototyping labs (“T2I distillation recipes”)

- Sector(s): enterprise R&D, software

- What: Apply the paper’s practical guidelines (timestep normalization, dual-time input, improved CFG, loss exponent γ=2) to distill any strong T2I teacher into few-step students.

- Tools/Workflows: Modular teacher–student pipelines; CI for retraining as teachers evolve; artifacts registry for student promotion.

- Dependencies/Assumptions: Teacher availability and licensing; access to high-quality text–image data; monitoring for distribution shift.

- Energy and sustainability reporting uplift

- Sector(s): policy, sustainability

- What: Quantify and report energy savings from moving to few-step generators (NFE 28 → 2–4), supporting Green AI objectives and ESG disclosures.

- Tools/Workflows: Emissions calculators tied to inference logs; per-request NFE telemetry; cost and carbon dashboards.

- Dependencies/Assumptions: Reliable emissions factors; organizational policy alignment; auditor-friendly logging.

- Synthetic dataset generation for vision tasks (textures, backgrounds)

- Sector(s): robotics, autonomous systems, healthcare imaging R&D

- What: Use fast T2I to create diverse visual backdrops and textures for sim-to-real transfer or data augmentation in perception pipelines.

- Tools/Workflows: Prompt libraries mapped to task domains; quality filters (DPG-like alignment); controlled randomization and metadata tagging.

- Dependencies/Assumptions: Careful domain gap analysis; licensing and labeling of synthetic data; downstream validation of model robustness.

- Educational content creation at scale

- Sector(s): education

- What: Rapidly generate illustrative figures, concept visuals, and worksheets with low latency to support lesson authoring platforms.

- Tools/Workflows: Templates with curriculum-aligned prompts; sCM drafts, MeanFlow finals; accessibility checks (contrast, clarity).

- Dependencies/Assumptions: Content safety policies; educator review; IP and attribution guidance.

Long-Term Applications

The following require further research, scaling, or productization (e.g., broader teacher generalization, public datasets, safety tooling, on-device optimization, and robust governance).

- On-device few-step T2I for mobile and wearables

- Sector(s): consumer devices, edge AI

- What: Deploy sCM/MeanFlow students on NPUs for private, low-power generation; enable AR try-ons, local stickers/filters.

- Tools/Workflows: Model compression (quantization/pruning) plus dual-time encoders; ONNX/CoreML/TensorRT pipelines; fallback to edge servers.

- Dependencies/Assumptions: Hardware acceleration for JVP or equivalent ops; tight memory footprints; rigorous battery and thermal testing.

- Policy-aligned safety, watermarking, and provenance for fast T2I

- Sector(s): policy, platform governance

- What: Standardize provenance for low-latency generation, including cryptographic watermarks, safety classifiers tuned for few-step outputs, and rate-control to prevent misuse.

- Tools/Workflows: Integrated moderation before/after steps; prompt safety linting; provenance metadata in outputs; compliance dashboards.

- Dependencies/Assumptions: Reliable watermarking resilient to post-processing; evolving regulatory standards; cross-platform interoperability.

- Sector-specific assistants with constrained generation (healthcare, finance, public sector)

- Sector(s): healthcare, finance, government

- What: Domain-constrained T2I (e.g., medical diagram generation, compliance-safe infographics) with guardrails and audit trails.

- Tools/Workflows: Domain prompt libraries; policy filters; expert-in-the-loop review; regulated data isolation.

- Dependencies/Assumptions: Strict safety regimes; legal approvals; domain dataset curation and teacher adaptation.

- Unified trajectory-distillation platforms (multi-teacher, multi-student, multi-sampler)

- Sector(s): software platforms, MLOps

- What: A platform that automates teacher selection, distillation strategy (sCM/MeanFlow/IMM), sampler conversions (TrigFlow↔Flow Matching), and step-budget routing.

- Tools/Workflows: Orchestrators with experiment tracking; automated hyperparameter search; service meshes with SLO-aware switching.

- Dependencies/Assumptions: Broad teacher access; automated evaluation (GenEval/DPG variants); model registry with governance.

- Interactive 3D/scene generation via few-step image-to-asset pipelines

- Sector(s): gaming, XR, robotics simulation

- What: Use fast T2I to produce style-consistent sprites, textures, and normal maps, then lift to 3D via downstream mesh/texturing models.

- Tools/Workflows: Prompt-to-asset chains; consistency checks across modalities; iterative refinement loops.

- Dependencies/Assumptions: Robust 2D→3D pipelines; material/lighting consistency across assets; IP-safe content creation.

- Enterprise “green compute” procurement standards

- Sector(s): policy, enterprise IT

- What: Procurement rules that favor few-step generative systems, with emissions SLAs and periodic audits tied to NFE budgets and throughput.

- Tools/Workflows: Vendor compliance tests; emissions baselines; independent verification frameworks.

- Dependencies/Assumptions: Industry consortium alignment; reliable cross-vendor metrics; legal enforceability.

- Formalizing interoperability standards between flow parameterizations

- Sector(s): academia, standards bodies

- What: Standard APIs/specs for TrigFlow–Flow Matching conversion so models/samplers interoperate across libraries without retraining.

- Tools/Workflows: Reference implementations; test suites; versioned specs managed by open-source foundations.

- Dependencies/Assumptions: Community adoption; conformance tooling; clear licensing.

- High-fidelity few-step video generation (extension of T2I)

- Sector(s): media, advertising, entertainment

- What: Extend trajectory distillation to text-to-video for short clips with real-time previews and refined finals.

- Tools/Workflows: Temporal consistency losses; multi-frame MeanFlow/sCM; streaming UX with progressive refinement.

- Dependencies/Assumptions: New teachers and datasets; significant engineering for temporal stability; stronger safety controls.

- Public benchmark and dataset release for open-domain T2I distillation

- Sector(s): academia, open-source ecosystem

- What: Create open datasets and standardized evaluation suites beyond ImageNet, enabling fair comparisons of sCM/MeanFlow/IMM in T2I.

- Tools/Workflows: Licensing-cleared corpora; annotation protocols; leaderboards with reproducibility badges.

- Dependencies/Assumptions: Data-sharing agreements; funding for curation; community governance.

- Automated prompt engineering and step-budget optimization

- Sector(s): software tooling, MLOps

- What: Systems that learn optimal CFG, κ, and NFE per prompt to balance speed and quality automatically.

- Tools/Workflows: Meta-controllers using GenEval/DPG proxy metrics; reinforcement learning on latency–quality trade-offs; cache-aware routing.

- Dependencies/Assumptions: Robust prompt-quality estimators; safe exploration strategies; continuous monitoring to prevent regressions.

Collections

Sign up for free to add this paper to one or more collections.