- The paper introduces an open-access agentic AI framework that integrates eight open-source LLMs with over twenty materials-science APIs to automate multi-step research workflows.

- It demonstrates both single-tool and multi-tool pipelines that combine database queries, ML property prediction, and simulation, and it benchmarks the underlying LLMs to support reproducible materials design.

- The evaluation reveals that while tool augmentation improves bulk modulus predictions, it adversely affects accuracy for other properties, highlighting the need for adaptive tool-selection strategies.

AGAPI-Agents: An Open-Access Agentic AI System for Autonomous Materials Research

System Architecture and Core Innovations

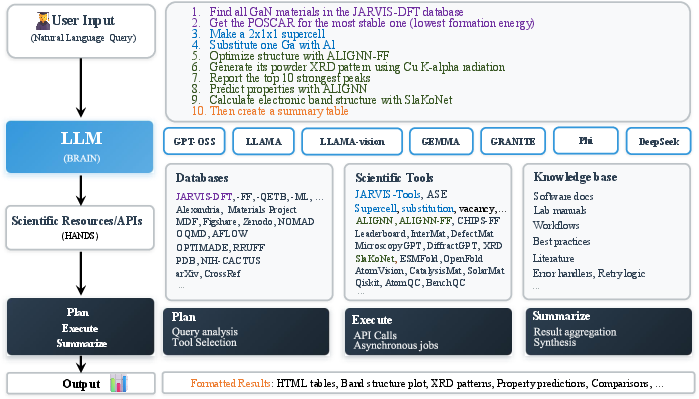

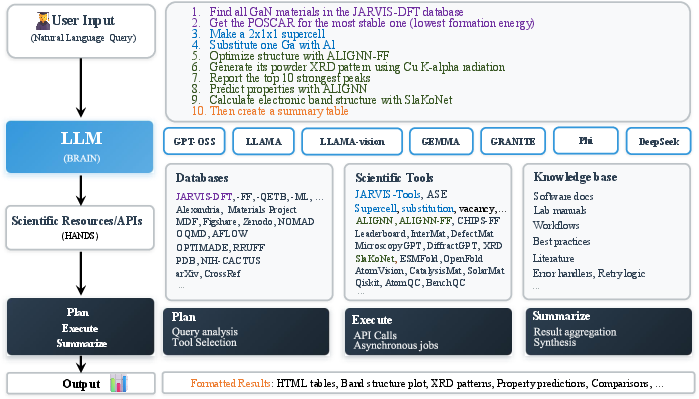

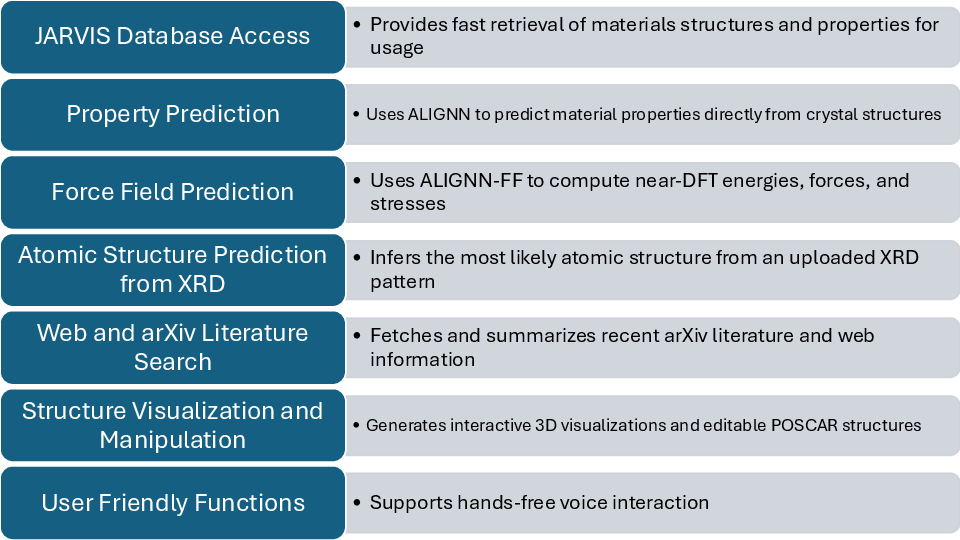

"AGAPI-Agents: An Open-Access Agentic AI Platform for Accelerated Materials Design on AtomGPT.org" (2512.11935) introduces a fully open-access, agentic AI framework specifically engineered to orchestrate data-driven and simulation-driven workflows in materials science. AGAPI integrates eight open-source LLMs with over twenty materials-science tool APIs, encompassing data retrieval, property prediction, optimization, and characterization. The central architecture adheres to an Agent-Planner-Executor-Summarizer abstraction, modularizing reasoning, tool orchestration, execution, and result synthesis into distinct phases, and facilitating autonomous multi-step workflows.

Figure 1: Overview of the AGAPI-Agents workflow integrating an LLM-driven planner with multi-tool orchestration for autonomous materials design.

The architecture separates the reasoning module (LLM), the API execution layer (containing databases and computational models), an asynchronous workflow orchestrator, and a response synthesis engine. The API layer itself exposes endpoints for diverse functionalities, including direct access to JARVIS-DFT, the Materials Project, AFLOW, OQMD, NIH-CACTUS, PDB, AlphaFold, and advanced ML models such as ALIGNN, ALIGNN-FF, SlaKoNet, DiffractGPT, and CHIPS-FF. This modular design supports the addition of new tools (hard or soft matter), ensuring transparent and extensible scientific automation.
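As a rough illustration of the Agent-Planner-Executor-Summarizer split, the sketch below wires three plain Python functions together. The `Step` type, the tool names (`lookup_formula`, `predict_property`), and the rule-based `plan` function are hypothetical stand-ins, not AGAPI's actual interfaces. In the real system the plan comes from the LLM and the tools are remote API endpoints.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass
class Step:
    tool: str              # name of a registered tool
    args: Dict[str, Any]   # keyword arguments passed to that tool


# Hypothetical local tool registry (assumption: real tools are remote APIs).
TOOLS: Dict[str, Callable[..., Dict[str, Any]]] = {
    "lookup_formula": lambda formula: {"formula": formula, "id": "demo-1"},
    # The value is left as None: this stub performs no real prediction.
    "predict_property": lambda material_id, prop: {
        "id": material_id, "property": prop, "value": None,
    },
}


def plan(query: str) -> List[Step]:
    """Stand-in planner; a real agent would ask the LLM to emit this plan."""
    formula = query.split()[-1]  # naive: treat the last word as the formula
    return [
        Step("lookup_formula", {"formula": formula}),
        Step("predict_property", {"material_id": "demo-1", "prop": "bulk_modulus"}),
    ]


def execute(steps: List[Step]) -> List[Dict[str, Any]]:
    """Run each planned step against the registry, in order."""
    return [TOOLS[step.tool](**step.args) for step in steps]


def summarize(query: str, results: List[Dict[str, Any]]) -> str:
    """Stand-in summarizer; a real agent would have the LLM write this text."""
    return f"{query!r}: ran {len(results)} tool call(s); last keys = {sorted(results[-1])}"


def run_agent(query: str) -> str:
    return summarize(query, execute(plan(query)))


if __name__ == "__main__":
    print(run_agent("bulk modulus of Si"))
```

Keeping the three phases as separate functions mirrors the modularity described above: a new tool only needs a registry entry, and the planner or summarizer can be swapped without touching execution.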

Mitigating Hallucination and Bias in Scientific LLM Applications

A central challenge addressed by AGAPI is factual hallucination—a frequent pitfall of LLMs in scientific contexts. By enforcing tool-calling as the default behavior, the system grounds all outputs in validated computations or data retrieved from credible sources, thereby minimizing the risk of hallucinated (i.e., physically implausible or incorrect) outputs.

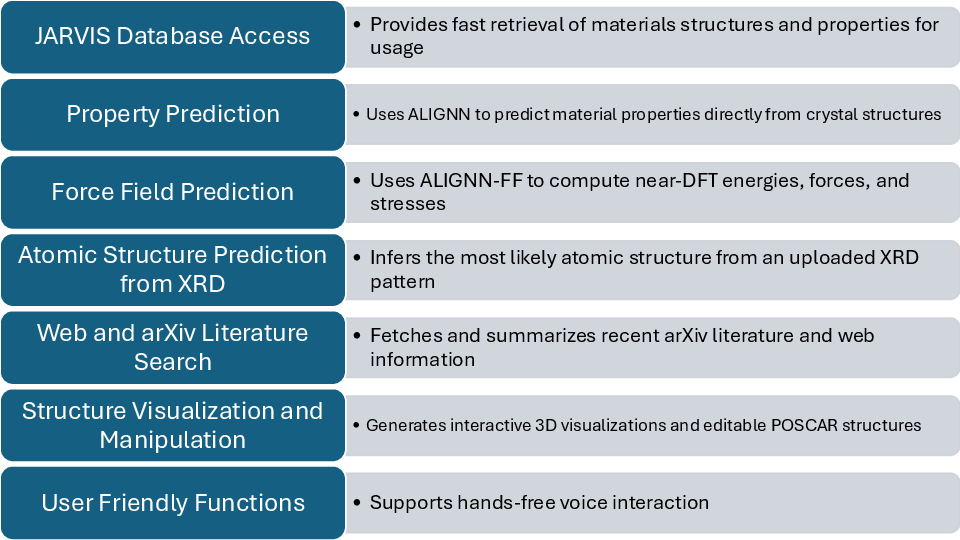

Figure 2: Tool calling within AtomGPT.org constrains the LLM to validated outputs via external APIs, reducing hallucination during materials-related queries.

The system also enforces retrieval-augmented generation and uses deterministic sampling and version pinning to support reproducibility, which is otherwise hard to achieve with commercial LLM services because of API version drift and proprietary behavior changes.

AGAPI systematically benchmarks eight open-source LLMs for token generation speed, reasoning efficacy, and scalability under load. Notably, GPT-OSS-20B reached a mean token generation speed of 141.7 tokens/s (3.93× the Llama-3.2-90B baseline), and GPT-OSS-120B reached 122.3 tokens/s (3.39× the baseline). A load test simulating 1,000 concurrent users gave a mean response latency of 16.6 s, which exposes compute bottlenecks; the authors project that further optimization could bring latency below 2 s.
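One way to picture deterministic sampling plus version pinning is to record the relevant settings with every request and derive a fingerprint from them. The sketch below is only an assumption about how such bookkeeping could look: the field names (`model_version`, `temperature`, `seed`) and the function `request_fingerprint` are hypothetical and do not come from AGAPI.

```python
import hashlib
import json
from typing import Any, Dict, Tuple


def request_fingerprint(
    model: str,
    model_version: str,
    prompt: str,
    temperature: float = 0.0,  # zero temperature as a stand-in for deterministic sampling
    seed: int = 0,
) -> Tuple[Dict[str, Any], str]:
    """Return the pinned request settings and a stable SHA-256 hash of them."""
    payload = {
        "model": model,
        "model_version": model_version,  # pinned explicitly, never "latest"
        "prompt": prompt,
        "temperature": temperature,
        "seed": seed,
    }
    # Sorted keys and fixed separators make the serialization canonical,
    # so identical settings always hash to the same value.
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return payload, hashlib.sha256(canonical.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    _, digest = request_fingerprint("demo-model", "1.0.0", "Band gap of Si?")
    print(digest[:16])
```

Two runs with identical settings share a fingerprint, while a change in model version produces a different one, which makes silent version drift visible in logs.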

Figure 3: Comparative benchmarking of LLM token generation speeds and API response latency under heavy concurrent load, demonstrating superior throughput for open-source models.
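The two reported speed-ups can be checked against each other: dividing each reported speed by its ratio should recover the same baseline. The snippet below does this arithmetic using only the figures quoted above. The resulting baseline is derived here, not stated in the source.

```python
# Reported (tokens/s, speed-up over the Llama-3.2-90B baseline).
reported = {
    "GPT-OSS-20B": (141.7, 3.93),
    "GPT-OSS-120B": (122.3, 3.39),
}

# Implied baseline speed = reported speed / reported speed-up ratio.
implied_baseline = {
    name: speed / ratio for name, (speed, ratio) in reported.items()
}

for name, value in implied_baseline.items():
    print(f"{name}: implied baseline = {value:.1f} tokens/s")
```

Both entries imply a baseline of about 36.1 tokens/s, so the two quoted ratios are mutually consistent.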

Choosing open models over commercial LLMs removes cost and IP barriers and supports reproducibility, at the cost of potentially marginal reductions in benchmark accuracy on some downstream tasks.

Unified API, Interoperability, and Reproducibility

AGAPI provides a RESTful interface, documented via OpenAPI 3.1, covering >20 endpoints encompassing property prediction, structure manipulation, database queries, force field relaxation, and characterization (e.g., XRD, band structure simulation). Each endpoint supports synchronous and asynchronous requests, model/version pinning, and deterministic execution for strict reproducibility.

Figure 4: AGAPI’s modular API design enables seamless integration of databases, ML property predictors, structure generators, and characterization pipelines for autonomous workflows.

Figure 5: Automatically generated interactive API documentation, supporting both GET and POST for information retrieval and on-demand scientific computation.
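To make the request shape concrete, the sketch below builds (but does not send) a JSON POST request with a pinned model version and a synchronous/asynchronous flag. The base URL, the `/predict` path, and every field name in the body are placeholders chosen for illustration. The actual routes and schemas are defined by AGAPI's OpenAPI documentation.

```python
import json
from urllib.request import Request

# Placeholder host (assumption); ".invalid" never resolves, so nothing is contacted.
BASE_URL = "https://example.invalid/agapi"


def build_prediction_request(
    structure_text: str,
    model_version: str,
    run_async: bool = False,
) -> Request:
    """Assemble a POST request for a hypothetical property-prediction endpoint."""
    body = {
        "structure": structure_text,     # e.g. a POSCAR or CIF string
        "model_version": model_version,  # pinned for reproducibility
        "async": run_async,              # True would request a background job
    }
    return Request(
        url=f"{BASE_URL}/predict",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_prediction_request("<structure file contents>", "v1")
    print(req.get_method(), req.full_url)
    print(json.loads(req.data))
```

Building the request separately from sending it keeps the payload easy to log and replay, which fits the emphasis on pinned versions and deterministic execution.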

AGAPI demonstrates canonical AI-powered research tasks via both single-tool and multi-tool workflows. Atomic structure-based property prediction (ALIGNN), force field optimization (ALIGNN-FF), advanced search/filtering (JARVIS-DFT), and structure visualization are each executable with a simple API call.

Figure 6: Real-time property prediction via ALIGNN using AGAPI API calls, returning structured JSON outputs and atomic structure visualizations.

Figure 7: End-to-end database search workflow, from natural language query translation to formatted, metadata-rich results for downstream processing.

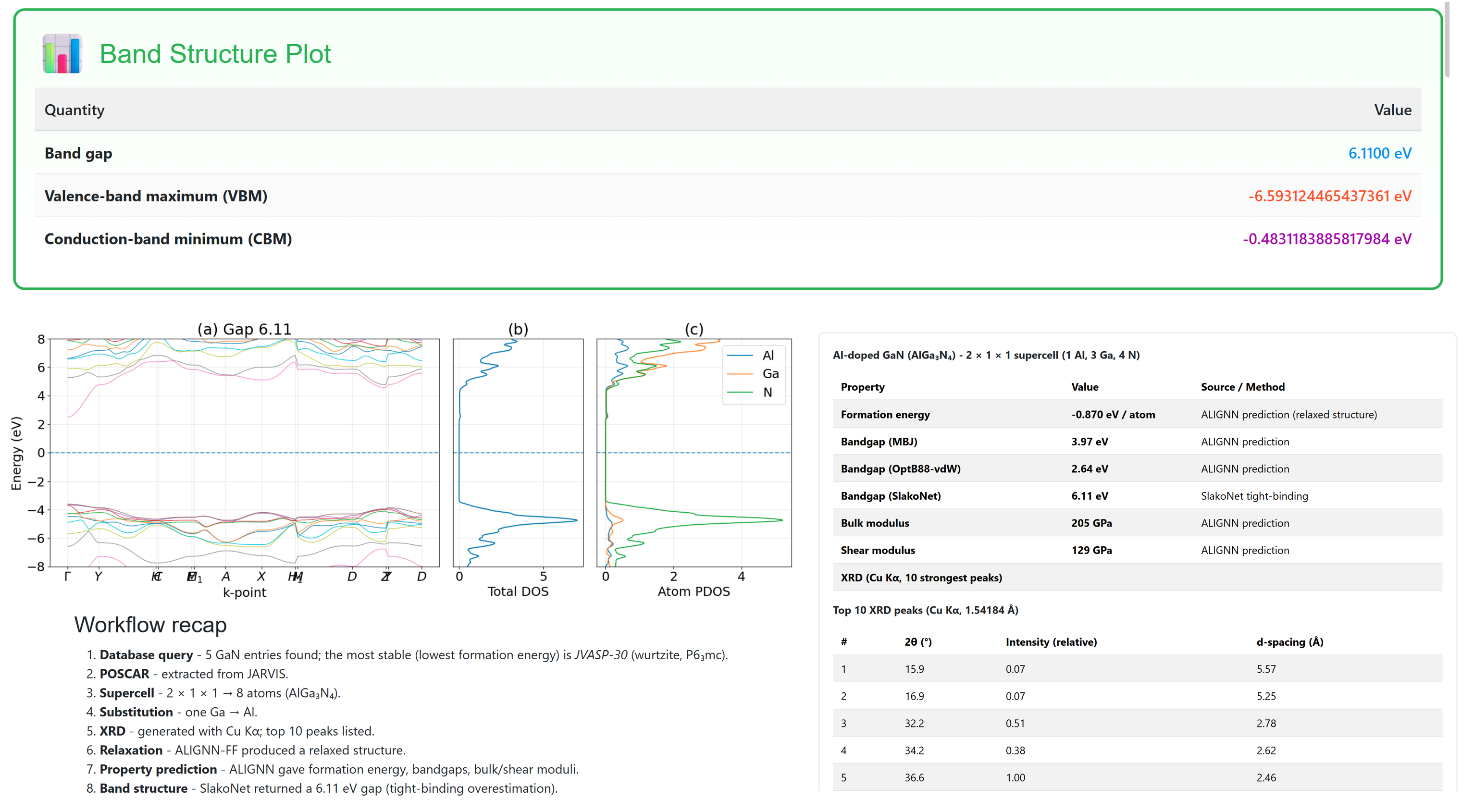

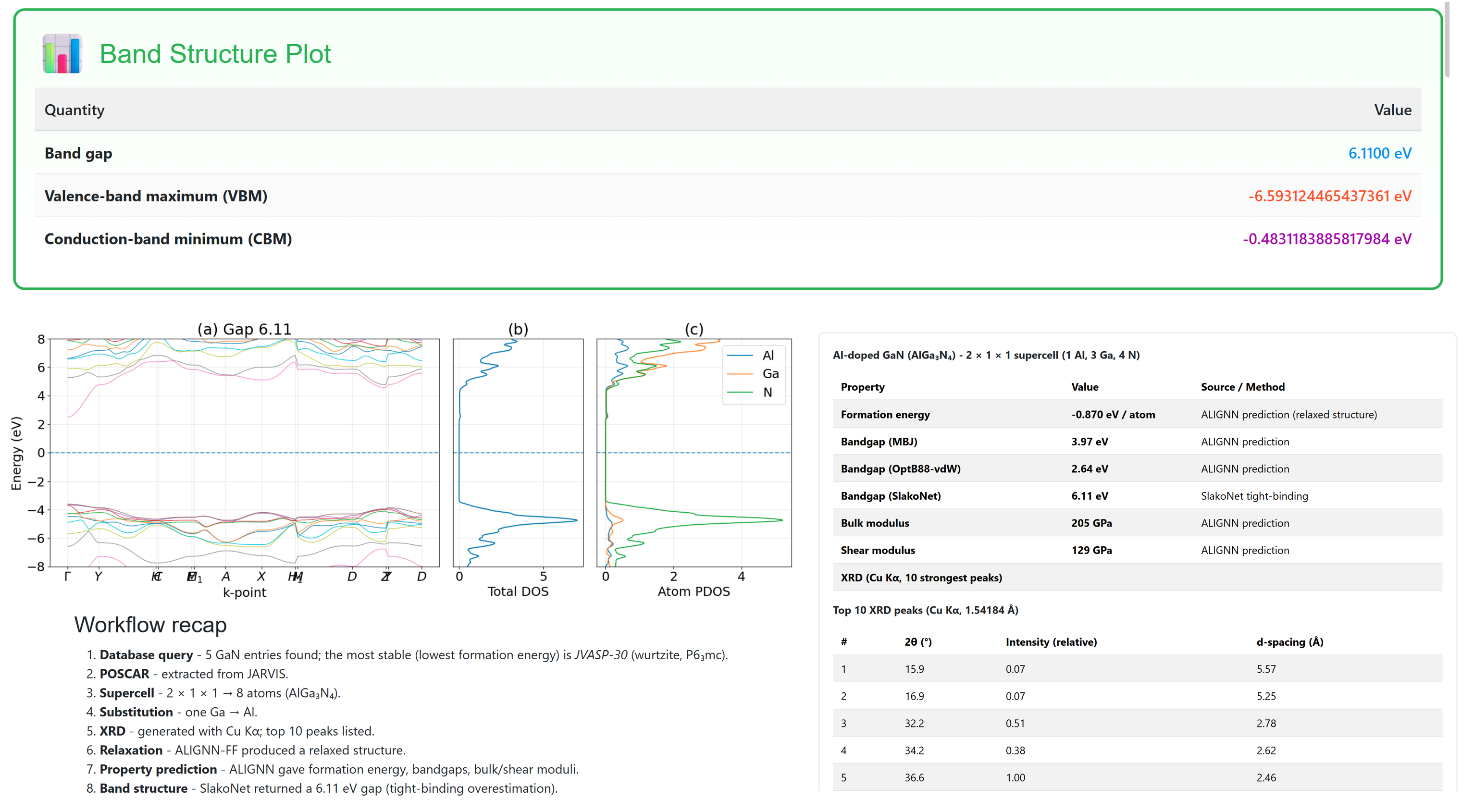

Multi-step simulations orchestrate complex design, characterization, and defect engineering pipelines through composition of multiple endpoints. For example, a comprehensive 10-step semiconductor defect workflow involves structure search, supercell construction, atomic substitution, geometric relaxation, XRD simulation, property and band structure calculations, and result synthesis—executed fully autonomously.

Figure 8: A 10-step workflow for defect analysis and band structure computation, incorporating structure generation, optimization, experimental observable simulation, and summarization.
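The composition idea can be sketched as a list of stages that pass a shared state dictionary along and stop at the first failure. The stage names below are the ones listed in the text; the stub bodies, the `State` layout, and `run_pipeline` are assumptions for illustration and perform no real computation.

```python
from typing import Callable, Dict, List, Tuple

State = Dict[str, object]
Stage = Tuple[str, Callable[[State], State]]

# Stage names taken from the workflow description; bodies are stubs.
STAGE_NAMES: List[str] = [
    "structure search",
    "supercell construction",
    "atomic substitution",
    "geometric relaxation",
    "XRD simulation",
    "property and band structure calculations",
    "result synthesis",
]


def make_stub(name: str) -> Callable[[State], State]:
    """Return a stage that only records its own completion."""
    def stage(state: State) -> State:
        completed = list(state.get("completed", []))
        completed.append(name)
        return {**state, "completed": completed}
    return stage


def run_pipeline(stages: List[Stage], state: State) -> State:
    """Run stages in order; on error, report where the workflow stopped."""
    for name, fn in stages:
        try:
            state = fn(state)
        except Exception as exc:  # broad catch is deliberate in this sketch
            return {**state, "failed_at": name, "error": str(exc)}
    return state


if __name__ == "__main__":
    stages = [(name, make_stub(name)) for name in STAGE_NAMES]
    print(run_pipeline(stages, {"query": "defect workflow"}))
```

Recording the failing stage together with the partial state is one simple way to limit error propagation in long automated workflows.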

AGAPI quantitatively benchmarks prediction accuracy for five material properties, comparing LLM-only (tool-free) and tool-augmented predictions against experiment. Bulk modulus predictions benefit from tool integration (MAE reduction: 27%; R² increase: 0.984→0.994), but band gap, Tc, SLME, and εx accuracy degrade (band gap MAE +40%, Tc MAE ×5, SLME +63%, εx +86%) when tool augmentation is enabled.

Figure 9: Parity plots for five properties show tool integration improves bulk modulus prediction but degrades other target properties versus experiment. Performance is property-dependent due to discrepancies in data quality and model/database coverage.
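For reference, the metrics quoted above can be computed as in the sketch below. It uses the common definitions, MAE as the mean absolute difference and R² as one minus the ratio of residual to total sum of squares. Whether the paper uses exactly this R² convention is an assumption. The numbers in the usage lines are toy values, not data from the paper.

```python
from typing import Sequence


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error between reference and predicted values."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r_squared(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def relative_change(new: float, old: float) -> float:
    """Fractional change from old to new; -0.27 means a 27% reduction."""
    return (new - old) / old


if __name__ == "__main__":
    # Toy values for illustration only.
    experiment = [1.0, 2.0, 3.0]
    predicted = [1.0, 2.0, 4.0]
    print(mae(experiment, predicted))
    print(r_squared(experiment, predicted))
    print(relative_change(73.0, 100.0))
```

Under this convention a 27% MAE reduction corresponds to `relative_change(new, old) == -0.27`, and an increase such as +40% corresponds to `0.40`.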

This non-uniform effect arises from property- and database-specific factors. For well-publicized materials, the LLM's parametric knowledge can in some cases outperform database retrieval. As queries move away from high-visibility systems (e.g., those involving defects or superlattices) or require outputs that cannot be derived from textual knowledge (such as full band structures), the benefit of physics-based tool APIs increases. The authors advocate for future adaptive tool-selection protocols within scientific AI agents.
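The source does not specify what an adaptive tool-selection protocol would look like. Purely as a hypothetical illustration of the reasoning above, the sketch below routes a query to tools when it requests a non-textual output, mentions a low-visibility system, or targets a property where tools helped in the benchmark. The rule sets, names, and keyword matching are all assumptions, not the authors' method.

```python
from typing import Optional

# Assumed rule sets, loosely derived from the observations in the text.
TOOL_FAVORED_PROPERTIES = {"bulk_modulus"}    # tools improved accuracy here
NON_TEXTUAL_OUTPUTS = {"band_structure"}      # cannot come from text recall
LOW_VISIBILITY_MARKERS = ("defect", "superlattice")


def should_call_tool(
    prop: str,
    query: str,
    requested_output: Optional[str] = None,
) -> bool:
    """Hypothetical routing rule: True means use a tool API, False means LLM-only."""
    if requested_output in NON_TEXTUAL_OUTPUTS:
        return True
    if any(marker in query.lower() for marker in LOW_VISIBILITY_MARKERS):
        return True
    return prop in TOOL_FAVORED_PROPERTIES


if __name__ == "__main__":
    print(should_call_tool("band_gap", "band gap of silicon"))
    print(should_call_tool("band_gap", "band gap of silicon with a vacancy defect"))
    print(should_call_tool("bulk_modulus", "bulk modulus of diamond"))
```

A learned or confidence-based policy could replace these fixed rules. The sketch only shows where such a decision would sit in the agent loop.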

Limitations, Theoretical Implications, and Future Prospects

The AGAPI framework substantially lowers the access barrier for autonomous materials simulation, encourages rigorous reproducibility, and enables rich, multi-step workflow composition. The agentic paradigm, as operationalized in this system, proves especially valuable when integrated with ML-based property predictors, force fields, and domain constraints.

Limitations include coverage boundaries (limited to available API endpoints), occasional LLM misinterpretation of ambiguous queries, and brittleness in excessively long automated workflows (due to error propagation and execution timeouts). The efficacy of tool augmentation is non-uniform across prediction tasks, contingent on database quality, coverage, and computational standardization.

Theoretically, AGAPI supports the hypothesis that the next bottleneck in scientific AI will shift from LLM reasoning to the breadth and reliability of accessible scientific tools and data APIs. Practically, the work provides a scalable model for other scientific disciplines by enforcing domain-specific tool integration, open-source infrastructure transparency, and proactive reproducibility.

Prospective development directions include extension to multi-modal (image/spectra) workflows, adaptive and active learning, multi-agent autonomous composition, on-prem/cloud hybrid deployment, and community-contributed plugins and databases.

Conclusion

AGAPI-Agents establishes an open, highly modular, and agentic AI framework for autonomous materials discovery, combining robust LLM-driven planning with reproducible, tool-augmented execution. Performance analyses reveal the nuanced relationship between tool augmentation and LLM competency across material properties, underscoring the importance of adaptive agent architectures and verifiable API integration in scientific automation. The platform's extensible infrastructure and open-source orientation position it as a reference implementation for next-generation agentic science systems.