- The paper introduces a novel on-the-fly 3D reconstruction framework leveraging multi-camera rigs to overcome monocular limitations.

- It employs hierarchical camera initialization, lightweight bundle adjustment, and redundancy-free Gaussian sampling to achieve drift-free trajectories and high-fidelity scene synthesis.

- Experiments show significant gains in rendering fidelity (up to 2.82 dB PSNR improvement) and efficient reconstruction, enabling real-time applications in autonomous navigation and XR.

On-the-fly Large-scale 3D Reconstruction from Multi-Camera Rigs

Introduction and Motivation

This work presents an on-the-fly 3D reconstruction framework that leverages multi-camera rigs to synthesize large-scale scenes online with high photorealistic fidelity and stable trajectories. Prior on-the-fly 3DGS (3D Gaussian Splatting) approaches predominantly target monocular inputs, which often leads to incomplete coverage and spatial inconsistency because of the restricted field of view (FOV). By fusing overlapping RGB streams from multiple cameras, this method achieves comprehensive scene sampling and drift-free trajectory optimization while removing the need for explicit camera calibration.

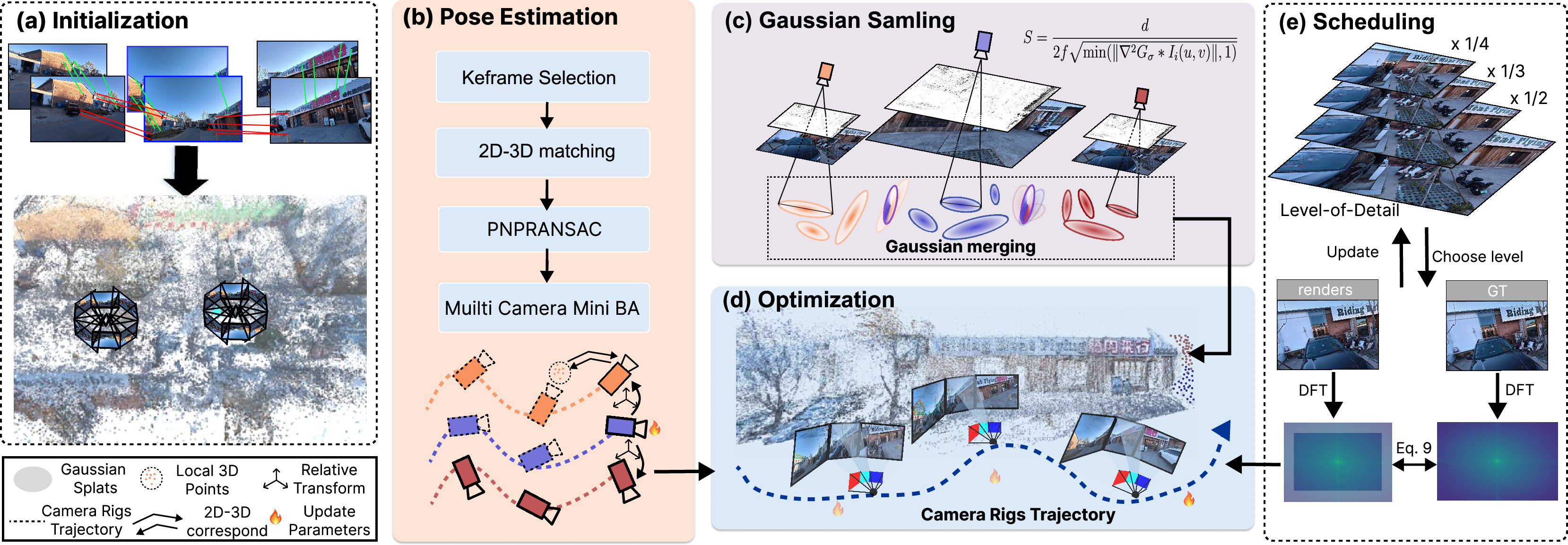

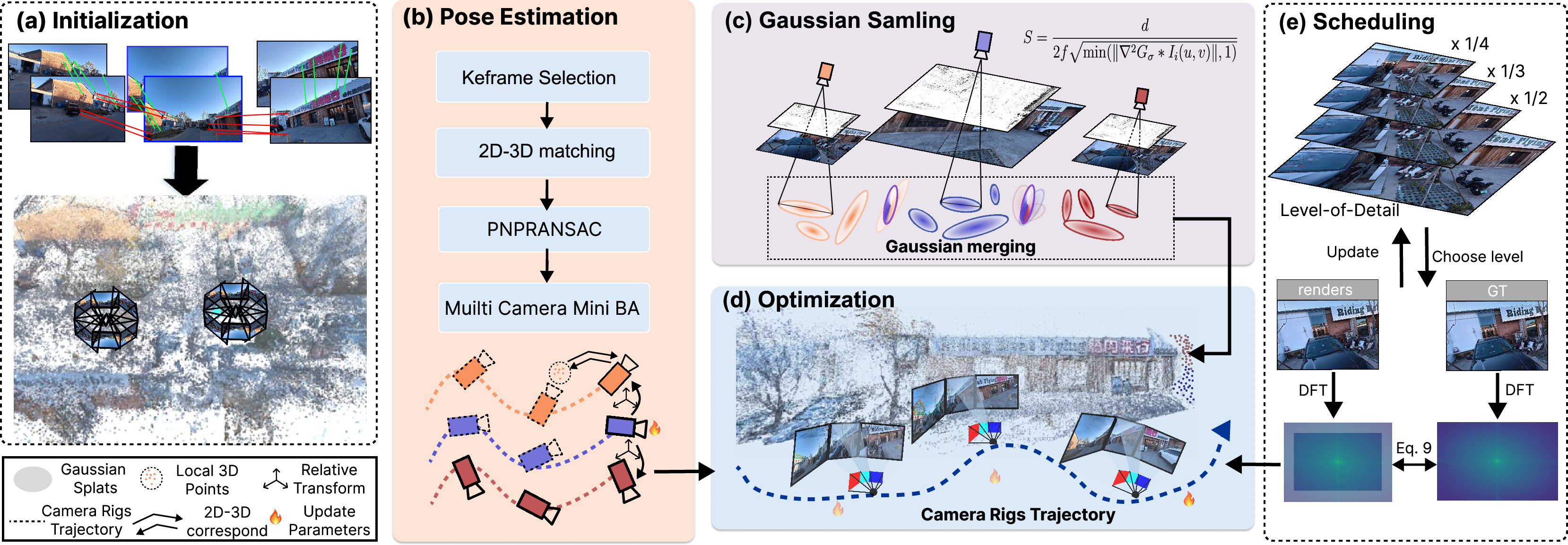

Pipeline Overview

The reconstruction pipeline begins with hierarchical camera initialization, which identifies a central camera through feature matching and progressively aligns the remaining cameras without extrinsic or focal-length calibration. A lightweight multi-camera bundle adjustment (BA) then stabilizes trajectories across wide baselines with minimal computational overhead. Redundancy-free Gaussian sampling mitigates spatial and temporal overlap, and a frequency-aware optimization scheduler adaptively refines regions according to their local spectral characteristics and geometric uncertainty.

Figure 1: Overview of the on-the-fly multi-camera pipeline integrating initialization, bundle adjustment, Gaussian sampling/merging, and frequency-adaptive scheduling for large-scale scene synthesis.

Multi-Camera Rig and Pose Initialization

The proposed system establishes inter-camera consistency through feature-based alignment to a central camera and tree-structured matching. For each rig configuration, the central reference camera is selected to minimize its pairwise distance to the other cameras. Initial transformation matrices are computed and then refined with GPU-accelerated RANSAC and a small-scale (mini) BA over co-visible 3D points. This hierarchical strategy is critical for robust trajectory estimation with flexible, synchronized multi-camera arrays, especially in wide-baseline configurations.
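The central-camera selection and tree-structured ordering can be illustrated with a minimal Python sketch. It assumes a symmetric pairwise distance matrix `dist` between cameras (for instance built from estimated camera centers; how the paper actually measures this distance is an assumption here) and uses Prim's minimum spanning tree as one plausible way to realize the tree structure. The function names are hypothetical, and the RANSAC and mini-BA refinement steps are not shown.

```python
import numpy as np


def select_central_camera(dist: np.ndarray) -> int:
    """Return the camera whose summed distance to all other cameras is smallest."""
    return int(np.argmin(dist.sum(axis=1)))


def alignment_tree(dist: np.ndarray, root: int) -> list[tuple[int, int]]:
    """Grow a minimum spanning tree from `root` with Prim's algorithm.

    Returns (parent, child) edges in attachment order, so every camera is
    aligned to a neighbour that has already been aligned.
    """
    n = dist.shape[0]
    in_tree = {root}
    edges: list[tuple[int, int]] = []
    while len(in_tree) < n:
        best = None
        for p in sorted(in_tree):
            for c in range(n):
                if c in in_tree:
                    continue
                # Keep the cheapest edge from the tree to an unattached camera.
                if best is None or dist[p, c] < dist[best]:
                    best = (p, c)
        edges.append(best)
        in_tree.add(best[1])
    return edges


# Five cameras on a line (hypothetical positions in metres).
x = np.array([0.0, 1.0, 2.0, 3.0, 10.0])
dist = np.abs(x[:, None] - x[None, :])
center = select_central_camera(dist)
print(center, alignment_tree(dist, center))
# prints: 2 [(2, 1), (1, 0), (2, 3), (3, 4)]
```

Each `(parent, child)` edge names the already-aligned camera that a new camera would be registered against, so alignment proceeds along short links instead of directly across the widest baselines.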

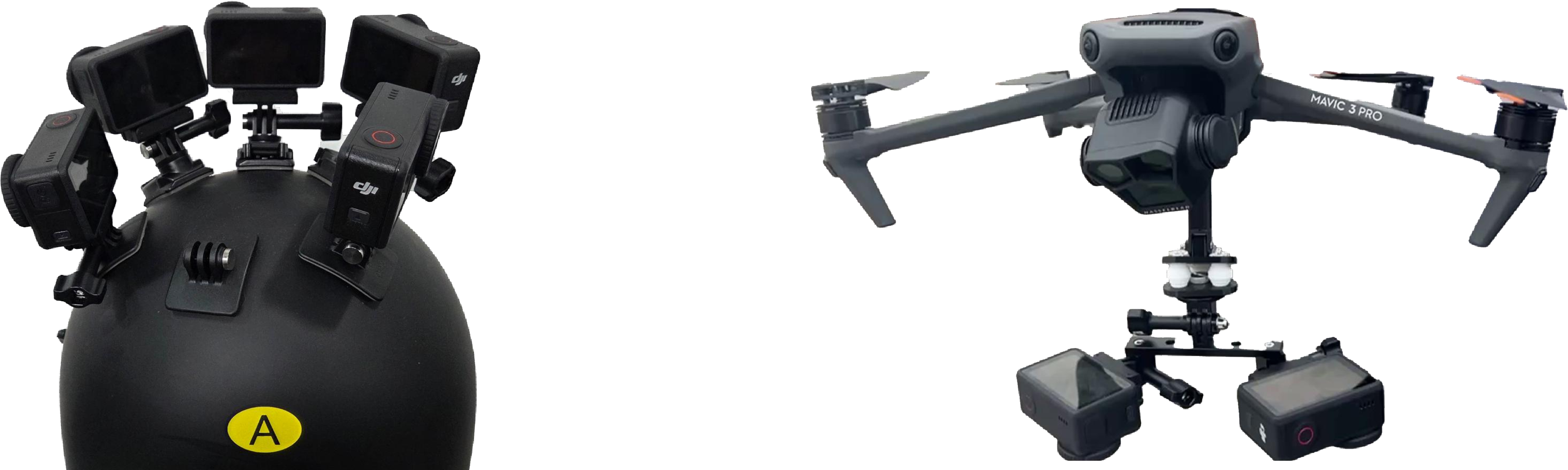

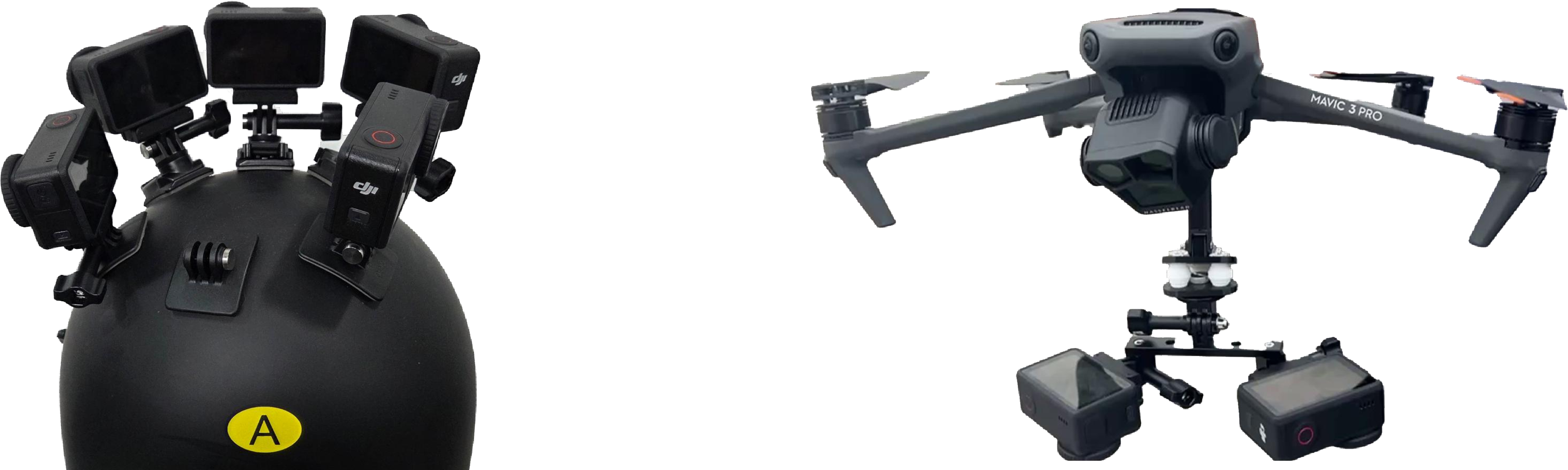

Figure 2: Characterization of helmet and drone-mounted multi-camera rigs utilized for street and aerial captures, providing synchronized and spatially overlapping perspectives.

Redundancy-free Gaussian Sampling and Merging

Instead of unsupervised pixel-wise splat initialization, the framework applies a Laplacian of Gaussian (LoG) operator to compute insertion probabilities that concentrate on high-frequency image regions or areas with high reconstruction error. Gaussian primitives are instantiated adaptively by thresholding the insertion probability P_a(u, v), which keeps the representation spatially compact. Inter-camera redundancy is further reduced through reprojection and bilinear correspondence matching, with Gaussian merging conditioned on image-space scale and depth consistency. Primitives with substantial depth deviation are excluded from merging to preserve occlusion structure and surface separation.
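A minimal NumPy sketch of the sampling and merging tests follows, under stated assumptions. The LoG is built from a separable Gaussian blur plus a 5-point Laplacian; combining it with the error map through an element-wise maximum is our reading of "or"; and the threshold `tau`, as well as `depth_tol` and `scale_tol`, are hypothetical values rather than the paper's. `bilinear_sample` shows how a reprojected, sub-pixel location could be looked up in another camera's depth map.

```python
import numpy as np


def gaussian_kernel1d(sigma: float) -> np.ndarray:
    """Normalized 1-D Gaussian kernel truncated at 3 sigma."""
    radius = max(1, int(3 * sigma + 0.5))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()


def laplacian_of_gaussian(img: np.ndarray, sigma: float = 1.0) -> np.ndarray:
    """Separable Gaussian blur followed by a 5-point discrete Laplacian."""
    k = gaussian_kernel1d(sigma)
    r = len(k) // 2
    blurred = np.pad(img.astype(float), r, mode="edge")
    # 'valid' convolution trims the padding back to the original size.
    blurred = np.apply_along_axis(np.convolve, 1, blurred, k, mode="valid")
    blurred = np.apply_along_axis(np.convolve, 0, blurred, k, mode="valid")
    p = np.pad(blurred, 1, mode="edge")
    return p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:] - 4 * p[1:-1, 1:-1]


def _normalize(a: np.ndarray) -> np.ndarray:
    """Rescale to [0, 1]; a constant map becomes all zeros."""
    span = a.max() - a.min()
    return (a - a.min()) / span if span > 0 else np.zeros_like(a, dtype=float)


def insertion_probability(gray, error, sigma=1.0):
    """Hypothetical P_a(u, v): high where |LoG| or the reconstruction error is large."""
    edges = _normalize(np.abs(laplacian_of_gaussian(gray, sigma)))
    return np.maximum(edges, _normalize(error))


def bilinear_sample(img, u, v):
    """Sample `img` at sub-pixel column u, row v (clamped to the image bounds)."""
    h, w = img.shape
    u = min(max(u, 0.0), w - 1.0)
    v = min(max(v, 0.0), h - 1.0)
    u0, v0 = int(u), int(v)
    u1, v1 = min(u0 + 1, w - 1), min(v0 + 1, h - 1)
    a, b = u - u0, v - v0
    return float((1 - a) * (1 - b) * img[v0, u0] + a * (1 - b) * img[v0, u1]
                 + (1 - a) * b * img[v1, u0] + a * b * img[v1, u1])


def should_merge(depth_new, depth_existing, scale_new, scale_existing,
                 depth_tol=0.05, scale_tol=2.0):
    """Merge only if depths agree (relative tolerance) and image-space scales are similar."""
    depth_ok = abs(depth_new - depth_existing) <= depth_tol * depth_existing
    scale_ratio = max(scale_new, scale_existing) / min(scale_new, scale_existing)
    return bool(depth_ok and scale_ratio <= scale_tol)


# Usage: a vertical step edge, no reconstruction error yet.
gray = np.zeros((32, 32))
gray[:, 16:] = 1.0
prob = insertion_probability(gray, np.zeros_like(gray))
mask = prob > 0.5  # tau = 0.5 is a hypothetical threshold
```

The depth check is what keeps a foreground Gaussian from being merged into a background one that happens to project to the same pixel, which mirrors the occlusion-preserving rule described above.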

Frequency-based Optimization Scheduling

The optimization protocol uses per-frame DFT analysis to allocate refinement iterations in a spatially adaptive manner. Regions whose spectra remain dominated by low frequencies are prioritized for further upsampling and anchor aggregation, which accelerates convergence toward a globally consistent scene. Gaussian and camera parameters are updated jointly, with spherical harmonic gradients detached for non-central cameras. To satisfy real-time constraints, the scheduler bounds the number of iterations per keyframe to between 10 and 60.
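The scheduling idea can be sketched as follows, with loud assumptions: the high-frequency energy ratio, the `cutoff` value, and the linear mapping from the spectral gap between the target frame and the current rendering to an iteration count are illustrative choices of ours; only the 10 to 60 iteration bounds come from the text. A rendering that still lacks the target's high-frequency energy (that is, remains low-frequency dominated) receives more iterations. Calling `iterations_for_keyframe` on image tiles instead of whole frames would give a spatially adaptive variant.

```python
import numpy as np


def high_freq_ratio(img: np.ndarray, cutoff: float = 0.25) -> float:
    """Fraction of spectral energy at radial frequencies above `cutoff` (cycles/pixel)."""
    centered = img - img.mean()  # drop the DC term so flat images score 0
    power = np.abs(np.fft.fft2(centered)) ** 2
    fy = np.fft.fftfreq(img.shape[0])[:, None]
    fx = np.fft.fftfreq(img.shape[1])[None, :]
    radius = np.sqrt(fx ** 2 + fy ** 2)
    total = power.sum()
    return float(power[radius > cutoff].sum() / total) if total > 0 else 0.0


def iterations_for_keyframe(target, rendered, min_iters=10, max_iters=60, cutoff=0.25):
    """Hypothetical rule: more iterations when the rendering misses high-frequency detail."""
    gap = high_freq_ratio(target, cutoff) - high_freq_ratio(rendered, cutoff)
    gap = min(max(gap, 0.0), 1.0)  # clamp so the result stays within the bounds
    return int(round(min_iters + (max_iters - min_iters) * gap))


# Usage: a fine checkerboard target versus a blank (fully blurred) rendering.
checker = (np.indices((16, 16)).sum(axis=0) % 2).astype(float)
blank = np.zeros_like(checker)
print(iterations_for_keyframe(checker, blank), iterations_for_keyframe(checker, checker))
```

Clamping the gap guarantees the per-keyframe budget never leaves the 10 to 60 range, regardless of how the spectral measure behaves.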

Dataset: RigScapes

Because existing multi-camera datasets suffer from exposure mismatches, excessive scene dynamics, and limited scale, the authors introduce a new dataset, RigScapes. It contains synchronized helmet and drone captures spanning 1 km street scenes and 1 km² aerial areas, with exposure, the prevalence of dynamic objects, and desynchronization systematically controlled. The data is acquired with DJI Action 5 Pro cameras and provides a comprehensive benchmark for low-latency, high-fidelity outdoor reconstruction.

Quantitative and Qualitative Evaluation

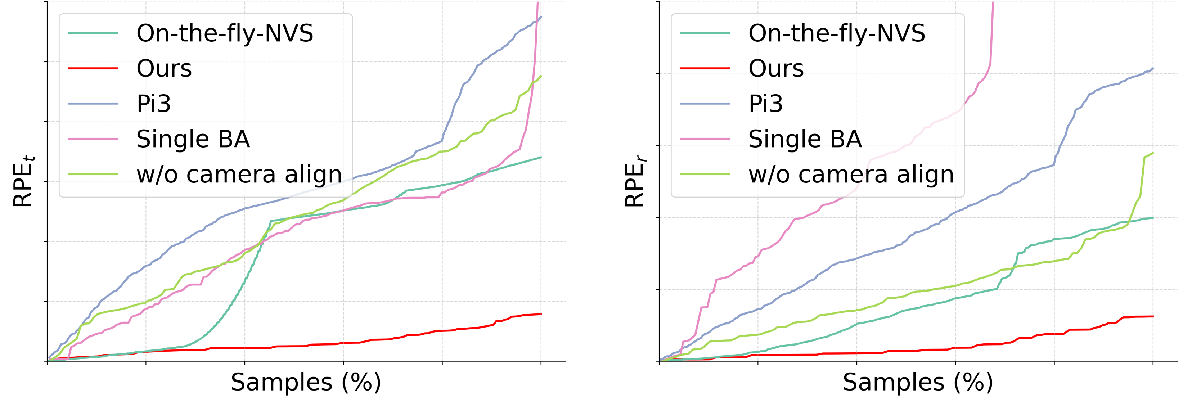

The experiments cover three datasets, with configurations designed to test system robustness under varying camera overlap and scene complexity. Novel view synthesis is evaluated with PSNR, SSIM, and LPIPS, and pose accuracy with absolute trajectory error (ATE) and relative pose error (RPE). The method surpasses the baselines in rendering fidelity (up to a 2.82 dB PSNR gain and a 15% SSIM improvement) and in reconstruction speed (e.g., kilometer-scale scenes in 2 minutes), outperforming both SLAM and offline approaches in efficiency and consistency.
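For reference, the evaluation metrics can be computed as in this sketch. `ate_rmse` assumes the estimated trajectory has already been aligned to ground truth (e.g., with a similarity transform), and `rpe_trans_rmse` is a simplified, position-only variant of RPE that ignores rotation; both simplifications are ours and differ from full benchmark tooling.

```python
import numpy as np


def psnr(pred: np.ndarray, target: np.ndarray, max_val: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB for images scaled to [0, max_val]."""
    mse = np.mean((pred - target) ** 2)
    return float(10.0 * np.log10(max_val ** 2 / mse))


def ate_rmse(est_xyz: np.ndarray, gt_xyz: np.ndarray) -> float:
    """Absolute trajectory error (RMSE of positions); assumes prior alignment."""
    return float(np.sqrt(np.mean(np.sum((est_xyz - gt_xyz) ** 2, axis=1))))


def rpe_trans_rmse(est_xyz: np.ndarray, gt_xyz: np.ndarray, delta: int = 1) -> float:
    """Simplified translational RPE: compares position increments over `delta` frames."""
    d_est = est_xyz[delta:] - est_xyz[:-delta]
    d_gt = gt_xyz[delta:] - gt_xyz[:-delta]
    return float(np.sqrt(np.mean(np.sum((d_est - d_gt) ** 2, axis=1))))


def mse_ratio_for_psnr_gain(gain_db: float) -> float:
    """Factor by which MSE shrinks for a given PSNR gain."""
    return 10.0 ** (gain_db / 10.0)


# Usage: a constant 0.1 m offset raises ATE but leaves the increments (RPE) untouched.
gt = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [2.0, 0.0, 0.0]])
est = gt + np.array([0.0, 0.1, 0.0])
print(ate_rmse(est, gt), rpe_trans_rmse(est, gt))
```

Since PSNR is 10·log10(MAX²/MSE), the reported 2.82 dB gain corresponds to an MSE roughly 1.91 times lower (10^0.282 ≈ 1.91).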

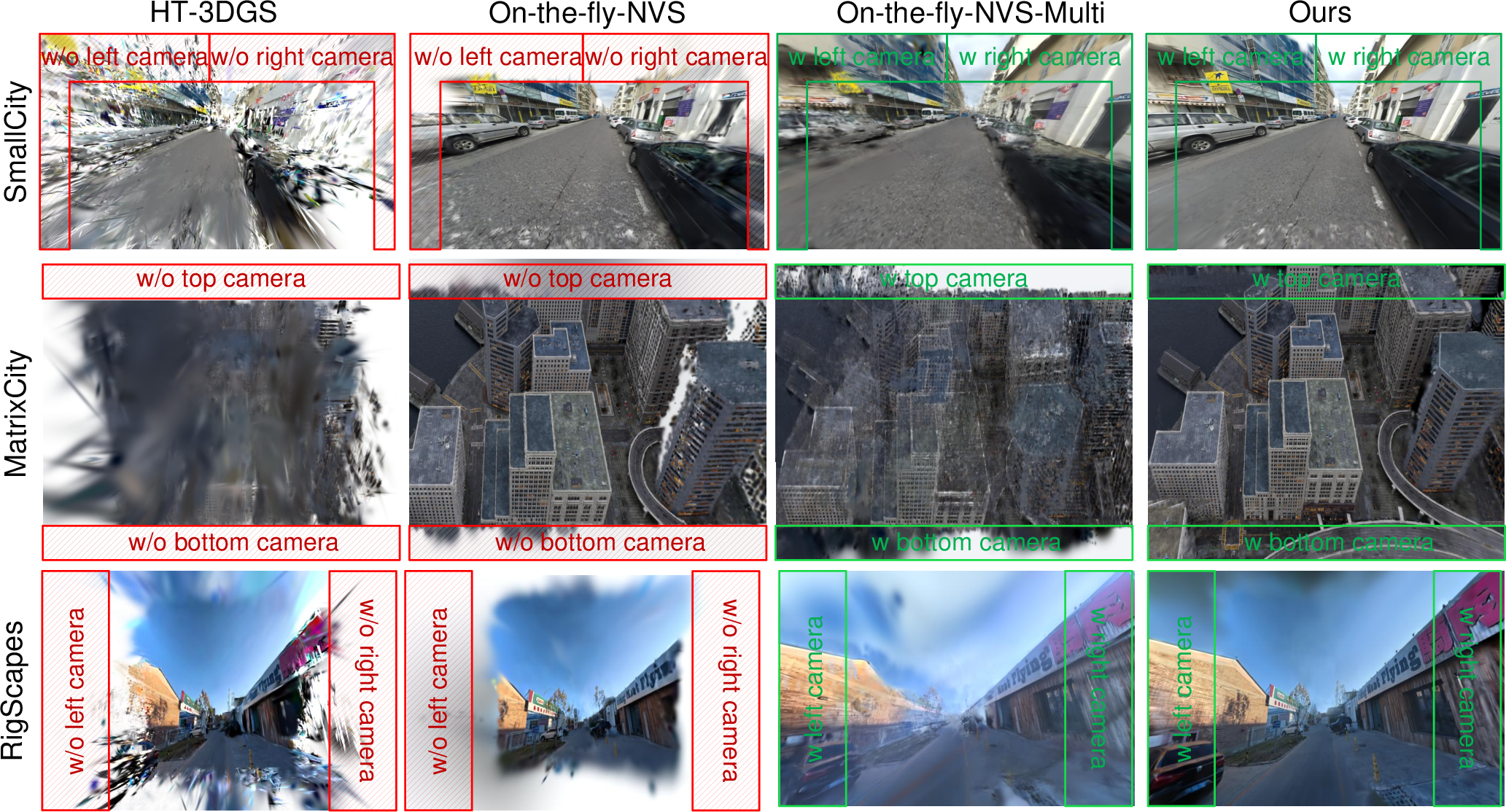

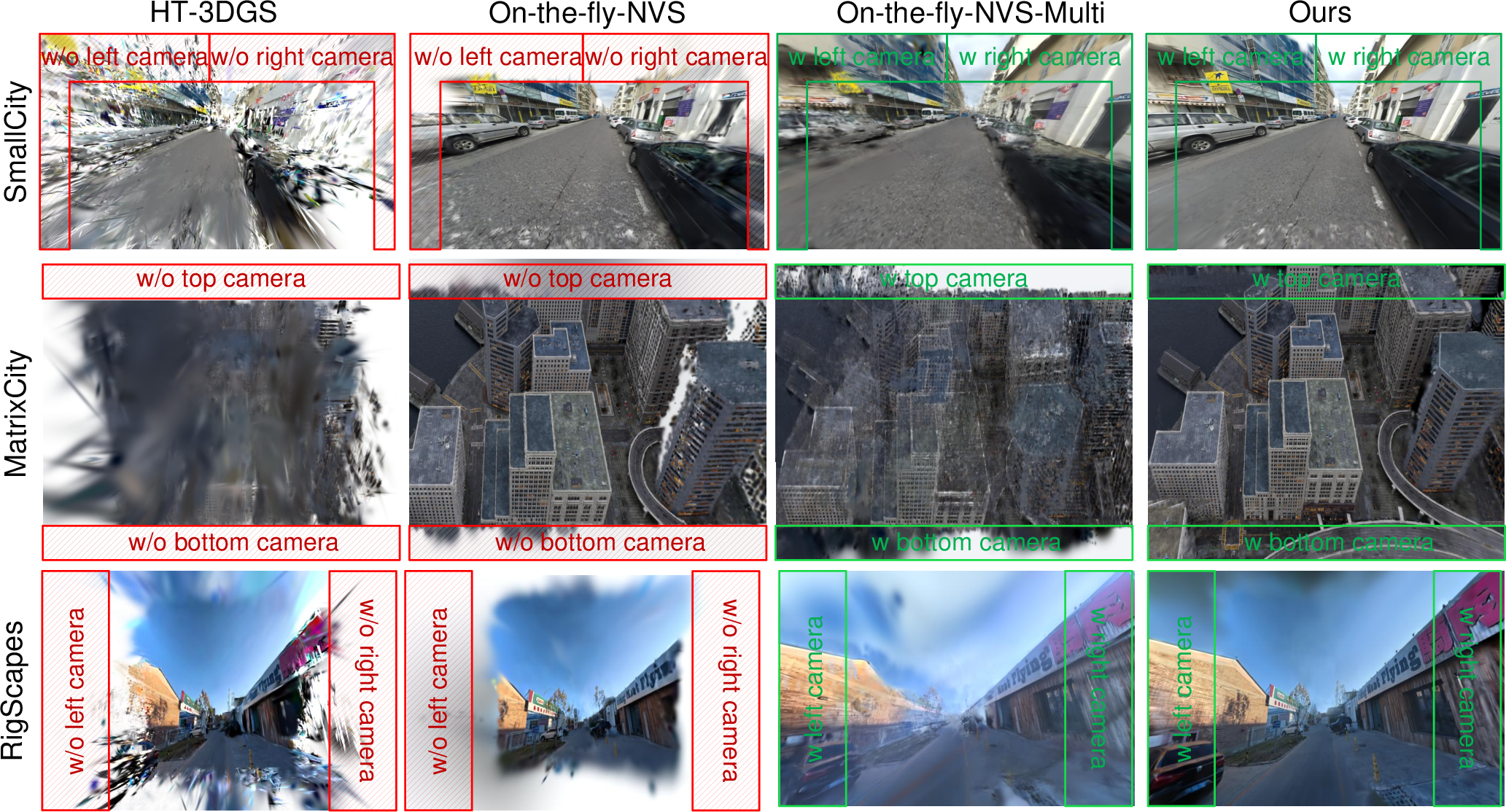

Figure 3: Scene reconstructions under an expanded FOV. Monocular methods display incomplete coverage, while multi-camera fusion achieves complete, artifact-free synthesis.

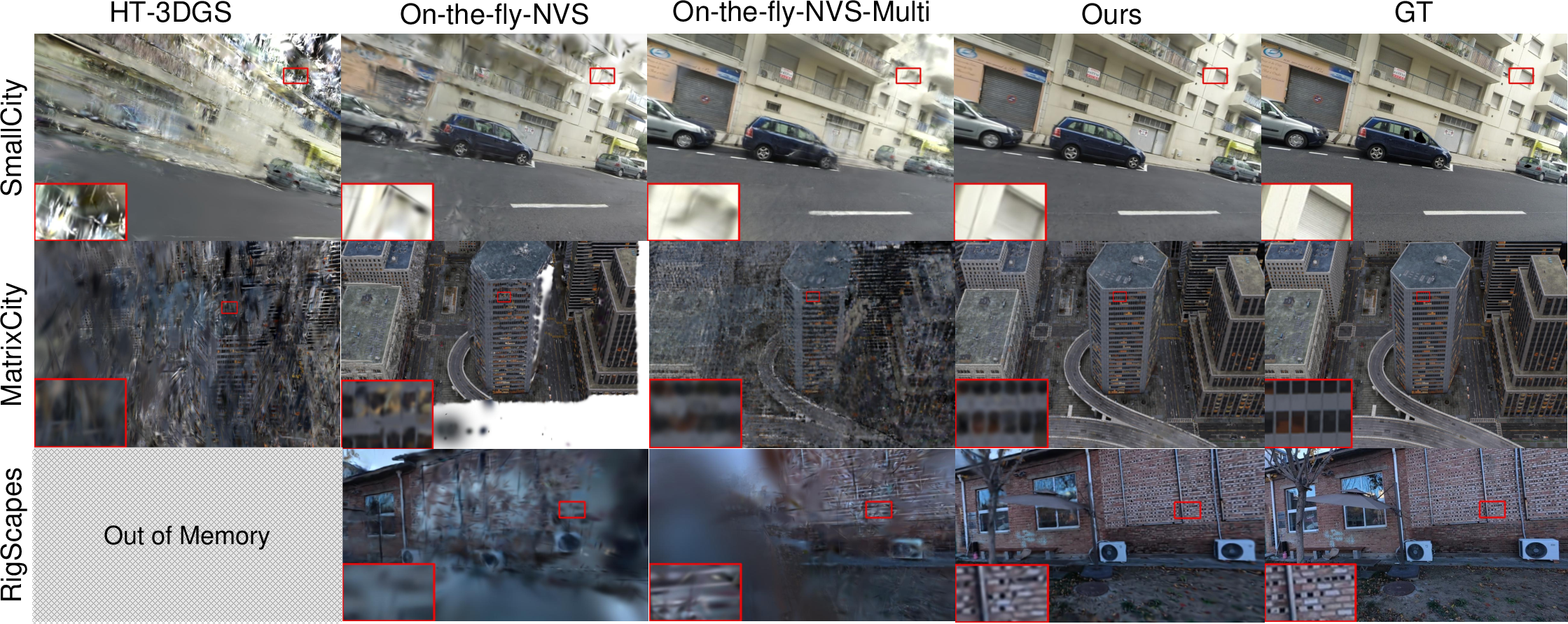

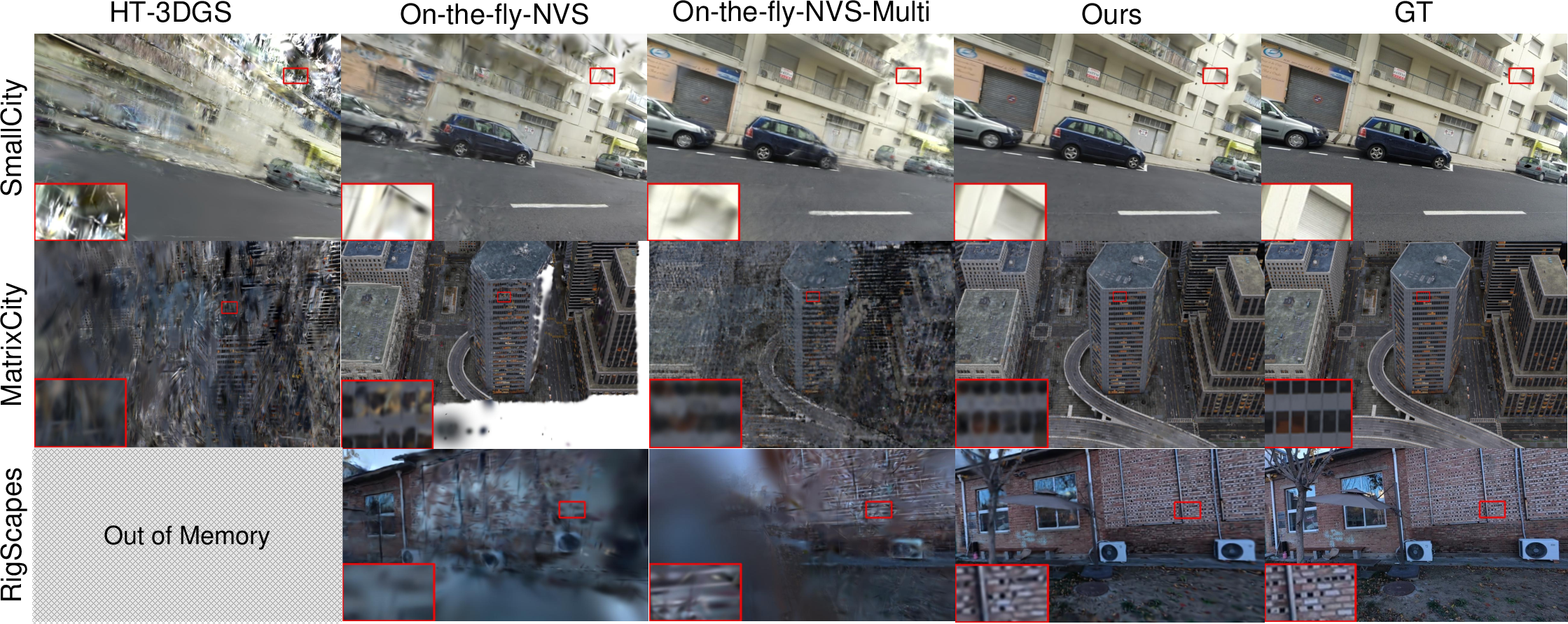

Figure 4: Performance under extreme viewpoint shifts; only the proposed approach maintains artifact-free renderings and geometric consistency.

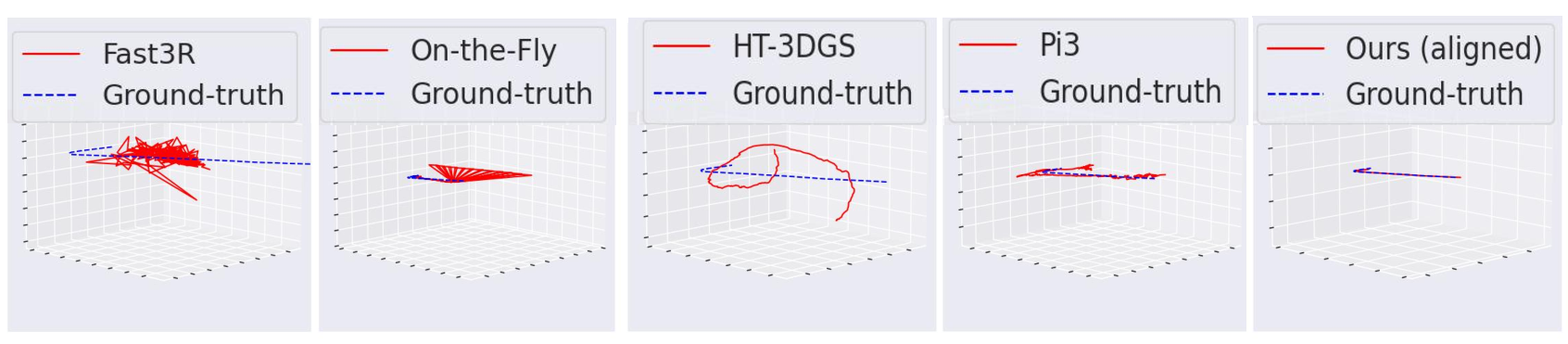

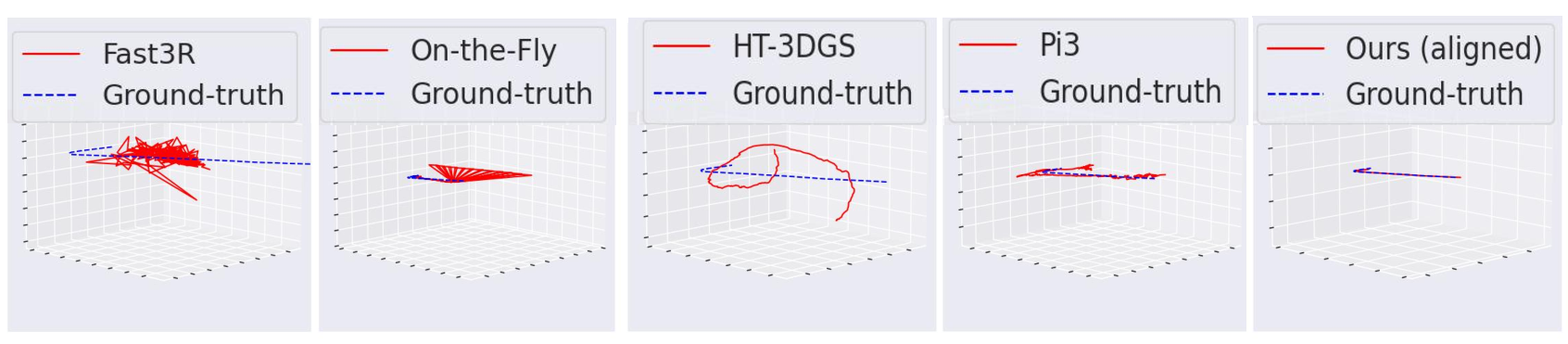

Figure 5: Visualization of multi-camera rig trajectories; unlike prior methods, trajectory drift is effectively suppressed across wide baselines.

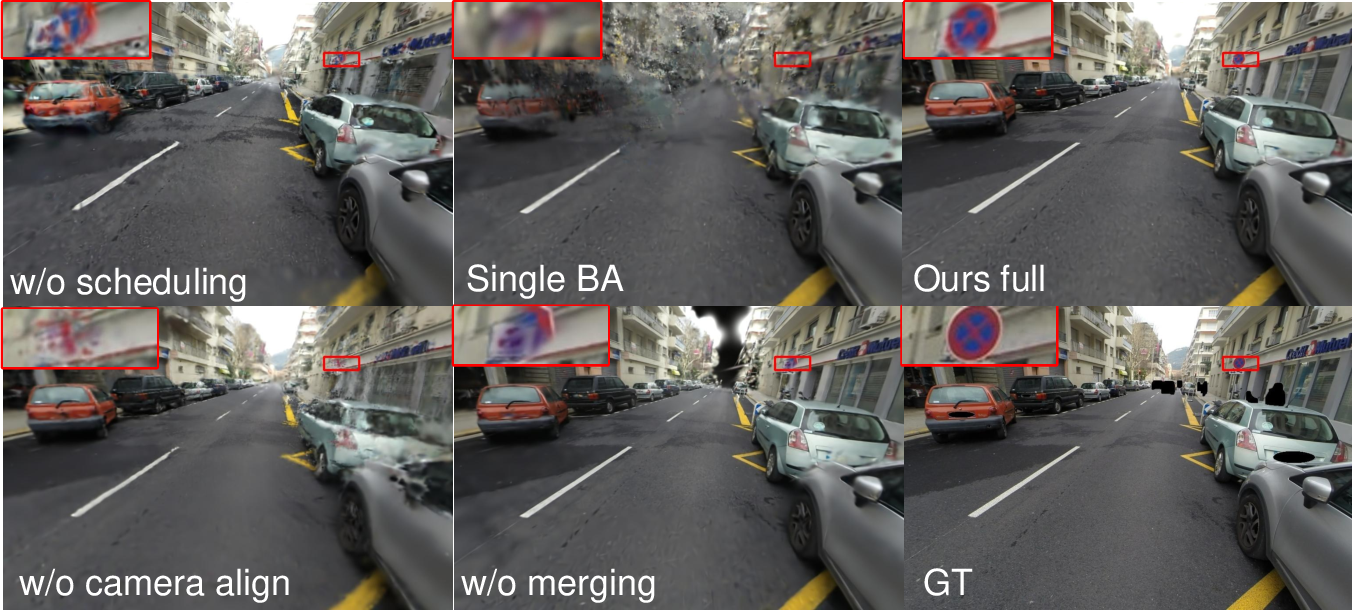

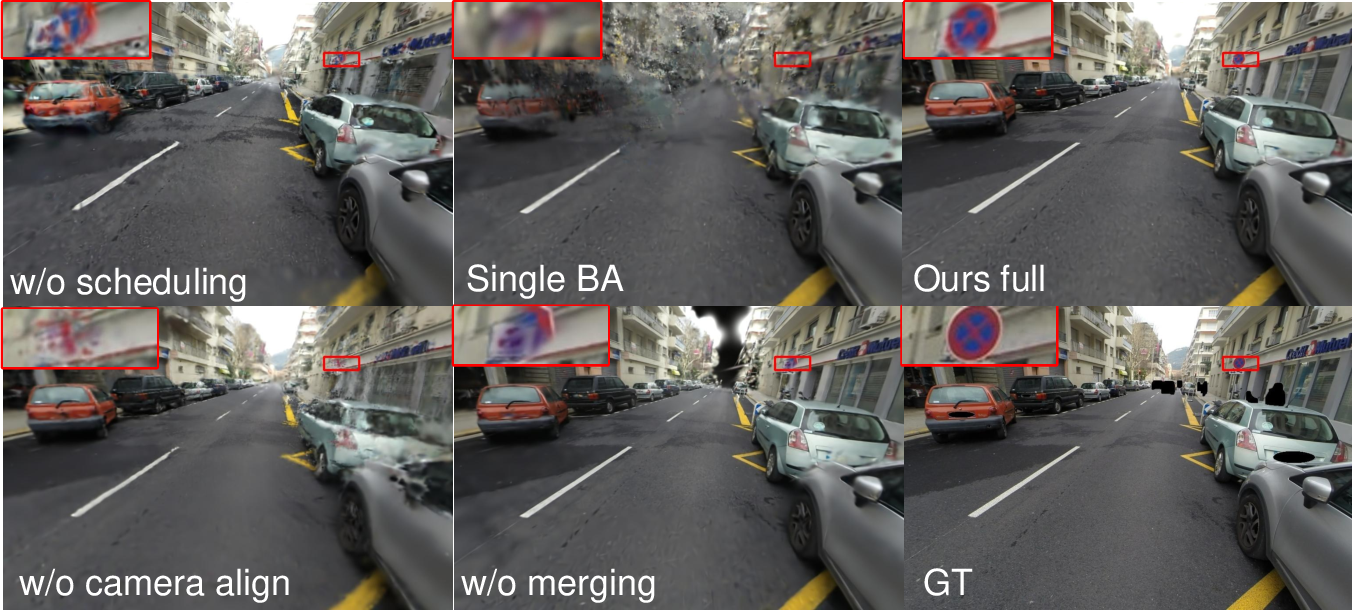

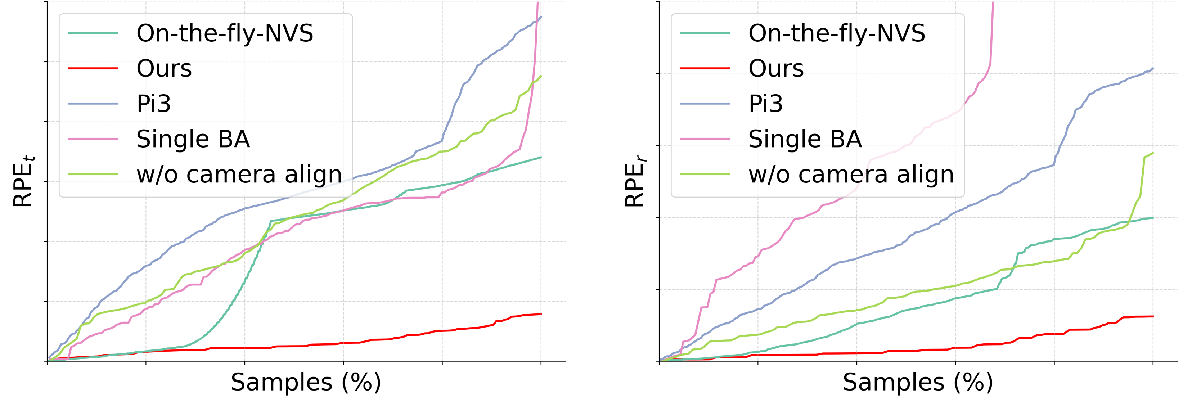

Ablation and Efficiency Analysis

Comprehensive ablation studies show that disabling multi-camera BA, hierarchical alignment, frequency scheduling, or Gaussian merging substantially lowers PSNR and SSIM and raises LPIPS. The merging strategy also reduces memory usage. The per-keyframe runtime breakdown confirms real-time feasibility (∼200 ms per camera), and the system scales reliably with multi-camera inputs.

Figure 6: Ablation results highlighting the critical contributions of camera alignment, BA, scheduling, and Gaussian merging for maintaining high visual fidelity.

Figure 7: Pose error distributions across ablation settings, demonstrating trajectory stabilization provided by the full pipeline.

Implications and Future Directions

This framework advances large-scale online 3D reconstruction by removing the need for calibration, inertial sensors, or offline optimization. Its architecture is directly applicable to autonomous navigation, city modeling, and XR scenarios that demand comprehensive, low-latency scene synthesis. Its hierarchical initialization, redundancy-aware sampling, and spectral scheduling components open new avenues for scaling scene size, reducing memory, and improving multi-view consistency. Future work may extend the approach to unconstrained dynamic scenes and non-rigid rigs, and integrate learned priors for improved semantic or temporal reasoning.

Conclusion

The paper advances the state of the art in on-the-fly 3D reconstruction from multi-camera rigs with a robust pipeline that combines hierarchical initialization, scalable bundle adjustment, adaptive Gaussian management, and frequency-based optimization scheduling. The resulting system achieves efficient, drift-free, high-fidelity reconstruction of kilometer-scale scenes from raw camera streams, making multi-camera scene capture and synthesis more practical and robust (2512.08498).