SurfFill: Completion of LiDAR Point Clouds via Gaussian Surfel Splatting (2512.03010v1)

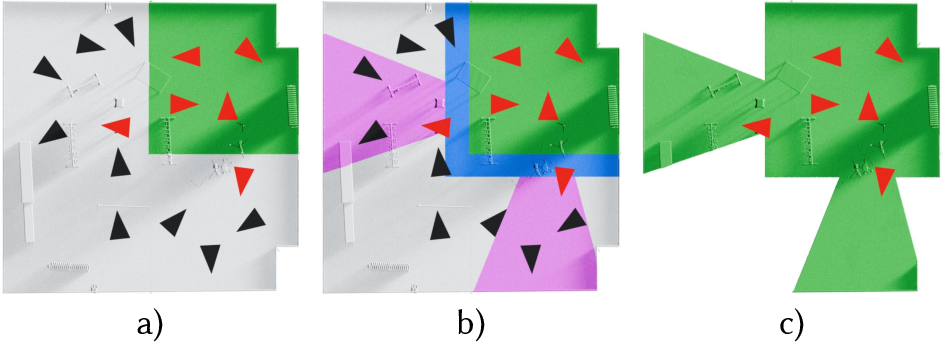

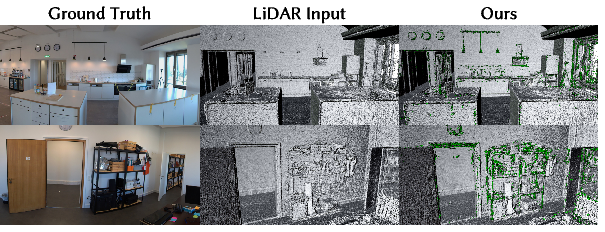

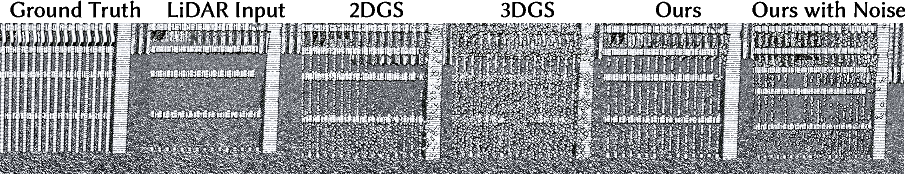

Abstract: LiDAR-captured point clouds are often considered the gold standard in active 3D reconstruction. While their accuracy is exceptional in flat regions, the capturing is susceptible to miss small geometric structures and may fail with dark, absorbent materials. Alternatively, capturing multiple photos of the scene and applying 3D photogrammetry can infer these details as they often represent feature-rich regions. However, the accuracy of LiDAR for featureless regions is rarely reached. Therefore, we suggest combining the strengths of LiDAR and camera-based capture by introducing SurfFill: a Gaussian surfel-based LiDAR completion scheme. We analyze LiDAR capturings and attribute LiDAR beam divergence as a main factor for artifacts, manifesting mostly at thin structures and edges. We use this insight to introduce an ambiguity heuristic for completed scans by evaluating the change in density in the point cloud. This allows us to identify points close to missed areas, which we can then use to grow additional points from to complete the scan. For this point growing, we constrain Gaussian surfel reconstruction [Huang et al. 2024] to focus optimization and densification on these ambiguous areas. Finally, Gaussian primitives of the reconstruction in ambiguous areas are extracted and sampled for points to complete the point cloud. To address the challenges of large-scale reconstruction, we extend this pipeline with a divide-and-conquer scheme for building-sized point cloud completion. We evaluate on the task of LiDAR point cloud completion of synthetic and real-world scenes and find that our method outperforms previous reconstruction methods.

Explain it Like I'm 14

What this paper is about (in simple terms)

This paper is about fixing “holes” and mistakes in 3D scans made by LiDAR. A LiDAR scan is a 3D picture made from millions of dots (called a point cloud). LiDAR is great at measuring big, flat areas very accurately, but it often misses thin things (like chair legs or railings) and struggles with dark, shiny, or glassy surfaces.

The authors’ idea, called SurfFill, combines the strengths of LiDAR and photos. They use photos to help rebuild the small, detailed parts that LiDAR often misses, and they do it in a focused, careful way so they don’t make the overall scan worse.

What questions the researchers asked

- How can we spot the parts of a LiDAR scan that are likely wrong or missing, especially around thin structures and edges?

- Once we find those problem areas, how can we use photos to fill in the missing pieces without adding lots of mistakes or noise?

How they did it (explained simply)

Think of a LiDAR point cloud as a city made of tiny dots. In crowded neighborhoods (well-scanned areas), dots are packed tightly. Near gaps and edges (where LiDAR struggles), dot density drops.
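The density idea can be made concrete with a small sketch. It is not the paper's exact heuristic: the density proxy (inverse mean distance to the k nearest neighbors), the score definition, and the names `k_nearest` and `ambiguity_scores` are assumptions chosen for illustration. It only shows that points on the border of a scanned patch stand out once their density is compared with that of their neighbors.

```python
import math

def k_nearest(points, i, k):
    """Brute-force k nearest neighbours of points[i]; returns (indices, distances)."""
    ranked = sorted((math.dist(points[i], q), j)
                    for j, q in enumerate(points) if j != i)[:k]
    return [j for _, j in ranked], [d for d, _ in ranked]

def ambiguity_scores(points, k=8):
    """Hypothetical score in [0, 1): how far a point's density falls below
    the densest point in its own neighbourhood (assumes no duplicate points)."""
    neighbours, density = [], []
    for i in range(len(points)):
        idx, dists = k_nearest(points, i, k)
        neighbours.append(idx)
        # Density proxy (assumption): inverse mean distance to the k neighbours.
        density.append(1.0 / (sum(dists) / k))
    scores = []
    for i, idx in enumerate(neighbours):
        reference = max(density[j] for j in idx + [i])
        scores.append(1.0 - density[i] / reference)
    return scores

# Toy scan: a flat 7 x 7 patch with 1 m spacing.
grid = [(float(x), float(y), 0.0) for x in range(7) for y in range(7)]
scores = ambiguity_scores(grid)
centre = scores[3 * 7 + 3]   # point (3, 3): surrounded on all sides
corner = scores[0]           # point (0, 0): sits on the patch border
```

In this toy patch the interior point scores zero while the corner point scores clearly higher, which is the kind of signal a threshold could turn into a set of "ambiguous" points. A real scan would need a spatial index (for example a k-d tree) instead of the brute-force search.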

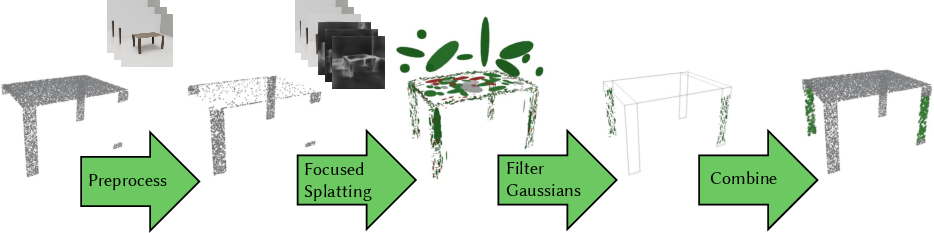

The team built a pipeline with these main steps:

- Find “ambiguous” border areas

- They measure how crowded each point’s neighborhood is. Where the dot density suddenly changes, that’s a clue you’re near a missing piece (like the edge of a thin railing).

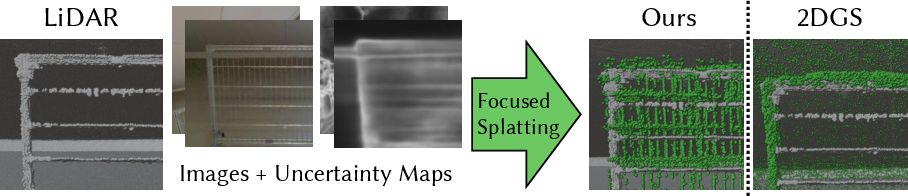

- They also look at the photos and estimate how surface directions (normals) change across pixels. Big changes in these directions often signal edges and uncertainty. They turn this into an “uncertainty map,” a kind of heatmap that highlights where photos say the geometry is tricky.

- Focus the reconstruction on just those tricky areas

- They use a technique called Gaussian surfel splatting. Imagine covering surfaces with many tiny, soft, flat “stickers” (surfels) that look like fuzzy coins. Each sticker knows its position, size, and tilt. By placing and adjusting these stickers, you can rebuild surfaces from photos.

- Instead of rebuilding the whole scene, they “zoom in” the optimization onto the ambiguous areas. They:

- Prefer training images that see lots of uncertain regions.

- Use an “edge-aware” loss so edges and fine details are sharpened.

- Keep the stickers small (with a size penalty) so they capture thin structures.

- Add and remove stickers smartly (densification and pruning), protecting the reliable LiDAR parts and exploring gaps.

- Add a tiny bit of positional noise to encourage exploring nearby space, which helps discover missing structures.

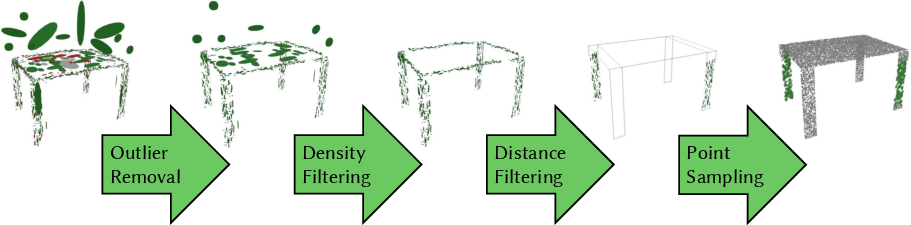

- Filter and convert the stickers back into points

- After training, they throw out any stickers that look suspicious: ones that are too big, too faint, too close to the original LiDAR (duplicate info), or way too far (likely outliers).

- From the remaining stickers, they “sample” new 3D points—both on the stickers themselves and between nearby stickers—to fill holes and create a nicely detailed, consistent point cloud.

- Handle very large scenes by slicing them up

- Big scans (like whole buildings) can be too large for a single computer’s memory. They split the scene into chunks (like cutting a big cake), process each chunk independently, and then merge the results. This lets them handle tens of millions of points efficiently.
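The slicing step in the last bullet above can be sketched as follows. This is a minimal illustration under stated assumptions: chunks are axis-aligned squares in the x/y plane, `chunk_size` is a hypothetical parameter, and `process_chunk` is a placeholder for the per-chunk completion (here it simply returns its input). How the paper chooses chunk boundaries or handles overlap is not described in this summary.

```python
import math
from collections import defaultdict

def split_into_chunks(points, chunk_size):
    """Group points by the square x/y grid cell they fall into."""
    chunks = defaultdict(list)
    for p in points:
        key = (math.floor(p[0] / chunk_size), math.floor(p[1] / chunk_size))
        chunks[key].append(p)
    return dict(chunks)

def process_chunk(chunk_points):
    """Placeholder for per-chunk completion; returns the points unchanged."""
    return list(chunk_points)

def complete_large_scene(points, chunk_size):
    """Process every chunk independently, then merge the results."""
    merged = []
    chunks = split_into_chunks(points, chunk_size)
    for key in sorted(chunks):          # fixed order keeps the output deterministic
        merged.extend(process_chunk(chunks[key]))
    return merged

scene = [(0.5, 0.5, 0.0), (9.5, 0.2, 1.0), (10.1, 0.3, 0.0), (-0.1, 4.0, 2.0)]
chunks = split_into_chunks(scene, chunk_size=10.0)
merged = complete_large_scene(scene, chunk_size=10.0)
```

Because each chunk is handled on its own, the per-chunk calls could be distributed across several GPUs or run one after another on a single device; only the merge needs to see all results.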

What they found and why it matters

Main results:

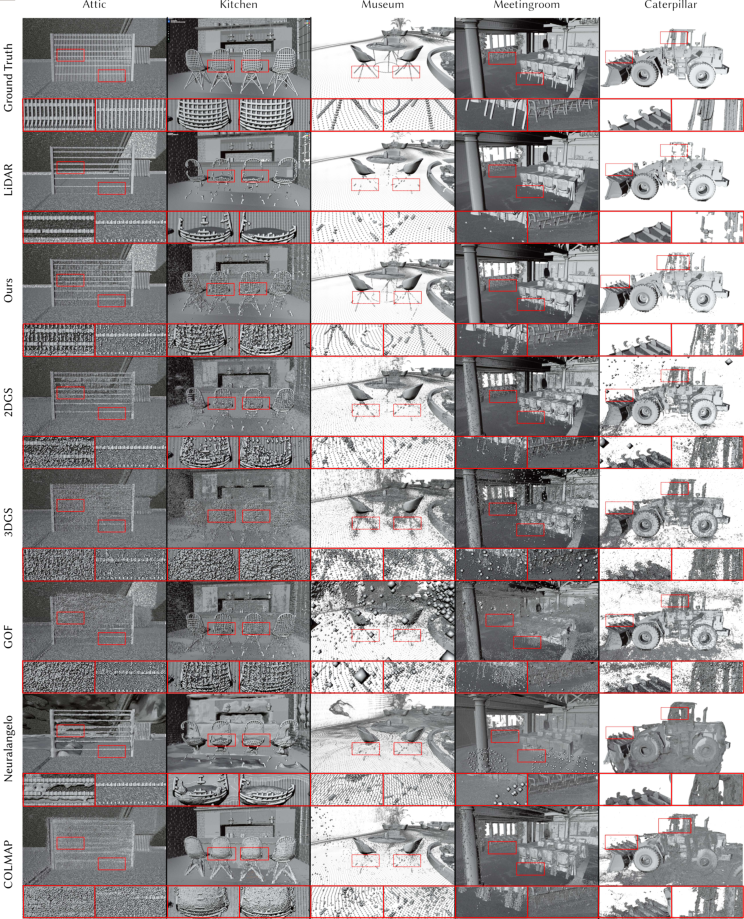

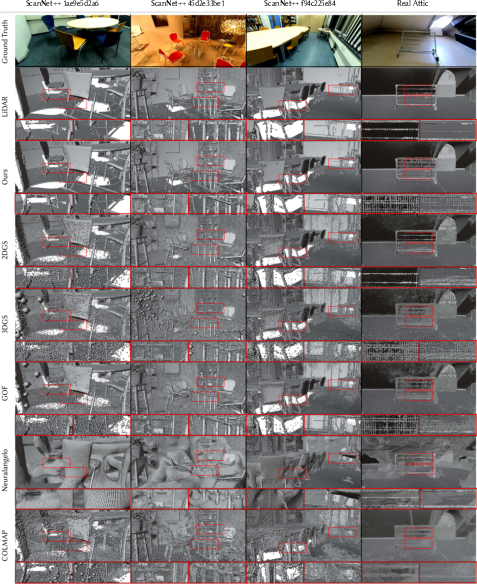

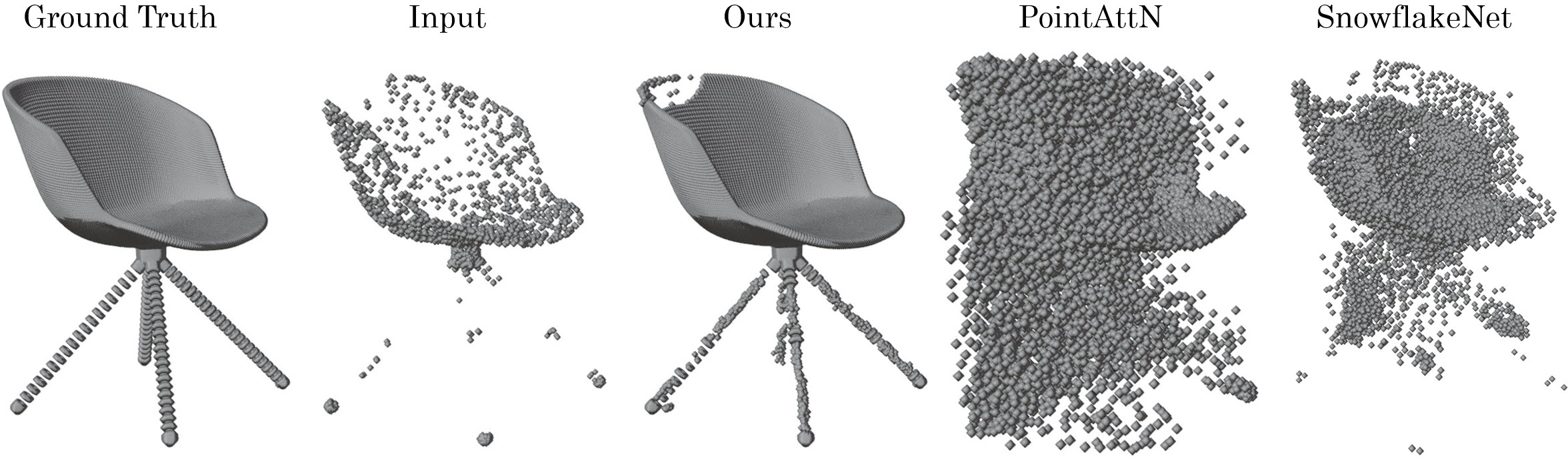

- Their method recovers thin, detailed structures (like fence bars, chair legs, and edges) that LiDAR often misses.

- It adds very little noise, keeping the high accuracy of LiDAR in flat areas.

- It runs fast for this kind of problem: less than 75 minutes for a building-scale scan on a modern GPU setup.

- In tests on both synthetic and real-world scenes, SurfFill outperformed popular methods like COLMAP (multi-view stereo), Neuralangelo (a NeRF-based method), standard 3D Gaussian Splatting (3DGS), 2D Gaussian Splatting (2DGS), and GOF. It achieved better geometric accuracy and better coverage of missing details.

Why this matters:

- You get the best of both worlds: LiDAR’s overall accuracy and stability plus photos’ ability to capture fine edges and small pieces.

- It can reduce the need for costly rescans or manual fixing by 3D artists.

- Cleaner, more complete 3D scans are useful for architecture, construction, robotics, virtual/augmented reality, and digital twins.

What this could lead to

- Better maps and models for robots and self-driving systems that need to “see” thin or tricky objects.

- Faster, cheaper workflows for building accurate 3D models of rooms, buildings, and outdoor sites.

- A general strategy for combining different sensors (like lasers and cameras) to fill in each other’s weaknesses.

Simple note on limits:

- The method still depends on good photos and accurate camera positions. If the photos are too sparse, objects are moving, or the camera poses are wrong, results can degrade. Future versions could include ways to handle moving scenes or fix camera pose errors on the fly.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a single, consolidated list of what remains missing, uncertain, or unexplored in the paper, framed as concrete items to guide future research.

- Ambiguity heuristic parameterization: No systematic method for choosing k, δ (typical spacing), and the ambiguity threshold τ across different LiDAR devices, resolutions, and pre-processing settings; an auto-calibration procedure tied to scanner specs and scene scale is needed.

- Empty vs. missing structures: The density-based ambiguity heuristic cannot disambiguate truly empty space from unobserved thin structures (e.g., real three-legged table vs. a missed leg); mechanisms to prevent hallucinations (semantic priors, physical plausibility checks, or structural pattern detectors) are not provided.

- LiDAR signal attributes unused: Intensity, number-of-returns, and waveform information (when available) are not leveraged to identify mixed pixels or low-confidence returns; integrating these could improve ambiguity detection and filtering.

- Camera uncertainty modeling: The monocular normal estimation for uncertainty maps lacks validation in textureless, specular, low-light, and high dynamic range conditions; thresholds for mask creation (M, Ḿ) and their sensitivity are not characterized.

- Cross-modal alignment: Robustness to camera–LiDAR extrinsic calibration errors, camera intrinsics (fisheye undistortion), and pose noise beyond ±0.01° is not analyzed; practical tolerance bounds and pose refinement strategies remain open.

- Guarding well-scanned regions: Focused densification and altered pruning could still modify accurate, low-ambiguity LiDAR areas; formal constraints or guarantees ensuring non-degradation of trusted regions are missing.

- Training view selection: The probability P_image = n_a / |N_v| ignores occlusions, visibility, and view informativeness; occlusion-aware, coverage- or uncertainty-driven view scheduling may yield better focus and efficiency.

- Gap-bridging sampling risks: Interpolating points between Gaussians (p′ = (1−a)p + a q_i) lacks topological and geometric constraints (normal consistency, curvature continuity, line/curve adherence), risking spurious connections across unrelated structures.

- Reflective/transmissive surfaces: Mirrors and windows are known failure modes; there is no targeted detection or treatment to suppress virtual rooms or photometric artifacts during completion.

- Chunk boundary consistency: The divide-and-conquer scheme may introduce seam artifacts, duplicates, or inconsistencies across chunk borders; global reconciliation, overlap-aware optimization, and boundary regularization are not addressed.

- Generalization of fixed thresholds: Global use of t_min = 0.01 m and t_max = 3 m for Gaussian–LiDAR distance filtering is not validated across scenes, sensors, or scales; an adaptive, data-driven thresholding strategy is needed.

- Large-scale scalability: Memory-bound limits and per-GPU chunking are presented, but streaming/out-of-core optimization, on-device scheduling, and scaling beyond building-level datasets (city-scale) are unexplored.

- Dynamic scenes and lighting: Handling moving objects, view-dependent effects, and variable illumination is acknowledged as a limitation; integrating temporal consistency, motion modeling, or reflectance estimation remains open.

- Evaluation ground truth: Real-world ground truth for “missing fine structures” is absent; the paper relies on synthetic removals and general metrics (Chamfer, F1 at 5 mm), lacking edge-focused/thin-structure metrics, per-region analyses, and benchmark datasets curated for LiDAR completion.

- Failure case analysis: Persistent noise (e.g., near kitchen chair legs and caterpillar tires) is noted but not systematically analyzed; identifying root causes and targeted remedies (filter tuning, loss balancing, geometry priors) is left open.

- Loss weighting design: Hyperparameters (λ, γ, ρ, α, β) for the objective are chosen heuristically; no sensitivity analysis, principled tuning, or automatic weight scheduling is provided.

- Gradient-based densification: Choosing maximum screen-space gradients (vs. mean) is empirically motivated; theoretical understanding of the trade-offs (especially with sparse RGB coverage) and robustness across datasets is missing.

- Initial scale policy: The choice s = sqrt(p)·2^(-1/2) for initializing surfel scales lacks theoretical grounding and cross-scene validation; its impact on densification dynamics and fine detail reconstruction needs study.

- Cross-modal ambiguity consistency: Consistency between 3D ambiguity (density-based) and 2D uncertainty (normal-based) is not evaluated; methods to reconcile conflicts or propagate confidence across modalities remain open.

- Confidence for added points: The pipeline lacks per-point confidence estimates or uncertainty quantification for synthesized points, which is important for downstream tasks and safety-critical uses.

- Semantic guidance: No semantic or learned priors (e.g., detectors for thin objects, structural repetition, or category-aware constraints) are used to reduce false completions and guide point growth.

- Surface reconstruction: The method outputs points but does not ensure watertight or topologically consistent surfaces; integrating meshing that preserves fine details without oversmoothing remains an open engineering and evaluation problem.

- Vendor pre-processing variability: The approach assumes final, vendor-filtered LiDAR clouds; generalization to raw returns or varying proprietary filters is uncertain, and standardized evaluation across vendors is lacking.

- False positive/negative quantification: The rate at which the ambiguity heuristic triggers incorrect completions vs. true positives is not reported; a thorough error taxonomy and precision–recall breakdown for thin structures is needed.

- Hybrid model integration: While 2DGS, 3DGS, and GOF are compared, strategies to hybridize or jointly optimize LiDAR-constrained Gaussian models (e.g., combining 2DGS surfels with GOF opacity fields) are unexplored.
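Two of the formulas mentioned in the list above, the Gaussian–LiDAR distance filter with t_min = 0.01 m and t_max = 3 m and the gap-bridging interpolation p′ = (1−a)p + a q_i, can be illustrated with a short sketch. The function names, the inclusive bounds, the `max_gap` parameter, and the evenly spaced values of a are assumptions made for this example; the summary does not say how the paper selects neighbors q_i or samples a.

```python
import math

T_MIN = 0.01  # metres; closer surfels duplicate existing LiDAR points
T_MAX = 3.0   # metres; farther surfels are treated as likely outliers

def nearest_distance(p, cloud):
    """Distance from p to its closest point in cloud (brute force)."""
    return min(math.dist(p, q) for q in cloud)

def filter_by_lidar_distance(centers, lidar, t_min=T_MIN, t_max=T_MAX):
    """Keep surfel centers whose distance to the LiDAR cloud lies in [t_min, t_max]."""
    return [c for c in centers if t_min <= nearest_distance(c, lidar) <= t_max]

def interpolate(p, q, a):
    """p' = (1 - a) * p + a * q, applied per coordinate."""
    return tuple((1.0 - a) * pc + a * qc for pc, qc in zip(p, q))

def bridge_gaps(centers, max_gap, samples=3):
    """Sample points between every pair of centers closer than max_gap
    (max_gap is a hypothetical parameter, not taken from the paper)."""
    new_points = []
    for i, p in enumerate(centers):
        for q in centers[i + 1:]:
            if math.dist(p, q) <= max_gap:
                for s in range(1, samples + 1):
                    new_points.append(interpolate(p, q, s / (samples + 1)))
    return new_points

lidar = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
centers = [(0.0, 0.0, 0.005),   # 5 mm from LiDAR: too close, dropped
           (0.5, 0.0, 0.5),     # kept
           (0.5, 0.0, 1.0),     # kept
           (0.0, 0.0, 10.0)]    # 10 m away: too far, dropped
kept = filter_by_lidar_distance(centers, lidar)
bridged = bridge_gaps(kept, max_gap=1.0)
```

The sketch also makes the risk raised in the "Gap-bridging sampling risks" item visible: `bridge_gaps` connects any two centers that are close enough, with no check that they belong to the same structure.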

Glossary

- Adaptive Density Control (ADC): A Gaussian Splatting module that adds/removes primitives based on gradient heuristics to control model density during training; "we adjust the densification strategy from the Adaptive Density Control (ADC) module~\cite{kerbl20233d}."

- Alpha-blending: Rendering technique that composites semi-transparent layers by blending their alpha values; "Rendering is done via alpha-blending in an efficient tile-based renderer."

- Ambiguity heuristic: A method to identify transition regions near missing structures by analyzing point density change; "We introduce a technique for this, which we call the ambiguity heuristic."

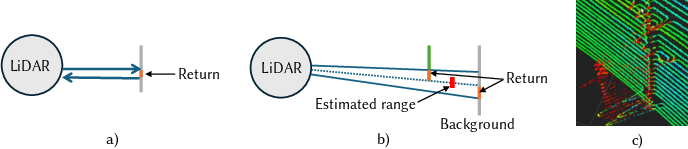

- Beam divergence: The widening of a laser beam over distance, increasing footprint and causing mixed measurements; "we attribute LiDAR beam divergence as a main factor for artifacts, manifesting mostly at thin structures and edges."

- Chamfer Distance: A metric measuring average nearest-point distance between two point sets to quantify geometric similarity; "We assess performance using the Chamfer Distance as well as the F1-score metric~\cite{Knapitsch2017}."

- Chunking: Dividing a large scene into manageable spatial subsets (chunks) for independent training; "we use a chunking approach as described in Sec.~\ref{sec:largescale}."

- Depth distortion regularization: A penalty encouraging consistent depth predictions and reducing distortions during reconstruction; "For geometric accuracy, normal consistency and depth distortion regularization are employed, and meshes are extracted via TSDF fusion."

- Densification: The process of adding new Gaussians/surfels based on training signals to increase detail; "we adjust the densification strategy from the Adaptive Density Control (ADC) module~\cite{kerbl20233d}."

- Divide-and-conquer: Strategy that splits a large problem into smaller parts processed independently; "We extend this with a divide-and-conquer scheme, allowing us to process this building-scale point cloud in less than 75 minutes."

- Epipolar lines: Lines in image pairs along which matching points must lie, used for stereo depth estimation; "matched for depth along epipolar lines to maximize similarity."

- F1-score: The harmonic mean of precision and recall, used to evaluate reconstruction accuracy and coverage; "We assess performance using the Chamfer Distance as well as the F1-score metric~\cite{Knapitsch2017}."

- Frustum: The conical/pyramidal volume of a sensor or camera’s field of view; "which can also be thought of as a frustum, hits a small geometric structure or an edge,"

- Gaussian Opacity Fields (GOF): A Gaussian-based representation that leverages ray–splat intersections and opacity for geometry extraction; "Gaussian Opacity Fields (GOF)~\cite{yu2024gaussian} utilizing 3D Gaussians"

- Gaussian surfel: A flat, Gaussian-weighted surface element with position, tangent vectors, and scale used for rendering and reconstruction; "Huang et al.~\cite{huang20242d} introduce the use of Gaussian surfels for surface reconstruction, on which we build upon."

- Gaussian surfel splatting: Rendering and optimization technique that projects Gaussian surfels into views to reconstruct surfaces; "Specifically, we introduce constraints for Gaussian surfel splatting to precisely reconstruct into missed areas."

- K-nearest neighbor (KNN) search: A method to find the closest k points to a query point, used for density/ambiguity estimation; "estimated using a k-nearest neighbor search:"

- LiDAR SLAM: Simultaneous Localization and Mapping using LiDAR scans to build consistent, large-scale models; "Due to the LiDAR's panoramic 360-degree field of view, LiDAR SLAM methods generate models with high global consistency and precision, even for outdoor scenes \cite{hong2023comparison}."

- Mixed pixels: LiDAR returns that average multiple surfaces within a beam footprint, causing erroneous ranges; "potentially yielding an average of the observations, called mixed pixels~\cite{tang2007comparative}."

- Monocular surface normals: Surface orientation estimates derived from single images; "we estimate monocular surface normals, infer uncertainty through their 2D spatial differences and restrict reconstruction to these regions."

- Multi-view Stereo (MVS): Technique that reconstructs 3D geometry by matching features across multiple calibrated images; "Such Multi-view Stereo (MVS) reconstructions can be very detailed, but they often exhibit holes in feature-sparse areas."

- Neural Radiance Fields (NeRFs): Neural volumetric models that render views by integrating radiance and density along rays; "Neural Radiance Fields (NeRFs)~\cite{mildenhall2021nerf} reconstruct the scene as a volume, abstracted in a Multilayer Perceptron (MLP)."

- Normal consistency: A constraint encouraging neighboring surfels/points to have coherent surface normals; "For geometric accuracy, normal consistency and depth distortion regularization are employed, and meshes are extracted via TSDF fusion."

- Novel View Synthesis (NVS): Methods that generate new images from different viewpoints using reconstructed scene representations; "MVS has been the input for Novel View Synthesis (NVS) methods in image-based rendering techniques~\cite{shum2000review, eisemann2008floating, chaurasia2011silhouette,hedman2016scalable, hedman2018deep,riegler2021stable, kopanas2021perviewopt}."

- Photogrammetry: Inferring 3D geometry from multiple photographs via feature matching and geometric reconstruction; "applying 3D photogrammetry can infer these details as they often represent feature-rich regions."

- Plane-sweeping: An MVS strategy that tests candidate planes to find depths maximizing image similarity; "processed with plane-sweeping~\cite{collins1996space}"

- Poisson surface reconstruction: Technique that builds watertight surfaces from point samples and normals by solving a Poisson equation; "reconstruct the scene via Poisson surface reconstruction of rendered depth maps~\cite{guedon2024sugar,dai2024high}."

- Scale regularization: A loss that constrains surfel sizes to stay small, promoting fine-detail reconstruction; "we introduce a scale regularization for all Gaussians "

- Spherical harmonics: Basis functions used to model view-dependent appearance on surfels; "view-dependent appearance via three spherical harmonics bands."

- Structure-from-Motion (SfM): Estimating camera poses and sparse structure from image sequences; "Following a camera calibration via Structure-from-Motion (SfM)~\cite{snavely2006photo,schonberger2016structure},"

- Tile-based renderer: A GPU-friendly renderer that processes images in tiles for efficiency; "Rendering is done via alpha-blending in an efficient tile-based renderer."

- Truncated Signed Distance Function (TSDF) fusion: Integrating depth observations into a volumetric grid to extract meshes; "meshes are extracted via TSDF fusion."

- Volume rendering: Rendering images by integrating color and density along rays through a volumetric model; "simplify the reconstruction pipeline by optimizing an implicit surface representation via volume rendering."
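The two evaluation metrics defined in the glossary above, Chamfer Distance and F1-score, can be written down in a few lines. This is a sketch under stated assumptions: conventions differ between papers (sum vs. mean of the two directions, squared vs. unsquared distances), and the version below uses the mean of the two unsquared directional averages. The 5 mm threshold matches the "F1 at 5 mm" mentioned earlier; the function names are illustrative.

```python
import math

def nearest_distance(p, cloud):
    """Distance from p to its closest point in cloud (brute force)."""
    return min(math.dist(p, q) for q in cloud)

def chamfer_distance(a, b):
    """Mean of the two directional average nearest-point distances."""
    a_to_b = sum(nearest_distance(p, b) for p in a) / len(a)
    b_to_a = sum(nearest_distance(q, a) for q in b) / len(b)
    return 0.5 * (a_to_b + b_to_a)

def f1_score(pred, gt, tau):
    """Harmonic mean of precision and recall at distance threshold tau."""
    precision = sum(nearest_distance(p, gt) <= tau for p in pred) / len(pred)
    recall = sum(nearest_distance(g, pred) <= tau for g in gt) / len(gt)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Ground truth has three points; the prediction misses the third one.
gt = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
pred = [(0.0, 0.0, 0.002), (1.0, 0.0, 0.002)]
cd = chamfer_distance(pred, gt)
f1 = f1_score(pred, gt, tau=0.005)   # threshold of 5 mm
```

In this example every predicted point is within 5 mm of the ground truth (precision 1), but only two of three ground-truth points are covered (recall 2/3), so the F1-score is 0.8. The missed point also dominates the Chamfer Distance, which shows why incomplete scans are penalized by both metrics.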

Practical Applications

Immediate Applications

Below are practical use cases that can be deployed now by leveraging SurfFill’s ambiguity-driven completion and focused Gaussian surfel splatting pipeline. Each item notes sectors, workflows, potential tools/products, and feasibility assumptions.

- LiDAR scan post-processing for as-built documentation and Scan-to-BIM

- Sectors: Architecture/Engineering/Construction (AEC), Facilities Management, Real Estate/PropTech

- Workflow: After LiDAR registration and pose estimation (via SLAM/SfM), run SurfFill to recover thin elements (e.g., railings, cable trays, chair/table legs, pipe supports) before mesh/line extraction and BIM modeling. Export completed point clouds (e.g., E57/PLY) for Revit/Civil 3D/IFC workflows.

- Tools/Products: Plugin/module for Autodesk ReCap, Bentley ContextCapture, Leica Cyclone, FARO Scene; a “SurfFill Studio” desktop app; a cloud API to process room/building-scale scans in tens of minutes per area (single GPU).

- Assumptions/Dependencies: Sufficient RGB coverage and accurate camera poses; mostly static scenes; access to a modern GPU for reasonable turnaround; integration with existing registration pipelines.

- Digital twin and asset inventory refinement for facilities and campuses

- Sectors: Smart Buildings/Cities, Operations & Maintenance, Utilities

- Workflow: Use SurfFill to densify thin structures missed by TLS/MLS (handrails, signage, small conduits) in existing digital twins, improving downstream analytics and maintenance planning.

- Tools/Products: Cloud batch processing linked to asset registries; dashboards using SurfFill’s ambiguity heatmaps for QA.

- Assumptions/Dependencies: Existing co-registered imagery; stable environments; compute resources for large-scale chunked processing.

- HD map enrichment for autonomous systems (offline)

- Sectors: Robotics, Automotive/HD Mapping, Logistics

- Workflow: Offline processing of fleet-collected LiDAR+camera datasets to fill thin poles, curbs, sign edges and street furniture that LiDAR under-samples, improving map completeness while preserving LiDAR precision in flat areas.

- Tools/Products: Map backend integration (e.g., ROS-based pipelines); SurfFill as a post-process stage before vectorization.

- Assumptions/Dependencies: High-quality sensor extrinsics and camera coverage; non-real-time batch operation; dynamic objects filtered beforehand.

- Cultural heritage digitization and conservation

- Sectors: Museums, Heritage/Archaeology, Education

- Workflow: Combine terrestrial laser scans with photographs to complete ornate thin details (tracery, carvings, grillwork), reducing manual retouching.

- Tools/Products: Integrations with Meshlab/CloudCompare; downloadable toolkit for site teams.

- Assumptions/Dependencies: Sufficient multi-view photo capture; limited scene dynamics; GPU availability during field/lab post-processing.

- Infrastructure inspection and documentation

- Sectors: Transportation (bridges, stations), Energy (substations, plants), Oil & Gas, Manufacturing

- Workflow: Apply SurfFill to plant scans to restore small but safety-critical features (fasteners, thin brackets, ladder rungs, cable ladders), enabling more reliable measurements and compliance checks.

- Tools/Products: SurfFill QA module that flags high-ambiguity regions for human review; integration into digital inspection platforms.

- Assumptions/Dependencies: Adequate photo coverage (often available from field teams); static or minimally dynamic environments; controlled lighting helpful but not strictly required.

- Forensics and public safety scene reconstruction

- Sectors: Public Safety, Insurance, Legal

- Workflow: Post-process LiDAR+photo captures of incidents to recover thin, legally significant details (e.g., wires, tool edges), enabling more faithful reconstructions.

- Tools/Products: A secure on-prem SurfFill service; confidence/ambiguity overlays for courtroom-ready deliverables.

- Assumptions/Dependencies: Accurate camera poses; chain-of-custody for both point clouds and imagery; limited motion during acquisition.

- Dataset cleaning and benchmark preparation

- Sectors: Academia, Software/ML

- Workflow: Use SurfFill to reduce systematic LiDAR incompleteness in training/benchmark datasets (e.g., ScanNet-like collections), improving ground-truth quality for geometric learning tasks.

- Tools/Products: Open-source SurfFill code; dataset curation scripts; reproducible ambiguity maps for QC.

- Assumptions/Dependencies: Community access to paired images; consistent pose quality across datasets.

- Consumer/SMB room scanning enhancement (via cloud)

- Sectors: AR/VR, Interior Design, Real Estate, Insurance

- Workflow: Augment iPhone/iPad LiDAR room scans with photos to fill rails, chair legs, trim, and thin fixtures prior to mesh/texturing for virtual walkthroughs and measurement apps.

- Tools/Products: Cloud API/SDK integrated into scanning apps; SurfFill-backed “fix my scan” button.

- Assumptions/Dependencies: Users capture sufficient photos; device pose estimation or cloud SfM; processing done off-device due to GPU needs.

- Quality-control dashboards using ambiguity and uncertainty maps

- Sectors: AEC, Mapping/GIS, Robotics

- Workflow: Generate ambiguity heatmaps on point clouds and uncertainty masks on images to triage problematic regions and trigger either SurfFill completion or rescan requests.

- Tools/Products: QC web dashboards; pipeline hooks to auto-select training views and chunks.

- Assumptions/Dependencies: Access to imagery and registration; operator workflow alignment with QC feedback.

Long-Term Applications

Below are forward-looking opportunities that require further research, engineering, or ecosystem changes before broad deployment.

- Near-real-time on-device completion for robots and mobile mapping

- Sectors: Robotics, Automotive, Drones

- Potential: Integrate focused Gaussian surfel splatting into mapping stacks to complete thin obstacles on the fly, improving navigation and safety.

- Tools/Products: CUDA-optimized edge devices; ROS nodes with incremental chunking; streaming training/inference.

- Assumptions/Dependencies: Significant GPU acceleration and memory optimization; robust handling of dynamics and lighting; continuous pose refinement.

- Active acquisition guidance using ambiguity maps

- Sectors: AEC, Surveying, Inspection, Consumer Scanning

- Potential: Real-time guidance (AR overlays) that directs operators to capture supplemental photos where ambiguity is high, reducing revisit costs and improving coverage.

- Tools/Products: Field apps with live ambiguity visualization; integration with scanners’ on-device cameras.

- Assumptions/Dependencies: Fast on-site processing; ergonomic UX; reliable pose estimates while users move.

- Integration with SLAM to improve loop closure and map consistency

- Sectors: Robotics, SLAM/Localization

- Potential: Use completed geometry at edges to increase feature richness for visual or LiDAR-visual SLAM, improving loop closures and localization robustness.

- Tools/Products: SLAM back-ends that accept SurfFill-informed priors; joint optimization of poses and completion.

- Assumptions/Dependencies: Stable co-optimization; safeguards against introducing biased geometry.

- Completion under challenging photometric conditions and dynamic scenes

- Sectors: Automotive/Robotics, Infrastructure, Public Safety

- Potential: Robust extensions that handle variable lighting, reflective/translucent surfaces (glass/mirrors), and transient motion without introducing artifacts.

- Tools/Products: Learned priors for reflectance/BRDF; temporal filtering; motion segmentation.

- Assumptions/Dependencies: New regularizers and datasets; better normal/uncertainty estimation in adverse conditions.

- Standardized “scan completeness” metrics and procurement guidelines

- Sectors: Policy/Standards, AEC, Public Agencies

- Potential: Use ambiguity scores and coverage statistics to define acceptance criteria for digital twin deliverables and public tenders (e.g., minimal ambiguity coverage near assets).

- Tools/Products: Open metrics; compliance reports; certification programs.

- Assumptions/Dependencies: Multi-stakeholder consensus; validation on diverse environments; tooling support in common platforms.

- Lightweight/embedded variants for consumer devices

- Sectors: AR/VR, Mobile, Consumer Apps

- Potential: Approximations of focused surfel splatting that run on mobile NPUs/GPUs for on-device previews and partial in-app fixes.

- Tools/Products: Model compression/distillation; mixed-precision kernels; tiled inference.

- Assumptions/Dependencies: Hardware advances or algorithmic simplification; tolerance for lower accuracy.

- Automated CAD/BIM feature extraction after completion

- Sectors: AEC, Manufacturing, Utilities

- Potential: Improved parametric fitting (pipes/cables/rails) after SurfFill completion to accelerate Scan-to-BIM and digital twin updates.

- Tools/Products: Post-completion fitting libraries; semi-automatic annotation tools.

- Assumptions/Dependencies: Robustness across materials; consistent topology after filling gaps.

- Safety-critical mapping enhancements (e.g., thin wire and overhead hazard detection)

- Sectors: Construction Safety, Drones, Utilities

- Potential: Detect/complete thin hazards often missed in raw LiDAR, improving flight and crane operations near lines.

- Tools/Products: Hazard labeling pipelines; preflight clearance checks using completed models.

- Assumptions/Dependencies: Reliable image capture; advanced filtering to avoid false positives; validation protocols.

- Privacy-preserving completion and selective anonymization

- Sectors: Public Sector, Corporate Facilities

- Potential: Combine completion with automated removal or blurring of sensitive elements (e.g., screens, faces) in photogrammetry-supported scenes.

- Tools/Products: Masking modules using the same uncertainty maps; policy-compliant pipelines.

- Assumptions/Dependencies: Reliable detection models; governance around combining imagery and LiDAR.

Notes on Cross-Cutting Dependencies and Risks

- Core dependencies:

- Co-registered RGB images and accurate camera poses (SfM/SLAM).

- Sufficient view coverage of ambiguous regions; sparse views limit performance.

- Mostly static scenes; dynamic content degrades results.

- GPU resources; building-scale processing may require chunking and multi-GPU setups.

- Operational considerations:

- Lighting/reflectance challenges (dark, shiny, glass surfaces) may require tailored settings or additional captures.

- Parameterization (e.g., ambiguity thresholds, pruning thresholds) may need per-scan tuning for different sensors/point densities.

- Data governance: storing/processing imagery alongside LiDAR must meet privacy and compliance requirements.

- Integration opportunities:

- Direct integration with existing point cloud suites (ReCap, Cyclone, CloudCompare).

- ROS packages for robotics pipelines (offline first).

- Cloud services for consumer and enterprise scanning apps, enabling compute offloading.

By deploying SurfFill as an add-on step in existing LiDAR+photo workflows, organizations can immediately recover thin, high-detail geometry with minimal noise and without sacrificing LiDAR accuracy in flat regions. Longer term, embedding and accelerating the focused surfel approach opens the door to real-time guidance, on-device completion, and standardized completeness metrics across industries.
