EGG-Fusion: Efficient 3D Reconstruction with Geometry-aware Gaussian Surfel on the Fly (2512.01296v1)

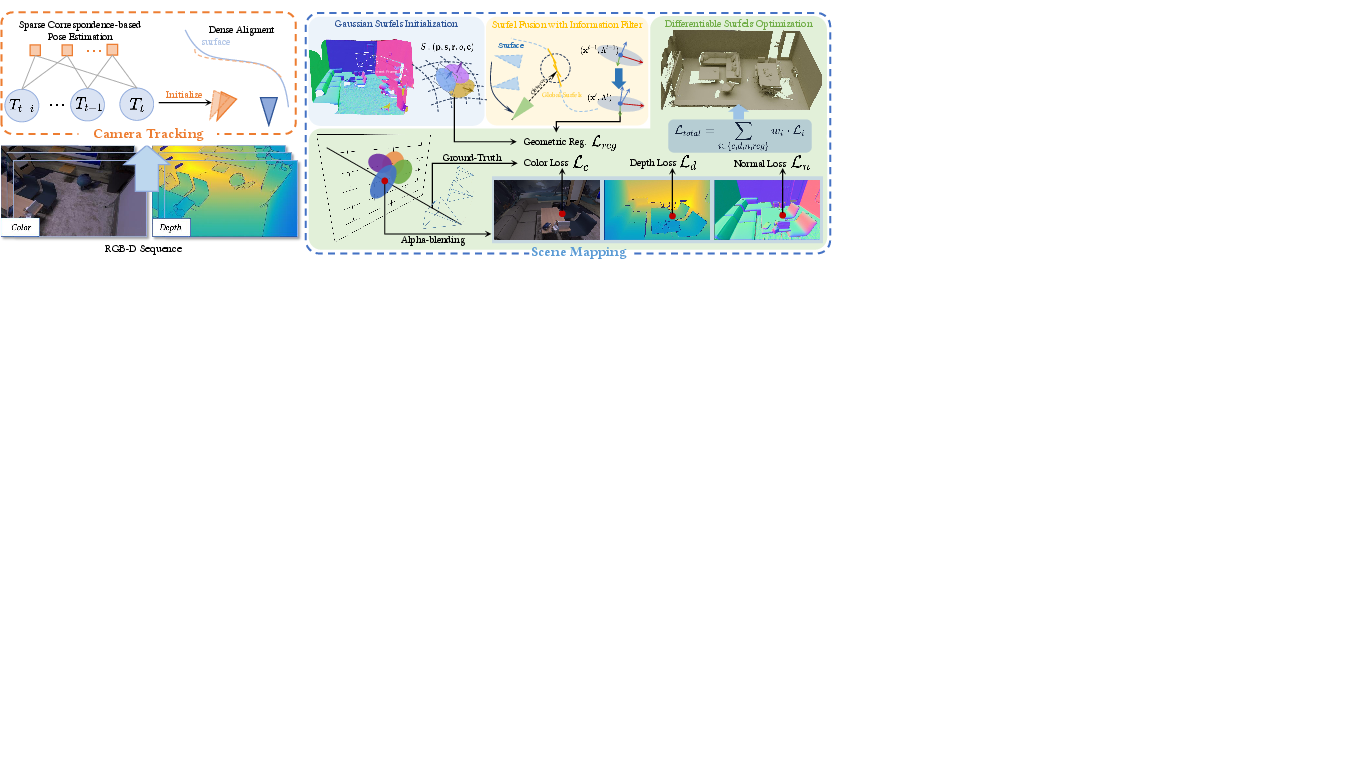

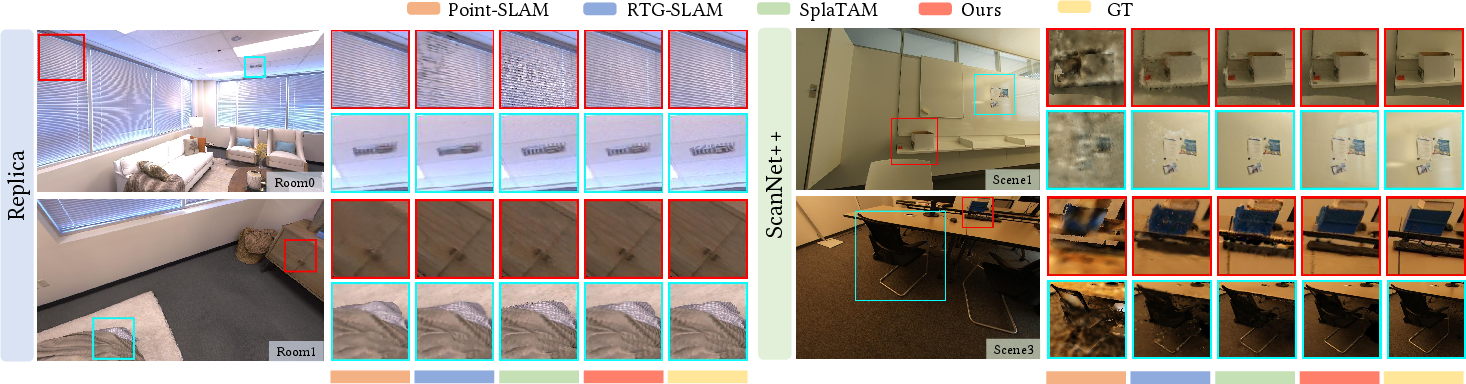

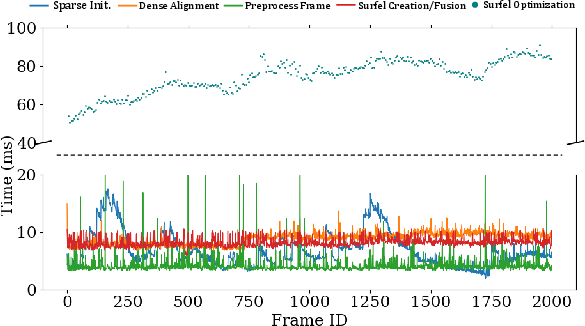

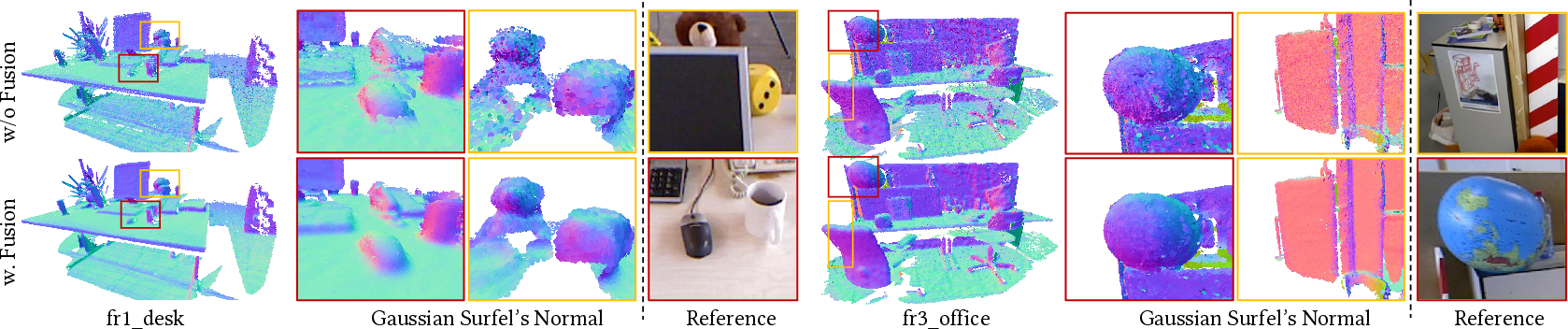

Abstract: Real-time 3D reconstruction is a fundamental task in computer graphics. Recently, differentiable-rendering-based SLAM systems have demonstrated significant potential, enabling photorealistic scene rendering through learnable scene representations such as Neural Radiance Fields (NeRF) and 3D Gaussian Splatting (3DGS). Current differentiable rendering methods face dual challenges in real-time computation and sensor noise sensitivity, leading to degraded geometric fidelity in scene reconstruction and limited practicality. To address these challenges, we propose a novel real-time system, EGG-Fusion, featuring robust sparse-to-dense camera tracking and a geometry-aware Gaussian surfel mapping module. The mapping module introduces an information filter-based fusion method that explicitly accounts for sensor noise to achieve high-precision surface reconstruction. The proposed differentiable Gaussian surfel mapping effectively models multi-view consistent surfaces while enabling efficient parameter optimization. Extensive experimental results demonstrate that the proposed system achieves a surface reconstruction error of 0.6 cm on standardized benchmark datasets including Replica and ScanNet++, representing over 20% improvement in accuracy compared to state-of-the-art (SOTA) GS-based methods. Notably, the system maintains real-time processing capabilities at 24 FPS, establishing it as one of the most accurate differentiable-rendering-based real-time reconstruction systems. Project Page: https://zju3dv.github.io/eggfusion/

Explain it Like I'm 14

Explaining “EGG-Fusion: Efficient 3D Reconstruction with Geometry-aware Gaussian Surfel on the Fly”

Overview

This paper introduces EGG-Fusion, a fast system that builds accurate 3D models of real-world scenes from color-and-depth (RGB-D) video in real time. It improves two big parts of the job:

- figuring out where the camera is as it moves, and

- creating a detailed and trustworthy 3D map.

To do this, the system uses “Gaussian surfels,” which you can imagine as tiny, colored, flat coins that stick to surfaces in 3D. It also uses a smart way to combine noisy sensor measurements so the 3D model stays sharp and correct.

Objectives and Questions

This research tackles everyday problems that 3D reconstruction systems face:

- How can we keep 3D modeling fast while still making it very accurate?

- How do we stop sensor noise (like shaky depth readings) from messing up the 3D shape?

- Can we represent surfaces in a way that looks correct from many different camera angles?

- How can we track the camera reliably even when it moves quickly or sees tricky scenes?

Methods and Approach

The system works in two connected parts: camera tracking and scene mapping. Here’s how each piece functions, explained with simple ideas and analogies.

1) SLAM, in simple terms

SLAM stands for Simultaneous Localization and Mapping. Think of it like walking through a room with a camera:

- “Localization” is figuring out where the camera is at each moment.

- “Mapping” is building a 3D model of the room at the same time.

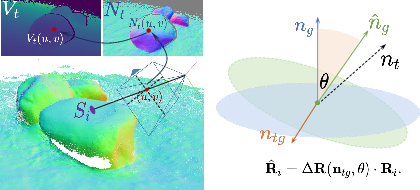

2) Gaussian surfels: tiny 3D “stickers” for surfaces

Instead of using big blocks or clouds of points, EGG-Fusion covers surfaces with tiny, flat ellipses (the “surfels”), each with:

- a position in 3D,

- a size and rotation (so it aligns with the surface),

- a color and opacity (how see-through it is),

- and a normal (the direction the surface is facing).

Picture covering a wall with small stickers that all tilt and color-match the wall. The system adjusts these stickers so they look good from different camera angles.

3) Differentiable rendering: learn by comparing pictures

The system “renders” (imagines) what the scene should look like from the camera’s point of view using those surfels. Then it compares the rendered image to the actual camera image and gently adjusts the surfels to reduce the difference. This works for color, depth, and normals (surface directions), so the model improves step by step.
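
The "render, then compare" idea can be sketched for a single pixel. This is a minimal illustration and not the authors' renderer: it assumes the surfels hit by the pixel's ray are already sorted nearest-first and blends their values with standard front-to-back alpha compositing. The function name `composite` and the sample numbers are hypothetical, and the gradient-based update that would shrink the residual is left out.

```python
from typing import Sequence


def composite(values: Sequence[float], alphas: Sequence[float]) -> float:
    """Front-to-back alpha compositing along one pixel ray.

    values: per-surfel quantity (for example a gray intensity), nearest first.
    alphas: per-surfel opacity in [0, 1] at this pixel, same order.
    """
    transmittance = 1.0  # fraction of light not yet blocked by nearer surfels
    out = 0.0
    for value, alpha in zip(values, alphas):
        out += transmittance * alpha * value
        transmittance *= 1.0 - alpha
    return out


# Hypothetical pixel: a half-transparent bright surfel in front of an opaque dark one.
rendered_intensity = composite([1.0, 0.2], [0.5, 1.0])
observed_intensity = 0.7
residual = rendered_intensity - observed_intensity  # an optimizer would reduce this
```

A nearer surfel with high opacity hides everything behind it, which is why the order of the inputs matters.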

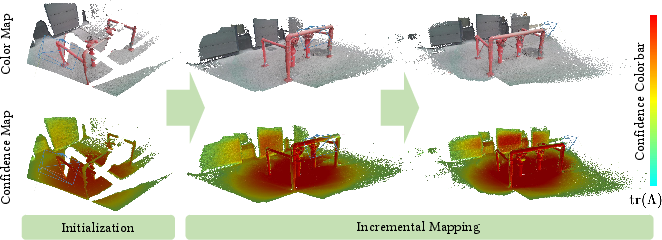

4) Geometry-aware initialization: place surfels where they matter

Instead of adding surfels everywhere, EGG-Fusion adds them smartly:

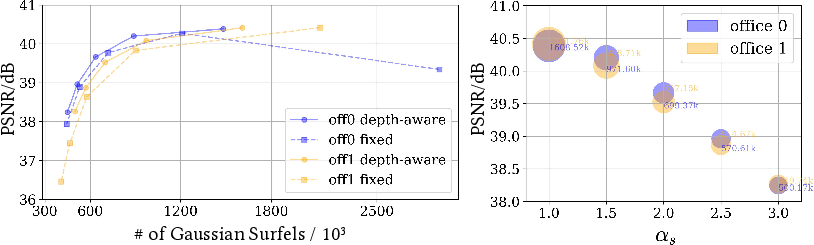

- It places new surfels where the current model looks uncertain or where fresh, closer surfaces appear.

- It adapts each surfel’s size based on how far it is from the camera. Farther surfels get bigger so their projection on the image is stable and efficient.

This keeps the model compact and avoids unnecessary clutter.
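
One simple way to picture the distance-based sizing is with a pinhole camera: to cover a fixed number of pixels, a surfel's real-world radius has to grow in proportion to its depth. The sketch below only illustrates that relationship. The function `surfel_radius`, the `pixel_footprint` parameter, and the focal length are hypothetical, and the exact sizing rule used by EGG-Fusion is not given in this summary.

```python
def surfel_radius(depth_m: float, focal_px: float, pixel_footprint: float = 1.0) -> float:
    """World-space radius (meters) whose projection spans about pixel_footprint pixels.

    Assumes a pinhole camera with focal length focal_px (in pixels) and a
    surfel roughly facing the camera at distance depth_m.
    """
    if depth_m <= 0.0 or focal_px <= 0.0:
        raise ValueError("depth and focal length must be positive")
    return pixel_footprint * depth_m / focal_px


near = surfel_radius(1.0, focal_px=600.0)
far = surfel_radius(3.0, focal_px=600.0)  # three times farther, three times larger
```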

5) Information filter-based fusion: combine noisy measurements the smart way

Depth sensors (like consumer-grade RGB-D cameras) can be noisy. To handle this, EGG-Fusion uses an “information filter” (a close cousin of a Kalman filter). In simple terms:

- Every surfel keeps track of its best guess for position and direction, plus how confident it is (its “information”).

- When a new frame arrives, the surfel updates its guess by blending the old estimate with the new measurement.

- Measurements that are likely noisier (e.g., farther away) get less weight.

- The system also updates surfel rotation using the change in its normal so the orientation stays well-defined.

Think of it like averaging test scores, but more carefully: if a test is known to be unreliable, it affects your average less.

This makes the 3D surface more stable and accurate over time, and it lets the system label which parts of the model are high-confidence.
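
The blending step can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the paper's implementation: it fuses only the surfel position, uses a diagonal information matrix (one confidence value per axis), and assumes the measurement's standard deviation grows linearly with depth (`sigma0 * depth`). The names `SurfelEstimate`, `measurement_info`, `fuse`, and the value of `sigma0` are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SurfelEstimate:
    mean: List[float]  # fused 3D position
    info: List[float]  # diagonal of the information (inverse covariance) matrix


def measurement_info(depth_m: float, sigma0: float = 0.01) -> float:
    """Assumed noise model: std = sigma0 * depth, so information = 1 / std^2."""
    sigma = sigma0 * depth_m
    return 1.0 / (sigma * sigma)


def fuse(est: SurfelEstimate, z: List[float], depth_m: float) -> SurfelEstimate:
    """Information-form update: information adds up, means are information-weighted."""
    lam_z = measurement_info(depth_m)
    new_info = [lam + lam_z for lam in est.info]
    new_mean = [
        (lam * m + lam_z * zi) / ni
        for lam, m, zi, ni in zip(est.info, est.mean, z, new_info)
    ]
    return SurfelEstimate(new_mean, new_info)


# A surfel first seen from 1 m away, then re-observed from 3 m away.
first = SurfelEstimate([0.0, 0.0, 1.0], [measurement_info(1.0)] * 3)
fused = fuse(first, [0.0, 0.0, 1.06], depth_m=3.0)
```

Under this noise model the 3 m observation carries one ninth of the information of the 1 m observation, so the fused position moves only a small part of the way toward it, and the stored information grows with every update.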

6) Sparse-to-dense camera tracking: start rough, refine precise

To track the camera:

- First, the system uses a few reliable feature matches (sparse points) to get a good initial pose. This is robust, even during fast motion.

- Then it refines the pose using dense alignment: it aligns the entire depth and color image to the current 3D model, similar to fitting puzzle pieces more tightly. This step uses both geometry (depth and surface direction) and appearance (color).

This two-step strategy keeps tracking stable and precise.

7) Fast optimization with gentle regularization

Because surfels are already kept near the right surface by the fusion step, the final “learning” (optimization) can be quick:

- The system optimizes on a small batch of recent frames (a local window).

- It matches the rendered color, depth, and normals to what the camera sees.

- It adds a geometric regularization that nudges each surfel to stay close to its fused position and direction, preventing drift.

This combination makes the system converge fast and stay accurate.
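
The objective described above can be sketched as a weighted sum of four terms. The weight names `w_d`, `w_n`, and `w_reg` appear in the paper's notation, but everything else here is an assumption made for illustration: the use of a mean absolute difference for each term, the default weight values, the position-only regularizer, and the helper names `mean_abs_diff` and `mapping_loss`.

```python
from typing import Dict, Sequence


def mean_abs_diff(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute difference between two equal-length sequences."""
    if len(a) != len(b) or not a:
        raise ValueError("inputs must be non-empty and the same length")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def mapping_loss(
    rendered: Dict[str, Sequence[float]],
    observed: Dict[str, Sequence[float]],
    surfel_pos: Sequence[float],
    fused_pos: Sequence[float],
    w_d: float = 1.0,    # hypothetical default
    w_n: float = 0.1,    # hypothetical default
    w_reg: float = 0.1,  # hypothetical default
) -> float:
    color = mean_abs_diff(rendered["color"], observed["color"])
    depth = mean_abs_diff(rendered["depth"], observed["depth"])
    normal = mean_abs_diff(rendered["normal"], observed["normal"])
    # Geometric regularization: penalize drifting away from the fused position.
    reg = mean_abs_diff(surfel_pos, fused_pos)
    return color + w_d * depth + w_n * normal + w_reg * reg


frame = {"color": [0.2, 0.4], "depth": [1.0, 1.5], "normal": [0.0, 0.0, 1.0]}
at_fused = mapping_loss(frame, frame, [0.0, 0.0, 1.0], [0.0, 0.0, 1.0])
drifted = mapping_loss(frame, frame, [0.0, 0.0, 1.3], [0.0, 0.0, 1.0])
```

Even when the rendered images match the camera perfectly, a surfel that has drifted from its fused position still pays a penalty, which is the "nudge" the regularizer provides.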

Main Findings and Why They Matter

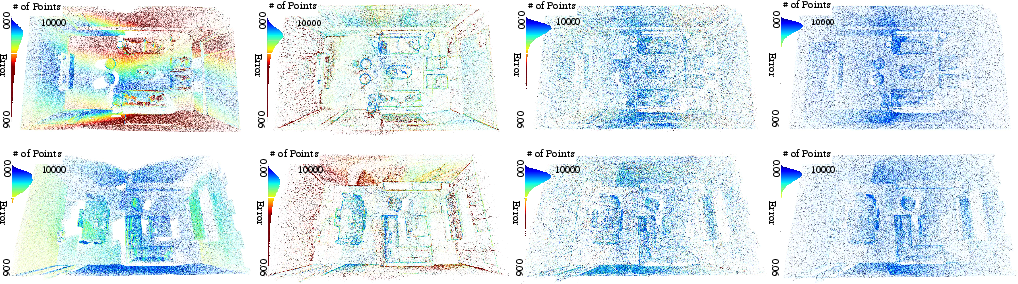

- Accuracy: On standard datasets (Replica and ScanNet++), the system achieves about 0.6 cm surface error, which is extremely precise for real-time systems. It’s over 20% more accurate than leading 3D Gaussian splatting (GS)-based methods.

- Speed: It runs at 24 frames per second (FPS), which is real-time performance suitable for interactive applications.

- Robust tracking: It outperforms other methods on tracking benchmarks (like TUM-RGB-D and Replica), thanks to the sparse-to-dense strategy and the geometry-aware surfels.

- Better rendering: It renders realistic images from new viewpoints and maintains high visual quality even in challenging scenes.

- Confidence-aware surfaces: By keeping an “information matrix” for each surfel, the system can extract high-confidence parts of the scene, which is valuable when you need trustworthy geometry.

Implications and Potential Impact

This work pushes real-time 3D reconstruction closer to what’s needed in real-world use:

- Augmented and virtual reality can get more reliable, detailed 3D maps for placing virtual objects.

- Robots and autonomous systems can navigate with better understanding of surfaces and geometry.

- 3D scanning and digital twins can be done faster and more accurately with consumer sensors.

Because the authors also release the code, other researchers and developers can build on it, leading to even better performance and new applications. In short, EGG-Fusion shows that you can have both speed and accuracy by representing surfaces smartly and handling sensor noise the right way.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

The following list highlights what remains missing, uncertain, or unexplored in the paper and suggests concrete directions future researchers could pursue:

- Scalability to large-scale and outdoor environments is not evaluated; quantify drift and reconstruction quality on long trajectories with loop closure and pose-graph optimization in online mode (not just offline variants).

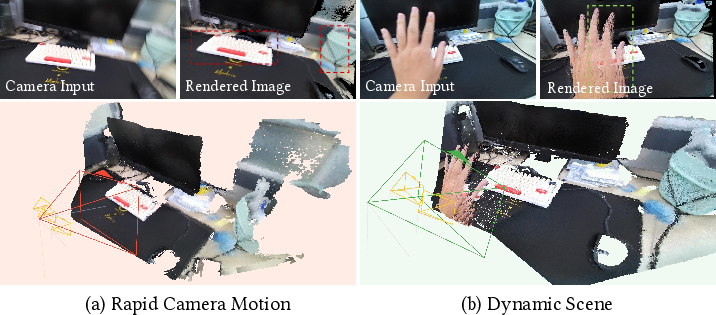

- Dynamic scene handling is unaddressed; extend the information-filter formulation with a process model (e.g., motion priors) to separate static and moving objects, and evaluate on sequences with articulated or non-rigid motion.

- The measurement noise model assumes diagonal covariance and depth-proportional variance; investigate full covariance modeling (position–normal coupling), sensor-specific calibration, and per-pixel uncertainty learned or estimated from raw sensor signals.

- Data association for surfel reobservation/fusion is unspecified; formalize and benchmark gating (e.g., Mahalanobis distance), nearest-neighbor search, spatial hashing, and occlusion-aware association strategies to prevent incorrect updates.

- Normal-to-rotation update via cross-product and angle can be degenerate (near-parallel/anti-parallel normals) and sign-ambiguous; analyze failure modes and propose robust constraints (e.g., tangent-plane projections, quaternion averaging, or Lie algebra updates) with quantitative evaluations.

- The filter lacks a state transition/process noise (pure measurement updates); study filter stability, bias, and lag under noisy or sparse observations, and compare information filter vs EKF/UKF formulations for surfel states.

- Outlier rejection in surfel fusion is not described; introduce statistical gating, robust loss, and forgetting factors to avoid “locking in” erroneous depth/normal updates under specularities or multipath artifacts, and measure recovery from wrong updates.

- Handling of missing or severely corrupt depth (Azure Kinect’s failure cases) is not methodically addressed; investigate depth inpainting, priors, or learned completion and quantify impact on completeness metrics.

- The system assumes static lighting and relies on RGB photometric alignment; assess robustness to illumination changes, exposure shifts, and HDR, and consider gradient-domain or reflectance-based losses.

- The approach is RGB-D only; explore generalization to monocular RGB (with learned depth), stereo, LiDAR, or event cameras, and integration with IMU for high-speed motion robustness.

- Initialization strategy (low-opacity zones and positive depth disparity) may miss surfaces with poor rendering or occlusions; provide ablations on thresholds, failure cases, and alternative activation strategies (e.g., uncertainty-driven sampling).

- Surfels’ disk-like geometry can struggle with thin/high-curvature structures and grazing angles; evaluate anisotropic/multi-layer surfels or hierarchical primitives, and quantify accuracy on challenging geometries.

- No explicit evaluation or algorithm for map growth control, pruning, and merging; measure memory footprint over time, design surfel culling/merge policies, and report resource usage vs scene complexity.

- Regularization weights (w_d, w_n, w_reg) and optimization schedule (N_batch, m) lack tuning guidance; perform sensitivity analysis, automatic scheduling, or meta-optimization, and report accuracy–speed trade-offs.

- Loop closure and global consistency are not integrated in the online pipeline (offline variants rely on ORB-SLAM2); incorporate pose-graph optimization within the differentiable mapping framework and quantify benefits.

- Uncertainty calibration of the per-surfel information matrix is not validated; measure calibration (e.g., expected calibration error), define thresholds for “high-confidence” surface extraction, and evaluate downstream utility (e.g., planning).

- Depth/normal rendering equations (e.g., Eq. for geometric blending) are underspecified and contain typographical issues; provide exact definitions, occlusion handling policy, and analyze numerical stability of depth/normal compositing.

- Association between surfel fusion and differentiable optimization may induce bias if photometric loss conflicts with fused geometry; study strategies that decouple appearance from geometry (e.g., albedo estimation) and quantify convergence behavior.

- Robustness to rolling shutter and motion blur is not analyzed; integrate rolling-shutter camera models and blur-aware tracking, and benchmark on fast-motion sequences.

- Hardware and performance details are missing; report GPU/CPU specs, memory usage, FPS vs resolution and surfel count, and scalability trends across scenes to ground the 24 FPS claim.

- Mesh extraction from surfels is not specified; develop watertight surface extraction (e.g., Poisson or TSDF from surfels), and compare mesh quality (manifoldness, sharp features) against baselines.

- Fairness of reconstruction comparisons (e.g., Point-SLAM using GT depth for sampling) is acknowledged but not controlled; include controlled variants where all methods use consistent inputs/sampling policies.

- Depth noise model is assumed depth-squared proportional; validate and refine per-sensor models (Azure Kinect, RealSense, iPhone LiDAR) and examine generalization across devices.

- Data structures and search strategies for reobserved surfel lookup are not described; implement and benchmark GPU-friendly acceleration (e.g., voxel grids, BVH) for real-time fusion under heavy map sizes.
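
The degenerate cases noted in the item on normal-to-rotation updates are easy to see in code. The sketch below is a generic construction, not the paper's update rule: it builds the unit quaternion that rotates one unit normal onto another from their cross product and angle, and adds explicit branches for near-parallel and anti-parallel inputs. The function names and the `eps` threshold are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z)


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def rotation_between(n_old: Vec3, n_new: Vec3, eps: float = 1e-8) -> Quat:
    """Unit quaternion rotating unit vector n_old onto unit vector n_new."""
    dot = max(-1.0, min(1.0, sum(a * b for a, b in zip(n_old, n_new))))
    axis = cross(n_old, n_new)
    s = math.sqrt(sum(c * c for c in axis))
    if s < eps:
        if dot > 0.0:
            return (1.0, 0.0, 0.0, 0.0)  # near-parallel: no rotation needed
        # Anti-parallel: the axis is ambiguous, so pick any direction perpendicular to n_old.
        helper = (1.0, 0.0, 0.0) if abs(n_old[0]) < 0.9 else (0.0, 1.0, 0.0)
        axis = cross(n_old, helper)
        s = math.sqrt(sum(c * c for c in axis))
        return (0.0, axis[0] / s, axis[1] / s, axis[2] / s)  # 180-degree turn
    half = 0.5 * math.atan2(s, dot)
    k = math.sin(half) / s
    return (math.cos(half), axis[0] * k, axis[1] * k, axis[2] * k)


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Apply unit quaternion q to vector v."""
    u = (q[1], q[2], q[3])
    uv = cross(u, v)
    uuv = cross(u, uv)
    return tuple(v[i] + 2.0 * (q[0] * uv[i] + uuv[i]) for i in range(3))


q90 = rotation_between((0.0, 0.0, 1.0), (1.0, 0.0, 0.0))
flipped = rotate(rotation_between((0.0, 0.0, 1.0), (0.0, 0.0, -1.0)), (0.0, 0.0, 1.0))
```

The anti-parallel branch makes the sign ambiguity concrete: any perpendicular axis gives a valid 180-degree rotation, so the choice is arbitrary unless extra constraints are added.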

Glossary

- 3D Gaussian Splatting (3DGS): A primitive-based differentiable scene representation that rasterizes 3D Gaussian ellipsoids directly in image space for fast optimization and rendering. "3DGS represents a scene using a set of ellipsoids, significantly enhancing the rendering performance of differentiable scene representations by rasterizing these 3D ellipsoids directly in 2D image space."

- ATE RMSE: Absolute Trajectory Error (root mean square), a standard metric for quantifying camera pose accuracy over a sequence. "To evaluate the accuracy of camera tracking, we use ATE RMSE ~\cite{sturm2012benchmark} as the metric."

- Alpha compositing: A blending technique that combines contributions from multiple layers or primitives using opacity. "After sorting by depth, alpha compositing is used for blending to obtain the final color with the alpha blending weight :"

- Bayesian update: A recursive probabilistic update that refines state estimates by incorporating new observations. "we perform recursive Bayesian updates on reobserved surfels to refine their geometric properties."

- Bundle adjustment (BA): A joint optimization of camera poses and scene parameters (structure) over multiple frames to reduce reprojection error. "making photometric/geometric bundle adjustment (BA) within a keyframe sliding window feasible."

- Covariance matrix: A matrix that quantifies the uncertainty of state estimates and their correlations. "Each surface element's geometric state is defined as and is associated with a covariance matrix to quantify measurement uncertainty, enabling progressive confidence accumulation through sequential observations."

- Differentiable rasterization: A rasterization process that provides gradients with respect to scene parameters, enabling end-to-end optimization. "real-time reconstruction methods based on 3DGS~\cite{splatam, rtg_slam} have shown great promise due to their efficient differentiable rasterization capabilities."

- Differentiable rendering: A rendering pipeline whose outputs are differentiable with respect to scene parameters, allowing learning and optimization via gradient descent. "In recent years, the rapid development of differentiable rendering techniques has significantly enhanced the realism and visual quality of 3D reconstruction, with notable advancements in technologies such as NeRF~\cite{mildenhallNeRFRepresentingScenes2020} and 3DGS~\cite{kerbl3DGaussianSplatting2023}."

- Exponential map: A mathematical mapping from a Lie algebra to its corresponding Lie group, used to parameterize rigid-body motions. "Here, is the exponential map from the Lie algebra to the Lie group and is a robust loss function used to mitigate the influence of outliers."

- Gaussian surfel: A disk-like Gaussian primitive used to represent local surface patches with learnable attributes (position, scale, rotation, color, opacity). "In the scene mapping module (Sec.~\ref{sec:scene_mapping}), Gaussian surfels are utilized as the fundamental primitives for scene representation and can achieve high-quality real-time reconstruction"

- Information filter: An estimation framework using the information (precision) form, updating the information matrix/vector instead of covariance/state directly. "we introduce a novel surfel fusion strategy with information filter~\cite{thrun2004simultaneous, maybeck1982stochastic}, which incrementally updates the geometric attributes of surfels using depth observations from each frame."

- Information matrix: The inverse of the covariance matrix, representing the precision (confidence) of an estimate. "The information matrix also facilitates confidence estimation for each primitive, allowing the system to extract highly reliable reconstruction results."

- Lambertian (surface): A surface with purely diffuse reflectance, appearing equally bright from all viewing directions. "enabling the modeling of non-Lambertian surfaces."

- Levenberg–Marquardt (LM) optimization: A nonlinear least-squares optimization algorithm combining gradient descent and Gauss–Newton. "The initial camera pose is estimated via Levenberg–Marquardt (LM) optimization:"

- Lie algebra: A vector-space representation of infinitesimal transformations used to parameterize rigid motions compactly (e.g., se(3)). "In optimization problems, we adopt the more compact and structure-preserving Lie algebra representation of camera poses as ."

- Lie group: A continuous group of transformations (e.g., SE(3)) that rigid-body motions belong to; related to Lie algebra via the exponential map. "Here, is the exponential map from the Lie algebra to the Lie group and is a robust loss function used to mitigate the influence of outliers."

- LPIPS: Learned Perceptual Image Patch Similarity, a deep metric for perceptual image quality. "Regarding rendering performance, we generate full-resolution rendered images and utilize three metrics for evaluation: PSNR~\cite{hore2010image}, SSIM~\cite{wang2004image}, and LPIPS~\cite{zhang2018unreasonable}."

- Marching cubes: A classic algorithm for extracting polygonal meshes from volumetric data (e.g., TSDF). "followed by mesh extraction via the marching cubes to assess the final reconstruction accuracy~\cite{nice_slam}."

- Markov process: A stochastic process where the current state depends only on the previous state and the latest observation. "The surfel state estimation is formulated as a Markov process, where the current state depends solely on its previous state and the latest sensor observation ."

- NeRF (Neural Radiance Fields): A neural scene representation that models view-dependent radiance and density for photorealistic rendering. "Recently, differentiable-rendering-based SLAM system has demonstrated significant potential, enabling photorealistic scene rendering through learnable scene representations such as Neural Radiance Fields (NeRF) and 3D Gaussian Splatting (3DGS)."

- Normal map: An image-space map of per-pixel surface normals derived from geometry (e.g., depth), used for alignment and shading. "we pre-process the data using the intrinsic parameters of the camera to obtain the normal map and the vertex map in the camera coordinate system:"

- Novel view synthesis: Rendering images from viewpoints not seen during training, given a learned scene representation. "More recently, SLAM systems~\cite{mono_gs,splatam,yanGSSLAMDenseVisual2024,rtg_slam} based on 3DGS have demonstrated impressive performance in novel view synthesis and rendering speed, thanks to the efficiency of the scene representation."

- Photometric error: The difference between observed and rendered pixel intensities, used for direct image alignment. "DTAM~\cite{newcombeDTAMDenseTracking2011a} pioneered the dense 3D model reconstruction of an indoor scene from monocular video by directly tracking the camera to the model using photometric error"

- PSNR: Peak Signal-to-Noise Ratio, a logarithmic metric of reconstruction fidelity relative to ground truth images. "Regarding rendering performance, we generate full-resolution rendered images and utilize three metrics for evaluation: PSNR~\cite{hore2010image}, SSIM~\cite{wang2004image}, and LPIPS~\cite{zhang2018unreasonable}."

- Quaternion: A four-parameter representation of 3D rotation avoiding gimbal lock. "its rotation in quaternion form (in the global coordinate system)"

- Rasterization: The process of projecting and discretizing geometry into image space for rendering. "After the explicit Gaussian surfel fusion based on upcoming measurements, we implement end-to-end optimization of the surfels using the rasterization-based rendering pipeline~\cite{kerbl3DGaussianSplatting2023}."

- Reprojection error: The 2D discrepancy between observed image points and projected 3D points under an estimated pose. "we formulate the pose estimation as a reprojection error minimization problem."

- SLAM (Simultaneous Localization and Mapping): A system that jointly estimates sensor pose and builds a map of the environment. "Over the past decades, significant progress has been made in RGBD-based Simultaneous Localization and Mapping (SLAM)"

- Spherical harmonic basis functions: Orthogonal functions used to represent angular-dependent color/lighting, enabling view-dependent appearance. "The color attribute is explicitly encoded as coefficients of spherical harmonic basis functions, where the dimension depends on the defined order, enabling the modeling of non-Lambertian surfaces."

- TSDF (Truncated Signed Distance Field): A volumetric representation storing truncated signed distances to surfaces, used for fusion and meshing. "~\cite{newcombeKinectFusionRealtimeDense2011, newcombeDynamicFusionReconstructionTracking2015} utilizes consumer-grade RGB-D cameras (Microsoft Kinect) to accomplish featureless camera tracking by optimizing the transformation of depth information to the TSDF target."

- Vertex map: An image-space map of per-pixel 3D points in camera coordinates, derived from depth. "we pre-process the data using the intrinsic parameters of the camera to obtain the normal map and the vertex map in the camera coordinate system:"
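
The vertex map and normal map entries can be made concrete with a small sketch. It assumes a pinhole camera with intrinsics `fx`, `fy`, `cx`, `cy`, valid (non-zero) depth at every pixel used, and normals estimated from forward differences to the right and lower neighbors. The function names and the tiny 2x2 depth image are hypothetical, and the paper's exact pre-processing may differ.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def backproject(u: int, v: int, depth: float,
                fx: float, fy: float, cx: float, cy: float) -> Vec3:
    """Pinhole back-projection of pixel (u, v) with metric depth into camera coordinates."""
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)


def vertex_map(depth: List[List[float]],
               fx: float, fy: float, cx: float, cy: float) -> List[List[Vec3]]:
    """One 3D point per pixel; rows are indexed by v, columns by u."""
    return [[backproject(u, v, d, fx, fy, cx, cy) for u, d in enumerate(row)]
            for v, row in enumerate(depth)]


def normal_at(vmap: List[List[Vec3]], u: int, v: int) -> Vec3:
    """Unit normal at pixel (u, v), flipped so that it faces the camera."""
    p, right, down = vmap[v][u], vmap[v][u + 1], vmap[v + 1][u]
    a = tuple(r - q for r, q in zip(right, p))
    b = tuple(d - q for d, q in zip(down, p))
    n = (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])
    length = math.sqrt(sum(c * c for c in n))
    if length == 0.0:
        raise ValueError("degenerate neighborhood, cannot estimate a normal")
    # The camera sits at the origin, so a camera-facing normal satisfies n . p < 0.
    sign = -1.0 if sum(c * q for c, q in zip(n, p)) > 0.0 else 1.0
    return tuple(sign * c / length for c in n)


# A flat wall 2 m in front of the camera, seen in a tiny 2x2 depth image.
vmap = vertex_map([[2.0, 2.0], [2.0, 2.0]], fx=500.0, fy=500.0, cx=0.5, cy=0.5)
n = normal_at(vmap, 0, 0)
```

For the flat wall, the estimated normal points straight back along the optical axis toward the camera.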

Practical Applications

Overview

This paper introduces EGG-Fusion, a real-time 3D reconstruction and SLAM system that uses geometry-aware 2D Gaussian surfels and an information-filter-based fusion strategy to explicitly account for sensor noise. It achieves high geometric accuracy (0.6 cm surface error on Replica and ScanNet++), robust camera tracking via a sparse-to-dense strategy, and real-time performance at 24 FPS. The method provides confidence-aware surface extraction (via per-surfel information matrices), adaptive surfel initialization for compact scene representation, and fast convergence through differentiable optimization with geometric regularization.

The following bullet points summarize actionable applications across industry, academia, policy, and daily life, grouped into Immediate and Long-Term Applications. Each item specifies relevant sectors, potential tools/products/workflows, and assumptions/dependencies affecting feasibility.

Immediate Applications

- Real-time AR/VR/MR environment scanning and occlusion

- Sectors: software, media/entertainment, gaming, education

- Tools/products/workflows: Unity/Unreal plugins for on-the-fly room/object scanning; AR occlusion and physics using confidence-aware surfaces; previsualization and set digitization with live feedback on scan confidence; mobile app for iPhone LiDAR/Android ToF that exports meshes and materials

- Assumptions/dependencies: requires RGB-D sensors (e.g., iPhone LiDAR, Azure Kinect, RealSense) and a GPU capable of rasterization at ~24 FPS; scenes mostly static; known camera intrinsics and basic calibration

- Robotics mapping and manipulation in indoor spaces

- Sectors: robotics, logistics, manufacturing, service robotics

- Tools/products/workflows: ROS/ROS2 node for EGG-Fusion; confidence-aware point/surfel maps for navigation and grasp planning; sparse-to-dense tracking to maintain pose under fast motions; real-time digital twin updates for robot planning layers

- Assumptions/dependencies: integration with robot middleware; stable RGB-D sensor; GPU availability on robot or edge computer; performance validated on indoor settings; moving objects may need filtering

- Construction, facilities management, and building digital twins

- Sectors: construction, architecture/engineering (AEC), real estate

- Tools/products/workflows: handheld scanners for as-built capture; BIM integration workflows; scan QA overlays highlighting low-confidence regions (from information matrices); room-scale measurements and clash detection; periodic change detection for site monitoring

- Assumptions/dependencies: consumer-grade sensors yield ~cm-level accuracy (adequate for room-scale, not for precision components); indoor/static scenes preferred; trained operators for consistent viewpoint coverage

- E-commerce and retail product digitization

- Sectors: retail, media/entertainment

- Tools/products/workflows: rapid product scanning stations; surfel-based export of watertight meshes with materials; integration into online catalogs and AR try-on experiences

- Assumptions/dependencies: scanning quality depends on surface reflectance/transparency and depth reliability; lighting control reduces sensor noise; GPU compute at capture station

- Cultural heritage and museum artifact digitization (room/object scale)

- Sectors: culture/heritage, education

- Tools/products/workflows: portable scanning setups; confidence maps guiding rescans; online exhibits using photorealistic rendering

- Assumptions/dependencies: gentle handling of artifacts; depth sensor limitations on shiny/transparent surfaces; accuracy appropriate for visualization, not metrology-grade detail

- Telepresence and remote inspection (indoor)

- Sectors: operations, security/public safety, maintenance

- Tools/products/workflows: real-time 3D streaming for remote operators; confidence-aware hazard mapping; integration with teleoperation UIs

- Assumptions/dependencies: network bandwidth/latency; static or quasi-static indoor scenes; depth sensor and GPU at capture endpoint

- Education and academic research

- Sectors: academia, education

- Tools/products/workflows: open-source code as a teaching and benchmarking platform for differentiable rendering-based SLAM; lab exercises on sensor noise modeling and information filters; reproducible evaluations on Replica/TUM/ScanNet++

- Assumptions/dependencies: availability of datasets and GPU resources; students familiar with SLAM and rendering basics

- Insurance claims documentation and property assessment (pilot use)

- Sectors: finance/insurance, real estate

- Tools/products/workflows: rapid 3D capture of damage with confidence indicators; standardized export (mesh+confidence) for claims systems; remote adjuster review

- Assumptions/dependencies: acceptance of 3D evidence in underwriting/claims; scan accuracy sufficient for room-scale assessment; operator training

- Interior design and home DIY scanning

- Sectors: daily life, consumer software

- Tools/products/workflows: mobile scanning apps for measuring rooms, planning furniture placement, and producing virtual tours; confidence-guided rescans to reduce gaps

- Assumptions/dependencies: consumer RGB-D-enabled devices; static scenes; modest GPU/SoC capability

Long-Term Applications

- City-scale and outdoor mapping with multi-sensor fusion

- Sectors: autonomous driving, urban planning, energy/utilities

- Tools/products/workflows: extended EGG-Fusion variants using stereo/multi-view depth, LiDAR, and IMU; hierarchical surfel representations for large-scale environments; cloud-based multi-agent fusion of maps with confidence tracking

- Assumptions/dependencies: algorithmic extensions for outdoor lighting, weather, and dynamic objects; sensor fusion and robust calibration; scalability and distributed optimization; regulatory and operational safety requirements

- Wearable AR glasses with on-device real-time reconstruction

- Sectors: consumer electronics, software

- Tools/products/workflows: low-power implementations optimized for mobile GPUs/NPUs; surfel-based occlusion and physics for persistent AR; edge/cloud offload and streaming of confidence-aware maps

- Assumptions/dependencies: hardware acceleration for splatting and filtering; power and thermal constraints; privacy-preserving mapping standards

- Household and service robots operating primarily with RGB cameras

- Sectors: robotics (consumer/service)

- Tools/products/workflows: EGG-Fusion adaptations leveraging learned monocular depth and IMU to reduce reliance on depth sensors; semantic mapping and object-level surfel fusion for task planning

- Assumptions/dependencies: robust monocular depth estimation in diverse lighting; handling moving objects; training data and domain adaptation; compute constraints

- Industrial inspection and metrology-grade scanning

- Sectors: manufacturing, aerospace, energy

- Tools/products/workflows: upgraded sensors (structured light/industrial LiDAR) and calibration workflows; mm-level accuracy surfel fusion with enhanced noise models; automated QA pipelines with confidence thresholds triggering rescans

- Assumptions/dependencies: higher-grade sensors and calibration; stricter error bounds; traceability and certification; controlled environments

- Healthcare and clinical planning (specialized scanners)

- Sectors: healthcare

- Tools/products/workflows: OR/procedure room mapping for equipment layout and collision avoidance; preoperative environment planning; confidence-aware safety zones

- Assumptions/dependencies: clinical-grade sensors and sterile workflows; compliance with medical regulations; validated accuracy and reliability

- Policy and standards for digital twin fidelity and privacy

- Sectors: policy/regulation, AEC, public safety

- Tools/products/workflows: standards specifying accuracy bands, confidence reporting (information matrices) and audit trails; guidelines for safe capture and storage of indoor scans; acceptance criteria for building inspections and emergency planning

- Assumptions/dependencies: multi-stakeholder consensus; pilot programs demonstrating reliability; privacy impact assessments and data governance

- Semantic scene understanding layered on EGG-Fusion

- Sectors: robotics, software, education

- Tools/products/workflows: joint reconstruction and segmentation/classification; task-driven map optimization (e.g., graspable surfaces, traversable areas); domain-specific surfel attributes (material, affordance)

- Assumptions/dependencies: real-time multi-task models; training datasets; increased compute demands; robust handling of dynamic scenes

- Multi-agent collaborative mapping

- Sectors: robotics, public safety, industrial operations

- Tools/products/workflows: distributed surfel fusion across agents with merged information matrices; conflict resolution and map consistency protocols; live global digital twins for coordination

- Assumptions/dependencies: reliable inter-agent localization and communication; synchronization and consensus algorithms; security and access control

- Teleoperation with physics-consistent virtual twins

- Sectors: mining, hazardous environments, defense

- Tools/products/workflows: real-time, confidence-aware reconstructions feeding physics engines to simulate interactions; predictive rendering for low-latency control

- Assumptions/dependencies: stable capture pipelines; low-latency networking; validated mapping fidelity under challenging conditions

Cross-cutting assumptions and dependencies

- Sensor availability and quality: current results assume RGB-D inputs; performance degrades with missing or noisy depth (specular/transparent surfaces, far range).

- Compute resources: real-time performance at ~24 FPS typically requires a modern GPU; mobile/wearable use calls for hardware acceleration and algorithmic optimization.

- Scene dynamics: methods target predominantly static environments; dynamic objects need explicit handling (e.g., masking or motion modeling).

- Calibration: accurate camera intrinsics/extrinsics and synchronization are necessary for robust tracking and fusion.

- Scalability: large-scale outdoor deployment requires hierarchical representations and distributed optimization beyond current scope.

- Data governance: indoor mapping raises privacy concerns; deployment must consider consent, storage policies, and regulatory compliance.
