Adversarial Flow Models (2511.22475v1)

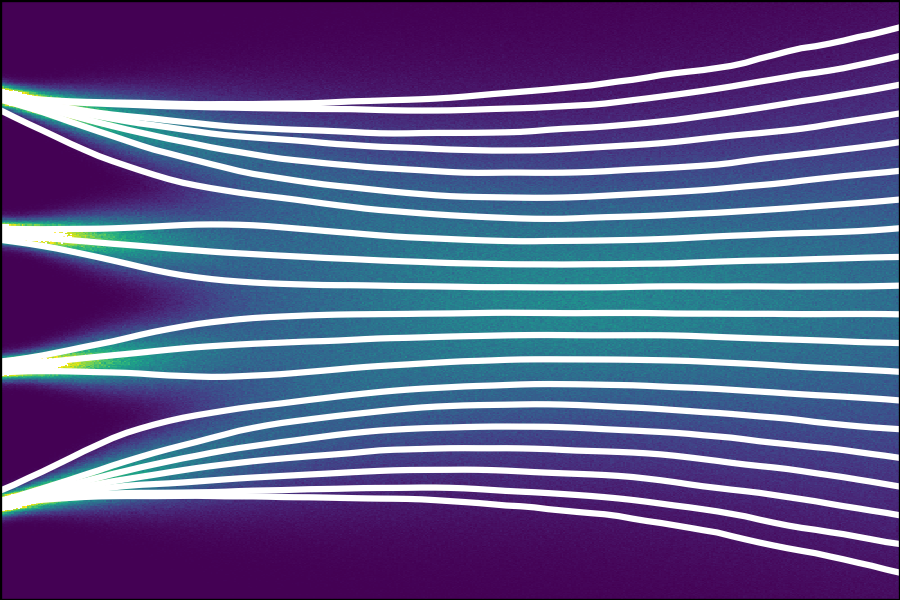

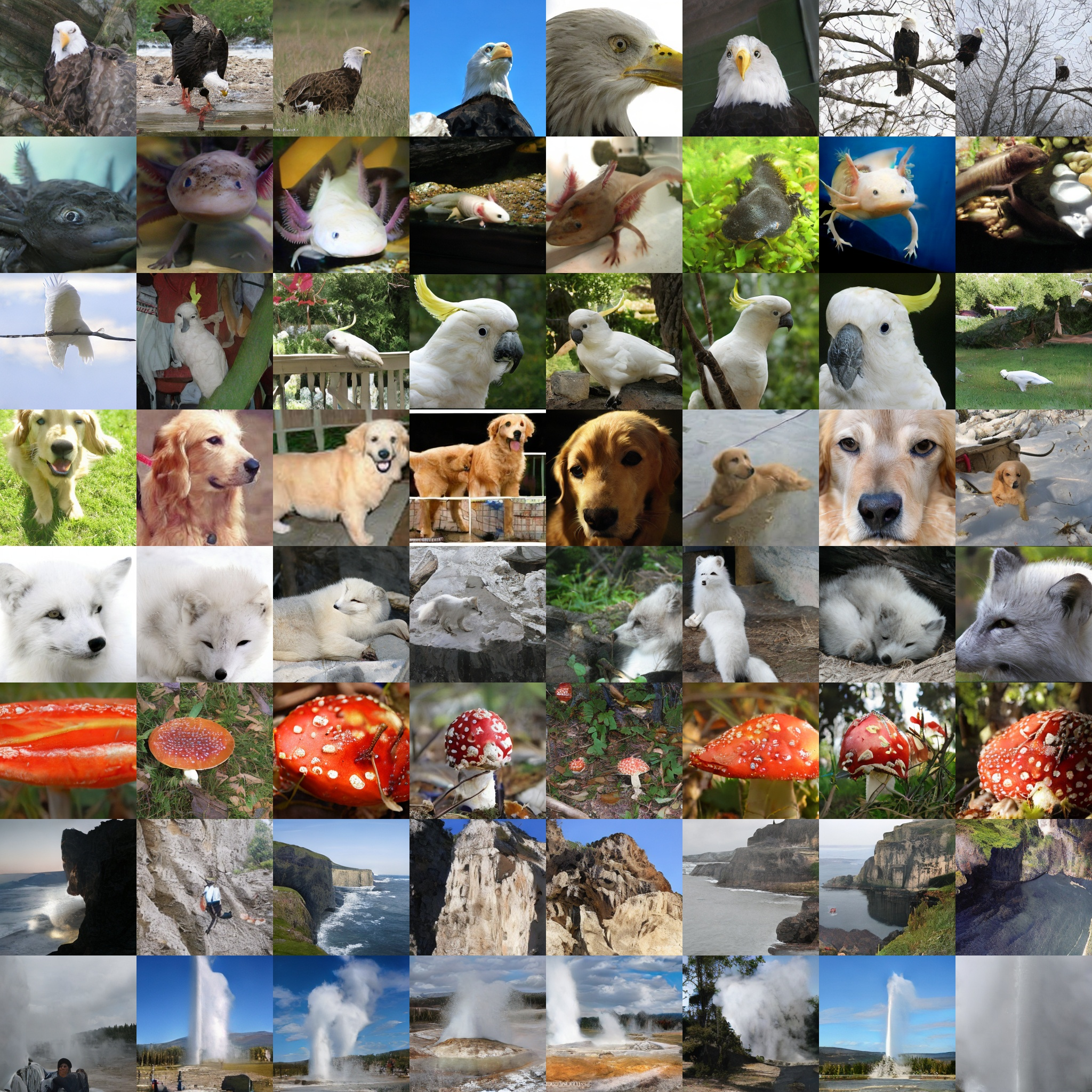

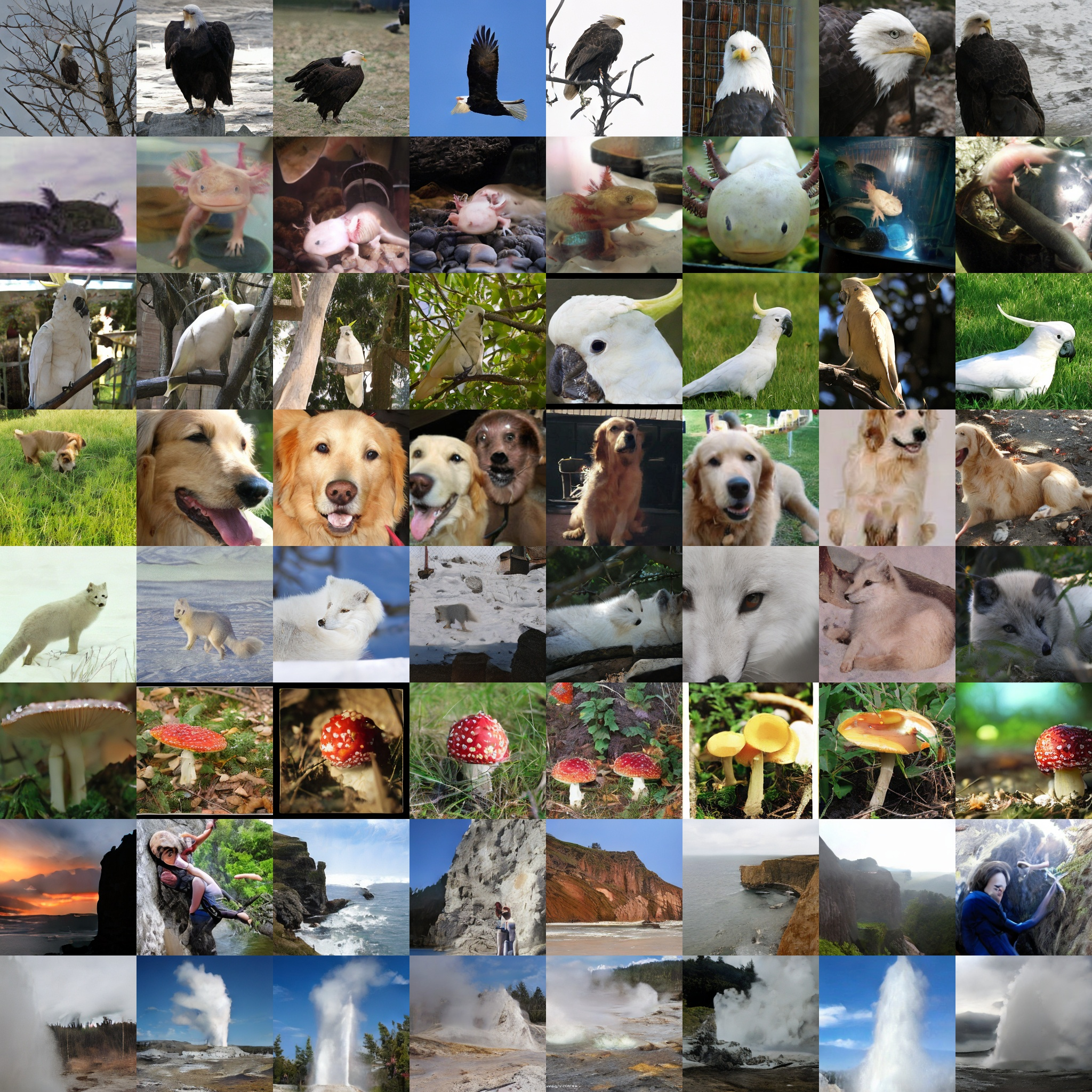

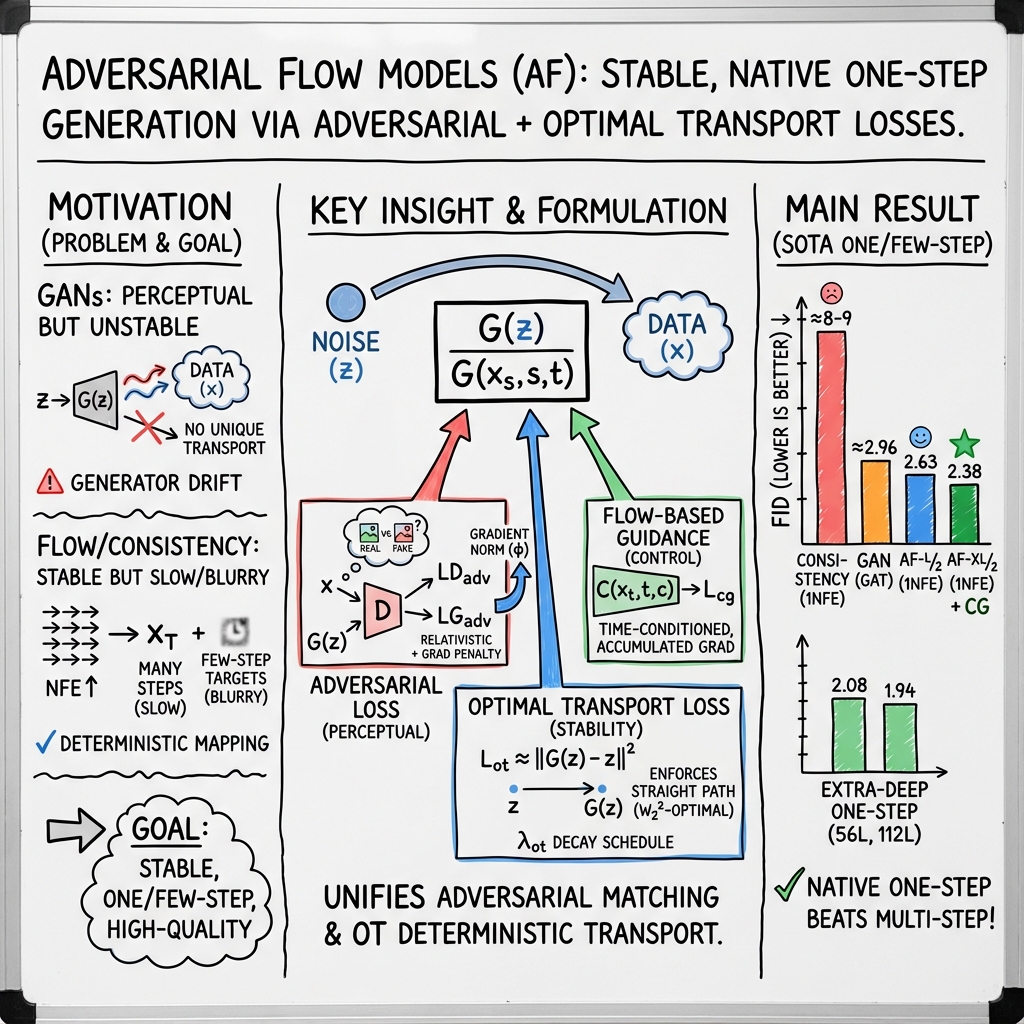

Abstract: We present adversarial flow models, a class of generative models that unifies adversarial models and flow models. Our method supports native one-step or multi-step generation and is trained using the adversarial objective. Unlike traditional GANs, where the generator learns an arbitrary transport plan between the noise and the data distributions, our generator learns a deterministic noise-to-data mapping, which is the same optimal transport as in flow-matching models. This significantly stabilizes adversarial training. Also, unlike consistency-based methods, our model directly learns one-step or few-step generation without needing to learn the intermediate timesteps of the probability flow for propagation. This saves model capacity, reduces training iterations, and avoids error accumulation. Under the same 1NFE setting on ImageNet-256px, our B/2 model approaches the performance of consistency-based XL/2 models, while our XL/2 model creates a new best FID of 2.38. We additionally show the possibility of end-to-end training of 56-layer and 112-layer models through depth repetition without any intermediate supervision, and achieve FIDs of 2.08 and 1.94 using a single forward pass, surpassing their 2NFE and 4NFE counterparts.

Sponsor

Paper Prompts

Sign up for free to create and run prompts on this paper using GPT-5.

Top Community Prompts

Explain it Like I'm 14

What is this paper about?

This paper introduces “Adversarial Flow Models,” a new way to make AI-generated images. It combines two popular ideas:

- GANs (Generative Adversarial Networks), which are great at making sharp, realistic images in one shot but can be hard to train.

- Flow-based models, which move a picture from random noise to a final image through many tiny steps and are stable, but slow and sometimes a bit blurry if you try to do it in just a few steps.

The goal is to get the best of both worlds: fast, one-step or few-step image generation that is stable to train and produces high-quality, realistic results.

The big questions the paper asks

- Can we make one-step or few-step image generators as stable and sharp as GANs, but without their training headaches?

- Can we avoid the “blurry” look that sometimes happens when models try to jump in fewer steps?

- Can we do this using standard transformer-based architectures (popular in modern AI), without special tricks?

- Can we improve performance on a major benchmark (ImageNet at 256×256) measured by FID, a standard score for image quality?

How they did it, in simple terms

Think of making an image as moving a marble from a “noise bowl” to a “image bowl.” There are different strategies:

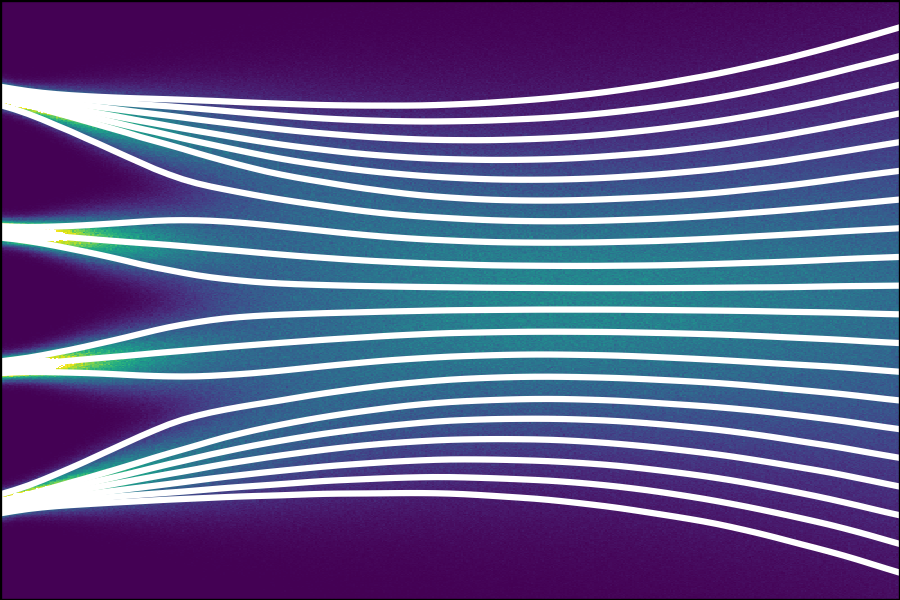

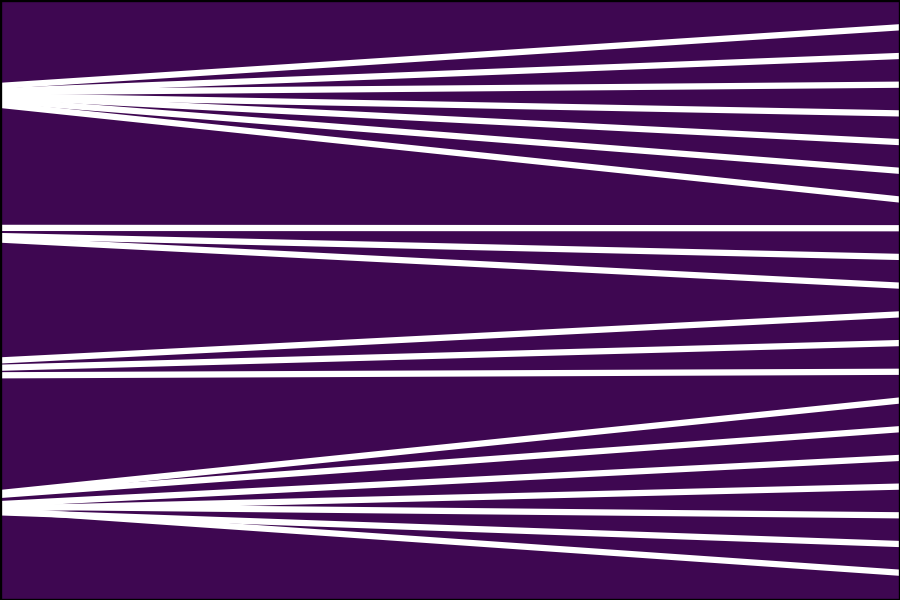

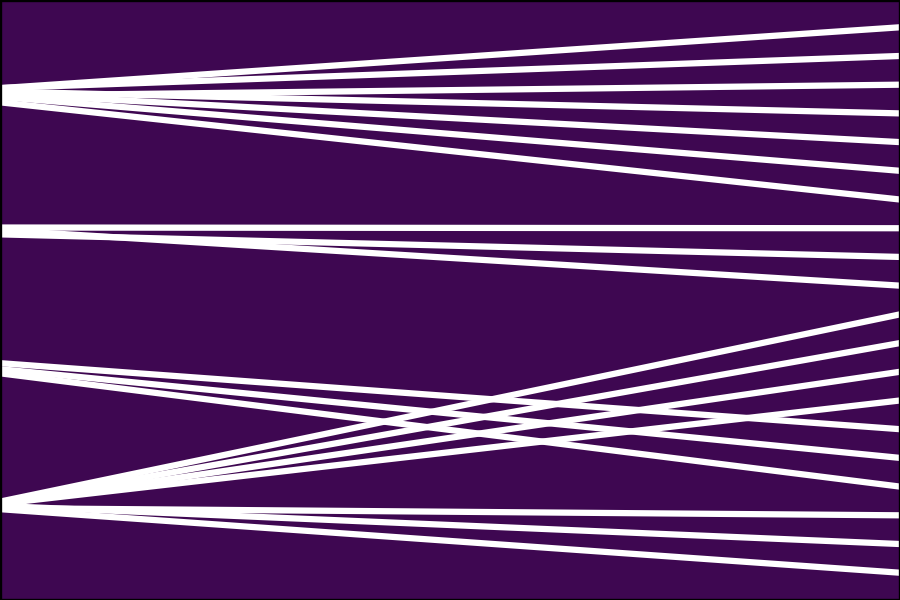

- GANs: The “artist” AI (generator) tries to create images that fool a “judge” AI (discriminator). The artist can choose any route from noise to image. That freedom can make training unstable because there isn’t a single target path to learn.

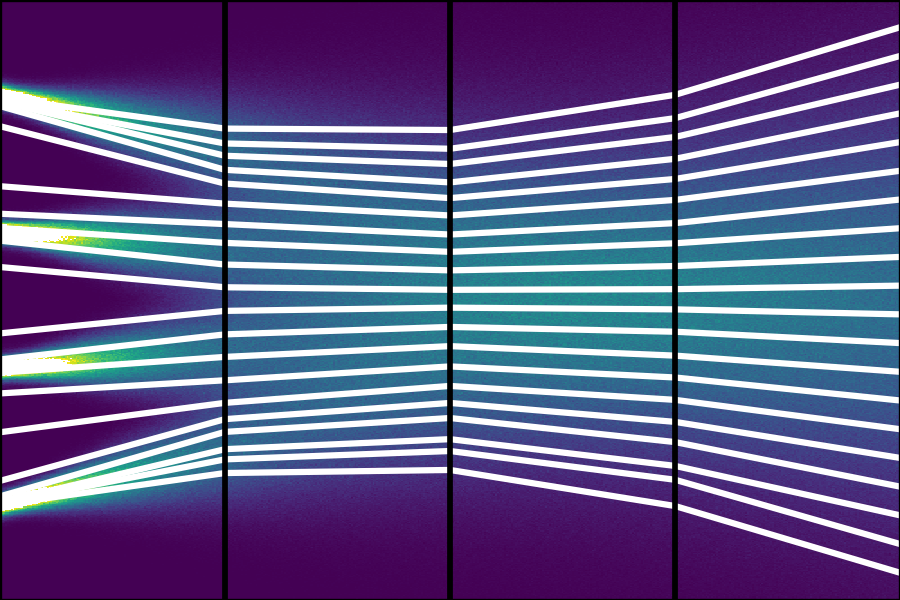

- Flow models: They define a precise path from noise to image and take many small steps along it. This is stable but slow. If you cut steps to make it fast, results can become blurry unless you add more tricks.

The paper’s idea:

- Keep the judge (adversarial training) from GANs (this helps match real image distributions and look sharp).

- Add a rule that forces the artist to follow a fixed, best route from noise to image (this gives a single “right answer” like flow models and stabilizes training).

How does this “fixed route” work?

- They add an “optimal transport” loss. Imagine giving a penalty when the artist takes a detour. The loss encourages the shortest, most direct path between noise and the final image. In practice, it’s like saying: “Stay close to the straight-line route.” This breaks the ambiguity of GANs and makes training stable.

Single-step generation:

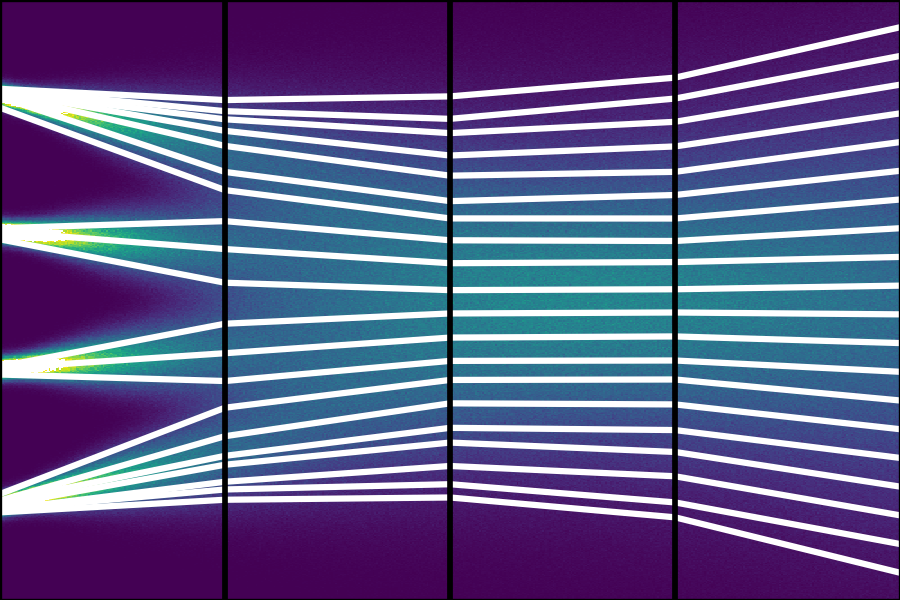

- The model learns to go directly from noise to a final image in one move, guided by the judge and the path rule. No need to learn all the middle steps.

Multi-step generation:

- If needed, the same method supports multiple steps by using a simple “interpolation” function that defines where the marble should be along the path at different times. The model can jump between any two points along the route.

Training tricks they used (explained simply):

- Gradient penalties: Guardrails for the judge so it doesn’t push the artist too hard or too softly.

- Gradient normalization: The artist gets two kinds of feedback (from the judge and from the path rule). They normalize the judge’s feedback so both signals are balanced.

- Don’t show the judge the starting noise: If the judge is told both the start and the end, training can get confused. So the judge only sees the image and its “time” along the path.

- EMA (Exponential Moving Average): They keep a smoothed version of the artist’s weights that often performs better, and periodically replace the live artist with this smoother version later in training.

- Occasional judge reset and light data augmentations: Practical ways to keep training moving when it stalls.

- Architecture: They use a standard “DiT” (Diffusion Transformer) for both artist and judge. No fancy custom networks.

- Extra-deep single-step models: They repeat transformer blocks inside the artist so it can do complex transformations in one forward pass, kind of like folding multiple steps inside the network, still trained end-to-end without intermediate supervision.

Guidance (making images match a given class or prompt more strongly):

- They use “classifier guidance” by training a classifier that tells how much an image looks like a certain class.

- Instead of guiding at just the final image, they guide along the path (at different times), which mimics the best-known guidance used in flow models. This gives stronger, more reliable alignment without losing realism.

What they found and why it’s important

Key results on ImageNet at 256×256 resolution:

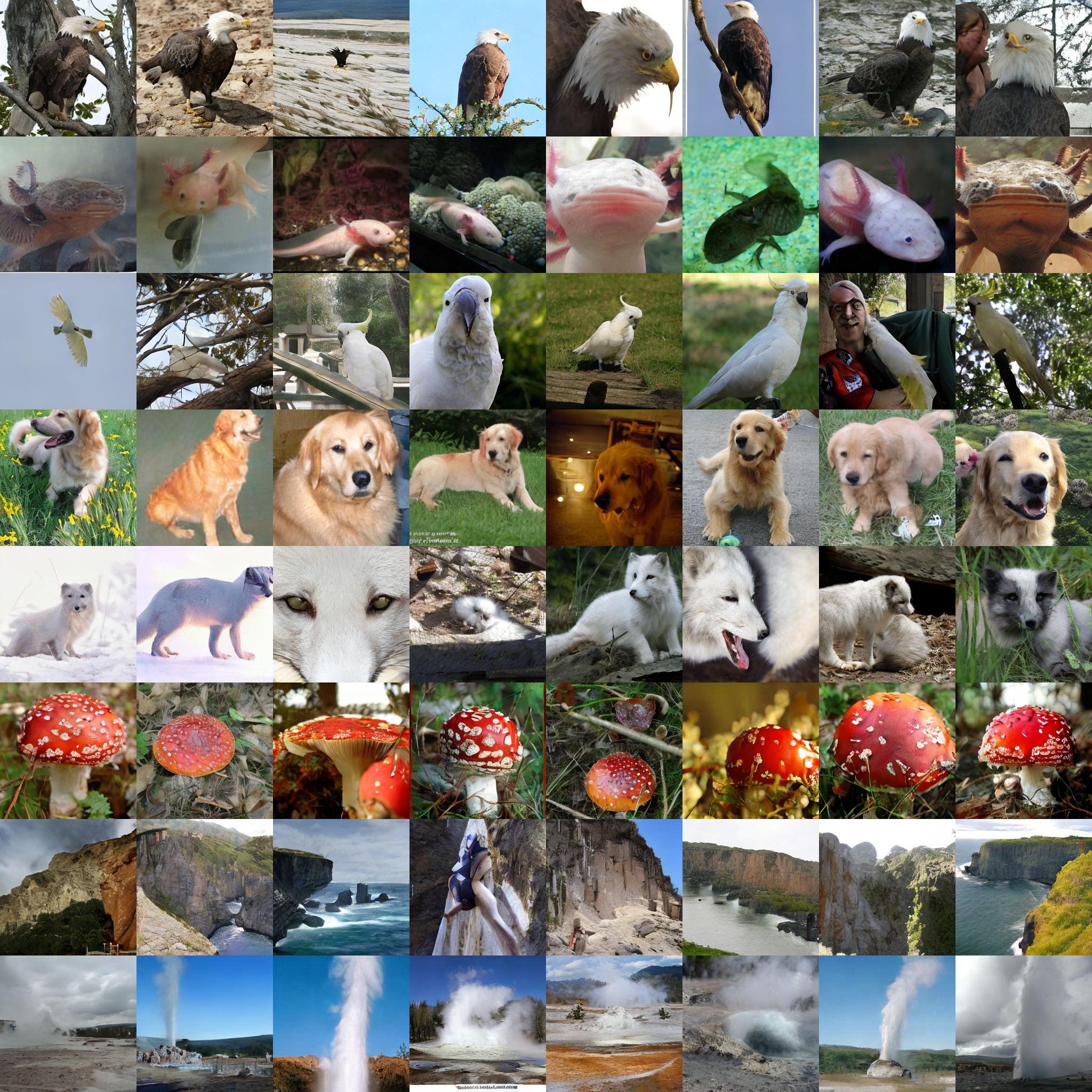

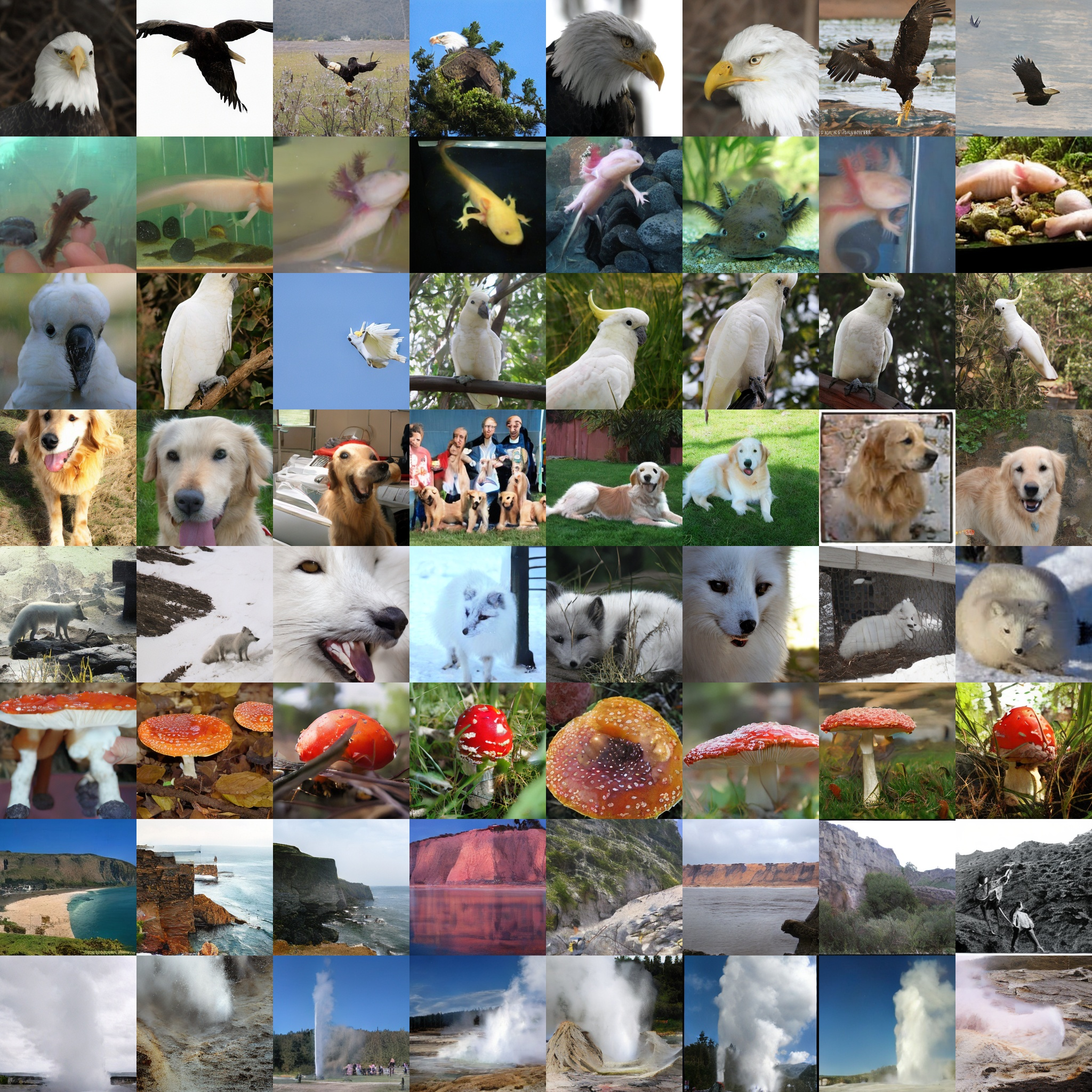

- Single-step with guidance: Their largest model (XL/2) achieved an FID of 2.38, a new best among methods using the same transformer-and-latent setup. Even their smaller B/2 model beat some much larger consistency-based models. This shows that not wasting capacity on all intermediate steps pays off.

- Few-step with guidance: Their method also beats other few-step models, confirming the approach works beyond one-step.

- No-guidance: Their models outperformed flow-matching models that use very many steps, even when no guidance is used. This suggests adversarial training measures “semantic” differences better, so images look more natural without extra tricks.

- Extra-deep single-step models: By repeating transformer blocks, their single-pass models achieved FIDs of 2.08 and 1.94, beating 2-step and 4-step versions. This hints that depth inside the generator might matter more than how many sampling steps you do.

- Stability: They could train strong transformers with adversarial objectives end-to-end, something often considered hard. Their “optimal transport” loss was crucial for stability.

Why FID matters:

- FID (Fréchet Inception Distance) is a number that measures how close generated images are to real ones. Lower is better. Numbers near 2–3 are very high quality in this setting.

What this means going forward

- A bridge between two worlds: The method unifies adversarial training (sharp images) and flow modeling (clear paths), making training more stable and generation faster.

- One-step or few-step generation becomes practical: That means quicker image creation and less computation at inference time.

- Better use of model capacity: Not learning every intermediate step avoids wasted effort and reduces blur, especially for smaller models.

- Stronger consistency and realism without heavy guidance: The judge helps the generator match the true look and feel of real images, even with little or no guidance.

- Depth matters: Instead of adding more sampling steps, increasing the network’s depth can improve image quality in one pass. This opens a path to simpler, faster systems.

- Broad applicability: Because the architecture is standard (DiT) and training is end-to-end, the technique could be adopted widely in image and possibly video generation.

In short, this paper proposes a way to make fast, high-quality image generators that are stable to train and work well with common transformer architectures. It could help future systems produce realistic images faster and more reliably, while keeping models simple and efficient.

Knowledge Gaps

Knowledge Gaps, Limitations, and Open Questions

Below is a concise list of unresolved issues and concrete directions that future researchers could explore to strengthen, generalize, or better understand adversarial flow models.

- Theoretical guarantees on the transport map:

- Under what conditions (on the prior and data distributions) does the adversarial objective plus the squared L2 OT loss provably converge to the Monge optimal transport map used by flow matching?

- Is the claimed “same optimal transport as flow-matching (with linear interpolation)” unique and stable in high dimensions and under adversarial training noise, or are additional regularity assumptions required for uniqueness and existence?

- How does the adversarial component alter the OT solution compared to pure flow matching, especially when is decayed to near-zero?

- Generalization beyond linear interpolation and squared L2 costs:

- What happens if the interpolation is nonlinear (e.g., geodesic on learned manifolds) or if the cost function uses perceptual metrics (e.g., feature-space distances) rather than Euclidean L2?

- Can alternative cost functions improve sample realism without reintroducing instability, and how do they affect convergence?

- Prior distribution and dimensionality constraints:

- The method requires and to share dimensionality (). How restrictive is this in practice for pixel-space generation, 3D, audio, or other modalities where priors are typically lower-dimensional?

- How sensitive is performance to the choice of prior (e.g., Gaussian vs. other priors) and to the latent space learned by the VAE?

- Robustness of hyperparameter schedules and normalization:

- The approach hinges on decaying over time. Can the schedule be made adaptive or self-tuning, and how robust is it across model sizes, datasets, and architectures?

- The proposed backward-path gradient normalization operator lacks theoretical analysis. What are its convergence properties, failure modes, and interactions with different discriminators/penalties and optimizers?

- Discriminator penalties and approximations:

- Finite-difference approximations for gradient penalties (with on 25% of batch) are heuristic. What is the accuracy/variance trade-off of this approximation and its sensitivity to , batch fraction, and dimension?

- Are Lipschitz constraints actually satisfied in practice, and how much do violations affect stability and sample quality?

- Heuristics that influence training dynamics:

- The use of repeated EMA weight replacement for , discriminator augmentation (DA), and periodic discriminator reloading are strong heuristics. Which improvements are attributable to AF itself vs. these auxiliary techniques?

- Can these heuristics be replaced by principled min-max optimization methods (e.g., optimistic gradient, extragradient, diffusion-style critics) without degrading performance?

- Multi-step training formulation and capacity allocation:

- The weighting function is empirical. Is there a theoretically grounded weighting scheme that yields better stability and quality across timesteps?

- Any-step training underperforms designated few-step training due to “capacity dilution.” How can model capacity and batch size be allocated or regularized to make any-step training competitive?

- Distributional evaluation beyond FID:

- Claims of “better distribution matching” are based on FID. How does AF perform on precision/recall, coverage, diversity, and memorization metrics, and in human perceptual evaluations?

- Does AF reduce common failure modes (e.g., oversharpening under guidance, canonicalization, class bias) relative to consistency/flow models?

- Guidance mechanisms and their costs:

- Flow-based classifier guidance requires a time-conditioned classifier. What are the compute and data costs of training such classifiers, and do they scale to higher resolutions and diverse condition types (text prompts, segmentation, layout)?

- How does AF compare to CFG under the same classifier backbone and data budgets, and can CLIP-, DINO-, or task-specific guidance be integrated without leaking external priors unfairly?

- Architectural choices and generalization:

- Results are tied to DiT in a VAE latent space with patch size 2. How do conclusions change in pixel space, with different VAEs, or alternative encoder/decoder backbones?

- What is the impact of deeper discriminators, alternative conditioning (e.g., cross-attention vs. modulation), and variants of formulation (direct vs. residual) on stability and quality?

- Extra-deep single-step models:

- Transformer block repetition improves FID but increases compute. What are the memory, throughput, and training stability trade-offs at scale (e.g., 256+ layers), and can curriculum or partial unrolling recover similar gains more efficiently?

- Does deeper require deeper or differently regularized to avoid vanishing gradients or critic overfitting?

- Scalability and modality coverage:

- The paper evaluates ImageNet-256px only. How does AF scale to higher resolutions (512–1024 px), video generation, diffusion-conditioned tasks, and multimodal inputs?

- What happens in low-data regimes, long-tailed distributions, or fine-grained conditional tasks (e.g., compositional prompts, small objects, rare classes)?

- Mode coverage, collapse, and rare-mode fidelity:

- Does enforcing a minimal transport cost introduce an implicit “minimal displacement bias” that could hinder creative variation or reduce exploration of rare modes?

- How does AF behave under known GAN pathologies (mode collapse, discriminator overfitting), and which diagnostics or regularizers are most effective?

- Training compute and fair comparison:

- AF incurs extra compute from -related losses/regularizations. What is the end-to-end training/inference cost vs. consistency models under identical hardware, data, and batch constraints?

- Many baselines use different guidance schemes or feature networks. Can an apples-to-apples benchmark suite be established to isolate the gains from AF alone?

- Stability over long training horizons:

- As decays, does the generator drift re-emerge? Are there long-horizon instabilities, and how often must EMA replacements or discriminator reloads occur to maintain peak performance?

- Coupling structure and invertibility:

- Can AF incorporate constraints or priors that encourage invertible or monotone mappings, improving interpretability or controllability of the learned transport?

- Are there advantages to learning bidirectional mappings (e.g., an inverse ) for diagnostics, editing, or latent interpolation?

- Data augmentation biases:

- DA can inject inductive biases (e.g., affine invariances). What augmentation strategies minimize unwanted biases while still ensuring adequate overlap and stable learning?

- Implementation details and reproducibility:

- The backward-path normalization alters gradients silently. What is its memory overhead, effect on mixed-precision training, and compatibility with distributed training and graph compilation?

- Are the improvements reproducible across seeds and realistic resource budgets, and what are the failure cases when these techniques are omitted?

Glossary

- Adversarial flow models: A generative modeling framework that unifies adversarial training with flow-based deterministic transport to enable stable one- or few-step generation. "We present adversarial flow models, a class of generative models that unifies adversarial models and flow models."

- Adversarial objective: A loss that trains a generator to fool a discriminator, focusing on distribution matching rather than pointwise targets. "and is trained using the adversarial objective."

- Autoregressive modeling: A modeling paradigm that predicts the next token given previous ones, with targets determined by the training corpus. "and autoregressive modeling, which has ground-truth token probabilities predetermined by the training corpus."

- Classifier-free guidance (CFG): A sampling technique that adjusts conditional strength without an explicit classifier by mixing conditional and unconditional predictions. "The effect of classifier-free guidance (CFG)~\citep{ho2022classifier} is not only low-temperature sampling, but also perceptual guidance~\citep{lin2023diffusion}."

- Classifier guidance (CG): A conditioning method that uses gradients from a classifier to steer generation toward desired classes. "We use classifier guidance (CG)~\citep{dhariwal2021diffusion} for conditional generation as an illustration because of its popularity."

- Consistency model (CM): A class of models trained with self-consistency constraints to enable few-step generation. "Consistency model (CM)~\citep{song2023consistency,song2023improved} proposes the use of self-consistency constraint and supports standalone training as a new class of generative models."

- Consistency propagation: The requirement in consistency-based training to enforce consistency across all timesteps of a flow. "consistency-based models must still be trained on all timesteps of the flow for consistency propagation."

- DiffusionGAN: An adversarial method that projects the discriminator onto a flow to provide gradients at noised levels. "DiffusionGAN~\citep{wang2022diffusion} projects onto a flow in the same spirit as our approach with guidance"

- Diffusion transformer (DiT): A transformer architecture adapted for diffusion/flow-based generative modeling, often used in latent space. "Both the and networks use standard diffusion transformer architecture (DiT)~\citep{peebles2023scalable}."

- Discriminator augmentation (DA): Data augmentations applied to the discriminator inputs to improve GAN training stability and overlap. "Discriminator augmentation (DA)~\citep{karras2020training} is another approach to increase the distribution overlap"

- Exponential moving average (EMA): A running average of model parameters or statistics used to stabilize and improve generative quality. "The operator tracks the exponential moving average (EMA) of the gradient norm"

- Finite difference approximation: A numerical technique to approximate derivatives without second-order autodiff, used here for gradient penalties. "so we use finite difference approximation~\citep{lin2025diffusion}:"

- Flow matching: A family of models that learns a velocity field to transport samples from a prior to data along a defined interpolation. "Flow matching~\citep{lipman2022flow,song2020score} is a class of generative models"

- Fréchet Inception Distance (FID): A metric comparing distributions of real and generated images via Inception features; lower is better. "Evaluations use Fréchet Inception Distance on 50k class-balanced samples (FID-50k)~\citep{heusel2017gans} against the entire train set."

- Gradient normalization: A technique to rescale discriminator-propagated gradients to balance adversarial and transport losses. "Therefore, we propose a gradient normalization technique."

- Gradient penalties (R1 and R2): Regularizers on discriminator gradients w.r.t. real and generated samples to stabilize GAN training. "Additionally, gradient penalties and ~\cite{roth2017stabilizing} are added on ."

- Linear interpolation: A straight-line mixing of data and noise defining the path of the probability flow between endpoints. "It is known that when linear interpolation and the squared distance loss are used, this combination establishes a transport plan"

- Lipschitz constant: A bound on how much a function can change relative to its input change; constraining it stabilizes GAN objectives. "and impose a constraint on the Lipschitz constant of ~\citep{gulrajani2017improved}."

- Logit-centering penalty: A regularizer to keep discriminator logits centered and prevent drift in relativistic GAN setups. "a logit-centering penalty is added, similar to prior work~\citep{karras2017progressive}:"

- Minimax game: The adversarial optimization setup where the discriminator maximizes and the generator minimizes a shared objective. "The adversarial optimization involves a minimax game where is trained to maximize differentiation while is trained to minimize the differentiation by ."

- Monte Carlo approximation: Estimating expectations by averaging over samples, used for minibatch training of objectives. "The expectation is obtained through Monte Carlo approximation over minibatches of data during training."

- Number of function evaluations (NFE): The count of model evaluations during sampling; lower NFE implies faster generation. "Under the same 1NFE setting on ImageNet-256px, our B/2 model approaches the performance of consistency-based XL/2 models"

- Optimal transport (OT): The theory of transporting one distribution to another at minimal cost under a chosen metric. "we only need to add an additional optimal transport (OT) loss on ."

- Optimal transport loss: A loss enforcing the generator to follow a minimal-cost transport mapping between prior and data. "we only need to add an additional optimal transport (OT) loss on ."

- Probability flow: The continuous path of distributions between prior and data induced by an interpolation schedule. "A probability flow is established by interpolating the data and the prior samples, and a neural network learns the gradient of the flow."

- Relativistic objective: A GAN objective that compares real and fake scores relatively to shape a smoother loss landscape. "We adopt the relativistic objective~\citep{jolicoeur2018relativistic}"

- Signal-to-noise ratio (SNR): A measure comparing signal power to noise power; here, it bounds effectiveness of discriminator projections. "but this only guarantees support up to the signal-to-noise ratio that can perfectly fool under each's capacity."

- Teacher-forcing: Training with ground-truth intermediate targets; avoided here to prevent mismatch and error accumulation. "Our one-step model also completely avoids teacher-forcing."

- Time-conditioned classifier: A classifier that takes timestep as input to provide guidance along the flow trajectory. "we switch to a time-conditioned classifier that predicts on a probability flow."

- Transformer block repetition: Reusing the same transformer blocks multiple times to build extra depth without intermediate supervision. "our extra-deep models use transformer block repetition~\citep{dehghani2018universal}."

- Transport plan: A mapping or coupling specifying how mass moves from the prior to the data distribution under a cost. "there exist infinite valid transport plans that the generator may pick"

- Variational autoencoder (VAE): A latent-variable model with an encoder–decoder architecture used here to define a latent space. "We use pre-trained variational autoencoder (VAE)\footnote{\href{https://huggingface.co/stabilityai/sd-vae-ft-mse}{https://huggingface.co/stabilityai/sd-vae-ft-mse}~\citep{rombach2022high}"

- Wasserstein-2 distance (W22): A metric from optimal transport measuring squared cost of moving probability mass under quadratic cost. "squared Wasserstein-2 ($\mathbf{W_2^2$)} distance between the prior and data distributions"

- WGAN: The Wasserstein GAN formulation optimizing Earth Mover’s distance with a Lipschitz-constrained discriminator. "WGAN~\citep{arjovsky2017wasserstein} is proposed but requires a K-Lipschitz ."

Practical Applications

Immediate Applications

Below are actionable, near-term uses that can be deployed now or with modest engineering, grounded in the paper’s methods (adversarial flow models with OT regularization), training recipes (gradient penalties, EMA replacement, DA, D reload), architecture (DiT, extra-deep repetition), and guidance (flow-based classifier guidance).

- Sector: Software/Creative Tools — Low-latency single-step image generation engines

- Use case: Power image-generation features (e.g., thumbnails, concept art, social graphics) with one-pass inference to reduce latency and cost.

- What to build: A service exposing AF 1NFE models in VAE latent space (e.g., SD-vae-ft-mse), exported via ONNX/TensorRT for GPU/edge.

- Why now: The paper demonstrates SOTA FID for 1NFE in the same latent setting; single-pass is deployable today.

- Dependencies/assumptions: High-quality VAE; DiT-based generator; tuned OT loss schedule; gradient normalization; GPU kernels optimized for DiT.

- Sector: Software/Model Providers — Post-training refinement of diffusion/flow models

- Use case: Sharpen and de-blur few-step/one-step distilled models without retraining the full teacher.

- What to build: “Adversarial Flow Fine-Tune” stage that uses the paper’s implicit flow-based classifier guidance (derived from an existing teacher) and OT loss to stabilize adversarial updates.

- Why now: AF improves guidance-free fidelity and can outperform flow matching without CFG; integrates cleanly with existing diffusion pipelines.

- Dependencies/assumptions: Access to a pre-trained flow-matching model for implicit guidance gradient; careful lambda_ot decay; discriminator training pipeline.

- Sector: Marketing/Advertising/E-commerce — Rapid variant generation and A/B testing

- Use case: Generate many controlled variations (backgrounds, colors, class-conditional renditions) for ad creatives and product imagery at low latency.

- What to build: A creative engine that modulates conditional alignment with flow-based classifier guidance (train a latent-space, time-conditioned classifier and apply the paper’s Eq. (flow-guidance)).

- Why now: Paper shows guidance scales/time ranges that improve FID without heavy multi-step costs.

- Dependencies/assumptions: Label availability or proxy classifiers (e.g., CLIP-heads adapted to time-conditioned inputs); DA choice may affect inductive biases.

- Sector: Mobile/Consumer Apps — On-device photo effects and AI wallpapers

- Use case: One-tap stylization, quick reimagining, or class-conditional photo effects on smartphones.

- What to build: Quantized AF 1NFE generators in VAE latent space with small B/2–M/2 DiTs.

- Why now: Single-step inference cuts latency and power; no need to train on all timesteps as in consistency models.

- Dependencies/assumptions: Mobile-friendly memory footprint; potential trade-offs between extra-deep single-step quality and device limits.

- Sector: Robotics/Autonomy/Simulation — Fast synthetic image augmentation

- Use case: Generate on-the-fly visual variations for training perception modules or RL agents.

- What to build: Domain-tuned AF models trained directly on designated few-step schedules for targeted quality/speed trade-offs.

- Why now: AF few-step training can conserve capacity by training only the timesteps you’ll use; single-pass enables faster data generation loops.

- Dependencies/assumptions: High-quality, domain-relevant datasets; potential need for pixel-space models if VAE latents don’t capture domain specifics.

- Sector: Academia/ML Engineering — Stabilizing transformer-based GAN training

- Use case: Make Transformer GANs viable without bespoke architectures or frozen features.

- What to build: Open-source training recipes for OT-regularized adversarial objectives, gradient normalization operator φ, finite-difference R1/R2, EMA replacement, and D reload when training stalls.

- Why now: The paper shows instability without OT and demonstrates convergence on standard DiTs; minimal code diffs to existing GAN stacks.

- Dependencies/assumptions: Careful hyperparameter tuning (λ_gp, λ_ot decay, EMA); batch-size sensitivity for any-step training.

- Sector: Cloud/Platforms — Inference cost reduction and throughput gains

- Use case: Serve more generations per GPU-hour for consumer gen-AI services.

- What to build: AF 1NFE deployment path alongside existing diffusion stacks; autoscaling and dynamic routing to single-step backends.

- Why now: One-pass reduces inference compute; paper indicates improved FID even at 1NFE.

- Dependencies/assumptions: Extra-deep models may increase single-pass compute; need cost/quality benchmarking per SKU.

- Sector: Safety/Compliance — Guidance-free fidelity and semantic matching audits

- Use case: Assess generative models’ ability to match data distributions without CFG (which can mask artifacts).

- What to build: AF-based “audit runs” to stress-test guidance-free outputs, calibrate risk and bias, and compare semantic vs pixel-distance matching.

- Why now: Paper reports AF outperforms flow matching without guidance; useful for unbiased capability assessment.

- Dependencies/assumptions: Diverse evaluation sets; FID alone is insufficient—add semantic and fairness metrics.

- Sector: Open-Source Tooling — Flow-based classifier guidance for any generator

- Use case: Add time-conditioned guidance to other one-step models or distillations without reimplementing diffusion solvers.

- What to build: A minimal library that trains time-conditioned classifiers on interpolated samples and exposes guidance via the paper’s loss.

- Why now: Paper shows that guidance at multiple flow timesteps outperforms single-timestep classification pressure.

- Dependencies/assumptions: Interpolation pipeline; labels or pseudo-labels; compute to train the classifier.

- Sector: Education — Rapid generation of class-conditional visual teaching materials

- Use case: Create balanced, class-labeled visual datasets for labs/demos.

- What to build: Classroom-friendly AF model checkpoints (B/2) and recipes to train time-conditioned classifiers in latent space.

- Why now: Low-latency and solid class conditioning are adequate for courseware and demos.

- Dependencies/assumptions: Labeled datasets; instructor-managed safety filters.

Long-Term Applications

These require additional research, scaling, or engineering (e.g., new modalities, stronger guidance, or safety frameworks).

- Sector: Text-to-Image/Video — One-pass or few-pass high-res generative media

- Vision: Extend AF to rich prompts using time-conditioned CLIP/LLM guidance along the flow; scale to pixel-space, high resolution, and video frames.

- Potential products: Real-time promptable content creation in design suites; live video stylization.

- Research needs: Robust guidance beyond class labels, scalable DiT backbones for pixels/video, curriculum for any-step stability without huge batch sizes.

- Dependencies/assumptions: Large high-quality datasets; new guidance heads (e.g., CLIP as a time-conditioned classifier); efficient memory/sharding.

- Sector: XR/Metaverse — Interactive scene generation and editing

- Vision: Use single-step AF for interactive AR/VR content generation/editing at low latency.

- Potential products: Creator tools that morph scenes or assets on the fly.

- Research needs: 3D-consistent representations; latency/memory optimization; safety alignment at interactive speeds.

- Sector: 3D/Graphics/Robotics — 3D asset and environment generation

- Vision: Apply AF in 3D latent spaces (e.g., triplanes, point clouds, NeRF latents) with transport-regularized adversarial objectives.

- Potential products: Procedural 3D asset generators for games/robots; fast sim asset synthesis for RL.

- Research needs: 3D interpolation schemes and OT costs; discriminators on 3D manifolds; evaluation metrics beyond FID.

- Sector: Healthcare — Realistic synthetic medical imaging

- Vision: Use AF’s stronger distribution matching to create realistic, label-faithful synthetic scans for augmentation, training, or privacy-aware sharing.

- Potential products: Synthetic cohort generation tools for limited-data modalities.

- Research needs: Privacy leakage audits for adversarial training; regulatory validation; medically meaningful guidance and evaluation beyond pixel FID.

- Dependencies/assumptions: Curated and licensed medical datasets; clinical partnerships; robust watermarking and traceability.

- Sector: Finance/Enterprise AI — Synthetic document/image data for OCR and QA

- Vision: Generate diverse, realistic synthetic forms, receipts, IDs for robustness testing.

- Potential products: “DocSynth” AF pipelines with layout-aware guidance heads.

- Research needs: Structured conditioning (layout, fields) as time-conditioned guidance; security reviews to avoid forging risks.

- Sector: On-Device Personalization — Private, user-adapted models

- Vision: Fine-tune compact AF models on-device to a user’s style or gallery, generating personalized outputs locally.

- Potential products: Private style models in camera/gallery apps.

- Research needs: Stable small-data adversarial fine-tuning; memory-efficient extra-deep variants; safety alignment without server checks.

- Dependencies/assumptions: Federated or on-device training capabilities; hardware acceleration.

- Sector: Foundation Model Training — “One-pass” extra-deep generators

- Vision: Scale extra-deep single-pass transformers as a new foundation for fast generative backends.

- Potential products: Serving stacks with single-pass high-fidelity generation at scale.

- Research needs: Memory-efficient depth scaling, residual/normalization schemes, distributed training, and new regularizers for ultra-deep one-pass models.

- Sector: Policy/Safety — Detection and provenance for AF-generated media

- Vision: Watermarking and detection that remain robust under adversarial training regimes producing sharper, guidance-free outputs.

- Potential products: Provenance SDKs tailored to AF artifacts; audits comparing AF vs CFG-based outputs.

- Research needs: Forensics that survive OT-regularized adversarial training; standardized evaluation beyond FID.

- Sector: Sustainability — Energy-efficient generative services

- Vision: Replace multi-step samplers with single-step AF where quality parity is met to reduce inference energy.

- Potential products: “Green Gen-AI” SKUs with SLOs on energy per image.

- Research needs: End-to-end LCA benchmarking across models/hardware; policy incentives and reporting standards.

- Sector: RL/Autonomy — Real-time visual data synthesis in training loops

- Vision: AF models synthesizing realistic observations on-the-fly for policy training, curriculum generation, or domain randomization.

- Potential products: Plug-in generators for simulators supporting time-conditioned guidance.

- Research needs: Tight sim integration; temporal consistency for sequential tasks; reward-aligned guidance.

- Sector: Tooling/Platforms — Turnkey AF Trainer

- Vision: A standardized library exposing AF’s key components: s/t sampling, w(s,t), OT scheduling, φ gradient normalization, EMA replacement, DA, and D reload.

- Potential products: Trainer/SDK for practitioners to transition from diffusion distillation to AF.

- Research needs: Auto-tuning of λ_ot and λ_gp across scales; curriculum for any-step training to reduce batch-size demands.

- Sector: Alignment & Control — Decoupled distribution matching and alignment

- Vision: Use AF’s separation of distribution matching (D) and optimal transport to design nuanced, modular alignment heads (safety, style, brand).

- Potential products: Multi-head time-conditioned guidance (safety + brand + content).

- Research needs: Head interaction and trade-off tooling; evaluation of unintended mode shaping; human-in-the-loop controls.

Notes on general assumptions and dependencies:

- Most results are in a VAE latent space with DiT backbones at 256px; pixel-space scaling and high-res video require further work.

- The OT loss must be scheduled (decayed) and balanced against adversarial gradients; gradient normalization (φ) is critical for stable tuning across sizes.

- Any-step training is more batch-size sensitive; designated few-step schedules are more efficient in practice.

- DA can inject inductive biases; the paper sometimes prefers D reload to avoid bias for no-guidance setups.

- FID is only one metric; deployers should add semantic, fairness, and safety evaluations, especially as AF improves guidance-free realism.

Collections

Sign up for free to add this paper to one or more collections.