Gaussians on Fire: High-Frequency Reconstruction of Flames (2511.22459v1)

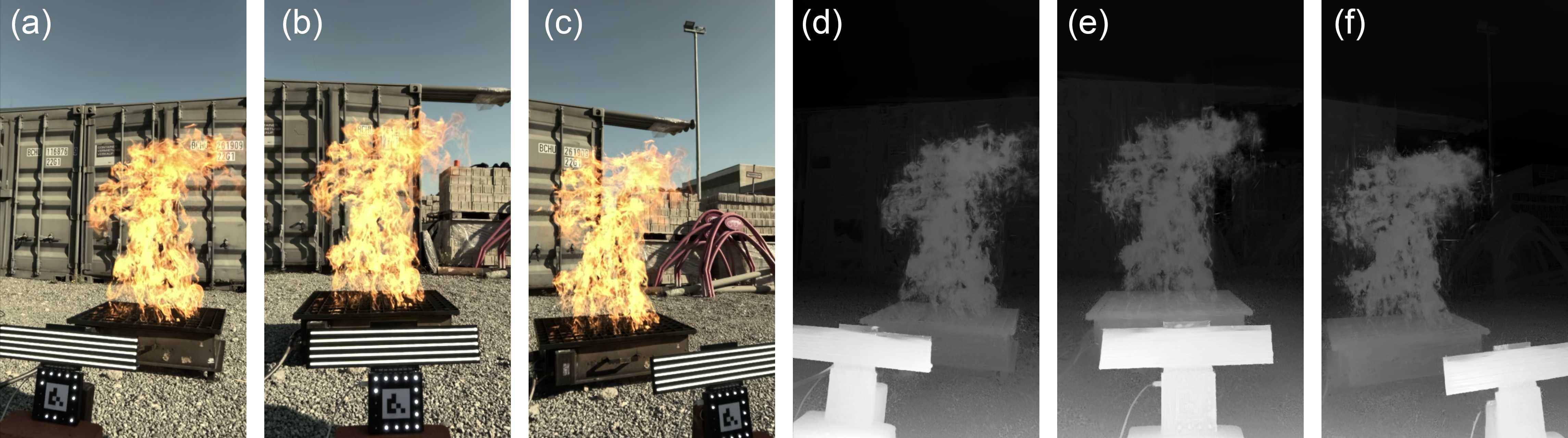

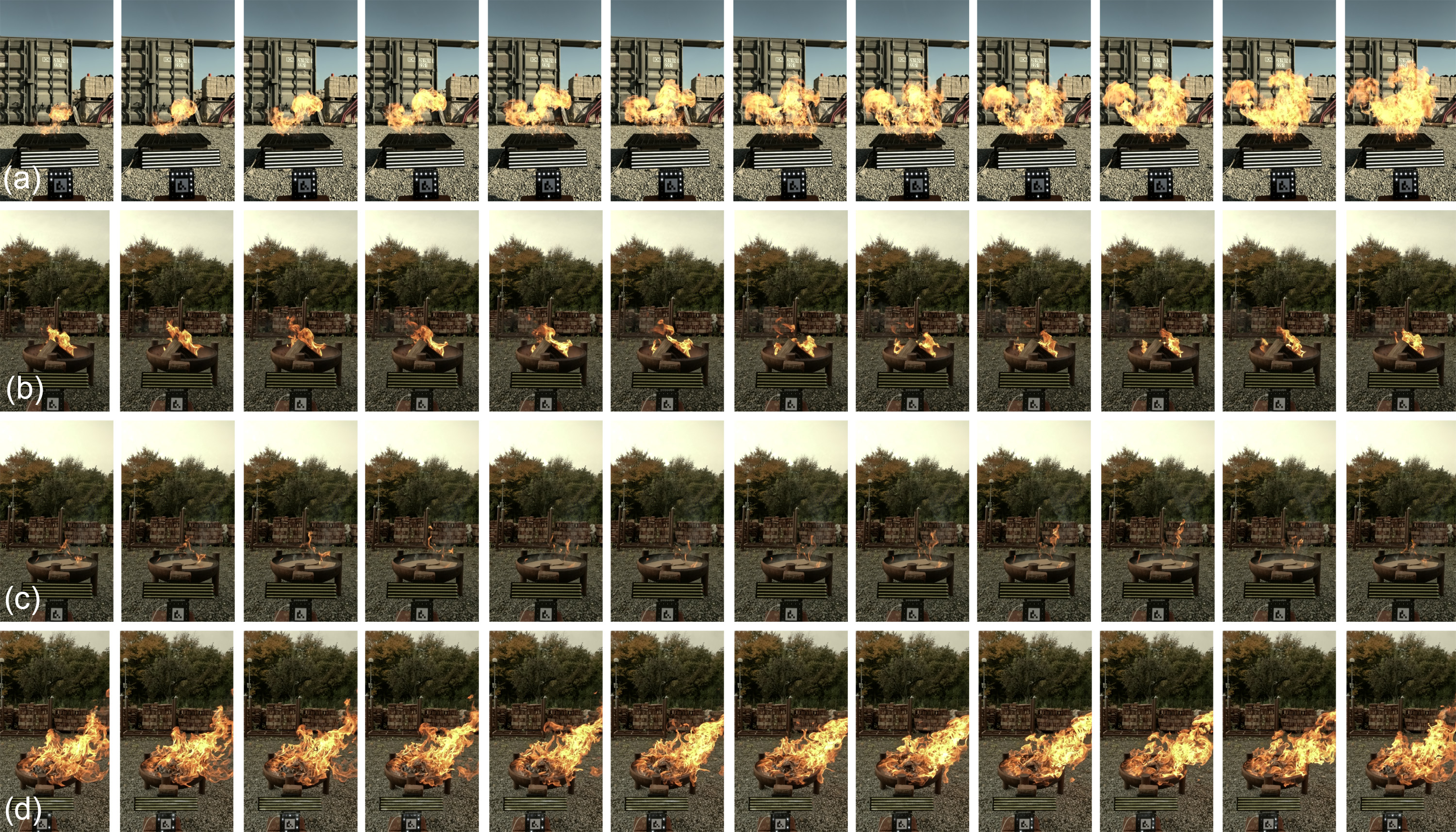

Abstract: We propose a method to reconstruct dynamic fire in 3D from a limited set of camera views with a Gaussian-based spatiotemporal representation. Capturing and reconstructing fire and its dynamics is highly challenging due to its volatile nature, transparent quality, and multitude of high-frequency features. Despite these challenges, we aim to reconstruct fire from only three views, which consequently requires solving for under-constrained geometry. We solve this by separating the static background from the dynamic fire region by combining dense multi-view stereo images with monocular depth priors. The fire is initialized as a 3D flow field, obtained by fusing per-view dense optical flow projections. To capture the high frequency features of fire, each 3D Gaussian encodes a lifetime and linear velocity to match the dense optical flow. To ensure sub-frame temporal alignment across cameras we employ a custom hardware synchronization pattern -- allowing us to reconstruct fire with affordable commodity hardware. Our quantitative and qualitative validations across numerous reconstruction experiments demonstrate robust performance for diverse and challenging real fire scenarios.

Explain it Like I'm 14

Simple explanation of “Gaussians on Fire: High-Frequency Reconstruction of Flames”

1. What is this paper about?

This paper shows how to capture and rebuild a moving flame in 3D using only three regular cameras. Fire is hard to record in 3D because it moves quickly, changes shape, is partly see-through, and gives off light. The authors use a clever way of representing fire as many tiny, soft “blobs” (called Gaussians) that glow and move over time, so you can view the fire from new angles and see its motion realistically.

2. What questions did the researchers ask?

They set out to answer:

- Can we reconstruct realistic, fast-moving fire in 3D using just a few cameras?

- How do we separate the steady background (like walls or ground) from the flickering, moving flames?

- Can we capture the flame’s fine details and motion over time so it looks right from different viewpoints?

- Is there a low-cost way to make sure all cameras are in sync (capturing at the same moment) for such quick motion?

3. How did they do it? (Methods explained simply)

First, imagine a flame as a cloud made of many tiny, soft, glowing dots that can appear, move, and fade. The team models the flame with these dots (“3D Gaussians”), each with:

- A start time

- How long it lives

- A direction and speed (its velocity)
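To make these three properties concrete, here is a minimal sketch of one such blob. It assumes a FreeTimeGS-style formulation in which the "start time" acts as the temporal center t0 of a Gaussian-shaped visibility curve and the lifetime is that curve's width. The class and field names are hypothetical, and appearance parameters (color, size, rotation) are left out.

```python
import math
from dataclasses import dataclass


@dataclass
class DynamicGaussian:
    """Hypothetical per-blob temporal state (shape, color and rotation omitted)."""
    center: tuple    # (x, y, z) position at the blob's reference time t0
    velocity: tuple  # (vx, vy, vz), constant linear velocity in scene units per second
    t0: float        # reference time in seconds (where the blob is most visible)
    lifetime: float  # temporal width s in seconds

    def position(self, t: float) -> tuple:
        # Linear motion: x(t) = x0 + v * (t - t0)
        dt = t - self.t0
        return tuple(c + v * dt for c, v in zip(self.center, self.velocity))

    def temporal_opacity(self, t: float) -> float:
        # Gaussian fade-in/fade-out: 1 at t0, exp(-0.5) at t0 +/- lifetime
        return math.exp(-0.5 * ((t - self.t0) / self.lifetime) ** 2)


FPS = 400.0             # capture rate mentioned on this page
FRAME_TIME = 1.0 / FPS  # 2.5 ms per frame
# Lifetime initialized to four frame times, following the heuristic noted later on this page.
g = DynamicGaussian(center=(0.0, 0.0, 0.0), velocity=(0.0, 1.5, 0.0),
                    t0=0.0, lifetime=4 * FRAME_TIME)
print(g.position(FRAME_TIME), g.temporal_opacity(FRAME_TIME))
```

One frame after its reference time, this blob has moved 1.5 × 2.5 ms upward and is still almost fully visible; ten lifetimes away it has effectively vanished, which is what lets many short-lived blobs represent fast flicker.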

Here’s the approach, step by step, using everyday language:

- Capture and Timing:

- They recorded fire with three GoPro cameras at very high speed (400 frames per second).

- Cameras that use “rolling shutter” scan the image line-by-line, which can distort fast motion. The team built a simple LED flashing system that appears in the videos so they can line up the timings of all cameras very precisely. Think of it like a blinking timing code that helps them sync everything to microsecond accuracy.

- Separate Background from Fire:

- Flames are bright compared to the background. By stacking frames over time and taking the darkest values, most of the flame disappears, leaving a good guess of the static scene (like removing the flicker to see the room behind it).

- With this “clean” background, they estimate where the cameras are and build a 3D map of the scene using multi-view stereo (software that figures out depth from multiple photos) and an AI that guesses depth from a single picture when stereo isn’t available. This stabilizes the background 3D model.

- Build a Solid Background:

- They train a standard 3D Gaussian model for the static scene. To avoid mistakes from only having three views, they gently guide the training using the AI depth map like a “soft ruler” that keeps the geometry sensible.

- Estimate Flame Motion:

- For the moving fire, they measure how pixels shift between consecutive frames in each camera. This is called “optical flow” — imagine tiny arrows showing where each pixel moves.

- They combine (fuse) these 2D motion arrows from all three cameras into a 3D motion field on a grid (like 3D Lego blocks). This gives a good initial guess for how the fire moves in space.

- Dynamic Gaussians:

- Each tiny Gaussian blob gets a start time, a lifetime, and a velocity based on the 3D motion field. The blob’s position over time is just “start point + velocity × time elapsed since its start time,” and its brightness fades in and out over its lifetime.

- Because the motion is initialized from real measurements, the blobs track the flow of the flames more accurately, even with only three cameras.

- Handle Rolling Shutter:

- Since the camera scans a frame row-by-row, fast-moving flames can look slightly bent. The team models this effect so the 3D reconstruction matches what the cameras actually saw, reducing warping.
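The rolling-shutter effect can be illustrated with a small sketch. It assumes a simple linear model in which row r of frame k is read out at t = offset + k/fps + r · row_time. The row time, speed, and image size below are illustrative values, not GoPro specifications, and the function names are hypothetical. A renderer that models this effect would evaluate the dynamic Gaussians at each row's own timestamp instead of at one time per frame.

```python
def row_timestamp(frame_idx: int, row: int, fps: float, row_time: float,
                  cam_offset: float = 0.0) -> float:
    """Capture time (s) of one sensor row under a linear rolling-shutter model.

    cam_offset: per-camera start offset, e.g. as recovered from an LED sync pattern.
    row_time:   readout delay between consecutive rows (a global shutter has row_time = 0).
    """
    return cam_offset + frame_idx / fps + row * row_time


def recorded_edge_columns(x0_px: float, speed_px_per_s: float, n_rows: int,
                          fps: float, row_time: float, frame_idx: int = 0) -> list:
    """Column at which a vertical edge moving horizontally is recorded in each row.

    Because every row samples the scene slightly later than the one above it,
    the edge is recorded as a slanted line instead of a vertical one.
    """
    return [x0_px + speed_px_per_s * row_timestamp(frame_idx, r, fps, row_time)
            for r in range(n_rows)]


# Hypothetical numbers: 1000 rows read out over ~2 ms, edge moving at 2000 px/s.
cols = recorded_edge_columns(x0_px=100.0, speed_px_per_s=2000.0, n_rows=1000,
                             fps=400.0, row_time=2e-6)
print(f"skew across the frame: {cols[-1] - cols[0]:.2f} px")
```

Setting row_time to zero recovers the global-shutter case, where every row shares one timestamp and the edge stays vertical.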

4. What did they find and why does it matter?

- Realistic Flames from Just Three Cameras:

- Their method reconstructs fire that looks lifelike from different angles, even capturing high-frequency details (small, fast changes) that are typical in real flames.

- It produces smooth motion over time — the fire doesn’t jump or smear oddly.

- Strong Results Compared to Other Methods:

- When compared to two known dynamic Gaussian methods, their approach recreated the moving fire more accurately and produced more believable 3D depth. In other words, it not only looks good but also has consistent geometry.

- Works on Different Fire Types:

- They tested propane jets, burning wood, cardboard, paper, and outdoor scenarios. The method works across these diverse flame styles.

- Affordable Setup:

- Using only three consumer cameras and a simple LED timing gadget makes it more practical for real-world use outside of controlled labs.

This matters because high-quality 3D fire capture often needs many cameras or complex physics simulations. Doing it with just three cameras makes it cheaper and easier to use, while still getting impressive results.

5. Why is this important? (Implications and impact)

- Film, Games, and AR/VR:

- Realistic 3D fire can be used in visual effects, game engines, and immersive experiences without huge camera rigs or expensive simulations.

- Training and Safety:

- Firefighters or safety trainers could use accurate 3D models to study how flames move and spread in different scenarios.

- Robotics and Navigation:

- Robots working near fire could analyze the flame’s motion field (the per-blob velocities) to make safer decisions and plan paths.

- Scientific Study:

- Researchers can explore how flames move and evolve from sparse, real-world captures, bridging the gap between lab conditions and outdoor environments.

Looking ahead, the team suggests mixing physics knowledge of combustion with their method, which could make the motion and appearance even more accurate. They also aim to push toward using fewer cameras — maybe even a single camera — by improving how they estimate motion in 3D directly.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a single, concise list of what remains missing, uncertain, or unexplored in the paper. Each point is phrased to be concrete and actionable for future research.

- The dynamic segmentation of “fire regions” used to separate static and dynamic components is not described or evaluated; the pipeline’s sensitivity to segmentation errors, thresholds, or misclassification (e.g., bright reflections, smoke) is unknown.

- The 3D flow fusion relies on dense per-view optical flow without uncertainty modeling; there is no weighting scheme for view-specific confidence, occlusion handling, or propagation of flow errors into the 3D velocity field.

- Only one optical flow method (MEMFOF) is used; robustness to different flow estimators, frame rates, noise levels, and motion regimes (e.g., large displacements, brightness changes due to emissive heating) is not studied.

- The 3D flow fusion uses a simple Tikhonov regularization and orthogonality constraints; the lack of physically informed priors (e.g., divergence, buoyancy-driven upward bias, turbulence spectra) may yield implausible motion in under-constrained regions.

- FreeTimeGS assumes per-Gaussian linear trajectories and Gaussian temporal lifetimes; acceleration, rotational dynamics, and non-linear advection typical of turbulent flames are not modeled or evaluated.

- The heuristic lifetime initialization (four times the frame time) and voxel-size-dependent random position offsets are not ablated for sensitivity; their impact on temporal coherence, moiré artifacts, and overfitting remains unclear.

- Rolling-shutter handling is approximated with a local linear delay model; there is no calibration/validation of per-camera readout profiles beyond row time, nor a joint optimization of rolling-shutter parameters and scene dynamics.

- The LED-based synchronization provides microsecond-level precision but has not been validated against ground-truth timing (e.g., using external timecode or calibrated strobes); the method’s portability across different sensors and exposure settings is untested.

- Background–foreground interactions are assumed negligible during dynamic optimization (static background fixed); the method does not account for time-varying illumination on the background (flicker), refractive distortions, or shadows cast by flames.

- The radiative transport of flames (emission, absorption, scattering, refraction) is not modeled; the current representation may reproduce appearance but lacks physically grounded light transport, limiting generalization and physical interpretability.

- Depth regularization uses linearly aligned monocular depth priors; failure modes under large stereo gaps, misaligned scales, monocular artifacts, or non-Lambertian surfaces are not analyzed.

- Quantitative evaluation uses image metrics (PSNR, SSIM, LPIPS) and depth RMSE vs aligned monocular predictions; there is no ground-truth 3D geometry or motion validation (e.g., LiDAR, Schlieren/PIV, multi-view tomography), leaving absolute accuracy unknown.

- Temporal consistency metrics (e.g., per-pixel trajectory stability, flicker indices, temporal PSNR/LPIPS) are not reported; the extent of temporal artifacts and motion coherence is therefore unquantified.

- Novel-time synthesis is claimed (arbitrary time steps) but not systematically evaluated for interpolation/extrapolation fidelity, especially under rapid dynamics and sparse temporal sampling.

- Generalization to fewer cameras (two or one) is not evaluated; the minimum capture requirements and failure thresholds as a function of baseline, triangulation geometry, and camera placement are unknown.

- The method assumes a fixed multi-view rig; handling of moving cameras, hand-held capture, or online camera pose drift is left unexplored.

- Dataset coverage is limited (17 scenes, 100-frame subsequences, specific fuels and backgrounds); performance on large-scale, windy, or smoke-heavy outdoor fires, and under different lighting conditions (daylight vs. low light), is not assessed.

- Sensitivity to camera settings (ISO, exposure, dynamic range at 400 Hz) and sensor noise on flow estimation and radiance reconstruction is not analyzed.

- Scalability and efficiency are not reported (training/inference time, memory footprint, number of Gaussians); practical limits for long sequences, larger volumes, or higher-resolution capture remain unclear.

- The approach does not exploit multi-spectral or thermal imaging; the potential benefits of spectral priors (e.g., temperature-dependent emission) for disentangling geometry from radiance are uninvestigated.

- The fusion of stereo and monocular depth for static initialization is purely geometric; jointly optimizing with photometric priors and per-pixel uncertainty (e.g., Bayesian fusion) is not explored.

- There is no mechanism to detect and correct physically implausible motion (e.g., non-causal trajectories, penetration of static geometry); the pipeline lacks consistency checks and constraints tying dynamic Gaussians to static scene boundaries.

- The impact of lens distortions and camera calibration inaccuracies on the dynamic 3D reconstruction (especially under rolling shutter) is not quantified; calibration robustness and error bounds are missing.

- The evaluation masks the synchronization pattern for metrics but does not assess how the pattern’s presence in training views affects optimization or learned radiance, nor strategies to eliminate it without masking.
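Several items above refer to monocular depth that is "linearly aligned" before it is used for regularization or as the RMSE_depth reference. A minimal sketch of such an alignment follows, assuming a per-image scale and shift fitted by ordinary least squares against the pixels that have a valid stereo depth. The page does not state the exact fitting procedure, the flattened lists stand in for depth maps, and all function names are hypothetical.

```python
import math


def fit_scale_shift(mono, stereo):
    """Least-squares scale a and shift b minimizing sum((a*m + b - s)^2).

    Only pixels with a valid (not None, positive) stereo depth participate,
    reflecting that stereo depth is sparse while the monocular map is dense.
    """
    pairs = [(m, s) for m, s in zip(mono, stereo) if s is not None and s > 0]
    if len(pairs) < 2:
        raise ValueError("need at least two valid stereo depths")
    n = len(pairs)
    mean_m = sum(m for m, _ in pairs) / n
    mean_s = sum(s for _, s in pairs) / n
    var_m = sum((m - mean_m) ** 2 for m, _ in pairs)
    if var_m == 0:
        raise ValueError("monocular depths are constant; scale is undefined")
    cov = sum((m - mean_m) * (s - mean_s) for m, s in pairs)
    a = cov / var_m
    b = mean_s - a * mean_m
    return a, b


def align(mono, a, b):
    """Apply the fitted affine map to every pixel, including those without stereo."""
    return [a * m + b for m in mono]


def rmse(pred, ref):
    """Root mean squared error between two equally sized depth lists."""
    return math.sqrt(sum((p - r) ** 2 for p, r in zip(pred, ref)) / len(pred))


mono = [1.0, 2.0, 3.0, 4.0]     # dense relative depth (flattened image)
stereo = [3.0, 5.0, None, 9.0]  # sparse MVS depth; None marks a hole
a, b = fit_scale_shift(mono, stereo)
prior = align(mono, a, b)       # dense prior, now in the stereo depth's scale
```

The hole at the third pixel is filled from the monocular prediction once the fit is known, which is the reason to combine the two sources; it also shows why failure modes matter, since any monocular artifact in a hole is passed through unchecked.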

Glossary

- 3D Gaussian Splatting (3DGS): An explicit, differentiable point-based scene representation using Gaussian kernels for real-time novel-view synthesis. "3D Gaussian Splatting (3DGS) \cite{Kerbl2023GaussianSplatting} introduced an explicit, differentiable representation based on anisotropic Gaussians that enables real-time, high-fidelity rendering of static scenes."

- 4D Gaussian Splatting: Extension of 3DGS that models spatiotemporal dynamics with time-varying Gaussian primitives for dynamic view synthesis. "GS4D and 4D Gaussian Splatting learn 4D Gaussian primitives for real-time photorealistic dynamic view synthesis~\cite{yang2023gs4d,Wu_2024_CVPR}"

- anisotropic Gaussians: Gaussian kernels with direction-dependent variances used to represent geometry and appearance. "based on anisotropic Gaussians"

- back-projection: Mapping 2D image measurements (e.g., flow or depth) back into 3D space using camera geometry. "are back-projected to 3D space"

- canonical-space deformations: Transformations that deform a canonical (reference) scene representation over time to model dynamics. "model continuous canonical-space deformations"

- COB LED strips: Chip-on-board LED light sources used as high-speed visual timing markers for synchronization. "by sequentially toggling a series of five COB LED strips."

- COLMAP: A popular SfM/MVS pipeline for camera calibration, pose estimation, and dense stereo reconstruction. "we first calibrate and register cameras using COLMAP~\cite{schoenberger2016vote}."

- DepthAnythingV2: A pretrained model providing monocular depth priors to regularize geometry from single images. "we predict monocular depth maps $D_{\text{mono}}$ using DepthAnythingV2~\cite{depth_anything_v2}."

- depth regularization: A loss term that constrains rendered depths toward external depth priors to stabilize optimization. "as well as the depth regularization term"

- differentiable rendering: Rendering formulations where gradients with respect to scene parameters can be computed for learning. "neural and differentiable rendering has explored increasingly expressive scene representations"

- DUSt3R: A learned method that regresses dense 3D point maps directly from pairs of uncalibrated images, used to recover camera poses and geometry of static scenes. "DUSt3R and MASt3R~\cite{dust3r_cvpr24,mast3r_eccv24}"

- Dynamic 3D Gaussians: A representation attaching per-frame rigid transforms to Gaussians to model object motion. "Dynamic 3D Gaussians attach per-frame rigid transforms to canonical Gaussians for tracking and view synthesis~\cite{Luiten2024Dynamic3DGaussians}"

- ESP32 microcontroller: A low-cost microcontroller used to drive LED-based synchronization patterns. "we use an ESP32 microcontroller to control the logic-level outputs of $21$ pins."

- FreeTimeGS: A dynamic Gaussian framework with per-primitive time, lifespan, and velocity parameters enabling free-time motion. "we chose a FreeTimeGS-based representation~\cite{Wang2025FreeTimeGS}"

- Gaussian splats: Projected Gaussian primitives used to render scenes via a splatting pipeline. "Gaussian splats capturing volumetric radiance and motion"

- Gaussian primitives: Individual Gaussian elements representing scene parts in point-based rendering. "Gaussian primitives"

- global shutter: Imaging model where the entire sensor captures an exposure simultaneously, avoiding rolling distortion. "Image rendered with a global-shutter model"

- Gray code: A binary numeral system where successive values differ in only one bit, used for robust frame indexing. "which represent the frame number in Gray code (Fig.~\ref{fig:setup}, right)"

- LPIPS: Learned Perceptual Image Patch Similarity, a perceptual metric for image quality. "we report PSNR, SSIM, and LPIPS."

- MASt3R: A method for grounding image matching in 3D, often used in geometry pipelines. "DUSt3R and MASt3R~\cite{dust3r_cvpr24,mast3r_eccv24}"

- MEMFOF: A high-resolution, memory-efficient multi-frame optical flow estimator. "estimated using MEMFOF~\cite{bargatin2025memfof}."

- monocular depth: Depth estimated from a single image used as a prior when stereo is unavailable. "complemented by aligned monocular depth predictions for regularization."

- multi-view stereo (MVS): Dense depth estimation from multiple calibrated views. "combining dense multi-view stereo images with monocular depth priors."

- Neural Radiance Fields (NeRF): Continuous volumetric scene representations for view synthesis learned from images. "Neural radiance fields (NeRFs) ~\cite{mildenhall2020nerf}"

- NRSfM (Non-Rigid Structure-from-Motion): Estimating 3D structure and motion for deformable objects from monocular sequences. "NRSfM priors"

- optical flow: Pixel-wise motion between consecutive frames used to infer dynamic 2D/3D motion fields. "dense optical flow"

- PatchMatchStereo: A fast, patch-based stereo algorithm used for dense depth initialization. "apply COLMAP's implementation of PatchMatchStereo~\cite{schoenberger2016vote,bleyer2011patchmatch}"

- point cloud: A set of 3D points representing scene geometry used for initialization or analysis. "point-clouds highlighting the spatial distribution and density of the Gaussian primitives over time"

- PSNR: Peak Signal-to-Noise Ratio, a reconstruction fidelity metric. "we report PSNR, SSIM, and LPIPS."

- PSNR_flame: PSNR computed specifically over image regions containing motion (flames). "PSNR\textsubscript{flame}"

- rasterization: Converting geometric primitives to pixels efficiently for rendering. "couples naturally with the efficient rasterization of Gaussians"

- RMSE_depth: Root Mean Squared Error between rendered and reference depth maps. "RMSE\textsubscript{depth}"

- rolling shutter: Row-by-row sensor readout causing spatiotemporal image distortions under motion. "the resulting images are prone to rolling-shutter distortion (Fig.~\ref{fig:rolling_shutter})."

- scene flow: 3D motion field of points in a scene derived from optical flow and depth. "self-supervised scene-flow estimation"

- singular value decomposition: A matrix factorization used to solve least-squares problems in motion estimation. "which is solved via singular value decomposition."

- SSIM: Structural Similarity Index, an image quality metric aligned with human perception. "the SSIM loss "

- SSIM_flame: SSIM computed over dynamic flame regions only. "SSIM\textsubscript{flame}"

- Structure-from-Motion (SfM): Joint estimation of camera poses and sparse geometry from image correspondences. "depth from structure-from-motion (SfM)"

- Tikhonov regularization: A stabilization technique adding a norm penalty to ill-posed optimization problems. "we apply a Tikhonov regularization with the regularization parameter"

- tomographic capture: Reconstructing volumetric phenomena (e.g., smoke/flames) from multiple projections. "has relied on tomographic capture"

- undistortion parameters: Camera model coefficients used to correct lens distortion in images. "Given the sensor specifications and the undistortion parameters"

- volumetric radiance: Light emission/transport modeled within a volume rather than only at surfaces. "capturing volumetric radiance and motion"

- voxel grid: 3D grid of volumetric cells used to aggregate and represent motion or density fields. "project all 2D flows onto a voxel grid."

- voxelization: Converting continuous data into a discrete voxel representation. "fused voxelized 3D flow field"
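Several glossary entries (back-projection, voxel grid, Tikhonov regularization, singular value decomposition) meet in the 3D flow-fusion step. The sketch below shows one generic way to fuse per-view 2D flows into a single 3D velocity for one voxel, under stated assumptions: each camera's flow at the voxel is treated as the linearized pinhole projection (a 2×3 Jacobian) of the voxel's 3D velocity, and the Tikhonov-regularized normal equations are solved directly rather than via SVD. It does not reproduce the paper's orthogonality constraints, its voxel bookkeeping, or its choice of regularization parameter, and all names and numbers are hypothetical.

```python
def pinhole_jacobian(R, X_cam, f):
    """2x3 Jacobian d(pixel)/d(world point) for a pinhole camera.

    R:     3x3 world-to-camera rotation (X_cam = R @ X_world + t)
    X_cam: the voxel center expressed in camera coordinates (z > 0)
    f:     focal length in pixels
    """
    x, y, z = X_cam
    J_cam = [[f / z, 0.0, -f * x / z**2],
             [0.0, f / z, -f * y / z**2]]
    # Chain rule: dX_cam/dX_world = R, so J_world = J_cam @ R
    return [[sum(J_cam[i][k] * R[k][j] for k in range(3)) for j in range(3)]
            for i in range(2)]


def solve3(M, rhs):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    A = [row[:] + [b] for row, b in zip(M, rhs)]
    for c in range(3):
        p = max(range(c, 3), key=lambda r: abs(A[r][c]))
        A[c], A[p] = A[p], A[c]
        for r in range(c + 1, 3):
            factor = A[r][c] / A[c][c]
            for k in range(c, 4):
                A[r][k] -= factor * A[c][k]
    x = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):
        x[r] = (A[r][3] - sum(A[r][k] * x[k] for k in range(r + 1, 3))) / A[r][r]
    return x


def fuse_velocity(jacobians, flows, lam):
    """Tikhonov-regularized least squares: argmin_v sum_i ||J_i v - u_i||^2 + lam ||v||^2.

    jacobians: one 2x3 Jacobian per camera that sees the voxel
    flows:     matching 2D optical-flow vectors (pixels per frame)
    Returns the 3D velocity (scene units per frame) from (A^T A + lam I) v = A^T u.
    """
    M = [[lam if i == j else 0.0 for j in range(3)] for i in range(3)]
    rhs = [0.0, 0.0, 0.0]
    for J, u in zip(jacobians, flows):
        for r in range(2):  # each camera contributes two scalar equations
            for i in range(3):
                rhs[i] += J[r][i] * u[r]
                for j in range(3):
                    M[i][j] += J[r][i] * J[r][j]
    return solve3(M, rhs)


# Example: two views of a voxel 5 units in front of each camera, 90 degrees apart.
f = 500.0
X_cam = (0.0, 0.0, 5.0)
R_front = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
R_side = [[0.0, 0.0, 1.0], [0.0, 1.0, 0.0], [-1.0, 0.0, 0.0]]  # 90 deg about y
v_true = (1.0, 2.0, 3.0)
Js = [pinhole_jacobian(R, X_cam, f) for R in (R_front, R_side)]
flows = [[sum(J[r][i] * v_true[i] for i in range(3)) for r in range(2)] for J in Js]
v_two = fuse_velocity(Js, flows, lam=1e-9)          # close to v_true
v_one = fuse_velocity(Js[:1], flows[:1], lam=1e-3)  # depth component unobservable -> 0
```

With two roughly orthogonal views the true velocity is recovered; with a single view, motion along that camera's viewing direction produces no flow, so the regularizer alone sets it to zero. This is a small-scale illustration of why under-constrained regions need priors beyond a plain norm penalty.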

Practical Applications

Immediate Applications

Below are actionable use cases that can be deployed now with the paper’s pipeline (three synchronized commodity cameras, COLMAP + PatchMatchStereo, MEMFOF optical flow, monocular depth priors, FreeTimeGS-based dynamic Gaussians, and the low-cost ESP32 LED synchronization). Each item notes the sector, potential tools/products/workflows, and key dependencies or assumptions.

- Media and entertainment (VFX, virtual production)

- Use case: Capture high-fidelity, free-viewpoint flame assets on set with only three cameras; render in real time for previz or integrate into post-production.

- Tools/products/workflows: “FireGS Capture Kit” (ESP32 LED sync board + calibration and preprocessing scripts), a 3DGS/FreeTimeGS-to-DCC importer (Blender/Maya), and Unreal/Unity rasterization bridges for Gaussian splats.

- Dependencies/assumptions: Controlled shoot space; flames brighter than background (to enable minimum-intensity projection); accurate camera calibration and LED-based sub-frame synchronization; artists comfortable with Gaussian assets rather than voxel grids.

- Industrial combustion R&D (energy, manufacturing)

- Use case: Quantify and visualize burner and furnace flame dynamics from sparse views for design tuning, stability assessment, and CFD validation.

- Tools/products/workflows: “Flow-initialized Gaussian Viewer” with velocity overlays; export velocity fields to CFD packages; batch capture protocol for lab rigs.

- Dependencies/assumptions: Stable multi-view mounting around hot zones; consistent lighting; linear velocity parameterization is sufficient for short windows; safety procedures for high-temperature environments.

- Safety training and operations (public safety, education)

- Use case: Build AR/VR training modules from controlled burns with free-viewpoint playback and motion trajectories to illustrate flashover, plume rise, and fuel-dependent behavior.

- Tools/products/workflows: Instructor toolkit that ingests three-camera training burn videos, outputs interactive sequences; headset-ready Gaussian renderers.

- Dependencies/assumptions: Controlled burns with multi-view capture; content aligned to curricula; headset or classroom displays; acceptable latency for playback (offline reconstruction, real-time viewing).

- Robotics perception benchmarking (robotics)

- Use case: Generate realistic datasets and evaluation scenes for hazard-aware navigation and perception near flames using explicit per-Gaussian velocity fields and emissive rendering.

- Tools/products/workflows: Dataset export with camera poses, dynamic Gaussians, and derived scene flow; training pipelines that mix reconstructed real-fire sequences with synthetic scenes.

- Dependencies/assumptions: Perception models that can be trained on emissive/transparent phenomena; generalization from lab flames to real deployments; acceptance of three-view reconstructions as a labeling reference.

- Incident analysis and claims support (forensics, insurance/finance)

- Use case: Reconstruct flame evolution from multi-view footage to assess ignition points, growth rate, and visibility constraints, informing post-incident analysis and claims.

- Tools/products/workflows: Analyst console for timeline scrubbing and velocity field inspection; report generation with snapshots and derived metrics.

- Dependencies/assumptions: Availability of multiple synchronized or synchronizable views; tolerance for reconstruction uncertainty in emissive regions; method validity documented for evidentiary contexts.

- Academic research in dynamic 3D reconstruction (academia/software)

- Use case: Use the released dataset and pipeline to benchmark dynamic emissive phenomena, test flow fusion strategies, and study rolling-shutter modeling in differentiable rendering.

- Tools/products/workflows: Reproducible notebooks, ablation harnesses, and integration with COLMAP, DepthAnythingV2, and MEMFOF; comparative baselines (4DGS variants).

- Dependencies/assumptions: Code and data availability; compute resources for optimization; adoption of the ESP32 sync approach or similar.

- Educational demonstrations (education/daily life)

- Use case: Interactive classroom demonstrations of flame dynamics and radiative effects with free-viewpoint playback from small controlled captures (e.g., Bunsen burners).

- Tools/products/workflows: Simple lab capture protocol, pre-built viewers for laptops/tablets.

- Dependencies/assumptions: Safe lab setups; small-scale flames; minimal smoke; students can access viewers.

- Hardware and workflow standardization (software/hardware)

- Use case: Adopt the microcontroller-driven LED Gray-code timecode and rolling-shutter characterization as a low-cost synchronization standard for multi-camera capture of fast phenomena.

- Tools/products/workflows: Open-source firmware and CAD for LED pattern rigs; calibration routines that recover row time and per-camera offsets; documentation for consumer CMOS sensors.

- Dependencies/assumptions: Visibility of LED patterns in each camera; rolling shutter prominent enough to measure; basic microcontroller assembly skills.
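The LED Gray-code timecode mentioned above can be illustrated with a short sketch of the encoding itself. The number of LEDs (bits) and the most-significant-bit-first ordering are illustrative assumptions, not the paper's firmware layout, and the function names are hypothetical. The property that matters for synchronization is that consecutive frame numbers differ in exactly one LED, so an image that catches a transition mid-readout decodes to one of two adjacent frame numbers rather than to an arbitrary value.

```python
def int_to_gray(n: int) -> int:
    """Binary-reflected Gray code of a non-negative integer."""
    return n ^ (n >> 1)


def gray_to_int(g: int) -> int:
    """Inverse of int_to_gray: XOR together all right shifts of g."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def led_pattern(frame: int, n_leds: int) -> list:
    """On/off state of each LED (most significant bit first) for a frame index.

    The counter wraps around every 2**n_leds frames.
    """
    g = int_to_gray(frame % (1 << n_leds))
    return [(g >> (n_leds - 1 - i)) & 1 for i in range(n_leds)]


def decode_pattern(bits: list) -> int:
    """Recover the (wrapped) frame index from the LED states seen in an image."""
    g = 0
    for b in bits:
        g = (g << 1) | b
    return gray_to_int(g)


# Illustrative 6-LED counter for the first few frames.
for frame in range(4):
    print(frame, led_pattern(frame, 6))
```

With a plain binary counter, the step from 7 (0111) to 8 (1000) flips four LEDs at once, and a partially read transition could decode to any of sixteen values; the Gray code removes that failure mode.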

Long-Term Applications

These use cases require further research, scaling, robustness improvements, or additional integration (e.g., physics priors, monocular operation, outdoor robustness, real-time inference).

- Wildfire front modeling and monitoring (public safety, energy/environment)

- Use case: Drone or mast-mounted sparse camera rigs reconstruct flame fronts and plume dynamics to inform incident command on spread, intensity, and safe ingress routes.

- Tools/products/workflows: Field-hardened multi-camera payloads with synchronized capture; pipeline adapted for smoke-rich, high-turbulence outdoor scenes; telemetry and georegistration tools.

- Dependencies/assumptions: Robustness to smoke, wind, extreme lighting and occlusions; scalable synchronization without LED rigs; physics-informed motion beyond linear velocities.

- Real-time firefighter AR overlays (public safety/robotics)

- Use case: On-scene dynamic reconstruction streamed to AR headsets to visualize hidden or predicted flame propagation and radiative hazards in low visibility.

- Tools/products/workflows: Edge compute modules performing fast dynamic Gaussian updates; headset integration with latency constraints; thermal imaging fusion.

- Dependencies/assumptions: Real-time or near-real-time optimization; reliable tracking in chaotic scenes; safety-certified wearables; network bandwidth and resilience.

- Autonomous firefighting robot/drone perception (robotics)

- Use case: Use Gaussian velocity fields and emissive modeling to guide path planning around flame plumes, dynamic obstacles, and heat sources with minimal sensor suites.

- Tools/products/workflows: Perception stacks that ingest dynamic reconstructions; planners that incorporate uncertainty from emissive/translucent media; simulation-to-real transfer using reconstructed datasets.

- Dependencies/assumptions: Monocular or stereo-only operation maturation; robustness to smoke/soot; integration with thermal and gas sensors; policy and safety approvals for autonomous operation.

- Predictive combustion analytics and digital twins (energy/manufacturing)

- Use case: Couple reconstructed velocities with physics priors to forecast flame behavior, optimize burners, and run “what-if” safety scenarios in plant digital twins.

- Tools/products/workflows: “FireTwin” modules bridging Gaussian reconstructions and CFD solvers; parameter estimation for combustion models; predictive dashboards.

- Dependencies/assumptions: Hybrid data-driven + physics modeling; standardized sensor/camera placements; validation against ground-truth instrumentation.

- Insurance underwriting and risk modeling (finance/policy)

- Use case: Derive quantitative risk features (flame growth rates, plume reach, visibility impairments) from reconstructed historical incidents to refine premiums and mitigation strategies.

- Tools/products/workflows: Analytics pipelines that translate reconstruction outputs into risk metrics; integration with claims systems; audit trails for methodological transparency.

- Dependencies/assumptions: Curated incident datasets; agreed-upon validation standards; regulatory acceptance of reconstruction-based evidence.

- Urban and building safety analytics (smart infrastructure/policy)

- Use case: Integrate sparse camera networks in public spaces to reconstruct and localize flame or smoke events for early warning and egress planning.

- Tools/products/workflows: City-scale camera calibration and synchronization services; on-prem inference with event triggers; GIS integration for evacuation modeling.

- Dependencies/assumptions: Privacy-preserving capture policies; reliable multi-view coverage; false-positive mitigation; maintenance of calibration over time.

- Generalized dynamic volumetric capture beyond flames (software/academia)

- Use case: Extend to smoke, steam, fireworks, plasma arcs, and other emissive/translucent phenomena with minimal camera count for scientific visualization and media production.

- Tools/products/workflows: Broader motion models (nonlinear/turbulent), scattering-aware radiance modeling, learned 3D flow estimators.

- Dependencies/assumptions: Advanced appearance models beyond emissive-only; domain-specific priors; training data with ground truth where possible.

- Consumer-grade creative tools (daily life/media)

- Use case: One- or two-phone capture workflows that approximate dynamic flame reconstructions for hobbyist filmmaking and AR art.

- Tools/products/workflows: Smartphone apps that guide capture, estimate depth/flow, and produce simplified dynamic Gaussian assets; cloud-based processing.

- Dependencies/assumptions: Progress on monocular dynamic reconstruction; relaxation of synchronization requirements; user-friendly UX and safety guidance.

- Standards for fast-phenomena multi-camera capture (policy/industry consortia)

- Use case: Establish best-practice guidelines and synchronization standards (e.g., LED Gray-code patterns, row-time characterization) for capturing fast, emissive events safely and reproducibly.

- Tools/products/workflows: Reference designs, certification processes, benchmark datasets, and documentation shared across agencies and studios.

- Dependencies/assumptions: Cross-industry collaboration; alignment on safety and data governance; updates for new sensor generations.

Notes on Core Assumptions and Dependencies

- Minimum hardware: three calibrated cameras with sub-frame synchronization; the LED Gray-code rig and rolling-shutter timing characterization are critical to high-frequency alignment.

- Scene characteristics: brighter flames than background aid in minimum-intensity projection for static-background estimation; heavy smoke or complex background interaction may reduce accuracy.

- Modeling limits: FreeTimeGS linear velocity per Gaussian suits short time windows; turbulent or large-scale fires likely require nonlinear motion or physics priors.

- Algorithms: Reliance on COLMAP/PatchMatchStereo, MEMFOF optical flow, and monocular depth priors (DepthAnythingV2/DepthPro) introduces dependencies on their accuracy and operating ranges.

- Safety and compliance: Any real-fire capture demands strict safety protocols; outdoor/wildfire deployments introduce regulatory and operational constraints.

- Performance profile: Current pipeline targets offline reconstruction with real-time rendering; moving to real-time reconstruction requires algorithmic and systems engineering advances.
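The minimum-intensity projection named in these notes can be sketched in a few lines. The sketch assumes grayscale frames from a static camera stored as nested lists, flames brighter than the background, and that every background pixel is flame-free in at least one frame. The brightness-threshold mask is a hypothetical stand-in, since the page does not describe how fire regions are segmented.

```python
def min_intensity_projection(frames):
    """Per-pixel minimum over a stack of grayscale frames (each a list of rows)."""
    h, w = len(frames[0]), len(frames[0][0])
    return [[min(f[y][x] for f in frames) for x in range(w)] for y in range(h)]


def bright_mask(frame, background, threshold):
    """Hypothetical fire mask: 1 where a pixel exceeds the background by > threshold."""
    return [[1 if p - b > threshold else 0 for p, b in zip(row, bg_row)]
            for row, bg_row in zip(frame, background)]


# Tiny 2x2 example: a bright flame pixel wanders across three frames.
frames = [[[10, 200], [12, 11]],
          [[250, 20], [12, 11]],
          [[10, 21], [240, 11]]]
background = min_intensity_projection(frames)
mask0 = bright_mask(frames[0], background, threshold=30)
```

The same example shows the failure mode listed above: a pixel covered by flame in every frame of the stack would keep a flame value in the background estimate.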
