- The paper shows that decoder-induced distortions in the latent space lead to non-uniform memorization and increase vulnerability to inversion attacks in latent diffusion models (LDMs).

- It quantifies distortion with a Riemannian pullback metric and singular value decomposition, analyzing datasets including CelebA, CIFAR-10, and ImageNet-1K.

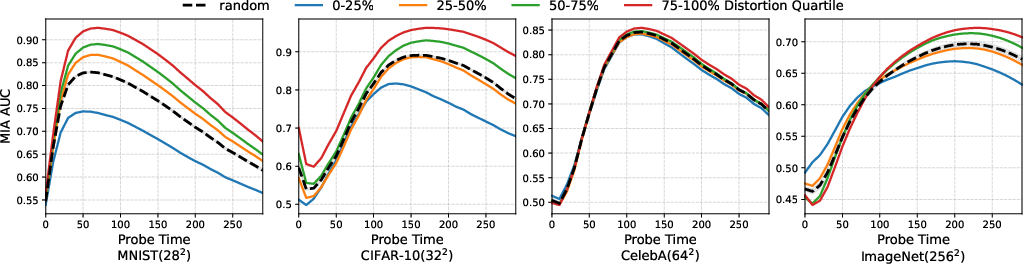

- Per-dimension Jacobian analysis identifies less influential latent dimensions; removing them improves the precision of membership inference attacks.

Latent Diffusion Inversion Requires Understanding the Latent Space

Introduction

The paper "Latent Diffusion Inversion Requires Understanding the Latent Space" (2511.20592) investigates an overlooked interplay between autoencoder geometry and memorization vulnerabilities in Latent Diffusion Models (LDMs). Previous work on model inversion focused predominantly on diffusion models that operate directly in the data domain, leaving aside models whose latent space is defined by an encoder/decoder pair. The authors identify the factors that make memorization of latent codes spatially non-uniform and analyze how individual latent dimensions contribute to memorization leakage.

Understanding Latent Space Memorization

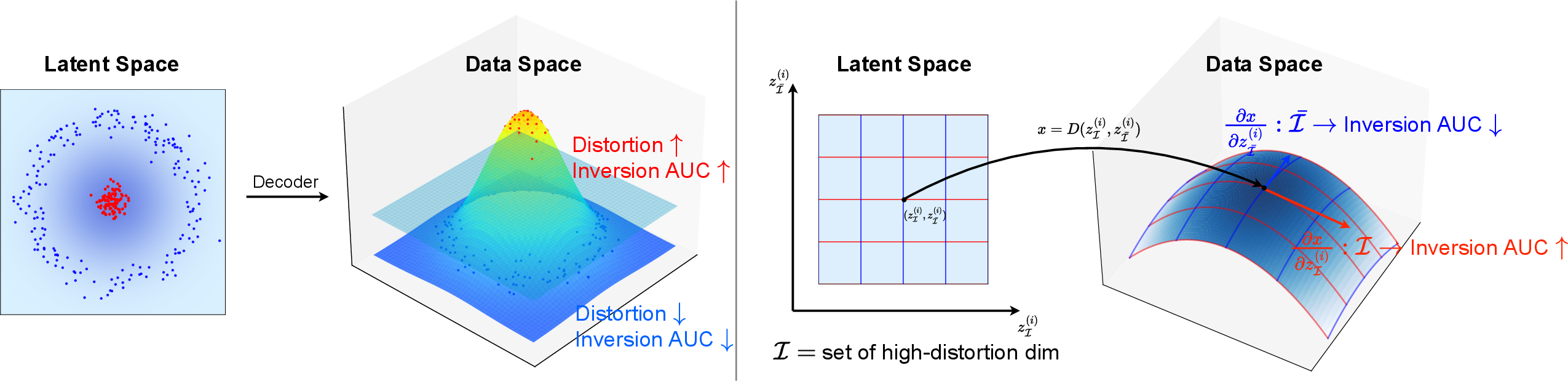

Latent diffusion models use Variational Autoencoders (VAEs) to encode data into a latent space, where the diffusion process is trained and new samples are generated. The study finds that memorization is non-uniform across this space because of local distortions in the latent representation, which depend on the decoder's geometric properties. Samples whose latent codes fall in high-distortion regions are more susceptible to membership inference attacks, because the model tends to overfit, and thus memorize, them more strongly.
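Membership inference against diffusion models is often scored with the denoising loss: a training member tends to be denoised with lower noise-prediction error. The sketch below applies that idea to a latent code `z0`. It is a common baseline assumed here for illustration, not necessarily one of the attacks evaluated in the paper; `eps_model` is a hypothetical noise-prediction network and `alpha_bar_t` is the cumulative noise-schedule value at timestep `t` (DDPM convention).

```python
import numpy as np

def forward_noise(z0, eps, alpha_bar_t):
    """DDPM forward process: z_t = sqrt(a) * z0 + sqrt(1 - a) * eps."""
    return np.sqrt(alpha_bar_t) * z0 + np.sqrt(1.0 - alpha_bar_t) * eps

def membership_score(z0, eps_model, alpha_bar_t, t, n_draws=8, seed=0):
    """Negative mean noise-prediction error at timestep t.

    Higher scores suggest z0 was a training member. eps_model(z_t, t) is a
    hypothetical noise-prediction network; several noise draws reduce variance.
    """
    rng = np.random.default_rng(seed)
    errors = []
    for _ in range(n_draws):
        eps = rng.standard_normal(z0.shape)
        z_t = forward_noise(z0, eps, alpha_bar_t)
        errors.append(np.mean((eps_model(z_t, t) - eps) ** 2))
    return -float(np.mean(errors))

# Toy check with a hypothetical "oracle" that has memorized one latent z_mem:
# it predicts the noise exactly for z_mem and is biased for any other z0.
a = 0.5
z_mem = np.ones(4)

def oracle(z_t, t):
    return (z_t - np.sqrt(a) * z_mem) / np.sqrt(1.0 - a)

print(membership_score(z_mem, oracle, a, t=10))        # close to 0
print(membership_score(np.zeros(4), oracle, a, t=10))  # about -1.0
```

In an LDM, `z0` would be the VAE encoding of the candidate image; thresholding the score, or ranking candidates by it, gives the membership decision.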

Figure 1: Points mapped to high-distortion regions of the latent space (red) are vulnerable to inversion and exhibit stronger memorization compared to those in low-distortion regions (blue).

Evaluating Decoder-Induced Distortions

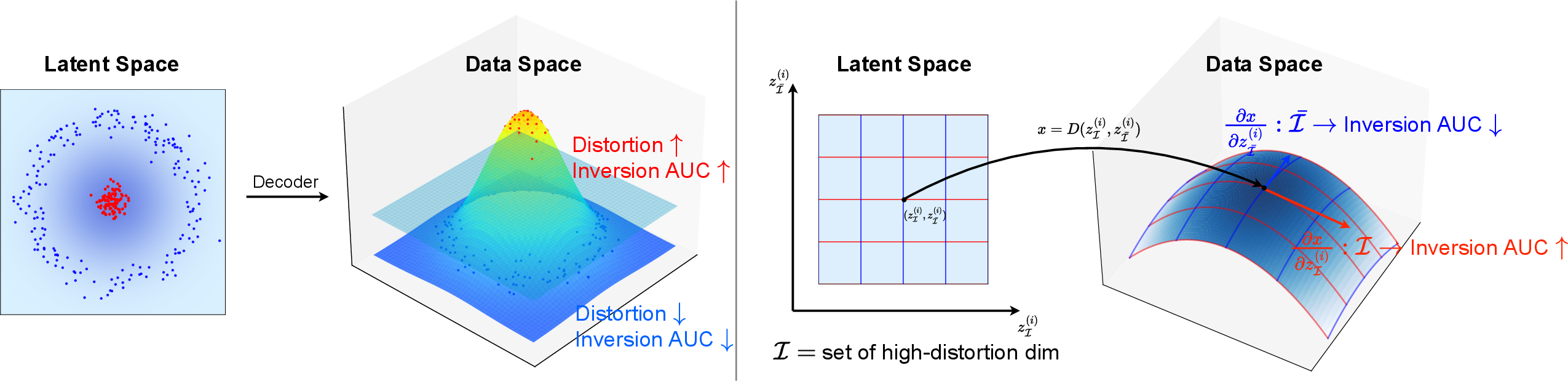

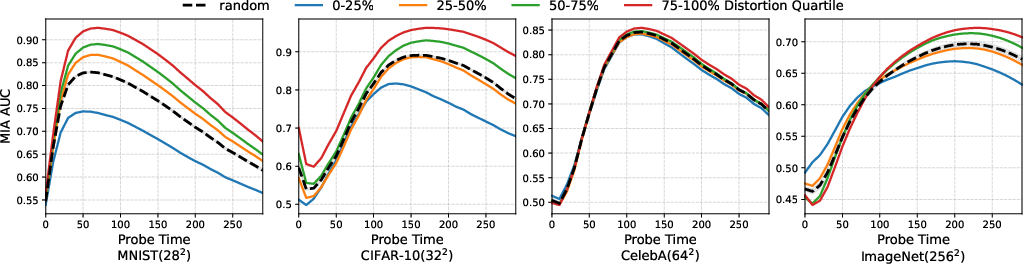

To explain how memorization strength varies across latent regions, the authors measure local geometric distortion with the pullback metric that the decoder induces on the latent space, a construction from Riemannian geometry. High decoder distortion correlates strongly with increased vulnerability to model inversion. For high-dimensional models, where forming the full decoder Jacobian is expensive, distortion is estimated with approximate singular value decomposition, highlighting the regions most prone to attack.
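For reference, the standard definition of the decoder pullback metric and two common scalar summaries of local distortion are given below, for a decoder $D:\mathbb{R}^d \to \mathbb{R}^n$. Which scalar summary the paper uses is not stated in this section; the log-volume and condition-number forms are illustrative assumptions.

$$
J(z) = \frac{\partial D(z)}{\partial z} \in \mathbb{R}^{n \times d}, \qquad G(z) = J(z)^\top J(z)
$$

$$
\lVert D(z+\delta) - D(z) \rVert_2^2 \approx \delta^\top G(z)\,\delta
$$

$$
J(z) = U \Sigma V^\top \;\Rightarrow\; G(z) = V \Sigma^2 V^\top, \qquad \sigma_1 \ge \dots \ge \sigma_d \ge 0
$$

$$
\tfrac{1}{2}\log\det G(z) = \sum_{i=1}^{d} \log \sigma_i, \qquad \kappa(z) = \frac{\sigma_1}{\sigma_d}
$$

The second line is the first-order Taylor expansion $D(z+\delta) \approx D(z) + J(z)\,\delta$. Large singular values mean that small latent moves produce large changes in the decoded output, and the SVD resolves this direction by direction; the log-determinant form requires $J(z)$ to have full column rank.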

Figure 2: Decoder-induced local distortion distribution across CelebA, CIFAR-10, and ImageNet-1K. CelebA displays nearly uniform distortion, while CIFAR-10 and ImageNet-1K show significant variation.
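The distortion estimate described above can be sketched on a toy decoder. The code assumes a hypothetical decoder `tanh(W z + b)` with a closed-form Jacobian and uses the log-volume change (sum of log singular values) as the distortion score; both are illustrative choices, not the paper's. `top_singular_values` shows the approximate route: randomized subspace iteration that touches the Jacobian only through Jacobian-vector and vector-Jacobian products, so the full matrix never has to be formed.

```python
import numpy as np

# Hypothetical toy decoder D(z) = tanh(W z + b) standing in for a VAE decoder.
rng = np.random.default_rng(0)
d, n = 8, 64  # latent and output dimensions (assumed)
W = rng.standard_normal((n, d))
b = rng.standard_normal(n)

def decoder_jacobian(z):
    """Closed-form Jacobian of tanh(W z + b): diag(1 - tanh^2) @ W, shape (n, d)."""
    h = np.tanh(W @ z + b)
    return (1.0 - h**2)[:, None] * W

def log_volume_distortion(z):
    """0.5 * log det(J^T J), computed as the sum of log singular values of J."""
    s = np.linalg.svd(decoder_jacobian(z), compute_uv=False)
    return float(np.sum(np.log(s)))

def top_singular_values(jvp, vjp, d, k, n_iter=20, seed=0):
    """Approximate the top-k singular values of J from products J @ v and J.T @ u.

    Randomized subspace iteration: Q converges toward the top-k right singular
    subspace, and the singular values of J @ Q then approximate sigma_1..sigma_k.
    """
    rng = np.random.default_rng(seed)
    Q = np.linalg.qr(rng.standard_normal((d, k)))[0]  # (d, k), orthonormal columns
    for _ in range(n_iter):
        Y = np.stack([jvp(q) for q in Q.T], axis=1)   # J Q, shape (n, k)
        Z = np.stack([vjp(y) for y in Y.T], axis=1)   # J^T J Q, shape (d, k)
        Q = np.linalg.qr(Z)[0]                        # re-orthonormalize
    Y = np.stack([jvp(q) for q in Q.T], axis=1)
    return np.linalg.svd(Y, compute_uv=False)

# Usage on one latent point.
z = rng.standard_normal(d)
J = decoder_jacobian(z)
print(log_volume_distortion(z))
print(top_singular_values(lambda v: J @ v, lambda u: J.T @ u, d=d, k=3))
```

For a real VAE decoder, the products would come from automatic differentiation (for example, the `jvp` and `vjp` functions in PyTorch's `torch.func` module) rather than an explicit matrix. With `k` equal to the latent dimension the routine recovers all singular values; a smaller `k` trades accuracy for cost.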

Per-Dimension Influence on Memorization

Beyond regional disparity, latent dimensions within a single code contribute unequally to memorization. The authors measure each dimension's influence by the magnitude of the decoder Jacobian with respect to that dimension, that is, by how much the dimension contributes to the decoder's distortion. Discarding the less influential dimensions before computing attack scores improves the precision of existing membership inference attacks, as reflected in higher AUROC and TPR@FPR (true-positive rate at a fixed false-positive rate).
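Per-dimension influence can be sketched as column norms of the decoder Jacobian: to first order, column `i` is how far the decoded output moves per unit change in latent dimension `i`. The keep-the-top-fraction rule and the masked squared error below are hypothetical illustrations of discarding less influential dimensions; the paper's exact aggregation and threshold are not given here.

```python
import numpy as np

def per_dimension_influence(J):
    """Column norms of a decoder Jacobian J of shape (n, d).

    ||J[:, i]|| is, to first order, how much a unit change in latent
    dimension i moves the decoded output.
    """
    return np.linalg.norm(J, axis=0)

def keep_mask(influence, keep_frac=0.5):
    """Boolean mask keeping the most influential dimensions (hypothetical rule)."""
    k = max(1, int(round(keep_frac * influence.size)))
    top = np.argsort(influence)[::-1][:k]  # indices sorted by descending influence
    mask = np.zeros(influence.size, dtype=bool)
    mask[top] = True
    return mask

def filtered_error(eps_hat, eps, mask):
    """Squared noise-prediction error restricted to the kept latent dimensions."""
    return float(np.mean((eps_hat[mask] - eps[mask]) ** 2))

# Usage with a tiny hand-written Jacobian: dimension 2 barely affects the output.
J = np.array([[3.0, 0.0, 0.1],
              [4.0, 1.0, 0.0]])
influence = per_dimension_influence(J)    # [5.0, 1.0, 0.1]
mask = keep_mask(influence, keep_frac=2 / 3)
print(mask)                               # [ True  True False]
print(filtered_error(np.array([1.0, 2.0, 9.0]), np.array([1.0, 0.0, 0.0]), mask))  # 2.0
```

The masked error can stand in for a full-latent denoising error inside a loss-based membership score. In practice the Jacobian would be estimated per sample or averaged over samples, which is a further assumption.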

Figure 3: Membership inference AUC stratified by quartiles of the decoder's local distortion. Higher-distortion quartiles consistently yield higher attack AUCs.
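The stratified evaluation in Figure 3 can be outlined as follows: bin samples into quartiles of a per-sample distortion score, then compute AUROC within each bin. The sketch assumes attack scores where higher means "member" and computes AUROC through the Mann-Whitney statistic; `tpr_at_fpr` covers the other metric named in the text, TPR at a fixed false-positive rate. All function names are illustrative.

```python
import numpy as np

def auroc(scores, labels):
    """AUROC via the Mann-Whitney U statistic (ties count 1/2)."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    pos, neg = scores[labels], scores[~labels]
    if pos.size == 0 or neg.size == 0:
        return float("nan")
    greater = (pos[:, None] > neg[None, :]).sum()  # pairwise comparisons
    ties = (pos[:, None] == neg[None, :]).sum()
    return float((greater + 0.5 * ties) / (pos.size * neg.size))

def tpr_at_fpr(scores, labels, max_fpr=0.01):
    """Highest TPR over thresholds whose FPR does not exceed max_fpr."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    best = 0.0
    for thr in np.unique(scores):
        pred = scores >= thr
        if pred[~labels].mean() <= max_fpr:
            best = max(best, pred[labels].mean())
    return float(best)

def auc_by_distortion_quartile(scores, labels, distortion):
    """Split samples into distortion quartiles and compute AUROC in each."""
    distortion = np.asarray(distortion, dtype=float)
    edges = np.quantile(distortion, [0.25, 0.5, 0.75])
    bins = np.digitize(distortion, edges)  # 0..3, low to high distortion
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    return [auroc(scores[bins == q], labels[bins == q]) for q in range(4)]

# Usage on toy data: eight samples, two per quartile.
distortion = np.arange(8.0)
scores = [0.2, 0.1, 0.3, 0.4, 0.9, 0.1, 0.8, 0.2]
labels = [1, 0, 1, 0, 1, 0, 1, 0]
print(auc_by_distortion_quartile(scores, labels, distortion))  # [1.0, 0.0, 1.0, 1.0]
```

The pairwise comparison in `auroc` uses memory proportional to members times non-members, which is fine for small evaluations; a rank-based formula scales better.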

Methodology Impact

This work underscores the need to account for encoder/decoder geometry when analyzing LDM privacy risks. The findings suggest that current attack methodologies should be refined to treat geometric features as central to assessing memorization vulnerability: filtering latent dimensions by their contribution to decoder distortion consistently improves attack performance across the models and datasets evaluated.

Future Directions

This paper opens new avenues for understanding how the latent space affects the security of generative models. Future work could optimize encoder/decoder architectures for stronger defenses, or develop distortion-aware methods that make diffusion frameworks more robust to privacy attacks.

Conclusion

The findings of "Latent Diffusion Inversion Requires Understanding the Latent Space" show how latent structure shapes model memorization, broadening diffusion model inversion strategies to include spatial and per-dimension distortion analysis. By accounting for latent space geometry, researchers can both sharpen attacks and assess the privacy risks inherent in generative models more accurately.