Material-informed Gaussian Splatting for 3D World Reconstruction in a Digital Twin (2511.20348v1)

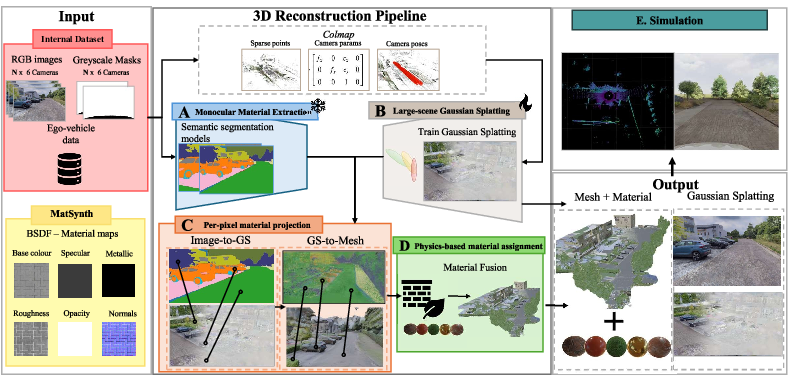

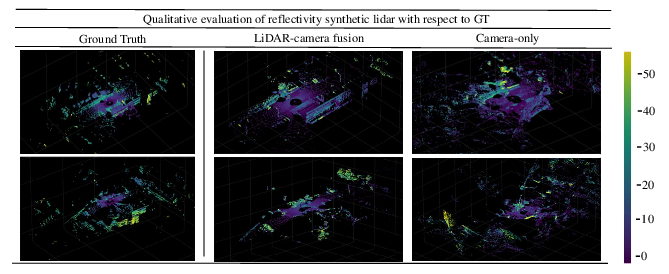

Abstract: 3D reconstruction for Digital Twins often relies on LiDAR-based methods, which provide accurate geometry but lack the semantics and textures naturally captured by cameras. Traditional LiDAR-camera fusion approaches require complex calibration and still struggle with certain materials like glass, which are visible in images but poorly represented in point clouds. We propose a camera-only pipeline that reconstructs scenes using 3D Gaussian Splatting from multi-view images, extracts semantic material masks via vision models, converts Gaussian representations to mesh surfaces with projected material labels, and assigns physics-based material properties for accurate sensor simulation in modern graphics engines and simulators. This approach combines photorealistic reconstruction with physics-based material assignment, providing sensor simulation fidelity comparable to LiDAR-camera fusion while eliminating hardware complexity and calibration requirements. We validate our camera-only method using an internal dataset from an instrumented test vehicle, leveraging LiDAR as ground truth for reflectivity validation alongside image similarity metrics.

Explain it Like I'm 14

What this paper is about (big picture)

The paper shows a new way to rebuild a 3D version of the real world (like a detailed video-game level of a street) using only regular camera photos. This 3D world is called a “Digital Twin.” The key idea is to make the digital world not just look real, but also behave like the real world when virtual sensors (like a simulated laser scanner) “see” it. To do that, the authors figure out which parts are glass, metal, asphalt, etc., and give those parts realistic physical properties.

The main questions the paper asks

- Can we build a high‑quality 3D world using only cameras, without using a laser scanner (LiDAR)?

- Can we correctly “paint” each surface with its real material (glass, metal, asphalt…) so that a virtual sensor reacts to it like a real one would?

- If we simulate a LiDAR on this camera‑only 3D world, will it behave similarly to a real LiDAR in the real world?

How they did it (in simple steps)

Think of making a movie set from photos, then assigning the right materials so lights and sensors act realistically. The pipeline has five steps:

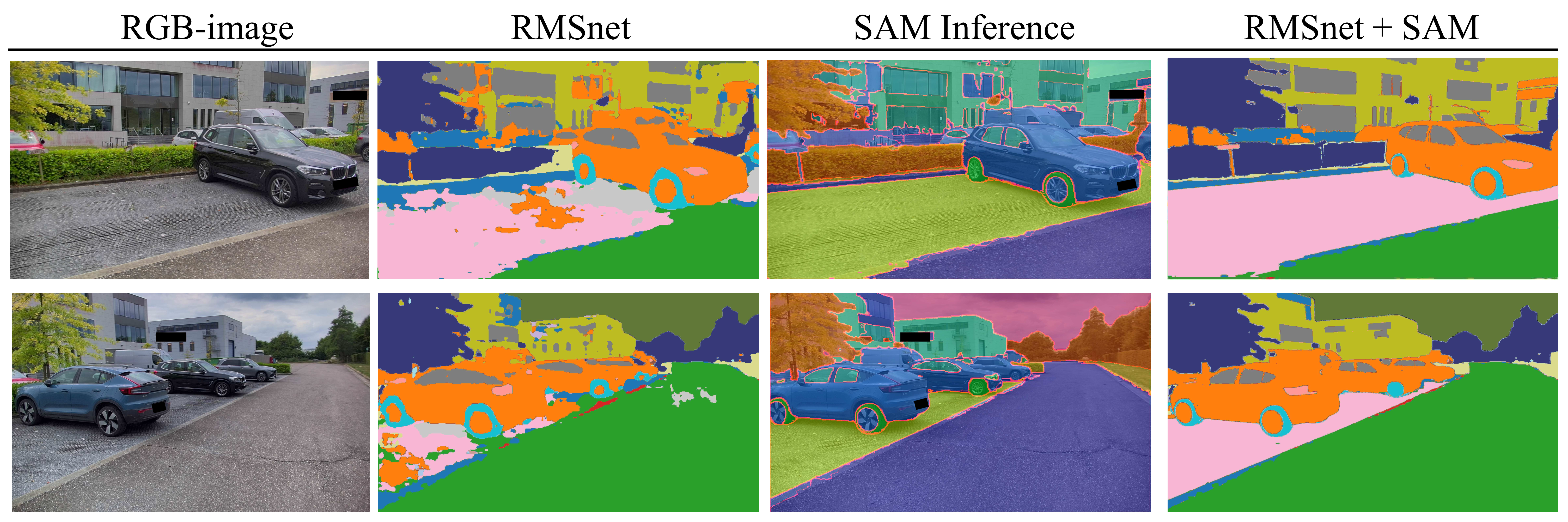

- Step 1: Find materials in the photos. The system looks at each image and labels every pixel with a material, like “asphalt,” “glass,” or “metal.” It first guesses materials from texture and color, then sharpens the edges using an object‑finding tool. This is like coloring within the lines so each region has a consistent label.

- Step 2: Rebuild the 3D scene from photos (camera‑only). They use a method called “3D Gaussian Splatting.” Imagine thousands of soft, colored dots floating in 3D that, together, look like the original scene from any angle. They also extract a “mesh,” which is a clean surface made of tiny triangles (like a very fine 3D net). The mesh is important because most simulators need this kind of geometry.

- Step 3: Move 2D labels into 3D. The material labels from the 2D photos are projected onto the 3D model. In everyday terms, it’s like shining each photo onto the 3D world and deciding, triangle by triangle, what material it is based on what most photos say.

- Step 4: Give surfaces real‑world physical behavior. They use physics‑based materials (often called PBR) so the surfaces interact with light and sensors correctly. For example, glass can be transparent and reflective; metal reflects differently than concrete. These properties make virtual sensors respond more realistically.

- Step 5: Test it with a virtual LiDAR. Finally, they place the 3D world into a simulator and “drive” a virtual car with a virtual LiDAR through it. Then they compare the simulated LiDAR results to real LiDAR scans recorded from the same place in the real world. This checks if their camera‑only method is trustworthy.
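
Steps 1 and 3 both come down to a majority vote: first among the pixels inside one object outline, then among the photos that see one triangle. The sketch below illustrates that idea only. The function names and data layout are hypothetical, and the paper's actual projection reportedly assigns labels via nearest Gaussians rather than a plain per-triangle vote.

```python
from collections import Counter


def majority(labels):
    """Return the most common label, or None if the list is empty."""
    if not labels:
        return None
    return Counter(labels).most_common(1)[0][0]


def refine_with_masks(pixel_labels, pixel_masks):
    """Step 1 sketch: every pixel in an object mask takes the mask's majority material.

    pixel_labels: per-pixel material guesses, e.g. ["asphalt", "glass", ...]
    pixel_masks:  per-pixel object-mask ids, same length as pixel_labels
    """
    by_mask = {}
    for label, mask_id in zip(pixel_labels, pixel_masks):
        by_mask.setdefault(mask_id, []).append(label)
    winners = {mask_id: majority(labels) for mask_id, labels in by_mask.items()}
    return [winners[mask_id] for mask_id in pixel_masks]


def label_triangles(observations, num_triangles):
    """Step 3 sketch: each triangle takes the material most views agree on.

    observations: (triangle_index, material) pairs, one per view that sees the triangle
    """
    votes = [[] for _ in range(num_triangles)]
    for triangle_index, material in observations:
        votes[triangle_index].append(material)
    return [majority(v) for v in votes]


refined = refine_with_masks(
    ["glass", "glass", "metal", "asphalt", "asphalt"],
    [0, 0, 0, 1, 1],
)
triangle_materials = label_triangles(
    [(0, "glass"), (0, "glass"), (0, "metal"), (1, "asphalt")],
    num_triangles=3,
)
print(refined)
print(triangle_materials)
```

A triangle that no photo sees gets `None` here; a real pipeline would need some fallback for such surfaces.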

Helpful analogies for the technical terms:

- Digital Twin: a lifelike digital copy of a real place.

- LiDAR: a sensor that fires tiny laser pulses and times how long each one takes to bounce back, which gives the distances needed to build a 3D map. It also records reflectivity (how strong each return is).

- 3D Gaussian Splatting: building a scene from many soft 3D dots that can be rendered very fast and look very realistic.

- Mesh: the clean, triangle‑based surface used by most 3D tools and simulators.

- Material segmentation: labeling which pixels are glass, metal, road, etc.

- PBR (physically based rendering): materials with real‑world properties so light and sensors behave realistically on them.
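
The LiDAR entry above can be made concrete with the standard time-of-flight relation: the pulse travels out and back, so the distance is half the round-trip time multiplied by the speed of light. This is a minimal sketch with a hypothetical function name. It ignores sensor timing offsets and the slightly slower speed of light in air.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in vacuum


def range_from_round_trip(seconds):
    """Distance to the surface, given the pulse's round-trip travel time."""
    if seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # Divide by two because the pulse covers the distance twice (out and back).
    return SPEED_OF_LIGHT_M_PER_S * seconds / 2.0


# A return that arrives one microsecond after firing is roughly 150 m away.
print(range_from_round_trip(1e-6))
```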

What they found and why it matters

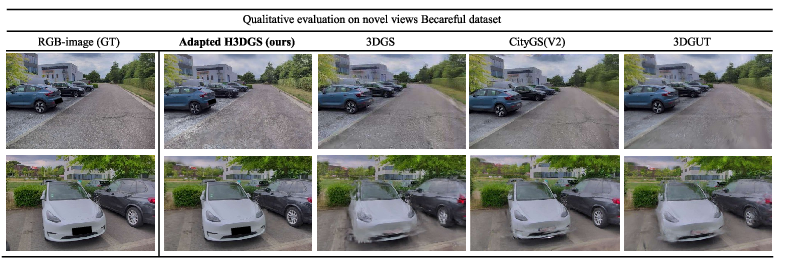

- Photorealistic looks: Their camera‑only 3D reconstructions look very good, close to real photos from new viewpoints. Some exact pixel‑by‑pixel scores are a bit lower than other methods, but a key “perceptual” score (how real it looks to people) was the best on average. In short: it looks convincing.

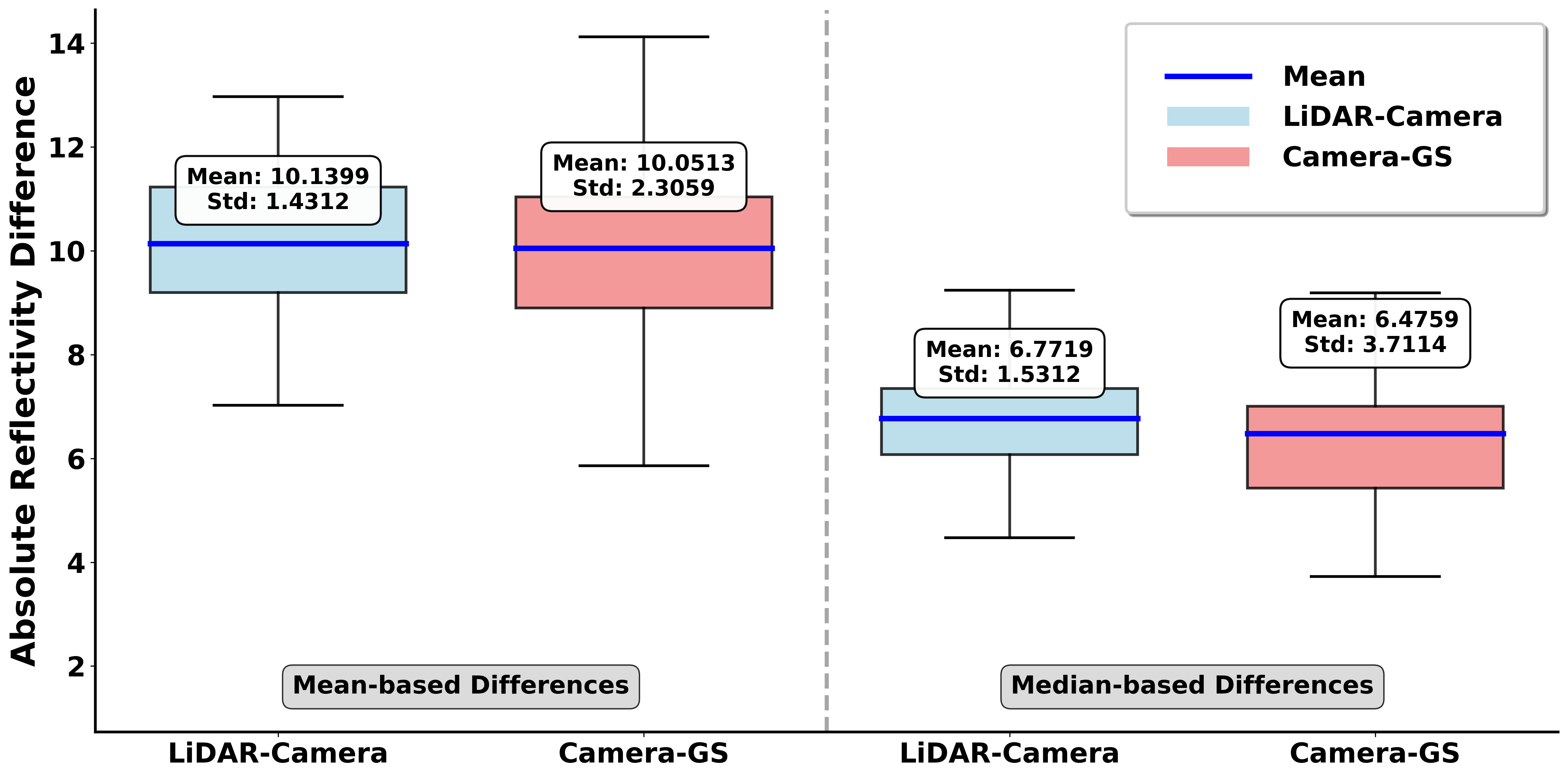

- Sensor realism (the big test): When they simulated a LiDAR on their camera‑only 3D world and compared it to real LiDAR data from the same scenes, the errors were about the same as a strong baseline that used real LiDAR for building the 3D geometry. That’s impressive because their method didn’t need a LiDAR to build the scene in the first place.

- Materials matter: By correctly labeling materials and using physics‑based settings, the simulated LiDAR reflected off surfaces in a realistic way. For example, glass and metal behaved more like they do in reality. This is important because LiDAR can struggle with some materials (like glass), while cameras capture them well.

- Practical bonus: Their approach avoids the hassle of using multiple sensors at once (no tricky calibration between LiDAR and cameras). You can build high‑quality Digital Twins with just cameras, which are cheaper and easier to install.
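
One of the pixel‑by‑pixel scores mentioned above is PSNR, which the paper lists alongside SSIM and LPIPS. As a rough illustration of what such a score measures, here is a minimal sketch of PSNR over flat lists of pixel intensities. The function name and the 0 to 255 value range are assumptions for the example, not details taken from the paper.

```python
import math


def psnr(reference, rendered, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel lists."""
    if len(reference) != len(rendered) or not reference:
        raise ValueError("inputs must be non-empty and the same length")
    # Mean squared error over all pixels.
    mse = sum((a - b) ** 2 for a, b in zip(reference, rendered)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


print(psnr([100, 100], [110, 90]))
```

Higher is better. Because PSNR compares pixels one by one, a small shift in the image can lower it even when the picture still looks right to a person, which is why a perceptual score such as LPIPS is reported as well.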

Limits they noticed:

- Camera‑only geometry can be a bit noisier than LiDAR, especially for faraway or shiny/transparent objects.

- Plants (like trees and bushes) were harder to simulate perfectly, likely due to complex shapes and simplified material settings.

Why this research matters (impact)

- Easier, cheaper Digital Twins: You can create realistic, physically meaningful 3D worlds using only cameras. That lowers the cost and complexity for companies testing self‑driving features.

- Better testing before real roads: With believable materials and realistic sensor responses, engineers can safely test how cars “see” tricky surfaces (glass buildings, shiny cars, wet roads) in simulation before going outside.

- Fewer hardware headaches: Skipping LiDAR during reconstruction means less equipment, less data to sync, and fewer calibration steps.

- A strong base for future work: The authors suggest improving geometry from cameras, handling moving objects (dynamic scenes), and learning even better material models. That could make Digital Twins even closer to reality.

In short: The paper shows that with smart use of photos, careful material labeling, and physics‑based settings, you can build 3D worlds that both look real and make virtual sensors behave like real ones—without needing a laser scanner to build the scene.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a single, concrete list of what remains missing, uncertain, or unexplored in the paper that future researchers could address.

- Limited data diversity and scale: evaluation is restricted to five short, static urban scenes; no coverage of night-time, adverse weather (rain, fog, snow), wet/icy surfaces, seasonal changes, or complex lighting that affect materials and reconstruction.

- Lack of geometric accuracy benchmarking: no quantitative mesh quality metrics (e.g., chamfer/L2 distance vs. LiDAR, normal deviation, completeness) or alignment error analyses against ground-truth geometry.

- Unquantified impact of disabling H3DGS LoD/anti-aliasing: trade-offs between compatibility with simulators and reconstruction/rendering fidelity are not systematically measured; guidance on preserving pixel-level accuracy while maintaining engine compatibility is missing.

- Reliance on GPS/COLMAP priors: robustness to inaccurate GPS, GNSS-denied environments, and camera intrinsics/extrinsics drift is untested; fallbacks or self-calibration strategies are not explored.

- Dynamic scenes unsupported: pipeline targets static scenes; handling moving actors, non-rigid objects, temporal material changes, and motion-consistent labeling remains an open problem.

- Motion blur and ego-motion artifacts: side-camera motion blur and moving-capture artifacts degrade quality; no motion compensation, deblurring, or joint pose refinement strategies are evaluated.

- Material class coverage and granularity: segmentation focuses on coarse classes (e.g., asphalt, concrete, glass, metal); lacks fine-grained/critical ADAS materials such as lane markings, retroreflective signs, wet/dusty surfaces, plastics, and composites.

- Error propagation in shape-aware refinement: majority voting within FastSAM/SAM2 masks can overwrite fine material details (e.g., road markings); strategies for preserving small-scale material patterns are not studied.

- Cross-view material consistency: SegAnyGS is used, but there is no quantitative 3D consistency metric for material labels across views or per-surface conflict analysis and resolution.

- Label projection fidelity to mesh: nearest-Gaussian KNN assignment may mislabel coarse triangles and mixed-material surfaces; methods for sub-triangle material mixing (e.g., per-vertex/per-texel labeling) and sensitivity to mesh resolution are unaddressed.

- Transparent and reflective surface handling: depth-sorted alpha blending can assign background labels through transparent surfaces (e.g., glass); explicit modeling of transmission/reflectance, BTDF/BRDF choice, and label disambiguation for transparent materials is missing.

- Vegetation reflectivity mismatch: systematic over-prediction suggests missing volumetric/porous scattering models and species/season variability; parameterization and validation for foliage remains open.

- Semantic-to-physical mapping gap: selection of PBR textures from Matsynth is not tied to measured wavelength-dependent BRDF/BTDF for LiDAR; a reproducible pipeline to derive physically accurate material parameters from semantic labels and imaging is absent.

- Per-material reflectivity validation: reflectivity errors are reported globally; breakdown per material, incidence angle, and range (including multi-echo behavior) is missing to pinpoint which materials and geometries need improved modeling.

- Single LiDAR model and wavelength: validation is only for an Ouster OS1-128; generalization to other LiDAR architectures (spinning vs. solid-state), wavelengths (905 nm vs. 1550 nm), beam divergence, and detector characteristics is not assessed.

- Other sensors not validated: no evaluation for radar, camera photometry under PBR assignments, or cross-sensor consistency; extending material assignments to radar-specific scattering and camera HDR/tonemapping remains open.

- Chunk merging artifacts and scalability: custom merging of H3DGS chunks is not evaluated for seam artifacts, texture continuity, or scalability to kilometer-scale scenes and high-density urban environments.

- Compute and performance reporting: training/inference time, memory usage, throughput, and real-time rendering performance in downstream engines (with LoD off) are not reported; guidelines for large-scale processing budgets are missing.

- Sensitivity analyses: effects of segmentation model choice (FastSAM vs. SAM2), mask quality, and COLMAP uncertainties on final reflectivity accuracy are not propagated beyond 2D segmentation metrics.

- Ground-truth materials: the linkage between Matsynth materials and real-world site-specific materials is unclear; a protocol for empirical material capture (spectral BRDF/BTDF, roughness, index of refraction) and its integration into the pipeline is needed.

- Multi-material and layered surfaces: handling painted lines on asphalt, coatings on metals, or layered composites is not supported; methods for layered PBR and spatial mixing at render time are unaddressed.

- Occlusion and label conflicts: no analysis of failure cases under heavy occlusion, thin structures, specular highlights, or view-dependent appearance that cause cross-view label disagreements.

- Perceptual evaluation: despite strong LPIPS, no user study or task-driven metrics (e.g., downstream perception performance in the simulator) validate whether perceptual improvements translate to better ADAS outcomes.

- Reproducibility and benchmarks: reliance on an internal dataset and proprietary simulator limits reproducibility; open-source code, standard benchmarks, and public digital-twin testbeds would enable broader validation.

- Weathering and aging: material properties change over time due to wear, dirt, moisture; temporal modeling and re-parameterization for aged materials are not explored.

- Transparency in simulation: glass and water surfaces require wavelength-dependent absorption/refraction; simulator-side support and validation for transmission paths and ghost returns are not demonstrated.

- Uncertainty estimation: no confidence measures for reconstructed geometry or material labels; mechanisms to quantify and propagate uncertainty through simulation outputs are absent.
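
The geometric benchmarking gap above names chamfer distance against LiDAR as a candidate metric. Below is a minimal brute-force sketch of one common symmetric variant (mean nearest-neighbor distance in both directions, summed). Other variants use squared distances or average the two terms, and the function names here are hypothetical. A practical evaluation would sample points from the mesh and use a spatial index rather than this quadratic loop.

```python
import math


def _mean_nearest(source, target):
    """Average distance from each source point to its closest target point."""
    return sum(min(math.dist(p, q) for q in target) for p in source) / len(source)


def chamfer_distance(points_a, points_b):
    """Symmetric chamfer distance between two non-empty 3D point sets."""
    if not points_a or not points_b:
        raise ValueError("both point sets must be non-empty")
    return _mean_nearest(points_a, points_b) + _mean_nearest(points_b, points_a)


mesh_samples = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
lidar_points = [(0.0, 0.0, 0.0), (1.0, 0.0, 1.0)]
print(chamfer_distance(mesh_samples, lidar_points))
```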

Practical Applications

Immediate Applications

Below are concrete, deployable uses that can be implemented with the paper’s camera-only, material-informed Gaussian Splatting pipeline today.

- ADAS/Autonomous driving scenario reconstruction and LiDAR-in-the-loop testing — sectors: automotive, software/simulation; tools/products/workflows: “Camera-Only Digital Twin Builder” that ingests multi-view drives (fleet or test-vehicle cameras), runs COLMAP → H3DGS/MiLO → 2D-to-3D material projection → PBR mesh export → Simcenter Prescan/Unreal; enables regression tests of perception stacks with realistic, material-aware LiDAR reflectivity; dependencies/assumptions: sufficient multi-view coverage and overlap, reasonably accurate camera intrinsics/poses (SfM/GPS priors), mostly static scenes, PBR material library coverage for road, building, and vegetation classes, GPU compute.

- Cost-reduction for real-world data collection and calibration — sectors: automotive, robotics; tools/products/workflows: replace expensive LiDAR or complex LiDAR-camera calibration with camera-only capture for many validation tasks; deploy as a “Calibration-Light Validation” workflow for early-stage ADAS feature testing; dependencies/assumptions: acceptable tolerance to camera-only geometric noise, quality depends on lighting/motion blur and segmentation generalization to target geography.

- Material-aware synthetic data generation for perception training — sectors: automotive, robotics, software; tools/products/workflows: produce photoreal RGB and realistic LiDAR supervision (including reflectivity) from PBR meshes for training object/material recognition, ground segmentation, or reflectivity-aware perception; dependencies/assumptions: need domain-relevant PBR mappings and class taxonomies; geometry and materials must match distribution of target deployment.

- Robotics simulation in factories/warehouses with LiDAR-like sensing realism — sectors: robotics, manufacturing; tools/products/workflows: “Camera-to-ROS/Ignition Plug‑in” exporting PBR meshes for sensor simulation and navigation benchmarking; dependencies/assumptions: adequate indoor material taxonomy and PBR textures, controlled lighting, static layout during capture.

- As-built site capture for planning and safety reviews — sectors: AEC (architecture, engineering, construction), infrastructure; tools/products/workflows: rapid PBR mesh creation from site photos for safety walkthroughs, visibility studies, or crane/vehicle pathing; exports to Unreal/Blender or BIM-adjacent viewers; dependencies/assumptions: scene access for image capture, masking/removal of dynamic equipment/people, compliance with site safety and privacy.

- VFX/Games/AR asset digitization with physically plausible materials — sectors: media, gaming, XR; tools/products/workflows: pipeline to generate photoreal meshes with BRDF-consistent materials from location stills; speeds up set/prop digitization; dependencies/assumptions: consistent lighting across captures; postprocessing for baked textures vs engine lighting; IP/usage rights.

- Insurance and forensic scene reconstruction (accident, incident sites) — sectors: insurance, legal; tools/products/workflows: “Material-Accurate Scene Replay” for claims review or litigation support, including sensor viewpoint replication and reflectivity-informed LiDAR renders; dependencies/assumptions: thorough photo capture from multiple viewpoints; chain-of-custody and evidentiary standards; documented uncertainties.

- Municipal road asset inventories and maintenance prioritization — sectors: public sector, smart cities; tools/products/workflows: camera-only city street scans yielding material-labeled meshes for pavement condition trend analysis and signage/material audits; dependencies/assumptions: coverage via municipal dashcams or contracted fleets, policy-based privacy protections, scalable processing for city-scale.

- Education and research labs for sensor physics and graphics — sectors: academia, education; tools/products/workflows: teaching modules to demonstrate BRDF, material segmentation, and LiDAR reflectivity; minimal hardware (consumer cameras) with open-source toolchain; dependencies/assumptions: commodity GPUs; curated scenes for classroom scale.

- Cultural heritage digitization (static exhibits and facades) — sectors: culture, museums, tourism; tools/products/workflows: material-aware reconstructions for virtual tours and conservation records; dependencies/assumptions: permissions for capture, stable lighting or photometric normalization, texture rights.

- Software modules as product components — sectors: software; tools/products/workflows: “2D→3D Material Projection SDK,” “PBR Mesh Exporter,” and “Reflectivity Validator” components for integration into existing digital-twin/graphics pipelines; dependencies/assumptions: engine compatibility (Unreal/Blender/Prescan), maintenance of material taxonomies and PBR libraries.

Long-Term Applications

These use cases require advances in dynamic-scene handling, scaling, standards, or cross-sensor physics before they become routine.

- City-scale, continuously updated digital twins from fleet cameras — sectors: smart cities, automotive; tools/products/workflows: always-on reconstruction from ride-hailing/municipal fleets to update road materials, signage, façades; used for AV regression, urban analytics; dependencies/assumptions: robust dynamic-object handling, map alignment, privacy/compliance frameworks, streaming/compute at scale, automatic QoS monitoring.

- Dynamic-scene simulation (moving actors, deformable and time-varying materials) — sectors: automotive, robotics, media; tools/products/workflows: extend Gaussian/mesh pipeline with temporal geometry and material updates for realistic traffic, crowds, and weathering; dependencies/assumptions: research into dynamic Gaussian/mesh co-optimization, stable tracking, and material parameter changes over time.

- Cross-sensor digital twins (radar, thermal, event cameras) — sectors: automotive, defense, public safety; tools/products/workflows: parameterize not only BRDF but also radar cross-section and thermal emissivity to simulate multi-sensor stacks; dependencies/assumptions: validated mappings from semantic materials to sensor-specific physical parameters and simulators; curated material databases beyond PBR.

- Hardware-in-the-loop sensor design and certification sandboxes — sectors: automotive, test/certification, policy; tools/products/workflows: standardized camera-only reconstructions to benchmark new LiDARs/algorithms, support regulatory evidence of performance in edge cases (glass/metal façades, tunnels); dependencies/assumptions: agreement on metrics, scene banks, and test protocols; third-party auditing.

- Construction robotics and logistics planning with material-aware interactions — sectors: AEC, logistics; tools/products/workflows: plan robot tasks considering surface reflectivity/slipperiness proxies and sensor degradation; dependencies/assumptions: mapping from PBR proxies to contact/friction and sensor degradation models; dynamic site updates.

- Energy and utilities inspection twins (powerlines, substations, pipelines) — sectors: energy, utilities; tools/products/workflows: UAV or vehicle camera capture to build material-aware twins for inspection planning and sensor simulation (e.g., LiDAR glints on conductors); dependencies/assumptions: access to restricted sites, specialized metal/glass PBRs, safety and flight regulations.

- Retail and facility twins for in‑store analytics and robot navigation — sectors: retail, facilities; tools/products/workflows: indoor reconstructions for planogram testing, inventory robots, and shopper-flow simulations with sensor realism; dependencies/assumptions: robust indoor SfM under repetitive textures and reflectivity, tailored material segmenters for indoor classes, privacy safeguards.

- Standards for material taxonomies and PBR‑to‑physics mappings — sectors: standardization bodies, policy, software; tools/products/workflows: open material schemas linking semantic labels to BRDF parameters and sensor-physics proxies (e.g., LiDAR reflectivity normalization); dependencies/assumptions: cross-industry collaboration, shared datasets, validation methodologies.

- Consumer-grade room capture with material realism for XR and home planning — sectors: consumer XR, real estate; tools/products/workflows: smartphone capture → material-aware meshes for VR staging, lighting previews, and consumer robots’ navigation assessments; dependencies/assumptions: on-device or cloud compute, user guidance for coverage, simplified material sets and auto-tuning.

- Privacy-preserving, federated reconstruction at scale — sectors: public sector, platforms; tools/products/workflows: edge or federated pipelines to build twins without centralizing raw images; dependencies/assumptions: secure feature/representation sharing (e.g., compressed Gaussians), differential privacy, regulatory acceptance.

- Learned material representations reducing reliance on preset PBR libraries — sectors: software, automotive, robotics; tools/products/workflows: jointly learn material parameters from images and sparse sensor readings for better generalization; dependencies/assumptions: datasets with ground-truth material measurements, robust inverse rendering at scale, domain adaptation.

Notes on Feasibility and Dependencies (common across many applications)

- Data capture: success depends on multi-view coverage, stable camera intrinsics, and manageable motion blur; moving ego-vehicle requires masking and careful sampling.

- Scene characteristics: current pipeline targets static environments; dynamic objects and highly reflective/transparent surfaces remain challenging.

- Material segmentation: performance hinges on segmenters (RMSNet + FastSAM) generalizing to local materials; swapping in stronger models (e.g., SAM2) improves accuracy (the paper reports about 2%) at added compute cost.

- Material libraries: realistic simulation presumes access to high-quality PBR datasets and correct mapping from semantic labels to physical parameters relevant to the target sensor(s).

- Compute and tooling: GPU resources for training and support for mesh and PBR in downstream engines (Unreal, Blender, Prescan) are required.

- Validation: while LiDAR is not needed for reconstruction, it is useful for benchmarking reflectivity and guiding improvements when available.

- Legal/ethical: capture permissions, privacy (faces/plates), and IP around textures must be respected, especially for municipal and indoor deployments.

Glossary

- 3D Gaussian Splatting: A point-based radiance field representation using anisotropic 3D Gaussians for fast, differentiable rendering and reconstruction. "We propose a camera-only pipeline that reconstructs scenes using 3D Gaussian Splatting from multi-view images"

- 3DGS: The original 3D Gaussian Splatting method for real-time radiance field rendering used as a baseline. "From left to right: ground truth RGB, our adapted H3DGS model, 3DGS, CityGaussianV2, and 3DGUT."

- 3DGUT: A Gaussian Splatting variant supporting distorted cameras and secondary rays for improved rendering. "From left to right: ground truth RGB, our adapted H3DGS model, 3DGS, CityGaussianV2, and 3DGUT."

- ADAS (Advanced Driver Assistance Systems): Automotive driver-assistance technologies relying on multimodal sensors and algorithms. "Modern Advanced Driver Assistance Systems (ADAS) and AI systems rely on sophisticated algorithms and complex multimodal sensor setups"

- Alpha blending: A compositing technique that uses alpha values and depth ordering to combine overlapping primitives. "When multiple Gaussians project to the same pixel, depth sorting during alpha blending assigns the label of the closest Gaussian."

- Bidirectional Reflectance Distribution Function (BRDF): A function describing how light is reflected at a surface as a function of incoming and outgoing directions. "Modern graphics engines simulate surface interactions through the Bidirectional Reflectance Distribution Function (BRDF)"

- CityGaussianV2: A large-scale Gaussian Splatting approach emphasizing efficiency and geometric accuracy for urban scenes. "we employ H3DGS, selected over alternatives such as CityGaussianV2 due to its superior visual quality on street-level autonomous driving datasets."

- COLMAP: A Structure-from-Motion/Multi-View Stereo pipeline that estimates camera poses and produces sparse point clouds from images. "Trained on the same COLMAP sparse point cloud, MiLO produces both a 3D Gaussian model and an explicit mesh."

- Delaunay triangulation: A triangulation method that maximizes minimum angles and avoids points inside triangle circumcircles, used for mesh extraction. "integrates mesh extraction directly into training via Delaunay triangulation, enabling joint optimization of appearance and geometry."

- Digital Twin: A high-fidelity virtual replica of a physical environment/system used for simulation, analysis, and validation. "3D reconstruction for Digital Twins often relies on LiDAR-based methods"

- Differentiable rasterization: A rendering technique where the rasterization process is differentiable, enabling gradient-based optimization of scene parameters. "We employ differentiable rasterization to render Gaussians to 2D"

- Ego-vehicle: The instrumented vehicle carrying the sensor suite in a driving dataset or simulation. "To avoid artifacts from the moving ego-vehicle, we mask it out in all input images."

- Fast Segment Anything (FastSAM): A lightweight, fast instance segmentation model used to extract object boundaries. "Fast Segment Anything (FastSAM) to identify all instances within each scene."

- Gaussian primitives: The elementary 3D Gaussian entities used to represent a scene in Gaussian Splatting. "Surface Extraction from Gaussian Primitives"

- H3DGS: A hierarchical 3D Gaussian representation for real-time rendering of very large datasets. "H3DGS constructs a global scaffold and subdivides the scene into chunks."

- Level-of-Detail (LoD): A multi-resolution strategy that allocates higher detail to nearby regions to optimize rendering performance. "Many employ Level-of-Detail (LoD) techniques, allocating higher resolution to nearby regions to improve rendering speed."

- LiDAR-camera fusion: A reconstruction approach combining LiDAR geometry with camera appearance and semantics. "we compare our camera-only approach against the LiDAR-camera fusion baseline"

- LiDAR reflectivity: The returned signal strength from LiDAR, influenced by surface materials and incidence angles. "enabling accurate physics-based LiDAR reflectivity simulation."

- LPIPS: A learned perceptual image similarity metric that correlates strongly with human judgments. "using image similarity metrics (PSNR, SSIM, LPIPS)"

- MiLO (Mesh-In-the-Loop Gaussian Splatting): A method that jointly optimizes Gaussian Splatting with mesh extraction for accurate surface geometry. "we employ MiLO, which provides geometrically accurate mesh extraction required for physics-based simulation."

- Neural Kernel Surface Reconstruction (NKSR): A neural method that reconstructs meshes from point clouds using kernel-based representations. "The LiDAR-based baseline uses NKSR for mesh reconstruction from LiDAR point clouds"

- Neural Radiance Fields (NeRF): An implicit volumetric representation enabling photorealistic novel view synthesis from images. "Neural Radiance Fields (NeRF) enabled photorealistic novel view synthesis from camera-only input"

- Novel view synthesis: Generating images from unseen camera viewpoints based on a learned scene representation. "Recent advancements in novel view synthesis have introduced 3D Gaussian Splatting"

- Physically-Based Rendering (PBR): A rendering paradigm that models materials and light transport using physically meaningful parameters. "These datasets provide PBR (Physically-Based Rendering) texture maps including base color, normal maps, roughness, and metallic maps."

- Poisson reconstruction: A surface reconstruction technique that solves a Poisson equation over oriented points to recover a continuous mesh. "Methods such as ... use Poisson reconstruction"

- Principled BSDF (Disney BSDF): A standardized physically-based shader model that parameterizes surface scattering with intuitive controls. "We assign physics-based material properties to the mesh using the Principled BSDF (Bidirectional Scattering Distribution Function) shader following the Disney BSDF standard"

- RTK-GPS: Real-Time Kinematic GPS providing centimeter-level positioning via carrier-phase corrections. "equipped with an Ouster OS1-128 LiDAR, six surround-view cameras, and RTK-GPS, all hardware-synchronized at 20Hz."

- RMSNet: An RGB-only material segmentation network tailored for road scenes, distinguishing classes like asphalt, concrete, and glass. "we adopt RMSNet due to its strong performance on autonomous driving datasets"

- SAM2: The second-generation Segment Anything model offering higher-quality segmentation masks at increased computational cost. "Although SAM2 offers superior segmentation quality, the 2% accuracy improvement does not justify the increased computational overhead for our application."

- SegAnyGS: A method that enforces consistent segmentation labels across 3D Gaussians from multiple views. "Inconsistencies across views, such as static objects receiving different labels, are resolved using SegAnyGS"

- Signed Distance Field (SDF): An implicit representation where the value at any point is the signed distance to the nearest surface. "Signed Distance Field (SDF)-based approaches require dense multi-view data."

- Simcenter Prescan: An automotive simulation platform used for physics-based sensor modeling and scenario validation. "we integrate the reconstructed scenes into Simcenter Prescan to recreate realistic traffic scenarios with physics-based sensor simulation."
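
The alpha blending entry above can be illustrated for a single pixel. The sketch assumes each overlapping Gaussian contributes a depth, an opacity, a grayscale color, and a material label; this tuple layout and the function name are hypothetical. Color is composited front to back, while the label is taken from the closest Gaussian, as in the quoted sentence. The paper's renderer may handle labels differently in detail.

```python
def composite_pixel(splats):
    """Blend the splats covering one pixel.

    splats: list of (depth, alpha, color, label) tuples, with alpha in [0, 1]
    Returns (blended_color, label_of_closest_splat).
    """
    ordered = sorted(splats, key=lambda s: s[0])  # nearest first
    color = 0.0
    transmittance = 1.0  # fraction of light not yet absorbed by nearer splats
    for _depth, alpha, splat_color, _label in ordered:
        color += transmittance * alpha * splat_color
        transmittance *= 1.0 - alpha
    closest_label = ordered[0][3] if ordered else None
    return color, closest_label


# A half-transparent glass splat in front of an opaque concrete splat.
pixel_color, pixel_label = composite_pixel([
    (5.0, 1.0, 0.2, "concrete"),
    (2.0, 0.5, 1.0, "glass"),
])
print(pixel_color, pixel_label)
```

In this example the color mixes glass and concrete, but the label is simply "glass", which shows why transparent surfaces need care when labels are transferred this way.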
