- The paper introduces Inv, a framework that uses an invariant latent space to improve language model inversion, that is, recovering hidden prompts from a model's outputs.

- It utilizes a two-stage training process employing contrastive alignment and reinforcement learning to maintain semantic and cyclic invariance.

- Experiments across nine datasets show Inv outperforms previous methods by 4.77% on BLEU scores with less training data, highlighting its robustness.

An Invariant Latent Space Perspective on LLM Inversion

Introduction to LLM Inversion

Language model inversion (LMI) is an emerging privacy and security threat in which an attacker reconstructs a hidden prompt from an LLM's outputs. This paper reframes LMI around reuse of the LLM's own latent space. The proposed Invariant Latent Space Hypothesis (ILSH) makes two claims: outputs generated from the same source prompt should stay semantically consistent (source invariance), and cyclic mappings between inputs and outputs should be self-consistent within a single shared latent space (cyclic invariance).

Method: Invariant Inverse Attacker (Inv)

The paper introduces Inv, a framework that builds on ILSH to make LMI more efficient and more accurate. The LLM itself is treated as an invariant decoder, and only a specialized inverse encoder is trained; this encoder maps outputs back into consistent pseudo-representations in the LLM's latent space. This design reduces the dependence on large amounts of inverse training data and improves inversion fidelity.
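The two invariances can be written compactly. The notation below is our own assumption, not the paper's: $x$ is a prompt, $y_i$ and $y_j$ are outputs sampled from the LLM for that prompt, $E$ is the inverse encoder, and $D$ is the LLM reused as a decoder over its latent space.

$$
\text{Source invariance:}\quad E(y_i) \approx E(y_j) \quad \text{for } y_i, y_j \sim p_{\mathrm{LLM}}(\cdot \mid x)
$$

$$
\text{Cyclic invariance:}\quad D\big(E(y)\big) \approx x \quad \text{for } y \sim p_{\mathrm{LLM}}(\cdot \mid x)
$$

Read this way, the first condition asks the encoder to ignore sampling variation between outputs of the same prompt, and the second asks that encoding an output and decoding it with the LLM returns to the original prompt.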

Figure 1: Overview of Inv. An inverse encoder maps one or more outputs into denoised pseudo-representations in the LLM's latent space, and the LLM is reused to recover the prompt.

Training and Evaluation

Inv employs a two-stage training process emphasizing source and cyclic invariances. Initial training focuses on contrastive alignment to ensure semantic consistency across diverse outputs from identical prompts. Subsequently, reinforcement learning strengthens cyclic invariance, optimizing the model for high-fidelity input reconstruction via the inverse encoder.
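To make the first stage concrete, here is a minimal sketch of a contrastive alignment objective. It assumes a generic InfoNCE-style loss over encoder embeddings; the summary does not give the paper's exact loss, so the function names, the cosine similarity, and the `temperature` value are illustrative assumptions only.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(embeddings, prompt_ids, temperature=0.1):
    """Mean InfoNCE-style loss over all (anchor, positive) pairs.

    embeddings: one vector per LLM output (hypothetical inverse-encoder outputs).
    prompt_ids: prompt_ids[i] identifies the source prompt of output i.
    Outputs that share a prompt are positives; all other outputs are negatives.
    """
    n = len(embeddings)
    losses = []
    for i in range(n):
        # Temperature-scaled similarity of anchor i to every other embedding.
        logits = {
            j: cosine(embeddings[i], embeddings[j]) / temperature
            for j in range(n)
            if j != i
        }
        log_denom = math.log(sum(math.exp(s) for s in logits.values()))
        for j, s in logits.items():
            if prompt_ids[j] == prompt_ids[i]:
                # Negative log-probability of picking the positive j.
                losses.append(log_denom - s)
    if not losses:
        raise ValueError("need at least two outputs that share a prompt")
    return sum(losses) / len(losses)
```

The loss is small when outputs of the same prompt sit close together and far from outputs of other prompts, which is the source-invariance property the first stage targets. The reinforcement learning stage is not sketched here because the summary does not describe its reward.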

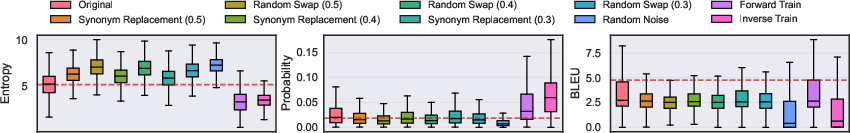

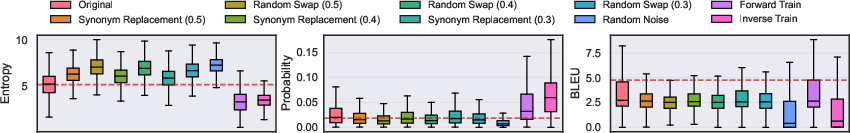

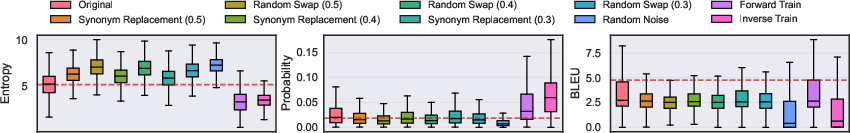

Figure 2: Evaluation of cyclic invariance. Synonym Replacement, Random Swap (randomly swapping words within a sentence), and Random Noise (replacing words with random WordNet entries) represent different perturbation types. Numbers in parentheses indicate the proportion of perturbed words. The brown dashed line marks the mean under the original setting.
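The perturbations in Figure 2 can be illustrated with a small sketch. The details are assumptions: the caption does not say how the proportion maps to a number of swaps or replacements, and a caller-supplied `vocabulary` list stands in for WordNet so the example has no external dependencies. Synonym Replacement is omitted because it needs a synonym lexicon.

```python
import random


def random_swap(words, proportion, rng):
    """Swap random pairs of positions.

    Assumption: each swap touches two words, so about
    proportion * len(words) / 2 swaps are performed.
    """
    words = list(words)
    if len(words) < 2:
        return words
    n_swaps = round(proportion * len(words) / 2)
    for _ in range(n_swaps):
        i, j = rng.sample(range(len(words)), 2)
        words[i], words[j] = words[j], words[i]
    return words


def random_noise(words, proportion, vocabulary, rng):
    """Replace a random subset of words with random vocabulary entries.

    `vocabulary` is a stand-in for WordNet entries (assumption).
    """
    words = list(words)
    n_replace = round(proportion * len(words))
    for i in rng.sample(range(len(words)), n_replace):
        words[i] = rng.choice(vocabulary)
    return words


rng = random.Random(0)  # fixed seed so runs are repeatable
sentence = "write a short poem about the sea at night".split()
print(" ".join(random_swap(sentence, 0.4, rng)))
print(" ".join(random_noise(sentence, 0.3, ["lamp", "orbit"], rng)))
```

Under cyclic invariance, an inverter fed the LLM's output for a lightly perturbed prompt should still recover something close to the original, which is what the figure measures as the perturbed proportion grows.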

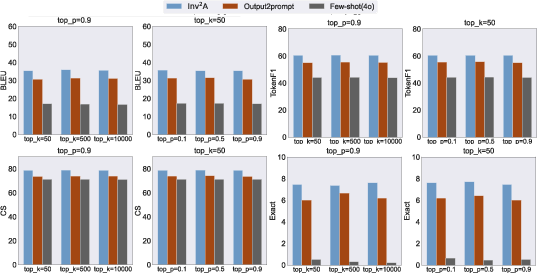

Inv proves robust in these evaluations. It outperforms prior methods by 4.77% on BLEU and needs significantly less training data to reach comparable effectiveness. Experiments across nine datasets support this result, which the paper attributes to the invariant latent geometry inherent in LLMs. The results also challenge conventional defense strategies that rely on sampling randomness.

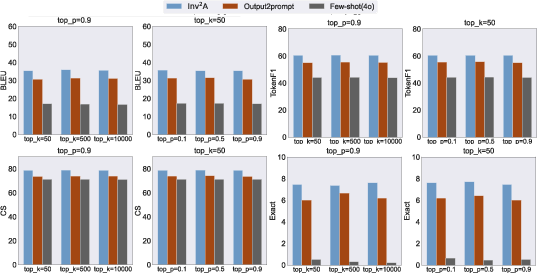

Figure 3: Robustness study of diverse sampling strategies (Top-k and Top-n).
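For readers unfamiliar with the sampling strategies being varied, the following is a minimal sketch of top-k sampling over a toy next-token distribution. The function names and the example distribution are hypothetical. Only top-k is shown, since the summary does not define the second strategy in the caption.

```python
import random


def top_k_filter(probs, k):
    """Keep the k most probable tokens and renormalize to sum to 1."""
    if not 1 <= k <= len(probs):
        raise ValueError("k must be between 1 and the vocabulary size")
    top = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:k]
    total = sum(p for _, p in top)
    return {token: p / total for token, p in top}


def sample_top_k(probs, k, rng):
    """Draw one token from the top-k filtered distribution."""
    filtered = top_k_filter(probs, k)
    tokens = list(filtered)
    weights = [filtered[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Toy next-token distribution (illustrative values only).
next_token = {"the": 0.5, "a": 0.3, "sea": 0.15, "zebra": 0.05}
rng = random.Random(0)
print(top_k_filter(next_token, 2))
print(sample_top_k(next_token, 2, rng))
```

A smaller `k` makes outputs more deterministic and a larger `k` makes them more varied. A defense based on sampling randomness relies on that variation to obscure the prompt, which is the assumption the robustness study tests.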

Practical Implications and Future Directions

The findings underline how vulnerable existing LLMs are to inversion and point to a need for stronger security measures. By showing that an LLM's latent space can be reused to invert its own outputs, the work motivates defenses and privacy-preserving mechanisms that improve robustness without compromising utility.

Conclusion

The paper offers a latent-space perspective on LMI and presents Inv as a framework that explicitly exploits the latent invariances within LLMs to improve inversion. It calls for further work on effective defenses and for responsible handling of LMI capabilities.