Automated Market Making for Goods with Perishable Utility (2511.16357v1)

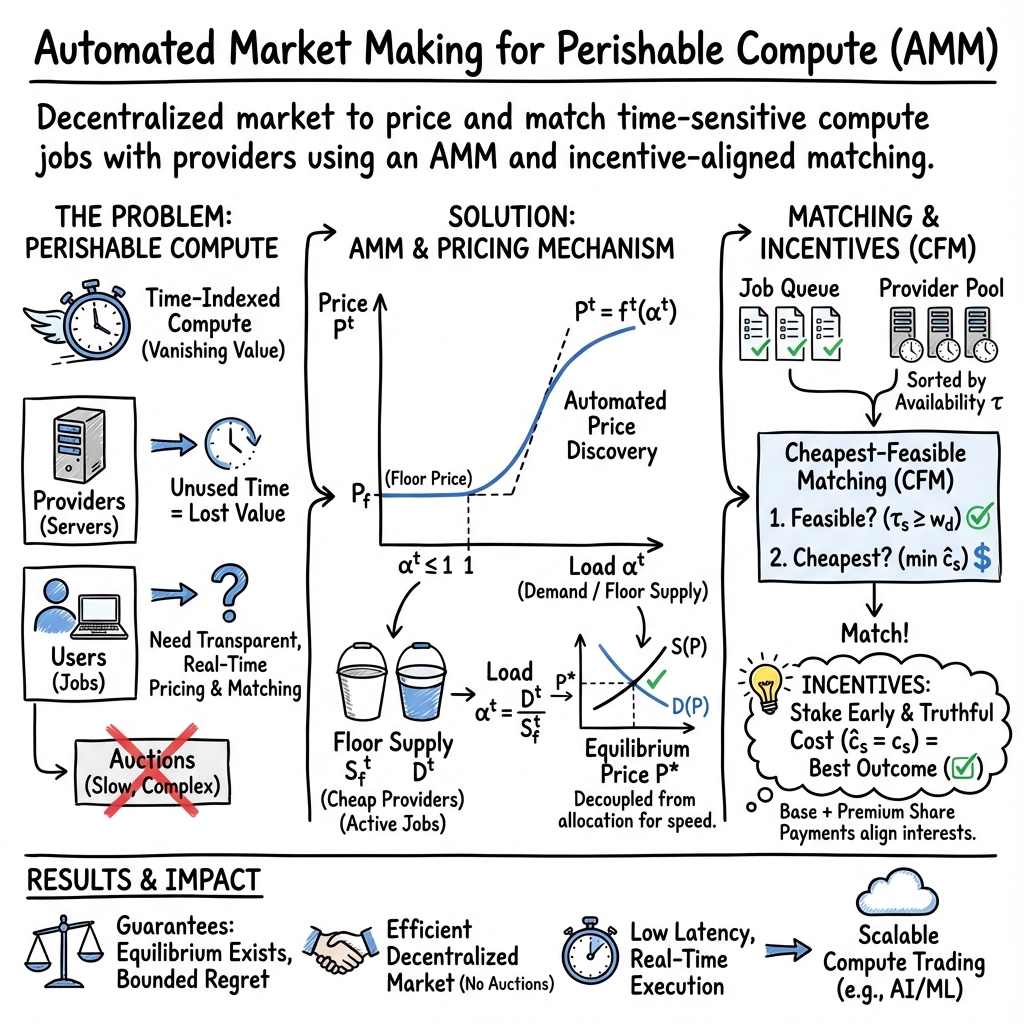

Abstract: We study decentralized markets for goods whose utility perishes in time, with compute as a primary motivation. Recent advances in reproducible and verifiable execution allow jobs to pause, verify, and resume across heterogeneous hardware, which allows us to treat compute as time indexed capacity rather than bespoke bundles. We design an automated market maker (AMM) that posts an hourly price as a concave function of load--the ratio of current demand to a "floor supply" (providers willing to work at a preset floor). This mechanism decouples price discovery from allocation and yields transparent, low latency trading. We establish existence and uniqueness of equilibrium quotes and give conditions under which the equilibrium is admissible (i.e. active supply weakly exceeds demand). To align incentives, we pair a premium sharing pool (base cost plus a pro rata share of contemporaneous surplus) with a Cheapest Feasible Matching (CFM) rule; under mild assumptions, providers optimally stake early and fully while truthfully reporting costs. Despite being simple and computationally efficient, we show that CFM attains bounded worst case regret relative to an optimal benchmark.

Explain it Like I'm 14

What is this paper about?

This paper designs a new kind of marketplace for computer time (the hours a machine can run your task). In this market, unused time disappears at the end of each hour—just like how an empty seat on a train has zero value after the train leaves. The authors show how to set fair, real‑time prices and quickly match people who need compute (users) with people who have idle machines (providers), while making sure everyone has good reasons to be honest and helpful.

What are the main questions the paper tries to answer?

- How can we set a simple, transparent price for computer time that reacts smoothly to supply and demand?

- How can we match jobs to machines fast without running complicated auctions?

- How do we encourage providers to offer their full availability and report their true costs?

- Does the market settle into a sensible “equilibrium” price, and is that equilibrium unique?

- Can a simple matching rule be nearly as good as the best possible method?

How does their system work? (Explained with everyday ideas)

Treating compute as a “perishable” good

Think of computer time like electricity or concert seats. If no one uses an hour of compute, it’s gone forever. Recent tech improvements let jobs pause, verify correctness, and resume on different machines. That’s like saving your video game, proving your save is valid, and loading it on another console. Because this is reliable, we can treat compute as interchangeable time slots instead of special one‑of‑a‑kind machines.

Automated Market Maker (AMM) for price setting

Instead of auctions, the market uses an automatic rule to post a price every hour. It looks at:

- Demand: how many jobs are waiting,

- Floor supply: how many providers are willing to work at a preset “floor price” (a safe, low number).

It then sets the price as a smooth, non-decreasing, concave function of “load,” which is demand divided by floor supply. If demand is less than or equal to the floor supply, the price stays at the floor. If demand rises above floor supply, the price gently increases to attract more providers.

Analogy: It’s like a smart vending machine for time. If lots of people want compute at once, the price nudges up; if things are quiet, it stays low.
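A minimal sketch of such a pricing rule is shown here. The summary does not give the paper's functional form, so the logarithmic shape, the `slope` parameter, the `price_cap`, and the behaviour when floor supply is zero are all illustrative assumptions; only the floor plateau and the concave rise in load come from the description above.

```python
import math

def posted_price(demand: int, floor_supply: int, floor_price: float = 1.0,
                 slope: float = 0.5, price_cap: float = 5.0) -> float:
    """Hypothetical load-based quote: flat at the floor, concave above it."""
    if floor_supply <= 0:
        # Assumed convention: with no floor supply, quote the cap.
        return price_cap
    load = demand / floor_supply
    if load <= 1.0:
        # Floor plateau: demand does not exceed floor supply.
        return floor_price
    # Concave (logarithmic) rise in load, truncated at the assumed cap.
    return min(price_cap, floor_price * (1.0 + slope * math.log(load)))

# Print the quote for a few demand levels against ten floor providers.
for demand in (5, 10, 20, 40):
    print(demand, round(posted_price(demand, floor_supply=10), 3))
```

With this shape, each extra unit of load raises the quote by less than the previous one, which is one way to get the "gentle" increase described above.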

Providers stake and report costs

Providers lock in (stake) their machines for certain hours and say the lowest price they’re willing to accept. Their “availability window” counts down each hour if they stay staked.

Pool sharing to align incentives

When a provider works on a job, they get:

- Their base rate (the price they reported),

- Plus a fair share of the “premium”—the extra money users pay above base rates during that hour.

Analogy: It’s like a team of waiters splitting tips from the tables they served during the same shift. This rewards providers who show up early and honestly, because they join more shared premium pools over time.
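The payout rule can be sketched as follows, assuming every working provider is paid for one hour at the posted price and the premium pool is split equally by head count (the equal split is an assumption taken from the "Knowledge Gaps" discussion below; the function and variable names are hypothetical).

```python
from typing import Dict

def hourly_payouts(price: float, reported_costs: Dict[str, float]) -> Dict[str, float]:
    """Base rate plus an equal share of the hour's premium pool (assumed split)."""
    if not reported_costs:
        return {}
    # Premium pool: what users pay above each working provider's reported cost.
    pool = sum(price - cost for cost in reported_costs.values())
    share = pool / len(reported_costs)
    return {name: cost + share for name, cost in reported_costs.items()}

# Three providers working in the same hour at a posted price of 3.0.
payouts = hourly_payouts(price=3.0, reported_costs={"a": 1.0, "b": 2.0, "c": 3.0})
print(payouts)
```

In this sketch the payouts always add up to the total paid by users for the hour (price times the number of working providers), so the pool only redistributes surplus among providers.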

Cheapest‑Feasible Matching (CFM)

Matching is kept simple and fast: the market picks the cheapest provider who can finish a job within the needed hours. “Feasible” means the provider has enough availability left to complete it.

Analogy: If you need a ride that takes 40 minutes, the app picks the cheapest driver who can actually make the trip before your deadline.
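As a rough illustration, the rule can be written in a few lines. The `Provider` fields, the `busy` flag, and the requirement that the reported cost not exceed the posted price are assumptions made for this sketch, not details confirmed by the paper.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Provider:
    name: str
    reported_cost: float  # lowest hourly price the provider accepts
    hours_left: int       # remaining availability window, in hours
    busy: bool = False

def cheapest_feasible(providers: List[Provider], job_hours: int,
                      price: float) -> Optional[Provider]:
    """Pick the cheapest idle provider whose window covers the whole job."""
    feasible = [p for p in providers
                if not p.busy
                and p.hours_left >= job_hours
                and p.reported_cost <= price]  # assumed activity condition
    if not feasible:
        return None
    chosen = min(feasible, key=lambda p: p.reported_cost)
    chosen.busy = True
    return chosen

pool = [Provider("A", 1.0, 1), Provider("B", 2.0, 4), Provider("C", 3.0, 8)]
match = cheapest_feasible(pool, job_hours=3, price=2.5)
print(match.name if match else "no feasible provider")
```

Provider A is cheapest but cannot finish a three-hour job, so the sketch skips it and picks the cheapest provider that can.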

Verification and racing

To handle trust in a decentralized world, the system uses verifiable checkpoints (cryptographic “save points”) and a “racing” mechanism where multiple providers can try a job; the first valid progress wins. Misbehavior can be punished (“slashing”), which keeps everyone honest.

What did they find?

1) The price is well‑behaved

- There is always an equilibrium hourly price that fits the supply/demand situation, and it’s unique (no confusing multiple answers).

- Under mild conditions, this equilibrium is “admissible,” meaning there’s enough active supply to meet demand at that price. In plain words: the posted price won’t promise more than providers can deliver.
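To make the fixed-point idea concrete, the sketch below finds a price that equals the pricing rule evaluated at the load that price induces. The pricing rule, the linear demand curve, and all parameter values are illustrative assumptions. The sketch relies on demand falling as price rises, so the gap between the rule's output and the candidate price is strictly decreasing and bisection finds the single crossing.

```python
import math

FLOOR, CAP, FLOOR_SUPPLY = 1.0, 5.0, 10  # illustrative parameters

def quote(load: float) -> float:
    """Assumed pricing rule: flat at the floor, concave (log) above it."""
    return min(CAP, FLOOR * (1.0 + math.log(max(load, 1.0))))

def demand(price: float) -> float:
    """Assumed demand curve: 40 jobs at price 0, none at the cap."""
    return max(0.0, 40.0 * (1.0 - price / CAP))

def equilibrium_quote(tol: float = 1e-9) -> float:
    """Bisection on gap(p) = quote(demand(p) / floor supply) - p."""
    lo, hi = FLOOR, CAP
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if quote(demand(mid) / FLOOR_SUPPLY) - mid > 0:
            lo = mid  # rule still quotes above mid: the fixed point is higher
        else:
            hi = mid
    return 0.5 * (lo + hi)

p_star = equilibrium_quote()
print(round(p_star, 4), round(quote(demand(p_star) / FLOOR_SUPPLY), 4))
```

The two printed numbers agree up to the tolerance, which is what it means for the quote to be an equilibrium in this toy setting.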

2) Incentives make providers honest and early

- With premium sharing and cheapest‑feasible matching, providers do best when they:

- Stake all their available time as soon as possible,

- Report their true minimum price (no reason to lie).

- This keeps prices fair and activates more supply right when it’s needed.

3) Simple matching is near‑optimal

- The greedy CFM rule (pick the cheapest provider who can finish the job) is fast and scalable.

- Even though it’s simple, it has bounded “regret”: its performance is guaranteed to be close to the best possible strategy, even in worst‑case scenarios.

4) Decoupling price and matching cuts latency

- By separating price setting (AMM) from allocation (greedy matching), the system avoids slow, complex auctions. That makes real‑time trading and scheduling practical.

Why does this matter?

- Lower costs and faster access for users: People with deadlines and budgets get transparent prices and quick matches.

- Better use of idle machines: Individuals and small providers can join easily and earn money from devices that would otherwise sit unused.

- Fair rewards and trust: Premium sharing and verification ensure providers are paid fairly and kept honest without heavy policing.

- Open, decentralized markets: Instead of relying on a few big cloud companies with opaque pricing, this design supports a competitive, transparent marketplace for compute.

What could this change in the future?

- A more liquid, reliable “compute economy”: Think of buying computer time the way you buy mobile data—simple, on‑demand, and fairly priced.

- Help for AI and data jobs: Tasks can pause and resume across different machines safely, lowering costs and improving resilience.

- Beyond compute: The approach could apply to other time‑sensitive resources, like battery storage, network bandwidth, or even last‑minute delivery slots.

In short, the paper shows how to run a fair, fast, and trustworthy market for something that disappears if you don’t use it: time on computers. By setting prices smoothly, matching jobs simply, and rewarding early honest participation, the system keeps both sides—users and providers—happy and the market healthy.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a concise, actionable list of what the paper leaves missing, uncertain, or unexplored. Items are grouped by theme to aid follow‑up research.

Modeling and equilibrium assumptions

- Realism of the “no outside option” assumption: How do the equilibrium and incentive results change when providers and users have outside markets (e.g., cloud, on‑prem) with nonzero reservation utilities?

- Static, period‑by‑period analysis: The paper proves existence/uniqueness of a per‑period quote but does not analyze intertemporal dynamics (e.g., stability, convergence, oscillations) under stochastic arrivals, backlogs, and strategic waiting.

- Load definition mismatch: Price depends on counts (the number of jobs and the number of floor-supply providers) rather than capacity/need (e.g., total requested hours vs. available machine‑hours or availability windows). How should the pricing rule use “hours-weighted” load to better reflect supply and demand intensity?

- Ignoring heterogeneous durations in pricing: Demand is modeled by job count, yet allocation feasibility depends critically on job durations and provider availability windows. What pricing adjustments are needed to anticipate duration-induced congestion?

- Tier abstraction validity: How sensitive are results to performance dispersion within a “tier”? What measurement/normalization error (e.g., different throughput per “hour”) undermines the “fungible hour” assumption?

- Assumed continuity/concavity: Existence and comparative statics rely on continuous, concave, monotone mappings. How robust are results when user value functions are step‑like (e.g., hard deadlines) or non-concave?

- Upper bound on prices: The equilibrium proof assumes a finite cap on users’ hourly budgets. What happens without this cap, or when the cap is hit (rationing/priority rules)?

- Admissibility conditions: The regular‑crossing (S1) and responsiveness (F1) assumptions ensuring admissibility (active supply weakly exceeding demand) at equilibrium are strong and may fail in thin markets. How to detect violations in real time and correct prices/allocations safely?
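The load-definition gap above can be illustrated with a small arithmetic sketch. The count-based ratio follows the summary's definition of load; the hours-weighted variant is only one hypothetical way to read the open question, and the function names and numbers are made up for illustration.

```python
from typing import List

def count_load(job_hours: List[int], provider_windows: List[int]) -> float:
    """Load as in the summary: number of jobs over number of floor providers."""
    if not provider_windows:
        raise ValueError("no floor providers")
    return len(job_hours) / len(provider_windows)

def hours_weighted_load(job_hours: List[int], provider_windows: List[int]) -> float:
    """Hypothetical alternative: requested hours over available machine-hours."""
    if sum(provider_windows) <= 0:
        raise ValueError("no available machine-hours")
    return sum(job_hours) / sum(provider_windows)

job_hours = [8, 8, 8]            # three long jobs
provider_windows = [2, 2, 2, 2]  # four floor providers with short windows

print(count_load(job_hours, provider_windows))           # below 1: price stays at the floor
print(hours_weighted_load(job_hours, provider_windows))  # above 1: capacity is short
```

In this example the count-based load suggests slack while the hours-weighted load signals congestion, which is the mismatch the bullet describes.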

Mechanism design and incentives

- User truthfulness: The design focuses on provider incentives but does not elicit truthful reporting of user budgets, deadlines, or minimum viable hours. What mechanisms (e.g., penalties, commitments, auction surcharges) ensure users don’t misreport to game prices or priority?

- Strategic timing by users and providers: With time‑varying prices and checkpointing, users can delay to buy at lower prices; providers might strategically withhold supply to push prices up. What equilibria arise with such intertemporal strategies?

- Market power and collusion: How does the mechanism behave when a large provider (or cartel) can manipulate floor supply or demand (e.g., via sybil jobs) to raise prices and earn premiums?

- Floor supply governance: The floor price and floor supply are pivotal, yet their update policy, governance, and manipulation resistance are undeveloped. What rules identify a “healthy” floor in volatile or seasonal markets?

- Incomplete information and thin markets: Incentive proofs assume “matching competitiveness” (a hazard‑rate lower bound). How can the system ensure this holds (or replace it) in small markets where a provider’s match probability is not highly sensitive to price?

- Pool‑sharing design vulnerabilities: Equal splitting of the premium pool by count encourages sybil splitting of provider identities to capture larger aggregate shares. How to make premiums sybil‑resistant (e.g., proportional to capacity, stake, or verified work)?

- Premium pool manipulation: Providers (or their affiliates) can submit sham jobs to raise demand and inflate the premium pool. What anti‑wash‑trading measures, deposit requirements, or fraud detection are needed?

- Matching priority externalities: Cheapest‑first matching may systematically deprioritize higher‑cost but more reliable providers. How to balance short‑run efficiency with long‑run reliability/participation incentives?

- User–provider surplus split: The platform’s revenue model is unspecified. If all surplus is redistributed to providers, how does the marketplace sustain operations (fees, subsidies), and how do fees affect incentives?

Algorithmic design and guarantees

- Cheapest Feasible Matching (CFM) regret claim: The paper asserts bounded worst‑case regret but does not specify assumptions (arrival model, comparator, deadline constraints) or provide a formal bound or proof. What is the precise regret guarantee and under which adversarial or stochastic models?

- Feasibility vs. global optimality: Greedy assignment ignores future arrivals and deadline interactions; it can lead to myopic blocking or starvation. What online algorithms (with proven bounds) better incorporate deadlines and multi‑period feasibility?

- Rationing at price caps or overload: When the price reaches its cap or admissibility fails, what allocation/priority rules minimize welfare loss and strategic manipulation?

- Multi‑tier substitution: Users may trade off slower tiers (more hours) vs. faster tiers (fewer hours). How to design cross‑tier pricing/matching to handle substitution and prevent arbitrage/misalignment?

- Budget locking across periods: Users buy hours at the posted price, but prices update hourly. Are later hours price‑locked, prepaid, or re‑priced? How are budget overruns, cancellations, and partial completions settled?

- Backlog dynamics: The paper mentions but does not analyze how accumulating backlogs affect load, prices, and welfare, or how to avoid backlog-induced price spirals.
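The "myopic blocking" concern in the feasibility bullet above can be seen in a toy instance. This is an illustration under simplifying assumptions (each provider serves at most one job, performance is counted as jobs served, and there are no more jobs than providers); it is not the paper's regret model or benchmark.

```python
from itertools import permutations

providers = {"A": (1.0, 3), "B": (2.0, 1)}  # name -> (reported cost, hours left)
jobs = [1, 3]                               # job durations, in arrival order

def greedy_served(jobs, providers):
    """Cheapest-feasible assignment, one job at a time in arrival order."""
    free = dict(providers)
    served = 0
    for hours in jobs:
        feasible = [n for n, (_, window) in free.items() if window >= hours]
        if feasible:
            cheapest = min(feasible, key=lambda n: free[n][0])
            del free[cheapest]
            served += 1
    return served

def best_served(jobs, providers):
    """Brute force over one-to-one assignments (assumes len(jobs) <= len(providers))."""
    best = 0
    for order in permutations(list(providers), len(jobs)):
        served = sum(providers[n][1] >= h for n, h in zip(order, jobs))
        best = max(best, served)
    return best

print(greedy_served(jobs, providers), best_served(jobs, providers))
```

Here the greedy rule spends the long-window provider on the short job, so the later three-hour job cannot be placed, while a hindsight assignment serves both.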

Verification, security, and operational issues

- RepOps and verification coverage: Some workloads are non‑deterministic or not checkpointable without significant overhead. What is the feasible coverage of the approach across real task types (ML training with nondeterminism, non‑idempotent IO, memory‑bound tasks)?

- Verification economics: The racing/slashing mechanism is referenced but not specified (stake sizing, verifier selection, false‑positive risk, griefing resistance, and latency/overhead). What are the precise protocols and their cost–security trade‑offs?

- Reliability and failure handling: How are provider failures, partial progress, or data corruption compensated? What is the penalty model and how is user loss (e.g., lost time near a deadline) handled?

- Data movement and privacy: The model abstracts away data staging, bandwidth constraints, and confidentiality requirements—key determinants of feasibility and utility. How are data transfer costs, privacy guarantees, and compliance constraints incorporated?

- Adversarial demand/supply spam: What anti‑sybil, rate‑limit, and deposit mechanisms prevent denial‑of‑service via spurious jobs/providers designed to distort demand and floor supply?

- Price integrity and latency: Frequent recomputation of the quote with decentralized inputs invites latency and front‑running risks. How is quote integrity ensured (e.g., commit–reveal, oracles, batching rules) without eroding “low‑latency trading” goals?

Empirical validation and deployment

- Lack of empirical evaluation: No simulations or experiments validate price stability, welfare, throughput, or robustness under realistic arrival patterns and cost distributions. What benchmark workloads and datasets can stress‑test the design?

- Parameter calibration: Guidance for selecting and tuning the pricing function (shape, slope bounds), the floor price, stake sizes, and penalty rates is missing. How should these be calibrated to meet utilization and incentive goals across market regimes?

- Sensitivity to measurement error: Misestimation of market inputs or tier performance may destabilize pricing and allocation. What monitoring and correction mechanisms keep the system resilient?

- Governance and legal considerations: The paper does not address governance for updating floors, dispute resolution, or regulatory issues (e.g., data jurisdiction, taxation, KYC) that affect participation and enforcement.

These gaps outline concrete avenues for extending theory, refining mechanisms, hardening security, and validating performance prior to practical deployment.

Practical Applications

Immediate Applications

Below are concrete, deployable use cases that can be built now by leveraging the paper’s AMM design, Cheapest-Feasible Matching (CFM), and reproducible/verified execution stack.

- Decentralized GPU marketplace for ML training and batch inference (software, cloud, AI)

- Description: Post an hourly, load-based price; providers stake machines with availability windows and reported costs; users submit jobs with budgets/deadlines; CFM assigns the cheapest feasible provider; checkpoint/verify lets jobs pause/migrate across heterogeneous GPUs.

- Potential tools/products/workflows:

- Provider client (staking, availability, attestation), job segmenter/checkpointer, price oracle/dashboard, CFM scheduler service, settlement contracts with premium-sharing and slashing, user SDK (budget+deadline APIs).

- Dependencies/assumptions: Deterministic operators and verifiable checkpointing (e.g., RepOps/Verde), basic collateral/slashing enforcement, sufficient network bandwidth for checkpoint transfers, job types that are preemptible and tolerant to migration, no-outside-option economics for participants.

- Internal “compute AMM” for enterprise clusters and universities (enterprise IT, education, HPC)

- Description: Convert idle cluster/GPU time into time-indexed capacity across departments/labs; transparent hourly pricing smooths peaks; CFM minimizes internal costs by allocating to lowest-cost nodes that can complete each job within its window.

- Potential tools/products/workflows:

- SLURM/Kubernetes scheduler plugin implementing CFM + AMM pricing; departmental cost centers as “providers” earning base+premium; budget/deadline-aware job submission portal; utilization analytics.

- Dependencies/assumptions: Intra-org policy for cost accounting; reproducible builds; acceptance of preemption and checkpoint-resume; minimal trust requirements (slashing may be simplified internally).

- Cloud cost-optimization for MLOps pipelines (software, finance/FinOps)

- Description: A controller that watches the posted AMM price and triggers workload start/pauses to optimize cost under budget/deadlines; leverages the paper’s discrete concave user utility model to set hours adaptively.

- Potential tools/products/workflows:

- Airflow/Ray/Kubeflow operator that buys hours when price ≤ threshold; integration with experiment tracking; budget guards for hyperparameter sweeps; price alerts and reservation hedges.

- Dependencies/assumptions: Checkpointable workloads; predictable job length estimates; access to the AMM price/feed; tolerable start/stop latency.

- VFX and rendering exchanges with frame-chunk checkpointing (media/entertainment)

- Description: Split rendering into verifiable chunks; the AMM stabilizes price during crunch periods; CFM prioritizes cheaper render nodes with sufficient windows.

- Potential tools/products/workflows:

- Blender/Arnold integration for chunked renders; job verifier; marketplace dashboard; provider payout with premium-sharing; “racing” for tight deadlines.

- Dependencies/assumptions: Deterministic rendering configs; reproducible containerized toolchains; acceptable artifact verification.

- Scientific batch jobs and parameter sweeps across federated labs (academia, public research)

- Description: Share idle HPC windows across labs; AMM pricing improves fairness and transparency; CFM avoids combinatorial auction overhead while giving bounded regret scheduling performance.

- Potential tools/products/workflows:

- Federated queue with budget/deadline submission; SLURM plugin; reporting for grants; lightweight slashing for failed segments; price-based admission control.

- Dependencies/assumptions: Inter-lab data-sharing agreements; reproducible numerical stacks; checkpointable simulations; minimal governance for disputes.

- Community “Compute LP” product for prosumers (finance, consumer tech)

- Description: Prosumer GPU owners stake devices as liquidity (time-bounded capacity) to earn base rate + pro-rata surplus; pool-sharing rewards early/continuous staking.

- Potential tools/products/workflows:

- Mobile/desktop app for staking and proof-of-availability; yield dashboard; automated diagnostics/attestation; reputation scoring; opt-in racing for higher reliability premiums.

- Dependencies/assumptions: Device hardening and sandboxing; collateral and slashing that are comprehensible to consumers; clear tax/reporting treatment.

- Edge/backfill inference during off-peak windows (telecom, edge computing)

- Description: Non-latency-critical inference (batch scoring, content tagging) absorbs idle edge capacity; AMM quotes per-edge tier; CFM hits cheapest feasible edge nodes first.

- Potential tools/products/workflows:

- Edge orchestrator integration; chunked inference batches; verification probes; regional AMM curves with floor supply per tier.

- Dependencies/assumptions: Verification overhead is small relative to batch size; stable network paths; light-weight checkpoint/rehydration for models.

- Transparent procurement pilots for public-sector compute (policy, public research)

- Description: Use AMM quotes and CFM allocation to run open, auditable compute procurement for grant-funded workloads; publish load curves and equilibrium price updates.

- Potential tools/products/workflows:

- Public dashboards; standardized SLAs/SLOs; archival of price/volume; basic dispute resolution tied to slashing outcomes.

- Dependencies/assumptions: Policy acceptance of cryptographic attestations; standard terms for preemption and data handling.
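The cost-optimization controller from the MLOps use case above ("buys hours when price ≤ threshold") could look roughly like this. The function names, the threshold policy, and the replayed price list are hypothetical; a real controller would read the live AMM quote and handle checkpoint and restart latency, which this sketch ignores.

```python
from typing import List, Tuple

def should_run(price: float, threshold: float, hours_needed: int,
               hours_to_deadline: int, budget_left: float) -> bool:
    """Decide whether to buy the current hour, using only the current quote."""
    if hours_needed <= 0 or price > budget_left:
        return False
    if hours_needed >= hours_to_deadline:
        return True  # no slack left: run regardless of the threshold
    return price <= threshold

def plan(prices: List[float], threshold: float, hours_needed: int,
         budget: float) -> Tuple[List[bool], int, float]:
    """Replay an hourly price path; the deadline is the end of the list."""
    schedule = []
    for t, price in enumerate(prices):
        run = should_run(price, threshold, hours_needed, len(prices) - t, budget)
        if run:
            hours_needed -= 1
            budget -= price
        schedule.append(run)
    return schedule, hours_needed, budget

schedule, remaining, budget_left = plan(
    [3.0, 1.0, 2.5, 1.2, 4.0], threshold=1.5, hours_needed=3, budget=10.0)
print(schedule, remaining, round(budget_left, 2))
```

The job waits for cheap hours while it has slack and is forced to run once the remaining hours equal the hours needed, which is the budget-versus-deadline trade-off the use case describes.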

Long-Term Applications

These opportunities rely on broader adoption of deterministic/verifiable compute, scaling the market design, and/or regulatory and standards maturation.

- Cross-cloud compute clearinghouse with tiered fungibility (cloud, software)

- Description: A unified AMM quoting GPU-hour tiers across clouds and on-prem providers; time-bounded, verifiably fungible capacity tradable across heterogeneous hardware.

- Potential tools/products/workflows:

- Interop standards for tiers and deterministic ops; market-maker governance; cross-provider identity and sybil resistance; global CFM routing; MEV-resistant matching pipelines.

- Dependencies/assumptions: Industry-wide RepOps standards; cryptographic attestation (TEEs/remote attestation); robust identity/reputation; antitrust-compliant market governance.

- Compute derivatives and risk management (finance)

- Description: Futures/options on GPU-hours and “compute indices” for hedging AI roadmaps or training budgets; structured products using pool-sharing yield.

- Potential tools/products/workflows:

- Reference rate/price oracle; margining/clearing infra; risk models based on load elasticity and floor supply; reserve pools for extreme volatility.

- Dependencies/assumptions: Persistent and manipulation-resistant spot market; regulatory clarity; audited benchmarks; robust data feeds and surveillance.

- Carbon- and grid-aware demand response via compute AMM (energy, sustainability)

- Description: Couple the AMM slope to carbon intensity or renewable availability; shift flexible compute to low-carbon/low-price windows; providers earn green premia.

- Potential tools/products/workflows:

- Carbon-aware price adjustments; co-optimization with power markets; SLAs that trade latency for green cost savings.

- Dependencies/assumptions: Reliable carbon-intensity signals; multi-objective pricing policy; coordination with utilities/operators.

- Privacy-preserving verified compute at scale (healthcare, finance, government)

- Description: Add confidential computing/zero-knowledge proofs to the verification/racing stack for sensitive data; expand eligible workloads.

- Potential tools/products/workflows:

- TEEs + deterministic kernels; ZK proofs of correct execution or checkpoint transitions; compliance kits (HIPAA/GDPR).

- Dependencies/assumptions: Practical proof systems with acceptable overhead; certified deterministic toolchains; mature key management.

- Generalized perishable-utility AMMs beyond compute (mobility, ads, hospitality)

- Description: Apply “floor supply” load-based pricing and cheapest-feasible matching to other time-coupled perishables:

- Mobility/ridehailing driver-hours; last-minute hotel/venue slots; ad impressions in time-bounded campaigns.

- Potential tools/products/workflows:

- Sector-specific feasibility constraints (e.g., location/time windows for drivers); premium-sharing analogs to reward early/available supply; simple greedy matchers with bounded regret where applicable.

- Dependencies/assumptions: Suitable “feasibility” reductions (like checkpointing in compute) so that bundles become time-indexed units; reliable verification/SLAs; sector regulations.

- Global research compute commons and co-ops (academia, NGOs)

- Description: Federated, open compute pool where institutions contribute baseline capacity (floor supply) to stabilize prices; surplus capacity is dynamically priced for broader access.

- Potential tools/products/workflows:

- Governance charters; transparent auditing; price smoothing policies; equitable access rules embedded in AMM parameters.

- Dependencies/assumptions: Durable funding and governance; standardized verification; dispute resolution that spans jurisdictions.

- Robustness features: racing-at-scale and adversarial resilience (software, security)

- Description: Market-native redundancy (racing) and slashing for reliability; probabilistic replication under tight deadlines; anti-collusion and sybil-resistance mechanisms.

- Potential tools/products/workflows:

- Adaptive racing policies keyed to deadline/budget slack; economic penalties for delay or divergence; reputation-weighted matching.

- Dependencies/assumptions: Cost-effective redundancy; credible enforcement; careful equilibrium analysis under strategic coalitions.

- Consumer-level ambient participation (daily life, consumer tech)

- Description: Household PCs/GPUs and home routers automatically rent safe, sandboxed compute windows; users earn low-friction credits.

- Potential tools/products/workflows:

- One-click staking; automatic health and thermal checks; bandwidth-aware checkpoint syncing; family/ISP policy controls.

- Dependencies/assumptions: Strong sandboxing; simple user consent and safety defaults; micro-payout rails; device attestation.

- Formal policy and standards around reproducible operators and slashing (policy, standards)

- Description: Standards bodies define deterministic operator sets, checkpoint formats, and verifiable execution proofs; legal frameworks recognize cryptographic evidence for service-level enforcement.

- Potential tools/products/workflows:

- Reference test suites and certifications; compliance profiles; standard contracts referencing slashing/verifiable logs.

- Dependencies/assumptions: Multi-stakeholder coordination; legal recognition of cryptographic attestations; interoperability across vendors.

Cross-cutting assumptions and dependencies to monitor

- Technical: Availability and performance of reproducible operators and verifiable checkpointing; acceptable overheads for verification; predictable job-length estimates; sufficient bandwidth and storage for checkpoints; scheduler latency.

- Economic/behavioral: No-outside-option or at least limited outside options; truthful or quasi-rational behavior; sufficient competition to sustain “matching competitiveness.”

- Security/governance: Collateralization and slashing enforceability; identity/reputation to deter sybils; anti-collusion monitoring; privacy guarantees for sensitive workloads.

- Market design: Healthy floor price selection (to keep α ≲ 1 and suppress volatility); tiering definitions that reflect the least-performant machines in a tier; transparency of price updates and load calculation.

Glossary

- Admissible: A state where active supply meets or exceeds demand; used to assess whether a price enables market clearing. "admissible (i.e. active supply weakly exceeds demand)"

- Automated Market Maker (AMM): An algorithmic mechanism that continuously posts prices based on market conditions rather than running auctions. "We design an automated market maker (AMM) that posts an hourly price as a concave function of load--the ratio of current demand to a “floor supply” (providers willing to work at a preset floor)."

- Bounded worst-case regret: A performance guarantee ensuring the mechanism’s loss relative to an optimal benchmark is capped in the worst case. "we show that CFM attains bounded worst-case regret relative to an optimal benchmark."

- Cheapest-Feasible Matching (CFM): A matching rule that prioritizes the lowest-priced providers who can feasibly serve a job’s required duration. "Cheapest-Feasible Matching (CFM) rule; under mild assumptions, providers optimally stake early and fully while truthfully reporting costs."

- Combinatorial auctions: Auctions allowing bids on bundles of items with complementarities, often leading to computational complexity in winner determination. "Expressive mechanisms--such as combinatorial auctions and continuous double auctions--can capture complementarities and multi-attribute resources"

- Continuous double auctions: Market mechanisms where buyers and sellers continuously submit bids and asks that are matched in real time. "Expressive mechanisms--such as combinatorial auctions and continuous double auctions--can capture complementarities and multi-attribute resources"

- Cross-side externalities: Effects where participation or pricing decisions on one side of a two-sided market influence the other side’s value or behavior. "Two-sided market theory studies how intermediaries internalize cross-side externalities and set prices and matching rules across both sides"

- Cryptographic commitments: Cryptographic constructs that bind to a value while keeping it hidden, enabling later verification of integrity. "canonical checkpoints with cryptographic commitments allow progress to be paused, verified, and resumed"

- Deterministic replay: Re-execution of computations with guaranteed identical results, aiding reproducibility across heterogeneous hardware. "Recent advances in reproducible operators (RepOps), deterministic replay, and verifiable checkpointing make compute effectively fungible in time"

- Equilibrium quote: A price fixed point where the posted price equals the value prescribed by the pricing function at the current load. "An equilibrium quote at time is any solution of"

- Floor plateau: A property of the pricing function that remains flat at the floor price when load is at or below a threshold. "with floor plateau "

- Floor price: A preset minimum price level used as a baseline for market quoting and activation of supply. "The floor price is a pre-specified price"

- Floor supply: The count of providers willing to work at the floor price, used to normalize load and stabilize quotes. "Define the floor supply as the number of providers whose reported cost is below the floor price"

- Greedy matching: A heuristic that assigns jobs to feasible providers in a straightforward, locally optimal way for real-time scalability. "Jobs are matched to providers via a greedy matching algorithm discussed in the section on matching."

- Hazard rate: A measure of the instantaneous decrease in matching probability as reported cost increases, used in incentive analysis. "the hazard rate of , is bounded below"

- Load: A ratio of demand to floor supply that drives the price posted by the market maker. "concave function of load--the ratio of current demand to a ``floor supply''"

- Local responsiveness: A condition on the pricing function’s derivative near the floor ensuring prices react adequately to changes in load. "(F1) (Local responsiveness) The right derivative exists and"

- Myopic buyers: Buyers who optimize based on short-term considerations without accounting for long-run effects. "models with finite horizons, stochastic arrivals, and myopic buyers underpin revenue and welfare analyses for perishables"

- NP-hard: A class of problems at least as hard as the hardest problems in NP; no polynomial-time algorithm is known for any of them. Here it describes winner determination in expressive auctions. "despite NP-hard winner determination and latency concerns in online settings"

- Online bipartite matching: A dynamic matching framework where jobs arrive over time and are assigned to providers based on availability windows. "the assignment problem is naturally modeled as online bipartite matching"

- Outside option: An alternative opportunity outside the platform; its absence simplifies incentive design. "we make a no outside option assumption"

- Premium-sharing pool: A mechanism that distributes surplus above reported costs among concurrently working providers to align incentives. "we pair a premium-sharing pool (base cost plus a pro-rata share of contemporaneous surplus)"

- Quasi-rationality: A behavioral assumption that providers avoid reporting below true cost to prevent negative payoffs. "[quasi-rationality]"

- RANKING: An online bipartite matching algorithm that fixes a random ranking of the offline vertices and matches each arriving vertex to its highest-ranked available neighbor, yielding competitive guarantees in dynamic assignment. "Classic RANKING/greedy approaches give robust guarantees and real-time scalability"

- Refereed delegation: A verification method that pinpoints the first step of computational divergence with low overhead. "and refereed delegation identifies the first divergent step at low cost."

- Regular crossing: A technical condition ensuring demand and supply cross in a controlled way to guarantee admissible equilibrium prices. "(S1) (Regular crossing) There exists such that"

- Reproducible operators (RepOps): Standardized computational primitives ensuring bitwise-identical results across heterogeneous accelerators. "Recent advances in reproducible operators (RepOps), deterministic replay, and verifiable checkpointing make compute effectively fungible in time"

- Reservation utilities: Baseline utilities participants receive if they do not trade, often normalized to zero for modeling. "Accordingly, both sides’ reservation utilities are normalized to zero."

- Slashing-based verification: A protocol that penalizes misbehavior (e.g., misreporting capacity) to enforce truthful participation. "we extend the framework to an incomplete-information setting by introducing slashing-based verification [arun2025verde]"

- Stochastic arrivals: Random arrival patterns of jobs or participants over time used in modeling perishables and dynamic pricing. "models with finite horizons, stochastic arrivals, and myopic buyers underpin revenue and welfare analyses for perishables"

- Time-indexed capacity: Treating compute as units of time-bound capacity rather than fixed bundles, enabling flexible allocation. "allow us to treat compute as time-indexed capacity rather than bespoke bundles."

- Two-sided market: A platform-mediated market with distinct participant groups whose interactions generate cross-side effects. "Two-sided market theory studies how intermediaries internalize cross-side externalities"

- Verifiable checkpointing: Creating canonical checkpoints that can be cryptographically verified to ensure correct and resumable execution. "Recent advances in reproducible operators (RepOps), deterministic replay, and verifiable checkpointing make compute effectively fungible in time"

- Winner-determination hardness: Computational difficulty of deciding auction winners in expressive mechanisms. "winner-determination hardness, particularly in high-frequency or real-time settings"
