Dental3R: Geometry-Aware Pairing for Intraoral 3D Reconstruction from Sparse-View Photographs (2511.14315v1)

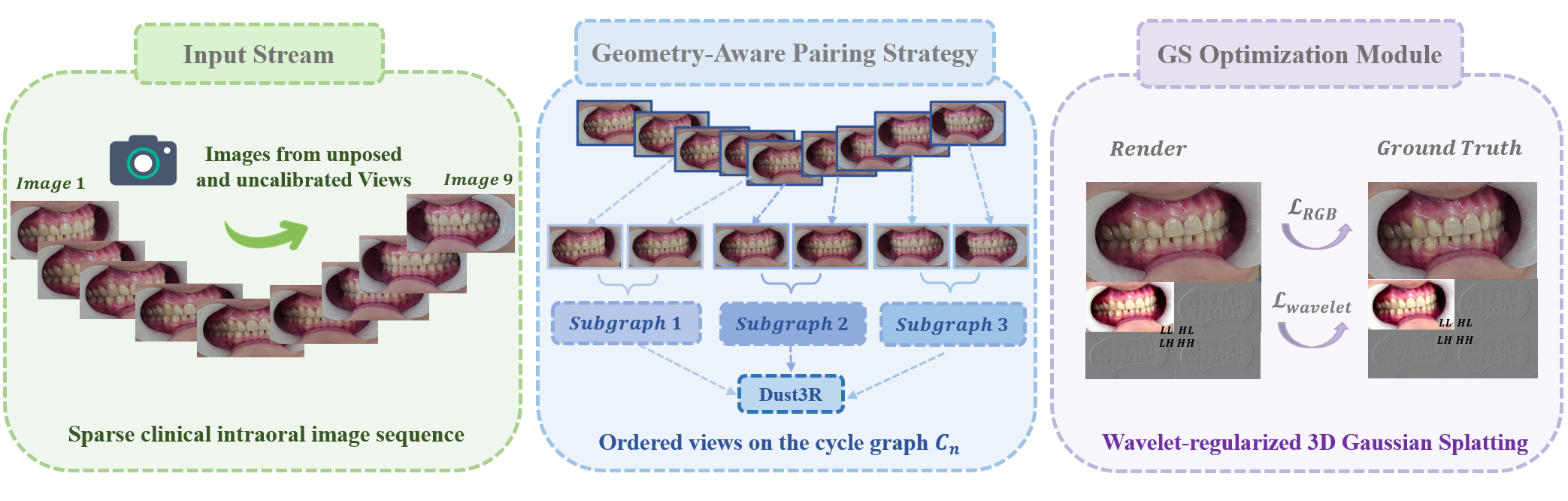

Abstract: Intraoral 3D reconstruction is fundamental to digital orthodontics, yet conventional methods like intraoral scanning are inaccessible for remote tele-orthodontics, which typically relies on sparse smartphone imagery. While 3D Gaussian Splatting (3DGS) shows promise for novel view synthesis, its application to the standard clinical triad of unposed anterior and bilateral buccal photographs is challenging. The large view baselines, inconsistent illumination, and specular surfaces common in intraoral settings can destabilize simultaneous pose and geometry estimation. Furthermore, sparse-view photometric supervision often induces a frequency bias, leading to over-smoothed reconstructions that lose critical diagnostic details. To address these limitations, we propose Dental3R, a pose-free, graph-guided pipeline for robust, high-fidelity reconstruction from sparse intraoral photographs. Our method first constructs a Geometry-Aware Pairing Strategy (GAPS) to intelligently select a compact subgraph of high-value image pairs. The GAPS focuses on correspondence matching, thereby improving the stability of the geometry initialization and reducing memory usage. Building on the recovered poses and point cloud, we train the 3DGS model with a wavelet-regularized objective. By enforcing band-limited fidelity using a discrete wavelet transform, our approach preserves fine enamel boundaries and interproximal edges while suppressing high-frequency artifacts. We validate our approach on a large-scale dataset of 950 clinical cases and an additional video-based test set of 195 cases. Experimental results demonstrate that Dental3R effectively handles sparse, unposed inputs and achieves superior novel view synthesis quality for dental occlusion visualization, outperforming state-of-the-art methods.

Explain it Like I'm 14

Overview

This paper introduces Dental3R, a computer method that builds a 3D model of someone’s teeth using only a few ordinary photos taken inside the mouth. It’s meant for tele-orthodontics (remote check-ups), where people often send smartphone pictures instead of visiting a clinic. The goal is to turn three or more photos—usually a front view and two side views—into a realistic 3D model that dentists can use to see how the top and bottom teeth meet (the “bite”) and plan treatment.

Goals and Questions

The researchers wanted to solve three main problems:

- How can we make a 3D model from just a few photos without knowing the exact positions of the cameras (the “poses”)?

- How can we pick the best pairs of photos to compare so the computer can understand the 3D shape more reliably, especially when the photos are taken from very different angles and the teeth are shiny?

- How can we keep important fine details—like sharp edges where teeth meet—without adding fake or noisy textures, which often happens when you have only a few views?

How the Researchers Did It

The method has two big ideas: smart pairing of images and detail-preserving training.

1) Smart pairing of photos (GAPS: Geometry-Aware Pairing Strategy)

Imagine you’re assembling a 3D jigsaw puzzle from just a few pictures. If you compare every picture with every other picture, the process is slow and memory-hungry, and poorly overlapping pairs add confusion. If you compare too few, you miss important matches. Dental3R uses a “view graph”: picture all the photos arranged in a circle, with each photo connected to a small set of other photos that are most likely to overlap (show similar parts of the teeth).

- It uses a ranking system that prefers pairs likely to share the same regions of teeth.

- It limits how many connections each photo gets so the computer doesn’t run out of memory.

- This creates a compact network of high-value pairs that helps the system find reliable matches across the set.

This pairing focuses a model called DUSt3R on the most useful photo pairs. DUSt3R is a tool that, given two photos, predicts which points in each photo correspond to the same points in 3D space—like finding matching freckles on a face to figure out depth.
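The pairing idea can be illustrated with a minimal sketch. It assumes the views are ordered on a cycle, that candidate pairs come from a few fixed offsets, and that closer views get higher weight. The function name `select_pairs`, the offsets `(1, 2, 4)`, the `1/k` decay, and the degree cap of 4 are all illustrative assumptions; the paper's actual weight function and thresholds are not given in this summary.

```python
def select_pairs(n_views, offsets=(1, 2, 4), degree_cap=4,
                 decay=lambda k: 1.0 / k):
    """Greedy degree-bounded pair selection on a cyclic view graph.

    Illustrative only: offsets, decay, and degree_cap are assumed values,
    not the settings used by Dental3R.
    """
    # Build candidate edges: each view i is linked to view i + k (mod n).
    candidates = {}
    for i in range(n_views):
        for k in offsets:
            j = (i + k) % n_views
            if i == j:
                continue
            # Distance around the circle, taking the shorter direction.
            step = k % n_views
            dist = min(step, n_views - step)
            edge = (min(i, j), max(i, j))
            candidates[edge] = max(decay(dist), candidates.get(edge, 0.0))

    # Keep the highest-weight edges first, never exceeding the degree cap.
    degree = [0] * n_views
    selected = []
    for (i, j), _w in sorted(candidates.items(), key=lambda e: (-e[1], e[0])):
        if degree[i] < degree_cap and degree[j] < degree_cap:
            selected.append((i, j))
            degree[i] += 1
            degree[j] += 1
    return selected


pairs = select_pairs(12)
print(len(pairs), "pairs instead of", 12 * 11 // 2)
```

With 12 views this sketch keeps the ring of neighbouring pairs plus the offset-2 chords, so each view is compared with at most four others rather than all eleven. Only the selected pairs would then be passed to the pairwise matcher.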

2) Building and training the 3D model (3D Gaussian Splatting with wavelet regularization)

Once Dental3R finds matches and recovers camera poses and a rough 3D point cloud, it builds the full 3D model using 3D Gaussian Splatting (3DGS).

- Think of 3DGS as representing the scene with lots of soft, cloudy dots (“Gaussians”). Each dot has position, size, shape, and color. By layering these dots and looking from different angles, you can render realistic images of the teeth.

- Training normally tries to match the color of the rendered image to the input photos. But with only a few photos, the result can look too smooth and blurry (missing sharp details) or can sometimes show sparkly artifacts.

To fix this, Dental3R adds a “wavelet” loss. Wavelets separate an image’s content into different types of details—like low, medium, and high frequencies (similar to separating bass, mids, and treble in audio). By carefully preserving mid-frequency edges and boundaries (e.g., enamel edges and the spaces between teeth), while reducing bad high-frequency noise, the method keeps important sharp features without adding glittery artifacts.
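To make the idea of a wavelet loss concrete, here is a minimal NumPy sketch. It uses a single-level Haar transform and per-band L1 differences with hand-picked weights. The Haar basis, the single decomposition level, and the weights are assumptions chosen for illustration; this summary does not state which wavelet family, levels, or weights the paper uses.

```python
import numpy as np


def haar_dwt2(img):
    """Single-level 2D Haar DWT of an (H, W) array with even H and W.

    Returns four sub-bands: one approximation band (LL) and three detail
    bands. Naming of the detail bands varies between libraries.
    """
    a = img[0::2, 0::2]  # top-left pixel of each 2x2 block
    b = img[0::2, 1::2]  # top-right
    c = img[1::2, 0::2]  # bottom-left
    d = img[1::2, 1::2]  # bottom-right
    ll = (a + b + c + d) / 2.0  # local average (low frequencies)
    lh = (a + b - c - d) / 2.0  # change between rows
    hl = (a - b + c - d) / 2.0  # change between columns
    hh = (a - b - c + d) / 2.0  # diagonal detail
    return ll, lh, hl, hh


def wavelet_loss(rendered, target, weights=(1.0, 1.0, 1.0, 0.5)):
    """Weighted mean absolute difference between sub-bands.

    The weights are hypothetical; a lower weight on the diagonal band is
    one simple way to de-emphasise the noisiest detail.
    """
    bands_r = haar_dwt2(rendered)
    bands_t = haar_dwt2(target)
    return sum(w * np.mean(np.abs(r - t))
               for w, r, t in zip(weights, bands_r, bands_t))


rng = np.random.default_rng(0)
target = rng.random((8, 8))
blurry = np.full_like(target, target.mean())  # over-smoothed "render"
print(wavelet_loss(target, target), wavelet_loss(blurry, target))
```

In a real training loop the same computation would be written in a differentiable framework and added to the usual photometric loss. The point of the sketch is only that an over-smoothed render is penalised in the detail bands even when its average brightness matches the photo.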

Main Findings and Why They’re Important

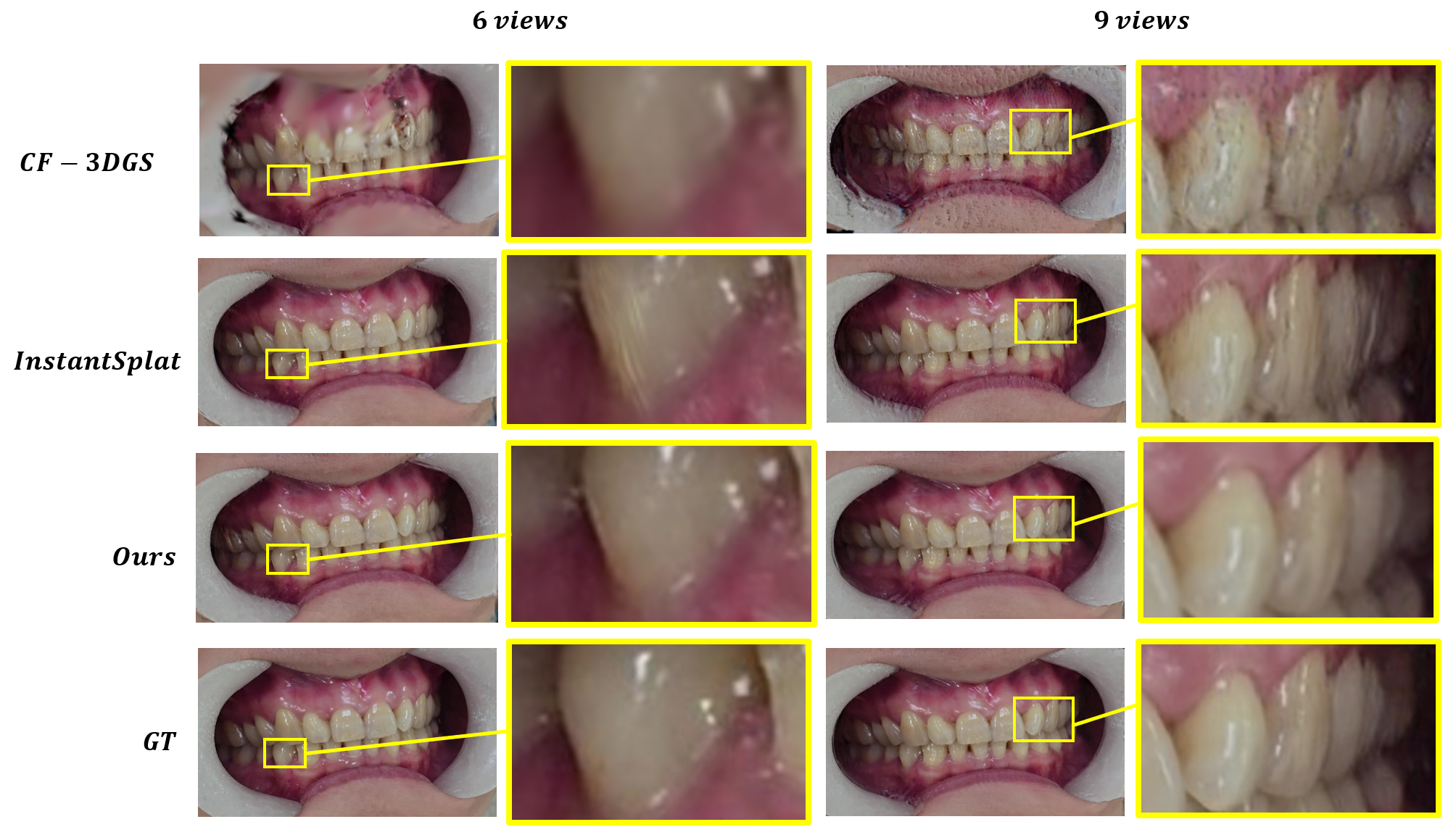

The team tested Dental3R on:

- 950 cases with just three photos (front, left side, right side).

- 195 video cases, each turned into sets of sparse images (3, 6, 9, or 12 views).

Compared to other advanced methods:

- Dental3R produced clearer, more accurate renders from very few photos.

- It handled large angle differences and shiny tooth surfaces better, with fewer floating or distorted shapes.

- It preserved key clinical details—like edges where teeth meet—which matter for diagnosing bite issues.

- It did this while using less memory than strategies that compare every photo to every other photo.

In plain terms: Dental3R made better-looking, more trustworthy 3D views of teeth from fewer, messier photos.

What This Could Mean in the Real World

- Remote orthodontic care: Patients can send a small set of smartphone photos, and dentists could still see reliable 3D views of their bite without special scanners.

- Lower costs and better access: Fewer clinic visits and cheaper equipment make follow-ups easier, especially for people far from clinics.

- Better planning and monitoring: Since the 3D models keep important details sharp, dentists can make more confident decisions about braces, aligners, and other treatments.

In short, Dental3R brings high-quality 3D reconstruction to settings where only a handful of everyday photos are available, helping orthodontists work more efficiently and making care more accessible.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

The following points summarize what remains missing, uncertain, or unexplored in the paper, framed to enable concrete follow-up studies:

- Pose-free claim vs. evaluation protocol: The video dataset experiments state “training images and their corresponding camera poses,” which undermines the pose-free narrative. A clear, pose-free evaluation (with unknown poses and intrinsics at train and test time) and a comparison against pose-estimation baselines are needed.

- Absolute metric scale recovery: DUSt3R pointmaps are scale-normalized; the paper does not recover metric scale required for appliance design and clinical measurements. Investigate how to recover scale from sparse intraoral images (e.g., camera calibration, fiducials, tooth size priors, stereo baselines, or learned scale cues).

- Geometry accuracy vs. clinical ground truth: Image-based metrics (PSNR/SSIM/LPIPS) are insufficient for clinical validation. Add quantitative geometry evaluation against IOS/CBCT models (surface-to-surface error, occlusal contact localization error, interproximal gap distances, cusp alignment) and clinical task metrics.

- Domain gap to tele-orthodontics: The dataset uses DSLR macro lens with forced flash; typical remote inputs are smartphone photos with auto-exposure, noise, rolling shutter, motion blur, and variable intrinsics. Systematically measure cross-device/generalization gaps and develop domain adaptation or robust photometric normalization.

- Handling orthodontic appliances and adverse conditions: No analysis of cases with brackets/wires, aligners, composites, saliva specularities, and soft-tissue occlusions. Stratify performance by case condition and design robustness strategies (specular-aware rendering, polarization cues, appliance segmentation).

- Specular/BRDF modeling for enamel: The pipeline treats view-dependent color via SH but does not model enamel’s specular reflectance or polarization. Explore BRDF-/SVBRDF-aware splatting, specularity suppression, or inverse rendering under flash capture.

- Multi-view consistency under sparse supervision: The wavelet loss is per-view and photometric; there is no explicit cross-view frequency or geometric consistency term. Study multi-view frequency-consistent regularization and its effect on edge preservation without overfitting.

- Wavelet design choices and sensitivity: The paper omits the chosen wavelet family, decomposition level details, and weights λx, and presents limited ablation. Evaluate different wavelet bases (Haar, Daubechies, biorthogonal), levels, and weight schedules; quantify edge-preservation (e.g., edge F-score, gradient magnitude histograms).

- GAPS hyperparameters and generality: The weight decay function φ, range-class thresholds, degree cap b, and offsets K are not specified or analyzed for sensitivity. Provide principled selection/tuning, adaptive graph construction (learned or data-driven), and generalization to unordered image sets.

- Pairing strategy for non-sequential/irregular captures: GAPS assumes a cycle and multi-scale chords; many smartphone captures are unordered. Design a robust pairing strategy that infers graph topology from visual overlap or learned correspondence quality in the absence of a sequential prior.

- Pose accuracy benchmarking: The paper does not quantify recovered pose accuracy (rotation/translation errors) against COLMAP or fiducial-based ground truth. Add pose error metrics and analyze failure cases with large baselines and specular surfaces.

- Lens distortion and intrinsics variability: There is no treatment of lens distortion or intrinsics estimation. Investigate joint recovery of intrinsics/distortion from sparse intraoral views and its impact on geometry fidelity.

- Mesh extraction and CAD readiness: 3DGS yields Gaussians; the paper does not demonstrate watertight, artifact-free mesh extraction suitable for CAD/CAM workflows. Develop robust Gaussian-to-mesh pipelines (e.g., Poisson/level sets, denoising) and validate manifoldness, cleanliness, and STL suitability.

- Soft-tissue dynamics and jaw pose changes: The method assumes rigid occlusion; intraoral captures often include tongue/cheek motion and slight jaw pose differences. Integrate tooth segmentation/masking, rigid alignment per arch, and non-rigid handling to prevent over-reconstruction.

- Robustness to image degradations: No stress tests for noise, blur, exposure shifts, vignetting, flash shadows, or occlusions. Conduct controlled perturbation studies and design augmentation or robustness modules.

- Uncertainty estimation and failure detection: DUSt3R predicts per-pixel confidence, but the pipeline does not propagate uncertainty to reconstruction quality or clinical decision-making. Incorporate uncertainty-aware optimization and automatic failure flags for telehealth deployment.

- Computational footprint and deployment: Experiments use an RTX 4090; mobile/clinic-grade hardware is usually lower-end. Profile time/memory vs. view count across commodity devices, and explore pruning/compression or lightweight alternatives for real-time or near-real-time use.

- Scalability with more or fewer views: Memory and accuracy vs. graph density are reported, but scaling behavior and optimal pairing under extreme sparsity (e.g., 2 views) or larger sets (20–50 views) remain unexplored.

- Statistical rigor: Report variance, confidence intervals, and significance tests across cases; include per-condition performance breakdowns (e.g., presence of appliances, arch crowding, reflective saliva).

- Dataset and code availability: The paper does not state public release. Reproducibility and clinical research uptake would benefit from releasing the dataset (with consent), code, and pretrained components.

- Clinical utility studies: Validate with orthodontist workflows (e.g., occlusal contact maps, treatment planning), user studies, and error tolerance analysis to determine actionable thresholds for clinical decision-making.

- Integration with auxiliary cues: Explore fusing monocular depth priors, IMU (inertial) or acoustic scale cues, or learned tooth shape priors to stabilize geometry and scale under sparse views.

- Failure mode taxonomy: Beyond qualitative examples, provide a systematic taxonomy and quantitative rates of drift, floating artifacts, over-reconstruction, and texture smearing, along with targeted mitigations.

Practical Applications

Practical, Real-World Applications of Dental3R

The paper introduces Dental3R, a pose-free, graph-guided pipeline for 3D reconstruction from sparse intraoral photographs, using a Geometry-Aware Pairing Strategy (GAPS) and wavelet-regularized 3D Gaussian Splatting (3DGS). Below are actionable applications derived from its findings, methods, and innovations.

Immediate Applications

The following applications can be deployed now, leveraging the reported robustness to sparse, unposed views and the validated performance on clinical datasets.

- Remote orthodontic follow-ups and monitoring (Healthcare)

  - Use case: Patients capture the standard triad of anterior and bilateral buccal smartphone images at home; Dental3R produces a 3D occlusion model for clinicians to review progress, detect drift, and assess appliance effectiveness.

  - Tools/workflows: Patient-facing capture app with guided framing; cloud-based Dental3R processing; clinician dashboard to compare time-series reconstructions; integration with practice management systems.

  - Assumptions/dependencies: Minimum image quality; consistent capture protocol; metric scale may require a calibration reference or known anatomical priors for precise measurements; HIPAA/GDPR-compliant data handling.

- Tele-orthodontic triage and pre-screening (Healthcare)

  - Use case: Initial remote consults use 3-view photos to reconstruct occlusion and screen for malocclusion severity, crossbite, and crowding before in-office appointments.

  - Tools/workflows: Web intake portal; automated reconstruction and occlusion visualization; triage scoring overlay for clinicians.

  - Assumptions/dependencies: Triage thresholds calibrated against gold-standard IOS/CBCT; robust handling of specular enamel and variable illumination.

- Progress verification and appliance fit checks (Healthcare, Dental devices)

  - Use case: Verify aligner seating and interproximal edge visibility with sparse-view 3D reconstructions; flag over-reconstruction artifacts or drift to request re-capture.

  - Tools/workflows: Clinic-side QC module that uses GAPS to prioritize pairings and flags low-confidence regions; alerts for re-capture or in-person evaluation.

  - Assumptions/dependencies: Accurate occlusion alignment requires consistent bite pose; potential need for scale reference for device fit assessment.

- Documentation and claims support (Finance/Insurance)

  - Use case: Generate consistent 3D visual evidence from patient photos to support claims, pre-authorizations, and outcome documentation without clinic-grade scanners.

  - Tools/workflows: Claims portal integration; standardized capture kit; report generator with qualitative renderings and quantitative image-based metrics.

  - Assumptions/dependencies: Payer acceptance of 3D reconstructions; alignment with telehealth reimbursement rules; audit trails.

- Educational visualization for dental training (Education)

  - Use case: Build 3D occlusion visualization libraries from sparse images to teach enamel boundaries and interproximal anatomy, leveraging wavelet-regularized detail preservation.

  - Tools/workflows: Curriculum modules, case galleries, and interactive viewer; compatibility with low-compute lab stations via memory-efficient GAPS pairing.

  - Assumptions/dependencies: License for clinical imagery; anonymization; alignment with program learning outcomes.

- Memory-efficient pairing library for sparse-view 3D reconstruction (Software/AI)

  - Use case: Adopt GAPS as a pairing module to stabilize pose recovery and reduce GPU memory in DUSt3R+3DGS workflows for intraoral and similar specular scenes.

  - Tools/products: Open-source plugin or SDK implementing degree-bounded, range-aware pairing; integration into InstantSplat/EasySplat-like pipelines.

  - Assumptions/dependencies: Availability of DUSt3R/3DGS stack; developers can tune range classes and degree caps for their datasets.

- Quality control and capture guidance (Software, Daily life)

  - Use case: Real-time capture guidance (pose hints, lighting checks) and post-capture QC (confidence maps, blur/specular warnings) to reduce unusable submissions.

  - Tools/workflows: Mobile app with on-device heuristics; server-side confidence-aware scoring; re-capture prompts.

  - Assumptions/dependencies: Device-level access to camera APIs; variability across smartphones; user compliance.

Long-Term Applications

The following applications require further research, scaling, clinical validation, or product development, especially around metric accuracy, regulatory approval, and broader generalization.

- End-to-end tele-orthodontics platform with CAD/CAM integration (Healthcare, Dental devices)

  - Use case: Design preliminary aligners or retainers directly from sparse-view 3D reconstructions, with periodic at-home updates.

  - Tools/workflows: Mesh extraction from 3DGS (e.g., splat-to-mesh conversion), metric calibration (fiducials or learned scaling), CAD integration, and automated appliance design.

  - Assumptions/dependencies: Regulatory clearance; proven metric accuracy vs. IOS/CBCT; robust meshing without artifacts; clinical trials.

- Mobile/on-device reconstruction for real-time feedback (Software, Mobile health)

  - Use case: Lightweight on-device 3D occlusion previews that inform immediate retakes and reduce cloud costs.

  - Tools/workflows: Optimized DUSt3R inference, trimmed GAPS for low memory, simplified wavelet loss; hybrid edge-cloud processing.

  - Assumptions/dependencies: Efficient models for mobile GPUs/NPUs; battery and thermal constraints; acceptable latency and accuracy.

- AI-driven treatment planning assistance (Healthcare, AI)

  - Use case: Automated analysis of reconstructed occlusion to propose plan adjustments, indicate elastics usage, or anticipate movement goals.

  - Tools/workflows: Decision-support layer trained on longitudinal reconstructions; integration with EHRs and imaging systems; explainability dashboards.

  - Assumptions/dependencies: Large labeled datasets with ground-truth outcomes; bias and safety assessments; clinician-in-the-loop governance.

- Standard-setting for tele-dentistry capture protocols and reimbursement (Policy)

  - Use case: Establish guidance for acceptable sparse-view protocols, minimum quality thresholds, and billing codes for remote orthodontic assessments.

  - Tools/workflows: Consensus guidelines (professional bodies), payer policy updates, compliance frameworks (privacy, auditability).

  - Assumptions/dependencies: Multi-stakeholder alignment; clinical validation to underpin standards; privacy-by-design implementations.

- Cross-domain adoption for specular, sparse-view scenes (Robotics, Medical imaging)

  - Use case: Apply GAPS and wavelet-regularized 3DGS to endoscopic or surgical reconstruction with large baselines, inconsistent illumination, and glossy tissues.

  - Tools/workflows: Domain adaptation (e.g., motion handling, deformable scenes), integration with SLAM and depth priors, specialized capture protocols.

  - Assumptions/dependencies: Handling non-rigid anatomy; additional priors for dynamics; clinical validation and safety requirements.

- Consumer oral-care apps for self-monitoring (Daily life, Consumer health)

  - Use case: Patients visualize bite changes in 3D, receive habits coaching, and share structured updates with providers.

  - Tools/workflows: Subscription apps; gamified adherence; shared reconstruction timelines.

  - Assumptions/dependencies: Simplified UX for consistent captures; privacy safeguards; provider integration.

- Cloud-scale services and MLOps for dental networks (Software/Cloud)

  - Use case: Deploy scalable reconstruction services for large practices, supporting thousands of cases with versioned models and reproducible pipelines.

  - Tools/workflows: Containerized Dental3R services; automated QC; audit logs; continuous monitoring of performance metrics (PSNR, SSIM, LPIPS).

  - Assumptions/dependencies: Cost-effective GPU provisioning; data governance; robust failover and disaster recovery.

- Research benchmarks and reproducible evaluation suites (Academia)

  - Use case: Create standardized benchmark tasks for sparse intraoral reconstruction, including pose-free evaluation, frequency-bias mitigation, and clinical relevance metrics.

  - Tools/workflows: Public datasets with diverse devices and lighting; open baselines implementing GAPS and wavelet loss; standardized testing harness.

  - Assumptions/dependencies: Data sharing approvals; de-identification pipelines; multi-institution collaboration.

- Photogrammetry enhancements for glossy small objects (E-commerce, XR)

  - Use case: Use GAPS to stabilize sparse-view 3D capture of reflective products (jewelry, ceramics) and wavelet losses to retain sharp edges in neural rendering.

  - Tools/workflows: Producer-facing capture kits; cloud reconstruction APIs; 3D viewers for product pages and AR previews.

  - Assumptions/dependencies: Domain adaptation for non-dental reflectance; calibration for true-to-scale outputs.

Notes on feasibility across applications:

- Metric scale: The method operates pose-free with normalized pointmaps; achieving accurate metric scale may require fiducials, learned scaling, or device-specific calibration.

- Meshing: 3DGS is an explicit Gaussian representation; watertight meshes for CAD/CAM or printing require post-processing and may introduce errors.

- Clinical-grade accuracy: Photometric metrics (PSNR, SSIM, LPIPS) demonstrate view synthesis quality; clinical deployment demands geometry accuracy validation against IOS/CBCT and rigorous QA.

- Compute and memory: GAPS reduces pairing and GPU memory requirements, enabling broader deployment, but production systems will likely rely on cloud GPUs for consistent performance.

- Data privacy and compliance: Healthcare deployments must incorporate secure data handling, consent management, and audit trails.

Glossary

- 3D Gaussian Splatting (3DGS): An explicit radiance field representation that renders scenes using many anisotropic 3D Gaussian primitives with differentiable rasterization. "3D Gaussian Splatting (3DGS) achieves photorealistic synthesis with efficient differentiable rasterization over explicit Gaussian primitives."

- alpha-blending: A compositing technique that accumulates color and depth along a ray using per-primitive opacity. "The final color ... are then synthesized by alpha-blending the contributions of all overlapping Gaussians"

- anisotropic Gaussian primitives: Direction-dependent Gaussian splats used to model spatial density and appearance in 3DGS. "represents a 3D scene as an explicit collection of anisotropic Gaussian primitives."

- band-limited fidelity: A constraint that preserves desired frequency content while suppressing unwanted frequencies during optimization. "By enforcing band-limited fidelity using a discrete wavelet transform"

- buccal photographs: Dental images taken from the cheek side of the teeth (left and right). "the standard clinical triad of unposed anterior and bilateral buccal photographs"

- bundle adjustment: A nonlinear optimization refining camera poses and 3D points jointly to minimize reprojection error. "and performing bundle adjustment."

- camera intrinsics: The internal calibration parameters of a camera (e.g., focal length, principal point) used to map pixels to rays. "Given a depth map D and camera intrinsics K, the pointmap is defined as:"

- CBCT (Cone-Beam Computed Tomography): A 3D imaging modality commonly used in dentistry for detailed volumetric scans. "While CBCT and intraoral scanning (IOS) deliver accurate 3D models and occlusal relationships"

- confidence-aware training objective: A loss that is modulated by predicted per-pixel confidences to handle uncertain regions. "DUSt3R employs a confidence-aware training objective."

- degree-bounded selection: A graph selection strategy that limits the number of edges per node to control complexity while keeping important connections. "a degree-bounded selection yields a compact subgraph that balances local reliability and global rigidity."

- dental occlusion: The contact relationship between upper and lower teeth, important for clinical visualization. "superior novel view synthesis quality for dental occlusion visualization"

- differentiable rasterization: A rendering process whose outputs are differentiable with respect to scene parameters, enabling gradient-based optimization. "photorealistic synthesis with efficient differentiable rasterization"

- Discrete Wavelet Transform (DWT): A multi-resolution transform that decomposes images into frequency- and orientation-specific sub-bands. "The two-dimensional Discrete Wavelet Transform (DWT) is applied to a discrete image"

- DUSt3R: A feed-forward model that predicts dense 3D pointmaps and relative camera poses from image pairs without prior calibration. "DUSt3R introduces a novel paradigm for dense 3D reconstruction from unconstrained image collections"

- dyadic downsampling: Downsampling by a factor of two after filtering in wavelet transforms. "Each filtering stage is followed by dyadic downsampling."

- end-to-end pipeline: A fully differentiable system trained jointly from inputs to outputs without separate pre-processing stages. "introduces an end-to-end pipeline for simultaneous camera tracking and novel view synthesis directly from video"

- enamel: The hard, outer surface layer of teeth whose fine boundaries are clinically important. "preserves fine enamel boundaries"

- frequency bias: The tendency of photometric losses under sparse views to favor low-frequency content, causing over-smoothing. "sparse-view photometric supervision often induces a frequency bias"

- Geometry-Aware Pairing Strategy (GAPS): A graph-based method to select informative image pairs that stabilize pose/geometry estimation with limited memory. "Our method first constructs a Geometry-Aware Pairing Strategy (GAPS) to intelligently select a compact subgraph of high-value image pairs."

- Hybrid Sequential Geometric View-Graph: A structured pairing graph over images arranged in a cycle with multi-scale chords as candidates. "We construct a Hybrid Sequential Geometric View-Graph that arranges images on a cycle"

- interproximal edges: Boundaries between adjacent teeth that are important for diagnostic detail. "preserves fine enamel boundaries and interproximal edges"

- intraoral scanning (IOS): A clinic-based method to capture in-mouth 3D geometry using a specialized scanner. "While CBCT and intraoral scanning (IOS) deliver accurate 3D models and occlusal relationships"

- large view baselines: Substantial viewpoint differences between images that make correspondence and pose estimation difficult. "The large view baselines, inconsistent illumination, and specular surfaces common in intraoral settings"

- LPIPS (Learned Perceptual Image Patch Similarity): A learned perceptual metric that correlates with human judgments of image similarity. "Peak Signal-to-Noise Ratio (PSNR), Structural Similarity Index Measure (SSIM), and Learned Perceptual Image Patch Similarity (LPIPS)."

- Multi-Layer Perceptron (MLP): A neural network used in NeRF to represent the radiance field as a continuous function. "represents a scene as a continuous 5D function learned by a Multi-Layer Perceptron (MLP)."

- Multi-View Stereo (MVS): A method that densifies 3D reconstructions by enforcing photometric consistency across multiple views. "pipelines combining SfM and Multi-View Stereo (MVS)."

- Neural Radiance Fields (NeRF): A neural representation that maps 3D positions and viewing directions to color and density for view synthesis. "A paradigm shift occurred with the introduction of Neural Radiance Fields (NeRF)"

- novel view synthesis: Rendering photorealistic images from unobserved camera viewpoints given a learned scene representation. "shows promise for novel view synthesis"

- plane-sweep cost volumes: Volumetric representations built by sweeping depth planes and measuring multi-view matching costs. "By leveraging plane-sweep cost volumes (a multi-view stereo technique) to provide geometry cues"

- point cloud: A set of 3D points representing scene geometry, often used for initialization. "first recover a sparse 3D point cloud and camera poses"

- pointmap: A dense mapping from image pixels to 3D points in a particular coordinate frame. "a dense scene representation called a pointmap."

- positive semi-definite: A property of covariance matrices ensuring non-negative quadratic forms, required for stable optimization. "To ensure the covariance matrix remains positive semi-definite"

- Quadrature Mirror Filters (QMFs): Paired low-pass and high-pass filters used to implement wavelet filter banks. "The transform utilizes a pair of Quadrature Mirror Filters (QMFs), a low-pass filter h and a high-pass filter g"

- quaternion: A 4-parameter representation for 3D rotations used to parameterize Gaussian orientations. "a rotation quaternion"

- PSNR (Peak Signal-to-Noise Ratio): A signal fidelity metric measuring reconstruction quality relative to ground truth. "Peak Signal-to-Noise Ratio (PSNR)"

- radiance field: A function mapping spatial positions and viewing directions to emitted/received radiance for rendering. "3DGS is a high-fidelity radiance field method"

- SSIM (Structural Similarity Index Measure): An image quality metric that evaluates structural similarity between images. "Structural Similarity Index Measure (SSIM)"

- Structure-from-Motion (SfM): A pipeline that recovers camera poses and sparse 3D structure from overlapping images. "pipelines combining SfM and Multi-View Stereo (MVS)."

- Spherical Harmonic (SH) coefficients: Coefficients of SH basis functions used to model view-dependent color in 3DGS. "a set of Spherical Harmonic (SH) coefficients for modeling view-dependent color"

- specular surfaces: Highly reflective surfaces that cause view-dependent highlights and complicate reconstruction. "specular surfaces common in intraoral settings"

- sub-bands (LL, LH, HL, HH): The approximation and detail components produced by a 2D wavelet decomposition. "This process yields four coefficient sub-bands:"

- tele-orthodontics: Remote orthodontic care leveraging at-home image capture and virtual follow-ups. "inaccessible for remote tele-orthodontics, which typically relies on sparse smartphone imagery."

- Transformer-based priors: Pretrained Transformer models leveraged to provide geometric or correspondence priors. "modern Transformer-based priors such as DUSt3R"

- volumetric rendering: Integrating contributions of semi-transparent elements along camera rays to compute pixel color and depth. "This volumetric rendering process is formulated as:"

- wavelet loss: A loss computed on wavelet-domain residuals to guide frequency-aware optimization. "Our framework incorporates a wavelet loss term to regularize the optimization process in the frequency domain"

- wavelet-regularized objective: An optimization objective augmented with wavelet-based constraints to preserve fine details. "we train the 3DGS model with a wavelet-regularized objective."
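The glossary entries for camera intrinsics and pointmap can be tied together with a short sketch of standard pinhole back-projection, which turns a depth map and an intrinsics matrix into one 3D point per pixel in the camera frame. The function name `depth_to_pointmap` and the example intrinsics are assumptions for illustration; the paper's exact formula is not reproduced in this summary.

```python
import numpy as np


def depth_to_pointmap(depth, K):
    """Back-project an (H, W) depth map into an (H, W, 3) pointmap.

    Uses the pinhole model: X = depth * K^{-1} [u, v, 1]^T, where u is
    the column index and v is the row index of each pixel.
    """
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    pixels = np.stack([u, v, np.ones_like(u)], axis=-1).astype(np.float64)
    rays = pixels @ np.linalg.inv(K).T  # per-pixel ray with z = 1
    return rays * depth[..., None]      # scale each ray by its depth


# Hypothetical intrinsics: focal length 100 px, principal point (2, 1).
K = np.array([[100.0, 0.0, 2.0],
              [0.0, 100.0, 1.0],
              [0.0, 0.0, 1.0]])
depth = np.full((3, 5), 2.0)
pointmap = depth_to_pointmap(depth, K)
print(pointmap[1, 2])  # pixel at the principal point lies on the optical axis
```

Because the pointmaps discussed on this page are scale-normalized, the depth units in such a sketch are arbitrary unless a separate calibration step supplies metric scale.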
