Opt3DGS: Optimizing 3D Gaussian Splatting with Adaptive Exploration and Curvature-Aware Exploitation (2511.13571v1)

Abstract: 3D Gaussian Splatting (3DGS) has emerged as a leading framework for novel view synthesis, yet its core optimization challenges remain underexplored. We identify two key issues in 3DGS optimization: entrapment in suboptimal local optima and insufficient convergence quality. To address these, we propose Opt3DGS, a robust framework that enhances 3DGS through a two-stage optimization process of adaptive exploration and curvature-guided exploitation. In the exploration phase, an Adaptive Weighted Stochastic Gradient Langevin Dynamics (SGLD) method enhances global search to escape local optima. In the exploitation phase, a Local Quasi-Newton Direction-guided Adam optimizer leverages curvature information for precise and efficient convergence. Extensive experiments on diverse benchmark datasets demonstrate that Opt3DGS achieves state-of-the-art rendering quality by refining the 3DGS optimization process without modifying its underlying representation.

Explain it Like I'm 14

What is this paper about?

This paper introduces Opt3DGS, a smarter way to train a popular 3D graphics method called 3D Gaussian Splatting (3DGS). 3DGS builds detailed 3D scenes from regular photos and lets you view them from new angles. Opt3DGS improves the training process so the scenes look sharper and more accurate, without changing how 3DGS represents the scene itself.

What questions did the researchers ask?

- How can we stop 3DGS from getting “stuck” in okay-but-not-best solutions during training?

- How can we make 3DGS finish training with more precise, high-quality results?

- Can we do all this by only improving the optimization (the way we adjust the model), rather than changing the 3DGS scene representation?

How did they approach the problem?

Quick background: 3D Gaussian Splatting in simple terms

Imagine you’re building a 3D scene out of millions of soft, fuzzy points (like tiny semi-transparent paint blobs) called “Gaussians.” Each blob has a position in space, a size, a direction, a color, and an opacity (how see-through it is). When you “splat” these blobs onto an image, and blend them together, you get a picture of the scene. Training 3DGS means adjusting all those blob settings so photos taken from different angles look as realistic as possible.
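The blending step described above can be sketched for a single pixel. This is a minimal illustration, assuming the blobs covering the pixel are already sorted from nearest to farthest and that each blob's opacity at that pixel is known; the function name `composite_front_to_back` and the example values are hypothetical, and a real 3DGS renderer does this in parallel on the GPU.

```python
import numpy as np

def composite_front_to_back(colors, alphas):
    """Blend depth-sorted splat contributions for a single pixel.

    colors: (N, 3) RGB values, nearest splat first.
    alphas: (N,) opacity of each splat at this pixel, in [0, 1].
    Returns the blended color and the leftover transmittance.
    """
    colors = np.asarray(colors, dtype=float)
    alphas = np.asarray(alphas, dtype=float)
    pixel = np.zeros(3)
    transmittance = 1.0  # fraction of light not yet blocked by nearer splats
    for color, alpha in zip(colors, alphas):
        pixel += transmittance * alpha * color
        transmittance *= 1.0 - alpha
    return pixel, transmittance

# Hypothetical example: a half-transparent red blob in front of an opaque blue one.
pixel, remaining = composite_front_to_back(
    colors=[[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]],
    alphas=[0.5, 1.0],
)
```

The red blob contributes half of its color and lets half of the light through, so the blue blob behind it fills in the other half.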

The catch: training can get stuck. It’s like hiking in a landscape of hills and valleys. You want the deepest valley (the best solution), but you might stop at a smaller one nearby because getting out is hard.

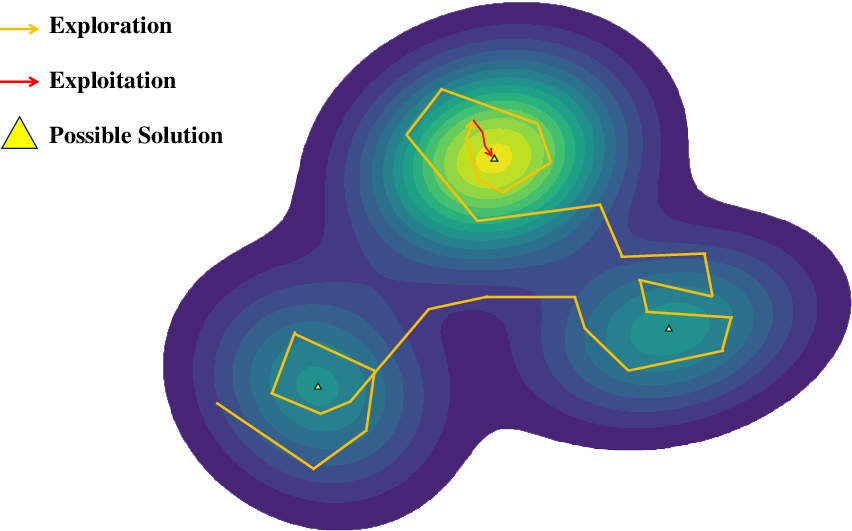

Stage 1 (Exploration): Adaptive Weighted SGLD

Goal: Explore the “landscape” better and avoid getting stuck too early.

- SGLD (Stochastic Gradient Langevin Dynamics) is like walking downhill (following the gradient) with a bit of randomness (noise) to help you wander and discover other valleys.

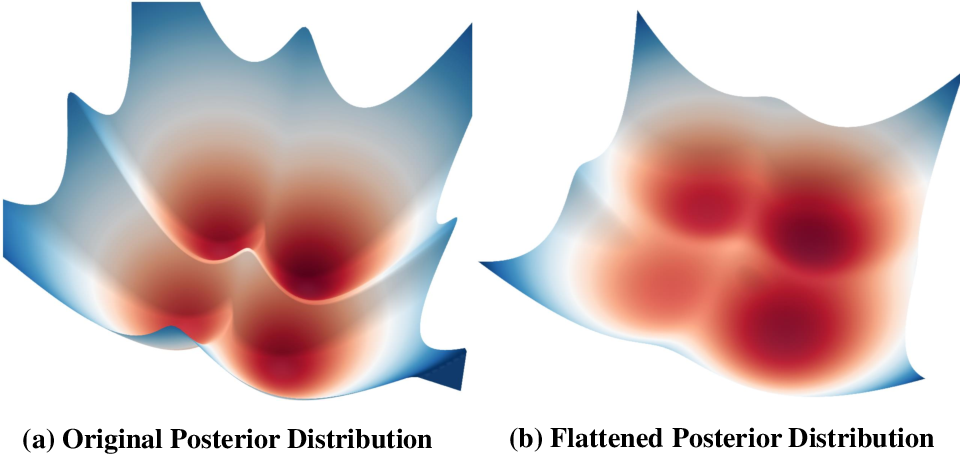

- The paper adds “Adaptive Weighted” steps based on the flat-histogram principle. In simple terms, they gently reshape the training landscape so tall barriers feel lower, making it easier to move between different regions.

- Practically, they watch the loss (how wrong the current scene is) and use adaptive weights to “flatten” the landscape. This reduces the chance of piling too many Gaussians in already strong areas (a known issue with opacity-based sampling) and encourages exploration in underrepresented parts of the scene.

Think of it like smoothing out the mountains so you can roam more freely and are less likely to settle for the first decent valley you find.
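The ingredients of Stage 1 can be sketched in a few lines. This is a toy illustration, not the paper's implementation: the weight update and gradient multiplier below follow Contour SGLD, a published flat-histogram variant of SGLD, and whether Opt3DGS uses exactly these formulas is an assumption. All function names, parameter names (`zeta` for the flattening coefficient, `sa_step` for the stochastic-approximation step size) and example values are hypothetical.

```python
import numpy as np

def sgld_step(x, grad, step, temperature, rng, multiplier=1.0):
    """One SGLD update: a (rescaled) gradient step plus Gaussian noise."""
    noise = rng.normal(size=np.shape(x))
    return x - step * multiplier * grad + np.sqrt(2.0 * step * temperature) * noise

def energy_bin(u, u_min, u_max, n_bins):
    """Index of the equal-width energy bin containing loss value u (clipped)."""
    frac = (u - u_min) / (u_max - u_min)
    return int(np.clip(np.floor(frac * n_bins), 0, n_bins - 1))

def update_bin_weights(weights, j, sa_step, zeta):
    """Stochastic-approximation update after visiting bin j; the sum stays 1."""
    visited = np.zeros_like(weights)
    visited[j] = 1.0
    return weights + sa_step * weights[j] ** zeta * (visited - weights)

def gradient_multiplier(weights, j, zeta, temperature, bin_width):
    """Contour-SGLD-style rescaling of the gradient.

    Values below 1 weaken the downhill pull; negative values push uphill.
    """
    prev = max(j - 1, 0)
    log_ratio = np.log(weights[j]) - np.log(weights[prev])
    return 1.0 + zeta * temperature * log_ratio / bin_width

# One bookkeeping round with made-up numbers: 4 bins covering losses in [0, 1].
rng = np.random.default_rng(0)
weights = np.full(4, 0.25)
j = energy_bin(0.3, u_min=0.0, u_max=1.0, n_bins=4)
weights = update_bin_weights(weights, j, sa_step=0.1, zeta=1.0)
multiplier = gradient_multiplier(weights, j, zeta=1.0, temperature=0.1, bin_width=0.25)
x_next = sgld_step(np.array([1.0]), np.array([2.0]), 0.1, 0.1, rng, multiplier)
```

The weights act as a running histogram of which loss levels the sampler visits. Feeding the resulting multiplier back into the gradient step is what lets a contour-style sampler spend less effort descending into regions it has already visited heavily.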

Stage 2 (Exploitation): Local Quasi-Newton Direction-guided Adam (LQNAdam)

Goal: Once you’re near a good valley, finish with precise, stable steps.

- Adam is a popular optimizer that takes smart steps based on recent gradients (how the loss changes).

- “Quasi-Newton” means estimating how curved the ground is (without doing heavy math) so your steps match the local shape. This helps you reach the bottom more efficiently.

- They use L-BFGS (a lightweight quasi-Newton method) to find a good direction for moving the positions of the Gaussians, then feed that direction into Adam. This keeps Adam’s stability, but adds curvature awareness for better final accuracy.

- It focuses locally (each Gaussian considered independently), which is fast and practical.

Think of it as switching from wandering (exploration) to careful stepping (exploitation), using smarter moves that consider how the ground curves under your feet.
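The idea of feeding a quasi-Newton direction into Adam can be sketched on a toy problem. This is a rough illustration under stated assumptions, not the authors' code: the class name `DirectionGuidedAdam`, the history size of 4, the learning rate, and the quadratic test loss are all hypothetical, and a single 3D vector stands in for one Gaussian's position.

```python
import numpy as np

def lbfgs_direction(grad, s_hist, y_hist):
    """L-BFGS two-loop recursion: approximates (inverse Hessian) @ grad."""
    q = grad.astype(float).copy()
    stack = []
    for s, y in zip(reversed(s_hist), reversed(y_hist)):  # newest pair first
        rho = 1.0 / np.dot(y, s)
        a = rho * np.dot(s, q)
        q -= a * y
        stack.append((a, rho, s, y))
    if s_hist:  # initial scaling from the most recent curvature pair
        q *= np.dot(s_hist[-1], y_hist[-1]) / np.dot(y_hist[-1], y_hist[-1])
    for a, rho, s, y in reversed(stack):  # oldest pair first
        b = rho * np.dot(y, q)
        q += (a - b) * s
    return q

class DirectionGuidedAdam:
    """Adam whose input is the L-BFGS direction instead of the raw gradient."""

    def __init__(self, lr=0.05, beta1=0.9, beta2=0.999, eps=1e-8, history=4):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.history = history
        self.s_hist, self.y_hist = [], []
        self.prev_x = self.prev_grad = None
        self.m = self.v = 0.0
        self.t = 0

    def step(self, x, grad):
        if self.prev_x is not None:
            s, y = x - self.prev_x, grad - self.prev_grad
            if np.dot(s, y) > 1e-12:  # keep only pairs with positive curvature
                self.s_hist = (self.s_hist + [s])[-self.history:]
                self.y_hist = (self.y_hist + [y])[-self.history:]
        self.prev_x, self.prev_grad = x.copy(), grad.copy()
        d = lbfgs_direction(grad, self.s_hist, self.y_hist)
        self.t += 1
        self.m = self.beta1 * self.m + (1 - self.beta1) * d
        self.v = self.beta2 * self.v + (1 - self.beta2) * d * d
        m_hat = self.m / (1 - self.beta1 ** self.t)
        v_hat = self.v / (1 - self.beta2 ** self.t)
        return x - self.lr * m_hat / (np.sqrt(v_hat) + self.eps)

# Toy loss with very different curvature along each axis (hypothetical).
A = np.diag([1.0, 10.0, 100.0])
loss = lambda p: 0.5 * p @ A @ p
position = np.array([1.0, 1.0, 1.0])
initial_loss = loss(position)
opt = DirectionGuidedAdam()
for _ in range(200):
    position = opt.step(position, A @ position)
final_loss = loss(position)
```

No line search is used here: Adam's own step-size normalization decides how far to move, and the L-BFGS history only shapes the direction.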

What did they find?

Here is a brief summary of the results and why they matter:

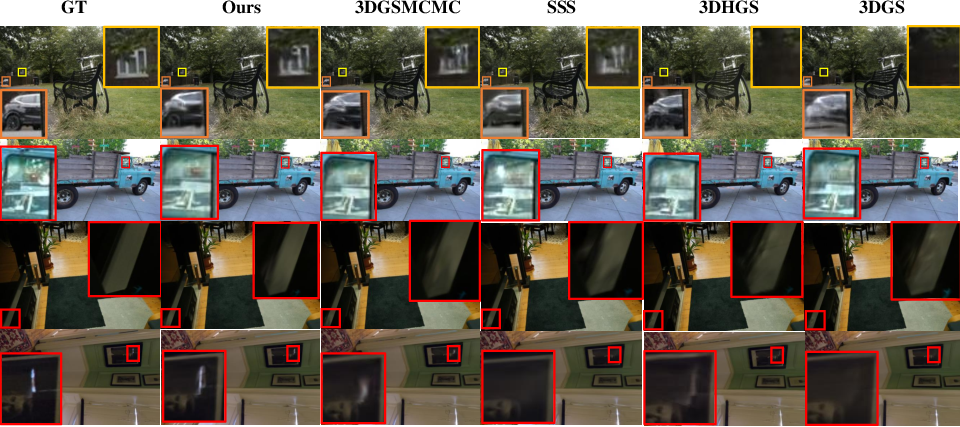

- Across multiple standard datasets (like MipNeRF360, Tanks and Temples, and DeepBlending), Opt3DGS produced clearer images, better fine details, and more accurate colors than existing methods.

- Against a strong baseline (3DGSMCMC), Opt3DGS consistently improved common image quality scores:

  - PSNR and SSIM (higher is better) improved.

  - LPIPS (lower is better, measures how images look to people) decreased noticeably.

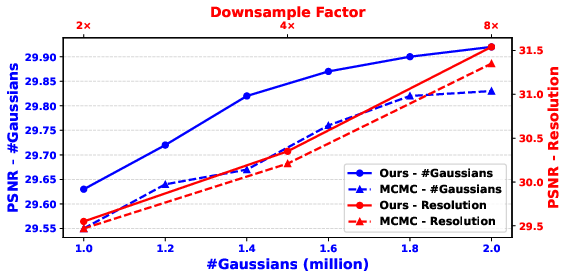

- It worked well even in harder situations:

  - Random initialization (starting from scratch without a good guess) still led to strong results.

  - Higher-resolution images (more details to match) improved over baselines.

  - Fewer Gaussians (less capacity) still achieved better quality, showing the optimizer itself makes a big difference.

- An ablation study (turning features on/off) showed:

  - The exploration module (AW-SGLD) helped escape local traps and improved coverage of the scene.

  - The exploitation module (LQNAdam) further sharpened results, giving more precise final convergence.

Overall, they achieved state-of-the-art or near state-of-the-art quality by improving the optimization steps alone.
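Of the three scores mentioned above, PSNR is the simplest to compute by hand. The sketch below uses the standard definition (ten times the base-10 logarithm of the squared peak value over the mean squared error); the function name `psnr`, the assumption that pixel values lie in [0, 1], and the tiny test images are illustrative choices, not taken from the paper.

```python
import numpy as np

def psnr(rendered, reference, max_value=1.0):
    """Peak signal-to-noise ratio in decibels; higher means a closer match."""
    rendered = np.asarray(rendered, dtype=float)
    reference = np.asarray(reference, dtype=float)
    mse = np.mean((rendered - reference) ** 2)
    if mse == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value**2 / mse))

# Hypothetical 4x4 grayscale images: every pixel is off by 0.1.
reference = np.zeros((4, 4))
rendered = np.full((4, 4), 0.1)
score = psnr(rendered, reference)
```

Because the scale is logarithmic, reducing the per-pixel error by a factor of ten raises PSNR by 20 dB.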

Why does this matter?

Better optimization means:

- Sharper, more realistic 3D scenes from regular photos, useful for VR/AR, video games, movies, robotics, mapping, and more.

- Faster, more reliable training without redesigning the 3D representation or adding heavy computation.

- A plug-in approach: Opt3DGS can slot into many 3DGS-based systems since it doesn’t change the basic “Gaussian blobs” idea.

In short, Opt3DGS shows that how you train can be just as important as what you train. By learning to explore smartly and finish precisely, it helps 3DGS reach higher-quality results more consistently.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a concise list of what remains missing, uncertain, or unexplored in the paper, formulated to guide future research.

- Lack of theoretical guarantees: no formal convergence, stability, or bias analysis for AW-SGLD’s posterior flattening or for LQNAdam’s curvature-guided updates (e.g., impact of the gradient multiplier on stationary points and mode-seeking behavior).

- Unclear effect of posterior flattening on final solution quality: no study of when flattening harms convergence (e.g., oversmoothing fine structures, biasing toward shallow basins), and no principled criterion for disabling it beyond a fixed iteration switch.

- Fixed phase switch is heuristic: the exploration-to-exploitation transition at iteration 29,000 is not adaptive; no data-driven or criterion-based scheduler (e.g., based on loss curvature, gradient variance, or acceptance ratios) is proposed or evaluated.

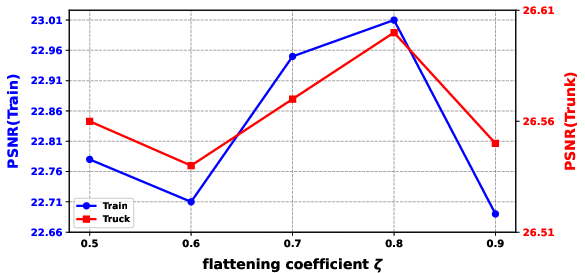

- Hyperparameter sensitivity largely unexamined: only the flattening coefficient is ablated; the temperature, bin count, energy bounds, SA step size, noise coefficient, and Adam/L-BFGS settings (e.g., history size, learning rates) lack sensitivity analyses and robust defaults.

- Energy estimation details are under-specified: how the energy is estimated per iteration (mini-batch variance, smoothing, bias) and how bin assignments remain stable under stochastic training are not analyzed; no study of the effect of warm-up length on SA stability.

- SA convergence for the weight vector is unproven in this setting: no theoretical or empirical assessment of SA stability/oscillation, step-size schedules, or convergence speed of the weight vector under nonstationary training dynamics.

- Opacity-driven sampling remains unchanged: despite mitigating clustering via exploration, no alternative addition/pruning sampling rules (e.g., coverage-aware, uncertainty-driven, or geometric error-based) are evaluated; coverage metrics for under-reconstructed regions are absent.

- Curvature updates only for positions: LQNAdam is applied to positions but not to scale, rotation, opacity, or SH coefficients; no ablation on whether curvature-aware updates for these parameters improve fidelity or stability.

- No comparison with strong second-order baselines: LQNAdam is not benchmarked against LM, near-second-order 3DGS, or other Hessian-free/curvature-aware optimizers under matched settings and budgets.

- Computational and memory overheads are not quantified: per-Gaussian L-BFGS history cost (at the chosen history size), AW-SGLD weighting/SA updates, and overall GPU memory/time profiles are not reported across scene scales; no scaling study to millions of Gaussians or low-memory devices.

- Generalization to challenging material/lighting: performance under specular, translucent, reflective, or low-light scenes is not evaluated; robustness to photometric non-idealities remains unclear.

- Dynamic and large-scale scenarios are not addressed: applicability to dynamic scenes (4D), city-scale/large environments, streaming/SLAM settings, or real-time constraints is untested.

- Loss function effects are unexplored: the exploitation phase switches L1 to L2 without analyzing trade-offs; no evaluation with perceptual or geometry-aware losses (e.g., depth/normal consistency) or their interaction with AW-SGLD/LQNAdam.

- Interaction with densification strategies is unstudied: how AW-SGLD/LQNAdam integrate with principled densification (RevisingGS, AbsGS) or frequency regularization (FreGS) is not evaluated; potential synergies or conflicts remain unknown.

- Occlusion-order and rasterization stability: moving Gaussians using quasi-Newton directions may alter occlusion ordering; no analysis of rendering stability or artifacts induced by larger curvature-informed steps.

- Failure-case analysis is missing: no qualitative/quantitative identification of scenes where AW-SGLD or LQNAdam underperform, induce artifacts, or fail to escape local modes.

- Evaluation fairness and reproducibility: baselines often use their default settings while Opt3DGS aligns with 3DGSMCMC; no uniform training budget across all methods; code, seed management, and full training scripts/configs are not provided for reproducibility.

- Metrics limited to PSNR/SSIM/LPIPS: no geometry accuracy (e.g., depth/mesh errors), temporal consistency (for dynamic scenes), or downstream task metrics (e.g., SLAM trajectory quality); no user study or perceptual evaluation beyond LPIPS.

- Adaptive exploration intensity is not developed: AW-SGLD relies on a fixed flattening coefficient and fixed binning; no method to automatically modulate exploration (noise, flattening degree) based on measured multi-modality or mode transitions.

- Temperature is introduced but not instantiated: the role, selection, and sensitivity of the temperature in Eq. (6) are not specified or ablated, leaving practitioners without guidance on how to set it.

- L-BFGS history size choice is fixed: no study on how the history size affects convergence, stability, or compute cost across scenes; adaptive strategies remain unexplored.

Practical Applications

Immediate Applications

Below is a concise list of applications that can be deployed now using Opt3DGS’s optimization framework, along with sectors, potential tools/workflows, and assumptions that may affect feasibility.

- Media and Entertainment (VFX, Games, Virtual Production)

- Application: Higher-fidelity, artifact-resistant novel view synthesis for scanned environments and assets with existing 3DGS pipelines.

- Tools/Workflows: Integrate Opt3DGS as a drop-in optimizer (AW-SGLD for exploration + LQNAdam for convergence) into 3DGS-based asset pipelines; Unity/Unreal plugins for photogrammetry-to-render workflows; cloud training backends for studio pipelines.

- Assumptions/Dependencies: Modern GPU availability (e.g., RTX-class); minimal extra training time; tuning the flattening coefficient and energy bins; availability of 3DGS codebase and CUDA.

- XR/AR Content Capture (Consumer and Enterprise)

- Application: Robust reconstruction from handheld captures with fewer SfM dependencies thanks to improved random initialization performance.

- Tools/Workflows: Capture app uploads images → Opt3DGS training in cloud → real-time viewing/rendering in AR; automated quality checks using PSNR/SSIM/LPIPS.

- Assumptions/Dependencies: Sufficient image coverage; server-side GPUs; privacy/compliance for user data; standardized pipelines to convert splats to mobile-capable assets.

- Robotics and SLAM

- Application: Improved scene coverage and reduced over-clustering in Gaussian Splatting SLAM backends for navigation and mapping.

- Tools/Workflows: Replace or augment optimizer in GS-SLAM systems; use AW-SGLD to escape local optima in low-texture or repetitive environments; LQNAdam for precise convergence of positional attributes.

- Assumptions/Dependencies: Real-time constraints may require tuning noise schedules and batch sizes; robustness validated on target hardware; compatibility with SLAM loop closures and keyframe selection.

- AEC/BIM, Surveying, and Geomatics

- Application: More reliable photogrammetry of buildings and infrastructure with higher fidelity under limited Gaussian budgets.

- Tools/Workflows: Integrate Opt3DGS into photogrammetry tools (e.g., via plugins or custom scripts); automated pipeline steps for densification control; QA dashboards for energy-bin coverage.

- Assumptions/Dependencies: Adequate image baselines; GPU compute; conversion workflows to meshes or point clouds when required.

- Cultural Heritage Digitization

- Application: Better reconstruction of fine details and distant structures, reducing artifacts in large or complex scenes.

- Tools/Workflows: Batch processing of museum/artifacts with Opt3DGS; cloud-hosted render services for stakeholders; archival rendering pipelines.

- Assumptions/Dependencies: Image capture quality; institutional compute provisioning; long-term storage and compatible rendering formats.

- Real Estate and E-commerce Visualization

- Application: Faster, more robust creation of photorealistic walkthroughs and product scenes from consumer-grade captures.

- Tools/Workflows: Web-based pipeline—upload → Opt3DGS train → deploy views on listing/product pages; automated hyperparameter presets per scene type.

- Assumptions/Dependencies: Server-side GPU; pipeline standardization; compression/transcoding to client-friendly formats.

- Software and Developer Tools (Graphics/Rendering)

- Application: Opt3DGS as an optimizer library for 3DGS frameworks (e.g., kerbl’s 3DGS, 3DGSMCMC variants), improving rendering metrics without changing representation.

- Tools/Workflows: Python/CUDA SDK; configuration templates for AW-SGLD (energy partitioning, flattening coefficient) and LQNAdam (L-BFGS history size K).

- Assumptions/Dependencies: API compatibility; maintained bindings; documentation/examples; reproducibility on common datasets.

- Academic Research (Vision, Rendering, Optimization)

- Application: Benchmarking non-convex optimization strategies (adaptive exploration + curvature-aware exploitation) in explicit differentiable rendering.

- Tools/Workflows: Reproducing results on MipNeRF360, Tanks & Temples, DeepBlending; ablation studies on the flattening coefficient, energy binning, and switch iteration; integration in coursework and research repos.

- Assumptions/Dependencies: Dataset licenses; open-source code availability; baseline parity in resolution and Gaussian count.

- Cloud Rendering Services

- Application: Service-level upgrade for photorealistic reconstructions with better PSNR/SSIM/LPIPS across challenging conditions (high resolution, fewer Gaussians).

- Tools/Workflows: Managed training queues using Opt3DGS; autoscaling GPU fleets; SLA around quality metrics; customer-facing API.

- Assumptions/Dependencies: Cost controls; model monitoring; auto-tuning for diverse scene types.

- Quality Assurance and Production Engineering

- Application: Reduced reliance on heuristic ADC rules and more principled posterior exploration; fewer failure modes in complex scenes.

- Tools/Workflows: Pipeline checks for mode-trapping; energy histogram monitoring; fallback strategies with AW-SGLD reweighting.

- Assumptions/Dependencies: Logging/observability in training; team familiarity with stochastic optimization; minimal additional runtime overhead.

Long-Term Applications

These applications will benefit from further research, scaling, or engineering work before broad deployment.

- City-Scale Digital Twins and Urban Planning

- Application: Applying Opt3DGS to extremely large scenes (e.g., VastGaussian, CityGaussian) for municipal-scale visualization and planning.

- Tools/Workflows: Distributed training (multi-GPU/cluster) with adaptive exploration; hierarchical energy binning; streaming/rendering services for stakeholders.

- Assumptions/Dependencies: Data governance and privacy policies; open standards for splat representations; cluster scheduling and memory handling.

- Dynamic Scene Reconstruction (4D Gaussian Splatting)

- Application: Extending AW-SGLD to temporal dimensions for robust exploration in dynamic scenes; LQNAdam for motion and deformation parameters.

- Tools/Workflows: Temporal energy partitioning; curvature-aware updates across time; specialized noise schedules for motion.

- Assumptions/Dependencies: Algorithmic generalization to 4D; real-time constraints; validation on dynamic datasets.

- On-Device Training and Edge Deployment

- Application: Mobile or embedded training for AR/robotics using lighter optimizers and sort-free rendering techniques.

- Tools/Workflows: Hardware-aware implementations of AW-SGLD; memory-efficient L-BFGS variants; model compression and quantization.

- Assumptions/Dependencies: Energy consumption limits; minimal RAM/VRAM; co-design with mobile GPUs/NPUs.

- Autonomous Driving Simulation and HD Maps

- Application: Camera-only reconstruction of routes and assets with improved fidelity under difficult conditions; synthetic data generation for training.

- Tools/Workflows: Continuous mapping pipelines; integration with simulation engines; automated QA of rendering metrics for safety-critical usage.

- Assumptions/Dependencies: Regulatory compliance; large-scale data processing; pipeline reliability guarantees.

- Healthcare Imaging (Pre-Operative Planning, Endoscopy)

- Application: Patient-specific 3D reconstructions with better convergence in challenging scenes (low texture, specularities).

- Tools/Workflows: Clinical pipelines integrating Opt3DGS; audit trails and quality metrics; human-in-the-loop validation.

- Assumptions/Dependencies: Clinical validation and certification; privacy/security; domain adaptation to medical imagery.

- Energy and Utilities Inspection (Industrial Assets)

- Application: Reconstruction of plants, substations, and pipelines from limited viewpoints with improved robustness.

- Tools/Workflows: Drone capture → Opt3DGS-based training → inspection dashboards with photorealistic navigation.

- Assumptions/Dependencies: Safety and flight regulations; standardized data formats; integration with asset management systems.

- Robotics: Robust Mapping in Adverse Conditions

- Application: Enhanced exploration reduces failure in low-texture/reflective areas during autonomous mapping; better coverage with constrained Gaussian budgets.

- Tools/Workflows: Adaptive optimizer settings tied to scene confidence; hybrid strategies with SLAM front-ends; field validation.

- Assumptions/Dependencies: Real-time adaptation strategies; compatibility with multi-sensor fusion; robustness to motion blur.

- General-Purpose Optimizers for Non-Convex ML Problems

- Application: Porting the flat-histogram AW-SGLD + curvature-aware local updates to other explicit rendering or combinatorial ML tasks.

- Tools/Workflows: Optimizer package with energy-binning schedulers; LQNAdam extensions beyond positional parameters; auto-tuning via meta-learning.

- Assumptions/Dependencies: Theoretical guarantees for broader tasks; stable defaults across domains; community adoption.

- Production-Grade Auto-Tuning and Monitoring

- Application: Automated selection of the flattening coefficient, energy bins, switch iteration, and noise schedule for diverse scene types and budgets.

- Tools/Workflows: Meta-learning or Bayesian optimization for hyperparameters; monitoring dashboards; alarms for mode-trapping or over-clustering.

- Assumptions/Dependencies: Sufficient telemetry; robust priors; safe optimization in production.

- Standards and Policy for Photorealistic 3D City Models

- Application: Leveraging improved fidelity to inform standards for municipal digital twins, open data sharing, and accessibility.

- Tools/Workflows: Cross-agency collaborations; standardized quality metrics (PSNR/SSIM/LPIPS) for procurement and QA.

- Assumptions/Dependencies: Policy frameworks and funding; public data governance; interoperability across platforms and vendors.

Glossary

- Adam optimizer: A first-order stochastic optimization method that uses adaptive estimates of lower-order moments. "feed it into the Adam optimizer"

- Adaptive Density Control (ADC): A heuristic procedure in 3DGS that clones, splits, and prunes Gaussians based on fixed thresholds to control density. "adaptive density control (ADC) step"

- Adaptive Weighted SGLD (AW-SGLD): A modified SGLD scheme that flattens the posterior to improve global exploration and escape local optima. "we introduce Adaptive Weighted SGLD (AW-SGLD)."

- Alpha blending: A compositing technique that accumulates color contributions using per-primitive opacity along a ray. "the final pixel color is computed using alpha blending:"

- Bayes' theorem (written "Bayesian theorem" in the paper): The rule that a posterior is proportional to the likelihood times the prior; the paper invokes it to explain how clustered Gaussians bias sampling. "Based on Bayesian theorem, such clustering creates sampling bias"

- Conical frustum sampling: A ray-sampling strategy that reduces aliasing by integrating over conical frusta instead of points. "Mip-NeRF360 address aliasing with conical frustum sampling"

- Covariance matrix: A matrix specifying the spatial extent and orientation of a Gaussian primitive via scale and rotation. "a covariance matrix that determines the spatial extent and orientation of the Gaussian"

- Curvature information: Second-order characteristics of the loss surface that guide more precise update directions. "leverages curvature information for precise and efficient convergence."

- Densification: Increasing the number or density of primitives to better cover scene regions. "Densification strategies have also been actively explored:"

- Eigenvalues: Values characterizing matrix scaling along principal directions, used here for regularizing Gaussian covariance. "\left|\sqrt{\mathrm{eig}_j(\Sigma_i)}\right|_1"

- Energy landscape: The structure of the loss surface (posterior energy) with multiple modes and barriers affecting optimization. "The posterior energy landscape is often highly multi-modal"

- Flat-histogram principle: A strategy to flatten sampled energies across bins, facilitating traversal of modes. "inspired by the flat-histogram principle"

- Gaussian primitives: Explicit scene elements parameterized by position, opacity, appearance, and covariance, used for rendering. "modeling the radiance field of scenes using discrete Gaussian primitives."

- Indicator function: A function that returns 1 if a condition holds and 0 otherwise, used in piecewise definitions. "where is the indicator function"

- L-BFGS (Limited-memory Broyden–Fletcher–Goldfarb–Shanno): A quasi-Newton optimizer that estimates curvature using a limited history without storing full Hessians. "We apply the limited-memory Broyden-Fletcher-Goldfarb-Shanno (L-BFGS) algorithm"

- Levenberg–Marquardt (LM) algorithm: A second-order method blending gradient descent and Gauss–Newton for non-linear least squares. "or the Levenberg–Marquardt (LM) algorithm to accelerate optimization"

- Line search: A procedure to choose step size along a search direction to ensure sufficient decrease. "L-BFGS typically uses a line search to determine the step size."

- LPIPS (Learned Perceptual Image Patch Similarity): A perceptual metric that measures visual similarity using deep features. "we adopt three widely used visual quality metrics: PSNR, SSIM, and LPIPS"

- Local Quasi-Newton Direction-guided Adam (LQNAdam): An optimizer that feeds L-BFGS directions into Adam to obtain curvature-aware yet robust updates. "We propose a Local Quasi-Newton Direction-guided Adam Optimizer (LQNAdam)."

- Markov Chain Monte Carlo (MCMC): A class of algorithms that sample from complex distributions via Markov chains. "reformulates 3DGS as a Markov Chain Monte Carlo (MCMC) process"

- Neural Radiance Fields (NeRF): An implicit representation that models volumetric scene radiance with neural networks for view synthesis. "Unlike implicit predecessors such as Neural Radiance Fields (NeRF)"

- Opacity-based sampling: Selecting positions or primitives according to their opacity-induced probability distribution to guide addition/removal. "normalized opacity-based probability distribution"

- Posterior distribution: The target probability over configurations given data, which optimization seeks to explore and maximize. "AW-SGLD flattens the posterior distribution"

- PSNR (Peak Signal-to-Noise Ratio): A metric quantifying reconstruction fidelity by comparing pixel intensities. "we adopt three widely used visual quality metrics: PSNR, SSIM, and LPIPS"

- Quaternion: A four-dimensional representation for rotations, used to parameterize Gaussian orientation. "(represented as a quaternion)"

- Quasi-Newton methods: Optimization techniques that approximate second-order information without computing full Hessians. "Unlike full Quasi-Newton methods, it avoids the need for computationally expensive line searches."

- Rasterization: A rendering process that projects primitives onto the image plane in parallel for efficiency. "renders them via parallel rasterization"

- SGLD (Stochastic Gradient Langevin Dynamics): An optimizer that adds Gaussian noise to SGD updates to sample from a posterior. "3DGSMCMC employs Stochastic Gradient Langevin Dynamics (SGLD) for parameter updates:"

- SGHMC (Stochastic Gradient Hamiltonian Monte Carlo): A momentum-based stochastic sampler for improved exploration in high-dimensional posteriors. "SSS adopts stochastic gradient Hamiltonian Monte Carlo for enhanced exploration."

- SGMCMC (Stochastic Gradient Markov Chain Monte Carlo): A family of stochastic samplers that use minibatch gradients to enable scalable Bayesian updates. "Methods based on Stochastic Gradient Markov Chain Monte Carlo (SGMCMC) unify the update, addition, and removal of Gaussian primitives"

- Spherical harmonic coefficients: Basis-function coefficients modeling view-dependent appearance on the sphere. "view-dependent spherical harmonic coefficients for appearance modeling"

- SSIM (Structural Similarity Index Measure): A perceptual similarity metric comparing structural information between images. "we adopt three widely used visual quality metrics: PSNR, SSIM, and LPIPS"

- Stochastic Approximation (SA): An iterative method to estimate unknown quantities (e.g., weights) from noisy observations during training. "we employ a stochastic approximation (SA) approach"

- Temperature parameter: A scalar controlling the sharpness of the target distribution in energy-based formulations. "and is the temperature parameter."

- Transmittance: The accumulated fraction of light not absorbed by preceding primitives along a ray. "accumulated transmittance"

- Volumetric rendering: Rendering technique that integrates color and opacity along rays through a volume. "using volumetric rendering to synthesize novel views."
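The Covariance matrix and Quaternion entries above fit together in a short computation. The sketch below uses the standard 3DGS construction, in which a Gaussian's covariance is built from a rotation matrix R (derived from a unit quaternion) and a diagonal scale matrix S as R S Sᵀ Rᵀ; the function names and the (w, x, y, z) component order are assumptions made for illustration.

```python
import numpy as np

def quaternion_to_rotation(q):
    """Rotation matrix from a quaternion given as (w, x, y, z)."""
    w, x, y, z = np.asarray(q, dtype=float) / np.linalg.norm(q)
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ])

def gaussian_covariance(scale, q):
    """Covariance R S S^T R^T from per-axis scales and a rotation quaternion."""
    M = quaternion_to_rotation(q) @ np.diag(scale)
    return M @ M.T

# Hypothetical blob with axis lengths 1, 2, 3, rotated 90 degrees about z.
half_angle = np.pi / 4
cov = gaussian_covariance(
    scale=[1.0, 2.0, 3.0],
    q=[np.cos(half_angle), 0.0, 0.0, np.sin(half_angle)],
)
```

Building the covariance this way keeps it symmetric and positive semi-definite no matter what values the optimizer assigns to the scales and the quaternion.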
