Birth of a Painting: Differentiable Brushstroke Reconstruction (2511.13191v1)

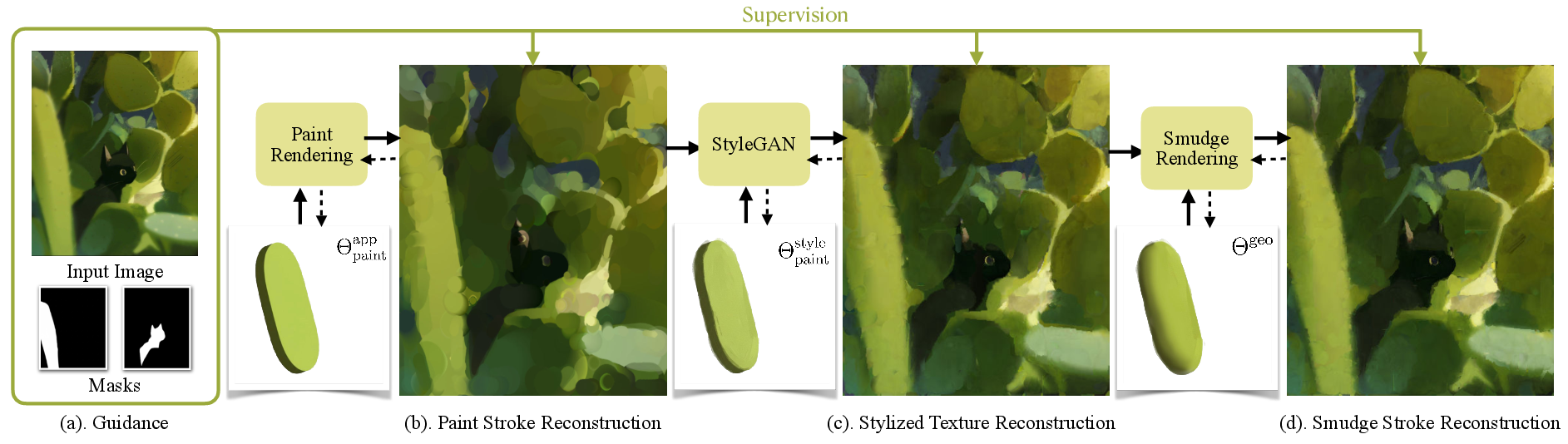

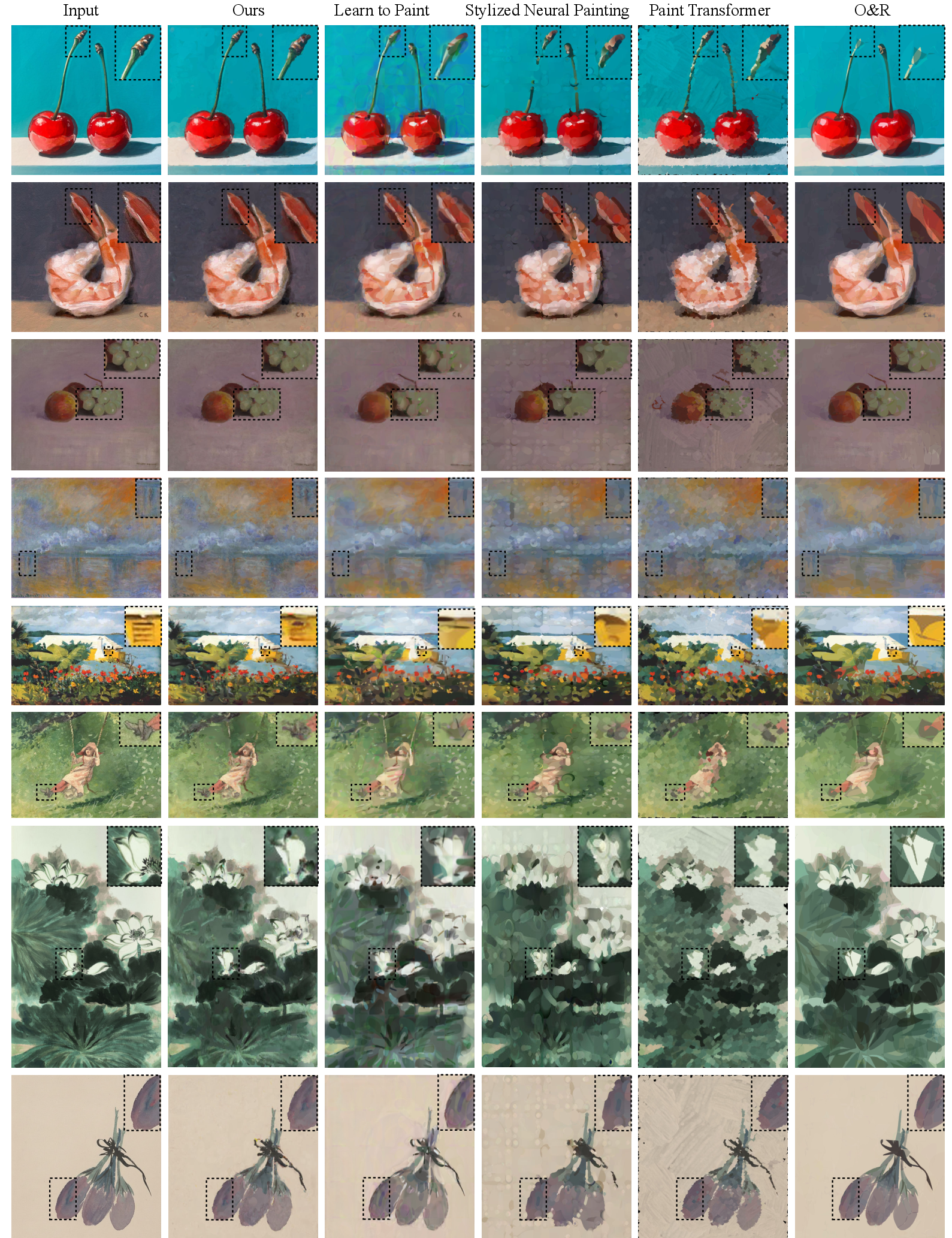

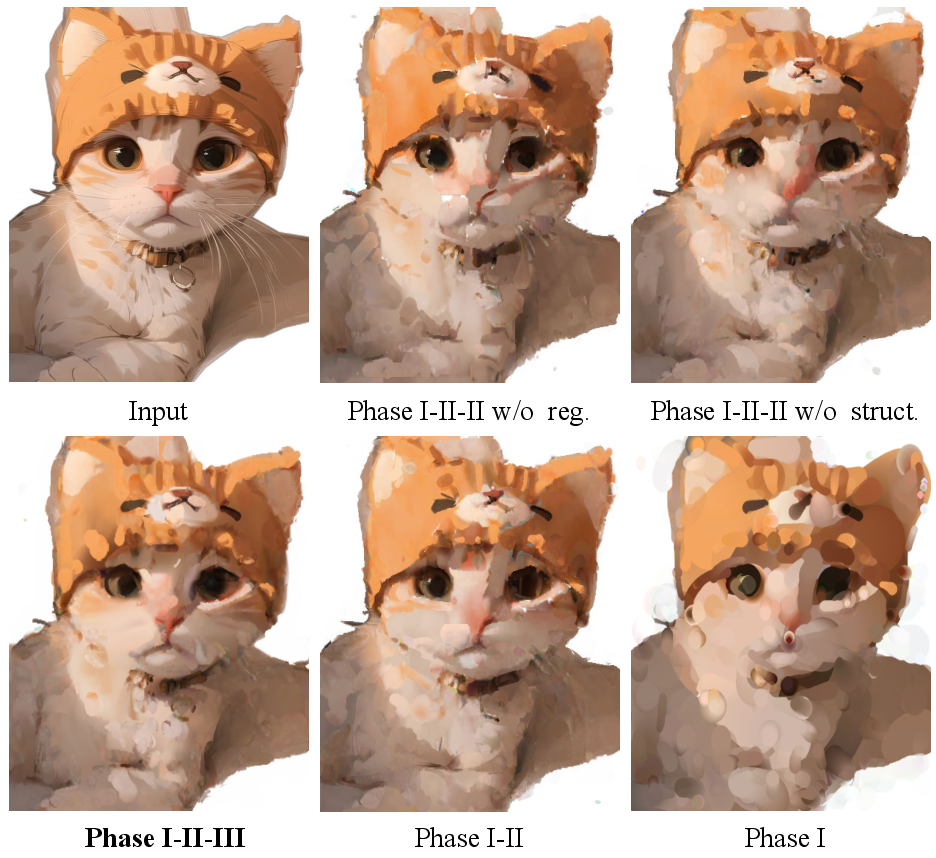

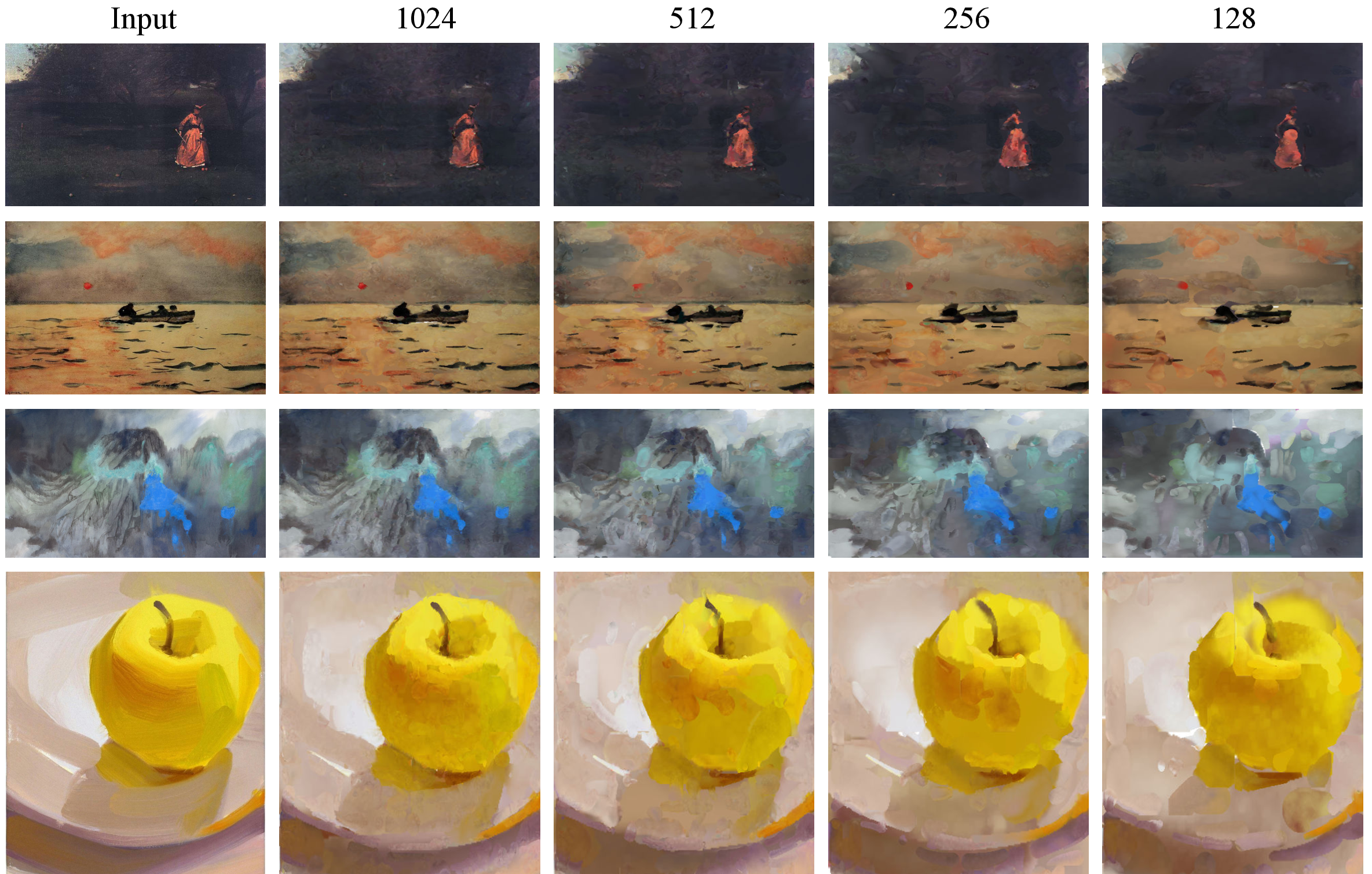

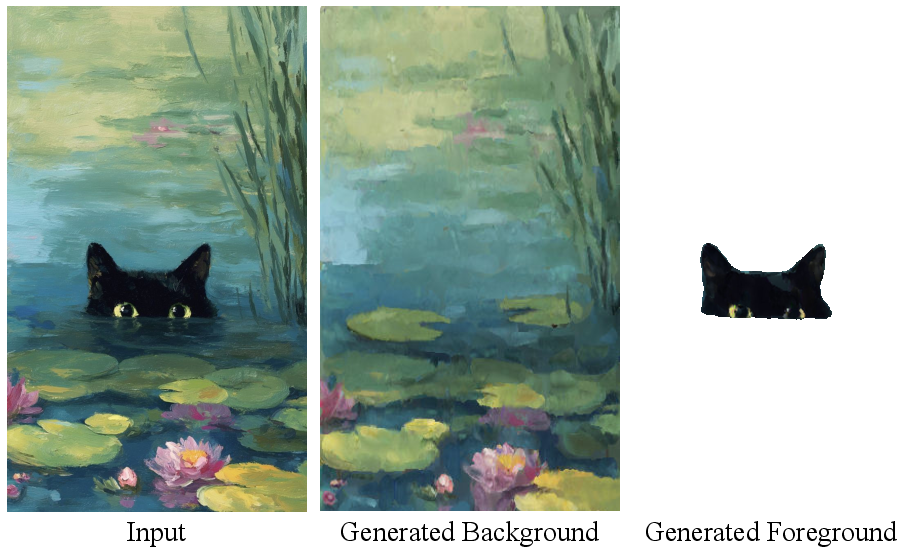

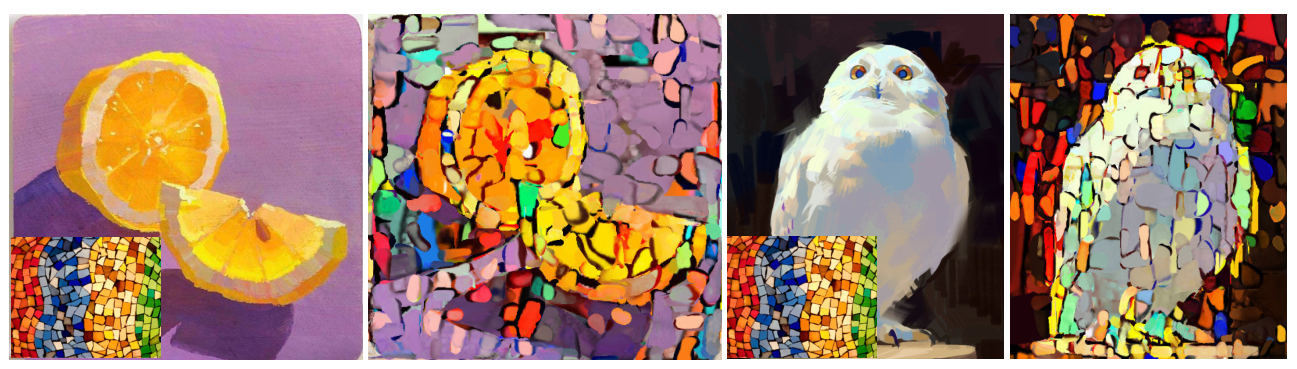

Abstract: Painting embodies a unique form of visual storytelling, where the creation process is as significant as the final artwork. Although recent advances in generative models have enabled visually compelling painting synthesis, most existing methods focus solely on final image generation or patch-based process simulation, lacking explicit stroke structure and failing to produce smooth, realistic shading. In this work, we present a differentiable stroke reconstruction framework that unifies painting, stylized texturing, and smudging to faithfully reproduce the human painting-smudging loop. Given an input image, our framework first optimizes single- and dual-color Bezier strokes through a parallel differentiable paint renderer, followed by a style generation module that synthesizes geometry-conditioned textures across diverse painting styles. We further introduce a differentiable smudge operator to enable natural color blending and shading. Coupled with a coarse-to-fine optimization strategy, our method jointly optimizes stroke geometry, color, and texture under geometric and semantic guidance. Extensive experiments on oil, watercolor, ink, and digital paintings demonstrate that our approach produces realistic and expressive stroke reconstructions, smooth tonal transitions, and richly stylized appearances, offering a unified model for expressive digital painting creation. See our project page for more demos: https://yingjiang96.github.io/DiffPaintWebsite/.


Explain it Like I'm 14

Overview: What this paper is about

This paper is about teaching a computer to “re-create” a painting the way a human might make it—stroke by stroke, with smooth blending and realistic textures. Instead of only copying the final image, the system figures out the actual brushstrokes, how they’re placed, how colors blend, and how the texture looks in styles like watercolor, oil, ink, or digital art. The key idea is a unified, smart painting pipeline that combines painting, texturing, and smudging, and can adjust itself automatically to match a given artwork.

Key objectives and questions

To make the painting process realistic and useful, the paper sets out to answer a few simple questions:

- How can we represent each brushstroke so a computer can change its shape, color, and texture?

- How can we render strokes efficiently and allow smooth shading, like when a painter blends colors?

- How can we generate stroke textures (like watercolor blooms or oil paint thickness) across many styles without training a separate model for each style?

- How can we reconstruct the painting process in a way that is faithful to the original image and easy to edit or learn from?

How the research works (in everyday terms)

Think of the system as a three-step loop that mimics how artists paint:

- Painting strokes (placing color)

- The system represents each stroke as a smooth, bendy line called a Bézier curve.

- A Bézier curve is like drawing with a flexible ruler: you set a start point, an end point, and one or more “control points” that bend the path. The paper uses cubic curves, which have two such control points.

- Along this path, it places many tiny “stamps” of color (little circles of paint) to build the stroke.

- “Differentiable” means the computer can automatically tweak stroke settings (like position, width, or color) to better match the target image—like getting feedback and improving with each try.

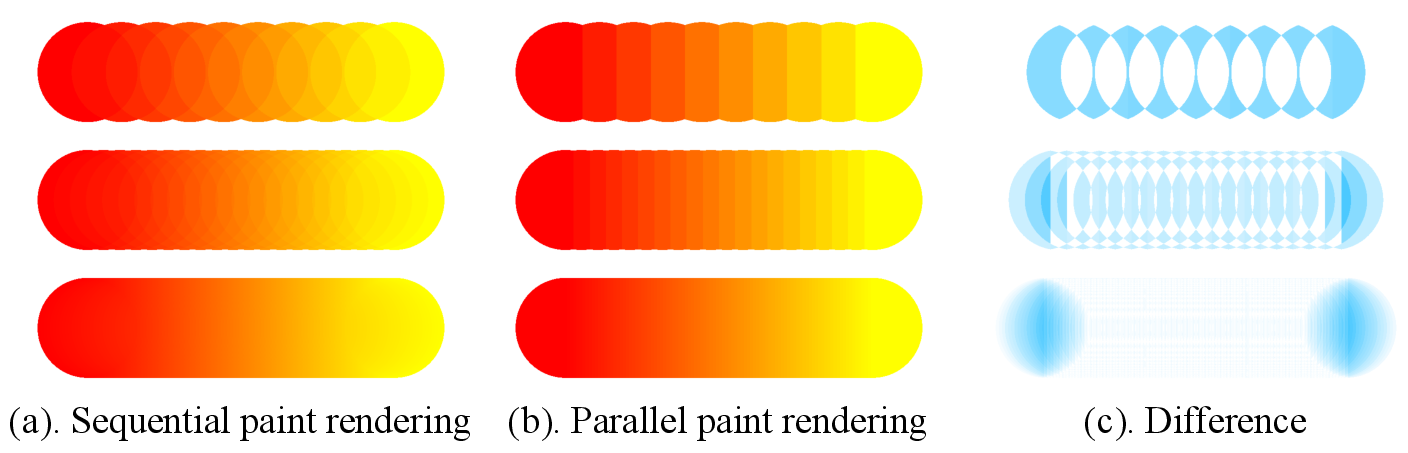

- “Parallel” rendering means it can process many pixels at once, making it faster (like having multiple helpers painting simultaneously).

- Adding style and texture (making it look like watercolor, oil, ink, etc.)

- Beyond flat color, real paintings have textures: watercolor has soft spreads; oil can look thick and glossy; ink can have crisp edges.

- The system uses a texture generator (StyleGAN) to produce these appearances based on the stroke’s shape. You can think of the “style code” as a secret recipe that tells the computer how the paint should look on the canvas.

- The geometry (the stroke’s path and width) stays fixed while the texture and material are adjusted to match the target style.

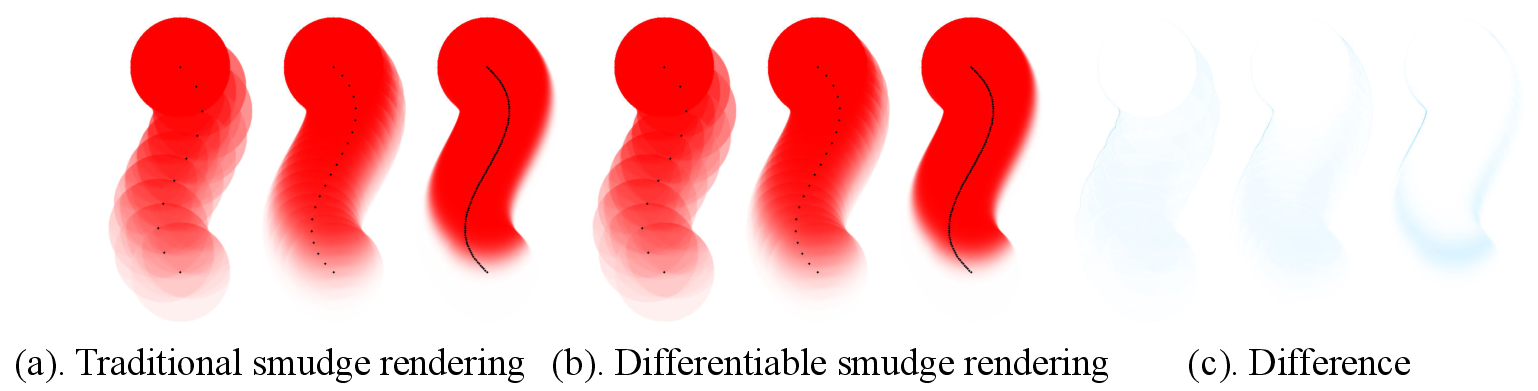

- Smudging (blending colors smoothly)

- Real painters often blend or smudge colors to create soft shadows and smooth transitions.

- The system includes a “smudge renderer” that mimics dragging a brush or finger across the paint to blend colors.

- It introduces a clever, one-shot setup so the blending can be calculated efficiently and still look natural, with color carried along the stroke and mixed on the canvas.
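The smudging step above can be pictured as a small recurrence along the brush path. The sketch below is only an illustration under stated assumptions: the update rule, the 1-D grayscale canvas, and the names `smudge_path`, `alpha_c` (canvas blending) and `alpha_s` (self-retention) are hypothetical and inspired by the coefficient descriptions in the glossary. It is a plain sequential loop, not the paper's one-shot formulation.

```python
def smudge_path(canvas, path, alpha_c=0.5, alpha_s=0.7):
    """Drag a brush over `canvas` (list of floats) along `path` (list of indices).

    Assumed rule (hypothetical, for illustration only):
      canvas' = (1 - alpha_c) * canvas + alpha_c * brush
      brush'  = alpha_s * brush + (1 - alpha_s) * canvas
    """
    out = list(canvas)
    brush = out[path[0]]  # the brush picks up the colour where the drag starts
    for idx in path[1:]:
        under = out[idx]  # colour on the canvas before the brush arrives
        # The canvas receives a share of the colour carried by the brush.
        out[idx] = (1.0 - alpha_c) * under + alpha_c * brush
        # The brush keeps part of its load and picks up the rest from the canvas.
        brush = alpha_s * brush + (1.0 - alpha_s) * under
    return out


canvas = [1.0, 1.0, 0.0, 0.0, 0.0]  # bright paint on the left, bare canvas on the right
print(smudge_path(canvas, path=[1, 2, 3, 4]))
```

Dragging from the bright region into the bare region leaves a trail that fades step by step, which is the kind of soft transition smudging is meant to produce.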

Coarse-to-fine strategy

- The computer starts with big, simple strokes to capture the main shapes (coarse), then gradually adds smaller strokes and details (fine).

- This is similar to how artists block in large areas first, then refine details.
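One way to picture the coarse-to-fine idea is a grid that gets finer at each level, with new strokes aimed at the cells where the canvas differs most from the target. This is a minimal sketch, assuming an L1 error map for placement; the grid sizes, the `strokes_per_level` value, and the function names are hypothetical, and a real loop would optimize and re-render strokes between levels.

```python
def cell_errors(target, canvas, grid):
    """Mean absolute (L1) error per cell of a grid x grid partition."""
    h, w = len(target), len(target[0])
    errors = {}
    for gy in range(grid):
        for gx in range(grid):
            y0, y1 = gy * h // grid, (gy + 1) * h // grid
            x0, x1 = gx * w // grid, (gx + 1) * w // grid
            total = sum(abs(target[y][x] - canvas[y][x])
                        for y in range(y0, y1) for x in range(x0, x1))
            errors[(gy, gx)] = total / ((y1 - y0) * (x1 - x0))
    return errors


def coarse_to_fine_plan(target, canvas, grids=(1, 2, 4), strokes_per_level=2):
    """List, per grid level, the cells that most need new strokes.

    Note: the canvas is not updated between levels here; this only shows
    how the placement targets shrink as the grid is refined.
    """
    plan = []
    for grid in grids:
        errs = cell_errors(target, canvas, grid)
        worst = sorted(errs, key=errs.get, reverse=True)[:strokes_per_level]
        plan.append((grid, worst))
    return plan


# 4x4 grayscale target with a bright bottom-right quadrant, empty canvas.
target = [[0.0, 0.0, 0.0, 0.0],
          [0.0, 0.0, 0.0, 0.0],
          [0.0, 0.0, 1.0, 1.0],
          [0.0, 0.0, 1.0, 1.0]]
canvas = [[0.0] * 4 for _ in range(4)]
for grid, cells in coarse_to_fine_plan(target, canvas):
    print(grid, cells)
```

At the coarsest level there is only one cell, so the first strokes cover the whole image; finer levels then focus on the quadrant that still has error.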

Guidance and checks

- The system uses several simple “checks” to keep it realistic:

- Pixel and perceptual checks: Does the stroke match the colors and overall look of the target image?

- Edge and direction checks: Do strokes follow the image’s contours and edges?

- Segmentation checks: Do strokes stay within the right object areas (so paint doesn’t spill across unrelated parts)?

- Regularization: Prevents weird strokes (like vanishingly small ones) and helps optimization stay stable.
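The checks above are typically combined into one number that the optimizer tries to reduce. The sketch below shows that pattern for three of them (pixel, direction, and a small-stroke regularizer). The exact formulas, the weights, and all function names are assumptions for illustration; the perceptual and segmentation checks are left out because they need pretrained networks.

```python
import math


def pixel_l1(render, target):
    """Pixel check: mean absolute difference between two grayscale images."""
    diffs = [abs(r - t) for rr, tr in zip(render, target) for r, t in zip(rr, tr)]
    return sum(diffs) / len(diffs)


def direction_penalty(stroke_dir, edge_dir):
    """Direction check: 0 if the stroke runs along the edge, 1 if perpendicular."""
    dot = stroke_dir[0] * edge_dir[0] + stroke_dir[1] * edge_dir[1]
    norm = math.hypot(*stroke_dir) * math.hypot(*edge_dir)
    return 1.0 - abs(dot) / norm


def area_penalty(stroke_area, min_area=4.0):
    """Regularizer: grows as a stroke shrinks below `min_area` (hypothetical threshold)."""
    return max(0.0, min_area - stroke_area) / min_area


def total_loss(render, target, stroke_dir, edge_dir, stroke_area,
               weights=(1.0, 0.1, 0.01)):
    """Weighted sum of the individual checks; the weights are illustrative only."""
    w_pix, w_dir, w_area = weights
    return (w_pix * pixel_l1(render, target)
            + w_dir * direction_penalty(stroke_dir, edge_dir)
            + w_area * area_penalty(stroke_area))


loss = total_loss(render=[[0.0, 1.0]], target=[[0.0, 0.5]],
                  stroke_dir=(1.0, 0.0), edge_dir=(0.0, 1.0), stroke_area=2.0)
print(loss)
```

Each term pulls the strokes in a different direction, so the weights decide which check wins when they disagree.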

Main findings and why they matter

- Realistic strokes and shading: The system reconstructs paintings with smooth blending and expressive textures, closely matching watercolor, oil, ink, and digital styles.

- Speed and efficiency: Their parallel stroke renderer is up to about 7× faster than a traditional sequential method, while keeping accuracy.

- Unified across styles: Unlike older methods that train separate models for each style, this approach uses one framework to produce multiple styles by adjusting the “style code.”

- Better quality scores: Across many test images, the method outperforms popular baselines on standard image quality metrics (PSNR, SSIM, LPIPS, and a ResNet-based feature distance), meaning the results are closer to the original both pixel by pixel and to human eyes.

- Practical tools:

- Layer-aware painting: The system can separate a scene into layers (like foreground vs. background), making editing much easier.

- Style transfer: It can keep the content and structure from one image while applying the texture and color feel of another style image.
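To see why the stroke renderer mentioned in the findings can run in parallel, it helps to look at a per-pixel rule where no pixel depends on another. The sketch below colors each pixel from its nearest stamp, assuming circular stamps and a signed distance (negative inside the disc). The function name, the tuple layout, and the hard inside/outside test are hypothetical simplifications; a differentiable renderer would need a soft edge instead.

```python
import math


def render_nearest_stamp(stamps, width, height, background=0.0):
    """Render stamps given as (cx, cy, radius, colour) onto a height x width grid.

    Every pixel is computed independently of the others, so the two loops
    below stand in for what a GPU would evaluate in parallel.
    """
    def signed_distance(x, y, stamp):
        # Negative inside the stamp's disc, positive outside.
        return math.hypot(x - stamp[0], y - stamp[1]) - stamp[2]

    image = []
    for y in range(height):
        row = []
        for x in range(width):
            best = min(stamps, key=lambda s: signed_distance(x, y, s))
            inside = signed_distance(x, y, best) <= 0.0
            row.append(best[3] if inside else background)
        image.append(row)
    return image


stamps = [(1.0, 1.0, 1.0, 0.2), (4.0, 1.0, 1.0, 0.9)]
for row in render_nearest_stamp(stamps, width=6, height=3):
    print(row)
```

A sequential renderer would instead composite stamp after stamp, so each step has to wait for the previous one.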

Why this is important

- It moves beyond simple “final image” generation to a process-aware reconstruction with actual strokes and blending. That makes the output useful for learning, editing, and even robotic painting.

- The unified representation makes it more flexible and scalable across styles and images.

Implications and impact

- Art education and tutorials: Students can learn how a painting was built step by step, not just see the finished artwork.

- Digital painting software: The techniques could be integrated to create smarter brushes that blend naturally and render efficiently.

- Style and content editing: Artists and designers can easily swap styles or tweak layers while preserving structure.

- Robotics and automation: Robots or systems that paint could use the stroke instructions to replicate human-like painting processes.

- Research and creative tools: Provides a bridge between generative AI and human painting workflows, potentially improving tools for animation, museums, and art restoration.

In short, this work gives computers a more “artist-like” way to paint—placing strokes, adding texture, and smudging—so the reconstructed artworks look and feel more like the real thing, across many styles, and at practical speeds.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a single, concrete list of what remains missing, uncertain, or unexplored in the paper. Each item is phrased to be directly actionable for future research.

- End-to-end scalability is not reported: what are full reconstruction times, memory footprints, and convergence behavior for high-resolution canvases (e.g., 4K+) and large stroke counts (e.g., 10k+ strokes) on commodity GPUs?

- The parallel paint renderer uses nearest-stamp selection (argmin) which is non-differentiable at boundaries; investigate soft assignment (e.g., softmin or kernel-weighted blending) to improve gradient stability and avoid Voronoi-like artifacts.

- Stamps are sampled uniformly in the Bezier parameter t, not by arc length; quantify spacing bias and evaluate arc-length–uniform sampling to prevent uneven stamp density on curved segments.

- Sequential alpha compositing is replaced by nearest-stamp coloring; measure aesthetic and physical differences in color mixing versus traditional layered compositing, especially for overlapping stamps and semi-transparency.

- The smudge operator is a simplified recursive blend with constant coefficients; extend it to physically grounded pigment/water/oil models (e.g., diffusion–advection, capillarity, drying, paper grain absorption) and validate against real media (oil, watercolor, ink).

- Brush dynamics are under-modeled (no pressure, tilt, speed, bristle anisotropy, dry-brush effects); incorporate time-varying brush states and analyze their impact on stroke geometry and texture.

- Stroke geometry is restricted to a single cubic Bezier with endpoint radii and linear width interpolation; evaluate richer primitives (variable-width profiles, piecewise curves, branching, calligraphic strokes, textured outlines).

- Only dual-color strokes are supported; explore multi-color and layered strokes (glazing, impasto) and their interaction with smudging and texture synthesis.

- StyleGAN-based texture synthesis is trained on 128×128 patches; assess multi-scale texture generators (patch pyramids, tileable textures) to avoid repetition and to preserve meso-/macro-scale style features on large strokes.

- The conditioning of the texture generator on “geometry” is underspecified; formalize what geometric features are fed (e.g., curvature, width profile, orientation, region semantics) and perform ablations on conditioning design.

- Style control and disentanglement are not characterized; establish interpretable controls (e.g., paint thickness, paper grain, brush roughness) and evaluate latent traversals for consistency and style fidelity across domains.

- Generalization beyond the training distribution is not tested; quantify performance on out-of-domain styles and mixed-media works, and study domain adaptation/robustness strategies.

- Evaluation dataset is small (80 images) and biased (WikiArt + curated digital); expand to larger, more diverse, and process-annotated datasets (including scanned physical works with known media and techniques).

- No user study or artist-in-the-loop evaluation; conduct perceptual studies with artists to assess realism, stylistic fidelity, and usefulness for teaching or creative workflows.

- Stroke ordering is not explicitly reconstructed (renderer is parallel); devise methods to infer plausible stroke sequences (temporal plans) and evaluate their utility for educational playback and robotic painting.

- Segmentation-based constraints depend on SAM and VLM inpainting; analyze failure cases (transparent/semi-transparent regions, fine structures) and propose robust strategies for ambiguous or overlapping content.

- The decision to omit smudging at the final grid level to “preserve edge sharpness” is heuristic; develop adaptive region-aware smudging policies that balance crisp edges and smooth shading.

- No comparison to smooth-shading vector approaches (diffusion curves, gradient meshes); benchmark against these baselines to quantify benefits of open-curve stroke modeling.

- Lack of stroke-level metrics; introduce measures for stroke geometry accuracy (e.g., curvature/width error), stroke ordering plausibility, and shading smoothness beyond global PSNR/SSIM/LPIPS/FD.

- Feature Distance (FD) metric details are unclear (ResNet vs. Inception, layers used); standardize metrics (e.g., FID variants for paintings) and validate their correlation with human judgments.

- Loss design and hyperparameters lack ablations; perform sensitivity analysis for each loss term (perceptual, gradient magnitude/direction, segmentation, OT, area) and justify chosen weights.

- No robustness analysis to capture/scan artifacts (lighting, white balance, texture noise); evaluate preprocessing and color calibration impacts on reconstruction quality.

- Export and interoperability are not addressed; define how textured strokes are serialized to standard vector formats (SVG/PDF) and rendered consistently across tools (including rasterization strategies for textures).

- Real-medium validation is absent; print results or render on textured substrates, and compare to physical paintings to assess material realism (specular highlights for oil, bleeding for watercolor, paper tooth for ink).

- Failure modes are not documented; catalog typical errors (haloing at stamp boundaries, texture tiling, oversmoothing, segmentation leaks) and propose diagnostics/mitigations.

- Smudge kernel parameters (a, b, αc, αs) are fixed; explore learning these parameters per style/region or predicting them conditionally from local content to match artist-specific smudging behaviors.

- Stroke placement is guided by a simple L1 error map; investigate more informative guidance (edge/structure maps, saliency, semantic cues) and global planning to reduce redundant strokes while preserving detail.
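The stamp-spacing item in the list above can be checked numerically. The sketch below places stamps on a cubic Bézier curve in two ways, uniformly in the parameter t and uniformly in arc length, and compares the largest and smallest gaps between neighbours. The control points, the polyline approximation with 512 samples, and all function names are assumptions chosen for illustration, not the paper's implementation.

```python
import math


def cubic_bezier(ctrl, t):
    """Evaluate a cubic Bezier curve with four control points at t in [0, 1]."""
    (x0, y0), (x1, y1), (x2, y2), (x3, y3) = ctrl
    u = 1.0 - t
    b0, b1, b2, b3 = u ** 3, 3 * u * u * t, 3 * u * t * t, t ** 3
    return (b0 * x0 + b1 * x1 + b2 * x2 + b3 * x3,
            b0 * y0 + b1 * y1 + b2 * y2 + b3 * y3)


def stamps_uniform_t(ctrl, n):
    """Stamp centres at equal steps of the curve parameter t."""
    return [cubic_bezier(ctrl, i / (n - 1)) for i in range(n)]


def stamps_uniform_arc(ctrl, n, samples=512):
    """Stamp centres at equal steps of (approximate) arc length."""
    pts = [cubic_bezier(ctrl, i / samples) for i in range(samples + 1)]
    cum = [0.0]  # cumulative length of the dense polyline
    for a, b in zip(pts, pts[1:]):
        cum.append(cum[-1] + math.dist(a, b))
    out, j = [], 0
    for i in range(n):
        goal = cum[-1] * i / (n - 1)
        while j < samples - 1 and cum[j + 1] < goal:
            j += 1
        seg = cum[j + 1] - cum[j]
        w = 0.0 if seg == 0.0 else min(1.0, (goal - cum[j]) / seg)
        out.append((pts[j][0] + w * (pts[j + 1][0] - pts[j][0]),
                    pts[j][1] + w * (pts[j + 1][1] - pts[j][1])))
    return out


def spacing_ratio(points):
    """Largest gap divided by smallest gap between consecutive stamps."""
    gaps = [math.dist(a, b) for a, b in zip(points, points[1:])]
    return max(gaps) / min(gaps)


# Control points bunched near the start, so the curve speeds up towards the end.
ctrl = [(0.0, 0.0), (0.5, 0.0), (1.0, 0.0), (10.0, 0.0)]
print(spacing_ratio(stamps_uniform_t(ctrl, 9)))
print(spacing_ratio(stamps_uniform_arc(ctrl, 9)))
```

A ratio near 1 means even stamp density; a large ratio means stamps pile up in one part of the stroke and thin out in another.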

Practical Applications

Below are practical, real-world applications grounded in the paper’s differentiable brushstroke reconstruction framework (parallel differentiable paint renderer, geometry-conditioned StyleGAN textures, and differentiable smudge operator), organized by deployment horizon.

Immediate Applications

- Digital art software: vectorized painterly reconstruction from raster images

- Sector: Creative software, design

- What it enables: Turn images into layered, open-curve, stylized stroke sequences with smooth shading (SVG-like, but with smudge-aware strokes) for non-destructive editing.

- Potential tools/workflows: “PainterlyVectorizer” plugin for Photoshop/Procreate/Krita; Illustrator/Inkscape extension for open-Bézier brushstroke reconstruction.

- Assumptions/dependencies: SAM-style segmentation; pretrained StyleGAN texture model (trained on 128×128 patches); GPU acceleration for interactive speeds; licensing of training data.

- Layer-aware painting generation and editing

- Sector: Creative software, VFX/animation

- What it enables: Semantically decomposed, editable painting layers; object-wise stroke reconstruction; selective restyling, recoloring, and re-shading per layer.

- Potential tools/workflows: Layer-aware “stroke stacks” (geometry + style latent + smudge parameters); post-hoc layer rebalancing and edge-preserving refinements.

- Assumptions/dependencies: Reliable segmentation (SAM/Grounded-SAM); background inpainting when content is semi-transparent.

- Style transfer with structural preservation

- Sector: Creative software, marketing/design

- What it enables: Transfer brush textures, color palettes, and material appearance from a style reference while preserving geometry and semantics of the input.

- Potential tools/workflows: “LayeredStyleTransfer” with geometry fixed and perceptual/style losses redirected to a reference; batch processing for brand visuals.

- Assumptions/dependencies: Style image rights/consent; content/style loss tuning; robustness to domain shifts.

- Efficient brush engines for interactive apps and game tools

- Sector: Software (graphics), games

- What it enables: GPU-parallel stamp-based painting and differentiable smudging with smooth shading at lower latency; LOD control via coarse-to-fine strokes.

- Potential tools/workflows: Integration into Unreal/Unity brush systems; shader-based stroke compositors using nearest-stamp color assignment.

- Assumptions/dependencies: GPU availability; adaptation of the SDF/nearest-stamp model to engine constraints; UX tuning for feel vs. accuracy.

- Educational painting playback and curricula

- Sector: Education, edtech

- What it enables: Step-by-step, human-like paint–smudge loops from an image; practice tasks that isolate stroke geometry vs. smudging; “how it was painted” visualizations.

- Potential tools/workflows: Teacher dashboards showing stroke order, gradient alignment; student exercises on shading via smudge paths.

- Assumptions/dependencies: The reconstructed process is plausible, not ground truth; requires pedagogy-aligned lesson design.

- Automated time‑lapse generation of painting processes

- Sector: Social media, content creation, marketing

- What it enables: Create convincing progress videos from a single still artwork; reveal animations for campaigns and tutorials.

- Potential tools/workflows: “Birth-of-a-Painting” export to video/timelines; parameterized pacing with coarse-to-fine stroke reveal.

- Assumptions/dependencies: Coherence of reconstructed stroke order; user control over tempo and emphasis.

- Asset compression and re-targetable painterly vectors

- Sector: Content pipelines, asset libraries

- What it enables: Compact, resolution-independent, re-stylable representations (open curves + style latent + smudge); easy recoloring/retexturing downstream.

- Potential tools/workflows: Stroke-param archives; asset normalization to a common geometry with swappable style latents.

- Assumptions/dependencies: Tooling for import/export; consistent style-space calibration across projects.

- Previsualization and look development for NPR (non‑photorealistic rendering)

- Sector: VFX/animation

- What it enables: Rapid exploration of painterly looks on concept art, with controllable stroke geometry, texture, and smudging; consistent shading vs. patch-based methods.

- Potential tools/workflows: “NPR Look Dev” nodes in Houdini/Nuke; shot-level restyling via latent manipulation.

- Assumptions/dependencies: Pipeline integration; consistency across shots requires batch constraints and seed management.

- Robot painting path prototype in simulation

- Sector: Robotics (creative robotics)

- What it enables: Export open Bézier stroke paths and smudge trajectories as initial motion plans in a digital sandbox; test stroke counts and shading strategies virtually.

- Potential tools/workflows: “RobotBrush Planner” that maps reconstructed curves to robot waypoints; simulation in Blender/ROS.

- Assumptions/dependencies: No physical paint model; still requires calibration to brushes, paint viscosity, drying times.

- Museum and cultural heritage interpretation

- Sector: Heritage, education

- What it enables: Interactive exhibits that hypothesize “how it may have been painted,” layer-by-layer viewing and analysis (non-forensic, interpretive).

- Potential tools/workflows: Touchscreen kiosks with stroke replay; semantic layers toggling; contextual commentary.

- Assumptions/dependencies: Results are reconstructions, not historical ground truth; curatorial oversight; provenance/rights for source images.

Long-Term Applications

- Physical robot painting with pigment-aware smudging

- Sector: Robotics, manufacturing

- What it could enable: Closed-loop execution of paint–smudge loops with real brushes and paints; fewer strokes via controlled blending; material-aware shading.

- Potential tools/workflows: Vision-in-the-loop controllers; pigment transport models; schedule planning for drying and reloading.

- Assumptions/dependencies: Physics-calibrated models of paint/surface; sensing for wetness/thickness; robust sim-to-real transfer.

- Real-time, on-device painterly editors

- Sector: Mobile/consumer software

- What it could enable: Interactive stroke reconstruction, smudging, and restyling on tablets/phones at high resolution.

- Potential tools/workflows: Metal/Vulkan compute kernels; distilled or quantized texture generators; edge-aware caching.

- Assumptions/dependencies: Further optimization of GPU kernels; model compression; UX research for latency/quality trade-offs.

- Video- and sequence-level painterly stylization with temporal consistency

- Sector: VFX/animation, streaming

- What it could enable: Stable, stroke-consistent painterly videos; controllable temporal smudging; motion-aware stroke adjustments.

- Potential tools/workflows: Temporal constraints in losses; 3D camera-aware stroke reprojection; editorial controls for flicker vs. freshness.

- Assumptions/dependencies: New temporal models; memory-efficient stroke tracking; scene/depth estimation.

- Forensic analysis and art-historical hypothesis testing

- Sector: Academia, conservation

- What it could enable: Quantitative comparisons of plausible stroke orders; testing alternate construction scenarios; detecting improbable processes.

- Potential tools/workflows: Hypothesis search over stroke sequences; priors from known artist techniques; metrics for plausibility.

- Assumptions/dependencies: Requires domain priors; reconstructed process remains a hypothesis; needs validation against material analyses.

- Intelligent restoration and inpainting guidance

- Sector: Conservation, design

- What it could enable: Propose stroke and smudge plans to reconstruct missing regions with stylistically coherent shading and texture.

- Potential tools/workflows: Region-selective reconstruction with constraints; restorer-in-the-loop interactive planning.

- Assumptions/dependencies: Trustworthy segmentation of damaged areas; user controls for conservational ethics; provenance tracking.

- Cross-modality expansion to 3D/AR painting experiences

- Sector: AR/VR, creative tech

- What it could enable: Stroke and smudge primitives extended to 3D canvases/surfaces; geometry-conditioned textures in immersive painting.

- Potential tools/workflows: VR brush engines using open-curve primitives; 3D surface parameterization for stroke texturing.

- Assumptions/dependencies: Surface mapping and curvature-aware smudging; performance on XR hardware.

- Standardized painterly vector format with smudge semantics

- Sector: Software standards, tooling ecosystem

- What it could enable: An extension to SVG (or new spec) encoding open strokes with texture latents and smudge operators; interoperability across tools.

- Potential tools/workflows: “SVG-S” (SVG with Smudge) proposal; converters and validators; open-source reference renderer.

- Assumptions/dependencies: Community and vendor buy-in; IP around differentiable operators.

- Ethical and policy frameworks for style consent and provenance

- Sector: Policy, platforms, IP management

- What it could enable: Style licensing/consent for texture models; embedded provenance/watermarks for reconstructed processes; platform-level disclosures.

- Potential tools/workflows: Style registries with opt-in/opt-out; cryptographic signatures in vector files; platform UX for attribution.

- Assumptions/dependencies: Legal clarity on style rights; standardization with C2PA-like efforts; platform enforcement.

- Data generation for training painting agents and RL policies

- Sector: ML research

- What it could enable: Large-scale, high-quality stroke trajectories with shading for training agents that learn efficient paint–smudge policies.

- Potential tools/workflows: Synthetic datasets with parameterized difficulty; multi-objective rewards (fidelity, aesthetics, efficiency).

- Assumptions/dependencies: Scalable reconstruction on diverse corpora; task-aligned reward design; compute resources.

- Hybrid NPR pipelines in real-time engines

- Sector: Games, simulation

- What it could enable: Runtime painterly rendering combining raster NPR with learned smudge primitives for shading coherence; dynamic restyling.

- Potential tools/workflows: Hybrid shader graphs; LOD-aware stroke instancing; content-aware smudge passes.

- Assumptions/dependencies: Engine integration; performance budgets on consoles; tooling for artists to author constraints.

Note on feasibility and risks across applications:

- Compute: Current prototypes are reported on high-end GPUs (e.g., H100); practical deployment will need kernel optimizations, batching, or model distillation.

- Data and models: Texture synthesis depends on pretrained StyleGAN variants and curated style data; licensing/consent and domain gaps matter.

- Reconstruction vs. ground truth: The method yields plausible processes, not the actual artist’s sequence; use interpretively, not as forensic fact, unless validated.

- Integration: Quality of segmentation (SAM) and perceptual guidance (VGG) affects output; UI/UX and artist controls are essential for adoption.

Glossary

- Adam optimizer: A stochastic gradient-based optimization algorithm that adapts learning rates for each parameter using estimates of first and second moments. "we employ the Adam optimizer with a learning rate that linearly warms up from 0 to 0.01 over the first 5% of iterations, remains constant for the next 70%, and then follows a cosine decay schedule to 0 over the final 25%."

- alpha compositing: A method for combining overlapping colors using per-pixel opacity (alpha) to produce the final color. "combined through sequential alpha compositing:"

- arc length: The length measured along a curve, often used for parameterizing positions uniformly along a trajectory. "Reparameterizing the trajectory by cumulative arc length with total length and normalized positions , we obtain"

- Bézier curve: A parametric curve defined by control points, widely used to represent smooth stroke geometry. "models each stroke as a single cubic Bézier curve, blending colors through smudging."

- Bézier Splatting: A rendering technique combining Bézier curve primitives with point-based splatting for efficient vectorized image synthesis. "Bézier Splatting \cite{liu2025b} leverages Bézier curves in combination with Gaussian splatting to achieve efficient vectorized image rendering~\cite{liu2025b}."

- canvas blending coefficient: A parameter controlling how much of the brush’s previous color is deposited onto the canvas during smudging. "where is the canvas blending coefficient controlling how much of the previous brush state remains on the canvas"

- coarse-to-fine optimization: An iterative strategy that optimizes at low resolution or large regions first and progressively refines details at higher resolutions. "Coupled with a coarse-to-fine optimization strategy, our method jointly optimizes stroke geometry, color, and texture under geometric and semantic guidance."

- conditional StyleGAN generator: A generative model that produces images conditioned on auxiliary inputs (e.g., geometry), controlling style via latent codes. "We adopt a conditional StyleGAN generator~\cite{shugrina2022neural}, denoted as "

- differentiable paint renderer: A rendering module for paint strokes whose output is differentiable with respect to stroke parameters, enabling gradient-based optimization. "through a parallel differentiable paint renderer"

- differentiable rasterizer: A rasterization process that allows gradients to flow from pixels back to vector parameters, enabling optimization of vector graphics. "DiffVG~\cite{li2020differentiable} first introduced a differentiable rasterizer for vector graphics learning and generation."

- diffusion curves: Vector primitives that define color gradients via boundary curves, yielding smooth shading when diffused over the image. "diffusion curves \cite{orzan2008diffusion} and gradient meshes \cite{sun2007image} model gradient-based shading effects"

- diffusion models: Generative models that synthesize data by iteratively denoising from noise, widely used for image and video generation. "\cite{song2024processpainter} and \cite{chen2024inverse} explore diffusion models to predict time-lapse videos of artistic creation"

- diffusion transformers: Transformer-based variants of diffusion models that model temporal or structural dependencies in generative processes. "\cite{zhang2025generating} utilizes diffusion transformers to generate both past and future drawing processes."

- entropy-regularized optimal transport loss: A transport-based matching objective with entropy regularization that stabilizes and smooths the transport plan during optimization. "we adopt an entropy-regularized optimal transport loss \cite{zou2021stylized}:"

- Gaussian splatting: A rendering approach that approximates shapes by placing and blending Gaussian kernels for efficient, differentiable image synthesis. "in combination with Gaussian splatting"

- gradient meshes: Mesh-based vector primitives assigning colors at mesh vertices to interpolate smooth color gradients across surfaces. "diffusion curves \cite{orzan2008diffusion} and gradient meshes \cite{sun2007image} model gradient-based shading effects"

- Layer decomposition: The process of separating an image into semantically meaningful layers to enable structured editing and rendering. "Layer decomposition has been widely used in image editing~\cite{tan2016decomposing, yang2025generative} and vectorization tasks~\cite{ma2022towards}."

- Learned Perceptual Image Patch Similarity (LPIPS): A perceptual metric that measures similarity between images using deep network features rather than raw pixels. "Learned Perceptual Image Patch Similarity (LPIPS)~\cite{zhang2018unreasonable}"

- Peak Signal-to-Noise Ratio (PSNR): A quantitative metric measuring pixel-level reconstruction fidelity between images; higher is better. "Peak Signal-to-Noise Ratio (PSNR)~\cite{gonzalez2009digital}"

- perceptual loss: A loss computed on deep feature representations (e.g., VGG) to encourage perceptual similarity beyond pixel-wise differences. "we incorporate a perceptual loss \cite{shugrina2022neural}"

- ResNet-based feature distance (FD): A distance metric comparing images in a pretrained ResNet feature space to assess high-level perceptual similarity. "ResNet-based feature distance (FD)~\cite{he2016deep}"

- RMSprop: An adaptive gradient optimization algorithm that normalizes updates by a running average of squared gradients. "we use RMSprop with a learning rate of $0.003$."

- Segment Anything (SAM): A foundation model for general-purpose image segmentation that can produce high-quality masks with minimal prompts. "Segment Anything (SAM) \cite{kirillov2023segment}"

- self-retention coefficient: A parameter controlling how much pigment the brush retains from its previous state during smudging. " is the self-retention coefficient controlling how much pigment the brush retains from its own past state during the smudging process."

- signed distance function (SDF): A function that gives the distance from a point to the nearest boundary, with sign indicating inside/outside, used for implicit stroke regions. "we also introduce a signed distance function (SDF) representation"

- smudge renderer: A renderer that simulates color blending by transporting pigment along a stroke trajectory over the canvas. "a differentiable smudge renderer to simulate color blending"

- stamp-based painting algorithm: A brush simulation approach that composes a stroke from a sequence of overlapping “stamps” placed along a path. "Following standard stamp-based painting algorithms~\cite{ciao2024ciallo}"

- Structural Similarity Index (SSIM): A metric that evaluates image similarity based on luminance, contrast, and structure rather than raw errors. "Structural Similarity Index (SSIM)~\cite{wang2004image}"

- StyleGAN-based generator: A StyleGAN architecture used to synthesize textures or appearances, often controlled via latent codes. "a StyleGAN-based generator to optimize latent textures conditioned on stroke geometry"

- style latent code: A vector in the generator’s latent space that controls visual attributes like texture and material appearance. " is a style latent code controlling texture and appearance."

- temporal attention: A mechanism in sequence models focusing on relevant time steps to improve temporal coherence in generated videos. "ProcessPainter~\cite{song2024processpainter} integrates image diffusion models with temporal attention to generate painting videos all at once."

- VGG network: A deep convolutional neural network commonly used to extract features for perceptual similarity metrics. "the feature map extracted from the -th layer of a pretrained VGG network \cite{johnson2016perceptual}"

- vector graphics (VG): A representation of images using parametric geometric primitives (e.g., curves), enabling resolution-independent rendering. "Vector graphics (VG) represents images parametrically as compositions of geometric primitives."
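The learning-rate schedule quoted in the Adam optimizer entry above (linear warm-up to 0.01 over the first 5% of iterations, constant for the next 70%, cosine decay to 0 over the final 25%) can be written as a small function. This is a minimal sketch: the function name, the argument names, and the way steps are counted are assumptions, and only the percentages and the peak value come from the quoted text.

```python
import math


def learning_rate(step, total_steps, peak=0.01, warmup=0.05, hold=0.70):
    """Warm-up, hold, then cosine decay, as a function of training progress."""
    frac = step / total_steps
    if frac < warmup:
        # Linear warm-up from 0 to the peak value.
        return peak * frac / warmup
    if frac < warmup + hold:
        # Constant plateau at the peak value.
        return peak
    # Cosine decay from the peak value down to 0 over the remaining fraction.
    decay = min(1.0, (frac - warmup - hold) / (1.0 - warmup - hold))
    return 0.5 * peak * (1.0 + math.cos(math.pi * decay))


for step in (0, 25, 50, 500, 750, 875, 1000):
    print(step, round(learning_rate(step, total_steps=1000), 5))
```

In a training loop, the returned value would be assigned to the optimizer's learning rate before each update step.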
