Reconstructing 3D Scenes in Native High Dynamic Range (2511.12895v1)

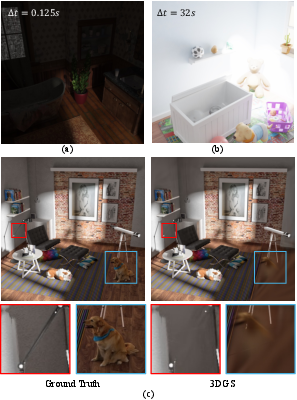

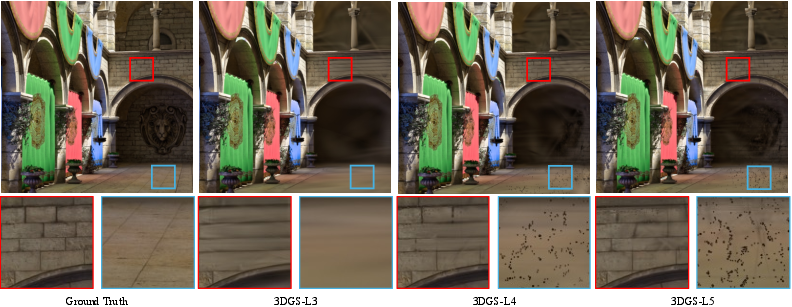

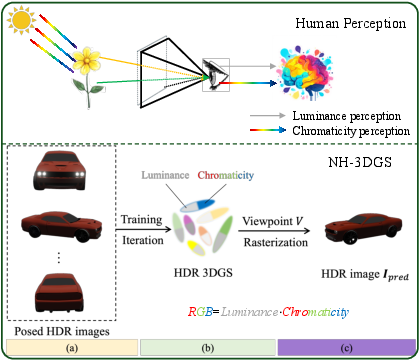

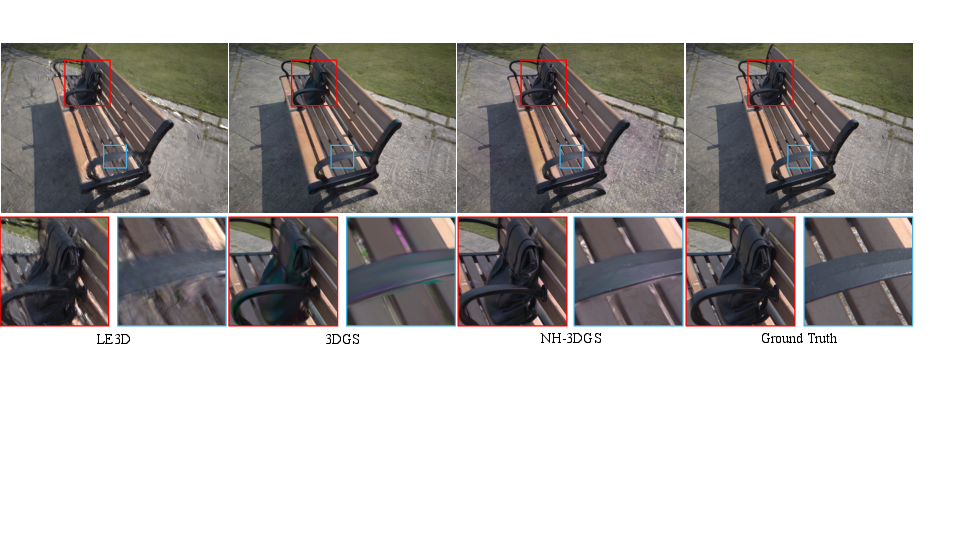

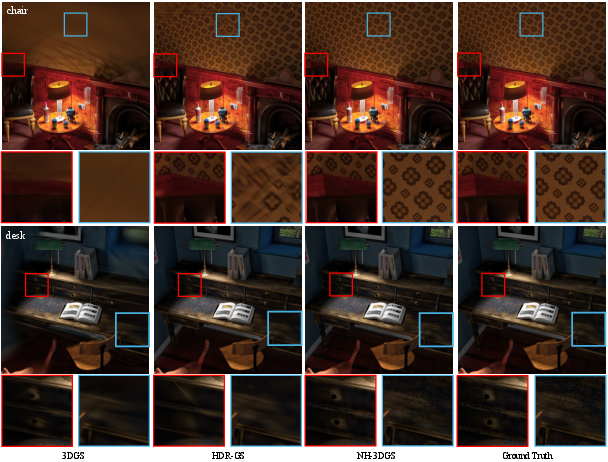

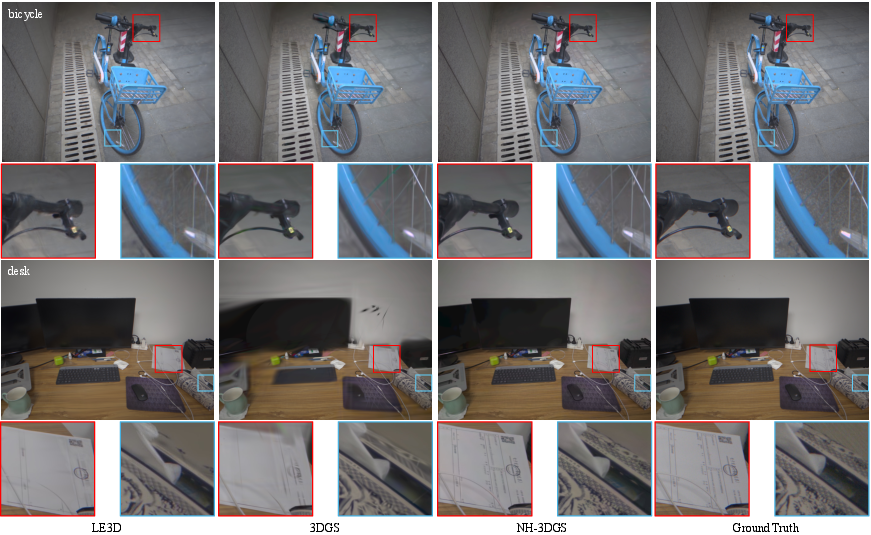

Abstract: High Dynamic Range (HDR) imaging is essential for professional digital media creation, e.g., filmmaking, virtual production, and photorealistic rendering. However, 3D scene reconstruction has primarily focused on Low Dynamic Range (LDR) data, limiting its applicability to professional workflows. Existing approaches that reconstruct HDR scenes from LDR observations rely on multi-exposure fusion or inverse tone-mapping, which increase capture complexity and depend on synthetic supervision. With the recent emergence of cameras that directly capture native HDR data in a single exposure, we present the first method for 3D scene reconstruction that directly models native HDR observations. We propose Native High Dynamic Range 3D Gaussian Splatting (NH-3DGS), which preserves the full dynamic range throughout the reconstruction pipeline. Our key technical contribution is a novel luminance-chromaticity decomposition of the color representation that enables direct optimization from native HDR camera data. We demonstrate on both synthetic and real multi-view HDR datasets that NH-3DGS significantly outperforms existing methods in reconstruction quality and dynamic range preservation, enabling professional-grade 3D reconstruction directly from native HDR captures. Code and datasets will be made available.

Explain it Like I'm 14

Overview

This paper is about building 3D models of real scenes that look good in both very bright and very dark areas. Most current 3D reconstruction methods use regular images with limited brightness range (called Low Dynamic Range, or LDR), which often lose detail in shadows or highlights. The authors introduce a new method, called NH-3DGS, that works directly with High Dynamic Range (HDR) images, including RAW photos from modern cameras, to preserve more detail and accurate brightness across a scene.

Objectives

Here are the main goals of the paper, stated simply:

- Make 3D scene reconstruction work directly on HDR images captured in a single shot, instead of relying on multiple exposures.

- Fix the common problems in dark areas (blurriness) and bright areas (washed-out colors) that happen when using LDR-based methods.

- Keep the method fast enough for practical use (like real-time rendering), while improving quality.

- Show that separating “brightness” from “color” during reconstruction helps models learn more reliably from HDR data.

How the method works (in everyday language)

To understand the method, it helps to know a few ideas:

- HDR vs. LDR: LDR images can’t show very bright and very dark parts at the same time, so bright areas may look blown out, and dark areas may look muddy. HDR images can keep details in both bright and dark regions.

- 3D Gaussian Splatting (3DGS): Think of a 3D scene as built from lots of tiny, fuzzy dots (Gaussians). When you look from a certain angle, these dots “splat” their light onto the screen to create the image. This is fast and works well for making new views of a scene.

- Spherical Harmonics (SH): This is a math tool used to describe how light/color changes when you look at an object from different directions—like using simple wave patterns around a sphere.

- Luminance vs. Chromaticity: Luminance is how bright something is. Chromaticity is the “color” part (like red vs. blue) without brightness. Separating these lets you control brightness and color independently.
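To make the spherical-harmonics idea concrete, here is a minimal Python sketch that evaluates a degree-1 SH expansion (4 coefficients per channel) for a given viewing direction. It assumes the real-SH constants and sign convention of the public 3DGS reference code; the function names are hypothetical, and NH-3DGS itself uses higher orders (the paper mentions degree 3, which gives 16 coefficients).

```python
import math

# Real spherical-harmonic normalization constants (degrees 0 and 1).
SH_C0 = 0.28209479177387814  # 1 / (2 * sqrt(pi))
SH_C1 = 0.4886025119029199   # sqrt(3) / (2 * sqrt(pi))


def num_sh_coeffs(degree: int) -> int:
    """Number of SH coefficients per channel up to and including `degree`."""
    return (degree + 1) ** 2


def eval_sh_deg1(coeffs, direction):
    """Evaluate a degree-1 SH expansion for one channel.

    coeffs: four numbers ordered as in the 3DGS reference code (assumed).
    direction: 3D viewing direction; it is normalized here.
    """
    x, y, z = direction
    norm = math.sqrt(x * x + y * y + z * z)
    x, y, z = x / norm, y / norm, z / norm
    return (SH_C0 * coeffs[0]
            - SH_C1 * y * coeffs[1]
            + SH_C1 * z * coeffs[2]
            - SH_C1 * x * coeffs[3])


# Same coefficients, opposite viewing directions -> different values.
coeffs = [1.0, 0.0, 0.5, 0.0]
front = eval_sh_deg1(coeffs, (0.0, 0.0, 1.0))
back = eval_sh_deg1(coeffs, (0.0, 0.0, -1.0))
```

Because the result depends on direction, the same Gaussian can look different from the front and the back; here `front` comes out larger than `back`.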

What the authors changed:

- In regular 3DGS, brightness and color are tangled together in the same representation. That works fine for LDR but breaks for HDR because brightness can change massively depending on where you look.

- NH-3DGS separates brightness and color:

  - It gives each tiny 3D blob (Gaussian) its own brightness value (luminance) that can vary with view direction.

  - It uses spherical harmonics only to represent the color part (chromaticity), not brightness.

- This separation means the model doesn’t get confused by extreme brightness differences. Dark areas get enough attention during training, and bright areas don’t cause weird color shifts.
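A small Python sketch of the brightness/color split, under stated assumptions: luminance is taken as R + G + B and chromaticity as each channel's share of that sum, and positivity is enforced with `exp` for luminance and a softmax for chromaticity. These choices are ours for illustration (the paper's exact normalization and activations are not given here), and the function names are hypothetical.

```python
import math


def decompose(rgb, eps=1e-12):
    """Split a linear RGB color into (luminance, chromaticity).

    Assumption: luminance = R + G + B and chromaticity = per-channel share,
    so chromaticity components are non-negative and sum to 1.
    """
    lum = sum(rgb)
    chroma = tuple(c / (lum + eps) for c in rgb)
    return lum, chroma


def compose(log_lum, chroma_logits):
    """Rebuild a linear HDR color from unconstrained parameters.

    Hypothetical parameterization: exp() keeps luminance positive, and a
    softmax keeps chromaticity on the simplex regardless of brightness.
    In an NH-3DGS-style model the logits would come from the SH evaluation.
    """
    lum = math.exp(log_lum)
    m = max(chroma_logits)  # subtract the max for numerical stability
    weights = [math.exp(v - m) for v in chroma_logits]
    total = sum(weights)
    return tuple(lum * w / total for w in weights)
```

The benefit shows up with two pixels of the same hue: `decompose((2.0, 1.0, 1.0))` and `decompose((2000.0, 1000.0, 1000.0))` give identical chromaticity, so only the single luminance parameter has to move (by ln 1000 ≈ 6.9 in log space), while the color coefficients stay in a small, well-behaved range.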

Two extra details that make it work better:

- Mu-law compression: During training, the method applies a logarithm-like “compression” (the μ-law) to both the rendered and the real images. It squashes very bright values far more than dark ones (like turning down the volume mostly on the loudest parts), so the model doesn’t ignore dark areas. This keeps both bright and dark details meaningful.

- RAW Bayer training: Many cameras store HDR data in RAW format using a checkerboard of color filters (the Bayer pattern). Instead of turning RAW into a regular RGB image first (which can add errors), the method trains directly on the RAW pattern. This keeps the original sensor information clean and accurate.
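The μ-law compression can be sketched in a few lines of Python. This assumes the form commonly used in HDR radiance-field work, T(H) = log(1 + μH) / log(1 + μ), with linear values already normalized to [0, 1] and μ = 5000 (the value reported for NH-3DGS); the loss helper is a hypothetical L1 example, not the paper's full objective.

```python
import math

MU = 5000.0  # value reported for NH-3DGS; assumed fixed across scenes


def mu_law(h, mu=MU):
    """Compress a non-negative linear HDR value: log(1 + mu*h) / log(1 + mu)."""
    return math.log1p(mu * h) / math.log1p(mu)


def mu_law_l1(pred, target, mu=MU):
    """Mean L1 loss computed after mu-law compression of both images.

    pred, target: equal-length sequences of linear values in [0, 1] (assumed).
    """
    diffs = [abs(mu_law(p, mu) - mu_law(t, mu)) for p, t in zip(pred, target)]
    return sum(diffs) / len(diffs)
```

With these numbers, an error of 0.001 in a dark pixel (0.001 vs 0.002) costs several hundred times more after compression than the same absolute error in a bright pixel (0.900 vs 0.901), which is the rebalancing toward shadows described above.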

Main findings and why they matter

The authors tested their method on both synthetic HDR scenes and real RAW captures. They compared NH-3DGS to popular methods (NeRF, 3DGS) and HDR-specific ones.

Key results:

- Better quality: NH-3DGS produced sharper details in shadows, avoided color mistakes in bright spots, and kept the full brightness range more accurately than other methods.

- Faster rendering: It stayed fast—near real-time—unlike some HDR methods that can be thousands of times slower.

- Works directly with single-exposure HDR/RAW: It doesn’t need the hassle of capturing multiple exposures and trying to fuse them, which makes it practical for filmmaking and virtual production.

- Robust colors and brightness: By separating brightness (luminance) from color (chromaticity), the model learns more reliably and avoids the common HDR pitfalls (like green/magenta tints in highlights).

These results mean you can capture a scene with an HDR-capable camera and directly build a high-quality 3D model without complex extra steps.

Implications and impact

This research can make 3D content creation more professional and practical:

- Filmmakers, game developers, and AR/VR creators can build 3D scenes that look correct in both bright sunshine and dim interiors.

- No need for complicated exposure bracketing (taking multiple photos with different brightness settings), which saves time and storage.

- More accurate color and brightness means more realistic renders and fewer artifacts, improving the quality of visual effects and virtual sets.

- Because it’s efficient and works with real HDR/RAW data, it’s suitable for modern production pipelines and fast iteration.

In short, NH-3DGS brings 3D reconstruction closer to how cameras—and our eyes—actually see the world, making it a strong step toward professional-grade, high-fidelity 3D scene modeling.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a concise list of concrete gaps and unresolved questions that, if addressed, could guide future research:

- Scope of data: Evaluation relies on a small synthetic set (8 scenes) and a small real dataset (RAW-4S, 4 scenes); generalization to diverse scene types (large outdoor scenes, low-texture areas, highly glossy/transparent materials, skin, mixed indoor lighting) remains untested.

- Static-scene assumption: Method is validated only on static scenes; handling dynamic content (moving objects, time-varying illumination), rolling shutter, and motion blur is not explored.

- Pose dependence: Assumes accurate precomputed camera poses; robustness to pose noise, joint pose refinement in HDR/RAW space, and COLMAP-free training (under harsh HDR conditions) are not studied.

- Exposure/white balance variability: No per-image exposure, gain, or white-balance calibration parameters are optimized; robustness to view-wise photometric inconsistencies and multi-camera heterogeneity (different CFAs, black levels, color matrices) is unclear.

- Absolute radiometric calibration: Although the method claims to “preserve absolute luminance,” no calibration to physical units (nits) is provided; how to map to true scene radiance across cameras/sessions is unspecified.

- Sensor effects and noise: No explicit modeling of RAW sensor noise (Poisson-Gaussian, read noise, dark current), pixel response nonuniformity, vignetting, or hot pixels; impact on shadow reconstruction and color fidelity is unquantified.

- Saturation and clipping: How the model behaves in saturated highlights or near-saturation regions in native HDR/RAW is not analyzed; no strategy for saturation-aware training is discussed.

- Luminance-chromaticity factorization details: The paper’s equation defines Lum as view-independent, while the text suggests “conditioned on viewpoint”; whether Lum is directional and how to model view-dependent intensity (specular lobes) is unresolved.

- View-dependent reflectance: With a scalar luminance and low-order SHs for chromaticity, the capacity to capture high-frequency, view-dependent specularities, anisotropic BRDFs, or glints is limited; alternative bases or BRDF-aware components are not explored.

- Chromaticity constraints: The claim that chromaticity lies in [0,1] is not matched by the unbounded SH output; enforcement of valid chromaticity (non-negativity, unit-sum, gamut constraints) is unspecified.

- Positivity of luminance: Lum is stated as R+, but the mechanism ensuring positivity (e.g., softplus/exp) is not detailed for RAW training; potential negative or unstable values are a risk.

- Sensitivity to SH order and parameterization: Only low-order SHs are used for chromaticity; ablations on SH order, alternative compact bases (e.g., spherical Gaussians), or directional factorization for luminance are missing.

- μ-law choice and training stability: A single μ=5000 is used across scenes; sensitivity to μ, scene-adaptive compression, and impacts on gradient scaling and convergence are not studied.

- Loss design in RAW/Bayer space: SSIM is adapted heuristically via subsampling; the suitability of SSIM for RAW HDR fidelity and alternatives (HDR-VDP-3, PU-encoded metrics, RAW-domain perceptual losses) remain open.

- Evaluation protocol: Tone-mapped comparisons via Photomatix Pro are used for visualization; standardized HDR perceptual metrics and ablations across tone mappers are absent, risking evaluation bias.

- Demosaicing dependency at evaluation: RAW-PSNR is computed after bilinear demosaicing; sensitivity to the chosen demosaicing method and end-to-end ISP consistency are not examined.

- Geometry–radiometry interplay: Effects of the new factorization on geometric reconstruction quality (e.g., how decoupling impacts density estimation, surface sharpness) are not analyzed.

- Memory/speed vs. quality trade-offs: Gains partly come from “more Gaussians” in dark regions; the scaling behavior (VRAM, fps) on larger scenes and the Pareto frontier of speed–quality–memory is not characterized.

- Multi-camera acquisition: RAW-4S reportedly uses multiple cameras, but per-camera calibration (CFA pattern, color matrix, black level, gains) and cross-device consistency modeling are not presented.

- Generalization to display-referred HDR: Applicability to HDR video formats (PQ/HLG), camera-provided HDR pipelines, and mixed pipelines (RAW + HDR10) is not studied.

- Lens and imaging effects: Depth-of-field blur, chromatic aberrations, flare, and ghosting in HDR optics are not modeled; how these affect NH-3DGS estimates is unknown.

- Robustness to challenging lighting: Extreme sun-in-frame, high contrast backlighting, colored illumination, and spectral metamerism are not systematically stress-tested.

- Interoperability with inverse rendering: The factorization does not separate illumination from material reflectance; whether NH-3DGS can support relighting, reflectance estimation, or integration with PBR BRDFs is an open question.

- Fairness/coverage of baselines: State-of-the-art RAW/HDR GS methods (e.g., HDRSplat) are not included in tables; reproducibility and fairness of cross-domain comparisons (LDR→HDR vs native HDR) need broader coverage.

- Failure modes: No explicit analysis of where NH-3DGS fails (e.g., ultra-dark noise amplification, extreme speculars, thin structures), leaving users without guidance on limitations.

- Reproducibility: Dataset and code are “to be released”; until available, external validation and benchmarking under varied conditions remain open.

- Practical capture guidance: Minimal capture protocols for native HDR (exposure/gain settings, black-level handling, per-view metadata requirements) are not provided; portability to consumer HDR/RAW devices is uncertain.
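Two of the gaps above concern how losses are computed on RAW data (a subsampled Bayer space for the photometric loss and for SSIM). As a rough illustration only, here is one common way to do that in Python: sample the rendered RGB image at the sensor's BGGR sites, compare it against the RAW mosaic with a per-site L1 loss, and split a mosaic into four half-resolution planes on which a metric such as SSIM could then be run. The BGGR layout matches the paper's example, but the helpers are hypothetical and not the authors' implementation.

```python
# BGGR 2x2 tile: (row parity, col parity) -> channel index (0=R, 1=G, 2=B).
BGGR = {(0, 0): 2, (0, 1): 1, (1, 0): 1, (1, 1): 0}


def mosaic_bggr(rgb_image):
    """Sample a rendered H x W x 3 linear RGB image at BGGR sensor sites."""
    return [[px[BGGR[(i % 2, j % 2)]] for j, px in enumerate(row)]
            for i, row in enumerate(rgb_image)]


def split_bggr(mosaic):
    """Split an H x W mosaic (H, W even) into four half-resolution planes."""
    return {
        "B": [row[0::2] for row in mosaic[0::2]],
        "G1": [row[1::2] for row in mosaic[0::2]],
        "G2": [row[0::2] for row in mosaic[1::2]],
        "R": [row[1::2] for row in mosaic[1::2]],
    }


def bayer_l1(rendered_rgb, raw_mosaic):
    """Mean L1 error between a rendered image and a RAW mosaic, per sensor site."""
    pred = mosaic_bggr(rendered_rgb)
    errs = [abs(p - t)
            for prow, trow in zip(pred, raw_mosaic)
            for p, t in zip(prow, trow)]
    return sum(errs) / len(errs)
```

Comparing only the channel each pixel actually measured avoids feeding demosaicing errors into the training signal, which is the motivation given for training in Bayer space.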

Practical Applications

Immediate Applications

Below are actionable use cases that can be deployed with current hardware and workflows, leveraging NH-3DGS’s ability to reconstruct native HDR radiance fields directly from single-exposure HDR/RAW captures and render in real time.

- Film/TV/Virtual Production: HDR set digitization and relighting

- Sector: media/entertainment, VFX, virtual production

- What: Capture sets with native HDR/RAW cameras and reconstruct HDR-accurate 3D scenes for shot planning, relighting, set extension, and VFX integration. Reduce dependence on multi-exposure bracketing and avoid tone-mapping artifacts.

- Tools/workflows: Native HDR/RAW capture → pose estimation (e.g., COLMAP) → NH-3DGS training in Bayer space → export to DCC tools (Blender, Unreal/Unity) → on-stage LED volume calibration using HDR-accurate radiance fields

- Assumptions/dependencies: Access to native HDR/RAW cameras; static scenes or limited motion; accurate camera poses; GPU with ~7 GB VRAM for training; tone-mapping for LDR output when needed

- AR/VR/Gaming: HDR environmental scanning for realistic lighting

- Sector: software, AR/VR, gaming

- What: Rapidly scan real environments (interiors/night scenes/sunlit exteriors) to create HDR-consistent assets with correct highlight/shadow behavior and view-dependent color.

- Tools/workflows: NH-3DGS training pipeline → real-time rendering integration in game engines → HDR environment maps generated from the reconstructed radiance field

- Assumptions/dependencies: Engine-side HDR pipeline (e.g., HDR10/ACES) for display; consistent camera intrinsics/extrinsics; static or quasi-static capture

- Architecture/Real Estate: HDR-accurate walkthroughs and lighting evaluation

- Sector: construction, real estate, digital twins

- What: Produce tours that preserve details in bright windows and dark interiors; evaluate glare/sunlight penetration; improve visualization fidelity for material selection.

- Tools/workflows: Single-exposure HDR capture → NH-3DGS reconstruction → HDR walkthrough rendering → export to customer-facing LDR via tone-mapping

- Assumptions/dependencies: Fixed scene; native HDR sensor availability; tone-mapping consistency for web/mobile delivery

- Cultural Heritage/Photogrammetry: High-fidelity documentation under extreme lighting

- Sector: heritage preservation, surveying

- What: Digitize artifacts/sites where glossy/reflective materials and deep shadows challenge LDR methods; maintain radiometric fidelity without multi-exposure stacks.

- Tools/workflows: NH-3DGS training directly on RAW Bayer → HDR render archive → long-term storage of physical radiance fields for re-lighting studies

- Assumptions/dependencies: Controlled multi-view capture; sensor calibration; metadata preservation for reproducibility

- E-commerce/Product Visualization: Accurate portrayal of reflective/metallic products

- Sector: retail, marketing

- What: Reconstruct 3D product scenes that retain specular highlights and subtle color under different viewpoints; reduce photography setup complexity.

- Tools/workflows: HDR capture → NH-3DGS rendering pipeline → interactive product viewers (web/AR) with HDR-to-LDR tone-mapping

- Assumptions/dependencies: Lighting control or HDR-capable sensors; performance constraints on client devices

- Robotics/Autonomous Systems: HDR maps for high-contrast environments

- Sector: robotics, autonomous driving

- What: Build photometrically consistent 3D maps of roads and facilities where signal lights, headlamps, or sunlight cause extreme contrast; improve localization/photometric alignment.

- Tools/workflows: HDR camera rigs → NH-3DGS reconstruction → downstream SLAM modules using HDR textures for better feature detection in shadows/highlights

- Assumptions/dependencies: Primarily static environments; current method optimized for static scenes; synchronization and pose accuracy

- Academic Benchmarking and Method Development: HDR datasets and baselines

- Sector: academia

- What: Use RAW-4S and the NH-3DGS codebase to benchmark HDR reconstruction, develop HDR-specific metrics (beyond PSNR/LPIPS), and study luminance–chromaticity disentanglement.

- Tools/workflows: NH-3DGS training scripts → Bayer-native loss functions → controlled experiments on HDR perception and optimization

- Assumptions/dependencies: Dataset/code availability; reproducible capture protocols; standardized evaluation

- Photography/Post-production: Reduced capture complexity for HDR composites

- Sector: prosumer/photography

- What: Replace multi-exposure bracketed captures with single-exposure HDR scans for 3D reconstructions used in post; mitigate motion/fusion artifacts.

- Tools/workflows: Single-exposure HDR capture → NH-3DGS → tone-map for deliverables; integrate with Photomatix or ACES as needed

- Assumptions/dependencies: Availability of compatible cameras; pipeline familiarity; static subjects

Long-Term Applications

Below are applications that will benefit from further research, scaling, or engineering to handle dynamics, mobile constraints, standardization, and broader deployment.

- Real-time HDR SLAM and dynamic scene reconstruction

- Sector: robotics, AR

- What: Extend NH-3DGS to handle moving objects and dynamic illumination for on-device HDR mapping and localization in challenging lighting.

- Potential products: HDR-aware SLAM SDK; embedded module for drones/AR glasses

- Dependencies: Algorithmic support for dynamics; sensor synchronization; low-power GPU acceleration

- In-camera HDR 3D capture for consumer devices

- Sector: mobile, consumer electronics

- What: Integrate NH-3DGS-like decomposition and Bayer-native optimization into phone firmware or camera apps for one-tap HDR 3D scans.

- Potential products: Smartphone HDR 3D scanner app; OEM camera pipeline feature

- Dependencies: Efficient on-device inference/training; hardware ISP cooperation; storage and privacy policies

- HDR Digital Twins at urban scale

- Sector: smart cities, infrastructure, energy

- What: Build city-scale HDR radiance fields to support traffic analysis, lighting audits, safety planning, and accurate night-time visibility simulations.

- Potential products: Cloud HDR twin platform; municipal HDR mapping services

- Dependencies: Scalable multi-view capture; robust pose estimation at scale; storage/compute budgets; data governance

- Physically-based inverse rendering and material estimation

- Sector: graphics, manufacturing, design

- What: Use luminance–chromaticity decomposition as a stepping stone to separate reflectance, illumination, and geometry for robust material property recovery (BRDFs).

- Potential products: Material scanning booths; CAD-integrated reflectance estimators

- Dependencies: Integration with physics-based renderers; additional priors/labels; controlled lighting

- Medical and surgical environment modeling under extreme illumination

- Sector: healthcare (visualization/training)

- What: HDR 3D reconstructions of operating rooms and training simulators where specular tools and bright surgical lights challenge LDR methods.

- Potential products: Surgical sim environments; training modules with accurate lighting

- Dependencies: Compliance with medical data standards; capturing protocols; non-static participants

- Standardization and policy for HDR scene capture and archival

- Sector: policy/standards, archives

- What: Establish guidelines for single-exposure HDR multi-view capture, storage formats (RAW/Bayer), and evaluation metrics tailored to HDR radiometry.

- Potential outputs: HDR capture standards; archival best practices; public benchmarks

- Dependencies: Cross-industry consensus; involvement of standards bodies; long-term data maintenance

- Energy-efficient building simulation and daylighting analysis

- Sector: energy, sustainability

- What: Feed HDR-accurate indoor/outdoor reconstructions into simulation tools for glare, daylighting, and lighting control strategies.

- Potential products: BIM plugins powered by NH-3DGS; daylighting compliance tools

- Dependencies: Interoperability with BIM/energy models; validation studies; integration with PBR frameworks

- Telepresence and immersive communication with true-to-life lighting

- Sector: communications, enterprise

- What: Create HDR-accurate virtual meeting spaces and remote site inspections where lighting fidelity affects perception and decision-making.

- Potential products: HDR telepresence platforms; enterprise remote audit tools

- Dependencies: Bandwidth for HDR data; robust tone-mapping across devices; multi-user real-time rendering

- HDR-aware content moderation and privacy tooling

- Sector: policy, platform governance

- What: With increased detail from HDR reconstructions, develop tools to detect sensitive content and enforce privacy (faces, license plates) under high contrast.

- Potential products: HDR-aware redaction engines; compliance dashboards

- Dependencies: Reliable detection under extreme luminance; platform adoption; regulatory frameworks

Cross-cutting assumptions and dependencies

- Hardware availability: Native single-exposure HDR sensors or RAW-capable cameras with sufficient bit depth; multi-view rigs; accurate pose estimation (e.g., COLMAP).

- Scene constraints: Current NH-3DGS primarily addresses static scenes; dynamic scenes require research extensions.

- Compute budgets: Training typically on a single high-end GPU (~7 GB VRAM); inference speeds are high but may slow with scene complexity.

- Color management: HDR rendering and display require consistent tone-mapping (ACES/HDR10) for LDR delivery; radiometric calibration benefits quality.

- Data governance: HDR reconstructions can reveal more scene detail; privacy/security policies must adapt, especially for public/enterprise deployments.

- Ecosystem integration: Engine/DCC plugin support, standardized file formats, and workflow training will affect adoption.
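For LDR delivery, some tone mapper is always needed. The paper's visualizations reportedly use Photomatix Pro; the sketch below swaps in a much simpler, well-known global operator (Reinhard, x / (1 + x)) followed by the standard sRGB transfer function, purely to illustrate the HDR-to-8-bit step. The `exposure` parameter and the function names are assumptions.

```python
def reinhard(h, exposure=1.0):
    """Global Reinhard operator: maps linear [0, inf) into [0, 1)."""
    x = exposure * h
    return x / (1.0 + x)


def srgb_encode(v):
    """sRGB transfer function (IEC 61966-2-1) for a linear value in [0, 1]."""
    if v <= 0.0031308:
        return 12.92 * v
    return 1.055 * v ** (1 / 2.4) - 0.055


def to_ldr8(rgb, exposure=1.0):
    """Tone-map one linear HDR RGB pixel to 8-bit display values."""
    return tuple(round(255 * srgb_encode(reinhard(c, exposure))) for c in rgb)
```

Applying Reinhard per channel, as here, tends to desaturate bright colors; color-managed pipelines such as ACES use more careful operators, which is part of why consistent tone-mapping matters for delivery.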

Glossary

- 3D Gaussian Splatting (3DGS): An explicit, point-based scene representation where 3D Gaussians with learned attributes are rasterized for fast radiance field rendering and novel view synthesis. "Our analysis reveals that applying 3D Gaussian Splatting (3DGS) \cite{3dgs}, a state-of-the-art framework for NVS, to HDR inputs leads to catastrophic failure"

- Azimuthal order: The index m in spherical harmonics specifying angular frequency and phase around the polar axis for a given degree l. "the index m denotes the azimuthal order of the spherical harmonic basis function"

- Bayer pattern: A color filter array layout on image sensors that samples red, green, and blue at different pixel positions (e.g., BGGR). "in the Bayer pattern (e.g., BGGR)"

- Bilinear demosaicing: A simple interpolation method to reconstruct full RGB images from sparsely sampled Bayer data. "after bilinear demosaicing"

- Chromatic aberrations: Color fringing or tint artifacts caused by misaligned color channels or optics, especially visible in highlights. "exhibiting spatial blurring in low-illumination regions and chromatic aberrations in neutral highlights"

- Chromaticity: The color component independent of luminance, representing relative RGB proportions. "a chromaticity function represented by SHs:"

- Demosaicing: The process of converting Bayer-pattern sensor data into full-resolution RGB images by interpolating missing color samples. "rather than demosaicing to RGB space"

- Differentiable volume rendering: A rendering technique where gradients can be backpropagated through the rendering process, enabling learning of volumetric scene properties. "via differentiable volume rendering"

- Exposure bracketing: Capturing multiple images at different shutter speeds to cover a wide dynamic range for fusion into HDR. "precise exposure bracketing"

- High Dynamic Range (HDR): Imaging that preserves a wide luminance range, maintaining details in both deep shadows and bright highlights. "High Dynamic Range (HDR) imaging is essential for professional digital media creation"

- Low Dynamic Range (LDR): Imaging with limited luminance range and radiometric precision, typically normalized to [0, 1] in sRGB. "Low Dynamic Range (LDR) imagery"

- LPIPS: A learned perceptual image patch similarity metric used to quantify visual similarity beyond pixel-wise errors. "supplemented by LPIPS as a perceptual similarity measure."

- Luminance: The intensity or brightness component of color that encodes radiance magnitude separate from chromatic information. "a scalar luminance term "

- Luminance–chromaticity decomposition: A modeling strategy that separates intensity (luminance) from color ratios (chromaticity) to stabilize HDR optimization. "luminance--chromaticity decomposition"

- Mu-law (μ-law): A logarithmic compression function applied to HDR images to preserve luminance scale while boosting gradients in dark regions. "we adopt a μ-law function \citep{hdr-gs, hdrnerf} to the predicted HDR images and the ground truth images"

- Neural Radiance Fields (NeRF): An implicit neural representation mapping 3D coordinates and viewing directions to color and density for view synthesis. "NeRF \citep{nerf} pioneered this paradigm by using neural networks to map 3D coordinates and viewing directions to volume density and color via differentiable volume rendering."

- Novel View Synthesis (NVS): The task of generating images of a scene from unseen viewpoints using multi-view observations and learned scene representations. "Novel View Synthesis (NVS) has achieved remarkable progress"

- Photometric loss: An error term measuring differences between predicted and ground-truth image intensities, often used for training reconstruction models. "compute photometric loss in this subsampled color space."

- PSNR: Peak Signal-to-Noise Ratio; a distortion metric comparing image fidelity relative to ground truth, higher is better. "We adopt PSNR and SSIM as primary metrics"

- Radiance field: A function that describes emitted light intensity and color throughout 3D space and viewing directions. "radiance fields heavily biased toward bright regions"

- Radiometric consistency: Maintaining physically accurate luminance relationships across images and views without ad-hoc normalization. "maintaining radiometric consistency across scenes"

- RAW sensor data: Linear, high-bit-depth measurements directly from the camera sensor prior to demosaicing and tone-mapping. "leveraging RAW sensor data \citep{rawnerf,hdrsplat,le3d,li2024chaos}"

- Spherical harmonics (SH): A set of orthonormal basis functions on the sphere used to model view-dependent appearance with low-frequency expansions. "low-order spherical harmonics (SHs) (e.g., degree 3, yielding 16 coefficients)."

- Tone mapping: Converting HDR radiance to display-referred LDR images using a compression operator for visualization. "apply tone mapping to our HDR renderings"

- Tone-mapping network: A learned module that maps between LDR and HDR domains, often used to fuse exposures or reconstruct HDR from LDR. "tone-mapping networks \citep{hdr-gs, hdrnerf}"
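PSNR is simple to compute, but on HDR or RAW data the choice of `peak` matters: linear HDR values have no natural maximum of 1, so the peak (or a compression such as μ-law applied beforehand) must be fixed explicitly, and numbers computed under different conventions are not directly comparable. A minimal Python sketch with the peak as an explicit, assumed parameter:

```python
import math


def psnr(pred, target, peak=1.0):
    """Peak signal-to-noise ratio in dB between two equal-length sequences."""
    if len(pred) != len(target) or not pred:
        raise ValueError("inputs must be non-empty and the same length")
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)
```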
