- The paper introduces ArachNet, an agentic framework that uses LLM-based agents to independently compose and implement complex internet measurement workflows.

- The paper details a four-agent architecture that coordinates via a compositional registry to reduce code length and integration latency in multi-step analysis tasks.

- The paper validates the system through case studies replicating expert analyses and forensic investigations, demonstrating efficiency gains and broader accessibility for non-specialists.

Agentic Automation of Internet Measurement Workflows: The ArachNet System

Introduction

Internet measurement research contends with the integration of heterogeneous, specialized tools—BGP analyzers, traceroute processors, topology mappers, and performance monitors—each accompanied by distinct interfaces and data representations. Composing rigorous, multi-step workflows for nuanced research questions or operational incident response has historically required deep domain expertise and extensive manual coordination. The paper introduces ArachNet, an end-to-end agentic workflow automation framework that leverages LLM-based agents to independently assemble and implement expert-level Internet measurement workflows, substantially lowering access barriers for non-specialists and augmenting expert productivity.

System Architecture

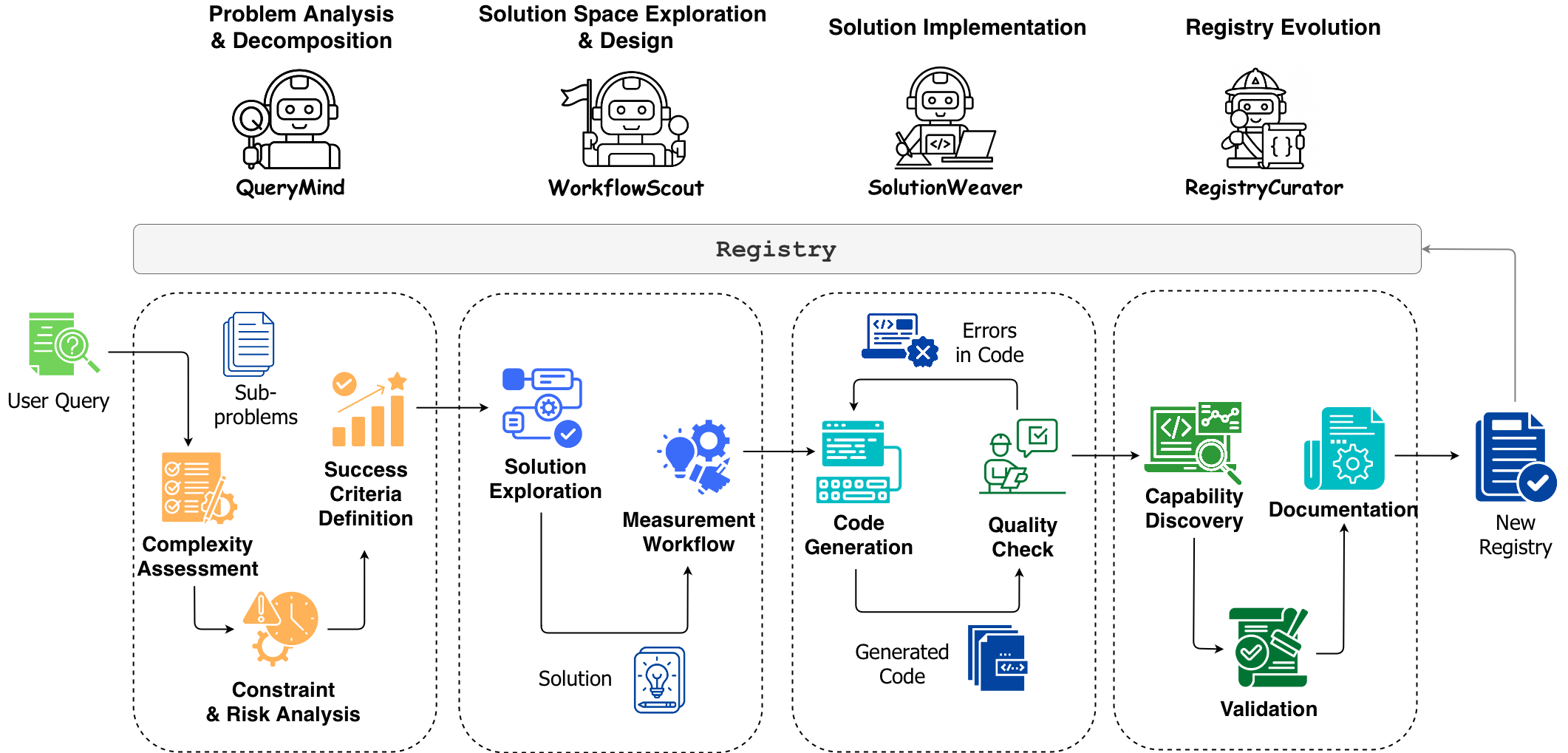

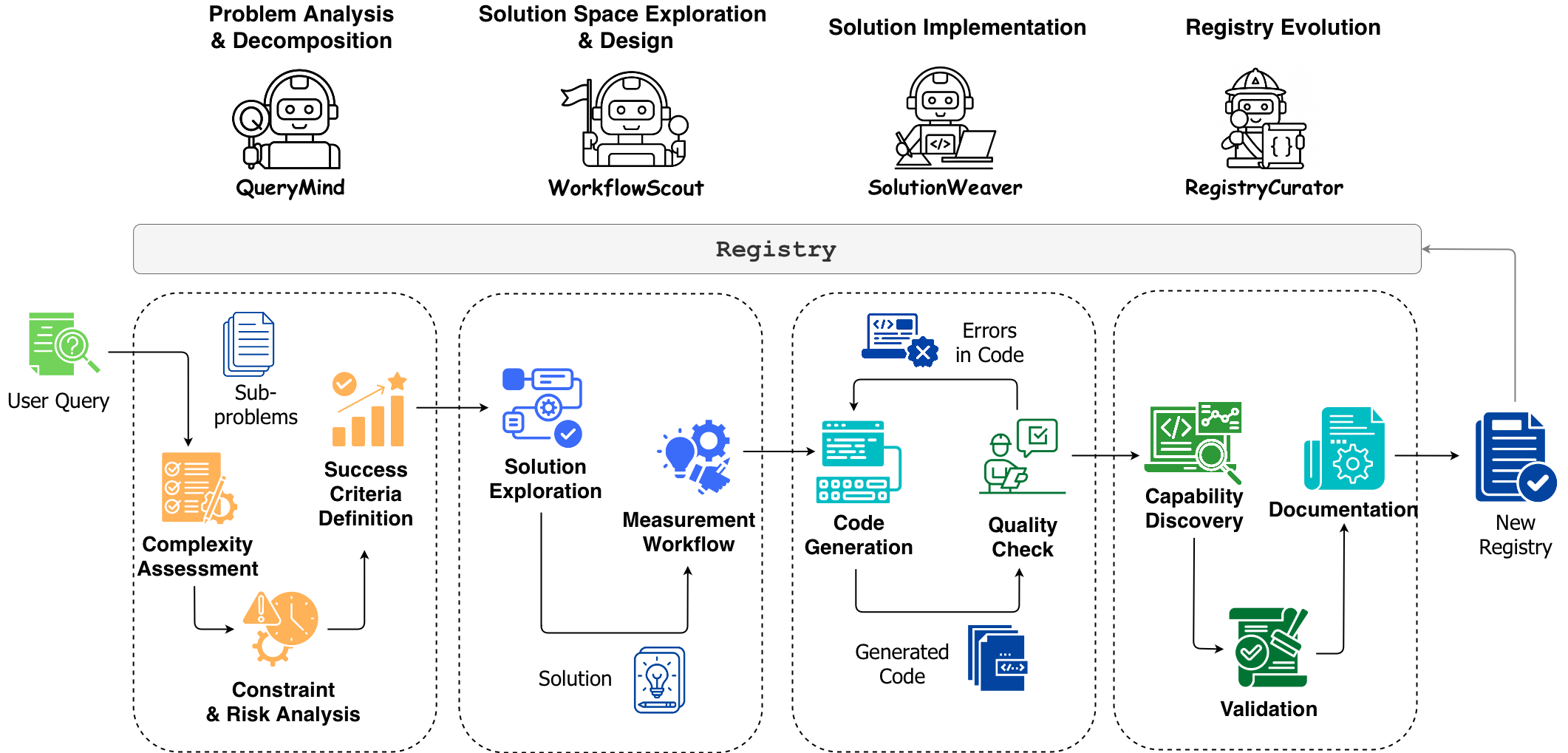

ArachNet’s architecture is decomposed into four tightly coordinated agents, each reflecting a sequential stage of expert reasoning in measurement workflow development: QueryMind (problem decomposition), WorkflowScout (workflow design), SolutionWeaver (workflow implementation), and RegistryCurator (capability extraction and evolution). These agents interact via a shared, curated Registry that encodes available measurement tool capabilities as compositional building blocks rather than exposing their raw implementations.

Figure 1: The four-agent ArachNet architecture showing the specialized roles and interactions among QueryMind, WorkflowScout, SolutionWeaver, and RegistryCurator.

Registry: Compositional Capability Abstraction

Central to ArachNet is the Registry, which abstracts tool capabilities into succinct API-style entries, describing each tool’s function, expected inputs/outputs, and constraints. This abstraction enables agents to explore compositionally feasible pathways without being obstructed by massive or inconsistent codebases. The Registry’s design supports efficient scaling with new tools and facilitates automatic generation and update pipelines, which future iterations could further optimize via autonomous documentation and code analysis.
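To make the idea of an API-style entry concrete, here is a minimal sketch of what a registry record and lookup might look like. The schema, field names, and the two example capabilities are illustrative assumptions, not the paper's actual registry format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegistryEntry:
    """Hypothetical API-style description of one tool capability."""
    name: str
    description: str
    inputs: dict            # parameter name -> type label
    outputs: dict           # result name -> type label
    constraints: tuple = () # free-text usage constraints


class Registry:
    """Holds entries by name and answers simple capability lookups."""

    def __init__(self):
        self._entries = {}

    def register(self, entry):
        if entry.name in self._entries:
            raise ValueError(f"duplicate capability: {entry.name}")
        self._entries[entry.name] = entry

    def producers_of(self, type_label):
        """Names of capabilities whose outputs include the given type label."""
        return sorted(
            e.name for e in self._entries.values()
            if type_label in e.outputs.values()
        )


# Example entries; names and type labels are made up for illustration.
registry = Registry()
registry.register(RegistryEntry(
    name="detect_latency_anomalies",
    description="Flag time windows with abnormal round-trip times.",
    inputs={"traceroutes": "TracerouteSet"},
    outputs={"anomalies": "AnomalyWindows"},
    constraints=("requires baseline data before the event",),
))
registry.register(RegistryEntry(
    name="map_links_to_cables",
    description="Associate IP-level links with candidate submarine cables.",
    inputs={"links": "LinkSet"},
    outputs={"cables": "CableCandidates"},
))
```

An agent working against such a structure only ever sees names, type labels, and constraints, which is the property the Registry is meant to provide: composition can be reasoned about without reading tool source code.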

QueryMind: Structured Problem Decomposition

QueryMind ingests natural language queries, systematically decomposes them into atomic measurement subproblems, surfaces latent dependencies, and explicitly codifies data, methodological, and technical constraints. It ensures tractability by preemptively highlighting required data and tool limitations—criteria often implicit or overlooked in naive automation attempts. This explicit separation of problem analysis from solution design mirrors human expert cognitive stages and supports agentic transferability.
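The output of this stage can be pictured as a small dependency graph of subproblems. The sketch below assumes a hypothetical `Subproblem` record and uses the standard-library `graphlib` module to derive an order that respects the dependencies; the subproblem names are invented for the cable-failure example and do not come from the paper.

```python
from dataclasses import dataclass
from graphlib import TopologicalSorter


@dataclass
class Subproblem:
    """One atomic measurement task; all fields are illustrative."""
    id: str
    goal: str
    depends_on: tuple = ()
    constraints: tuple = ()


def execution_order(subproblems):
    """Return subproblem ids in an order that respects dependencies.

    Raises ValueError if a dependency names an unknown subproblem and
    graphlib.CycleError if the dependencies are circular.
    """
    known = {s.id for s in subproblems}
    for s in subproblems:
        missing = set(s.depends_on) - known
        if missing:
            raise ValueError(f"{s.id} depends on unknown: {sorted(missing)}")
    # TopologicalSorter expects node -> set of predecessors.
    graph = {s.id: set(s.depends_on) for s in subproblems}
    return list(TopologicalSorter(graph).static_order())


plan = [
    Subproblem("rank_countries", "Rank countries by lost capacity",
               depends_on=("map_to_countries",)),
    Subproblem("find_affected_links", "Find links riding the failed cable",
               constraints=("needs a cable-to-link mapping",)),
    Subproblem("map_to_countries", "Geolocate endpoints of affected links",
               depends_on=("find_affected_links",)),
]
order = execution_order(plan)
```

Making dependencies and constraints explicit fields, rather than leaving them in free text, is what lets a later stage detect an unsatisfiable plan before any code is generated.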

WorkflowScout: Compositional Solution Design

WorkflowScout constructs candidate solution architectures by searching the design space of registry-exposed capabilities to fulfill all identified subproblems, performing explicit trade-off analysis for approaches requiring alternative toolchains or multi-framework integration. Unlike monolithic or greedy composition policies, WorkflowScout dynamically adjusts exploration depth based on query complexity and system constraints. It resolves dataflow, format translation, and validation dependencies at the design stage to enforce architectural rigor.
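One small piece of this design step, resolving dataflow between capabilities, can be sketched as a search over type-compatible chains. The example below is a deliberately simplified stand-in: it uses plain breadth-first search over hypothetical single-input, single-output capabilities, whereas the paper describes an LLM agent that also weighs trade-offs between alternative toolchains.

```python
from collections import deque

# Hypothetical capabilities: name -> (input type label, output type label).
CAPABILITIES = {
    "parse_traceroutes": ("RawTraceroutes", "HopPaths"),
    "extract_links": ("HopPaths", "LinkSet"),
    "map_links_to_cables": ("LinkSet", "CableCandidates"),
    "summarize_paths": ("HopPaths", "PathStats"),
}


def shortest_chain(have, want, capabilities=CAPABILITIES):
    """Breadth-first search for the shortest capability chain have -> want.

    Returns a list of capability names, or None if no chain exists.
    """
    queue = deque([(have, [])])
    seen = {have}
    while queue:
        current, chain = queue.popleft()
        if current == want:
            return chain
        for name, (src, dst) in capabilities.items():
            if src == current and dst not in seen:
                seen.add(dst)
                queue.append((dst, chain + [name]))
    return None


chain = shortest_chain("RawTraceroutes", "CableCandidates")
```

A chain that type-checks at this level still leaves open questions such as format translation and validation, which is why the text places those concerns in the design stage as well.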

SolutionWeaver: Automated Code Synthesis

SolutionWeaver realizes the selected workflow design as executable code, orchestrating integrations across tools, handling data format translations, and embedding quality-assurance routines—sanity checks, uncertainty quantification, and data consistency verification—directly into the generated implementation. This systematizes quality assurance as a first-class concern, a notable improvement over error-prone post-hoc integration found in most semi-automated pipelines.
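As an illustration of what embedding checks directly into generated code could mean in practice, the sketch below wraps a workflow step so that its output is validated before it flows downstream. The wrapper, the check functions, and the RTT bounds are assumptions made for this example; the paper does not specify how the generated checks are structured.

```python
class QualityCheckError(Exception):
    """Raised when a step's output fails an embedded check."""


def checked(step, checks):
    """Wrap a workflow step so every check runs on its output.

    Each check takes the output and returns an error message or None.
    """
    checks = list(checks)

    def wrapper(*args, **kwargs):
        result = step(*args, **kwargs)
        for check in checks:
            message = check(result)
            if message is not None:
                raise QualityCheckError(f"{step.__name__}: {message}")
        return result

    return wrapper


def non_empty(rtts):
    return None if rtts else "no measurements returned"


def plausible_rtts(rtts):
    # Bounds are an arbitrary illustrative choice, in milliseconds.
    bad = [r for r in rtts if not 0 < r < 10_000]
    return f"{len(bad)} RTT value(s) outside (0, 10000) ms" if bad else None


def load_rtts(raw):
    """Hypothetical step: parse millisecond RTTs from strings."""
    return [float(x) for x in raw]


safe_load = checked(load_rtts, [non_empty, plausible_rtts])
```

Calling `safe_load(["12.5", "80"])` returns the parsed values, while an empty or implausible input raises `QualityCheckError` at the step that produced it rather than surfacing later as a confusing downstream failure.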

RegistryCurator: Workflow-Driven Capability Evolution

RegistryCurator evaluates successful workflow patterns, generalizes reusable integration or utility routines, and merges validated new capabilities into the registry. This enables organic, demand-driven registry evolution constrained by demonstrated utility, mitigating registry bloat and ensuring that registry growth tracks genuinely reusable analytical advancements. The agent also enforces documentation and interoperability requirements before promotion.
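The promotion gate described above can be sketched as a function that lists the reasons a candidate routine is not yet eligible. The `Candidate` fields and the default threshold of two successful uses are assumptions chosen for illustration; the paper does not give concrete promotion criteria.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A routine extracted from generated workflows (fields illustrative)."""
    name: str
    docstring: str
    inputs: dict
    outputs: dict
    successful_uses: int


def promotion_blockers(candidate, existing_names, min_uses=2):
    """Return reasons the candidate cannot be promoted; empty means promote."""
    blockers = []
    if candidate.name in existing_names:
        blockers.append("name already in registry")
    if candidate.successful_uses < min_uses:
        blockers.append(f"only {candidate.successful_uses} successful use(s)")
    if not candidate.docstring.strip():
        blockers.append("missing documentation")
    if not candidate.inputs or not candidate.outputs:
        blockers.append("inputs/outputs not declared")
    return blockers


ready = Candidate(
    name="normalize_timestamps",
    docstring="Convert mixed-format timestamps to UTC epoch seconds.",
    inputs={"records": "RecordSet"},
    outputs={"records": "RecordSet"},
    successful_uses=3,
)
draft = Candidate("tmp_helper", "", {}, {}, successful_uses=1)
```

Returning the list of blockers rather than a bare boolean keeps the decision auditable, which fits the stated aim of tying registry growth to demonstrated utility.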

Empirical Validation

ArachNet is validated through progressive case studies spanning replication of benchmark expert-level analyses, multi-framework orchestration, and forensic root-cause investigations:

- Expert Solution Replication: For queries such as “Identify the impact at a country level due to a cable failure,” ArachNet synthesizes workflows matching the logic and functional coverage of established specialist systems (e.g., Xaminer), despite having access only to primitive building blocks, thus demonstrating the sufficiency of compositional reasoning captured within the agents.

- Multi-Disaster and Multi-Framework Analysis: For complex scenarios (e.g., cascading failures across submarine cables or combined earthquake/hurricane impacts), ArachNet autonomously determines whether integrated workflows are warranted or whether single, multi-purpose functions suffice, maintaining proportionality and avoiding overengineering. It consistently achieves code-length reductions and integration latency improvements versus manual baselines.

- Automated Forensic Investigation: In forensic root-cause analyses, such as correlating latency spikes with submarine cable failures, ArachNet automates the full pipeline: temporal anomaly detection (on traceroute data), infrastructure correlation (using mapping registries), BGP validation, and confidence-ranked candidate identification. The system replaces protracted manual cross-tool synthesis with code that is both functionally correct and interpretable.
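The first stage of the forensic pipeline above, temporal anomaly detection, can be illustrated with a simple robust-statistics detector. This is a sketch under stated assumptions: it uses a median and median-absolute-deviation (MAD) baseline, which is a common technique but not necessarily the one ArachNet's generated code uses, and the RTT series is invented.

```python
from statistics import median


def latency_spikes(rtts, baseline_len, threshold=5.0):
    """Flag indices whose RTT deviates strongly from a baseline window.

    The first `baseline_len` samples define the baseline median and MAD;
    later samples are flagged when they exceed median + threshold * MAD.
    """
    if baseline_len < 1 or baseline_len > len(rtts):
        raise ValueError("baseline_len must be within 1..len(rtts)")
    baseline = rtts[:baseline_len]
    med = median(baseline)
    mad = median(abs(x - med) for x in baseline)
    # Guard against a perfectly flat baseline, where MAD is zero.
    scale = mad if mad > 0 else 1e-9
    return [
        i for i in range(baseline_len, len(rtts))
        if (rtts[i] - med) / scale > threshold
    ]


# Invented RTT series in milliseconds: seven baseline samples, then a spike.
rtts_ms = [40, 42, 41, 39, 40, 43, 41, 120, 125, 42]
spikes = latency_spikes(rtts_ms, baseline_len=7)
```

Here the baseline median is 41 ms with a MAD of 1 ms, so the samples at indices 7 and 8 are flagged and the final sample is not. In a full pipeline, the flagged time windows would then be passed to the infrastructure correlation and BGP validation stages.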

Across all levels, ArachNet’s agentic approach eliminates the days to weeks of manual integration typically required and produces codebases of roughly 250–750 lines for complex scenarios, closely mirroring specialist solution structure and outputs.

Comparative Perspective and Trade-offs

ArachNet’s explicit multi-agent architecture distinguishes it from prior LLM-based systems for network research and operations (e.g., ChatNet, NADA, AgentResearcher) by supporting full-cycle automation of scenario decomposition, toolchain design, code generation, and registry evolution. Unlike Operator Copilot, which addresses individual metric retrieval, ArachNet supports general multi-step composition across heterogeneous measurement domains without human-in-the-loop wiring for each task.

The approach trades off between:

- Domain Coverage: Effective for domains where measurement tooling can be exhaustively enumerated in the registry; less robust for domains with highly dynamic or undocumented tool availability.

- Code Reliability: While architectural and analytical reasoning is captured, non-domain-specific programming errors persist in outputs. These are easily remediated but indicate an area for further LLM codegen refinement or integration with static analysis/verification tools.

- Registry Maintenance Overhead: Manual curation of tool capabilities remains a bottleneck, though mitigable via automated documentation parsing and code analysis agents.

Research Implications and Future Work

ArachNet democratizes access to sophisticated Internet measurement studies, allowing non-experts to bootstrap complex analytical workflows directly from goal-oriented queries. It also raises expert productivity by delegating integration and dataflow concerns to agents, freeing human effort for methodological innovation. The open-sourcing of prompts and case studies fosters reproducibility and community extension.

Open Challenges

- Generalizability: Adapting ArachNet’s agentic template to domains with radically different tool architectures or query styles necessitates further research into transferable decomposition and design prompt patterns, as well as registry schema extensions.

- Verification and Trust: Establishing correctness of novel workflows in uncharted territory, absent ground truth, remains a core challenge. Future directions include consensus sampling via ensemble agent runs and formalization of methodological soundness checks within agent prompts.

- Conflict Handling and Provenance: Automated reconciliation of conflicting outputs from disparate tools, and provenance tracking across workflow execution, are crucial for robust, interpretable pipeline execution.

- Protocol Standardization: Adoption of standardized agent-to-agent and agent-to-tool communication protocols (e.g., MCP, A2A) can further streamline registry evolution, enable plug-and-play integration, and support modular upgradeability.
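The consensus sampling idea mentioned under Verification and Trust can be sketched as a vote across independent agent runs. The function below and its 0.6 agreement threshold are illustrative assumptions; the paper names the direction but does not define a procedure.

```python
from collections import Counter


def consensus(answers, min_agreement=0.6):
    """Return (answer, agreement) for the most common answer across runs.

    The answer is None when the top answer's share of runs falls below
    `min_agreement`; agreement is always the top answer's share.
    """
    if not answers:
        raise ValueError("need at least one run")
    top, count = Counter(answers).most_common(1)[0]
    agreement = count / len(answers)
    return (top if agreement >= min_agreement else None, agreement)


# Invented root-cause conclusions from five independent runs.
runs = ["cable-A", "cable-A", "cable-B", "cable-A", "cable-A"]
winner, agreement = consensus(runs)
```

With four of five runs agreeing, the example yields `cable-A` at 0.8 agreement. Agreement across runs is evidence of stability rather than correctness, so a scheme like this complements, and does not replace, the methodological soundness checks also listed above.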

Conclusion

ArachNet demonstrates that agentic LLM-based workflows, built on compositional, registry-driven architectures, can replicate, generalize, and extend Internet measurement analyses previously restricted to seasoned experts. Its immediate technical gains are reduced integration complexity and analytical latency; it also establishes a conceptual framework for systematically automating compositional scientific reasoning. Future research can extend these paradigms to other analytic domains and address the open challenges in trust, verification, and ecosystem-wide protocol standardization.