Music Flamingo: Scaling Music Understanding in Audio Language Models (2511.10289v1)

Abstract: We introduce Music Flamingo, a novel large audio-LLM designed to advance music (including song) understanding in foundational audio models. While audio-language research has progressed rapidly, music remains challenging due to its dynamic, layered, and information-dense nature. Progress has been further limited by the difficulty of scaling open audio understanding models, primarily because of the scarcity of high-quality music data and annotations. As a result, prior models are restricted to producing short, high-level captions, answering only surface-level questions, and showing limited generalization across diverse musical cultures. To address these challenges, we curate MF-Skills, a large-scale dataset labeled through a multi-stage pipeline that yields rich captions and question-answer pairs covering harmony, structure, timbre, lyrics, and cultural context. We fine-tune an enhanced Audio Flamingo 3 backbone on MF-Skills and further strengthen multiple skills relevant to music understanding. To improve the model's reasoning abilities, we introduce a post-training recipe: we first cold-start with MF-Think, a novel chain-of-thought dataset grounded in music theory, followed by GRPO-based reinforcement learning with custom rewards. Music Flamingo achieves state-of-the-art results across 10+ benchmarks for music understanding and reasoning, establishing itself as a generalist and musically intelligent audio-LLM. Beyond strong empirical results, Music Flamingo sets a new standard for advanced music understanding by demonstrating how models can move from surface-level recognition toward layered, human-like perception of songs. We believe this work provides both a benchmark and a foundation for the community to build the next generation of models that engage with music as meaningfully as humans do.

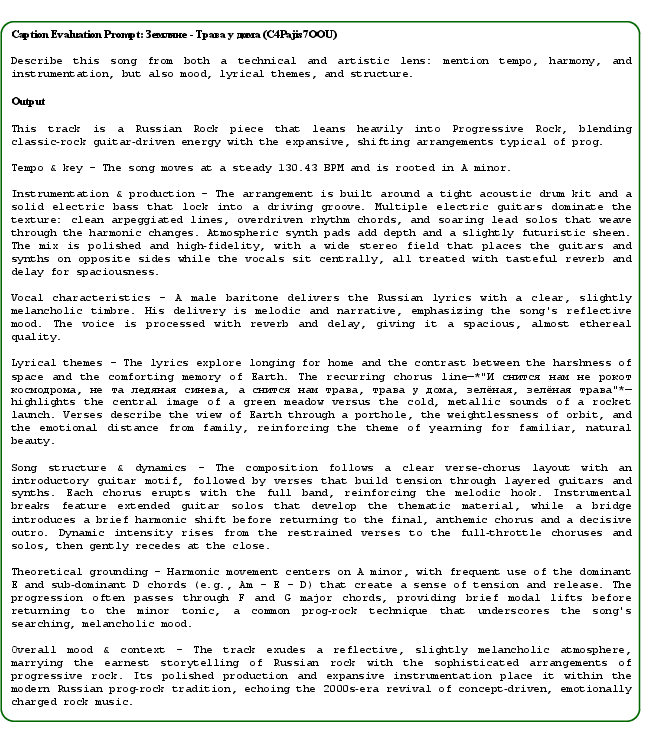

Explain it Like I'm 14

Overview

This paper introduces Music Flamingo, a smart computer model that listens to music and explains it in human language. Unlike earlier models that gave short, generic descriptions, Music Flamingo aims to understand music the way trained musicians do—by noticing details like rhythm, harmony, melody, lyrics, emotions, and even cultural context. The goal is to move from simple “surface” descriptions to deeper, layered understanding of full songs.

Goals and Questions

The researchers wanted to answer a few simple questions:

- How can we teach an AI to understand complex, layered music, not just basic sounds?

- Can an AI describe a song with rich detail (like key, tempo, chords, lyrics, mood, and structure)?

- Can it answer challenging questions about what happens at different moments in a song?

- Can it handle music from many cultures, not just short Western instrumental clips?

- Can we improve the model’s “reasoning”—its ability to think step-by-step about what it’s hearing?

How They Did It (Methods)

To build Music Flamingo, the team combined better training data with a careful training process that teaches the model to listen, analyze, and reason.

Here are the main steps explained in everyday terms:

- Building on a strong base: They started from an earlier audio–LLM called Audio Flamingo 3 and strengthened it, especially for understanding voices, because songs often include singing. This helps with lyrics, vocal style, and timing.

- Creating a big, better dataset (MF-Skills): Instead of short clips, they collected millions of full-length songs from around the world. They used music tools to measure low-level facts like beat (tempo), key, and chords. Then they asked an LLM with music knowledge to write long, detailed captions and smart question–answer pairs about each song. These covered:

- Surface features: tempo, key, instruments, sound quality

- Mid-level structure: chord progressions, rhythm, verse/chorus/bridge

- High-level meaning: lyrics, emotions, cultural styles and context

- They filtered the data to keep only high-quality examples. In the end, MF-Skills included about 5.2 million labeled samples.

- Teaching the model to “show its work” (MF-Think): They built another dataset of step-by-step “thinking” examples grounded in music theory. This teaches the model to explain how it reached an answer, like a student writing out math steps. This helps the model reason, not just guess.

- Reinforcement learning (GRPO): Think of this as training by feedback. The model tries several answers and gets higher rewards when it:

- Follows the required format (first think, then answer)

- Gets the right answer on questions

- Matches structured details (like the correct genre, BPM, or key) in captions

- Over time, the model learns to produce clearer, more accurate, and well-structured explanations.

- Handling long songs and time: Because songs can be long, they extended the model’s memory to handle much longer audio and text. They also added time-aware signals—like putting timestamps on audio pieces—so the model understands what happens when (for example, “the chorus starts at 1:10” or “the key changes at 2:45”). Think of it like adding a timeline so the model doesn’t get lost.
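The timestamp idea above can be sketched as a rotary embedding whose rotation angles come from each audio token's absolute time in seconds rather than its integer position, which is the intuition behind the Rotary Time Embeddings (RoTE) that the paper uses. This is a minimal illustration under stated assumptions: the standard RoPE frequency schedule (base 10000), a 40 ms spacing between audio tokens, and the function name rotary_time_embed are hypothetical choices, not the paper's implementation.

```python
import numpy as np


def rotary_time_embed(x: np.ndarray, times_s: np.ndarray, base: float = 10000.0) -> np.ndarray:
    """Rotate feature pairs of x by angles proportional to each token's timestamp.

    x: (num_tokens, dim) features; dim must be even.
    times_s: (num_tokens,) absolute time of each audio token in seconds.
    Standard RoPE would pass integer positions here; passing seconds is the
    assumed time-grounding variant sketched in this example.
    """
    num_tokens, dim = x.shape
    if dim % 2 != 0:
        raise ValueError("feature dimension must be even")
    if times_s.shape != (num_tokens,):
        raise ValueError("need exactly one timestamp per token")
    # One rotation frequency per feature pair, as in RoPE (hypothetical base).
    inv_freq = base ** (-np.arange(0, dim, 2) / dim)   # (dim/2,)
    angles = np.outer(times_s, inv_freq)                # (num_tokens, dim/2)
    cos, sin = np.cos(angles), np.sin(angles)
    x_even, x_odd = x[:, 0::2], x[:, 1::2]
    out = np.empty_like(x, dtype=float)
    # 2-D rotation of each (even, odd) feature pair.
    out[:, 0::2] = x_even * cos - x_odd * sin
    out[:, 1::2] = x_even * sin + x_odd * cos
    return out


# Usage: 4 audio tokens with 8 features each, assumed to be 40 ms apart.
rng = np.random.default_rng(0)
features = rng.standard_normal((4, 8))
token_times = np.arange(4) * 0.04
rotated = rotary_time_embed(features, token_times)
```

Because each feature pair is rotated by an angle proportional to time, the dot product between two rotated vectors depends only on how many seconds separate the two tokens, not on how many tokens lie between them.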
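The caption reward described above ("matches structured details like the correct genre, BPM, or key") can be illustrated with a simple string-match score: the fraction of reference metadata values that appear in the generated caption. This is a hypothetical stand-in, not the paper's reward; plain substring matching is exactly the kind of check the knowledge-gaps list warns can be gamed (for example, "120" also matches "1200").

```python
def structured_caption_reward(caption: str, metadata: dict[str, str]) -> float:
    """Fraction of reference metadata values (genre, BPM, key, ...) found in the caption.

    Uses case-insensitive substring matching only, as a simplified,
    hypothetical illustration of a structured caption reward.
    """
    if not metadata:
        return 0.0
    text = caption.lower()
    hits = sum(1 for value in metadata.values() if value.lower() in text)
    return hits / len(metadata)


# Usage: genre and BPM match, key does not, so the reward is 2 out of 3.
caption = "An upbeat synth-pop track at 120 BPM in A minor."
reference = {"genre": "synth-pop", "bpm": "120", "key": "C major"}
reward = structured_caption_reward(caption, reference)
```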

Key Terms Explained Simply

- Audio–LLM (ALM): An AI that listens to audio and talks about it in text.

- Captioning: Writing a description of the audio, like a detailed review of a song.

- Question answering (QA): Answering questions about the audio, such as “Which instrument enters at the chorus?” or “What’s the tempo?”

- Chain-of-thought: The model writes out its thinking steps before the final answer—like showing your work in math.

- Reinforcement learning: Training by reward—good answers get positive feedback, bad ones don’t.

- GRPO: A specific reinforcement learning method where the model writes several drafts for the same question and learns by comparing each draft’s reward with the average reward of the group.

- Tempo/BPM: How fast the music is (beats per minute).

- Key/Harmony: The musical “home” and how chords move from one to another.

- Structure: How the song is organized—intro, verse, chorus, bridge, outro.
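The reward-and-feedback loop described under Methods and in the GRPO entry above can be sketched in a few lines: score each sampled answer with a format reward (think first, then answer) plus an accuracy reward, then compare every sample with the rest of its group. Assumptions: the <think>/<answer> tags, the equal weighting of the two rewards, and all helper names are hypothetical; dividing by the group's standard deviation follows the common GRPO formulation, and the paper's exact reward definitions may differ.

```python
import re
from statistics import mean, pstdev

# Hypothetical output format: reasoning inside <think>, final answer inside <answer>.
FORMAT_RE = re.compile(r"^\s*<think>.+?</think>\s*<answer>(.+?)</answer>\s*$", re.DOTALL)


def format_reward(output: str) -> float:
    """1.0 if the output reasons first and then answers, else 0.0."""
    return 1.0 if FORMAT_RE.match(output) else 0.0


def accuracy_reward(output: str, reference: str) -> float:
    """1.0 if the extracted answer matches the reference (case-insensitive), else 0.0."""
    match = FORMAT_RE.match(output)
    if match is None:
        return 0.0
    return 1.0 if match.group(1).strip().lower() == reference.strip().lower() else 0.0


def group_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """Group-relative advantage: each reward compared with its group's mean and spread."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Usage: three sampled answers to "What key is this song in?" (reference: A minor).
samples = [
    "<think>Minor triads dominate; the tonic is A.</think><answer>A minor</answer>",
    "<think>It sounds bright.</think><answer>C major</answer>",
    "A minor",  # no reasoning block, so it fails the format check
]
rewards = [format_reward(s) + accuracy_reward(s, "A minor") for s in samples]  # -> [2.0, 1.0, 0.0]
advantages = group_advantages(rewards)
```

Samples that beat their group average receive a positive advantage and are reinforced, and no separate value network is needed, which matches the glossary's description of GRPO.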
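As a worked example of the Tempo/BPM definition: given beat timestamps from a beat tracker (madmom is one of the MIR tools the paper reportedly used), tempo is 60 divided by the typical gap between beats. The median gap is used so that one missed or extra beat does not skew the estimate; the helper below is a hypothetical illustration, not the paper's pipeline.

```python
from statistics import median


def estimate_bpm(beat_times_s: list[float]) -> float:
    """Estimate tempo in beats per minute from beat timestamps in seconds."""
    if len(beat_times_s) < 2:
        raise ValueError("need at least two beat timestamps")
    # Gaps between consecutive beats, in seconds per beat.
    intervals = [later - earlier for earlier, later in zip(beat_times_s, beat_times_s[1:])]
    # 60 seconds per minute divided by seconds per beat gives beats per minute.
    return 60.0 / median(intervals)


print(estimate_bpm([0.0, 0.5, 1.0, 1.5, 2.0]))  # beats every 0.5 s -> 120.0
```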

What They Found (Results)

Music Flamingo performed better than other models on many tests:

- Music questions and reasoning: It scored higher on multiple benchmarks (like MMAU-Music and MuChoMusic), showing it can answer tougher, multi-step music questions.

- Music information tasks: It identified instruments and genres more accurately, and could handle detailed instrument classification.

- Lyrics transcription: It made far fewer mistakes when writing down lyrics from singing (lower word error rates), both in English and Chinese.

- Rich song captions: Human experts rated Music Flamingo’s descriptions much higher than previous models. It wrote longer, more accurate, and more meaningful captions that connected surface details (tempo, key) to deeper ideas (lyrics, emotions, structure).
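The lyrics results are reported as word error rate (WER): the minimum number of word substitutions, deletions, and insertions needed to turn the model's transcript into the reference, divided by the number of reference words. A minimal sketch using a word-level edit distance follows. It splits on whitespace, so for languages written without spaces, such as Chinese, a character-level variant is commonly used instead; this summary does not say which variant the paper applied.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] = edit distance between the reference prefix seen so far and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,                          # deletion of a reference word
                curr[j - 1] + 1,                      # insertion of an extra word
                prev[j - 1] + (ref_word != hyp_word), # substitution (or exact match)
            )
        prev = curr
    return prev[-1] / len(ref)


print(word_error_rate("the quick brown fox", "the quick brown box"))  # 1 substitution / 4 words -> 0.25
```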

Overall, it showed stronger, more musician-like understanding of songs, not just basic sound recognition.

Why It Matters (Implications)

- Better music discovery and recommendation: With rich, accurate descriptions, apps could suggest songs based on detailed features like mood changes, chord styles, or lyrical themes.

- Music education: Students could get clear explanations of song structure, harmony, and rhythm, like having a friendly music theory tutor.

- Cross-cultural understanding: The model can analyze music from many cultures, helping people explore styles they don’t already know.

- Music creation tools: Songwriters and producers could get smarter feedback or captions for their work, guiding creative decisions.

- Research and community: The team plans to release code, recipes, and datasets (for research use), which can help others build even better models.

Limitations and Future Work

- Cultural balance: Some music traditions are still underrepresented; they plan to include more diverse styles.

- Specialized skills: Certain detailed tasks (like advanced piano technique recognition) need more training.

- Even broader coverage: They aim to add more musical skills for a truly comprehensive music understanding system.

In short, Music Flamingo marks a big step toward AI that “listens like a musician,” connecting sound, structure, lyrics, and culture to explain music in a deep and human-like way.

Knowledge Gaps

Knowledge Gaps, Limitations, and Open Questions

Below is a single, concrete list of what remains missing, uncertain, or unexplored in the paper, framed to guide future research.

- Data composition and representativeness are not quantified: the paper claims 3M+ culturally diverse songs, but provides no transparent distribution across regions, languages, genres, eras, recording conditions, or vocal styles; a detailed breakdown and imbalance analysis are needed to diagnose coverage gaps and biases.

- Copyright, licensing, and provenance of “in-the-wild” songs are unclear: the paper does not specify data sources, rights status, filtering for copyrighted content, or consent protocols; clear documentation and auditable provenance pipelines are required to ensure ethical, compliant use and release.

- Potential test contamination is not addressed: no deduplication or near-duplicate checks are reported across MF-Skills, MF-Think, and evaluation benchmarks (e.g., GTZAN, NSynth, MMAU), nor are track-level overlaps with training sets quantified; a robust leak-prevention protocol is needed.

- Label correctness is uncertain due to tool limitations: MIR tools used for metadata (madmom, Essentia, Chordino, Parakeet) have known error profiles (e.g., key/chord detection in polyphonic, genre-agnostic contexts, lyrics under heavy mixing), yet their error rates and calibration on these datasets are not reported; systematic validation and error modeling are required.

- LLM-generated captions/QAs and CoT introduce circularity risks: captions, QAs, and structured metadata used for rewards are often produced or verified by frontier LLMs and the model itself, without independent ground truth; external, human-validated and signal-level evaluations are needed to reduce self-reinforcement and bias.

- Absence of timestamp-level evaluation: claims of improved temporal understanding (form, chord changes, key modulations, verse/chorus boundaries) are not validated with time-aligned metrics; benchmarks and metrics for timestamp accuracy and boundary detection are needed.

- Reward design for captioning is fragile: the “structured thinking reward” relies on string matches against LLM-produced metadata, which can be gamed and does not guarantee auditory faithfulness; reward functions grounded in verified MIR outputs, human annotations, and calibration metrics should be developed.

- Chain-of-thought reliability is not audited: CoT steps are generated by gpt-oss-120b and filtered with the trained model, but no external audit quantifies factuality, logical consistency, or audio-grounding of the reasoning; third-party verification and error taxonomies of CoT are needed.

- Negative transfer is underexplored: performance drops on Music AVQA suggest trade-offs introduced by post-training; ablations are needed to quantify when RL/CoT improve or impair specific tasks and how to mitigate regressions.

- Lack of comprehensive ablations: the paper does not isolate the effects of (i) multilingual/multi-talker ASR pretraining, (ii) MF-Skills SFT, (iii) RoTE time embeddings, (iv) MF-Think CoT, and (v) GRPO; controlled ablation studies are necessary to attribute gains.

- RoTE’s benefits and limitations are not quantified: no report of improvements from Rotary Time Embeddings versus RoPE, nor analysis under tempo fluctuation (rubato), variable sampling rates, and beat-synchronous representations; comparative studies are needed.

- Long-context audio handling is unspecified: the model increases text context to ~24k tokens, but how full-length audio (up to 20 minutes) is chunked, indexed, or streamed for consistent global reasoning is not detailed; scalable long-audio strategies and consistency tests are needed.

- Cross-cultural theory and non-Western music understanding are not rigorously evaluated: tasks like raga identification, tala/polyrhythm recognition, microtonal intonation, and ornamentation are described but not benchmarked with expert-validated datasets; standardized non-Western MIR benchmarks are needed.

- Lyrics transcription coverage is narrow: results are reported for Chinese and English only; broader evaluation across languages with diverse phonotactics, script systems, and singing techniques (rap, growl, melisma) is needed.

- Instrument-specific and performance-technique understanding is limited: fine-grained recognition (e.g., piano pedaling, bowing articulation, guitar techniques, vocal registers) is cited as a gap but not benchmarked; targeted datasets and metrics should be built.

- Robustness to realistic conditions is untested: effects of live recordings, crowd noise, compression artifacts, mixing styles, mastering differences, and low SNR are not measured; stress-testing and robustness benchmarks are needed.

- Distinguishing audio-grounded analysis from text priors remains open: the paper critiques text-derived shortcuts but does not provide protocols to quantify audio versus metadata reliance; sensory-grounding tests and counterfactual audio manipulations should be introduced.

- Human evaluation lacks detail and rigor: expert rater recruitment, instructions, intercoder reliability (e.g., Cohen’s κ), and cross-cultural raters are not reported for SongCaps; standardized, transparent human evaluation protocols are needed.

- LLM-as-a-judge risks bias: GPT-based scoring of coverage/correctness is vulnerable to style bias and hallucination; multi-judge ensembles, calibration against human ratings, and audio-grounded consistency checks should be implemented.

- Comparisons to MIR specialist systems are limited: evaluations focus on LALMs; rigorous baselines against specialist MIR models (e.g., state-of-the-art chord recognition, key change tracking, structure segmentation) would clarify where LALMs help or lag.

- Source separation and pitch tracking are not integrated: the paper relies on multi-talker ASR to parse overlapping voices, but no explicit separation or pitch-aware modeling is reported; incorporating source separation and robust pitch/intonation tracking may improve lyric alignment and timbre analysis.

- Efficiency and deployment are not addressed: training uses 128×A100 (80GB), but inference latency, memory footprint, streaming/online operation, and pathways for distillation or edge deployment are not discussed; practical deployment strategies remain open.

- Model architecture and size are under-specified: parameter counts, encoder details, fusion mechanisms, and tokenizer configurations are not fully documented; full reproducibility requires transparent model specs and training logs.

- Safety and ethical dimensions are unexplored: risks of amplifying cultural stereotypes, misinterpretation of lyrics, or enabling misuse (e.g., illicit metadata extraction) are not discussed; safety evaluations and mitigation strategies are needed.

- Integration with generative music systems is only hypothesized: the claim that richer captions help generative models is not empirically tested; studies quantifying benefits to controllability, faithfulness, and user satisfaction in generation are needed.

- Multimodal extensions (audio+video+symbolic) are unexamined: many songs have associated videos and scores/lead sheets; exploring joint modeling with symbolic music and visual performance cues could enhance structure and technique understanding.

- Time-aligned explanations are not provided: captions discuss structure, chords, and dynamics, but do not include explicit, verifiable timecodes; developing time-stamped explanatory outputs and evaluating their accuracy would improve trust and utility.

- Open-source release specifics are unclear: timelines, license terms (“research-only”), redaction of copyrighted audio, and mechanisms for community auditing are not provided; concrete release plans and governance are needed.
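The human-evaluation gap above calls for intercoder reliability such as Cohen's κ, which corrects the raw agreement between two raters for the agreement expected by chance: κ = (p_o - p_e) / (1 - p_e). A minimal sketch for two raters follows; the example ratings are invented for illustration and are not SongCaps data.

```python
from collections import Counter


def cohens_kappa(rater_a: list, rater_b: list) -> float:
    """Cohen's kappa for two raters assigning categorical labels to the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items where both raters chose the same label.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: probability both pick the same label if rating independently.
    p_expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if p_expected == 1.0:
        return 1.0  # convention for the degenerate case: both used one identical label
    return (p_observed - p_expected) / (1.0 - p_expected)


# Usage: two raters judging four captions as acceptable (1) or not (0).
print(cohens_kappa([1, 1, 0, 0], [1, 0, 0, 0]))  # -> 0.5
```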

Practical Applications

Immediate Applications

Below are practical, deployable applications that can be implemented now using the paper’s released methods, datasets, and demonstrated capabilities.

- Rich catalog enrichment and automated music metadata generation (Music industry; software)

- What: Generate detailed, theory-aware captions and layered metadata (key, tempo, chord progressions, structure, instrumentation, lyrical themes, cultural context) for back catalogs and new releases.

- Tools/workflows: Batch-processing pipeline that feeds songs into a Music Flamingo–powered metadata_enrichment API; writebacks to label/publisher CMS and data warehouses; QA dashboards using the paper’s SongCaps rubric for human-in-the-loop verification.

- Assumptions/dependencies: Commercial rights to process audio; the model is currently research-only, so commercial deployment requires licensing or internal re-training; compute for long-context inference on full-length tracks.

- Multilingual lyrics transcription and alignment for vocals (Accessibility; streaming platforms; creator tools)

- What: High-accuracy lyric transcription (WER improvements shown for Chinese and English) and alignment to timecodes, enabling karaoke, subtitle tracks, and sing-along features.

- Tools/workflows: lyrics_transcribe_align service with forced-alignment outputs; integration into player UIs; export to subtitle formats (e.g., LRC, SRT); optional translation layer.

- Assumptions/dependencies: Vocal prominence and mix quality affect accuracy; for overlapping vocals, quality may vary with genre/mix density; policy compliance for user-generated content.

- Advanced search and recommendation with theory-aware descriptors (Streaming; music discovery; enterprise search)

- What: Index tracks using layered descriptors (e.g., “minor-key ballads with gospel-influenced backing vocals and a verse–chorus–bridge form”) to drive intent-based search, editorial workflows, and playlists.

- Tools/workflows: semantic_music_index service combining MF-generated metadata with vector search (e.g., FAISS); editorial UI for filtering by structure/harmony/lyrical themes.

- Assumptions/dependencies: Initial tuning for genres with underrepresented cultural forms; human editorial oversight for sensitive themes.

- Sync licensing support and cue sheet automation with time-aware analysis (Media/Advertising; legal ops)

- What: Produce timecode-aligned breakdowns of song sections, motif recurrence, lyrical themes, and moods to streamline sync decisions and cue-sheet generation.

- Tools/workflows: cue_sheet_auto pipeline that outputs section maps (intro/verse/chorus/bridge), mood arcs, and lyric topic highlights; integration with rights management systems.

- Assumptions/dependencies: Accurate time segmentation depends on consistent tempo and clear structure; legal review still needed before submission.

- Ethnomusicology and cultural analytics dashboards (Academia; cultural institutions)

- What: Cross-cultural analysis of musical forms, ragas/modes, polyrhythms, instrumentation trends, and lyric themes across large corpora.

- Tools/workflows: Data lake ETL + MF-Skills-driven cultural_traits_extractor; interactive visualizations (genre vs. form vs. geography); export to CSV/Parquet for empirical studies.

- Assumptions/dependencies: Known model limitations on underrepresented traditions; culturally diverse evaluation sets required; IRB or ethical review for sensitive datasets.

- Music education assistants: theory-grounded explanations and practice aids (Education; edtech; daily life)

- What: Explain harmonic movements, form, phrasing, and production choices in language accessible to learners; generate practice notes tied to timecodes.

- Tools/workflows: Classroom assistant that ingests assigned tracks and outputs guided analyses; DAW-integrated overlay of chord/key changes; study_companion chatbot using MF-Think-style chain-of-thought.

- Assumptions/dependencies: Teacher oversight; correct handling of rare voicings/advanced techniques; transparent uncertainty when model confidence is low.

- Creator and producer feedback: structure/mix notes extracted from audio (Software; creator tools)

- What: Provide actionable notes on arrangement dynamics, instrumentation balance, and vocal phrasing; identify repeated sections for efficient editing.

- Tools/workflows: DAW plug-in with timeline overlays (keys/chords/sections); “mix notes” generated via structured reasoning rewards; export markers to Ableton/Logic/Reaper.

- Assumptions/dependencies: Not a substitute for mastering engineers; production-specific feedback may require genre-tuned models.

- Content moderation and sensitive-topic detection through lyrics (Policy; safety; platforms)

- What: Flag explicit content, self-harm mentions, hate speech, and other policy-relevant lyric themes with timestamps.

- Tools/workflows: lyric_safety_scan service with policy taxonomies and escalation thresholds; human review queue; audit logs.

- Assumptions/dependencies: Cultural context can change interpretation; false positives for metaphorical or historical lyrics must be managed; transparency and appeal mechanisms required.

- Rights and royalty auditing assistance (Music industry; finance; legal tech)

- What: Match lyrical content, structure, and instrumentation signatures to improve catalog deduplication and potential cover detection; enhance reporting consistency.

- Tools/workflows: royalty_audit_assist that cross-references MF-derived signatures with PRO data; anomaly detection on cue sheets and declarations.

- Assumptions/dependencies: Robust identity resolution still requires fingerprinting and legal validation; MF complements but doesn’t replace acoustic fingerprint systems.

- Dataset curation and benchmarking improvements for audio models (Academia; ML research)

- What: Use MF-Skills and MF-Think to train/evaluate reasoning-centric audio LMs beyond surface-level music tasks; adopt structured reward training (GRPO) and time grounding (RoTE).

- Tools/workflows: Reproducible research pipelines; structured_rewards toolkit for caption QA; RoTE module for any time-series tokenization.

- Assumptions/dependencies: Research-only licenses; need compute and long-context handling; careful prompt hygiene if using external LLMs.

- Cross-lingual lyric translation with musicality preservation (Accessibility; education; daily life)

- What: Translate and annotate lyrics with prosody/timing notes to preserve singability in karaoke or learning contexts.

- Tools/workflows: lyric_translate_align with prosody hints; bilingual subtitles; educator dashboards.

- Assumptions/dependencies: Cultural idioms and rhyme schemes may be lost; optional human localization recommended.

- Comparative and structural reasoning for playlist curation (Streaming; editorial)

- What: Explain similarities/differences between tracks (key relationships, motif reuse, vocal styles) to justify playlist cohesion and transitions.

- Tools/workflows: compare_tracks API producing human-readable rationales aligned to timecodes; embed in editorial CMS.

- Assumptions/dependencies: Requires high-fidelity inputs and accurate key/modulation detection; editorial review for brand voice.

- Audio–language teaching aids for speech-in-music contexts (Education; accessibility)

- What: Teach phoneme recognition and diction in singing; highlight enunciation and breath control segments.

- Tools/workflows: vocal_phoneme_coach using MF’s strengthened speech skills; time-aligned phoneme maps; practice feedback loops.

- Assumptions/dependencies: Genre/microphone technique can affect phoneme clarity; not a clinical tool.

- Research reproducibility and evaluation standardization (Academia; policy for ML reproducibility)

- What: Adopt SongCaps-style evaluation with human and LLM-as-a-judge for music captions, reducing reliance on lexical overlap metrics.

- Tools/workflows: songcaps_eval with scoring rubrics (coverage, correctness); crowd-sourcing templates.

- Assumptions/dependencies: LLM-as-a-judge introduces its own biases; human expert panels remain essential for gold evaluations.
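For the lyrics_transcribe_align workflow listed above, the final export step is simple to sketch. Assuming the (hypothetical) aligner returns pairs of start time in seconds and lyric line, the basic LRC format writes each line as [mm:ss.xx] followed by the text; extended LRC features such as per-word timing are ignored here, and to_lrc is an illustrative name.

```python
def to_lrc(lines: list[tuple[float, str]]) -> str:
    """Render time-aligned lyric lines as basic LRC text: [mm:ss.xx]lyric."""
    out = []
    for start_s, text in sorted(lines, key=lambda item: item[0]):
        if start_s < 0:
            raise ValueError("timestamps must be non-negative")
        centis = round(start_s * 100)           # LRC uses hundredths of a second
        minutes, centis = divmod(centis, 6000)  # 6000 hundredths per minute
        seconds, centis = divmod(centis, 100)
        out.append(f"[{minutes:02d}:{seconds:02d}.{centis:02d}]{text}")
    return "\n".join(out)


# Usage: lines are sorted by start time before rendering.
print(to_lrc([(70.0, "Chorus line"), (12.5, "First verse")]))
# [00:12.50]First verse
# [01:10.00]Chorus line
```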

Long-Term Applications

The following applications are feasible but require further research, scaling, licensing, validation, or integration efforts before robust deployment.

- Human-in-the-loop co-listening and music analysis companions (Consumer software; education)

- What: Conversational agents that “listen” with the user, track emotional arcs, annotate structure/harmony, and adapt analyses to user goals (learning, discovery, therapy).

- Tools/workflows: Real-time streaming inference with low latency; session memory across multiple tracks; personalized reasoning prompts.

- Assumptions/dependencies: Efficient long-audio streaming; robust personalization; ethical guardrails for sensitive topics.

- Generative music models conditioned on layered, theory-aware captions (Music generation; creator tools)

- What: Use MF-style rich captions as conditioning to steer generative systems toward precise harmony/structure/production targets.

- Tools/workflows: caption_to_generation bridges; joint training with music diffusion/transformer models; feedback loops via structured rewards.

- Assumptions/dependencies: Licensing for training data; controlling plagiarism/copyright; evaluation metrics for musicality and originality.

- Cross-cultural competence expansion and bias auditing (Policy; academia; platforms)

- What: Systematic coverage of underrepresented traditions; automated audits that surface gaps in model performance across cultures and styles.

- Tools/workflows: cultural_audit benchmark suites; targeted data acquisition; governance dashboards.

- Assumptions/dependencies: Access to diverse, high-quality labeled data; collaboration with cultural experts; community standards for fairness.

- Live performance and broadcast analysis (Broadcast; events; creator tools)

- What: Real-time structure tracking, lyric transcription, and dynamic mix notes during concerts or live streams.

- Tools/workflows: Edge inference on audio feeds; live_music_monitor with robust latency and error recovery; integration with broadcast mixers.

- Assumptions/dependencies: Strong noise robustness; overlapping vocals and crowd noise; legal permissions and privacy.

- Instrument-technique and micro-expression recognition (MIR+education; pro training)

- What: Fine-grained detection of fingerings, articulations, pianistic pedaling, guitar picking patterns, and microtiming.

- Tools/workflows: High-resolution encoders; specialized technique datasets; DAW overlays for technical coaching.

- Assumptions/dependencies: Requires dedicated data and sensors (e.g., contact mics, MIDI), beyond current audio-only scope.

- Rights identification beyond fingerprints (Legal tech; finance)

- What: Combine theory-aware descriptors and lyrical themes with acoustic fingerprints to improve cover/derivative work detection and provenance tracking.

- Tools/workflows: rights_id_plus that fuses MIR signatures and MF descriptors; probabilistic match scoring; legal review workflows.

- Assumptions/dependencies: High-precision thresholds; court-tested methodologies; mitigation of false matches.

- Accessibility compliance automation across platforms (Policy; platforms)

- What: End-to-end pipelines that generate and validate time-aligned lyrics, translations, and descriptive captions to meet accessibility mandates at scale.

- Tools/workflows: accessibility_automation with QA gates; compliance reporting; audit trails.

- Assumptions/dependencies: Standardization across jurisdictions; defined quality thresholds; human verification.

- Clinical-grade music therapy personalization (Healthcare)

- What: Tailor playlists and interventions using MF-derived emotional arcs, tempo/key profiles, and lyrical themes to match therapy goals (e.g., anxiety reduction, memory recall).

- Tools/workflows: therapy_matcher integrating patient profiles; clinician dashboards; outcome tracking.

- Assumptions/dependencies: Clinical validation, data privacy, IRB approvals; careful handling of lyrical content triggers.

- Knowledge graphs of global music structure and meaning (Academia; platforms)

- What: Build machine-readable graphs linking songs’ structures, harmonic pathways, lyrical motifs, and cultural contexts for large-scale analysis and recommendation.

- Tools/workflows: music_kg_builder with schema for harmony/form/themes; SPARQL/GraphQL endpoints.

- Assumptions/dependencies: Consistency of annotations; community-maintained ontologies; licensing for public datasets.

- Real-time editorial assistance for sync and marketing (Media; advertising)

- What: On-the-fly thematic matching of scenes to song sections (e.g., chorus lifts for climactic cuts), with evidence-based rationales.

- Tools/workflows: scene_to_song_match that ingests rough cuts and suggests aligned song segments; human override.

- Assumptions/dependencies: Accurate audiovisual alignment; robust scene understanding beyond audio; creative approval cycles.

- Transfer of RoTE time-grounding to other time-series modalities (Software; broader AI)

- What: Apply Rotary Time Embeddings to biosignals, sensor streams, or financial tick data where absolute time grounding improves reasoning.

- Tools/workflows: rote_time_module packaged for general LLM tokenizers; benchmarks for non-audio tasks.

- Assumptions/dependencies: Validation per domain; tokenization schemes compatible with absolute timestamps.

- Standardization of evaluation practices for music AI (Policy for AI governance; academia)

- What: Establish community standards for layered caption evaluation (coverage, correctness, cultural grounding) and reasoning transparency with chain-of-thought.

- Tools/workflows: Open benchmarks and scoring rubrics; shared leaderboards; documented uncertainty reporting.

- Assumptions/dependencies: Consensus building across stakeholders; safeguards for CoT disclosure (privacy, bias).

Cross-cutting assumptions and dependencies

- Licensing and usage: The paper notes research-only release; commercial use requires appropriate licenses or internal training on owned/cleared data.

- Compute and latency: Long-context inference on full-length songs may need GPU/accelerator resources and optimization for production latency.

- Cultural coverage: Current limitations on underrepresented traditions; performance may vary across languages, styles, and recording conditions.

- Human oversight: Many applications (education, editorial, legal, clinical) require human-in-the-loop review and domain expertise.

- Integration with external tools: For some workflows, MF relies on or benefits from MIR tools (beat trackers, chord estimators) and separation/ASR modules.

- Evaluation and safety: LLM-as-a-judge introduces bias; sensitive lyric themes need careful moderation and auditability.

Glossary

- Accuracy Reward: A reinforcement learning signal that scores how correct a model’s final answers are in QA tasks. "we employ the accuracy reward to encourage the model to generate accurate final answers."

- Advantage (RL): A quantity in policy-gradient methods measuring how much better a sampled action is than average, used to update the policy. "to estimate the advantage."

- ALMs (Audio–LLMs): Models that reason over auditory inputs paired with language, covering speech, sounds, and music. "Within this space, ALMs focus specifically on reasoning over auditory inputs such as speech, sounds, and music."

- Automatic Speech Recognition (ASR): Technology that transcribes spoken audio into text. "such as automatic speech recognition (ASR)"

- BPM (Beats Per Minute): A measure of tempo indicating the number of beats occurring in one minute. "low-level information (tempo, BPM, keys)"

- Chain-of-Thought (CoT): Explicit, step-by-step reasoning traces that guide the model to a final answer. "a high-quality Chain-of-Thought (CoT) dataset used for cold-start reasoning."

- CHiME: A series of speech recognition challenge datasets with noisy, far-field, overlapping multi-speaker recordings, commonly used to train and test ASR in overlapping-speaker scenarios. "including CHIME"

- Cold-start reasoning: An initial phase where the model is trained to produce explicit reasoning before reinforcement learning fine-tuning. "used for cold-start reasoning."

- Cross-modal retrieval: Retrieving items across different modalities (e.g., text from audio or audio from text) via a joint embedding space. "enabling tasks like cross-modal retrieval."

- Encoder–decoder ALMs: Architectures that augment decoder-only LLMs with audio encoders to process and reason over audio. "Encoder–decoder ALMs (often called Large Audio–LLMs, LALMs), which augment decoder-only LLMs with audio encoders."

- Encoder-only ALMs: Architectures that learn joint audio–text embeddings, typically used for retrieval and matching. "Encoder-only ALMs, which learn a joint embedding space for audio and text, enabling tasks like cross-modal retrieval."

- Fully sharded training: A distributed training technique that shards model states across devices to handle large contexts and reduce memory usage. "adopt fully sharded training to handle the increased memory requirements."

- GRPO (Group Relative Policy Optimization): A reinforcement learning algorithm that uses group-wise average rewards instead of a value function to compute advantages. "GRPO obviates the need for an additional value function and uses the average reward of multiple sampled outputs for the same question to estimate the advantage."

- Harmonic analysis: The study of chord progressions and tonal relationships to understand musical structure. "toward tasks requiring temporal understanding, harmonic analysis, lyrical grounding, and other skills."

- Importance sampling ratio: The ratio of the current policy's probability of an output to that of the policy that sampled it, used in PPO-style updates to correct for the mismatch between the two and often clipped for stability. "the clipping range of the importance sampling ratio"

- KL-penalty: A regularization term based on KL divergence that keeps the learned policy close to a reference policy. "the regularization strength of the KL-penalty term that encourages the learned policy to stay close to the reference policy"

- LALMs (Large Audio–LLMs): Decoder-based LLMs augmented with audio encoders to understand and reason about audio. "Large Audio–LLMs, LALMs"

- LLM-as-a-judge: Using an LLM to evaluate outputs (e.g., captions) for correctness and coverage. "we evaluate captions using human-expert judgments and LLM-as-a-judge assessments."

- madmom: A Python library for music signal processing, notably used for beat tracking. "including madmom (beat)"

- MMAU: A massive multi-task audio understanding and reasoning benchmark spanning speech, sounds, and music; MMAU-Music is its music subset. "On MMAU-Music, it reaches a competitive 76.83 accuracy"

- MMAU-Pro: A harder successor to MMAU built around more difficult, less biased tasks; MMAU-Pro-Music is its music subset. "on the tougher MMAU-Pro-Music ... Music Flamingo scores 65.6"

- MuChoMusic: A benchmark evaluating perceptual music understanding across diverse attributes. "MuChoMusic (perceptual version)"

- Music Information Retrieval (MIR): A research field focused on extracting, classifying, and retrieving information from music audio. "music understanding has a long history in Music Information Retrieval (MIR), encompassing retrieval, classification, and captioning."

- Parakeet: An NVIDIA automatic speech recognition (ASR) model family, used here to transcribe lyrics from songs. "Parakeet (lyrics)"

- Polyrhythms: Multiple contrasting rhythmic patterns layered simultaneously, common in various musical traditions. "polyrhythms in African drumming"

- RoPE (Rotary Position Embeddings): A positional encoding method applying rotations in embedding space based on token indices. "Unlike standard RoPE, where the rotation angle θ depends on the token index i as θ ← -i ⋅ 2π"

- Rotary Time Embeddings (RoTE): A time-grounded variant of rotary embeddings that uses absolute timestamps rather than token indices. "we employ Rotary Time Embeddings (RoTE)"

- Structured Thinking Reward: A custom RL reward that scores captions against structured metadata to encourage theory-grounded descriptions. "The structured thinking reward function computes a string match for each answer"

- Supervised Fine-Tuning (SFT): Training the model on labeled input-output examples; here it primes the model's reasoning before RL optimization. "we first perform SFT of the music foundation model"

- Temporal understanding: The ability to perceive and reason about time-dependent musical events (beats, changes, phrasing). "toward tasks requiring temporal understanding"

- Turn-taking: Alternation of speakers/singers; in audio models, the capability to parse who speaks/sings when. "enabling the model to parse turn-taking and overlapping voices"

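- Word Error Rate (WER): A standard transcription-accuracy metric: the number of word substitutions, deletions, and insertions needed to turn the reference into the hypothesis, divided by the number of words in the reference (lower is better). "It also delivers a significantly lower WER on Chinese and English lyrics transcription"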
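The cross-modal retrieval and encoder-only ALM entries above can be illustrated with a minimal sketch: assuming an encoder-only ALM has already mapped a text query and several audio clips into the same embedding space, retrieval reduces to ranking clips by cosine similarity. The toy 3-dimensional vectors and the helper names `cosine` and `retrieve` are hypothetical; real embeddings come from the model's encoders and are much larger.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def retrieve(query_emb, candidate_embs, top_k=1):
    """Return indices of the top_k candidates most similar to the query."""
    scored = sorted(
        ((cosine(query_emb, emb), idx) for idx, emb in enumerate(candidate_embs)),
        reverse=True,  # highest similarity first
    )
    return [idx for _, idx in scored[:top_k]]


# Hypothetical toy embeddings standing in for a joint audio-text space.
text_query = [0.9, 0.1, 0.0]      # e.g. a text description of a song
audio_clips = [[0.0, 1.0, 0.0],   # clip 0
               [1.0, 0.2, 0.1],   # clip 1
               [0.1, 0.0, 1.0]]   # clip 2

print(retrieve(text_query, audio_clips))  # [1]
```

Audio-to-text retrieval works the same way with the roles swapped: embed one clip as the query and rank candidate captions.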
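The GRPO, advantage, importance-sampling-ratio, KL-penalty, and accuracy-reward entries above fit together in one objective. Below is a minimal, sequence-level sketch assuming the commonly cited GRPO formulation: rewards normalized by the group mean and standard deviation, PPO-style clipping of the ratio, and the non-negative estimator r - log r - 1 (with r = pi_ref / pi_theta) for the KL term. The function names and the values of `clip_eps` and `beta` are illustrative assumptions, not the paper's settings; practical implementations work per token on log-probabilities from the current, old, and reference policies.

```python
import math
from statistics import mean, pstdev


def group_advantages(rewards, eps=1e-6):
    """Group-relative advantages: (r_i - group mean) / (group std + eps)."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def clipped_term(ratio, advantage, clip_eps=0.2):
    """PPO-style clipped surrogate for one output; ratio = pi_theta / pi_old."""
    clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
    return min(ratio * advantage, clipped * advantage)


def kl_estimate(logp, logp_ref):
    """Non-negative KL estimator r - log r - 1, with r = pi_ref / pi_theta."""
    log_r = logp_ref - logp
    return math.exp(log_r) - log_r - 1.0


def grpo_objective(rewards, ratios, logps, logps_ref, clip_eps=0.2, beta=0.04):
    """Group average of clipped surrogate minus beta * KL (to be maximized)."""
    advs = group_advantages(rewards)
    terms = [
        clipped_term(rho, a, clip_eps) - beta * kl_estimate(lp, lr)
        for rho, a, lp, lr in zip(ratios, advs, logps, logps_ref)
    ]
    return sum(terms) / len(terms)


# Four answers sampled for one question, scored by a binary accuracy reward.
rewards = [1.0, 0.0, 0.0, 1.0]
print([round(a, 3) for a in group_advantages(rewards)])  # [1.0, -1.0, -1.0, 1.0]
```

The group mean plays the role a learned value function would play in PPO: correct answers in a mixed group get positive advantages and incorrect ones negative. If every sample receives the same reward, all advantages are zero and the group provides no reward signal.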
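To contrast the RoPE and RoTE entries above, the sketch below applies the same rotary rotation with two kinds of position value: a token index (RoPE) or an absolute timestamp in seconds (a RoTE-style reading of the glossary description). It assumes the standard RoPE frequency schedule base ** (-2k/d) with base 10000; the paper's exact angle definition is not reproduced here, and `rotate`, `dot`, and the toy query/key vectors are hypothetical.

```python
import math


def rotate(vec, position, base=10000.0):
    """Rotary embedding: rotate pair (vec[2k], vec[2k+1]) by position * base**(-2k/d)."""
    d = len(vec)
    if d % 2:
        raise ValueError("vector length must be even")
    out = []
    for k in range(d // 2):
        angle = position * base ** (-2 * k / d)
        x, y = vec[2 * k], vec[2 * k + 1]
        out += [x * math.cos(angle) - y * math.sin(angle),
                x * math.sin(angle) + y * math.cos(angle)]
    return out


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


q_vec = [1.0, 0.0, 0.5, 0.5]  # hypothetical query
k_vec = [0.3, 0.7, 1.0, 0.0]  # hypothetical key

# RoPE: positions are token indices (query at token 12, key at token 10).
rope_score = dot(rotate(q_vec, 12), rotate(k_vec, 10))

# RoTE-style (assumption): positions are timestamps in seconds (6.0 s and 5.0 s).
rote_score = dot(rotate(q_vec, 6.0), rotate(k_vec, 5.0))
```

Because each pair is rotated by an angle proportional to its position, the query-key score depends only on the offset between the two positions. With timestamps, that offset is measured in seconds, so the score reflects the actual time gap between audio events rather than the number of tokens between them.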
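The WER entry above can be computed with a word-level edit distance. This is a minimal sketch assuming whitespace tokenization; scripts written without spaces, such as Chinese, would need a different tokenization (often per character). `word_error_rate` is a hypothetical helper name.

```python
def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j]: edit distance between the reference words processed so far and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


# One deleted word out of five reference words.
print(word_error_rate("the quick brown fox jumps", "the quick fox jumps"))  # 0.2
```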
