- The paper presents CMP, a coordination-free lock-free queue design using cyclic memory protection to achieve strict FIFO ordering, unbounded capacity, and robust memory safety.

- It employs a lightweight atomic-based cycle counter and a sliding protection window to decouple memory reclamation from inter-thread coordination.

- Experimental results show up to a 425% throughput improvement and lower latency compared to traditional lock-free queues in high contention environments.

Cyclic Memory Protection: Coordination-Free Lock-Free Queues with Bounded Reclamation

Introduction

Concurrent queues are fundamental for modern parallel computing, required to simultaneously achieve unbounded capacity, strict FIFO semantics, and scalable, lock-free high-performance. Traditional lock-free designs inject significant complexity to prevent classical hazards such as ABA and use-after-free, often sacrificing strict ordering, unboundedness, or performance, especially under high thread counts which are prevalent in large-scale AI training and inference systems. "No Cords Attached: Coordination-Free Concurrent Lock-Free Queues" introduces Cyclic Memory Protection (CMP), a fully coordination-free, lock-free queue design that revisits the simplicity of original FIFO queues while achieving strict semantics and scalability previously considered incompatible.

CMP Algorithmic Framework

CMP's innovation fundamentally revolves around decoupling memory safety from inter-thread coordination through temporal (cycle-based) and state-based protection, both enforced via lightweight atomic invariants rather than expensive runtime protocols.

Key properties:

- Unbounded capacity—no fixed size or preallocation required

- Strict FIFO ordering—never relaxes global enqueue/dequeue order

- Lock-free progress—non-blocking for both enqueue and dequeue, system-wide

- Memory safety—eliminates ABA and UAF via dual protection

- Bounded reclamation—guaranteed progress even if threads crash or stall, unlike epoch/hazard-pointer techniques

- Performance scalability—cost per operation is independent of thread count in the typical case

The design centers around a sliding protection window: every node is tagged at enqueue with a strictly increasing cycle counter. A configurable window W determines the "age" range within which nodes are protected from reclamation. Only nodes in CLAIMED state and outside this window are reclamation candidates, decoupling garbage collection from thread liveness.

Data Structure and Operations

Enqueue

CMP's enqueue is a simplified Michael-Scott variant with all complex "helping" mechanisms eliminated. Each new node is allocated, tagged with the next cycle, and appended to the tail using CAS, maintaining strict temporal order and physical list order.

Dequeue

Dequeue operations use a scan cursor optimization—allowing threads to start from the likely earliest available node, yielding O(1) average complexity. Dequeue claims a node atomically (AVAILABLE → CLAIMED via CAS), updates the global maximal dequeued cycle, and atomically acquires the payload, ensuring no duplicates or race conditions, including under contention or preemption.

Reclamation

Reclamation is triggered periodically (after every N enqueues), scanning only as much of the list as is safe, with no inter-thread global epochs, announcements, hazard-pointer handshakes, or quiescent points. A batch of nodes are reclaimed if both their state and cycle boundaries are safe; this is enforced via straightforward atomic reads and a single batch head-pointer CAS. Advancement is monotonic, and reclamation cannot get stuck due to a failed or stalled thread.

Theoretical Guarantees

CMP proves full linearizability: successful enqueue and dequeue CAS operations represent effective commit points in the global order. FIFO properties are strengthened because, by construction, the dequeue can only claim the very first node in AVAILABLE state, and nodes can only be appended strictly at the end.

Bounded reclamation is mathematically guaranteed: for a window W, no node can be reclaimed before it is W cycles old from the latest dequeued cycle, regardless of thread failures. This prevents the classic problems of epoch protection or hazard pointers, where stalled threads can block reclamation indefinitely and cause memory leakage.

Safety against ABA and UAF is ensured: since the cycle field is immutable and type-stable (nodes are only recycled after thorough pointer clears), CAS-based advancement and the temporal window eliminate all classic hazards.

Lock-freedom is maintained—failed operations only indicate another thread's successful progress—and the scan cursor ensures high concurrency does not degrade performance or operational latency.

Experimental Evaluation

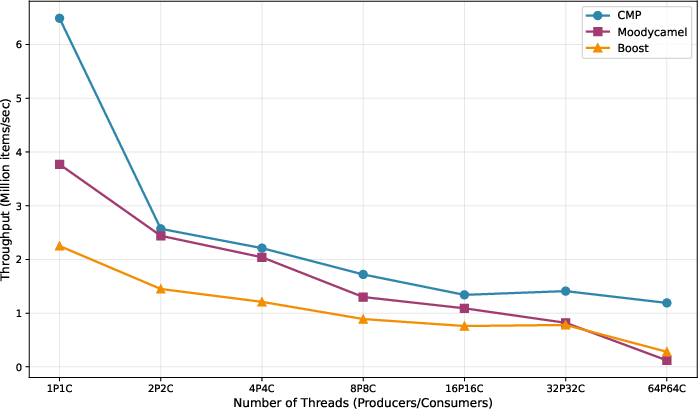

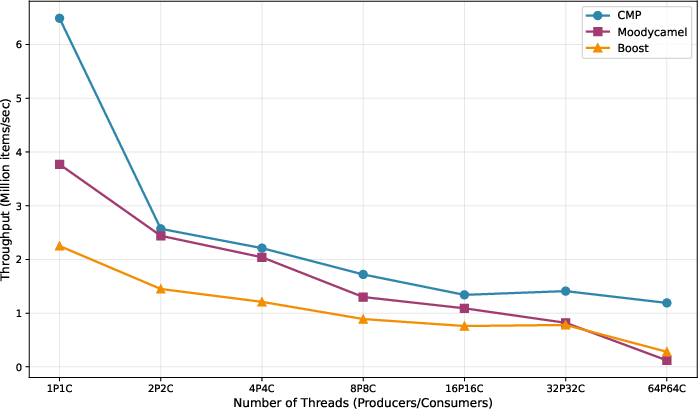

CMP's performance was systematically compared to two widely deployed production implementations: Moodycamel (relaxed-FIFO, high-throughput, per-producer queues) and Boost.Lockfree (strict FIFO, hazard-pointer protected Michael-Scott). Benchmarks measured both peak throughput and latency across a range of producer-consumer configurations, including artificially stressed workloads with synthetic computation and cache contention.

CMP consistently outperforms prior approaches, especially under high contention:

- 64P64C (64 producers, 64 consumers): CMP shows 1.19M items/sec throughput, a 425% improvement over alternatives under peak contention.

- Low-contention regime: 72% higher throughput than Moodycamel, 188% higher than Boost (1P1C).

- Across all regime, scales linearly in thread count with graceful degradation and no catastrophic performance collapse.

Figure 1: A comparison of throughput across thread configurations, illustrating CMP's consistent scaling and dominance at high contention, where it provides a 425% improvement over the next best alternative.

Latency analysis reveals lower average and tail (P99) costs, particularly for dequeues under contention, attributed to reduced synchronization and lock-free memory management:

- In balanced contention (4P4C): 38% lower dequeue latency compared to Moodycamel.

- At scale (32P32C, 64P64C): 10–14% lower enqueue latency and 30–70% lower dequeue latency.

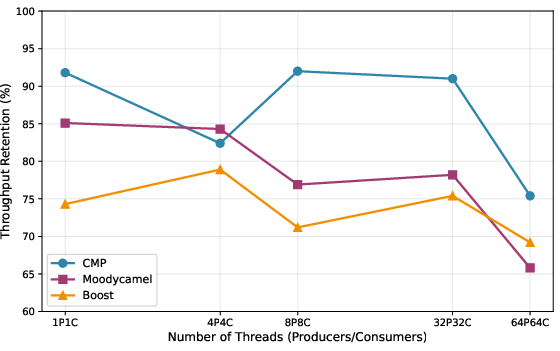

When subjected to synthetic load (memory pressure and scheduling interference):

CMP’s strong P99 performance and tight baseline-to-stress retention underscore the advantage of removing inter-thread coordination from memory safety mechanisms.

Trade-offs, Implementation Considerations, and Applications

Protection window selection (W) is workload-dependent. Larger W increases fault tolerance (covers longer dequeue stalls), but at the cost of additional memory retention; however, unbounded memory pressure is eliminated (no infinite leakage). Real systems can dynamically adapt W based on observed tail-latency characteristics.

Hardware and language constraints: CMP avoids DCAS or obscure atomics; operates on single-pointer-sized CAS, making it broadly implementable in both C++ and managed languages (with pool-type stability). The fast-path requires 3–5 atomics for enqueue, 4–9 for dequeue, enabling deployment on ARM, x86, and RISC-V.

Practical deployment: Given integration experience in production systems (e.g., vector search, real-time communication pipelines), CMP is effective for scale-out AI/ML pipelines, distributed logging, telemetry ingestion, and producer-consumer workloads with non-cooperating (possibly failing) agents.

Implications and Future Research

The CMP algorithm demonstrates that coordination is not a necessary cost for correctness or memory safety in high-concurrency FIFO queue design. This result directly challenges assumptions underlying decades of memory reclamation innovation (hazard pointers, QSBR, epoch-based schemes), which were built on paradigms of infinite hazard protection at enormous practical cost. CMP sidesteps the liveness bottlenecks inherent in coordination-based schemes, enabling deployment in adversarial production settings where threads can stall, crash, or be preempted for extended durations.

This architecture suggests further optimizations: for instance, segmenting the queue as in Moodycamel could permit even more favorable data locality without sacrificing CMP's strict semantics and lock-free properties. Similarly, adaptive windowing and memory structure tuning could offer tighter memory bounds and latency guarantees for real-time, predictable applications.

CMP’s influence extends to any lock-free data structure where protection and reclamation overheads have historically limited parallel scalability and correctness.

Conclusion

CMP reestablishes the foundational simplicity of lock-free queues, showing that strict FIFO, unboundedness, memory safety, and high scalability are not mutually exclusive, but can be unified through simple, temporal invariants. By eliminating the need for inter-thread coordination in the fast path and in reclamation, CMP delivers strong theoretical and practical advantages—up to 4x throughput at scale—and robust resilience to system faults. The work creates a new blueprint for high-concurrency data structures required by contemporary AI and database systems, with further extensibility possible for the next generation of lock-free programming abstractions.