4D Neural Voxel Splatting: Dynamic Scene Rendering with Voxelized Gaussian Splatting (2511.00560v1)

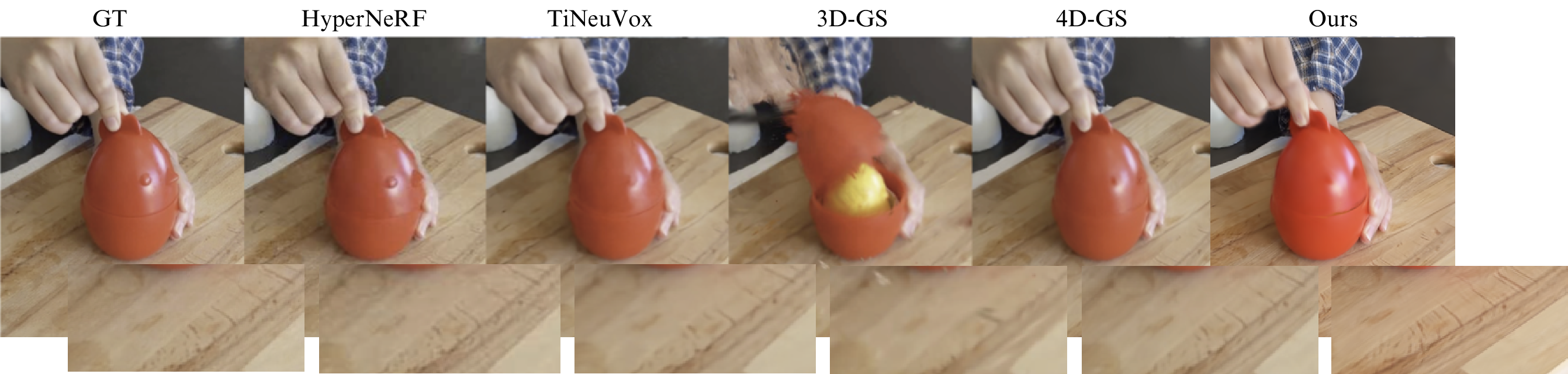

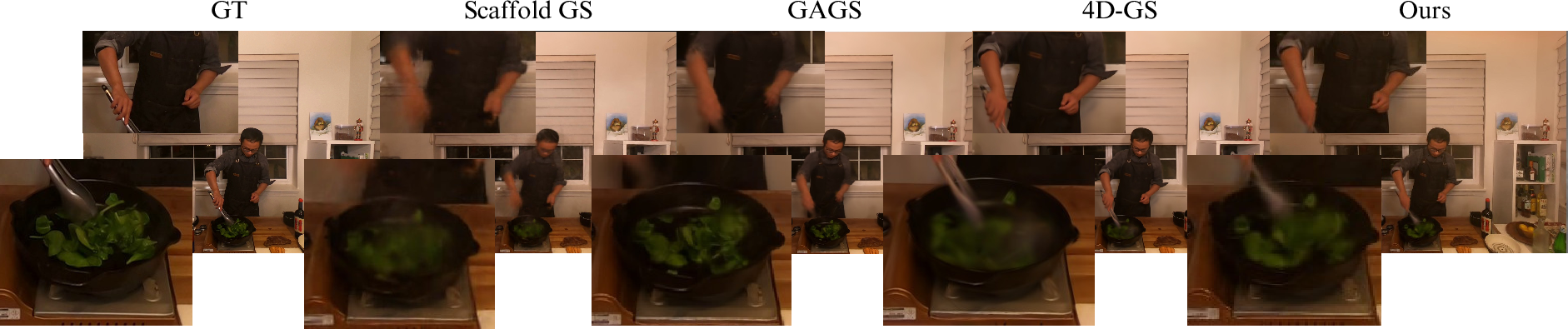

Abstract: Although 3D Gaussian Splatting (3D-GS) achieves efficient rendering for novel view synthesis, extending it to dynamic scenes still results in substantial memory overhead from replicating Gaussians across frames. To address this challenge, we propose 4D Neural Voxel Splatting (4D-NVS), which combines voxel-based representations with neural Gaussian splatting for efficient dynamic scene modeling. Instead of generating separate Gaussian sets per timestamp, our method employs a compact set of neural voxels with learned deformation fields to model temporal dynamics. The design greatly reduces memory consumption and accelerates training while preserving high image quality. We further introduce a novel view refinement stage that selectively improves challenging viewpoints through targeted optimization, maintaining global efficiency while enhancing rendering quality for difficult viewing angles. Experiments demonstrate that our method outperforms state-of-the-art approaches with significant memory reduction and faster training, enabling real-time rendering with superior visual fidelity.

Explain it Like I'm 14

Overview

This paper is about making realistic 3D videos of moving scenes (like a person dancing or objects shifting) that can be viewed from any angle, in real time, without using a lot of computer memory. The authors introduce a method called 4D Neural Voxel Splatting (4D-NVS), which mixes two ideas:

- Voxels: 3D pixels (like tiny cubes) that store information about the scene.

- Gaussian splatting: rendering a scene using lots of soft blobs that blend together to form an image.

“4D” means they handle both space (3D) and time (the 4th dimension), so the system can model motion.

What questions does the paper try to answer?

The paper focuses on three simple goals:

- How can we show moving 3D scenes quickly and smoothly (real-time) without needing huge amounts of memory?

- How can we keep image quality high while reducing training time from hours to minutes?

- Can we make the system smart enough to fix the hard camera angles (the views that look bad) without slowing everything down?

How did they do it?

The method has a few key ideas. To make them easy to understand, think of building a movie set for a camera:

- A voxel grid is like a well-organized map of the scene made of tiny cubes. These voxels act like “anchors” or “stage markers” that help place objects.

- Gaussian splats are like soft, glowing confetti pieces that, when projected onto the camera, blend into a realistic picture. Instead of storing all confetti for every moment, the system creates them only when the camera needs them.
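To make "only when the camera needs them" concrete, here is a minimal sketch of visibility culling over voxel centers. It assumes a simple pinhole camera looking along +z; the function name `visible_voxels` and the `near`/`far` defaults are illustrative choices, not taken from the paper.

```python
import numpy as np

def visible_voxels(centers, world_to_cam, fov_x, fov_y, near=0.1, far=100.0):
    """Boolean mask of voxel centers that fall inside a pinhole camera frustum.

    centers: (N, 3) world-space voxel centers.
    world_to_cam: (4, 4) rigid transform; the camera looks along +z (assumption).
    fov_x, fov_y: full field-of-view angles in radians.
    """
    homo = np.concatenate([centers, np.ones((len(centers), 1))], axis=1)
    cam = (world_to_cam @ homo.T).T[:, :3]
    z = cam[:, 2]
    in_depth = (z > near) & (z < far)
    # Replace invalid depths by 1 so the division below is always safe.
    safe_z = np.where(in_depth, z, 1.0)
    in_x = np.abs(cam[:, 0] / safe_z) <= np.tan(fov_x / 2)
    in_y = np.abs(cam[:, 1] / safe_z) <= np.tan(fov_y / 2)
    return in_depth & in_x & in_y

# One voxel in front of the camera, one behind it, one far off to the side.
centers = np.array([[0.0, 0.0, 5.0], [0.0, 0.0, -5.0], [10.0, 0.0, 1.0]])
mask = visible_voxels(centers, np.eye(4), np.deg2rad(60), np.deg2rad(60))
```

Only voxels where `mask` is true would go on to produce blobs for that frame, which is where the memory saving comes from.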

Here’s the approach in plain terms:

- Decouple space and time: They separate the scene’s structure (where things are) from motion (how things change over time). This lets the system reuse the same basic structure and only apply changes over time.

- Generate on-demand: For each frame and camera, the system only creates the blobs it needs for the visible parts of the scene. This saves a lot of memory.

- Deform smartly over time: A compact “deformation field” acts like a gentle wind map that tells each blob how to move, rotate, and scale as time passes. They use a technique called HexPlane, which breaks the 4D space-time into six simple 2D planes (XY, XZ, YZ, XT, YT, ZT) to keep it efficient.

- Selective deformation: They learned that changing everything about each blob (position, size, rotation, color, opacity) makes training unstable. So they only change geometry (position, scale, rotation) and keep appearance (color and opacity) fixed. This makes learning more reliable.

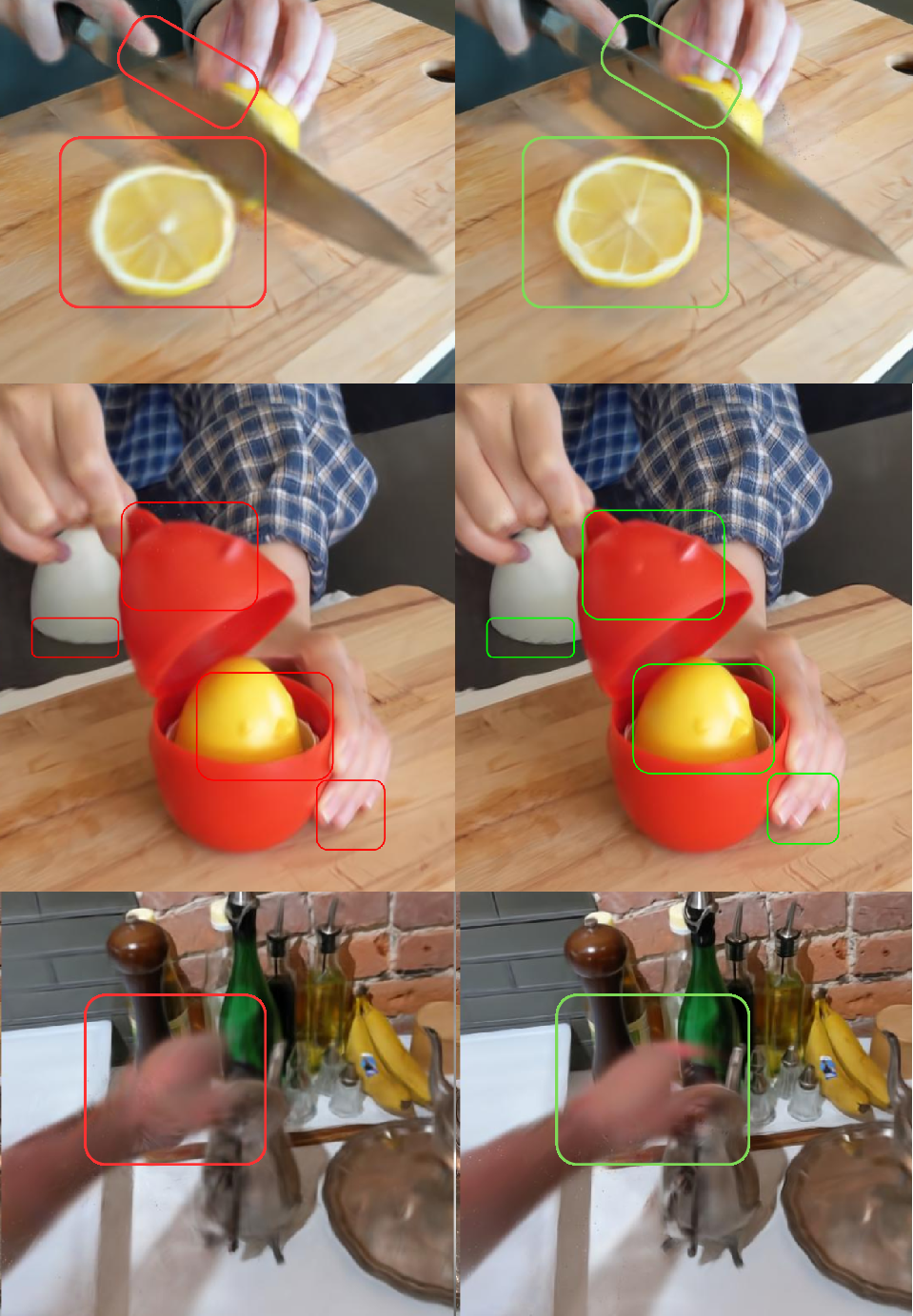

- View refinement: The system watches which camera angles look worse during training. It then focuses extra effort on those angles, adding more blobs and fine-tuning them. Think of it as giving extra attention to the tricky parts of the scene without wasting time on parts that already look good.
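The HexPlane lookup described above can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: coordinates are assumed to be normalized to [0, 1], and the six per-plane features are combined by element-wise multiplication, which is one common choice (the summary only says the features are aggregated). The names `bilinear`, `hexplane_feature`, and `PLANE_AXES` are hypothetical.

```python
import numpy as np

# Axis pairs for the six planes: XY, XZ, YZ, XT, YT, ZT (x=0, y=1, z=2, t=3).
PLANE_AXES = [(0, 1), (0, 2), (1, 2), (0, 3), (1, 3), (2, 3)]

def bilinear(plane, u, v):
    """Sample an (H, W, C) feature plane at continuous coordinates u, v in [0, 1]."""
    h, w, _ = plane.shape
    x, y = u * (w - 1), v * (h - 1)
    x0, y0 = int(np.floor(x)), int(np.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = (1 - fx) * plane[y0, x0] + fx * plane[y0, x1]
    bottom = (1 - fx) * plane[y1, x0] + fx * plane[y1, x1]
    return (1 - fy) * top + fy * bottom

def hexplane_feature(planes, xyzt):
    """Query all six planes at a space-time point and fuse the results.

    Fusion by element-wise product is an assumption made for this sketch.
    """
    feats = [bilinear(p, xyzt[a], xyzt[b]) for p, (a, b) in zip(planes, PLANE_AXES)]
    return np.prod(feats, axis=0)

rng = np.random.default_rng(0)
planes = [rng.normal(size=(8, 8, 4)) for _ in range(6)]
feature = hexplane_feature(planes, np.array([0.2, 0.5, 0.9, 0.0]))
```

In the method as summarized, a feature like this would feed small decoders that output position, rotation, and scale changes only, leaving color and opacity untouched.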

Training happens in three stages:

- Coarse start: Learn the basic shape of the scene using mixed time frames.

- Fine temporal training: Learn motion over time using the deformation field.

- View refinement: Fix the angles that still look rough with targeted improvements.
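The view refinement stage relies on spotting views whose quality lags behind the rest. A minimal sketch of that idea, comparing each view's PSNR against an exponentially weighted moving average, is shown below. The class name `ViewTracker` and the values of `momentum` and `margin_db` are hypothetical; the summary does not give the actual thresholds.

```python
class ViewTracker:
    """Flag views whose PSNR falls well below a running average (illustrative)."""

    def __init__(self, momentum=0.9, margin_db=1.0):
        self.momentum = momentum      # hypothetical EMA momentum
        self.margin_db = margin_db    # hypothetical gap that marks a view as hard
        self.ema = None
        self.hard_views = set()

    def update(self, view_id, psnr):
        if self.ema is None:
            self.ema = psnr
        # Compare against the average *before* folding in the new value.
        if psnr < self.ema - self.margin_db:
            self.hard_views.add(view_id)
        else:
            self.hard_views.discard(view_id)
        self.ema = self.momentum * self.ema + (1 - self.momentum) * psnr
        return view_id in self.hard_views

tracker = ViewTracker()
for view_id, psnr in [("cam0", 30.0), ("cam1", 30.5), ("cam2", 26.0), ("cam0", 30.2)]:
    tracker.update(view_id, psnr)
```

After this loop only `cam2` is flagged, so extra blobs and optimization steps would be spent on that view alone.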

What did they find, and why is it important?

The authors tested their method on well-known datasets and found:

- Much faster training: Minutes instead of hours (for example, around 13–25 minutes versus many hours).

- Real-time rendering: Smooth playback at tens of frames per second (like 43–45 FPS), which is great for VR/AR and interactive tools.

- Lower memory use: They use less GPU memory than other strong methods, making it possible to run on regular consumer hardware.

- High image quality: They reached better or competitive scores (like higher PSNR and SSIM), meaning images look sharp and consistent.
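For readers unfamiliar with PSNR, the following sketch shows the standard definition for images with values in [0, 1]. The helper name `psnr` and the toy images are illustrative only.

```python
import numpy as np

def psnr(rendered, reference, max_value=1.0):
    """Peak Signal-to-Noise Ratio in dB; higher means closer to the reference."""
    diff = rendered.astype(np.float64) - reference.astype(np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)

reference = np.zeros((4, 4, 3))
rendered = reference + 0.1          # every pixel is off by 0.1
value = psnr(rendered, reference)   # mean squared error is 0.01, so about 20 dB
```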

In short, it hits a sweet spot: fast, memory-friendly, and high-quality.

What’s the impact?

This work matters because it makes realistic 3D videos of moving scenes practical:

- VR/AR and games can render dynamic scenes smoothly without requiring expensive hardware.

- Robots and devices with limited memory (like small GPUs on drones or home assistants) can quickly learn and adapt to changing environments.

- Creators can interactively edit or explore scenes in real time.

- The idea of separating space from time and only deforming geometry could guide future designs that are both stable and efficient.

There are still challenges (like very large motions and occasional visual “popping”), but the approach opens the door to fast, high-quality 4D rendering for many real-world applications.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a consolidated list of what remains missing, uncertain, or unexplored in the paper that future researchers could address:

- Dynamic appearance modeling is not supported: freezing color and opacity prevents learning time-varying reflectance, lighting, shadows, specular highlights, and exposure/white balance changes; investigate adding an appearance-time branch (e.g., XT/YT/ZT planes or a lightweight temporal appearance MLP) with stability controls.

- Temporal coherence is not explicitly evaluated: results rely on per-frame PSNR/MS-SSIM; add temporal metrics (e.g., flicker index, temporal SSIM, optical-flow warping error) and user studies to quantify inter-frame stability and perceived consistency.

- Sensitivity and robustness of the view refinement heuristics are untested: conduct a thorough analysis of the EMA thresholds, momentum, severity weighting, and gradient triggers across datasets; assess stability, reproducibility, and overfitting risks when focusing optimization on selected views.

- Scalability to very long sequences and large scenes is unclear: quantify how V (voxels), k (Gaussians per voxel), HexPlane resolution, and deformation network size scale with scene size/duration; test streaming/incremental training and memory behavior over hundreds/thousands of frames.

- Edge-device viability is claimed but not demonstrated: measure end-to-end latency, throughput, and energy on target edge platforms (e.g., NVIDIA Jetson with 4–8 GB), including rasterization, on-demand Gaussian generation, and deformation inference; provide reduced configurations that fit strict memory/compute budgets.

- Robustness to camera pose errors and imperfect SfM initialization is underexplored: integrate and evaluate joint pose refinement, rolling-shutter handling, multi-camera synchronization issues, or pose/noise-aware regularization to mitigate failures under large motion/pose inaccuracies.

- Handling of topology changes and severe non-rigid motion is not characterized: benchmark on scenes with self-occlusion, tearing, topology changes, fast articulated motion, and large deformations; probe whether a single global HexPlane field is sufficient or if part-aware/local deformation fields are needed.

- Causes and mitigation of popping artifacts remain unspecified: analyze failure modes (e.g., per-view Gaussian spawning, sorting, densification thresholds) and evaluate continuity constraints (temporal occupancy smoothing, visibility hysteresis, consistency losses) or improved rasterization/ordering schemes.

- Deformation of rotations is underconstrained: clarify whether quaternion outputs are normalized and how gimbal lock or invalid rotations are avoided; consider geodesic losses on SO(3) and stability-focused parameterizations (e.g., exponential maps).

- Lack of detailed memory breakdown: report per-component memory (voxel features, HexPlane grids at each level, deformation MLPs, per-view caches, rasterization buffers) and how they vary with hyperparameters to guide practitioners.

- Fairness and consistency of comparisons require clarification: ensure matched resolutions, training budgets, and evaluation protocols across baselines; disclose all retraining details and discuss the effect of multi-camera counts and scene motion complexity on reported metrics.

- Resolution–FPS–quality trade-offs are not mapped: provide curves showing the impact of resolution, k, voxel size, HexPlane resolution, and MLP widths on FPS, memory, and quality to enable informed deployment choices.

- No evaluation under challenging photometric conditions: test robustness to motion blur, dynamic lighting, flickering, exposure changes, and sensor noise; consider augmentations or photometric calibration modules.

- Generalization beyond per-scene training is not discussed: explore whether voxel and HexPlane parameters can be pre-trained and adapted (few-shot or finetuning), and quantify cross-scene generalization or warm-start benefits.

- Interaction between selective geometric deformation and fixed appearance is not fully analyzed: study whether geometry-only deformation misaligns appearance (e.g., disocclusions, shadow movement) and whether limited appearance adaptation can be added without destabilizing training.

- On-demand Gaussian generation may induce per-view inconsistencies: investigate caching/reuse strategies across adjacent frames and views, or consistency regularizers that penalize large per-view discrepancies in generated primitives.

- Occupancy/anchor growth and pruning strategy is under-specified: detail the algorithm, thresholds, and schedules; assess its impact on stability, memory, and quality, and compare against alternative density control methods.

- Loss design and formulation need clarification: the volume regularization term’s exact definition and gradients are not clearly specified; provide the precise formula, rationale, and sensitivity to the weight λ_vol across datasets.

- HexPlane aggregation choices are not explored: evaluate alternative feature fusion (concatenation + learned mixing, attention/gating, per-plane weights) and multi-resolution strategies for better capacity–efficiency trade-offs.

- Multi-object and multi-part dynamics are not explicitly supported: examine whether separate deformation fields or instance-aware voxel partitions improve complex interactions, collisions, and occlusions.

- Streaming/online updates with dynamic scene changes are not addressed: design and evaluate mechanisms for adding/removing objects, adapting to newly observed regions, and preventing catastrophic forgetting during continuous operation.

- Practical deployment details are missing: specify I/O pipelines (frame buffering, camera synchronization), latency budgets, batching strategies, and how the refinement stage integrates into real-time loops without interrupting live rendering.

- Reproducibility resources are not indicated: provide code, configs, seeds, and dataset splits; include a comprehensive training script with default hyperparameters, logging of per-view stats, and guidelines for hyperparameter tuning.
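As an illustration of the rotation item in the list above, here is one way a raw quaternion output could be normalized before use, followed by the standard 3D-GS covariance construction Σ = R S Sᵀ Rᵀ. Whether 4D-NVS normalizes its quaternions this way is not stated in the summary; the identity fallback for near-zero quaternions is a hypothetical safeguard.

```python
import numpy as np

def quat_to_rotation(q, eps=1e-8):
    """Normalize a (w, x, y, z) quaternion and convert it to a 3x3 rotation matrix."""
    q = np.asarray(q, dtype=np.float64)
    norm = np.linalg.norm(q)
    if norm < eps:
        return np.eye(3)  # hypothetical fallback for degenerate network outputs
    w, x, y, z = q / norm
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ])

def covariance(q, scale):
    """Build a Gaussian covariance from a rotation quaternion and per-axis scales."""
    R = quat_to_rotation(q)
    S = np.diag(scale)
    return R @ S @ S.T @ R.T

# An unnormalized quaternion encoding a 90 degree turn about z swaps the x and y extents.
sigma = covariance([2.0, 0.0, 0.0, 2.0], [1.0, 2.0, 3.0])
```

Normalizing inside the conversion guarantees a valid rotation, so the resulting covariance stays symmetric and positive semi-definite regardless of the raw output's length.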

Practical Applications

Immediate Applications

The paper’s 4D Neural Voxel Splatting (4D-NVS) delivers real-time dynamic scene rendering with low memory use and fast training, enabling the following deployable use cases on consumer and mid-range GPUs.

- Media/Entertainment (VR/AR 6-DoF video production and playback)

- Use: Capture dynamic scenes (people, props, motion) and render photorealistic 6-DoF experiences for VR/AR apps and immersive films.

- Tools/Products/Workflows: Unity/Unreal plugin for 4D-NVS; studio capture pipeline with multi-view rigs; automated “view-adaptive refinement” pass to fix problematic shots; asset export for game engines.

- Assumptions/Dependencies: Multi-view capture (e.g., 15–20 cameras for complex motion); accurate COLMAP calibration; moderate motion without severe occlusions; GPU with ~3–8GB VRAM; per-scene training time (~13–17 minutes) acceptable for production.

- Sports and Live Event Replays (broadcast and fan engagement)

- Use: Generate interactive volumetric replays and highlights with multiple viewpoints, enabling viewers to “move the camera” in post.

- Tools/Products/Workflows: Broadcast-side 4D-NVS render farm; a replay pipeline that ingests multiview footage and selectively refines hard angles using PSNR/gradient-based detection; web player for 6-DoF highlights.

- Assumptions/Dependencies: Synchronized camera arrays; legal permissions; sufficient compute for near-real-time turnaround; manageable motion complexity to avoid popping artifacts.

- Robotics (edge deployment for dynamic scene understanding)

- Use: Rapid reconstruction of dynamic environments for navigation/manipulation on resource-constrained platforms (e.g., NVIDIA Jetson with 4–8GB).

- Tools/Products/Workflows: ROS node integrating 4D-NVS; on-demand Gaussian generation to keep memory low; “refinement stack” to prioritize views where the robot struggles (occlusions, fast motion).

- Assumptions/Dependencies: Performance on Jetson will be lower than on an RTX 4090; reliance on accurate camera poses; limited tolerance to very large motions; scene-specific training; integration with SLAM/SfM.

- Digital Twins for Factories/Warehouses (operations monitoring)

- Use: Efficient updates to dynamic site models (conveyors, human workers) with high visual fidelity and fast iteration for incident review and training.

- Tools/Products/Workflows: 4D-NVS “twin update” service that retrains on new camera batches; viewpoint-aware refinement of high-traffic camera feeds; dashboard embedding of real-time renderings.

- Assumptions/Dependencies: Stable camera infrastructure; periodic batch training acceptable; IT integration and data governance.

- Telepresence and Remote Collaboration (enterprise/education)

- Use: 6-DoF volumetric telepresence sessions with low memory footprint on office desktops; improved visual quality for difficult angles via targeted refinement.

- Tools/Products/Workflows: Volumetric meeting app; multi-camera capture in meeting rooms; GPU rendering node; selective densification for participants’ viewpoints.

- Assumptions/Dependencies: Network bandwidth for multiview ingest; room setup with multiple cameras; privacy/security policies.

- E-commerce and Marketing (retail)

- Use: Interactive product demos (e.g., clothing drape, gadgets in motion) for 6-DoF web/AR experiences that reduce returns and increase engagement.

- Tools/Products/Workflows: Merchant capture kits; cloud service to train 4D-NVS models quickly; web viewer integration; “challenging view” refinement for key product angles.

- Assumptions/Dependencies: Multiview capture availability; content rights; compute budget for batch training.

- Education (teaching and labs in graphics/vision/robotics)

- Use: Rapid scene capture and dynamic rendering labs; curriculum modules demonstrating voxel-based and Gaussian splatting techniques.

- Tools/Products/Workflows: Course kits with small multiview rigs; open-source 4D-NVS demos; assignments on HexPlane and selective deformation; ablation experiments.

- Assumptions/Dependencies: Access to commodity GPUs; instructor familiarity; safe capture environments.

- Public Safety Training (policy/municipal)

- Use: Fast volumetric reconstructions of training scenarios (crowd movement, fire drills) with realistic dynamics for responder training.

- Tools/Products/Workflows: Command-center GPU nodes; scenario library captured with multiview cameras; refinement to prioritize views relevant to procedures.

- Assumptions/Dependencies: Procurement, privacy constraints, data retention policies; training time fits exercise schedules.

- Social/Creator Tools (daily life)

- Use: Hobbyist 6-DoF captures of performances or sports using multiple smartphones and a gaming PC for post-processing; interactive sharing.

- Tools/Products/Workflows: Mobile capture app (time-synced, COLMAP calibration), desktop 4D-NVS trainer, AR viewer; community presets for refinement.

- Assumptions/Dependencies: Multi-device synchronization and calibration; desktop GPU; tolerance for short training delays.

- Research Pipelines (academia)

- Use: Benchmarking dynamic scene methods and dataset curation with reproducible, memory-efficient models; exploring deformation strategies and view refinement.

- Tools/Products/Workflows: 4D-NVS baseline for new datasets; tooling for PSNR/gradient-based view selection; ablations on HexPlane, TV/volume losses, and deformation.

- Assumptions/Dependencies: Availability of datasets; reproducibility and licensing; standard evaluation metrics.

Long-Term Applications

These applications build on 4D-NVS’s methods but require further research, scaling, hardware acceleration, or ecosystem development.

- On-device Mobile AR Capture and Playback (software/consumer)

- Use: Real-time training/inference on smartphones/tablets for 6-DoF dynamic scenes captured by a few cameras or even a single moving device.

- Tools/Products/Workflows: Mobile-optimized 4D-NVS with quantization/pruning; incremental/online training; codec-friendly representations.

- Assumptions/Dependencies: Mobile GPU acceleration, efficient compression, robust single-view initialization; power/battery constraints.

- Real-time Broadcast-Level Volumetric Streaming (media)

- Use: End-to-end pipeline for live events with sub-second latency, including dynamic motion, view selection, and adaptive refinement on the fly.

- Tools/Products/Workflows: Hardware-accelerated splatting; streaming protocols and volumetric codecs; distributed render clusters with view-priority scheduling.

- Assumptions/Dependencies: Standards for volumetric video; SLAs for latency; high-bandwidth infrastructure.

- Lifelong Online Reconstruction for Robots (robotics)

- Use: Continuous updating of dynamic scene models during operation; selective refinement tied to active task viewpoints (grasping, navigation).

- Tools/Products/Workflows: Online training loop; uncertainty-driven view selection; scheduling between perception and policy; ROS2 integration.

- Assumptions/Dependencies: Efficient online optimization; robust to large/fast motions; safety guarantees; compute on edge platforms.

- City-Scale Dynamic Digital Twins (urban planning/policy)

- Use: Temporal-aware twins for districts (pedestrian flow, traffic, public events), enabling planning, simulation, and incident analysis.

- Tools/Products/Workflows: Federated 4D-NVS across camera networks; privacy-preserving aggregation; policy dashboards with viewpoint-aware refinement.

- Assumptions/Dependencies: Governance frameworks, anonymization; standardized camera calibration across agencies; scalable storage/compute.

- Healthcare Simulation and Training (healthcare)

- Use: High-fidelity visualization of dynamic anatomy (organ motion, instrument-tissue interactions) for surgical training and rehearsal.

- Tools/Products/Workflows: Operating-room capture rigs; medically compliant data pipelines; integration with surgical simulators; selective deformation tuned for soft-tissue dynamics.

- Assumptions/Dependencies: Regulatory compliance (HIPAA/GDPR), sterile and safe capture; domain-specific validation; specialized hardware.

- Volumetric Video Standards and Codecs (policy/standards)

- Use: Formalization of representation, streaming, and storage (e.g., MPEG-like standards) incorporating voxelized Gaussian splatting and deformation fields.

- Tools/Products/Workflows: Reference encoder/decoder; test corpora; interoperability profiles; evaluation suites for visual quality and latency.

- Assumptions/Dependencies: Multi-stakeholder alignment; IPR/licensing; long-term maintenance.

- Simulation for Autonomous Systems (transportation/robotics)

- Use: Memory-efficient rendering of dynamic actors (pedestrians, cyclists) in training simulators; improved diversity via selective deformation models.

- Tools/Products/Workflows: Scenario generator leveraging 4D-NVS; plug-in for autonomy simulators; view-priority refinement for sensor placements (cameras/LiDAR).

- Assumptions/Dependencies: Realism and domain transfer validation; sensor model integration; performance on large-scale datasets.

- Consumer 6-DoF Social Platforms (daily life/media)

- Use: Platforms where users capture and share volumetric “moments” with interactive viewpoints; creators monetize dynamic 3D content.

- Tools/Products/Workflows: Capture kits, cloud training, CDN for volumetric assets; client viewers across mobile/VR; creator analytics.

- Assumptions/Dependencies: UX for multiview capture; content moderation and privacy; compression standards; device compatibility.

- Industrial Inspection and Maintenance (energy/manufacturing)

- Use: Dynamic digital twins for machinery under operation, with targeted refinement on abnormal motion or vibration hotspots detected by sensors.

- Tools/Products/Workflows: Sensor-visual fusion; anomaly-triggered refinement; maintenance dashboards; archival of time-varying 3D states.

- Assumptions/Dependencies: Safe sensor placement; synchronization; domain-specific thresholds; integration with CMMS systems.

- Education at Scale (education)

- Use: Affordable classroom kits and cloud resources to teach dynamic 3D methods; standardized curricula and interactive labs across institutions.

- Tools/Products/Workflows: Managed educational cloud with GPU quotas; courseware integrating 4D-NVS; auto-graded labs and ablations.

- Assumptions/Dependencies: Funding and access to hardware; educator training; ongoing support.

Cross-cutting assumptions and feasibility notes

- Capture requirements: Most applications assume multi-view camera setups and reliable calibration/poses (COLMAP or equivalent). Single-view or monocular capture would require future research on robust initialization and motion estimation.

- Motion complexity: The method currently struggles with very large/rapid motions and exhibits occasional popping artifacts; pipeline adaptations (better motion priors, rasterization improvements) are needed for high-stress scenarios.

- Hardware and performance: Reported real-time performance (e.g., ~43 FPS) is on high-end GPUs (RTX 4090). Edge/mobile deployment will require optimization, quantization, and possible hardware acceleration.

- Training paradigm: 4D-NVS is per-scene trained (3-stage pipeline). For truly live/continuous scenarios, online/incremental learning and streaming codecs must be developed.

- Data governance: Volumetric captures raise privacy, safety, and IP concerns. Policy-aligned workflows (consent, anonymization, retention) are essential for public-facing deployments.

Glossary

- 3D Gaussian Splatting (3D-GS): An explicit point-based rendering technique that represents scenes with 3D Gaussian primitives and splats them onto the image plane for real-time rendering. "3D Gaussian Splatting (3D-GS) \cite{3dgs} emerged as an efficient alternative, representing scenes with colored 3D Gaussian primitives rendered via projective splatting rather than volumetric rendering."

- 4D Gaussian Splatting (4D-GS): An extension of 3D-GS for dynamic scenes that deforms canonical Gaussians over time using learned fields to avoid per-frame memory growth. "For dynamic scenes, 4D Gaussian Splatting (4D-GS) \cite{4dgs} extends 3D-GS with learned deformation fields to transform canonical Gaussians over time, avoiding the memory overhead of per-frame Gaussian sets."

- 4D Neural Voxel Splatting (4D-NVS): The proposed method combining voxel anchors with neural Gaussian generation and temporal deformation for efficient dynamic scene rendering. "we propose 4D Neural Voxel Splatting (4D-NVS), which combines voxel-based representations with neural Gaussian splatting for efficient dynamic scene modeling."

- alpha-blending: A compositing technique that accumulates alpha values to blend translucent contributions along the viewing direction. "applies α-blending:"

- anchor points: Fixed positions (often voxel centers) used as structured references to generate and decode nearby Gaussian attributes. "Scaffold-GS \cite{scaffoldgs} generates Gaussians from structured anchor points rather than optimizing individual primitives."

- anisotropic 3D Gaussians: Gaussian primitives with direction-dependent covariance, allowing non-uniform spread across axes. "3D Gaussian Splatting (3D-GS) \cite{3dgs} represents scenes using anisotropic 3D Gaussians, each defined by a mean position μ and covariance matrix Σ:"

- bilinear interpolation: A grid-based interpolation method that blends values linearly in two dimensions. "we extract features via bilinear interpolation from each plane and aggregate them:"

- camera frustum: The truncated pyramidal volume defining the region of space visible to the camera. "identify voxels within the camera frustum, significantly reducing computational overhead"

- canonical space: A reference, time-independent configuration to which deformations are applied for dynamic modeling. "Canonical-space methods like 4D-GS \cite{4dgs} reduce this but rely on heavy deformation networks and costly backward mapping."

- covariance matrix: A matrix encoding the shape and orientation of a Gaussian’s spread. "where the covariance matrix is constructed as Σ = R S Sᵀ Rᵀ using scaling matrix S and rotation matrix R."

- deformation fields: Learned mappings that displace and transform geometric properties over time. "employs a compact set of neural voxels with learned deformation fields to model temporal dynamics."

- densification: The process of adding more primitives (e.g., splitting Gaussians) in challenging regions to improve quality. "View refinement stage for underperforming viewpoints through adaptive densification."

- differentiable optimization: Gradient-based training of rendering models enabled by differentiable operations in the pipeline. "This tile-based rasterization enables real-time rendering with differentiable optimization."

- EMA (exponentially weighted moving average): A running average that weights recent observations more to track trends robustly. "compare it against an exponentially weighted moving average (EMA) to identify statistical outliers:"

- HexPlane: A factorized 4D representation that encodes space-time using six 2D planes for efficiency. "HexPlane factorizes the 4D space-time volume into six 2D planes: three spatial planes (XY, XZ, YZ) and three space-time planes (XT, YT, ZT)."

- MLP (Multi-Layer Perceptron): A feedforward neural network used to map features to attributes like color and opacity. "Neural Radiance Fields (NeRF) \cite{nerf} revolutionized novel view synthesis by representing scenes with MLPs that output view-dependent color and density."

- multi-resolution hashed grids: Compact grid encodings indexed by hash functions across multiple scales to speed training and inference. "Instant-NGP \cite{instant-ngp}, which employed multi-resolution hashed grids for faster training and inference."

- Neural Radiance Fields (NeRF): An implicit scene representation that predicts color and density with MLPs for view synthesis. "Neural Radiance Fields (NeRF) \cite{nerf} revolutionized novel view synthesis by representing scenes with MLPs that output view-dependent color and density."

- neural voxels: Voxel cells with learned feature vectors that condition the generation of neural Gaussians. "employs a compact set of neural voxels with learned deformation fields to model temporal dynamics."

- popping artifacts: Sudden visual changes (appearance/disappearance or ordering) causing flicker in dynamic rendering. "Although introducing view-dependent sorting solves the 'static' part of the popping artifact, it is not entirely eliminated."

- projective splatting: Rendering technique that projects 3D primitives to 2D Gaussians on the image plane and rasterizes them. "rendered via projective splatting rather than volumetric rendering."

- PSNR (Peak Signal-to-Noise Ratio): A quantitative metric (in dB) for image reconstruction fidelity. "We track the PSNR of each rendered view and compare it against an exponentially weighted moving average (EMA) to identify statistical outliers:"

- quaternion: A four-parameter rotation representation used for stable orientation updates. "output 3D position offsets, quaternion rotations, and 3D scale factors, respectively."

- rasterization (tile-based): Screen-space rendering that processes pixels in tiles for efficiency and parallelism. "This tile-based rasterization enables real-time rendering with differentiable optimization."

- spherical harmonics: Orthogonal basis functions used to model view-dependent color/opacity efficiently. "Each Gaussian has associated color and opacity (modeled with spherical harmonics)."

- Structure-from-Motion (SfM): A method to recover camera poses and sparse 3D points from images. "We initialize neural voxels using the sparse point cloud from Structure-from-Motion (SfM) \cite{colmap}"

- total variation loss: A regularizer that encourages spatial smoothness by penalizing local gradients. "Optimize with color loss, total variation loss, and scaling regularization"

- visibility culling: Eliminating non-visible elements before rendering to save computation. "we perform visibility culling to identify voxels within the camera frustum"

- volumetric ray marching: Sampling along rays through a volume to integrate color and density for rendering. "early NeRF models suffered from slow rendering due to costly volumetric ray marching."

- volumetric rendering: Rendering by integrating volumetric contributions along rays in a 3D field. "rather than volumetric rendering."

- voxel grids: 3D grids of cells storing features or primitives for spatial representation. "We extend 3D voxel grids to 4D by treating time as an additional dimension in the voxel feature space"

- view-dependent sorting: Reordering primitives per viewpoint to reduce artifacts and ensure correct compositing. "Although introducing view-dependent sorting solves the 'static' part of the popping artifact"

- viewing frustum: The camera’s field-of-view volume within which objects are considered for rendering. "Neural Gaussians are generated on-demand within the viewing frustum."
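To tie the alpha-blending and view-dependent sorting entries together, the sketch below composites depth-sorted splat contributions front to back for a single pixel. It shows the standard compositing rule rather than the paper's rasterizer; the function name `alpha_blend` and the sample values are illustrative.

```python
import numpy as np

def alpha_blend(colors, alphas):
    """Front-to-back compositing of contributions already sorted near to far.

    Returns the blended RGB value and the transmittance left for the background.
    """
    pixel = np.zeros(3)
    transmittance = 1.0
    for color, alpha in zip(colors, alphas):
        pixel += transmittance * alpha * np.asarray(color, dtype=np.float64)
        transmittance *= 1.0 - alpha
    return pixel, transmittance

# A half-transparent red splat in front of a half-transparent blue one.
pixel, remaining = alpha_blend([[1, 0, 0], [0, 0, 1]], [0.5, 0.5])
```

Because each contribution is weighted by the transmittance accumulated so far, swapping the order of the two splats changes the result, which is why sorting errors show up as popping.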
