- The paper introduces a novel framework integrating deep learning-based video analytics with sensor data for real-time failure prediction.

- The deployed system achieved a YOLOv11 detection mAP@0.5 of 94.2% and, with process-signal fusion, a false alarm rate of 2.8%, reducing downtime and saving an estimated Rs. 1.15 Cr per month.

- The modular system architecture enables scalability and quick integration with process control, supporting proactive maintenance planning.

Process-Integrated Computer Vision for Real-Time Failure Prediction in Steel Rolling Mills

Introduction

This paper presents a comprehensive framework for integrating computer vision with process control in steel rolling mills to enable real-time failure prediction and anomaly detection. The approach combines industrial-grade cameras, deep learning-based video analytics, and sensor data fusion to address the limitations of traditional sensor-based monitoring. The system runs on an independent video server, which keeps computational load off the existing PLCs and allows it to scale across production lines. A six-month deployment in an operating mill demonstrates improved detection accuracy, reduced downtime, and actionable insights for proactive maintenance.

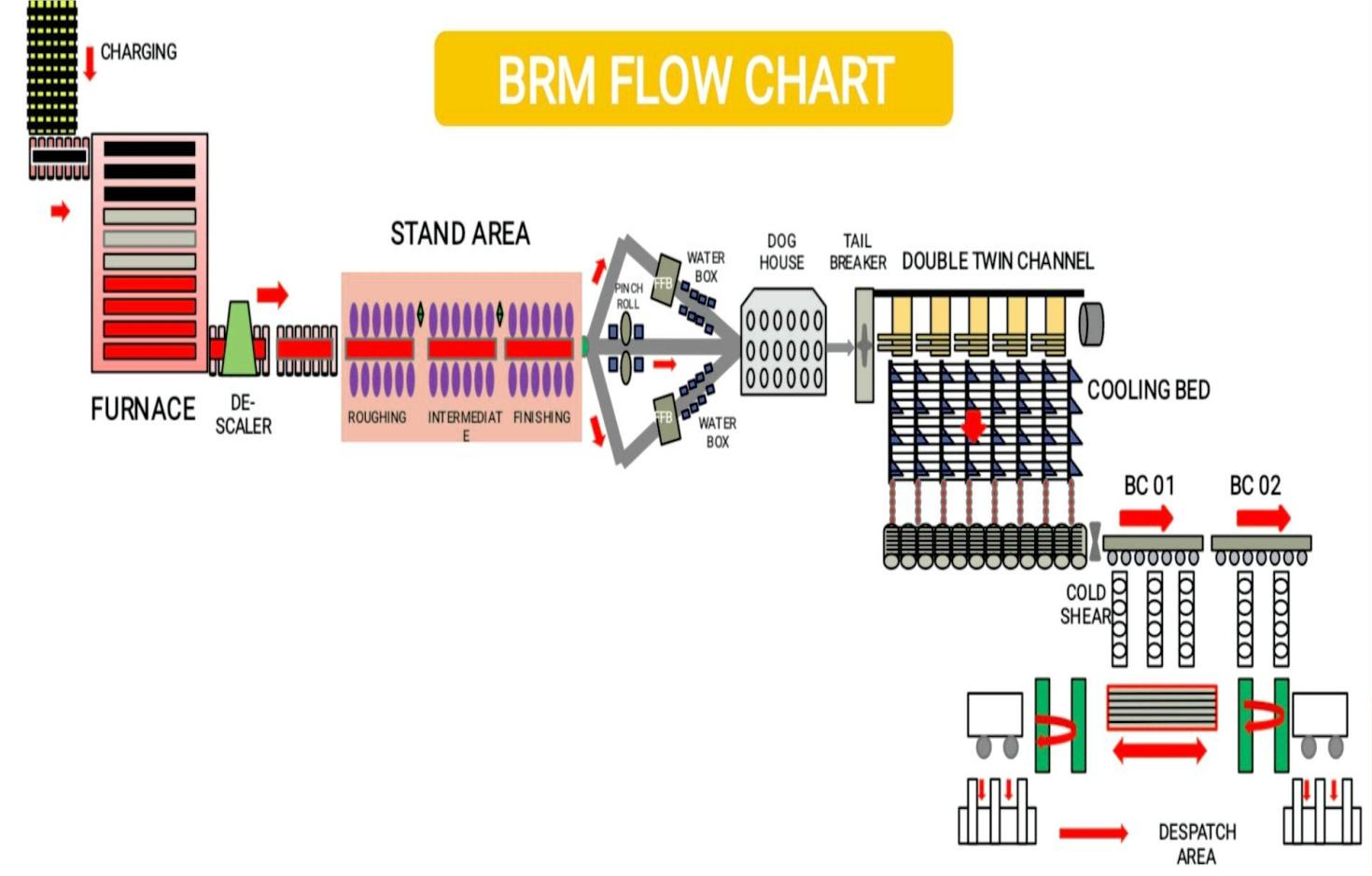

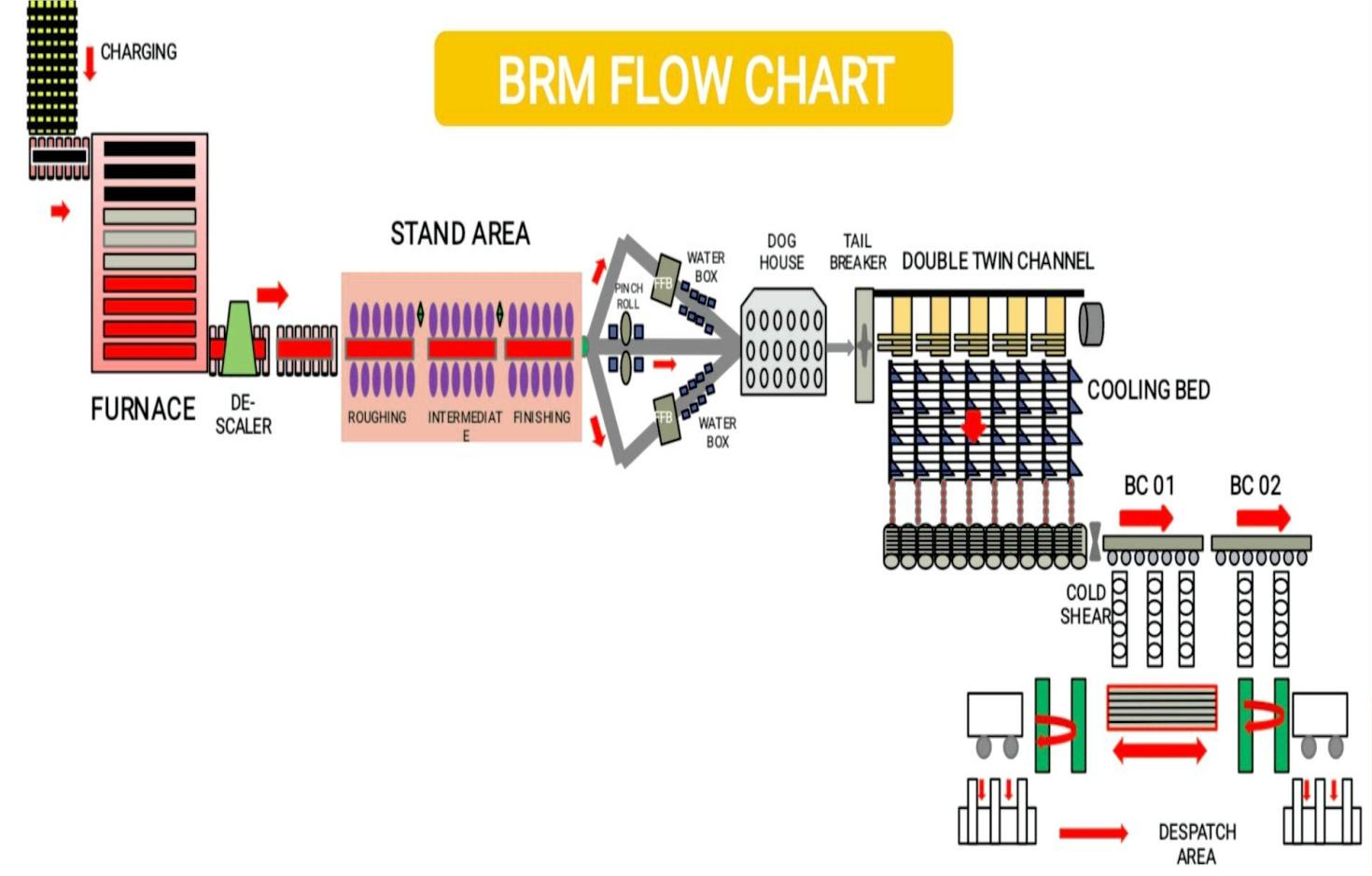

Figure 1: Steel Bar Rolling Mill Layout.

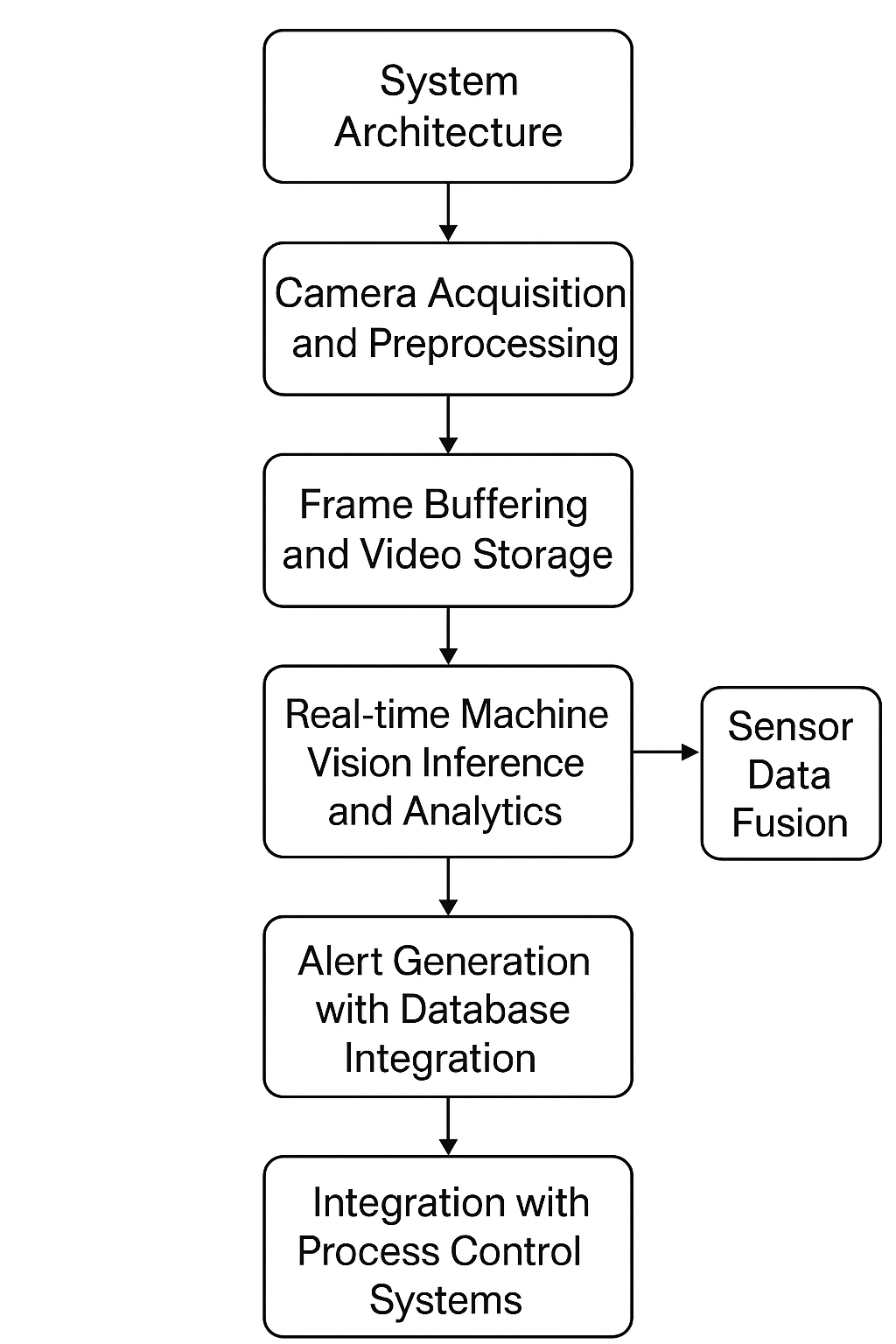

System Architecture and Methodology

The proposed architecture consists of several modular components: camera acquisition and preprocessing, frame buffering and storage, real-time machine vision inference, sensor data fusion, alerting, and web-based visualization. High-speed Baumer cameras are installed at critical locations to capture visual cues such as hot bar motion, roller alignment, and auxiliary equipment operation. The video streams are transmitted to a centralized server equipped with GPU/CPU resources for deep learning inference.

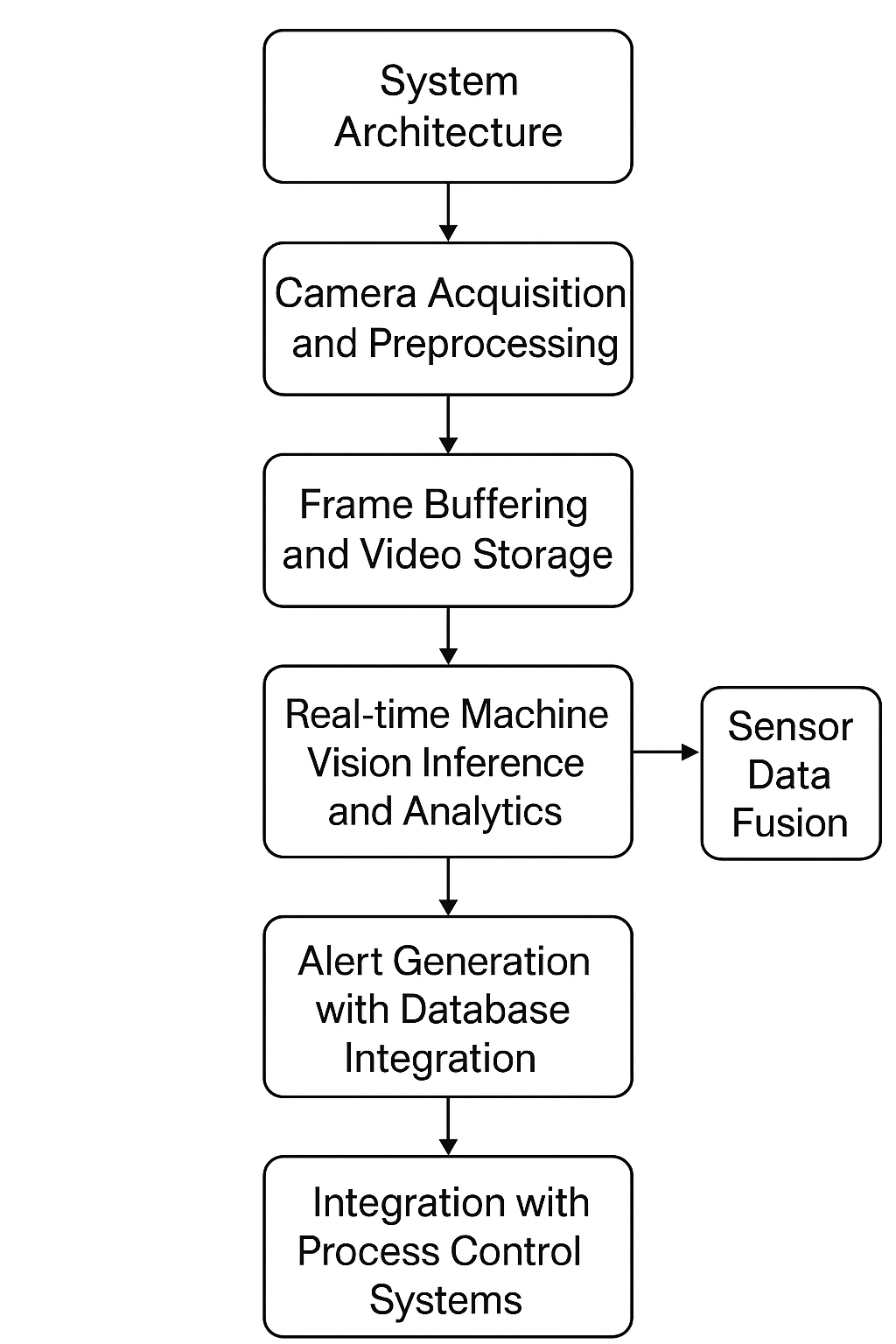

Figure 2: System architecture and workflow of the proposed process-integrated computer vision framework in steel rolling mills.
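To make the modular decomposition concrete, the per-deployment settings might be gathered into a small configuration object. This is a minimal sketch under stated assumptions: the class names, camera location strings, and weights paths are hypothetical; only the ~45 FPS acquisition target, the two-minute clip length, and the use of profile-specific model weights come from the text.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class CameraConfig:
    """One acquisition point in the mill (identifiers are hypothetical)."""
    camera_id: str
    location: str              # e.g. "stand_14_exit" (illustrative)
    target_fps: float = 45.0   # acquisition rate reported in the text


@dataclass
class PipelineConfig:
    cameras: list[CameraConfig]
    # Maps a rod profile (e.g. "12mm") to a weights file; paths are placeholders.
    profile_weights: dict[str, str] = field(default_factory=dict)
    clip_seconds: int = 120    # two-minute archive clips

    def weights_for(self, profile: str) -> str:
        """Return weights for the active rod profile, failing loudly if none exist."""
        try:
            return self.profile_weights[profile]
        except KeyError:
            raise ValueError(f"no model weights registered for profile {profile!r}") from None
```

Failing loudly on an unknown profile is a deliberate choice in this sketch: silently reusing another profile's weights would likely degrade detections without any visible error.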

Camera Acquisition and Preprocessing

Cameras are configured via the NeoAPI SDK with exposure control to handle challenging illumination. Frames are validated for pixel format and converted to RGB, with acquisition loops maintaining ~45 FPS. If a camera disconnects, the acquisition loop reconnects to it automatically.
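The acquisition step can be sketched as a loop that validates the pixel format, converts to RGB, paces itself to the target frame rate, and reconnects on failure. This is an illustrative sketch only: the `Camera` protocol, its `grab()` method, the `"RGB8"`/`"BGR8"` format strings, and the use of `ConnectionError` to signal a dropped link are assumptions and do not reflect the actual NeoAPI SDK interface.

```python
import time
from typing import Callable, Iterator, Optional, Protocol


class Camera(Protocol):
    """Hypothetical camera handle; NOT the NeoAPI API."""
    def grab(self) -> tuple[str, bytes]: ...  # (pixel_format, packed pixel bytes)


def to_rgb(pixel_format: str, raw: bytes) -> bytes:
    """Validate the pixel format and return packed RGB8 bytes."""
    if len(raw) % 3:
        raise ValueError("buffer length is not a multiple of 3 channels")
    if pixel_format == "RGB8":
        return raw
    if pixel_format == "BGR8":
        out = bytearray(raw)
        out[0::3], out[2::3] = raw[2::3], raw[0::3]  # swap B and R channels
        return bytes(out)
    raise ValueError(f"unsupported pixel format: {pixel_format}")


def acquire(connect: Callable[[], Camera], max_frames: int,
            target_fps: float = 45.0, retry_delay_s: float = 0.5) -> Iterator[bytes]:
    """Yield RGB frames, reconnecting whenever the camera raises ConnectionError."""
    period = 1.0 / target_fps
    camera: Optional[Camera] = None
    produced = 0
    while produced < max_frames:
        if camera is None:
            try:
                camera = connect()
            except ConnectionError:
                time.sleep(retry_delay_s)  # back off before the next attempt
                continue
        start = time.monotonic()
        try:
            fmt, raw = camera.grab()
        except ConnectionError:
            camera = None  # drop the stale handle; next iteration reconnects
            continue
        yield to_rgb(fmt, raw)
        produced += 1
        # Sleep off whatever remains of this frame's time slot to hold ~target_fps.
        remaining = period - (time.monotonic() - start)
        if remaining > 0:
            time.sleep(remaining)
```

Passing a `connect` factory rather than a camera object keeps the reconnection logic independent of how the real SDK opens a device.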

Frame Buffering and Storage

A thread-safe buffer queue synchronizes data transfer between acquisition and processing. Video streams are archived in two-minute clips, organized by date, time, and rod profile, supporting both real-time and offline analysis.
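A minimal sketch of the buffer and the clip layout follows, built on Python's thread-safe `queue.Queue`. The drop-oldest policy, the queue size, the `.mp4` extension, and the `root/date/profile/HHMMSS` directory scheme are assumptions for illustration; the text states only that the buffer is thread-safe and that two-minute clips are organized by date, time, and rod profile.

```python
import queue
from datetime import datetime
from pathlib import PurePosixPath

CLIP_MINUTES = 2  # archive clip length from the text


class FrameBuffer:
    """Bounded, thread-safe queue between acquisition and inference.

    When full, the oldest frame is discarded so inference keeps up with
    live data (an assumed policy, not stated in the source).
    """

    def __init__(self, maxsize: int = 90):
        self._q: "queue.Queue[bytes]" = queue.Queue(maxsize=maxsize)

    def put(self, frame: bytes) -> None:
        while True:
            try:
                self._q.put_nowait(frame)
                return
            except queue.Full:
                try:
                    self._q.get_nowait()  # evict the oldest frame
                except queue.Empty:
                    pass  # a consumer drained it meanwhile; retry the put

    def get(self, timeout: float = 1.0) -> bytes:
        return self._q.get(timeout=timeout)


def clip_path(root: str, profile: str, t: datetime) -> PurePosixPath:
    """Archive path of the clip containing time t, grouped by date and rod profile."""
    # Floor t to the start of its two-minute window.
    start = t.replace(minute=t.minute - t.minute % CLIP_MINUTES, second=0, microsecond=0)
    return PurePosixPath(root, start.strftime("%Y-%m-%d"), profile,
                         start.strftime("%H%M%S") + ".mp4")
```

Dropping the oldest frame rather than blocking the producer trades completeness for bounded latency, which suits live alerting; the archive writer could use its own unbounded path if every frame must be kept.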

Real-Time Machine Vision Inference

YOLO-based models, with profile-specific weights, are used for object detection and tracking. Key features extracted include:

- Rod detection and vibration analysis via center coordinate tracking and vertical displacement monitoring.

- Flapper and diverter displacement measurement, with pixel-level shifts calibrated to millimeter displacements.

- Rod presence and billet duration estimation for throughput and short metal event detection.
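The feature extraction above can be illustrated with a few small functions operating on detector output. This is a hedged sketch, not the authors' code: it assumes bounding boxes in `(x1, y1, x2, y2)` pixel format, a single linear mm-per-pixel scale obtained from a reference feature of known size (ignoring lens distortion and perspective), and one contiguous run of rod presence per billet.

```python
from statistics import pstdev
from typing import Sequence

Box = tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def calibrate_mm_per_px(known_mm: float, measured_px: float) -> float:
    """Linear scale from a reference feature of known size (assumes no distortion)."""
    return known_mm / measured_px


def vertical_vibration_mm(boxes: Sequence[Box], mm_per_px: float) -> tuple[float, float]:
    """Peak-to-peak and RMS vertical displacement of the rod center over a window."""
    ys = [(y1 + y2) / 2.0 for (_, y1, _, y2) in boxes]  # vertical center per frame
    peak_to_peak = (max(ys) - min(ys)) * mm_per_px
    rms = pstdev(ys) * mm_per_px  # RMS deviation about the mean center line
    return peak_to_peak, rms


def shift_mm(ref_px: float, current_px: float, mm_per_px: float) -> float:
    """Flapper/diverter displacement relative to its calibrated home position."""
    return (current_px - ref_px) * mm_per_px


def presence_runs_s(present: Sequence[bool], fps: float) -> list[float]:
    """Durations (s) of contiguous runs with the rod detected, one per billet."""
    runs, count = [], 0
    for flag in present:
        if flag:
            count += 1
        elif count:
            runs.append(count / fps)
            count = 0
    if count:
        runs.append(count / fps)
    return runs


def short_metal_events(runs_s: Sequence[float], min_expected_s: float) -> list[float]:
    """Billet durations shorter than expected (threshold is a placeholder)."""
    return [d for d in runs_s if d < min_expected_s]
```

For example, a 50 mm reference spanning 100 px gives 0.5 mm/px, so a rod center oscillating over 6 px corresponds to 3 mm peak-to-peak.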

Sensor Data Fusion

Auxiliary process signals from PLCs (e.g., operational status, dividing cut data, material presence) are integrated via a Redis-based communication layer. This enables conditional activation of the vision pipeline and dynamic suppression of false alerts, so that a larger share of the alerts raised correspond to genuine events.

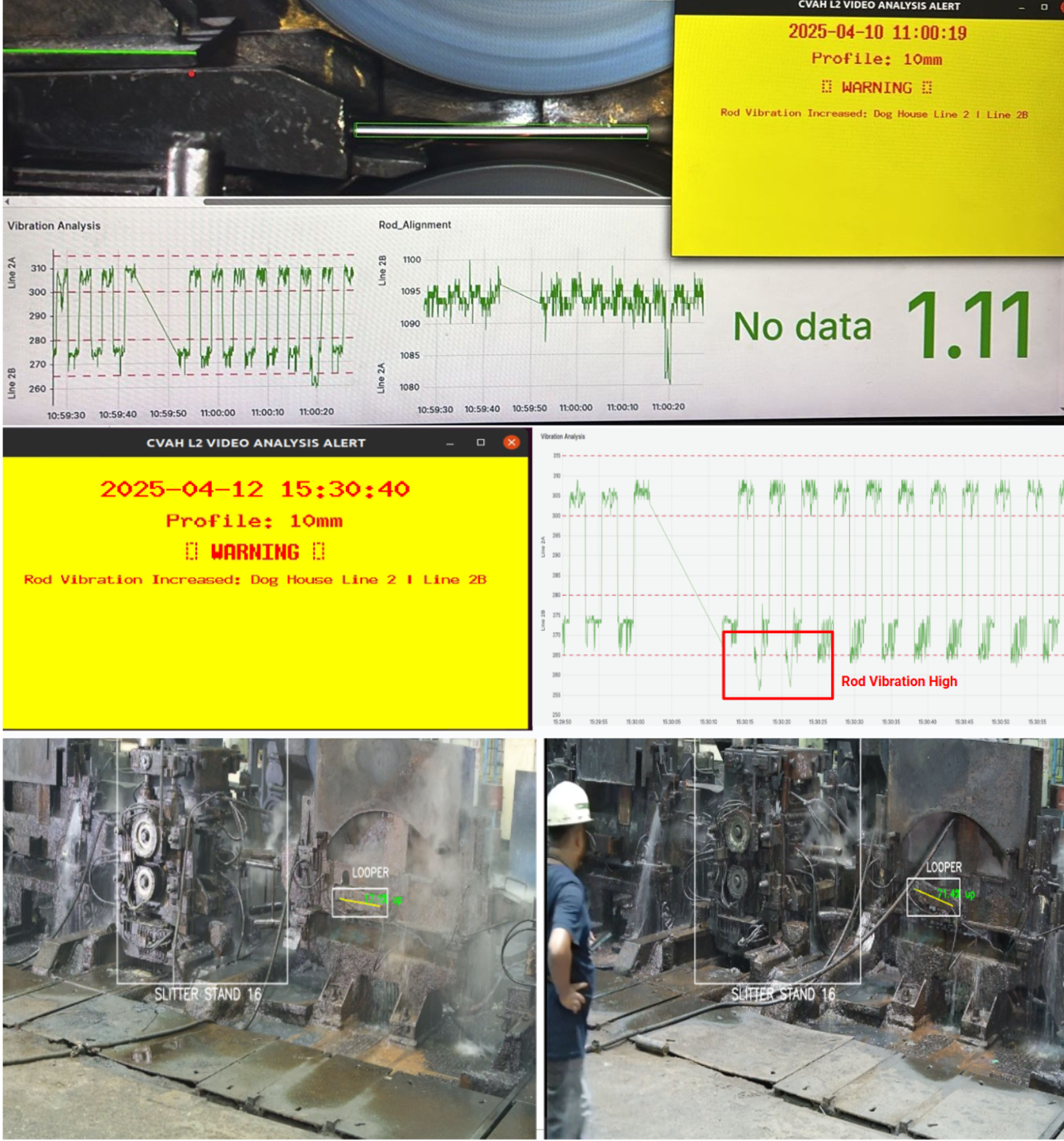
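The gating idea can be sketched as two predicates over the latest PLC snapshot. The field names and the specific suppression rule below (ignoring vibration alerts while a dividing cut is active) are hypothetical examples, since the text does not list the actual rules; in a deployment the snapshot would be populated from the Redis layer rather than constructed by hand.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlcSnapshot:
    """Latest PLC signals as read from the communication layer (names hypothetical)."""
    mill_running: bool
    material_present: bool
    dividing_cut_active: bool


def vision_enabled(plc: PlcSnapshot) -> bool:
    """Run inference only while the mill is running with material in the line."""
    return plc.mill_running and plc.material_present


def gate_alert(candidate: str, plc: PlcSnapshot) -> bool:
    """Return True if a vision alert should be raised given the process state."""
    if not vision_enabled(plc):
        return False
    # Assumed example rule: transient motion during a dividing cut is expected,
    # so vibration alerts raised during the cut are suppressed.
    if candidate == "vibration" and plc.dividing_cut_active:
        return False
    return True
```

Keeping the rules as plain functions of a snapshot makes them easy to unit-test against recorded PLC states before enabling them on the line.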

Alerting and Database Integration

All features and statistics are logged in an InfluxDB time-series database. An alerting module triggers notifications for excessive vibration, misalignment, or abnormal billet lengths, providing operators with actionable insights.

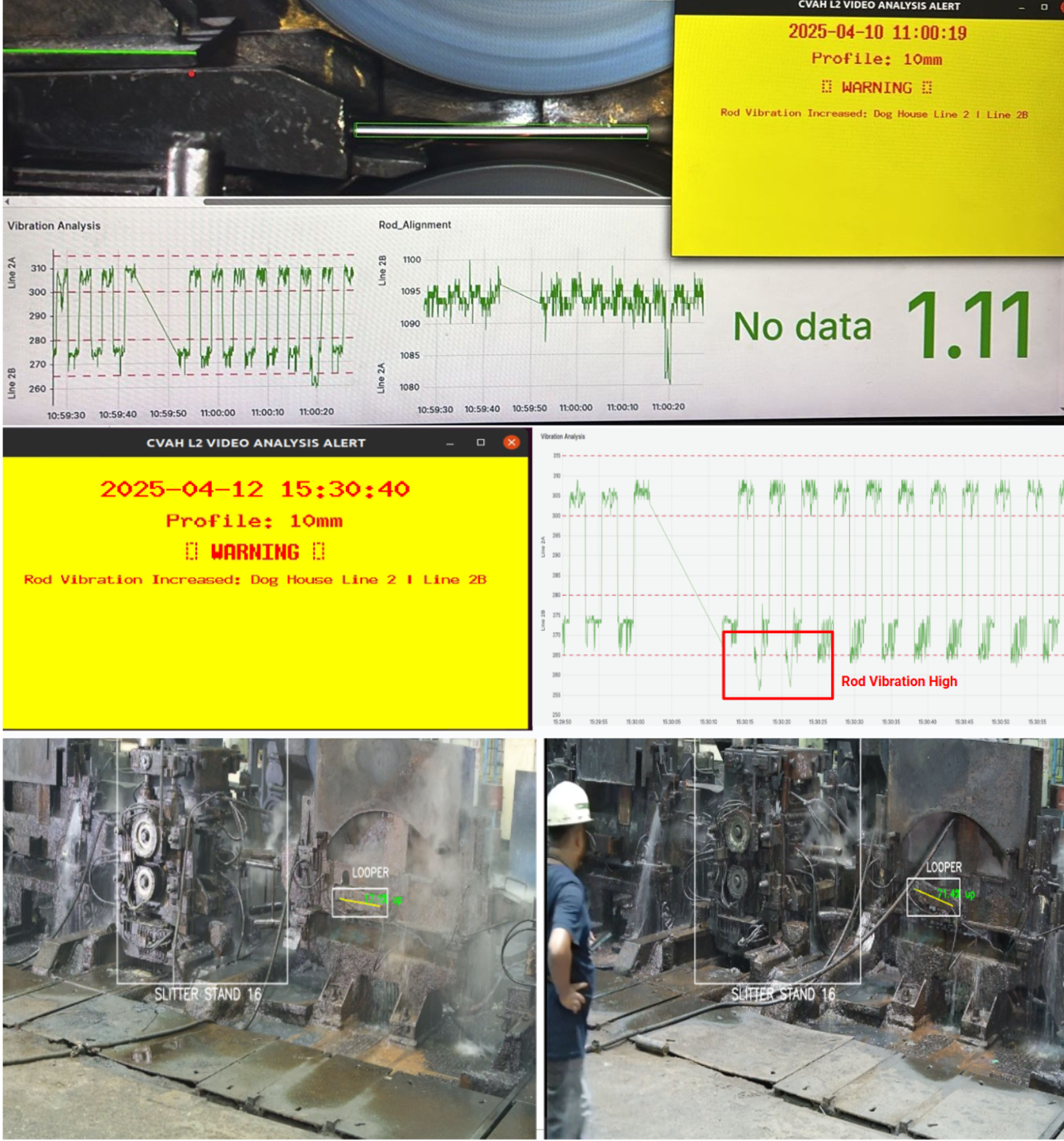

Figure 3: Real-time alert window displaying vibration and misalignment notification in rolling mill.

Web-Based Visualization

A FastAPI server streams processed video with overlaid detections and alerts. Grafana dashboards, backed by InfluxDB, provide long-term trend visualization and process statistics.

Figure 4: Web-based integrated visualization Dashboard.
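Browser-viewable live video is commonly served as MJPEG, a `multipart/x-mixed-replace` stream of JPEG parts. The generator below sketches only that framing and assumes frames have already been annotated and JPEG-encoded upstream; the boundary string is arbitrary, and whether the deployed server uses MJPEG is not stated in the text.

```python
from typing import Iterable, Iterator

BOUNDARY = "frame"
MEDIA_TYPE = f"multipart/x-mixed-replace; boundary={BOUNDARY}"


def mjpeg_parts(jpeg_frames: Iterable[bytes]) -> Iterator[bytes]:
    """Wrap already-encoded JPEG frames as multipart/x-mixed-replace parts."""
    for jpg in jpeg_frames:
        header = (
            f"--{BOUNDARY}\r\n"
            "Content-Type: image/jpeg\r\n"
            f"Content-Length: {len(jpg)}\r\n\r\n"
        ).encode("ascii")
        yield header + jpg + b"\r\n"
```

In a FastAPI route, such a generator could be returned as `StreamingResponse(mjpeg_parts(frames), media_type=MEDIA_TYPE)`; drawing detection boxes and alert text onto each frame would happen before encoding.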

Scalability

The system supports parallel deployment of multiple cameras and detection models, with distributed GPU processing. Storage management ensures efficient long-term data retention and retrieval.
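One simple way to distribute camera streams over several GPUs is a greedy least-loaded assignment (the longest-processing-time-first heuristic): place the heaviest streams first, each on the GPU with the smallest current load. This is a swapped-in illustrative technique, not the paper's scheduler; the per-stream cost figures (for example, estimated GPU milliseconds per second of video) are assumed inputs.

```python
import heapq


def assign_streams(stream_costs: dict[str, float], gpus: list[str]) -> dict[str, list[str]]:
    """Greedy least-loaded placement: heaviest stream first, onto the lightest GPU."""
    if not gpus:
        raise ValueError("at least one GPU is required")
    heap = [(0.0, gpu) for gpu in gpus]  # (current load, gpu id)
    heapq.heapify(heap)
    plan: dict[str, list[str]] = {gpu: [] for gpu in gpus}
    # Sort by descending cost; ties broken by name for a deterministic plan.
    for stream, cost in sorted(stream_costs.items(), key=lambda kv: (-kv[1], kv[0])):
        load, gpu = heapq.heappop(heap)
        plan[gpu].append(stream)
        heapq.heappush(heap, (load + cost, gpu))
    return plan
```

With costs 10, 8, 6, and 4 on two GPUs, this places `cam01` and `cam04` on one GPU and `cam02` and `cam03` on the other, giving a load of 14 on each.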

Experimental Results

The system was deployed in a fully operational steel bar rolling mill for six months, with a dataset of ~28,000 labeled frames across five rolling dimensions. Key performance metrics include:

- Detection Accuracy: YOLOv11 models achieved mAP@0.5 of 94.2% across dimensions. Vibration anomaly recall was 92.5%, misalignment recall 90.7%, and rod presence detection exceeded 99%. Fusion with process signals reduced the false alarm rate to 2.8%.

- System Latency and Throughput: End-to-end latency averaged 280 ms per frame, with processing sustained at 42 FPS on a centralized GPU server. Database and alerting overhead was negligible (<5 ms).

- Operational Impact: The system prevented approximately 10 cobbles per month, translating to estimated cost savings of Rs. 1.15 Cr per month and reduced downtime.

- Scalability: The modular design enabled seamless scaling across additional production lines and rod profiles.
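Two quick consistency checks on the reported figures may help the reader. An average latency of 280 ms alongside a throughput of 42 FPS implies, by Little's law and assuming steady-state operation over the same pipeline, that roughly a dozen frames are in flight across acquisition, buffering, and inference at any moment. Dividing the monthly saving by the number of prevented cobbles gives an implied value per cobble, under the simplifying assumption that the whole saving is attributed to prevented cobbles.

```latex
\begin{aligned}
\Delta t_{\text{frame}} &= \frac{1}{42\ \text{s}^{-1}} \approx 23.8\ \text{ms},\\
N_{\text{in flight}} &= \lambda W = 42\ \text{s}^{-1} \times 0.280\ \text{s} \approx 11.8\ \text{frames},\\
\text{saving per cobble} &\approx \frac{\text{Rs. }1.15\ \text{Cr/month}}{10\ \text{cobbles/month}} = \text{Rs. }0.115\ \text{Cr} \approx \text{Rs. }11.5\ \text{lakh}.
\end{aligned}
```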

Implications and Future Directions

The integration of computer vision with process control and sensor data in steel rolling mills demonstrates a robust approach to real-time anomaly detection and failure prediction. The system's architecture, emphasizing independence from PLCs and modular scalability, addresses key challenges in industrial deployment, including computational overhead, latency, and integration complexity.

The strong numerical results, particularly the high detection accuracy and low false alarm rate, underscore the viability of deep learning-based vision systems in harsh industrial environments. The fusion of visual and process signals is critical for reducing false positives and enhancing interpretability.

Future research directions include:

- Extending the framework to additional process stages and equipment types.

- Incorporating advanced temporal models (e.g., transformers) for improved sequence-based anomaly detection.

- Exploring self-supervised and continual learning to adapt to evolving process conditions.

- Integrating predictive maintenance scheduling and automated root cause analysis.

Conclusion

This work establishes a practical, scalable, and effective framework for process-integrated computer vision in steel rolling mills. By combining deep learning-based video analytics with sensor data fusion and real-time alerting, the system significantly enhances failure prediction, operational reliability, and maintenance planning. The demonstrated deployment results provide a strong foundation for broader adoption of AI-driven monitoring in complex industrial environments.