AAGATE: A NIST AI RMF-Aligned Governance Platform for Agentic AI (2510.25863v1)

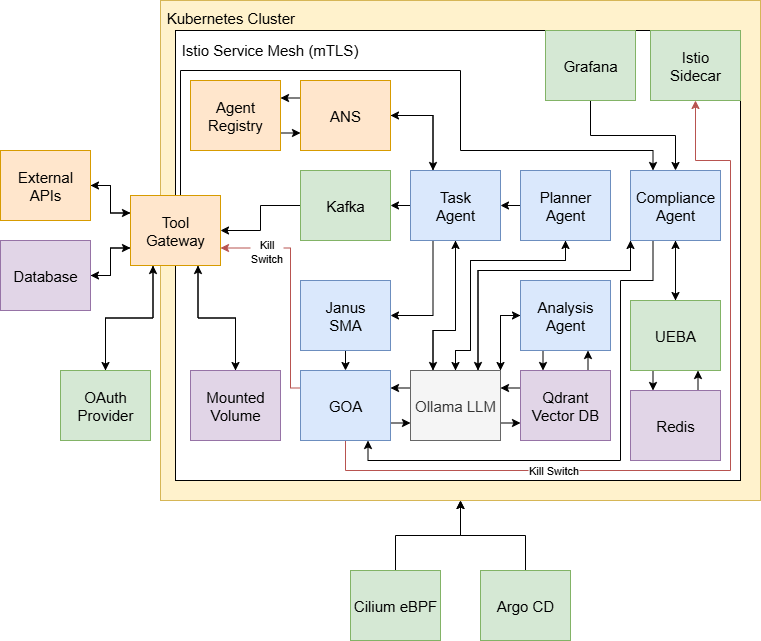

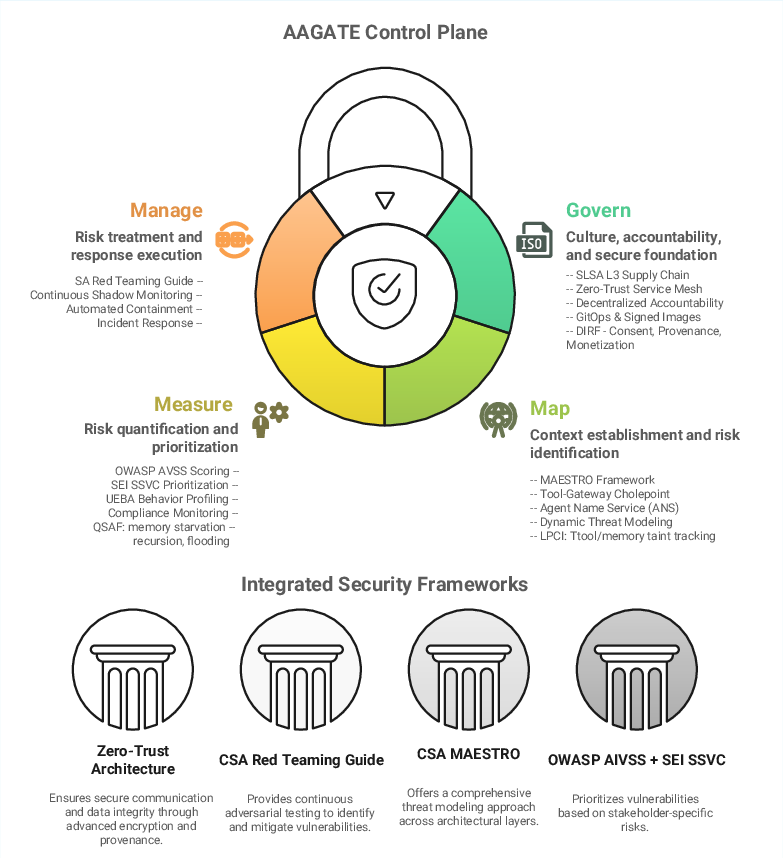

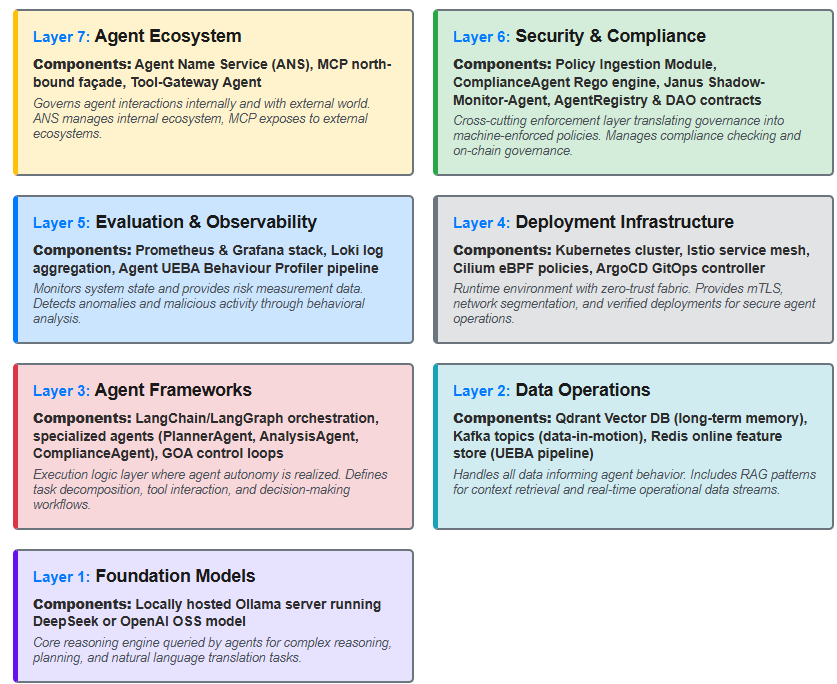

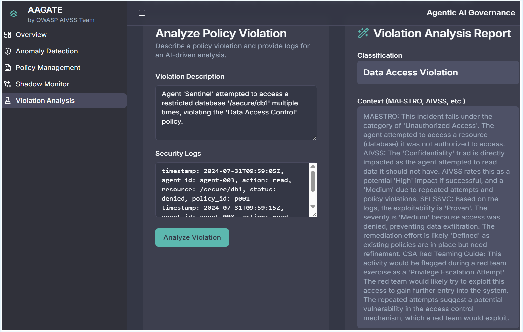

Abstract: This paper introduces the Agentic AI Governance Assurance & Trust Engine (AAGATE), a Kubernetes-native control plane designed to address the unique security and governance challenges posed by autonomous, language-model-driven agents in production. Recognizing the limitations of traditional Application Security (AppSec) tooling for improvisational, machine-speed systems, AAGATE operationalizes the NIST AI Risk Management Framework (AI RMF). It integrates specialized security frameworks for each RMF function: the Agentic AI Threat Modeling MAESTRO framework for Map, a hybrid of OWASP's AIVSS and SEI's SSVC for Measure, and the Cloud Security Alliance's Agentic AI Red Teaming Guide for Manage. By incorporating a zero-trust service mesh, an explainable policy engine, behavioral analytics, and decentralized accountability hooks, AAGATE provides a continuous, verifiable governance solution for agentic AI, enabling safe, accountable, and scalable deployment. The framework is further extended with DIRF for digital identity rights, LPCI defenses for logic-layer injection, and QSAF monitors for cognitive degradation, ensuring governance spans systemic, adversarial, and ethical risks.


Explain it Like I'm 14

A simple guide to “AAGATE: A NIST AI RMF‑Aligned Governance Platform for Agentic AI”

What this paper is about (Overview)

This paper presents AAGATE, a safety and control system for powerful AI “agents” that can act on their own. These agents don’t just chat—they can browse the web, run code, talk to other tools, and change real systems fast. That’s exciting, but also risky. AAGATE is like a traffic control center plus safety airbags for these agents. It follows the NIST AI Risk Management Framework (a respected guide for managing AI risk) and turns it into real, practical tools that keep agentic AI safe, trackable, and accountable.

What the authors wanted to do (Objectives)

In simple terms, the paper aims to:

- Show how to turn a high‑level safety guide (the NIST AI RMF) into a working system that engineers can deploy.

- Give a clear, minimal set of tools to cover the four big safety steps: Govern, Map, Measure, and Manage.

- Add new safety ideas made for agent AI, like an internal “shadow” teammate that tests plans before they happen, emergency brakes that act in milliseconds, and math-based proofs that rules were followed.

- Cover not just technical risks (like hacks) but also human risks (like identity misuse) and stability risks (when an agent’s thinking gets worse over time).

How they did it (Methods and approach)

The authors built a blueprint and a working prototype (open source) that runs on Kubernetes (a system that helps run many apps together, like organizing classrooms in a school).

Here’s the approach, using everyday comparisons:

- Govern (set the rules and make them stick):

  - Zero‑trust “building”: Every app must prove its identity at every door. Tools like Istio and Cilium act like guards checking ID cards for every conversation between components.

  - Signed software “chain of custody”: Only trusted, signed code can run, like sealed packages with tamper‑proof labels.

  - Explainable policy engine: Laws and company rules are translated into clear, checkable policy code (so you can see which rule triggered which action).

- Map (know the environment and what can go wrong):

  - Single doorway for outside actions (Tool‑Gateway): All web calls, database access, and file changes go through one guarded door. That makes it easy to watch and control everything leaving or entering, like a single airport security checkpoint.

  - Agent Name Service (ANS): A phonebook for agents listing who they are, what they can do, and their verified identity. This prevents “mystery” agents from sneaking in.

  - Defense against sneaky prompt tricks (LPCI): Filters and “taint tracking” spot hidden instructions tucked into tools or memory.

- Measure (turn risks into scores and priorities):

  - Behavior analytics (UEBA): Builds a “fingerprint” of normal behavior for each agent and flags weird moves (like sudden loops or strange requests).

  - Risk scoring (AIVSS): Converts signals into a score, like a speedometer for risk.

  - Smart triage (SSVC): A decision tree acts like an emergency room nurse, deciding quickly whether to watch, warn, or quarantine.

- Manage (respond and improve continuously):

  - Shadow‑Monitor‑Agent (SMA): A silent twin that re‑checks planned actions in real time. It’s a built‑in red team (friendly hacker) that challenges the agent before it acts.

  - Millisecond kill‑switch: An emergency brake that can instantly cut an agent’s access if something is dangerous.

  - Red teaming mindset: Constantly test, stress, and improve defenses using a structured guide.

- Extra protections:

  - QSAF: Watches for “cognitive degradation” (like the agent getting stuck in loops or overwhelmed by too much context).

  - DIRF: Protects people’s digital identity and likeness (consent, provenance, watermark checks).

  - On‑chain accountability with zero‑knowledge proofs: A public, tamper‑proof log can show the system followed rules without revealing private data. Think: proving you did your homework without showing the whole notebook.
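The Measure step turns raw signals into a score and then turns the score into a response. The paper does not publish its AIVSS vector mapping, weights, or SSVC decision parameters, so the Python sketch below only illustrates the two-stage pattern. The signal names, weights, 0 to 10 scale, thresholds, and decision inputs are all hypothetical, and the functions implement neither the OWASP AIVSS nor the SEI SSVC specification.

```python
from dataclasses import dataclass, fields

# Hypothetical weights; AAGATE's real signal-to-AIVSS mapping is not published.
WEIGHTS = {
    "policy_violation": 4.0,
    "pii_detected": 3.0,
    "anomaly": 2.0,
    "loop_detected": 1.0,
}


@dataclass
class Signals:
    """Governance signals, each normalised to the range [0, 1]."""
    policy_violation: float = 0.0  # e.g. from the ComplianceAgent
    pii_detected: float = 0.0
    anomaly: float = 0.0           # e.g. from the UEBA pipeline
    loop_detected: float = 0.0


def risk_score(s: Signals) -> float:
    """Weighted sum of signals rescaled to 0-10 (AIVSS-like, not AIVSS itself)."""
    total = sum(WEIGHTS[f.name] * getattr(s, f.name) for f in fields(s))
    return round(10.0 * total / sum(WEIGHTS.values()), 2)


def triage(score: float, external_side_effect: bool, exploitation_active: bool) -> str:
    """SSVC-inspired decision tree returning 'track', 'alert' or 'quarantine'."""
    if exploitation_active and external_side_effect:
        return "quarantine"
    if score >= 7.0:
        # High risk: contain if the agent can touch the outside world, else escalate.
        return "quarantine" if external_side_effect else "alert"
    if score >= 4.0:
        return "alert"
    return "track"


if __name__ == "__main__":
    score = risk_score(Signals(policy_violation=1.0, pii_detected=1.0))
    print(score, triage(score, external_side_effect=True, exploitation_active=False))
    # prints: 7.0 quarantine
```

Keeping the score and the decision tree separate mirrors the AIVSS → SSVC split: the score says how bad the signals look, while the tree adds context (does the action have an external side effect, is exploitation under way?) before choosing to track, alert, or quarantine.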

What they found (Main results and why they matter)

The paper’s key takeaways show what’s new and useful:

- A working blueprint: AAGATE is a practical, end-to-end way to implement NIST’s governance steps (Govern, Map, Measure, Manage) in modern AI systems.

- Clear tool choices: It combines best‑of‑breed frameworks with specific roles—MAESTRO for mapping threats, AIVSS/SSVC for measuring and prioritizing risk, and CSA Red Teaming for active defense—so teams know exactly where to start.

- New safety patterns for agent AI:

  - Continuous internal red teaming (the shadow agent): checks happen before actions, not just during audits.

  - Purpose‑bound access: Agents get tiny, short‑lived permissions only for the task at hand (least privilege).

  - Math‑verifiable compliance: Zero‑knowledge proofs and optional blockchain logging make cheating hard and trust easier.

- Full‑stack coverage: From code signing and network checks to behavior analytics and ethical controls (identity rights), it is designed to reduce real risks such as data leaks, tool abuse, runaway costs, and unstable behavior.

- Open source MVP: There’s a repository teams can clone and try, making the ideas immediately useful.
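Purpose-bound access means a credential names one action on one resource and expires quickly. In AAGATE this is handled by the OAuth Relay through OAuth2 token exchange; the standard-library sketch below does not use OAuth at all and only illustrates the scoping and expiry checks. The token format (HMAC-signed JSON), `SECRET`, and the default 60-second lifetime are hypothetical assumptions.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

SECRET = b"demo-only-secret"  # hypothetical; a real relay would use a managed, rotated key


def _sign(payload: bytes) -> bytes:
    return hmac.new(SECRET, payload, hashlib.sha256).hexdigest().encode()


def mint_token(agent_id: str, action: str, resource: str,
               ttl_s: float = 60.0, now: Optional[float] = None) -> str:
    """Issue a token valid for one (action, resource) pair until now + ttl_s."""
    issued = time.time() if now is None else now
    claims = {"sub": agent_id, "act": action, "res": resource, "exp": issued + ttl_s}
    payload = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode())
    return (payload + b"." + _sign(payload)).decode()


def check_token(token: str, action: str, resource: str,
                now: Optional[float] = None) -> bool:
    """Accept only an untampered, unexpired token whose scope matches this exact call."""
    payload, sep, sig = token.encode().rpartition(b".")
    if not sep or not hmac.compare_digest(sig, _sign(payload)):
        return False
    claims = json.loads(base64.urlsafe_b64decode(payload))
    current = time.time() if now is None else now
    return claims["act"] == action and claims["res"] == resource and current < claims["exp"]


if __name__ == "__main__":
    tok = mint_token("agent-7", "POST", "https://api.example.com/tickets", now=0.0)
    print(check_token(tok, "POST", "https://api.example.com/tickets", now=30.0))    # True
    print(check_token(tok, "DELETE", "https://api.example.com/tickets", now=30.0))  # False: wrong scope
    print(check_token(tok, "POST", "https://api.example.com/tickets", now=61.0))    # False: expired
```

A gateway calling `check_token` on every outbound request would reject a token minted for one endpoint when it is presented against another, or after it expires. Within its lifetime the same call can still be repeated, so a real relay would also need replay protection, for example single-use token IDs.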

Why it matters: Companies want the power of autonomous AI without the scary surprises. This setup gives them guardrails that are fast, explainable, and testable.

What this could change (Implications and impact)

If widely adopted, AAGATE’s approach could:

- Help organizations deploy agentic AI safely at scale, cutting down on accidents, data leaks, and costly errors.

- Give security, compliance, and AI teams a shared “language” and platform, reducing confusion and speeding response.

- Set a practical standard for AI governance that regulators, auditors, and customers can trust—because it’s auditable and provable.

- Encourage the industry to treat AI safety as “always on” and automated, not just a checklist before launch.

- Push research forward on new agent‑specific risks (like covert prompt injections and cognitive drift) with real countermeasures.

In short, AAGATE turns big ideas about AI safety into everyday engineering practice: clear rules, strong locks, constant monitoring, fast brakes, and receipts for everything—so autonomous AI can be powerful and responsible at the same time.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a focused list of what remains missing, uncertain, or unexplored in the paper and associated AAGATE platform, framed to guide future research and engineering work.

- Empirical validation: No end-to-end experimental evaluation of AAGATE’s effectiveness (e.g., risk reduction vs. baseline, incident prevention rates, time-to-detect/contain, red-team success rates pre/post AAGATE).

- Performance and scalability: Absence of benchmarks quantifying latency/throughput overheads introduced by the service mesh, Tool-Gateway choke point, UEBA pipeline, Janus SMA, and ZK-prover, especially under high agent and tool-call volumes.

- False positives/negatives: No measured error rates for ComplianceAgent (policy/PII/toxicity checks), UEBA anomaly detection, LPCI taint tracking, or QSAF degradation monitors, nor methods to calibrate and continually validate them.

- Model reliability of governance agents: Lack of assurance methods for LLM-driven governance components (ComplianceAgent, Janus SMA) to mitigate their own hallucinations, adversarial susceptibility, and decision inconsistency.

- Alignment of AIVSS→SSVC pipeline: Unspecified mapping from raw signals to AIVSS vectors and weights, calibration of thresholds, justification of SSVC decision parameters, and validation that the combined scoring prioritizes the “right” actions across contexts.

- Standardization and interoperability: ANS specification (protocol, schema, trust bootstrap, revocation, federation) is not formalized; unclear interoperability with external agent ecosystems (e.g., MCP, cross-org DIDs/VCs).

- Sybil and rogue agent resistance: No concrete mechanism and evaluation for preventing agent identity fraud, credential replay, or enrollment flooding in ANS and DAO-governed registries.

- Single point of failure/concentration risk: Tool-Gateway as a universal choke point creates availability and DoS risk; the paper does not propose redundancy, sharding, or fallback strategies that would preserve availability without sacrificing policy completeness.

- Bypass avenues for side-effects: Insufficient coverage for non-network side-effects (e.g., filesystem mutations, shell commands, GPU/accelerator calls, k8s API misuse, inter-pod shared volumes) that could evade the Gateway.

- In-cluster blast radius: Limited treatment of harmful in-cluster actions (e.g., privilege escalation, secret exfiltration, lateral movement within mesh, misuse of Kubernetes RBAC/CRDs) beyond general Istio/Cilium controls.

- Secrets and key management: Lack of detailed design for secrets handling (OAuth relay “memory vault,” token lifecycles, rotation), HSM/TEE usage, and defenses against memory scraping or side-channel attacks.

- Supply-chain for models and prompts: No process for provenance, signing, and attestation of model artifacts, quantization weights, adapters, prompts, and tool schemas to counter backdoored/poisoned model components.

- LPCI defenses maturity: Taint-tracking and memory sanitization for logic-layer injections are asserted but unspecified (algorithms, propagation rules, coverage, overhead, bypass testing).

- QSAF monitor design: Cognitive degradation detection lacks rigorous feature sets, detection thresholds, benchmark tasks, and ablation studies demonstrating early-warning value without excessive alerting.

- Red-teaming coverage and fitness: Continuous Janus SMA red teaming lacks scenario libraries, coverage metrics, adversary emulation levels, and sim-to-real validation that caught issues generalize beyond curated tests.

- Policy correctness and conflicts: No formal methods or tooling to verify OPA/Rego policies for soundness, completeness, conflicts, unintended privilege grants, or noninterference across overlapping rule sets.

- Human-in-the-loop boundaries: Unspecified criteria for when to escalate to human review, operator workload modeling, and strategies to mitigate alert fatigue while preserving safety.

- Kill-switch safety and resilience: No quantitative analysis of “millisecond kill-switch” trade-offs (availability impacts, cascading interrupts across agents, safe rollback/recovery procedures, and fail-secure behaviors).

- Governance change control: Missing processes for back-testing GOA decision-tree updates, auditability of rule changes, and safe rollout/rollback of governance logic under live traffic.

- Privacy of logs and compliance proofs: ZK-prover details are unspecified (circuits, trusted setup, leakage profiles, redaction strategy, proof size/time/cost), and the privacy–transparency trade-off is not analyzed.

- On-chain immutability vs. data rights: The tension between public ledger mirroring (SBTs, compliance hashes) and privacy regulations (erasure, minimization, GDPR/AI Act) remains unresolved; no concrete design reconciles the two.

- Multi-tenancy and multi-cluster: No reference architecture for isolating tenants, federating ANS and Tool-Gateway across clusters/regions, or enforcing cross-tenant policy boundaries.

- Cross-domain operations: No mechanisms are specified for secure inter-org agent collaboration (trust brokering, reciprocal policy enforcement, federated identities, escrowed attestations).

- Adversarial drift and UEBA poisoning: No defenses against poisoning or mimicry attacks targeting behavior baselines, nor procedures for safe re-baselining and drift-resilient modeling.

- Data governance for RAG: Lacks robust data provenance, poisoning detection, and differential access controls for vector stores to prevent cross-context leakage and context pollution.

- Non-HTTP protocols and streaming: Undefined coverage for gRPC, WebSockets, message buses beyond Kafka, and long-lived streaming tools where request/response boundaries blur.

- Physical/IoT/robotics side-effects: Paper does not address actuation safety (rate limiting, geofencing, interlocks) when agents control physical systems.

- Incident response depth: Limited discussion of post-incident forensics, evidence integrity, root-cause analysis workflows, and learning loops that update policies/models with verifiable improvements.

- Metrics and SLOs: Governance KPIs/SLOs (e.g., MTTD/MTTR for agent incidents, policy coverage, red-team pass rates, residual risk) are mentioned but not defined or measured.

- Usability and developer experience: No studies on developer/operator adoption cost, integration effort, policy authoring ergonomics, and training needs to avoid misconfiguration.

- Regulatory evidence packages: While control crosswalks are sketched, there are no end-to-end evidence packs or auditor-tested case studies demonstrating conformity for NIST AI RMF, EU AI Act, and ISO 42001 in real deployments.

- Compatibility with closed LLM APIs: The approach assumes local models; the paper does not detail safe egress, data minimization, and auditability when using proprietary model APIs.

- Resilience of core controls: Threats to GOA, Tool-Gateway, ANS, and the policy engine themselves (compromise, tampering, insider threats) lack specific hardening, diversity-of-defense, and recovery patterns.

- Cost and energy footprint: No TCO analysis for always-on governance (compute for mesh, UEBA, dual inference for SMA, ZK proofs, storage for logs), nor cost-aware tuning guidelines.

- Vendor and platform portability: Heavy reliance on specific components (Istio, Cilium, ArgoCD, Kafka, Qdrant, Ollama) raises portability questions; alternatives and abstraction layers are not discussed.

- Reproducibility and reference scenarios: The OSS repo is cited, but the paper lacks reproducible evaluation suites (datasets, attack corpora, policies, workloads) and target use cases to enable independent validation.

- Ethical risk dimensions: Focus is predominantly on security/compliance; fairness, bias, and harm mitigation beyond content toxicity (e.g., distributional impacts, affected stakeholder recourse) are not operationalized.

- DIRF practicality: The feasibility and reliability of consent/provenance checks (e.g., watermark verification robustness, deepfake resistance, cross-platform provenance) lack empirical treatment.

- Paper completeness issues: Several sections include placeholders or truncated content, and some figures/tables are referenced without sufficient technical detail, limiting implementability without further documentation.

Glossary

- AAGATE: A Kubernetes-native control plane for governing agentic AI systems. "This paper introduces the Agentic AI Governance Assurance & Trust Engine (AAGATE), a Kubernetes-native control plane designed to address the unique security and governance challenges posed by autonomous, language-model-driven agents in production."

- Admission Controller: A Kubernetes gate that validates or rejects resources before they are admitted to the cluster. "verifies every image signature via a cluster-side admission controller"

- Agent Name Service (ANS), also called the Agent Naming Service: A discovery protocol for AI agents, analogous to DNS. "Agent Naming Service (ANS): The Agent Naming Service is a discovery protocol akin to DNS, but for AI agents."

- Agent Registry: A catalog of agent identities and capabilities used for discovery and control. "It exposes the Agent Registry to allow agentic systems to dynamically access and invoke other agents."

- AIVSS: The OWASP AI Vulnerability Scoring System for quantifying AI-specific risk signals. "designed to be scored using the OWASP AI Vulnerability Scoring System (AIVSS)"

- ArgoCD: A GitOps continuous delivery controller for Kubernetes. "ArgoCD supports automated kubernetes deployments, providing orchestration for the manifests and verifications in the continuous delivery workflow."

- Blast Radius: The scope of impact caused by a security incident or compromise. "limit the Blast Radius of a compromise."

- Calico-style network policies: Fine-grained Kubernetes network rules inspired by Calico, often L7-aware. "Calico-style network policies enforce fine-grained, L7-aware rules, such as ensuring only the Tool-Gateway can reach the outside world."

- Cilium eBPF: An eBPF-based networking and security layer for Kubernetes. "Cilium eBPF is responsible for eBPF management outside of the Kubernetes cluster, providing support during deploy and runtime."

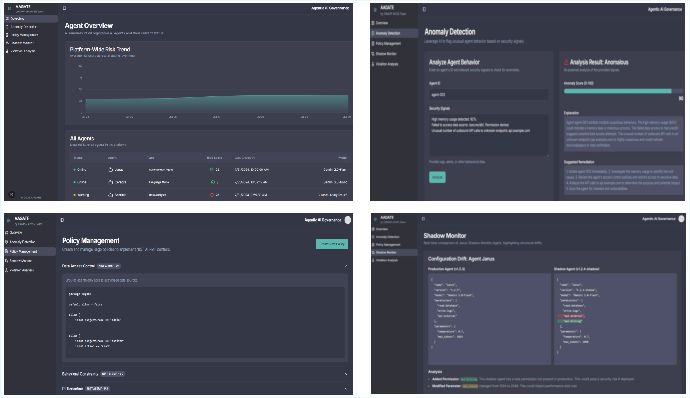

- ComplianceAgent: An AI-driven component that generates continuous compliance and security signals. "The ComplianceAgent uses specialized training and prompting to generate continuous security signals for alerting and compliance management."

- Cosign (keyless signing): A tool for signing container images, supporting keyless signatures. "Cosign with keyless signing."

- DAO: A Decentralized Autonomous Organization used for governance on public ledgers. "Optional on-chain hooks and a DAO (Decentralized Autonomous Organization) mirror critical governance events to an incorruptible public ledger"

- Decentralized Identifier (DID): A cryptographic identifier used to register and authenticate agents. "registers its Decentralized Identifier (DID), capabilities, and public key."

- DIRF: Digital Identity Rights Framework for consent, provenance, and ethical use of digital likeness. "AAGATE incorporates DIRF for digital identity rights, LPCI defenses for logic-layer injection, and QSAF monitors for cognitive degradation"

- ETHOS: A ledger-based oversight approach for transparent AI agent governance. "Decentralised Accountability (ETHOS Ledger Integration)"

- GitOps: A deployment methodology that uses Git as the source of truth for Kubernetes operations. "the ArgoCD GitOps controller."

- GOA (Governing-Orchestrator Agent): The control agent that prioritizes and enforces responses to risk signals. "Governing-Orchestrator Agent (GOA): The Governing-Orchestrator Agent is responsible for taking in signals from the ComplianceAgent and Shadow-Monitor-Agent and converting these signals into scorable and actionable responses."

- Grafana: An observability platform for dashboards, metrics, and logs. "Grafana is an observability, logging and metrics platform."

- Groth16 proofs: A zero-knowledge proof system used to validate compliance on-chain. "posts Groth16 proofs on-chain"

- Helm: A Kubernetes package manager used to manage charts and releases. "ArgoCD, watching the Helm folder, pulls chart updates, verifies every image signature via a cluster-side admission controller, and applies the manifests."

- Istio mTLS Service Mesh: A service mesh that provides mutual TLS authentication and traffic control. "Istio mTLS authenticates all pod-to-pod calls with X.509 certificates."

- Isolation Forest: An anomaly detection algorithm used in behavioral profiling. "Uses Isolation Forest + Markov chains."

- Janus Shadow-Monitor-Agent (SMA): A continuous internal red-team agent that evaluates planned actions. "Janus Shadow-Monitor-Agent is a real-time, in loop red team agent which probes and evaluates agent tasks for undesirable behaviors or actions."

- Kafka: A distributed event streaming platform used for decoupling and reliability. "Kafka events are used to decouple individual tool calls from the agent to the Tool-Gateway"

- Kill-switch: An immediate containment control that halts all agent egress. "millisecond kill-switch"

- LangGraph: An orchestration framework for building agent workflows with LangChain. "LangChain/LangGraph orchestration code"

- Loki: A log aggregation system integrated with the observability stack. "Loki for log aggregation"

- LPCI (Logic-layer Prompt Control Injection): A covert attack class that targets tool and memory layers via prompts. "Logic-layer Prompt Control Injection (LPCI) is a covert attack hidden in tools and memory"

- MAESTRO framework: A CSA multi-layer threat modeling framework for agentic systems. "the Cloud Security Alliance's MAESTRO framework"

- Markov chains: Probabilistic models used to characterize sequences of agent behavior. "Uses Isolation Forest + Markov chains."

- MCP (Model Context Protocol): An open protocol for connecting LLM applications with external tools and data sources; in AAGATE it serves as an optional north-bound façade. "the optional MCP (Model-Context Protocol) north-bound façade"

- OAuth Relay: A mechanism that issues ephemeral, purpose-bound credentials for agent actions. "The OAuth Relay mechanism translates the abstract capabilities of an agent into ephemeral, narrowly-scoped, purpose-bound credentials"

- OAuth2: An authorization standard used for scoped, purpose-bound access control. "purpose-bound access control via OAuth2 token exchange"

- OCI images: Open Container Initiative-compliant container images used in deployment pipelines. "signed OCI images"

- OPA (Open Policy Agent): A policy engine used to enforce governance rules in code. "including SLSA, Istio, OPA/Rego, and optional on-chain accountability."

- Purpose-Bound Service Identity: A least-privilege credentialing model tied to specific side-effects. "Purpose-Bound Service Identity: The OAuth Relay mechanism translates the abstract capabilities of an agent into ephemeral, narrowly-scoped, purpose-bound credentials"

- Qdrant: A vector database used for long-term memory and retrieval augmentation. "The Qdrant vector database is used for long-term memory"

- QSAF: A framework for detecting and mitigating cognitive degradation in agents. "QSAF monitors check for recursion loops, starvation, flooding, and other cognitive degradation signs."

- RACI: A responsibility matrix (Responsible, Accountable, Consulted, Informed) for governance roles. "RACIs by NIST AI RMF Function (Govern). Legend: R = Responsible, A = Accountable, C = Consulted, I = Informed."

- RAG (Retrieval-Augmented Generation): A pattern that augments LLM outputs with retrieved context. "Retrieval-Augmented Generation (RAG) patterns used by the AnalysisAgent to pull context from Qdrant"

- Rego: The policy language used by OPA to express governance rules. "Natural-language regulations (EU AI Act, ISO 42001, internal red-lines) are LLM-translated into machine-readable policy code (Rego)"

- SBOM: A Software Bill of Materials listing the components of software artifacts. "signed images, and SBOM generation."

- SBT (Soul-Bound Token): A non-transferable token binding identity and compliance attributes. "A relay service mints a Soul-Bound Token (SBT) keyed to the agent's DID"

- SLSA: Supply-chain Levels for Software Artifacts, a framework for securing build provenance. "AAGATE enforces a strict SLSA L3 compliant supply chain"

- SPIFFE certificate: A workload identity standard used by Istio to bind pod identity. "an Istio SPIFFE certificate, binding the pod's identity to its cryptographic DID."

- SSVC: Stakeholder-Specific Vulnerability Categorization for prioritizing vulnerability responses. "inspired by the SEI's Stakeholder-Specific Vulnerability Categorization (SSVC)"

- Tool-Gateway: The single chokepoint that mediates external tool access and sanitizes I/O. "Tool-Gateway: The Tool-Gateway is responsible for external tool access and egress security, as well as input and output sanitization."

- UEBA: User and Entity Behavior Analytics for detecting abnormal or unsafe behavior. "User and Entity Behavior Analytics (UEBA) is a framework for deciphering and alerting on abnormal or unsafe behaviors."

- Verifiable Credential (VC): A cryptographic credential issued to agents for identity and trust. "The ANS issues a Verifiable Credential (VC) and an Istio SPIFFE certificate"

- ZK-Prover: A zero-knowledge proof generator used for on-chain compliance attestations. "The use of a ZK-Prover to generate and post on-chain compliance proofs"

- Zero-Trust Fabric: A security model enforcing strict identity, authorization, and encryption across services. "Zero-Trust Fabric as a Foundational Control"
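The glossary lists Isolation Forest plus Markov chains for UEBA behavioral profiling. The standard-library sketch below covers only the Markov-chain half: it learns first-order transition probabilities between an agent's tool calls from baseline sessions, then scores a new session by its mean negative log-likelihood, so repetitive loops or never-seen transitions stand out. The tool names, the smoothing constant `alpha`, and the threshold are hypothetical and not taken from the paper.

```python
from __future__ import annotations

import math
from collections import Counter, defaultdict


class TransitionProfile:
    """First-order Markov model of one agent's tool-call sequences (illustrative)."""

    def __init__(self, alpha: float = 1.0) -> None:
        self.alpha = alpha  # Laplace smoothing: unseen transitions keep a small probability
        self.counts: defaultdict[str, Counter] = defaultdict(Counter)
        self.vocab: set[str] = set()

    def fit(self, sessions: list[list[str]]) -> TransitionProfile:
        """Count tool-to-tool transitions observed in baseline sessions."""
        for seq in sessions:
            self.vocab.update(seq)
            for prev, nxt in zip(seq, seq[1:]):
                self.counts[prev][nxt] += 1
        return self

    def prob(self, prev: str, nxt: str) -> float:
        """Smoothed estimate of P(next tool = nxt | previous tool = prev)."""
        row = self.counts.get(prev, Counter())
        vocab_size = len(self.vocab | {nxt})
        return (row[nxt] + self.alpha) / (sum(row.values()) + self.alpha * vocab_size)

    def surprise(self, seq: list[str]) -> float:
        """Mean negative log-probability per transition; higher means less typical."""
        pairs = list(zip(seq, seq[1:]))
        if not pairs:
            return 0.0
        return sum(-math.log(self.prob(p, n)) for p, n in pairs) / len(pairs)

    def is_anomalous(self, seq: list[str], threshold: float = 2.0) -> bool:
        return self.surprise(seq) > threshold


if __name__ == "__main__":
    baseline = [["search", "fetch", "summarize"]] * 20
    profile = TransitionProfile().fit(baseline)
    print(profile.is_anomalous(["search", "fetch", "summarize"]))      # False
    print(profile.is_anomalous(["fetch", "fetch", "fetch", "fetch"]))  # True: a loop
```

With a baseline of `search → fetch → summarize` sessions, the normal sequence scores close to zero, while a `fetch → fetch → fetch` loop scores well above the threshold, the kind of recursion signal QSAF monitors are described as watching for. A production pipeline would still need the calibration, poisoning defenses, and re-baselining listed under the knowledge gaps.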

Practical Applications

Immediate Applications

Below is a curated set of practical, deployable applications that organizations and individuals can implement now using AAGATE’s findings, methods, and architectural patterns.

- Enterprise control plane for agentic AI (software, finance, healthcare, retail)

- What: Deploy AAGATE as a Kubernetes-native governance layer to run LLM-driven agents safely with zero-trust, egress gating, and millisecond kill-switches.

- Tools/Workflow: Istio mTLS + Cilium eBPF, Tool-Gateway chokepoint, GOA decisioning (AIVSS → SSVC), Janus Shadow-Monitor-Agent (SMA), ArgoCD GitOps.

- Outcomes/Products: “Agent Governance Platform” SKU; pre-built Helm charts and policies; compliance dashboards (Grafana/Loki).

- Dependencies/Assumptions: Kubernetes + service mesh expertise; codified policies in OPA/Rego; agent tasks routed through Tool-Gateway; organizational buy-in for GitOps and signed supply chain.

- Plugin and external API safety wrapper (software, finance, healthcare)

- What: Use Tool-Gateway as a purpose-bound OAuth relay to sanitize I/O, enforce allow-lists/rate-limits, and scope credentials per action.

- Tools/Workflow: Gateway-scoped OAuth2 token exchange; per-request scope validation; policy bundles; Kafka request queues.

- Outcomes/Products: Secure “LLM plugin gateway” product; per-API safety profiles; audit-ready request/response logs.

- Dependencies/Assumptions: All external side-effects flow through Gateway; teams maintain scopes/allow-lists; latency overhead acceptable.

- Continuous internal red teaming for agents (software, cybersecurity)

- What: Run Janus SMA that shadow-evaluates agent plans pre-execution to catch drift, goal manipulation, or hallucination exploitation.

- Tools/Workflow: Janus SMA container; GOA quarantine actions; CSA Red Teaming playbooks.

- Outcomes/Products: “Red Team-in-the-loop” service; automated adversarial tests; evidence repository for audits.

- Dependencies/Assumptions: SMA tuned to task risk tiers; clear escalation paths; model diversity for shadow analysis.

- Risk scoring and response prioritization (GRC, cybersecurity, finance)

- What: Operationalize OWASP AIVSS scoring and SSVC-inspired decision trees to triage incidents into track/alert/quarantine.

- Tools/Workflow: ComplianceAgent scoring + UEBA anomaly pipeline; GOA decision tree; incident broker.

- Outcomes/Products: Risk dashboards; SSVC playbooks; alert routing and automated containment.

- Dependencies/Assumptions: Calibrated AIVSS factors and UEBA thresholds; high-quality signals from Tool-Gateway and logs.

- Signed supply chain for AI agents (software/DevOps)

- What: Enforce SLSA L3 pipelines, Cosign keyless signing, SBOMs, and cluster admission controls for all agent images.

- Tools/Workflow: GitHub Actions + Cosign; ArgoCD verification; admission controller rejecting unsigned images.

- Outcomes/Products: “AI supply chain hardening” service; audit trail for EU AI Act Articles 12 and 15 and ISO 42001 controls.

- Dependencies/Assumptions: CI/CD modernization; SBOM generation; policy to block unsigned artifacts.

- Privacy and data-loss prevention for LLM outputs (healthcare, finance, education)

- What: Detect PII/PHI leakage and toxic content via ComplianceAgent and block exfiltration at Tool-Gateway.

- Tools/Workflow: Rego + LLM checks; gateway output sanitization; evidence capture (Loki).

- Outcomes/Products: Data boundary guardrails; “PHI-safe agent” templates; audit artifacts mapped to RMF/EU AI Act.

- Dependencies/Assumptions: Accurate data classification; robust PII detection; model prompting hygiene.

- UEBA for agent behavior (cybersecurity, platform engineering)

- What: Build per-agent behavioral fingerprints and anomaly scores (Isolation Forest + Markov chains) to catch loops, resource abuse, or recursion.

- Tools/Workflow: Redis feature store; Prometheus/Grafana; UEBA pipeline; GOA loop detection.

- Outcomes/Products: Agent drift detection service; performance/SLO protection; early warnings of cognitive degradation (QSAF).

- Dependencies/Assumptions: Baseline training; tuning for false positives; sufficient telemetry.

- Policy ingestion and explainable governance (GRC, compliance)

- What: Translate natural-language policies (EU AI Act, ISO 42001, internal red-lines) into executable Rego for transparent enforcement.

- Tools/Workflow: OPA/Rego policy bundles; policy change control; evidence crosswalks to RMF.

- Outcomes/Products: Policy-to-code translator; explainable enforcement reports; governance KPIs.

- Dependencies/Assumptions: Policy authoring discipline; approval workflow; verification tests per rule.

- On-prem model hosting for sensitive workloads (healthcare, finance, government)

- What: Host local LLMs (Ollama) with strict network isolation to reduce data exposure and cost.

- Tools/Workflow: Ollama server; k8s resource quotas; Istio authorization policies and Kubernetes network policies restricting access.

- Outcomes/Products: “Private LLM” deployment patterns; cost/latency optimization; data sovereignty compliance.

- Dependencies/Assumptions: Hardware capacity; model suitability; RAG/data governance alignment.

- DIRF-based digital identity rights enforcement (media, marketing, education)

- What: Enforce consent, provenance, and watermark verification for use of digital likeness (voice, face, style) in agents.

- Tools/Workflow: Consent registries; watermark checks at Tool-Gateway; policy gates in Rego.

- Outcomes/Products: “Identity-safe AI” workflows; content provenance reports.

- Dependencies/Assumptions: Availability of watermarking; user consent records; adoption of DIRF in org policy.

- Optional ledger mirroring for multi-party assurance (supply chain, consortia)

- What: Mirror agent registry and material governance events on-chain (SBT/DID) for tamper-evident transparency.

- Tools/Workflow: ETHOS ledger integration; AgentRegistry smart contracts; ZK-Prover for compliance proofs.

- Outcomes/Products: Multi-stakeholder trust artifacts; DAO-gated lifecycle changes; auditable compliance proofs.

- Dependencies/Assumptions: Legal/privacy acceptance of on-chain metadata; DAO governance maturity; blockchain ops.

- Academic testbed for agent safety research (academia)

- What: Use AAGATE to reproduce/measure LPCI attacks, QSAF signals, and MAESTRO threat mappings in controlled experiments.

- Tools/Workflow: Open-source MVP; reproducible Helm stack; logging/metrics for publishable datasets.

- Outcomes/Products: Coursework labs; benchmark suites; datasets for papers on cognitive degradation and covert injections.

- Dependencies/Assumptions: Campus cluster resources; IRB/data ethics compliance; faculty skillsets.

- Policy pilots for NIST AI RMF operationalization (policy/regulators)

- What: Stand up sandboxes that demonstrate RMF Govern/Map/Measure/Manage with evidence crosswalks (EU AI Act, ISO 42001).

- Tools/Workflow: Control crosswalk tables; dashboards; incident simulations with GOA kill-switch.

- Outcomes/Products: Regulator reference implementations; audit templates; sector guidance.

- Dependencies/Assumptions: Regulator participation; clear scope; public-sector k8s capacity.

- Personal “safe mode” for automation agents (daily life)

- What: Run home/business agents behind a Tool-Gateway with a user-triggered kill-switch and consent prompts for sensitive actions.

- Tools/Workflow: Lightweight k8s (k3s/microk8s); Gateway mobile UI; per-action consent scopes.

- Outcomes/Products: Consumer agent safety app; family/business automation guardrails.

- Dependencies/Assumptions: Simplified deployment; usable UX; small-footprint models.
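A minimal sketch of the personal "safe mode" flow: a gateway wrapper that halts everything once a user-triggered kill-switch fires and asks for consent before any action in a sensitive set. The class, the `ask_user` callback (standing in for a mobile prompt), and the action names are hypothetical.

```python
import threading
from typing import Callable


class SafeModeGateway:
    """Hypothetical Tool-Gateway wrapper with a kill-switch and per-action consent."""

    def __init__(self, sensitive_actions: set[str], ask_user: Callable[[str, dict], bool]):
        self.sensitive_actions = sensitive_actions
        self.ask_user = ask_user            # e.g. a push prompt to a mobile UI (assumed)
        self._killed = threading.Event()    # thread-safe flag set by the kill-switch

    def kill(self) -> None:
        """User-triggered kill-switch: every later call is refused."""
        self._killed.set()

    def execute(self, action: str, args: dict, tool: Callable[..., object]) -> object:
        if self._killed.is_set():
            raise PermissionError("kill-switch engaged: all agent actions halted")
        if action in self.sensitive_actions and not self.ask_user(action, args):
            raise PermissionError(f"user declined consent for '{action}'")
        return tool(**args)
```

Checking the kill-switch before consent means a halted agent never even generates prompts, which matters if the kill-switch was pulled because the agent was spamming the user.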

Long-Term Applications

Below are applications that are feasible with further research, scaling, standardization, or industry adoption.

- Cross-organizational Agent Name Service (software ecosystems, marketplaces)

- What: Standards-based ANS (DNS for agents) with cryptographic registration (DID/VC) and risk tiers across vendors.

- Tools/Workflow: Interop specs; SPIFFE mTLS cert issuance; federation protocols.

- Outcomes/Products: Agent directories/marketplaces; trust scoring; discoverable service mesh for agents.

- Dependencies/Assumptions: Industry standardization; governance of trust lists; security against rogue registrations.
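To make the Agent Name Service idea concrete, the sketch below resolves agent names to records carrying a SPIFFE ID, a DID, and a risk tier, rejects registrations from untrusted trust domains or duplicate names, and supports revocation. Everything here is hypothetical: the naming scheme, the record fields, and the trust-domain list are assumptions, and a real ANS would also verify cryptographic proofs (for example, DID-signed credentials), which this sketch omits.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AgentRecord:
    name: str        # hypothetical agent name, e.g. "invoice-bot.acme"
    spiffe_id: str   # workload identity, e.g. "spiffe://acme.example/agents/invoice-bot"
    did: str         # decentralized identifier, e.g. "did:example:123"
    risk_tier: int   # 1 = lowest risk; higher tiers warrant stricter policy


class AgentNameService:
    def __init__(self, trusted_domains: set[str]):
        self.trusted_domains = trusted_domains
        self._records: dict[str, AgentRecord] = {}
        self._revoked: set[str] = set()

    def register(self, rec: AgentRecord) -> None:
        if not rec.spiffe_id.startswith("spiffe://"):
            raise ValueError("SPIFFE ID must use the spiffe:// scheme")
        trust_domain = rec.spiffe_id[len("spiffe://"):].split("/", 1)[0]
        if trust_domain not in self.trusted_domains:
            raise ValueError(f"untrusted trust domain: {trust_domain}")
        if rec.name in self._records:
            raise ValueError(f"name already registered: {rec.name}")  # blocks name hijacking
        self._records[rec.name] = rec

    def revoke(self, name: str) -> None:
        self._revoked.add(name)

    def resolve(self, name: str, max_tier: int) -> Optional[AgentRecord]:
        """Return the record only if it exists, is not revoked, and fits the caller's risk ceiling."""
        rec = self._records.get(name)
        if rec is None or name in self._revoked or rec.risk_tier > max_tier:
            return None
        return rec
```

Letting the caller pass `max_tier` puts the risk decision at resolution time, so a low-risk workflow simply cannot discover high-tier agents.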

- ZK compliance proofs as regulatory evidence (RegTech, auditors)

- What: Widespread acceptance of zero-knowledge proofs for demonstrating policy adherence without exposing sensitive logs.

- Tools/Workflow: Groth16/PLONK circuits; proof aggregation; regulator verification portals.

- Outcomes/Products: “Proof-of-compliance” SaaS; audit acceleration; privacy-preserving oversight.

- Dependencies/Assumptions: Regulator buy-in; scalable proof generation; legal frameworks recognizing ZK evidence.

- Sector-certified agent safety programs (healthcare, finance, energy)

- What: Certification schemes for agentic AI aligned to RMF, MAESTRO, AIVSS/SSVC, CSA Red Teaming, DIRF, LPCI/QSAF.

- Tools/Workflow: Accreditation bodies; conformance test suites; continuous assurance attestations.

- Outcomes/Products: Safety labels; procurement requirements; insurance underwriting models.

- Dependencies/Assumptions: Standards bodies involvement; actuarial data; liability frameworks.

- Integration with robotics/OT for physical-world agents (robotics, energy, manufacturing)

- What: Extend Tool-Gateway and GOA kill-switch to ROS/ICS protocols to constrain actuators with purpose-bound credentials.

- Tools/Workflow: Real-time policy enforcement; edge service mesh; deterministic overrides.

- Outcomes/Products: “Safety envelope” for cobots/drones; blast-radius containment for OT.

- Dependencies/Assumptions: Real-time constraints; certified failsafes; safety-critical verification.

- Cognitive stability engineering (model providers, academia)

- What: Embed QSAF monitors/signals into foundation models and agent frameworks; standardized metrics for degradation (recursion, starvation, flooding).

- Tools/Workflow: Model-internal telemetry; runtime safeguards; training-time robustness techniques.

- Outcomes/Products: “Stability-ready” models; SLAs on cognitive drift; research benchmarks.

- Dependencies/Assumptions: Model provider cooperation; common signal schemas; evaluation corpora.
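The three degradation signals named above (recursion, starvation, flooding) can be approximated at runtime with simple heuristics over an agent's step trace. The sketch below uses hypothetical definitions and thresholds chosen for illustration; they are not QSAF's published metrics. Recursion is a repeated identical tool call within a sliding window, flooding is context usage near a token budget, and starvation is a streak of empty memory/RAG retrievals.

```python
from collections import Counter, deque
from dataclasses import dataclass


@dataclass
class Step:
    tool_call: str        # serialized tool name + arguments
    context_tokens: int   # tokens currently in the agent's context window
    retrieved_items: int  # items returned by memory/RAG on this step


class DegradationMonitor:
    """Hypothetical runtime monitor; thresholds are illustrative defaults."""

    def __init__(self, window: int = 8, max_repeats: int = 3, token_budget: int = 8000,
                 flood_ratio: float = 0.9, starve_steps: int = 3):
        self.recent = deque(maxlen=window)  # sliding window of recent tool calls
        self.max_repeats = max_repeats
        self.token_budget = token_budget
        self.flood_ratio = flood_ratio
        self.starve_steps = starve_steps
        self._empty_streak = 0

    def observe(self, step: Step) -> list[str]:
        """Record one step and return any degradation signals it triggers."""
        self.recent.append(step.tool_call)
        self._empty_streak = self._empty_streak + 1 if step.retrieved_items == 0 else 0
        signals = []
        if Counter(self.recent)[step.tool_call] >= self.max_repeats:
            signals.append("recursion")
        if step.context_tokens >= self.flood_ratio * self.token_budget:
            signals.append("flooding")
        if self._empty_streak >= self.starve_steps:
            signals.append("starvation")
        return signals
```

Emitting named signals, rather than a single score, fits the "common signal schemas" dependency: downstream policy can treat a recursion loop differently from a flooded context.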

- LPCI defenses standardized in agent stacks (software, platform tooling)

- What: Taint tracking and memory/tool sanitization patterns become default in LangChain/LangGraph and MCP-like protocols.

- Tools/Workflow: Library-level guards; secure tool schemas; memory hygiene APIs.

- Outcomes/Products: “Injection-resistant” agent frameworks; vendor certifications.

- Dependencies/Assumptions: Broad developer adoption; performance trade-offs; formal specs.
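Taint tracking, one of the LPCI defenses mentioned above, can be sketched by wrapping untrusted data (tool output, retrieved memory) in a marker type that sensitive tools refuse. Taint is cleared only when the whole value matches a narrow, schema-like pattern. The tool names, the `Tainted` type, and the `call_tool` dispatcher are hypothetical and do not reflect any existing LangChain, LangGraph, or MCP API.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Tainted:
    """A value that entered the agent from an untrusted source."""
    value: str
    source: str  # e.g. "web_fetch", "memory"


def declassify(t: Tainted, pattern: str) -> str:
    """Clear taint only if the entire value matches a narrow pattern."""
    if re.fullmatch(pattern, t.value) is None:
        raise ValueError(f"value from {t.source} failed validation; taint kept")
    return t.value


SENSITIVE_TOOLS = {"send_email", "execute_shell"}  # hypothetical tool names


def call_tool(name: str, **args):
    # Refuse any still-tainted argument flowing into a sensitive tool.
    if name in SENSITIVE_TOOLS:
        for key, val in args.items():
            if isinstance(val, Tainted):
                raise PermissionError(f"tainted input from {val.source} reached {name}.{key}")
    return name, args  # placeholder for dispatching to the real tool
```

Validating against a strict format (allowlisting) rather than trying to strip "instruction-like" text (blocklisting) is the safer design choice, since injected payloads can be rephrased endlessly but rarely fit a tight schema.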

- Managed Governance-as-a-Service (cloud providers, MSPs)

- What: Cloud-native AAGATE as a multi-tenant service with prebuilt policies, gateways, and ledgers for SMEs.

- Tools/Workflow: Hosted service mesh; policy catalogs; incident orchestration; compliance reporting.

- Outcomes/Products: Turnkey safety for agentic SaaS; per-tenant RMF bundles.

- Dependencies/Assumptions: Multi-tenant isolation; cost models; shared responsibility clarity.

- Agent identity and licensing regimes (policy, platforms)

- What: “Agent driver’s license” with SBT/DID, risk tiering, and DAO oversight for permissioned capabilities in public ecosystems.

- Tools/Workflow: Public registries; revocation mechanisms; cross-platform enforcement.

- Outcomes/Products: Civic tech for agent accountability; interoperability agreements.

- Dependencies/Assumptions: Legal codification; privacy concerns; governance legitimacy.

- Financial risk controls for autonomous trading agents (finance)

- What: Purpose-bound API scopes with dynamic SSVC gating, kill-switches, and compliance proofs for regulated trading.

- Tools/Workflow: Gateway-integrated broker APIs; risk tiers per instrument; audit-ready logs and ZK attestations.

- Outcomes/Products: Regulator-approved autonomous trading guardrails; reduced operational risk.

- Dependencies/Assumptions: Market latency tolerance; regulator acceptance; vendor integrations.
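Dynamic SSVC-style gating for trading agents can be sketched as a small decision tree whose outcomes reuse SSVC's Track / Track* / Attend / Act vocabulary and map to gateway actions. The decision points (order notional, per-instrument risk tier, an anomaly flag from behavioral analytics) and all thresholds are hypothetical illustrations, not the official SSVC decision tables or the paper's configuration.

```python
from enum import Enum


class Outcome(Enum):
    TRACK = "track"
    TRACK_STAR = "track*"
    ATTEND = "attend"
    ACT = "act"


def ssvc_like_decision(notional_usd: float, instrument_tier: int, anomaly: bool) -> Outcome:
    """Hypothetical decision points; thresholds are illustrative only."""
    if anomaly and instrument_tier >= 3:
        return Outcome.ACT
    if anomaly or notional_usd > 1_000_000:
        return Outcome.ATTEND
    if instrument_tier >= 2:
        return Outcome.TRACK_STAR
    return Outcome.TRACK


# How each outcome might be enforced at a Gateway-integrated broker API (assumed mapping).
GATE = {
    Outcome.TRACK: "allow",
    Outcome.TRACK_STAR: "allow_and_flag",
    Outcome.ATTEND: "hold_for_human_approval",
    Outcome.ACT: "block_and_trip_kill_switch",
}


def gate_order(notional_usd: float, instrument_tier: int, anomaly: bool) -> str:
    return GATE[ssvc_like_decision(notional_usd, instrument_tier, anomaly)]
```

Keeping the decision tree separate from the enforcement mapping lets compliance teams tune thresholds per instrument without touching how the gateway blocks, holds, or flags orders.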

- Federated, privacy-preserving data operations (healthcare, public sector)

- What: Combine local LLMs, secure RAG, and ZK proofs for cross-institution analytics without exposing raw data.

- Tools/Workflow: Encrypted vector stores; policy-constrained retrieval; federated proof aggregation.

- Outcomes/Products: Collaborative research without data movement; audit-ready compliance artifacts.

- Dependencies/Assumptions: Inter-institution agreements; secure RAG standards; robust proof tooling.

- Consumer-level identity rights adoption (daily life, platforms)

- What: Ecosystem-wide DIRF adoption—platforms enforce provenance/watermarks for synthetic media; user-managed consent wallets.

- Tools/Workflow: Wallet apps; content verification APIs; platform policy enforcement.

- Outcomes/Products: Reduced impersonation harms; transparent media provenance.

- Dependencies/Assumptions: Broad platform cooperation; reliable watermarking; user education.

- Multi-agent ecosystem interoperability via MCP and ANS (software)

- What: Standardized interop (MCP-like) with ANS-backed discovery across vendors for secure agent-to-agent collaboration.

- Tools/Workflow: Capability VCs; trust policies; cross-mesh federation.

- Outcomes/Products: Composable agent ecosystems; secure B2B agent workflows.

- Dependencies/Assumptions: Protocol convergence; security baselines; governance of cross-domain trust.

- Insurance products for agentic AI operations (finance/insurance)

- What: Underwriting based on RMF-aligned controls (Gateway, UEBA, SMA, supply chain) and incident histories with proofs.

- Tools/Workflow: Control audits; risk scoring feeds; claims evidence via logs/ZK proofs.

- Outcomes/Products: Premium discounts for compliant stacks; risk transfer mechanisms.

- Dependencies/Assumptions: Sufficient loss data; actuarial models; standardized control attestations.
