- The paper introduces Decoupled MeanFlow, a technique that transforms flow models into flow map models to reduce discretization errors and enhance sampling speed.

- The approach decouples the encoder and decoder, allowing pretrained models to be fine-tuned without altering their architecture.

- Experimental results on ImageNet 256×256 show a 1-step FID of 2.16 and a competitive 4-step FID of 1.51.

Decoupled MeanFlow: Turning Flow Models into Flow Maps for Accelerated Sampling

Abstract

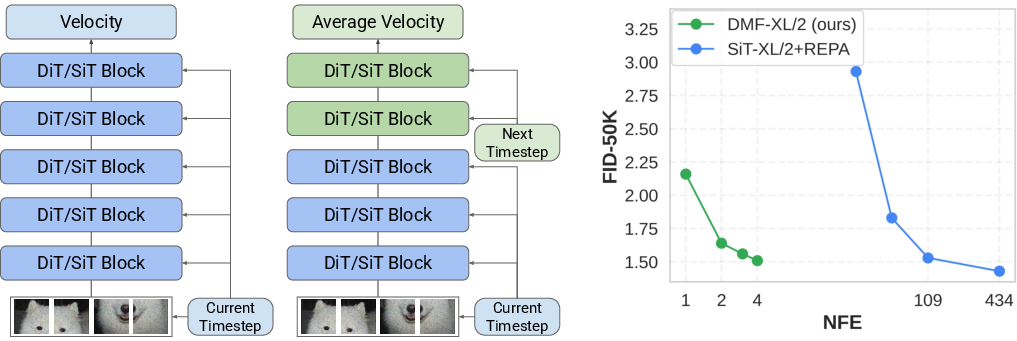

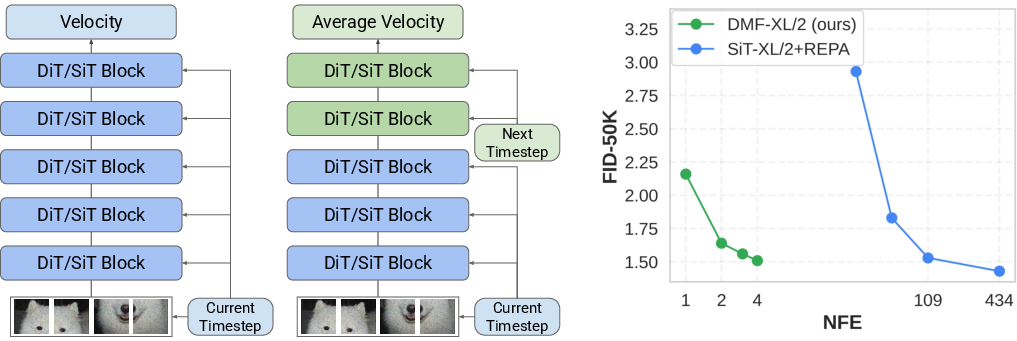

This paper presents Decoupled MeanFlow (DMF), an approach for improving sampling efficiency in denoising generative models, notably diffusion and flow-based models. These models generate high-quality samples but traditionally require many denoising steps, because taking fewer, larger steps introduces discretization error. Flow maps address this by estimating the average velocity between two timesteps rather than the instantaneous velocity at one, which reduces discretization error and enables faster sampling. DMF is a decoding strategy that converts flow models into flow map models without architectural changes. This makes it straightforward to reuse pretrained flow models, and the authors report that it outperforms previous work while substantially speeding up sampling.

Figure 1: Accelerating diffusion transformer via Decoupled MeanFlow.

Introduction

Diffusion models and flow models have rapidly become leading techniques for producing high-quality visual content, with significant impact on image and video generation. However, sampling requires many iterative network evaluations, which remains a bottleneck for real-time applications. Consistency models, and more recently flow maps, offer promising alternatives by reducing the number of sampling steps required. MeanFlow has already demonstrated this capability; this work introduces DMF as a way to reuse existing flow models as flow maps, keeping the architecture simple while improving performance.
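To make the step-count argument concrete, the following is a minimal sketch on a toy one-dimensional ODE, not the paper's model. The ODE `dz/dt = A * z`, the constant `A`, and all function names are assumptions chosen only because the exact average velocity is available in closed form. It compares Euler integration with the instantaneous velocity against stepping with the average velocity over each interval.

```python
import math

A = 2.0  # hypothetical rate constant of the toy ODE dz/dt = A * z


def velocity(z, t):
    """Instantaneous velocity of the toy ODE (the quantity a flow model predicts)."""
    return A * z


def average_velocity(z_t, t, r):
    """Exact average velocity between t and r (the quantity a flow map predicts).

    For dz/dt = A * z, the state at the earlier time r is
    z_r = z_t * exp(-A * (t - r)), so u = (z_t - z_r) / (t - r).
    """
    if t == r:
        return velocity(z_t, t)
    z_r = z_t * math.exp(-A * (t - r))
    return (z_t - z_r) / (t - r)


def euler_sample(z, steps):
    """Integrate from t=1 down to t=0 using the instantaneous velocity."""
    dt = 1.0 / steps
    t = 1.0
    for _ in range(steps):
        z = z - dt * velocity(z, t)
        t -= dt
    return z


def flow_map_sample(z, steps):
    """Step from t=1 down to t=0 using the average velocity on each interval."""
    dt = 1.0 / steps
    t = 1.0
    for _ in range(steps):
        r = t - dt
        z = z - (t - r) * average_velocity(z, t, r)
        t = r
    return z


z1 = 1.0
exact = z1 * math.exp(-A)  # true state at t=0

err_euler_1 = abs(euler_sample(z1, 1) - exact)
err_euler_100 = abs(euler_sample(z1, 100) - exact)
err_map_1 = abs(flow_map_sample(z1, 1) - exact)
```

In this toy setting a single average-velocity step lands on the exact solution, while a single Euler step is far off and Euler needs many steps to get close. A learned flow map only approximates the average velocity, so in practice the one-step result is not exact.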

Figure 2: Qualitative examples.

Methodology

DMF separates a flow model into an encoder and a decoder, giving a flexible framework in which a pretrained flow model can be used as a flow map. The decoder is the part that handles the future (target) timestep, so it can focus on predicting the state at that later point. Because of this decoupling, the encoder's representation does not need to redundantly incorporate information about the subsequent timestep, which makes better use of the network's capacity.
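The decoupling idea can be illustrated with a toy sketch. Everything here is hypothetical: the scalar "blocks", their weights, and the names `flow_model` and `decoupled_flow_map` stand in for transformer blocks and are not the paper's implementation. The point is only that the same stack of timestep-conditioned blocks can be split so that early blocks see the current timestep `t` and later blocks see the target timestep `r`.

```python
import math


def make_block(weight):
    """Return a toy timestep-conditioned block (hypothetical stand-in for a
    transformer block): it mixes the hidden state with a timestep signal."""
    def block(h, timestep):
        return math.tanh(weight * h + timestep)
    return block


# Hypothetical 4-block "pretrained flow model".
BLOCKS = [make_block(w) for w in (0.5, -1.0, 1.5, 0.8)]


def flow_model(x, t):
    """Original flow model: every block is conditioned on the current timestep t."""
    h = x
    for block in BLOCKS:
        h = block(h, t)
    return h


def decoupled_flow_map(x, t, r, encoder_depth):
    """Same blocks and weights, split into two groups.

    The first `encoder_depth` blocks (encoder) are conditioned on t;
    the remaining blocks (decoder) are conditioned on the target timestep r.
    """
    h = x
    for block in BLOCKS[:encoder_depth]:
        h = block(h, t)
    for block in BLOCKS[encoder_depth:]:
        h = block(h, r)
    return h


same = decoupled_flow_map(0.3, 0.7, 0.7, encoder_depth=2)
jump = decoupled_flow_map(0.3, 0.7, 0.2, encoder_depth=2)
```

With `r == t` the decoupled forward pass reduces to the original flow model, which is why no new layers or extra timestep inputs are needed in this sketch. The choice of `encoder_depth` is a free parameter here.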

Implementation

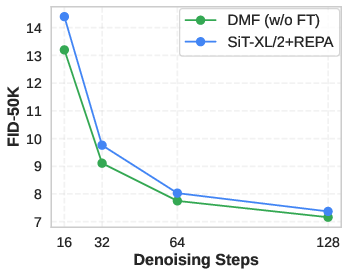

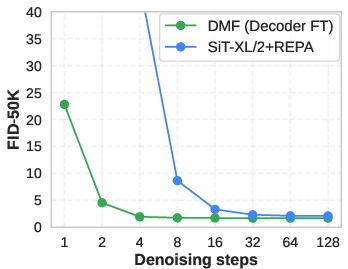

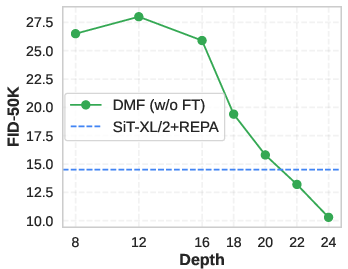

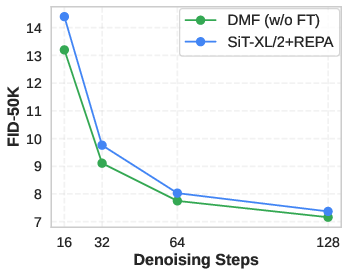

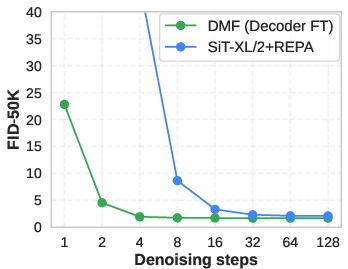

DMF starts from an existing pretrained model and converts it through decoder-centric fine-tuning. Training uses techniques such as a Flow Matching warmup and an adaptive loss function to make efficient use of compute. The approach scales across resolutions and supports fast few-step inference.
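The text above does not spell out the form of the adaptive loss. As an illustration only, the sketch below shows one adaptive weighting used in MeanFlow-style training, where the squared error is rescaled by `1 / (error + c) ** p`; whether DMF uses exactly this form, and the values of `p` and `c`, are assumptions. The function name is hypothetical.

```python
def adaptive_weighted_loss(pred, target, p=1.0, c=1e-3):
    """Squared L2 error rescaled by an adaptive weight (illustrative sketch).

    weight = 1 / (squared_error + c) ** p
    With p = 0 this is the plain squared error; with p > 0 large errors are
    down-weighted. In real training the weight would be treated as a constant,
    with no gradient flowing through it.
    """
    sq_err = sum((a - b) ** 2 for a, b in zip(pred, target))
    weight = 1.0 / (sq_err + c) ** p
    return weight * sq_err


plain = adaptive_weighted_loss([3.0, 4.0], [0.0, 0.0], p=0.0)
damped = adaptive_weighted_loss([3.0, 4.0], [0.0, 0.0], p=1.0)
```

The design intent of such a weight is to keep a few very large errors from dominating the update, which can matter when fine-tuning a pretrained model toward a new target.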

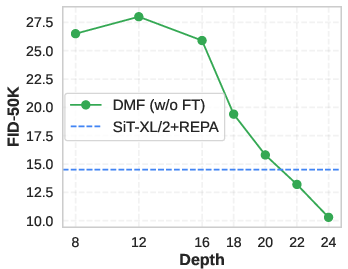

Figure 3: Varying depth in encoder-decoder configurations and their impact on performance.

Results

On ImageNet 256×256, DMF achieves a 1-step FID of 2.16, a substantial improvement over existing models. At 4 steps, it reaches an FID of 1.51, which is comparable to the performance of flow models at significantly lower computational cost. The results support DMF's effectiveness, highlighting its enhanced representation capabilities and performance on par with models that traditionally require extensive computation.

Conclusion

Decoupled MeanFlow offers a useful precedent for efficient generative model design. While primarily a theoretical advancement, DMF takes a pragmatic approach to model reuse and efficient sample generation, with potential impact on applications where rapid sample generation is crucial. As the need for efficient generative processes grows, DMF's simplicity and effectiveness are likely to encourage further research into architecture and inference optimizations.