- The paper finds that public acceptance of AI dropped significantly after the generative AI boom, while demand for human-only and human-AI collaborative decision-making in high-impact scenarios increased.

- It uses a two-wave survey of Swiss adults, with stratified sampling and post-stratification weighting, to track shifts in attitudes across demographic groups.

- The study highlights widening educational, linguistic, and gender disparities in AI acceptance, underscoring the need for inclusive, participatory AI governance.

Reduced AI Acceptance After the Generative AI Boom: Evidence from a Two-Wave Survey Study

Introduction and Context

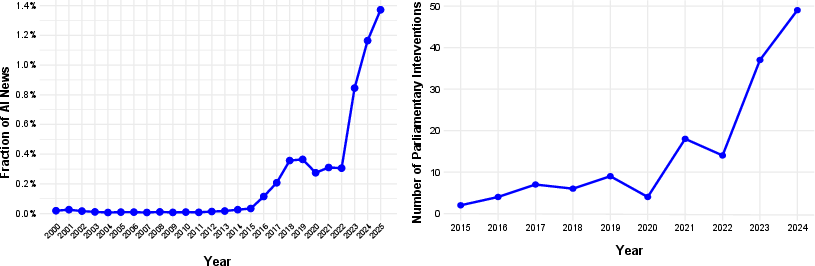

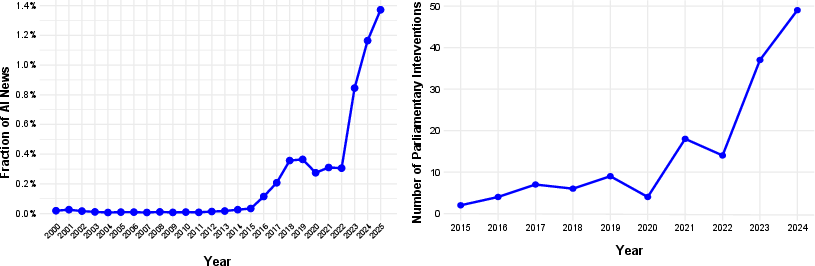

This paper presents a rigorous, population-representative, two-wave survey of Swiss adults, designed to quantify shifts in public attitudes toward AI before and after the generative AI (GenAI) boom, specifically the launch of ChatGPT. The research addresses a critical gap in the literature: the lack of longitudinal, context-sensitive, and demographically representative data on AI acceptance and preferences for human control in impactful decision-making scenarios. The paper is situated within the broader context of rapid GenAI adoption, increased media and political attention, and evolving regulatory frameworks such as the EU AI Act, which foregrounds the necessity of human oversight for high-risk AI systems.

Figure 1: The generative AI boom in Swiss media and politics, with a marked increase in AI-related news coverage and parliamentary interventions from 2015 to 2024.

Methodology

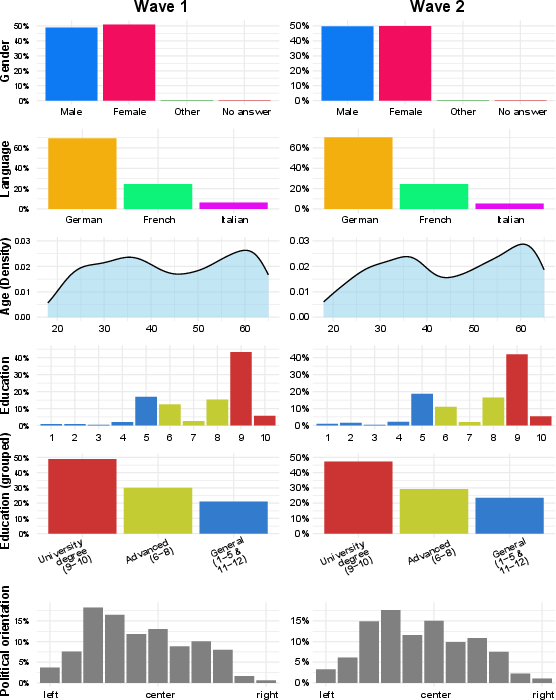

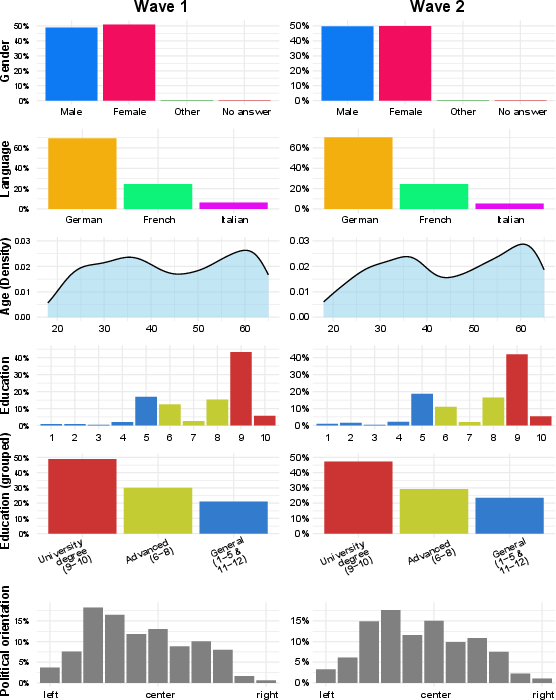

The research employs a two-wave survey design, with the first wave conducted in early 2022 (pre-GenAI boom) and the second in mid-2023 (post-GenAI boom). Both waves used stratified sampling and post-stratification weighting to ensure representativeness across age, gender, language region, education, and political orientation. The survey instrument included:

- A standardized AI primer to control for definitional ambiguity.

- Contextualized acceptance and human control questions across seven high-impact scenarios: fake news detection, insurance premium pricing, loan decisions, medical diagnosis, hiring, therapy discontinuation, and prisoner release.

- Fine-grained measurement of human control preferences, distinguishing between human-only, human-AI collaboration (AI-assisted human decision or AI decision with human oversight), and AI-only decision-making.

- Detailed demographic, digital literacy, and AI familiarity measures.

The sample sizes were n=1514 (wave 1) and n=1488 (wave 2), with high response quality ensured via attention checks and professional survey administration.

Figure 2: Demographic overview of survey respondents in both waves, confirming representativeness across key strata.
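To make the weighting step concrete, here is a minimal sketch of post-stratification on a single variable. It is not the authors' procedure: the function names, the toy sample, and the population shares are all hypothetical, and the real study weights on several variables at once (age, gender, language region, education, political orientation), which would typically call for raking or cell-based weights.

```python
from collections import Counter

def poststratification_weights(sample_strata, population_shares):
    """Weight each respondent by (population share) / (sample share) of their stratum."""
    n = len(sample_strata)
    counts = Counter(sample_strata)
    return [population_shares[s] / (counts[s] / n) for s in sample_strata]

def weighted_share(flags, weights):
    """Weighted proportion of respondents whose flag is True."""
    return sum(w for f, w in zip(flags, weights) if f) / sum(weights)

# Hypothetical toy sample: one stratum is over-represented relative to the population.
strata = ["german"] * 6 + ["french"] * 4           # sample split: 60% / 40%
population = {"german": 0.7, "french": 0.3}        # assumed shares, for illustration only
accepts = [True, True, True, True, False, False,   # 4 of 6 accept in the first stratum
           True, False, False, False]              # 1 of 4 accept in the second

weights = poststratification_weights(strata, population)
estimate = weighted_share(accepts, weights)
print(f"unweighted: {sum(accepts) / len(accepts):.3f}, weighted: {estimate:.3f}")
```

The weighted estimate shifts toward the under-sampled stratum's true population share, which is the point of the correction: group-level answers count in proportion to the group's size in the population rather than in the sample.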

Key Findings

Decline in AI Acceptance and Increased Demand for Human Oversight

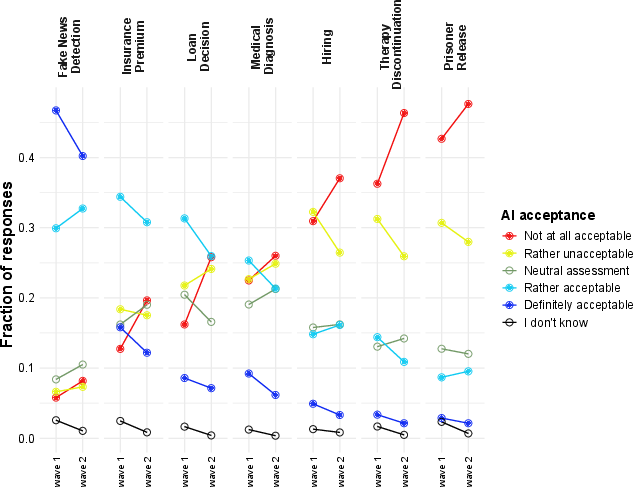

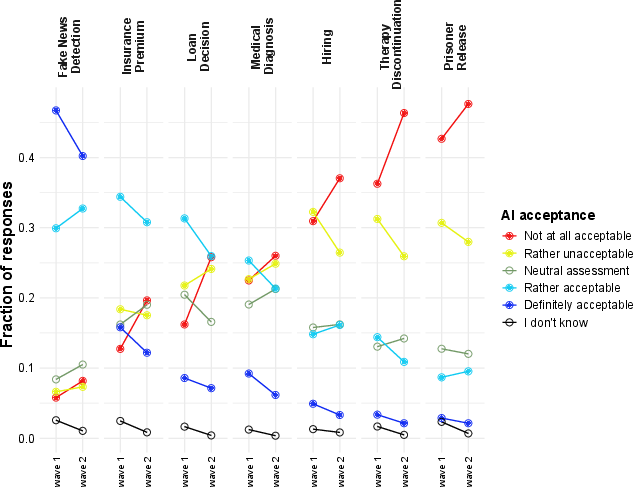

The GenAI boom is associated with a statistically significant reduction in public acceptance of AI across five of seven scenarios. The proportion of respondents finding AI "not acceptable at all" increased from 23% to 30%, while support for human-only decision-making rose from 18% to 26%. The decline in acceptance is most pronounced in high-impact scenarios (e.g., prisoner release, therapy discontinuation, hiring), but is also evident in domains with previously higher acceptance (e.g., fake news detection, insurance premiums).

Figure 3: Response distribution in % for acceptance questions across survey waves and scenarios, showing a shift toward lower acceptance post-GenAI boom.

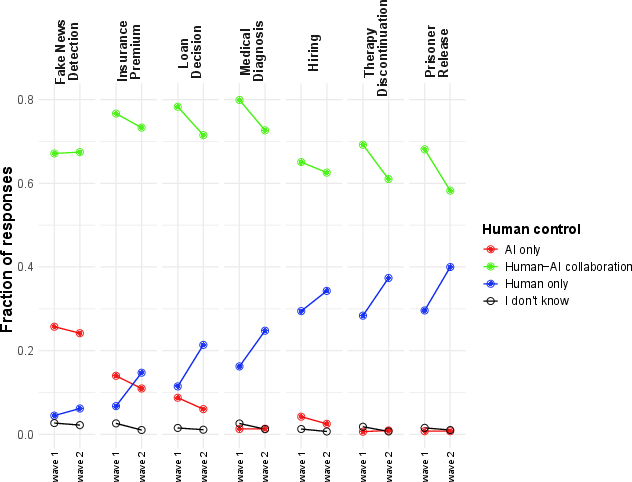

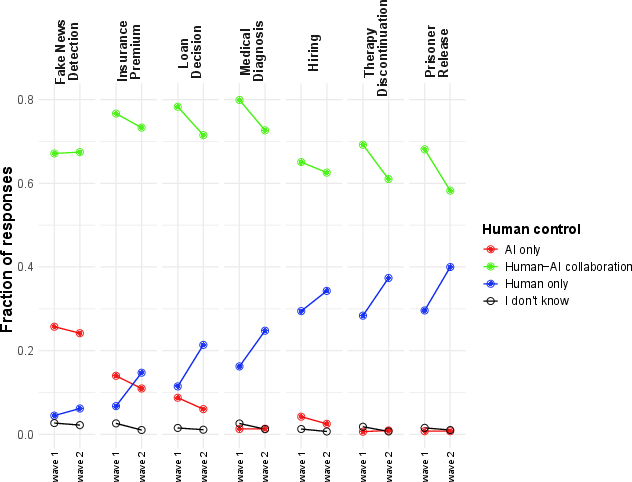

Figure 4: Response distribution in % for human control questions across survey waves and scenarios, with a marked increase in preference for human-AI collaboration and human-only decisions.
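As a rough plausibility check on the reported rise in "not acceptable at all" responses from 23% to 30%, the following sketch runs a pooled two-proportion z-test with the wave sample sizes given in the Methodology section. This is an assumption-laden simplification, not the paper's analysis: it ignores the survey weights, treats the two percentages as simple respondent-level proportions (they may be aggregated across scenarios), and treats the waves as independent samples.

```python
import math

def two_proportion_z_test(p1, n1, p2, n2):
    """Pooled two-proportion z-test; returns (z, two-sided p-value)."""
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p2 - p1) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Reported shares and sample sizes; unweighted treatment is an assumption.
z, p_value = two_proportion_z_test(p1=0.23, n1=1514, p2=0.30, n2=1488)
print(f"z = {z:.2f}, two-sided p = {p_value:.2g}")
```

Under these assumptions the z statistic comes out at roughly 4.3, which is consistent with the change being described as statistically significant. A design-based test using the survey weights would be the more appropriate tool for the actual data.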

Contextuality of Acceptance and Control Preferences

Acceptance and control preferences are highly scenario-dependent. For example, only ~3% of respondents find fully autonomous AI acceptable for therapy discontinuation, while ~40% accept it for fake news detection. Human-AI collaboration is the modal preference in most scenarios, but the share of respondents favoring human-only decisions increases substantially post-GenAI boom, especially in sensitive contexts.

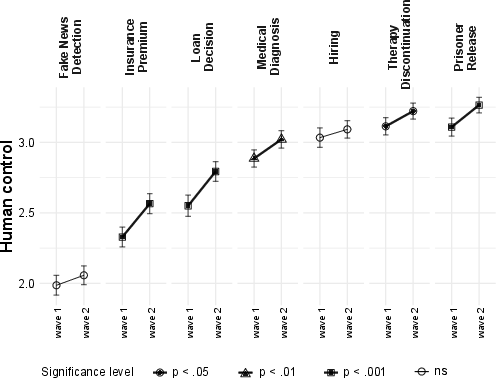

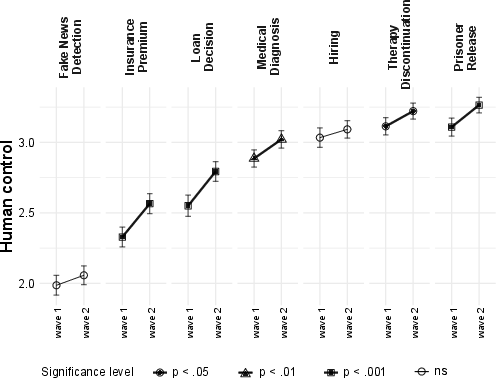

Figure 5: The generative AI boom is associated with a significant increase in required human control for five out of seven scenarios, with strong contextual dependence.

Amplification of Socio-Demographic Inequalities

The GenAI boom coincided with a widening of pre-existing disparities in AI acceptance and control preferences along educational, linguistic, and gender lines:

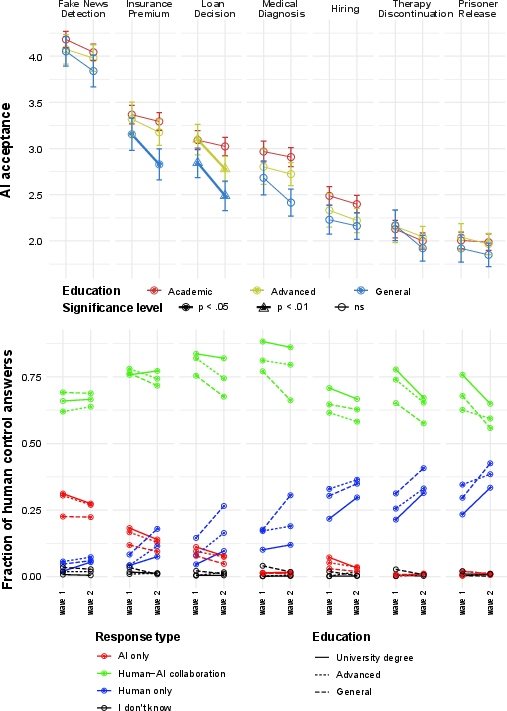

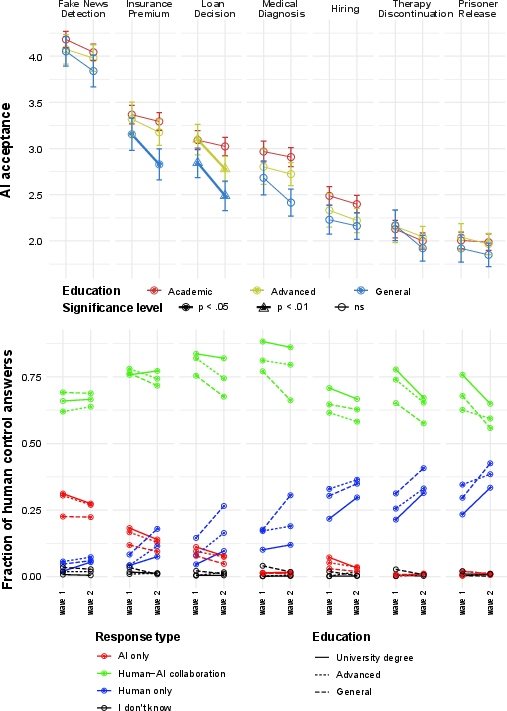

- Education: The gap in AI acceptance between university-educated and less-educated respondents widened post-boom. University-educated individuals maintained higher acceptance and lower demand for human control, while those with general education became more critical and more likely to demand human-only decisions.

Figure 6: AI acceptance (top) and human control (bottom) across education levels, with the education gap widening post-GenAI boom.

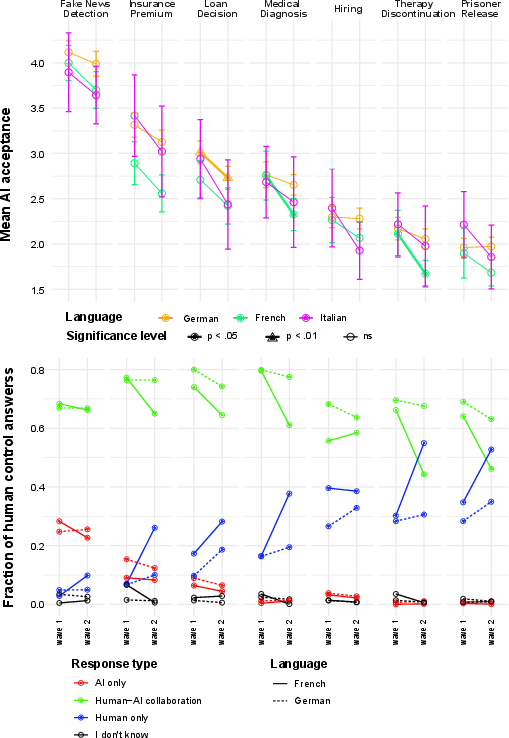

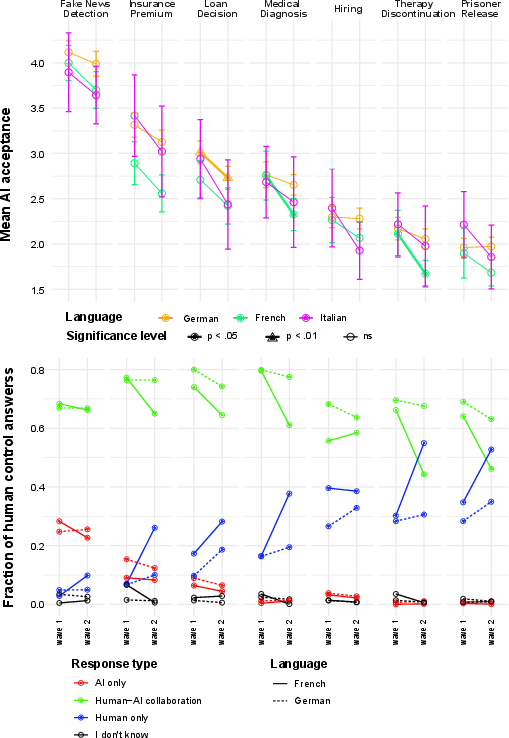

- Language Region: French-speaking respondents consistently exhibit lower AI acceptance than German speakers, with the gap increasing in medical scenarios after the GenAI boom. French speakers also show a stronger shift toward human-only decisions.

Figure 7: AI acceptance (top) and human control (bottom) across language regions, with increased skepticism among French speakers post-GenAI boom.

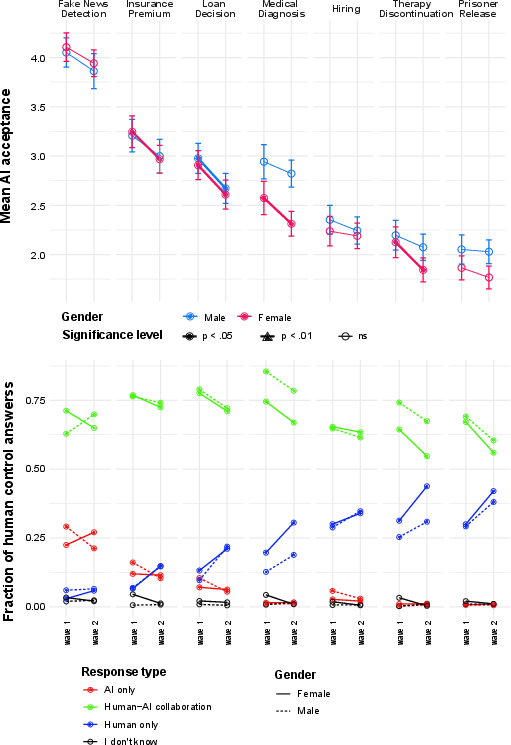

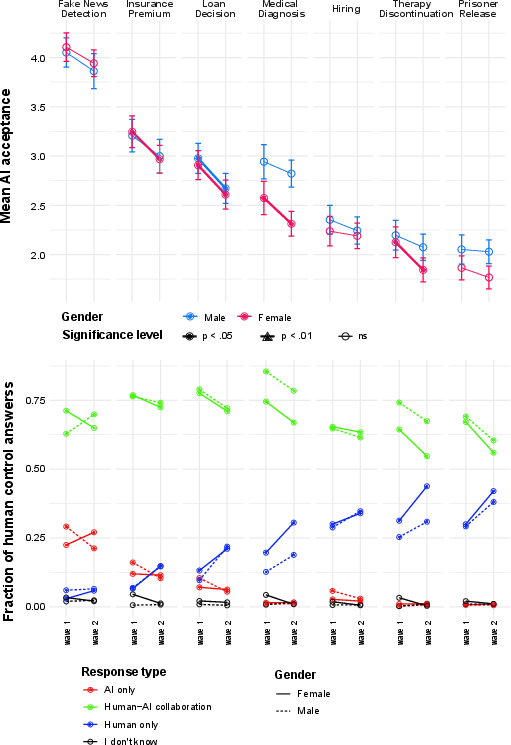

- Gender: Women display significantly lower AI acceptance than men, particularly in health-related scenarios, and the gender gap has widened post-GenAI boom. Women are more likely to prefer human-only decisions in these contexts.

Figure 8: AI acceptance (top) and human control (bottom) across genders, with the gender gap in health scenarios increasing after the GenAI boom.
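A "widening gap" in this sense is a difference in differences: the between-group gap in wave 2 minus the between-group gap in wave 1. The sketch below spells out that arithmetic. The function name and every number in it are made up for illustration and are not figures from the paper.

```python
def gap_change(wave1_a, wave1_b, wave2_a, wave2_b):
    """Change in the gap (group A minus group B) from wave 1 to wave 2.

    A positive result means the gap in favor of group A widened.
    """
    gap_wave1 = wave1_a - wave1_b
    gap_wave2 = wave2_a - wave2_b
    return gap_wave2 - gap_wave1

# Hypothetical acceptance shares, NOT taken from the paper:
# group A drops slightly, group B drops more, so the gap grows.
change = gap_change(wave1_a=0.50, wave1_b=0.40, wave2_a=0.48, wave2_b=0.30)
print(f"gap change: {change:+.2f}")
```

Note that a gap can widen even when acceptance falls in both groups, as in this toy example. What matters is that the decline is steeper in one group than in the other.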

Theoretical and Practical Implications

Theoretical Implications

- Temporal Instability of Public Attitudes: The paper demonstrates that public attitudes toward AI are not static but can shift rapidly in response to technological and discursive events (e.g., the GenAI boom). This temporal instability challenges the external validity of cross-sectional studies and underscores the need for longitudinal, context-sensitive research.

- Contextuality and Granularity: The pronounced scenario-dependence of acceptance and control preferences supports calls for granular, domain-specific approaches in both research and policy, rather than treating "AI" as a monolithic category.

- Amplification of Digital Inequalities: The widening of educational, linguistic, and gender gaps in AI acceptance post-GenAI boom suggests that rapid technological change may exacerbate, rather than ameliorate, existing digital divides.

Practical Implications

- Regulatory Design: The findings challenge industry assumptions about public readiness for AI deployment and highlight the necessity of aligning technological development and regulatory frameworks with evolving public preferences. The strong demand for human oversight, especially in high-impact scenarios, provides empirical support for the human-in-the-loop requirements in the EU AI Act and similar regulations.

- Participatory Governance: The amplification of demographic disparities suggests that participatory governance structures (e.g., citizen advisory boards, regulatory sandboxes) should be designed to ensure the inclusion of underrepresented and more skeptical groups.

- Communication and Framing: The paper highlights the influence of media and public discourse on AI attitudes, suggesting that responsible communication and framing are critical for managing public expectations and trust.

Methodological Considerations and Limitations

- Priming and Definition of AI: The use of a standardized AI primer mitigates, but does not eliminate, the challenge of definitional ambiguity. Public attitudes may still be influenced by broader cultural narratives and media framings.

- Survey vs. Experimental Approaches: While the survey captures population-level attitudes, it does not elucidate causal mechanisms or behavioral correlates. Future research should integrate experimental and field studies to triangulate findings and enhance ecological validity.

- Generalizability: The findings are specific to Switzerland, a highly innovative, multilingual, and digitally literate context. Cross-national replication is necessary to assess generalizability.

Future Directions

- Longitudinal and Cross-National Studies: Continued longitudinal monitoring and cross-national comparisons are essential to track the evolution of public attitudes and the impact of regulatory interventions.

- Mechanisms of Attitude Change: Experimental studies should investigate the causal mechanisms underlying shifts in acceptance and control preferences, including the roles of media framing, personal experience, and perceived risk.

- Inclusive Design and Education: Research and policy should focus on developing inclusive design practices and targeted educational interventions to mitigate the amplification of digital inequalities.

Conclusion

This paper provides robust evidence that the generative AI boom was followed by a measurable decline in public acceptance of AI and an increased demand for human oversight in Switzerland. These shifts are highly context-dependent and are most pronounced among less-educated, French-speaking, and female respondents. The findings have significant implications for the design of AI systems, regulatory frameworks, and public engagement strategies, underscoring the necessity of context-sensitive, participatory, and inclusive approaches to AI governance.