GSWorld: Closed-Loop Photo-Realistic Simulation Suite for Robotic Manipulation (2510.20813v1)

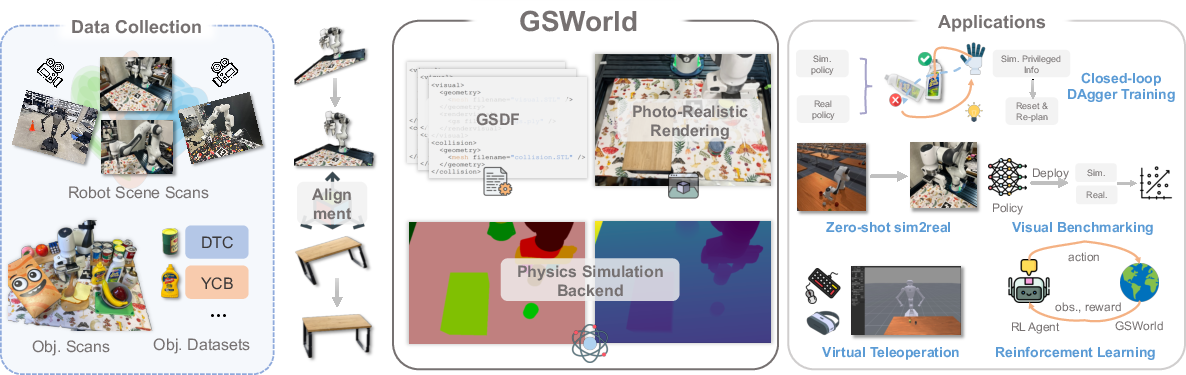

Abstract: This paper presents GSWorld, a robust, photo-realistic simulator for robotic manipulation that combines 3D Gaussian Splatting with physics engines. Our framework advocates "closing the loop" of developing manipulation policies with reproducible evaluation of policies learned from real-robot data and sim2real policy training without using real robots. To enable photo-realistic rendering of diverse scenes, we propose a new asset format, which we term GSDF (Gaussian Scene Description File), that infuses Gaussian-on-Mesh representation with robot URDF and other objects. With a streamlined reconstruction pipeline, we curate a database of GSDF that contains 3 robot embodiments for single-arm and bimanual manipulation, as well as more than 40 objects. Combining GSDF with physics engines, we demonstrate several immediate applications: (1) learning zero-shot sim2real pixel-to-action manipulation policy with photo-realistic rendering, (2) automated high-quality DAgger data collection for adapting policies to deployment environments, (3) reproducible benchmarking of real-robot manipulation policies in simulation, (4) simulation data collection by virtual teleoperation, and (5) zero-shot sim2real visual reinforcement learning. Website: https://3dgsworld.github.io/.

Explain it Like I'm 14

Overview

This paper introduces GSWorld, a “photo-realistic” robot simulator. Think of it like a high-quality video game level that looks almost exactly like a real room and behaves with real-world physics. GSWorld helps robots learn how to move and manipulate objects by training in this realistic simulator, then using the same skills on real hardware without extra tweaking. The big idea is to “close the loop”: use the same virtual environment to train, test, fix mistakes, and improve robot policies, so learning is faster and more reliable.

Key Objectives and Questions

The paper aims to answer simple, practical questions:

- How can we build a simulator that looks like the real world and uses the same robot controls?

- Can robots trained only in simulation work immediately in the real world (“zero-shot sim-to-real”)?

- Can we use the simulator to quickly fix robot mistakes after deployment?

- Can we fairly compare different robot policies in a consistent, realistic test environment?

- Can we make reinforcement learning in vision-based tasks work better by reducing the “visual gap” between sim and real?

Methods and Approach

What GSWorld is made of

GSWorld combines two things:

- Photo-realistic rendering: It uses a technique called “3D Gaussian Splatting.” Imagine rebuilding a room with lots of tiny, colored, fuzzy blobs that together form a sharp, realistic picture when viewed from any camera angle.

- Physics engines: These are the rules that make objects move, collide, and react like they do in real life (gravity, friction, contact).
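Under the hood, those "fuzzy blobs" are combined per pixel by alpha compositing: each Gaussian's projected 2D footprint gives it an opacity at that pixel, and the depth-sorted Gaussians are blended front to back. The minimal NumPy sketch below follows the standard 3DGS blending rule, not GSWorld's own renderer; the function names are illustrative, and a real renderer does this on the GPU for every pixel at once.

```python
import numpy as np

def splat_alpha(opacity: float, mean2d: np.ndarray, cov2d: np.ndarray, pixel: np.ndarray) -> float:
    """Opacity that one projected 2D Gaussian contributes at a given pixel."""
    d = pixel - mean2d
    return float(opacity * np.exp(-0.5 * d @ np.linalg.solve(cov2d, d)))

def composite_pixel(colors: np.ndarray, alphas: np.ndarray) -> np.ndarray:
    """Front-to-back blending of Gaussians already sorted near-to-far.

    colors: (N, 3) RGB of each Gaussian as seen from this camera.
    alphas: (N,) per-Gaussian opacity at this pixel, each in [0, 1].
    """
    pixel = np.zeros(3)
    transmittance = 1.0  # share of light still passing through the nearer Gaussians
    for c, a in zip(colors, alphas):
        pixel += transmittance * a * c
        transmittance *= 1.0 - a
        if transmittance < 1e-4:  # stop once the pixel is effectively opaque
            break
    return pixel
```

For example, a half-transparent red blob in front of an opaque blue one yields an even purple mix, `composite_pixel(np.array([[1., 0, 0], [0, 0, 1.]]), np.array([0.5, 1.0]))`, which gives `[0.5, 0, 0.5]`.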

A new asset format: GSDF

GSWorld introduces GSDF (Gaussian Scene Description File). A GSDF bundles:

- The realistic 3D “blob” model of rooms and objects,

- Robot descriptions (URDF — think of it like the robot’s blueprint),

- Collision shapes and material properties (e.g., how slippery or heavy something is).

This is meant to make it easy to share and reuse scenes and robots across different simulators.
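To make the bundle concrete, here is a hypothetical sketch of what a GSDF-like scene record could hold, written as Python dataclasses. The field names, file names, and JSON serialization are our own assumptions for illustration; they are not the official GSDF specification.

```python
import json
from dataclasses import asdict, dataclass, field

# Hypothetical schema for illustration only; not the official GSDF spec.
@dataclass
class PhysicsProps:
    mass_kg: float            # e.g. measured by weighing the real object
    friction: float = 0.5     # hypothetical default value

@dataclass
class GSDFObject:
    name: str
    gaussians_file: str       # hypothetical path to the object's Gaussian splats
    collision_mesh_file: str  # mesh the physics engine uses for contacts
    pose_xyz_wxyz: tuple[float, ...]  # position + quaternion in the scene frame
    physics: PhysicsProps

@dataclass
class GSDFScene:
    background_gaussians_file: str
    robot_urdf_file: str       # robot kinematics/dynamics (URDF)
    robot_gaussians_file: str  # Gaussians attached to the robot's links
    objects: list[GSDFObject] = field(default_factory=list)

    def validate(self) -> None:
        """Basic sanity checks before handing the scene to a simulator."""
        for obj in self.objects:
            if obj.physics.mass_kg <= 0:
                raise ValueError(f"{obj.name}: mass must be positive")
            if len(obj.pose_xyz_wxyz) != 7:
                raise ValueError(f"{obj.name}: pose needs 3 position + 4 quaternion values")

    def to_json(self) -> str:
        """Serialize the whole scene (nested dataclasses included) for sharing."""
        return json.dumps(asdict(self), indent=2)
```

Keeping visuals, URDF, collision geometry, and physical properties in one record is what lets a single file be loaded by different simulator backends, though how each backend interprets the physics fields is a separate question.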

Building a “digital twin” of the real world

To train robots well, GSWorld carefully matches the real world. Here’s how:

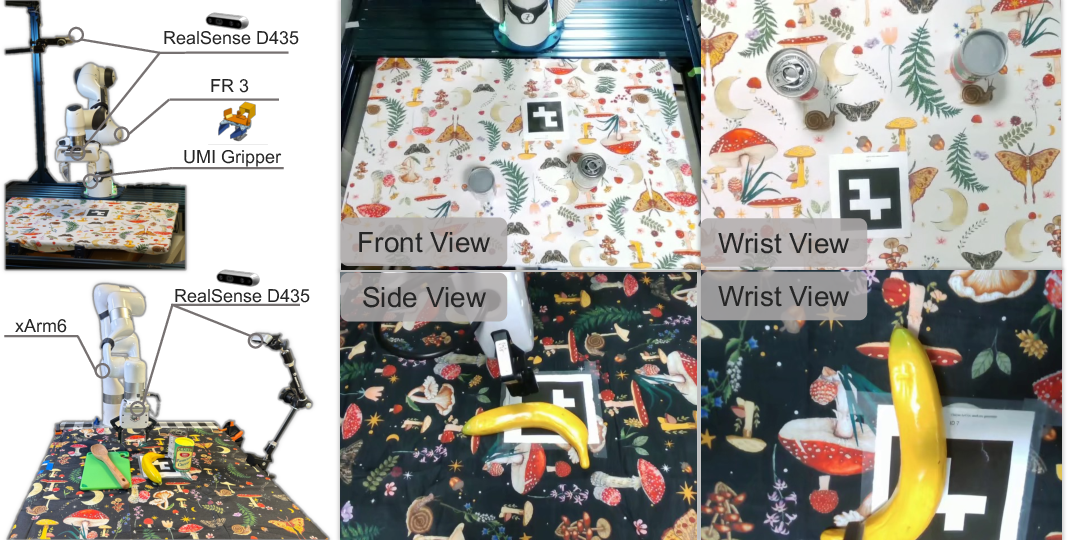

- Multi-view capture: They record the scene from several cameras (wrist camera on the robot and external cameras), along with the robot’s joint positions.

- Setting the correct size: They place special printed markers (ArUco markers — like simple QR codes) on the table. These help align the scale so objects are the right size in the simulator.

- Aligning the robot: They match the real robot to the simulated robot using a method called ICP (Iterative Closest Point). This is like sliding and rotating two 3D shapes until they line up as closely as possible.

- Adding objects: They reconstruct objects from photos, estimate physical properties (like mass), and optionally fill in unseen parts. Objects from known datasets are also included.

- Matching cameras and controls: The simulator uses the same camera positions and robot control commands as the real robot. This avoids translation errors and makes it much easier to transfer policies from sim to real.
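The scale step works because a photo-based reconstruction has no built-in notion of metres, while the printed marker's side length is known. A minimal sketch, assuming the marker's four corners have already been located in the reconstruction (for example by detecting the marker in several posed images and triangulating, a step not shown and not necessarily the paper's exact procedure):

```python
import numpy as np

def metric_scale_from_marker(corners_recon: np.ndarray, side_length_m: float) -> float:
    """Scale factor mapping reconstruction units to metres.

    corners_recon: (4, 3) marker corners in reconstruction units, ordered around the square
                   (assumed already triangulated; hypothetical upstream step).
    side_length_m: printed marker side length, measured with a ruler.
    """
    edges = np.linalg.norm(corners_recon - np.roll(corners_recon, -1, axis=0), axis=1)
    return side_length_m / edges.mean()

def rescale_gaussians(means: np.ndarray, scales: np.ndarray, s: float):
    """Apply a uniform scale s: centres and per-axis extents scale by s (covariances by s**2)."""
    return means * s, scales * s
```

The same factor must also be applied to the reconstructed camera positions, otherwise the simulated cameras would no longer sit where the real ones are.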

Closing the loop

“Closed-loop” means the same simulator supports all stages:

- Train a robot policy,

- Evaluate it,

- Replay failures,

- Collect corrective data,

- Retrain to fix mistakes.

Because the simulator looks realistic and controls the robot through its native action space, improvements made in simulation are meant to carry over to the real robot with little extra work.
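The train, evaluate, correct, retrain cycle is essentially DAgger: roll out the learned policy, have an expert label the states it actually visits, add those labels to the dataset, and retrain. The toy sketch below shows only the loop structure. The 1-D "simulator", the linear policy, and every function name are hypothetical stand-ins; in the paper the expert role is played by a motion planner with privileged simulator state, and the policies are visual.

```python
import numpy as np

def expert(x):
    """Privileged expert: stands in for a planner that sees the full simulator state."""
    return -0.5 * x  # move halfway toward the goal at x = 0

def fit_policy(states, actions):
    """Behaviour cloning with a linear model a = w * x + b (least squares)."""
    A = np.stack([states, np.ones_like(states)], axis=1)
    w, b = np.linalg.lstsq(A, actions, rcond=None)[0]
    return lambda x: w * x + b

def rollout(policy, x0, steps=20):
    """Run a policy in the toy 1-D 'simulator' and return the visited states."""
    xs, x = [], x0
    for _ in range(steps):
        xs.append(x)
        x = x + policy(x)
    return np.array(xs)

def dagger(n_iters=5, seed=0):
    rng = np.random.default_rng(seed)
    # Round 0: expert demonstrations only.
    states = np.concatenate([rollout(expert, x0) for x0 in rng.uniform(-1, 1, 5)])
    actions = expert(states)
    policy = fit_policy(states, actions)
    for _ in range(n_iters):
        # Roll out the *learned* policy, including from start states the demos never covered,
        visited = np.concatenate([rollout(policy, x0) for x0 in rng.uniform(-2, 2, 5)])
        # then relabel every visited state with the expert's action, aggregate, and retrain.
        states = np.concatenate([states, visited])
        actions = np.concatenate([actions, expert(visited)])
        policy = fit_policy(states, actions)
    return policy, len(states)
```

The key design choice is that corrections are collected on the learner's own state distribution, which is exactly where a policy trained only on expert demonstrations tends to fail.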

Main Findings and Why They Matter

Here are the main results the authors report:

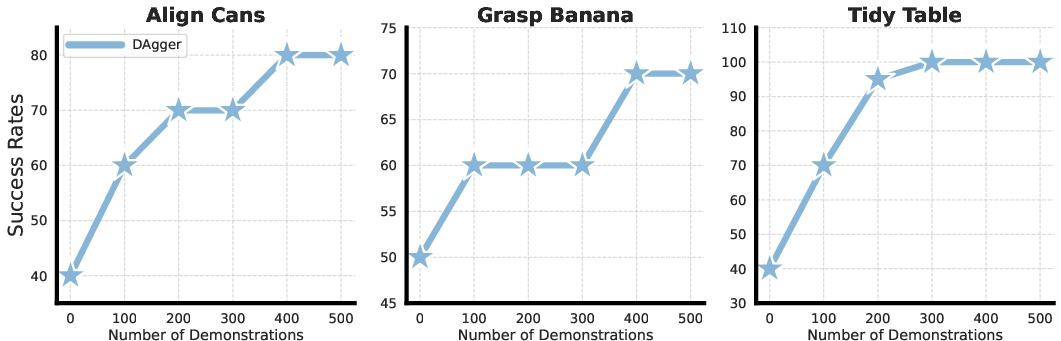

- Zero-shot sim-to-real works: Policies trained only in GSWorld can perform real tasks immediately on robots like FR3 and xArm6. This is important because it saves time and reduces the need for expensive, risky training on physical robots.

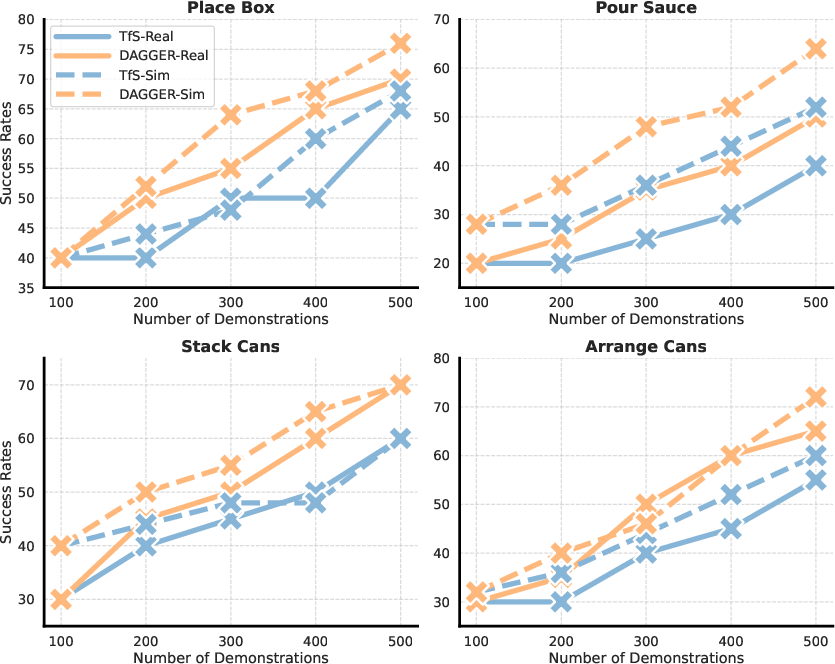

- DAgger improves policies fast: DAgger is a method where a “teacher” corrects the robot’s mistakes and adds those corrections to training data. GSWorld makes this easy by recreating failure states in the simulator and collecting high-quality corrections. Policies improved faster and more reliably than training from scratch.

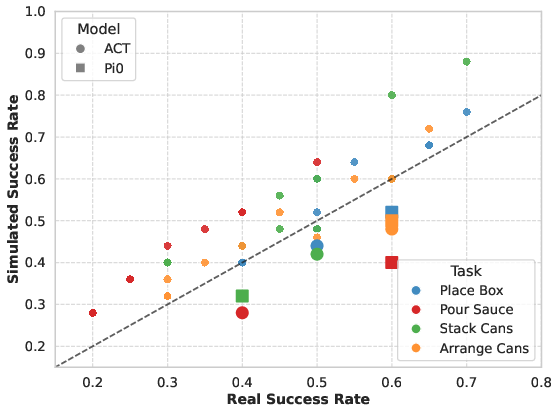

- Simulation performance predicts real performance: Results in GSWorld strongly correlate with real-world outcomes. That means you can test in sim and trust the results, which makes research faster and more reproducible.

- Virtual teleoperation works: Humans can control robots inside the simulator using a keyboard and mouse (or a VR headset and controllers) to collect training data, without needing the real robot every time.

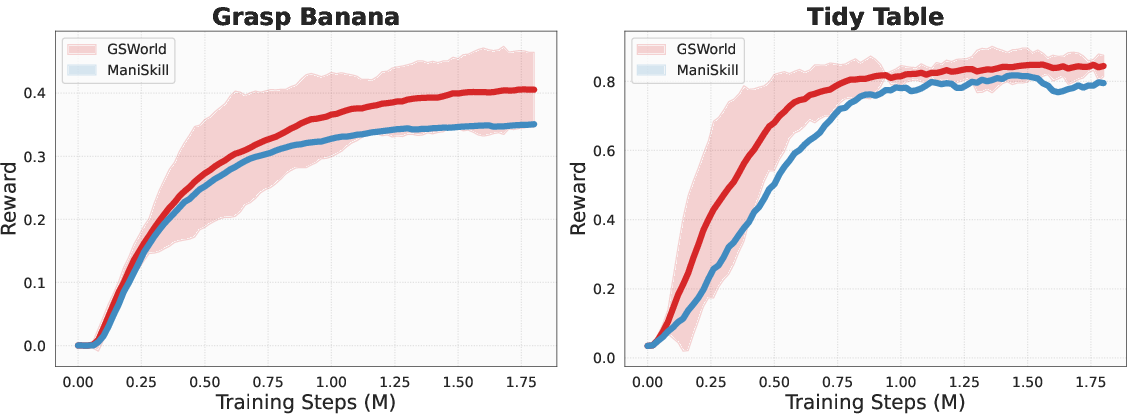

- Better visual reinforcement learning: By reducing the “visual gap” (how different simulated images look compared to real ones), GSWorld improved RL success rates on real tasks compared to a baseline simulator.
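One simple way to check whether "sim performance predicts real performance" is to correlate per-policy success rates and check that both settings rank the policies the same way. This summary does not restate the paper's exact metric, so the sketch below assumes Pearson's r plus a ranking check; the function name is hypothetical.

```python
import numpy as np

def sim_real_agreement(sim_success, real_success):
    """Pearson correlation and ranking agreement between per-policy success rates.

    sim_success, real_success: success rates of the same policies, in the same order.
    (Assumed metrics for illustration; not necessarily the paper's.)
    """
    sim = np.asarray(sim_success, dtype=float)
    real = np.asarray(real_success, dtype=float)
    r = float(np.corrcoef(sim, real)[0, 1])
    # Does the simulator order the policies the same way the real robot does?
    # argsort breaks ties by position, so tied success rates can make this False spuriously.
    same_ranking = bool(np.array_equal(np.argsort(sim), np.argsort(real)))
    return r, same_ranking
```

Usage with placeholder numbers (not results from the paper): `sim_real_agreement([0.2, 0.5, 0.8], [0.1, 0.4, 0.7])` returns r = 1.0 and a matching ranking, because the real rates are a constant offset of the simulated ones.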

Simple Explanations of Key Terms

To keep things clear, here are a few terms in everyday language:

- Sim-to-real: Train a robot in simulation, then run the same policy on a real robot.

- Zero-shot: No extra fine-tuning or fixes needed before using the policy in the real world.

- Imitation Learning (IL): The robot learns by copying expert demonstrations.

- Reinforcement Learning (RL): The robot learns by trial and error, getting rewards for good actions.

- DAgger: A training method where a teacher adds corrections when the robot is about to make mistakes, and the robot learns from those corrections.

- 3D Gaussian Splatting (3DGS): A way to build realistic 3D scenes from photos using lots of tiny colored blobs that render quickly and look like the real world.

- URDF: A robot’s blueprint describing its parts and joints.

- ArUco marker: A printed square pattern used to measure scale accurately in images.

- ICP: A method to align two 3D shapes by moving one until it matches the other.
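To make the ICP idea concrete, here is a minimal point-to-point ICP: repeatedly match each source point to its nearest target point, then solve for the best rigid rotation and translation with the SVD-based Kabsch method. This is a generic textbook version, not GSWorld's implementation; practical code would use a k-d tree for matching and reject outlier pairs.

```python
import numpy as np

def best_rigid_transform(src: np.ndarray, dst: np.ndarray):
    """Kabsch: R, t minimizing sum ||R @ src_i + t - dst_i||^2 over paired points."""
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    H = (src - mu_s).T @ (dst - mu_d)
    U, _, Vt = np.linalg.svd(H)
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])  # rule out reflections
    R = Vt.T @ D @ U.T
    t = mu_d - R @ mu_s
    return R, t

def icp(src: np.ndarray, dst: np.ndarray, iters: int = 30):
    """Generic point-to-point ICP aligning src (N, 3) onto dst (M, 3)."""
    R_total, t_total = np.eye(3), np.zeros(3)
    cur = src.copy()
    for _ in range(iters):
        # Brute-force nearest neighbour in dst for every current source point.
        d2 = ((cur[:, None, :] - dst[None, :, :]) ** 2).sum(axis=-1)
        matched = dst[d2.argmin(axis=1)]
        R, t = best_rigid_transform(cur, matched)
        cur = cur @ R.T + t
        # Compose the incremental step with the running transform.
        R_total, t_total = R @ R_total, R @ t_total + t
    return R_total, t_total
```

ICP only converges to the right answer from a reasonable starting guess, which is why sensitivity to initialization, occlusion, and low-texture or shiny surfaces matters for robot-to-scene alignment.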

Implications and Potential Impact

GSWorld’s approach has several important benefits:

- Saves time and money by reducing how much training needs to happen on real hardware.

- Makes robot learning safer because more practice happens in the simulator.

- Encourages fair, reproducible benchmarks, so improvements reflect better algorithms, not lucky setups.

- Helps teams share realistic assets and compare policies across different robots and tasks.

- Speeds up innovation: because training, evaluation, and fixing mistakes all happen inside one consistent, photo-realistic loop, researchers can iterate faster and deploy more reliable robot skills.

In short, GSWorld points toward a future where robots can learn complex, vision-based skills in rich, realistic simulations and then succeed in the real world with minimal extra work.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a consolidated, actionable list of what remains missing, uncertain, or unexplored in the paper, intended to guide future research.

- Photorealism under changing conditions: How robust are 3DGS renderings to changes in lighting, exposure, and shadows not seen during reconstruction? Investigate relighting/editable material pipelines for 3DGS and quantify transfer under illumination shifts.

- Challenging materials and geometry: Evaluate and improve handling of transparent/reflective objects, thin structures, specular highlights, and glossy surfaces where 3DGS often struggles; measure their impact on policy performance.

- Non-rigid and articulated visuals: Extend 3DGS assets to deformable objects and articulated mechanisms with view-consistent rendering during contact; assess sim2real for cloth, cables, bags, and lids.

- Physical parameter identification: Provide automated, scalable estimation of friction, restitution, damping, compliance, and contact parameters (beyond mass weighing); validate against real contact dynamics.

- Contact fidelity validation: Move beyond success rates to force/torque and trajectory-level sim–real agreement; define standardized metrics and benchmarks for contact-rich manipulation.

- Sensor realism and calibration: Model camera intrinsics/extrinsics errors, lens distortion, rolling shutter, auto-exposure/white balance, motion blur, and RGB-D noise; quantify how each factor affects transfer.

- Wrist-camera gap: The paper notes RL instability with wrist views; develop methods (stabilized egocentric viewpoints, active vision, regularization) and demonstrate vision-based RL with wrist cameras transferring to real.

- Scale alignment reliance: ArUco-based metric scaling may be brittle under occlusions/lighting; explore self-calibration via robot kinematics/hand–eye constraints and validate accuracy vs. markers.

- ICP alignment robustness: Characterize failure modes and error bounds of URDF–scene ICP (low texture, occlusions, shiny surfaces); provide uncertainty estimates and ablation on downstream policy sensitivity.

- Unobserved surfaces and inpainting: Systematically evaluate bottom surface completion (inpainting/amodal generation) on visual fidelity and collision accuracy; create automated validation tests.

- GSDF portability across engines: Quantify how differences between physics backends (contact solvers, integrators) affect sim2real; define a minimal GSDF standard mapping consistently to multiple engines.

- Computational performance and latency: Report FPS, per-env GPU/CPU memory, and latency budgets for closed-loop control with N parallel environments and multi-camera setups; analyze how latency affects control stability.

- Vision-based RL claims: Current RL actor uses proprioception only; demonstrate truly visual RL policies (actor conditioned on images), including wrist views, and perform controlled sim2real comparisons and ablations.

- Domain randomization for 3DGS: 3DGS is hard to relight; develop principled appearance randomization (relighting, BRDF perturbation, camera noise) and measure gains vs. standard 3D rendering DR.

- Benchmarking external validity: Correlation results use few tasks and 10 trials per policy; provide statistical power analyses, confidence intervals, cross-lab replication, and stress tests (camera pose/lighting perturbations).

- Task and embodiment diversity: Broaden beyond 7 tabletop tasks and 3 embodiments to long-horizon assembly, force-sensitive operations, deformables, multi-stage plans, and mobile manipulation; include bimanual learning results (not just teleop).

- Dynamic scene updates: Support online GSDF updates (object/camera/lighting changes), loop-closure, and re-localization; measure how stale reconstructions degrade performance over time.

- Multi-modal sensing: Integrate force–torque, tactile, audio, and depth in the closed loop; assess gains and alignment issues across sim and real for these modalities.

- Controller/action semantics: Standardize cross-embodiment action conventions (frames, impedance, gains) and quantify the impact of controller latency/impedance mismatches; evaluate torque-level policies in sim2real.

- DAgger realism gap: Simulated privileged resets may not reflect real recoverability; study how sim-collected corrections translate on hardware, and develop safe, realistic recovery protocols.

- Collision mesh fidelity: Validate 2DGS-derived meshes against precise scans; quantify tolerances for collision mesh–visual mismatch and their impact on grasping/stability.

- Camera calibration procedures: Provide detailed, reproducible camera calibration and synchronization pipelines (time-stamping, multi-camera extrinsics), with error bars and their effect on transfer.

- Robustness to reconstruction failures: Characterize failure modes in COLMAP/3DGS (texture-poor scenes, motion blur), add automated diagnostics, and propose fallback strategies (fiducials, active capture).

- Fluid and complex physics: “Pour Sauce” likely approximates fluids; introduce and evaluate fluid/particle simulators and corresponding photorealistic rendering for liquid tasks.

- Artifact overfitting: Assess whether policies exploit 3DGS artifacts (floaters, holes); test on held-out real scenes and deliberately perturbed reconstructions to detect shortcut learning.

- Asset release and coverage: Detail GSDF dataset scale, diversity, licensing, and documentation; provide standardized procedures/tools to add new robots/objects/scenes with quality guarantees.

- Similarity metrics specification: The paper mentions a “suite of metrics” for visual/geometric/functional similarity but does not define them; publish metric definitions, thresholds, and reference baselines.

- Egocentric exploration stability: Develop exploration strategies, action-space constraints, or curriculum designs that prevent egocentric RL collapse and improve sim2real with wrist cameras.

- Sensitivity/uncertainty analysis: Quantify how errors in scale, extrinsics, mesh, and physics parameters propagate to task success; provide uncertainty-aware training/evaluation protocols.

- Determinism and reproducibility: Ensure cross-engine determinism, seed control, and bitwise reproducibility for rendering and physics; document known nondeterminisms and their mitigation.

- Teleoperation realism: Compare keyboard/mouse and VR teleop to real teleop with/without haptics; study how teleop modality affects demonstration quality and downstream policy transfer.

- Real-time closed-loop constraints: Report end-to-end latency (render → observe → act) and its variability; analyze control stability margins and design latency-robust controllers.

- Multi-robot interactions: Evaluate scenarios with two or more robots interacting in one GSDF; study perception interference, contact modeling, and policy coordination across embodiments.
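The benchmarking item above asks for confidence intervals because 10 trials per policy leave a lot of uncertainty. A standard way to quantify it is the Wilson score interval for a binomial success rate, sketched here (z = 1.96 gives roughly 95% coverage):

```python
import math

def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial success rate."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p = successes / trials
    denom = 1 + z**2 / trials
    center = (p + z**2 / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    return max(0.0, center - half), min(1.0, center + half)
```

For example, `wilson_interval(5, 10)` is about (0.24, 0.76), and even a perfect 10/10 only bounds the true rate below at about 0.72, so two policies whose 10-trial success rates differ by 20 points may not be distinguishable.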

Practical Applications

Practical, real-world applications of GSWorld

GSWorld introduces a closed-loop, photo-realistic simulation suite for robotic manipulation by fusing 3D Gaussian Splatting (3DGS) with physics engines, standardized GSDF assets, and a bidirectional real-to-sim-to-real workflow. Its core innovations—photo-realistic rendering with native action-space control, streamlined reconstruction with metric scale alignment, automated DAgger data collection, reproducible visual benchmarking, and scalable visual RL—unlock a range of practical applications across industry, academia, policy, and daily life.

Below, applications are grouped into immediate and long-term opportunities, with sectors, potential tools/workflows, and key assumptions/dependencies noted for each.

Immediate Applications

The following applications can be deployed now with the assets and code described in the paper.

- Zero-shot sim-to-real visual imitation learning for standard manipulation tasks
  - Sectors: robotics, manufacturing, logistics
  - Use cases: picking/placing, kitting, light assembly, kitchen tasks; onboarding new tasks for existing arms (e.g., FR3, xArm6) without on-robot data collection
  - Tools/workflows: GSDF asset database; GSWorld Wrapper; ACT and Pi0 policies; ManiSkill as backend; motion planning via MPlib; object libraries (DTC, YCB)
  - Assumptions/dependencies: accurate URDFs; aligned camera intrinsics/extrinsics; ArUco scale calibration; predominantly rigid objects; sufficient GPU resources; alignment of observation and action spaces to robot APIs

- Closed-loop DAgger correction and continuous policy improvement in deployment environments
  - Sectors: robotics, manufacturing, logistics; academia
  - Use cases: post-deployment failure diagnosis and targeted data collection; rapid iteration cycles with failure replay and recovery state sampling
  - Tools/workflows: DAgger loop with privileged simulation info; scripted motion planning; automated failure replays; aggregated sim+real datasets
  - Assumptions/dependencies: scenes that can be reconstructed into GSDF; access to expert or scripted policies; motion planner coverage for tasks; reliable failure state capture

- Reproducible visual benchmarking of manipulation policies and vision-language-action (VLA) base models
  - Sectors: academia, industry R&D
  - Use cases: apples-to-apples comparisons across robots/scenes; ablation studies; evaluation of ACT/Pi0 variants with correlated sim-real performance
  - Tools/workflows: shared GSDF assets with fixed camera intrinsics/extrinsics; standardized action semantics and tasks in ManiSkill; benchmarking scripts
  - Assumptions/dependencies: consistent lighting/materials; community agreement on metrics; task suites that reflect realistic task distributions

- Virtual teleoperation for scalable simulated data collection
  - Sectors: academia, education, industry R&D
  - Use cases: keyboard/mouse teleop for quick data bootstrapping; VR teleop (e.g., HTC Vive) to collect demonstrations; remote annotation workflows
  - Tools/workflows: ManiSkill click-and-drag teleop; triad-openvr + SteamVR; ACE-F for xArm6; dataset logging of states and visuals
  - Assumptions/dependencies: operator availability; safe policy execution constraints; latency/streaming considerations; consistent mapping between teleop EE poses and robot control modes

- Visual RL training with reduced sim-to-real gap
  - Sectors: robotics, software/ML
  - Use cases: training SAC-like policies with privileged critic info and photorealistic observations; faster convergence via parallelism on a single GPU
  - Tools/workflows: asymmetric SAC; GSWorld parallelization applied only to moving Gaussians; domain randomization (e.g., color jitter); third-person camera setups
  - Assumptions/dependencies: GPU memory and throughput; observation selection (third-person over wrist cams for exploration); minimal domain randomization may limit generalization beyond tested tasks

- Rapid onboarding of new workcells via streamlined GSDF creation
  - Sectors: system integration, manufacturing engineering, robotics consulting
  - Use cases: quick digital twin creation for new stations; alignment of robot URDFs to scene; physics-ready assets for training and validation
  - Tools/workflows: ArUco-based metric scale alignment; ICP for robot/object transforms; K-NN segmentation of robot links; 2DGS for custom objects; mass weighing; optional amodal inpainting of unobserved object regions
  - Assumptions/dependencies: clear line-of-sight for multi-view captures; static backgrounds during reconstruction; access to basic material and collision properties

- Pre-deployment safety rehearsal and failure diagnosis in a digital twin
  - Sectors: safety engineering, policy/compliance
  - Use cases: validating grasp strategies and motion plans; collision checks; step-through diagnosis of failure frames; documenting mitigations before physical deployment
  - Tools/workflows: replay of failures inside the twin; collision mesh and gravity alignment; standardized action semantics for reproducible logs
  - Assumptions/dependencies: sufficient physics fidelity for contact dynamics; hazard models; safety officer oversight; clear acceptance criteria

- Training dataset augmentation with photorealistic sim frames aligned to robot actions
  - Sectors: software/ML, robotics
  - Use cases: augment real datasets with photorealistic sim-rendered frames that keep action alignment; improve data diversity with controlled domain randomization
  - Tools/workflows: GSWorld rendering wrapper; spherical-harmonics (SH) appearance model; controlled lighting/material variations; augmentation pipelines
  - Assumptions/dependencies: careful curation to avoid domain overfitting; labeling consistency; monitoring for dataset bias

- Cross-embodiment algorithm development and evaluation
  - Sectors: academia, industry R&D
  - Use cases: generalization studies across FR3, xArm6, and bimanual R1; embodiment-agnostic control strategy benchmarking; few-shot adaptation testing
  - Tools/workflows: multiple GSDF embodiments with consistent interfaces; Pi0/ACT training pipelines; fixed task APIs
  - Assumptions/dependencies: handling differences in kinematics and grippers; consistent calibration across embodiments

- Education and lab instruction using accessible digital twins
  - Sectors: education
  - Use cases: course assignments in manipulation; student teleop in photorealistic scenes; grading on reproducible benchmarks; lower-cost lab experiences
  - Tools/workflows: GSWorld-enabled gym environments; keyboard/VR teleop; shared object libraries; cloud-hosted simulation instances
  - Assumptions/dependencies: availability of modest compute; faculty familiarity with simulator stacks; institutional support for software deployment
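Because 3DGS scenes are hard to relight, the visual RL workflow above leans on image-space domain randomization such as color jitter. A minimal NumPy sketch applied to a rendered RGB frame; the jitter ranges and the function name are hypothetical choices, not values from the paper:

```python
import numpy as np

def color_jitter(img: np.ndarray, rng: np.random.Generator,
                 brightness: float = 0.2, contrast: float = 0.2, saturation: float = 0.2) -> np.ndarray:
    """Randomly jitter a float RGB frame (H, W, 3) with values in [0, 1].

    The default ranges are hypothetical; tune them per task.
    """
    out = img.astype(np.float64)
    # Brightness: scale all channels by one random factor.
    out = out * rng.uniform(1 - brightness, 1 + brightness)
    # Contrast: stretch or shrink values around the frame's mean intensity.
    mean = out.mean()
    out = (out - mean) * rng.uniform(1 - contrast, 1 + contrast) + mean
    # Saturation: blend each pixel toward or away from its grayscale value.
    gray = out @ np.array([0.299, 0.587, 0.114])
    out = (out - gray[..., None]) * rng.uniform(1 - saturation, 1 + saturation) + gray[..., None]
    return np.clip(out, 0.0, 1.0)
```

Jittering after rendering keeps the geometry and the action labels untouched, which is why it is cheap; it cannot, however, emulate moving shadows or specular changes, which is the relighting gap noted in the knowledge-gaps list.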

Long-Term Applications

These applications will benefit from further research, scaling, and ecosystem development.

- GSDF asset marketplace and standard registry
  - Sectors: industry platforms, academia
  - Use cases: sharing validated digital twins for common tasks; interoperable assets across simulators; licensing and provenance tracking
  - Tools/workflows: GSDF spec versions, validators, metadata schemas, hosting portals
  - Assumptions/dependencies: community adoption; IP rights and licensing; quality assurance and curation

- Sim-to-real certification frameworks for deployment
  - Sectors: policy/regulation, safety compliance
  - Use cases: establishing test suites and acceptance criteria; correlating sim metrics with real safety outcomes; procurement standards
  - Tools/workflows: standardized scenario coverage; sim-real correlation metrics; documented sim test artifacts
  - Assumptions/dependencies: regulatory buy-in; liability frameworks; consensus on meaningful metrics

- Largely simulation-driven training of generalist robot assistants
  - Sectors: consumer robotics, healthcare service robots, hospitality
  - Use cases: pre-train VLA base models in diverse photorealistic twins; adapt with minimal real data via DAgger and teleop; reduce human labeling burden
  - Tools/workflows: multi-scene GSDF libraries; generative domain randomization; mixed sim assets; scalable DAgger data generation
  - Assumptions/dependencies: modeling dynamic humans; ethics, privacy, and safety constraints; robust transfer to long-horizon, high-variability tasks

- Fully automated real2sim pipelines with minimal manual alignment
  - Sectors: robotics software, digital twin providers
  - Use cases: marker-less scale inference; automated robot/object segmentation; deformable physics parameter estimation (e.g., PhysTwin integration)
  - Tools/workflows: learned reconstruction and alignment; active robot-assisted scans; parameter estimation for contact and elasticity
  - Assumptions/dependencies: generalization across scenes and lighting; reliable perception under occlusions; scalable compute

- Factory and energy site digital twin operations platforms
  - Sectors: manufacturing, energy, utilities
  - Use cases: continuous policy improvement; virtual try-outs for new cells; predictive validation before line changes
  - Tools/workflows: GSWorld integrated with MES/PLM; simulation clusters; DAgger “studio” for corrective labeling; RL farms
  - Assumptions/dependencies: IT/security integration; uptime requirements; robust twin fidelity for complex machinery

- Cloud-based remote robotics services with sim-in-the-loop fallback
  - Sectors: logistics, field service, retail fulfillment
  - Use cases: remote operator pools; haptic/VR interfaces; sim preview before risky manipulation; fallback during network outages
  - Tools/workflows: low-latency streaming; task-level “shadow” twins; automated state reconciliation between sim and real
  - Assumptions/dependencies: network QoS; privacy/security; accurate state sync; operator training

- Photorealistic training for delicate healthcare tasks
  - Sectors: healthcare logistics, pharmacy automation, hospital supply chain
  - Use cases: training and validating manipulation in sterile environments; simulated risk assessment before ward deployment
  - Tools/workflows: high-fidelity physics tuning; strict material properties; safety validation suites; human-in-the-loop corrections
  - Assumptions/dependencies: stringent physics accuracy; regulatory constraints (e.g., FDA/EMA considerations); privacy controls for real captures

- Curriculum-scale adoption of digital twins in education
  - Sectors: education
  - Use cases: standardized lab modules; automated grading via reproducible benchmarks; remote learning labs; AR overlays for learning
  - Tools/workflows: GSDF asset libraries and sharing; cloud lab orchestration; LMS integration
  - Assumptions/dependencies: funding and compute availability; faculty training; student access policies

- Integration with generative 3D and amodal reconstruction
  - Sectors: software/ML, robotics
  - Use cases: synthesize unseen objects and occluded surfaces; text-to-GSDF workflows; augment task diversity at scale
  - Tools/workflows: 3D object generation; amodal completion; simulation-aware filters for physics realism
  - Assumptions/dependencies: reliability of generative models; mitigating physics mismatches; bias and safety checks

- Procurement, finance, and ROI modeling informed by standardized benchmarks
  - Sectors: finance, strategy, procurement
  - Use cases: sim-based comparative trials of robot vendors; TCO projections from benchmark performance; investment decision support
  - Tools/workflows: KPI dashboards; cost-performance correlates from sim-real data; reproducible trial protocols
  - Assumptions/dependencies: vendor participation; accurate cost capture; acceptance of sim-derived evidence in decision processes

Glossary

- 3D Gaussian Splatting (3DGS): An explicit scene representation and rendering method that models scenes as collections of 3D Gaussians for fast, photorealistic rasterization. "couples 3D Gaussian Splatting (3DGS) with physics"

- Action space: The set of control commands a policy can issue, defined by the robot’s native interface. "operate in a mismatched action space"

- ArUco marker: A square fiducial marker used to determine absolute scale and pose in vision-based calibration. "include a printed ArUco marker"

- Asymmetric SAC: A variant of Soft Actor-Critic where the critic gets privileged (extra) information and the actor gets limited observations. "We trained asymmetric SAC"

- Bimanual manipulation: Robotic manipulation using two arms/hands to interact with objects. "single-arm and bimanual manipulation"

- Camera frustum: The pyramidal volume defining what the camera can see; used to cull out-of-view points before rendering. "removing points that lay outside the camera frustum."

- Camera intrinsics/extrinsics: Intrinsics are internal camera parameters; extrinsics define camera pose relative to the world. "fixed camera intrinsics/extrinsics"

- Center of mass: The point representing the average position of mass in an object; crucial for physical simulation. "including mass, center of mass, and inertia tensor"

- Closed-loop: A development cycle where training, evaluation, failure diagnosis, and relabeling happen in the same environment. "“Closed-loop” here means the same environment can be used to train, evaluate, diagnose failures, and relabel"

- COLMAP: A structure-from-motion and multi-view stereo pipeline used to initialize 3D reconstructions. "relies on COLMAP"

- Collision mesh: A simplified geometry used by physics engines to detect contacts and collisions efficiently. "attach collision meshes and material properties"

- Covariance matrix: A 3×3 matrix encoding the shape and orientation of each 3D Gaussian in splatting. "covariance matrix in the world frame."

- DAgger (Dataset Aggregation): An interactive imitation learning algorithm that iteratively collects expert labels on the states visited by the learned policy (in this work, notably failure states) and retrains on the aggregated data. "DAgger is a solution to this case where corrective data is used to train the model to adapt to failure cases."

- Differentiable rendering: Rendering where gradients can be computed with respect to scene parameters, enabling optimization from images. "proposes to optimize robot kinematics from differentiable rendering."

- Digital twin: A high-fidelity virtual replica of a real-world environment or system used for simulation and analysis. "physical world and its digital twin."

- Domain randomization: Varying visual/physical properties in simulation to improve robustness and reduce sim-to-real gaps. "domain randomization and mixed simulation"

- DoF (Degrees of Freedom): Independent axes of motion in a robot’s kinematic chain. "two 6-DoF arms."

- Gaussian-on-Mesh representation: A hybrid asset format combining Gaussian splats atop mesh geometry for photorealistic yet structured scenes. "infuses Gaussian-on-Mesh representation with robot URDF and other objects."

- GSDF (Gaussian Scene Description File): A standardized asset format bundling Gaussian-based visuals with robot/object metadata for simulators. "we term GSDF (Gaussian Scene Description File)"

- ICP (Iterative Closest Point): An algorithm to align two 3D point sets by iteratively minimizing distances between corresponding points. "perform an ICP to compute the rigid transform"

- Imitation Learning (IL): Learning policies from expert demonstrations rather than trial-and-error. "Simulation Data Collection and Visual Imitation Learning (IL)."

- Inertia tensor: A matrix describing how an object's mass is distributed, influencing rotational dynamics. "including mass, center of mass, and inertia tensor"

- Jacobian: The matrix of first-order partial derivatives of a mapping; in 3DGS, the Jacobian of the locally linearized camera projection is used to project each 3D covariance into image space. "where is the Jacobian of the projection matrix"

- Joint position control: A control mode where actions specify target joint angles directly. "We mainly use joint position control for the robots."

- K-NN (k-nearest neighbors): A simple proximity-based method used here for segmenting robot links in reconstructed point clouds. "we use K-NN to segment robot links in ."

- Kinematics: The geometry of motion describing joint configurations and end-effector poses without considering forces. "optimize robot kinematics from differentiable rendering."

- ManiSkill: A robotics simulation platform providing physics, tasks, and parallel environments. "using ManiSkill~\cite{taomaniskill3} as the simulator backend."

- MPlib: A motion planning library used to generate expert trajectories. "We leverage the MPlib motion planner"

- Motion planner: An algorithm that computes feasible paths or trajectories for the robot to achieve goals. "run the motion planner to obtain corrective data"

- Mujoco-Jax: A JAX-accelerated version of MuJoCo emphasizing simulation efficiency. "Mujoco-Jax~\cite{zakka2025-mujocojax} exploits just-in-time compilers"

- NeRFs (Neural Radiance Fields): Implicit neural representations for volumetric rendering of scenes from images. "use NeRFs"

- Opacity: The per-Gaussian alpha controlling transparency and compositing order during splatting. "alpha_i is the opacity of the Gaussian conditioned on ."

- Projection matrix: The camera matrix mapping 3D points to the image plane for rendering. "according to the projection matrix of the camera"

- Proprioception: Internal robot sensing (e.g., joint positions) used by policies alongside visual input. "robot proprioceptions "

- PyBullet: Python bindings for the Bullet real-time physics engine, widely used in robotics simulation. "combined 3DGS with PyBullet"

- Rasterization-based: Rendering via discrete projection and compositing rather than ray tracing; fundamental to 3DGS speed. "a rasterization-based method"

- Ray tracing: Physically-based rendering technique that simulates light paths for high-fidelity images. "ray-tracing techniques"

- Real-to-sim-to-real pipeline: A bidirectional workflow reconstructing real scenes into simulation and deploying trained policies back to hardware. "Our robust real-to-sim-to-real pipeline accurately aligns the simulation"

- Sim2real (simulation-to-real): Transferring policies trained in simulation to real-world deployment. "zero-shot sim2real policy transfer"

- Spherical harmonics: Basis functions used to encode view-dependent color in Gaussian splatting. "encoded with a spherical harmonics map"

- Surface fitting: Aligning geometric models to observed surfaces to estimate transforms and poses. "surface fitting (e.g., ICP)"

- URDF (Unified Robot Description Format): A standardized XML format describing robot geometry, kinematics, and dynamics. "aligns the robot URDF to the scene via surface fitting (e.g., ICP)."

- Virtual teleoperation: Human control of robots inside simulation, often via keyboard/mouse or VR, for data collection. "virtual teleoperation for data collection."

- Visual Benchmarking: Evaluating policies with standardized visual assets and setups to compare performance across scenes and robots. "reproducible visual benchmarking"

- Visual Reinforcement Learning (Visual RL): Learning policies from visual observations with reward-driven interaction. "Visual RL."
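For reference, the glossary entries for covariance matrix, Jacobian, projection matrix, and spherical harmonics point to the following standard 3DGS relations. They are written as in the original 3DGS formulation (Kerbl et al., 2023), not copied from this paper, whose symbols may differ. Here $R$ is a Gaussian's rotation, $S$ its diagonal scale matrix, $W$ the world-to-camera viewing transform, $J$ the Jacobian of the local affine approximation of the perspective projection, $\mathbf{d}$ the viewing direction, and $Y_l^m$ the real spherical-harmonic basis functions with per-Gaussian coefficients $k_{i,l}^m$ (degree $L = 3$ in the original 3DGS):

$$
\Sigma_i = R_i\,S_i\,S_i^{\top}R_i^{\top}, \qquad \Sigma_i' = J\,W\,\Sigma_i\,W^{\top}J^{\top}
$$

$$
\mathbf{c}_i(\mathbf{d}) = \sum_{l=0}^{L}\sum_{m=-l}^{l} k_{i,l}^{m}\,Y_l^{m}(\mathbf{d})
$$

Factoring $\Sigma_i$ into a rotation and a scale keeps it a valid (positive semi-definite) covariance during optimization, and $\Sigma_i'$ is the 2D covariance that determines each Gaussian's footprint, and hence its opacity, at every pixel.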
