- The paper introduces BugPilot’s FeatAdd method for naturally generating realistic, complex bugs that enhance SWE agent training with improved efficiency.

- It uses feature-driven code modifications that mimic actual development workflows, yielding a 2% performance gain while using half the training data of competing methods.

- The resulting bugs are diverse, difficult, and spread across multiple files, so they resemble real-world bugs more closely than those in traditional synthetic datasets.

BugPilot: Complex Bug Generation for Efficient Learning of SWE Skills

The paper "BugPilot: Complex Bug Generation for Efficient Learning of SWE Skills" introduces a method for generating complex, diverse software bugs to train software engineering agents. It focuses on creating realistic bugs through feature addition, which mimics real-world software development challenges more closely than existing synthetic methods do.

Introduction

Software engineering (SWE) tasks have seen significant advancements with LLM-based agents. However, these advancements rely heavily on proprietary models, and improving open-weight models remains difficult due to the lack of large, high-quality bug datasets. The paper addresses this by introducing BugPilot, emphasizing synthetic bug creation via realistic SWE workflows rather than intentional error injection.

BugPilot Methodology

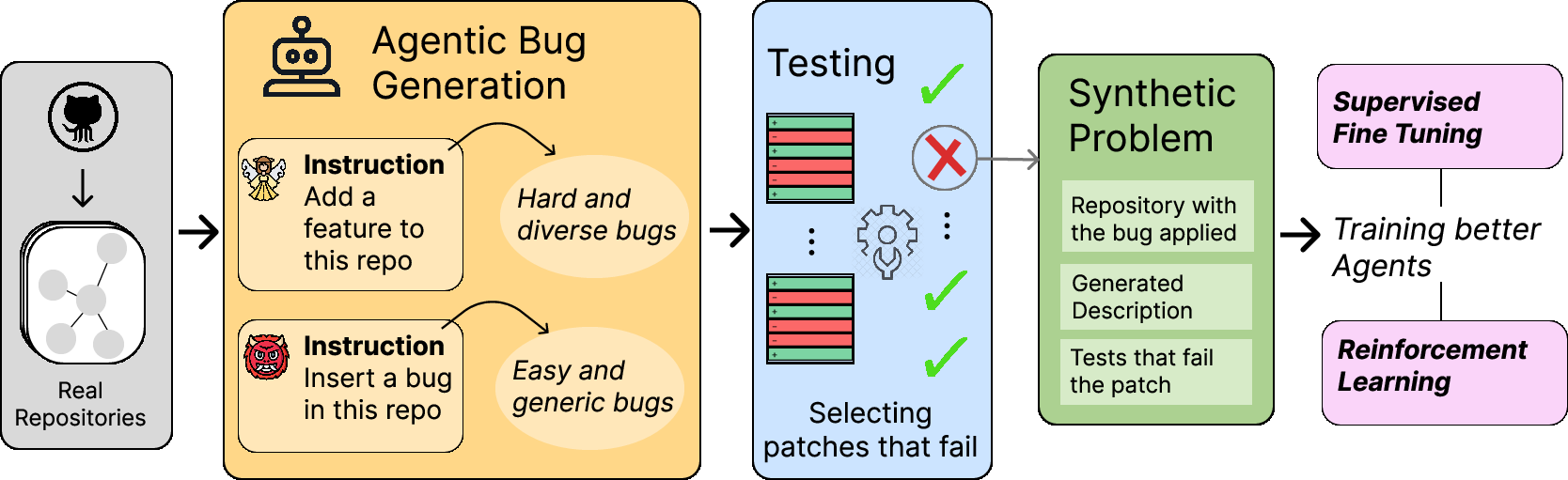

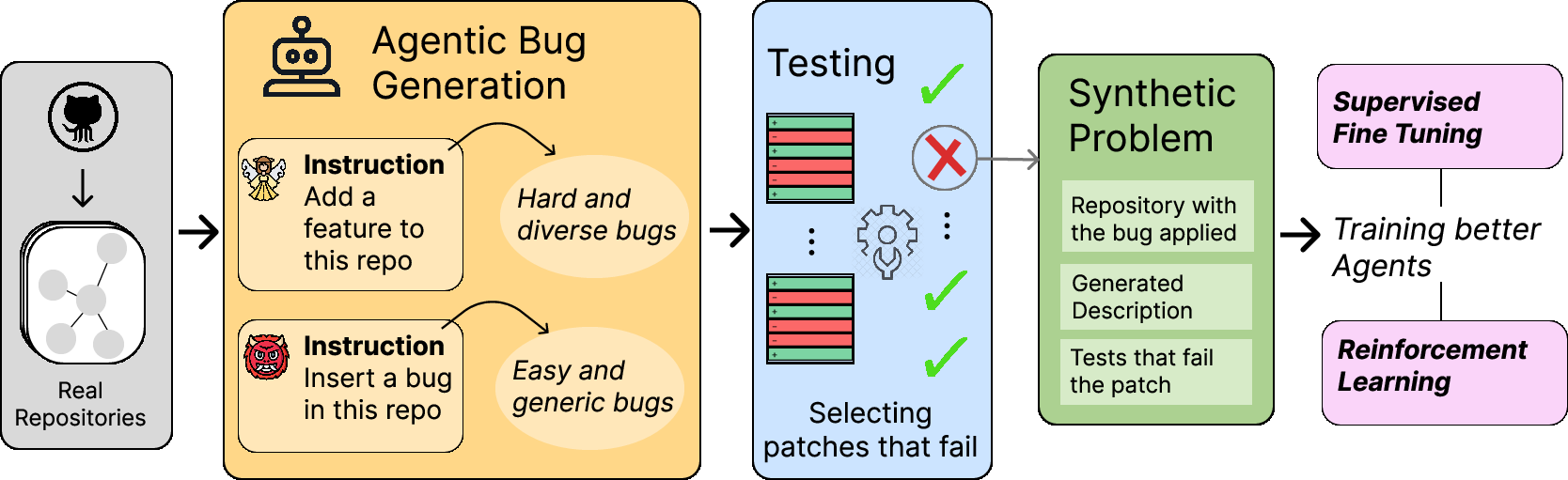

BugPilot centers on two strategies: BugInstruct and FeatAdd. BugInstruct intentionally embeds bugs into the codebase, whereas FeatAdd asks SWE agents to add new features, with bugs arising naturally whenever an addition inadvertently breaks the existing test suite.
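The FeatAdd acceptance criterion described above (keep a feature addition as a bug only if it breaks tests that used to pass) can be sketched as follows. This is a minimal illustration, not the paper's implementation: `SuiteRun`, `broken_tests`, and `is_unintended_bug` are hypothetical names, and treating a test that disappears after the change as broken is an assumption.

```python
from dataclasses import dataclass
from typing import Dict, Set


@dataclass(frozen=True)
class SuiteRun:
    """Hypothetical record of one test-suite run: test name -> passed?"""
    results: Dict[str, bool]

    def passing(self) -> Set[str]:
        return {name for name, ok in self.results.items() if ok}


def broken_tests(before: SuiteRun, after: SuiteRun) -> Set[str]:
    """Tests that passed before the feature was added but not afterwards.

    Assumption: a test that is missing from the `after` run counts as broken.
    Tests that are new in `after` are ignored, since they belong to the feature.
    """
    return {
        name for name in before.passing()
        if not after.results.get(name, False)
    }


def is_unintended_bug(before: SuiteRun, after: SuiteRun) -> bool:
    """A feature addition qualifies as a bug if it broke any existing test."""
    return len(broken_tests(before, after)) > 0


# Toy example: the feature adds test_new but regresses test_b.
before = SuiteRun({"test_a": True, "test_b": True, "test_c": False})
after = SuiteRun({"test_a": True, "test_b": False, "test_c": False, "test_new": True})
print(sorted(broken_tests(before, after)))
print(is_unintended_bug(before, after))
```

Comparing against the set of previously passing tests, rather than the full suite, keeps tests that were already failing from being misattributed to the new feature.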

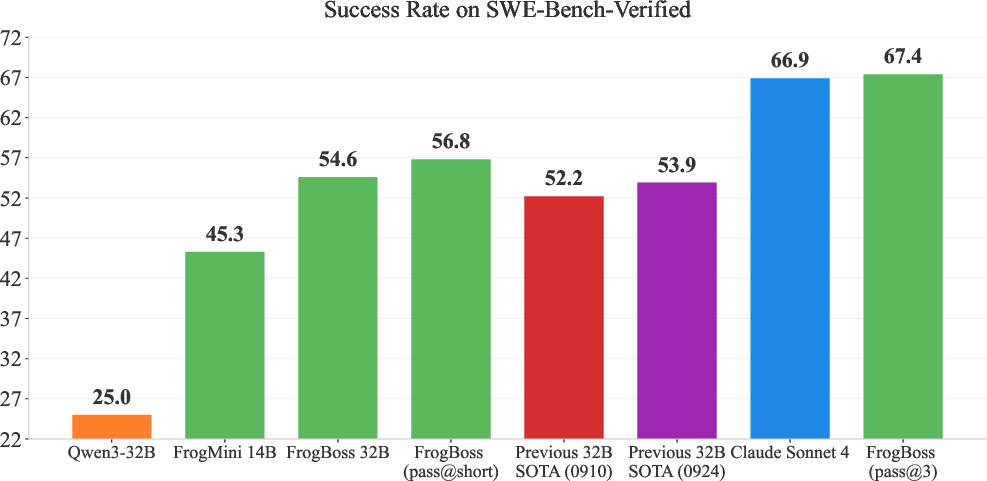

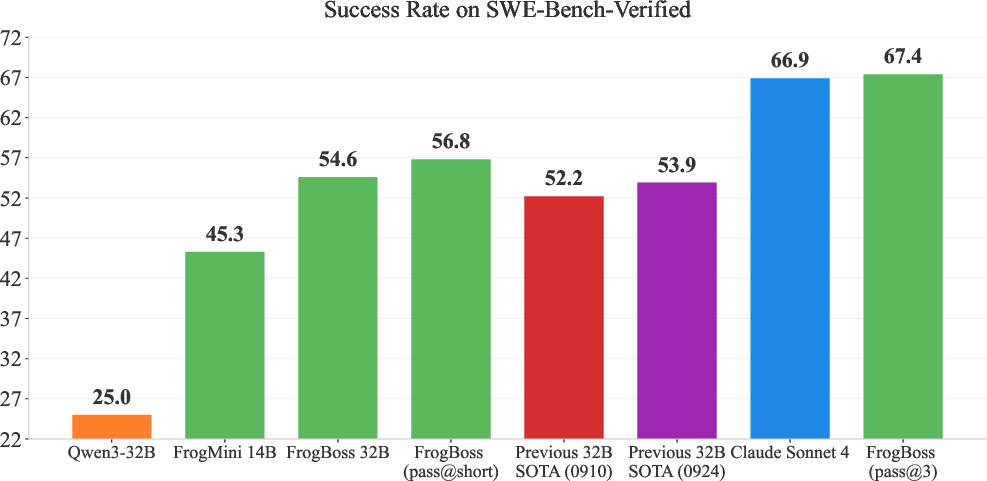

Figure 1: Comparison to previous SoTA results. FrogBoss achieves a 54.6% pass@1 performance, highlighting the efficacy of FeatAdd-generated data.

BugPilot's FeatAdd methodology aligns more closely with natural bug generation. In contrast to synthetic bugs from local code perturbations, FeatAdd involves larger, more complex code changes distributed across multiple files, mimicking authentic development processes.

Empirical evaluations show that FeatAdd bugs train models more efficiently than existing datasets, achieving a 2% performance increase with half the training data required by competing methods. FrogBoss, trained on FeatAdd-generated data, reaches state-of-the-art results on the SWE-Bench Verified dataset with a pass@1 of 54.6%.
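For readers unfamiliar with the metric, pass@1 is the fraction of benchmark tasks an agent resolves on its first attempt. The sketch below shows that arithmetic on made-up outcomes; `pass_at_1` is a hypothetical helper, and the input values are illustrative only, not results from the paper.

```python
from typing import Sequence


def pass_at_1(first_attempt_resolved: Sequence[bool]) -> float:
    """Fraction of tasks resolved on the first (and only) attempt."""
    if len(first_attempt_resolved) == 0:
        raise ValueError("need at least one task outcome")
    return sum(first_attempt_resolved) / len(first_attempt_resolved)


# Illustrative outcomes for four tasks: three resolved, one not.
outcomes = [True, False, True, True]
print(f"pass@1 = {pass_at_1(outcomes):.1%}")
```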

Figure 2: Illustration of the BugPilot pipeline involving agent-based feature addition.

Bug Characteristics and Dataset Analysis

Analyses show that FeatAdd bugs are more diverse and complex than those in synthetic datasets such as SWE-Smith. Their distribution closely mirrors real-world bugs, which makes them both more challenging and more effective for training SWE models.

A comparison of bug difficulty shows that FeatAdd bugs are the hardest among the tested methods: contemporary models see a significant drop in success rates on them, which suggests that these bugs are closer to the problems agents face in practice.

(Table 1)

Table 1: Bug statistics highlight that the FeatAdd approach leads to significantly larger changes in codebases.
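Change-size statistics of this kind (how many files and lines a bug-introducing patch touches) can be computed directly from a unified diff. The following is a rough sketch under stated assumptions: `patch_stats` is a hypothetical helper, the input is assumed to be a standard unified diff as produced by `git diff`, and the exact metrics reported in the paper's table may differ.

```python
import re
from typing import Dict, Set

# Hunk header, e.g. "@@ -10,2 +11,3 @@"; a missing count defaults to 1.
HUNK_RE = re.compile(r"^@@ -\d+(?:,(\d+))? \+\d+(?:,(\d+))? @@")


def _strip_prefix(path: str) -> str:
    # Git writes paths as a/<path> and b/<path>; drop that prefix.
    return path[2:] if path[:2] in ("a/", "b/") else path


def patch_stats(unified_diff: str) -> Dict[str, int]:
    """Count files changed, lines added, and lines removed in a unified diff."""
    files: Set[str] = set()
    added = removed = 0
    old_left = new_left = 0  # lines still expected in the current hunk
    for line in unified_diff.splitlines():
        if old_left > 0 or new_left > 0:
            # Inside a hunk, the first character classifies the line.
            if line.startswith("+"):
                added += 1
                new_left -= 1
            elif line.startswith("-"):
                removed += 1
                old_left -= 1
            elif line.startswith("\\"):
                pass  # "\ No newline at end of file" marker
            else:  # context line, present in both old and new file
                old_left -= 1
                new_left -= 1
            continue
        match = HUNK_RE.match(line)
        if match:
            old_left = int(match.group(1) or 1)
            new_left = int(match.group(2) or 1)
        elif line.startswith(("--- ", "+++ ")):
            path = line[4:].split("\t")[0].strip()
            if path != "/dev/null":
                files.add(_strip_prefix(path))
    return {"files_changed": len(files), "lines_added": added, "lines_removed": removed}
```

Tracking the line counts announced in each hunk header, instead of only looking at the leading `+` or `-`, avoids mistaking a removed line that itself starts with `--` for a file header.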

Implications and Future Work

BugPilot's contribution lies in its ability to generate high-quality training data at scale, which is essential for advancing open-weight SoTA LLMs on SWE tasks. Future work could target the generation of specific bug types to strengthen agent capabilities in particular domains, and could extend beyond bug fixing to other SWE tasks such as test generation.

Conclusion

The BugPilot framework provides a novel approach to synthetic bug generation that improves both the learning efficiency and the performance of SWE agents. Because its bugs arise from realistic development workflows, BugPilot is a useful resource for advancing open-weight LLMs toward more resilient and adaptable SWE systems in real-world applications.