- The paper presents a dual-network approach that integrates Deep-UNet segmentation with a transformer-based metadata module to improve diagnostic accuracy.

- It uses data balancing and augmentation to counter class imbalance in the classification datasets, and its segmentation network achieves improved Dice and IoU scores.

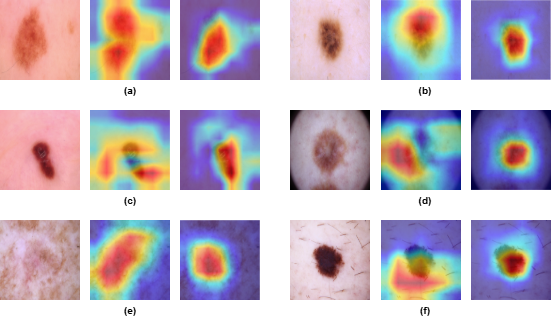

- Grad-CAM visualizations indicate that the model's attention concentrates on lesion areas, which improves interpretability and can increase clinical trust.

Introduction

The paper addresses the challenge of automating skin cancer diagnosis from dermoscopic images. The task is made difficult by high intraclass variability and subtle interclass differences. The approach combines lesion segmentation and clinical metadata fusion within a dual-network, attention-based framework to improve both accuracy and interpretability. The model consists of two parts. The first is a novel Deep-UNet for lesion segmentation. The second is a classification network built on DenseNet201 encoders and a transformer-based metadata embedding module. The classifier receives both the original image and the segmented lesion. This dual-input design directs its focus to the relevant pathological regions, which may increase trust in its predictions.

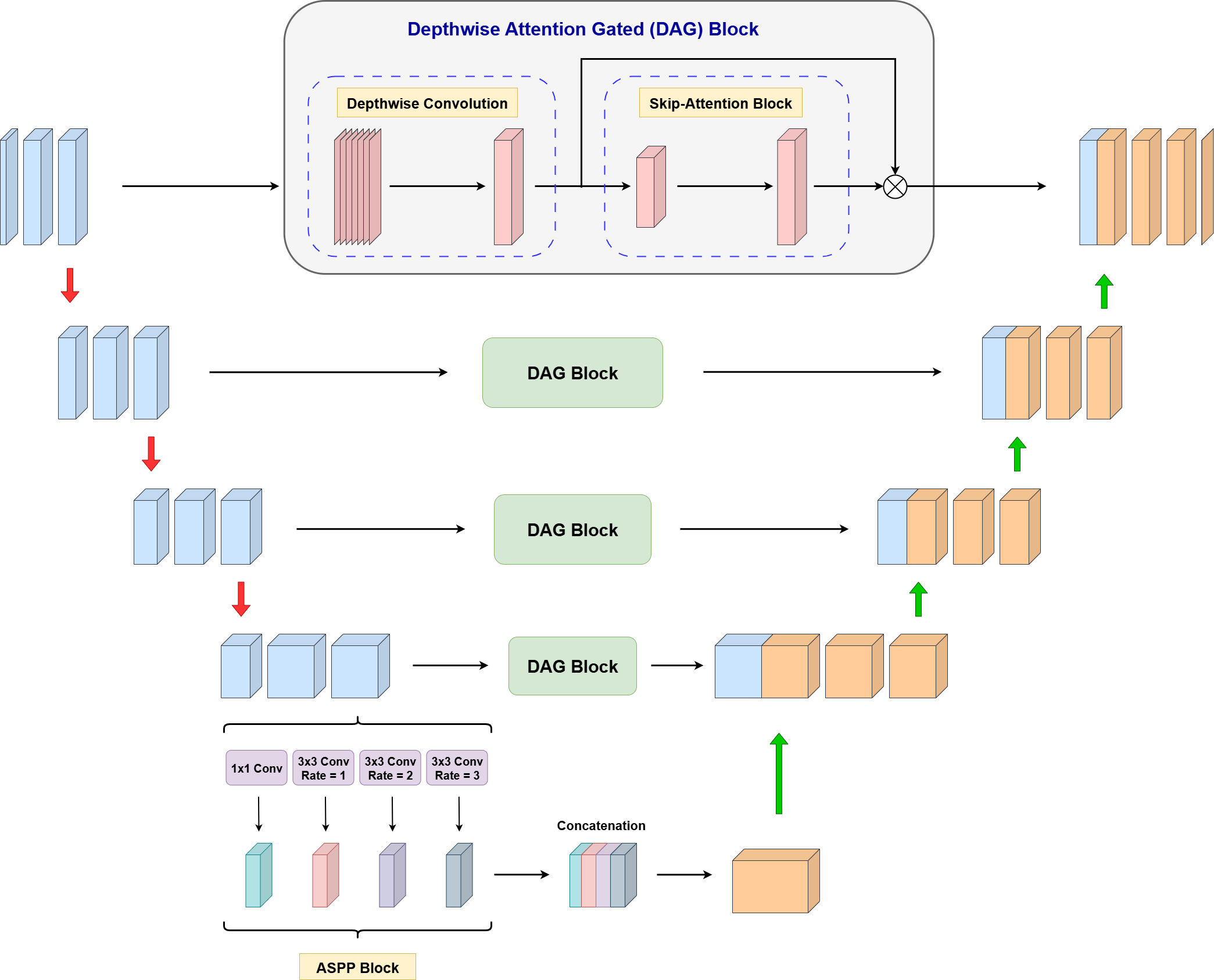

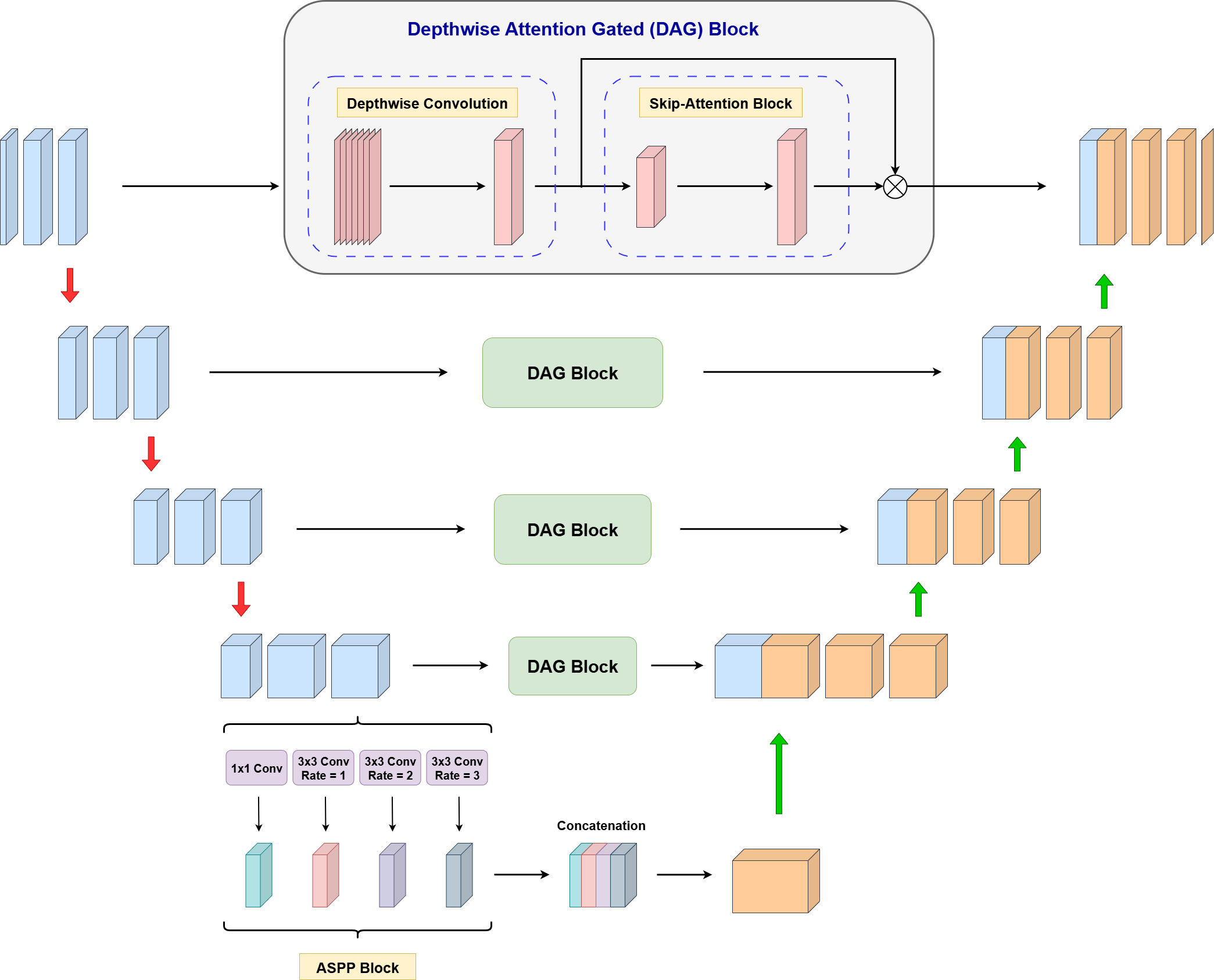

Deep-UNet Architecture for Segmentation

The Deep-UNet developed in this work extends the standard U-Net in three ways: an EfficientNet-B3 encoder, Dual Attention Gates (DAG), and Atrous Spatial Pyramid Pooling (ASPP). Together these extract multi-scale features and focus the network on lesion details.

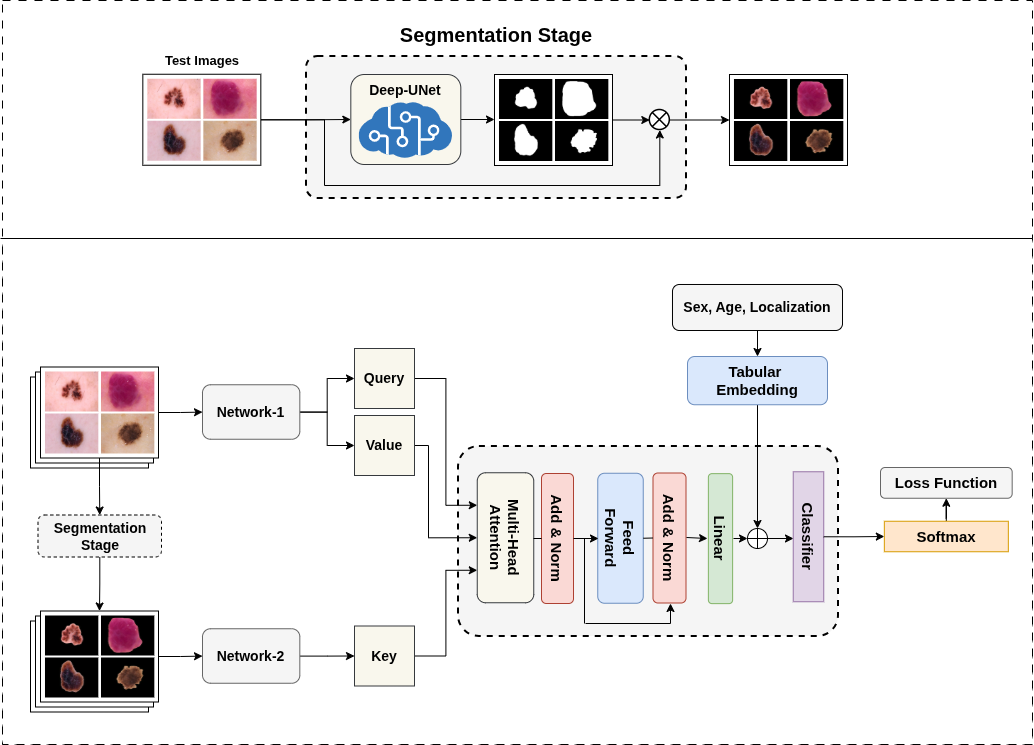

Figure 1: Overview of the proposed Deep-UNet architecture for skin lesion segmentation. The model uses an EfficientNet-B3 encoder, an ASPP bottleneck for multi-scale feature extraction, and a decoder with Depthwise Attention Gated (DAG) skip connections and deep supervision outputs.
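ASPP samples context at several scales by running parallel convolutions with different dilation (atrous) rates. A k×k kernel dilated by rate r spans k + (k − 1)(r − 1) pixels per side while keeping only k² weights. Padding of r(k − 1)/2 preserves the feature-map size, so the branch outputs can be concatenated. The short sketch below only does this arithmetic. The rates (6, 12, 18) are DeepLabv3-style defaults and the 19×19 bottleneck size is hypothetical; the summary does not give the paper's actual values.

```python
# Dilated (atrous) convolution arithmetic behind an ASPP bottleneck.
# The rates and feature-map size are hypothetical illustration values.

ASPP_RATES = (6, 12, 18)  # DeepLabv3-style defaults, assumed here


def effective_kernel_size(k: int, rate: int) -> int:
    """Pixels spanned per side by a k x k kernel dilated by `rate`."""
    return k + (k - 1) * (rate - 1)


def same_padding(k: int, rate: int) -> int:
    """Padding that preserves spatial size at stride 1 for odd k."""
    return rate * (k - 1) // 2


def conv_output_size(size: int, k: int, rate: int, padding: int, stride: int = 1) -> int:
    """Output length of a dilated convolution along one spatial axis."""
    return (size + 2 * padding - rate * (k - 1) - 1) // stride + 1


if __name__ == "__main__":
    feature_size = 19  # hypothetical bottleneck resolution (19 x 19)
    for r in ASPP_RATES:
        pad = same_padding(3, r)
        out = conv_output_size(feature_size, 3, r, pad)
        print(f"rate={r:2d} span={effective_kernel_size(3, r):2d}px padding={pad:2d} output={out}")
```

Every branch keeps the 19×19 size while covering a progressively wider context. That property is what lets an ASPP module fuse the branches channel-wise.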

The design captures fine-grained lesion details while handling wide variability in lesion appearance and boundaries. Training uses deep supervision: auxiliary outputs attached to intermediate decoder stages provide additional loss signals. These signals encourage the intermediate layers to learn meaningful features early in training. On the ISIC 2018 dataset, the model reports higher Dice coefficient and Intersection over Union (IoU) scores than the baseline models.
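For binary masks A (prediction) and B (ground truth), the two metrics are defined as follows:

- Dice = 2|A ∩ B| / (|A| + |B|)
- IoU = |A ∩ B| / |A ∪ B|

It follows that Dice = 2·IoU / (1 + IoU). The sketch below computes both metrics on flattened masks. It also shows one common form of deep supervision: a weighted sum of soft Dice losses over the main output and the auxiliary outputs. Three choices here are illustrative assumptions, not taken from the paper: the soft Dice loss, the head weights, and the premise that auxiliary outputs are already upsampled to the mask resolution.

```python
from typing import Sequence


def dice_coefficient(pred: Sequence[int], target: Sequence[int], eps: float = 1e-7) -> float:
    """Dice = 2|A ∩ B| / (|A| + |B|) for flattened binary masks (0/1 values)."""
    inter = sum(p * t for p, t in zip(pred, target))
    return (2 * inter + eps) / (sum(pred) + sum(target) + eps)


def iou_score(pred: Sequence[int], target: Sequence[int], eps: float = 1e-7) -> float:
    """IoU = |A ∩ B| / |A ∪ B| for flattened binary masks."""
    inter = sum(p * t for p, t in zip(pred, target))
    union = sum(pred) + sum(target) - inter
    return (inter + eps) / (union + eps)


def soft_dice_loss(probs: Sequence[float], target: Sequence[int], eps: float = 1e-7) -> float:
    """1 - Dice computed on predicted probabilities instead of hard masks."""
    inter = sum(p * t for p, t in zip(probs, target))
    return 1.0 - (2 * inter + eps) / (sum(probs) + sum(target) + eps)


def deep_supervision_loss(
    outputs: Sequence[Sequence[float]], target: Sequence[int], weights: Sequence[float]
) -> float:
    """Weighted sum of soft Dice losses. outputs[0] is the main head; the rest are
    auxiliary heads, assumed here to be upsampled to the target resolution already."""
    if len(outputs) != len(weights):
        raise ValueError("need one weight per output head")
    return sum(w * soft_dice_loss(o, target) for o, w in zip(outputs, weights))


if __name__ == "__main__":
    target = [1, 1, 0, 0]
    pred = [1, 0, 0, 0]
    print(dice_coefficient(pred, target), iou_score(pred, target))
    # Hypothetical weights: full weight on the main head, less on the auxiliary head.
    heads = [[0.9, 0.8, 0.1, 0.0], [0.6, 0.5, 0.3, 0.2]]
    print(deep_supervision_loss(heads, target, weights=[1.0, 0.5]))
```

Giving the auxiliary heads smaller weights keeps the main output dominant. The intermediate layers still receive a direct gradient signal.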

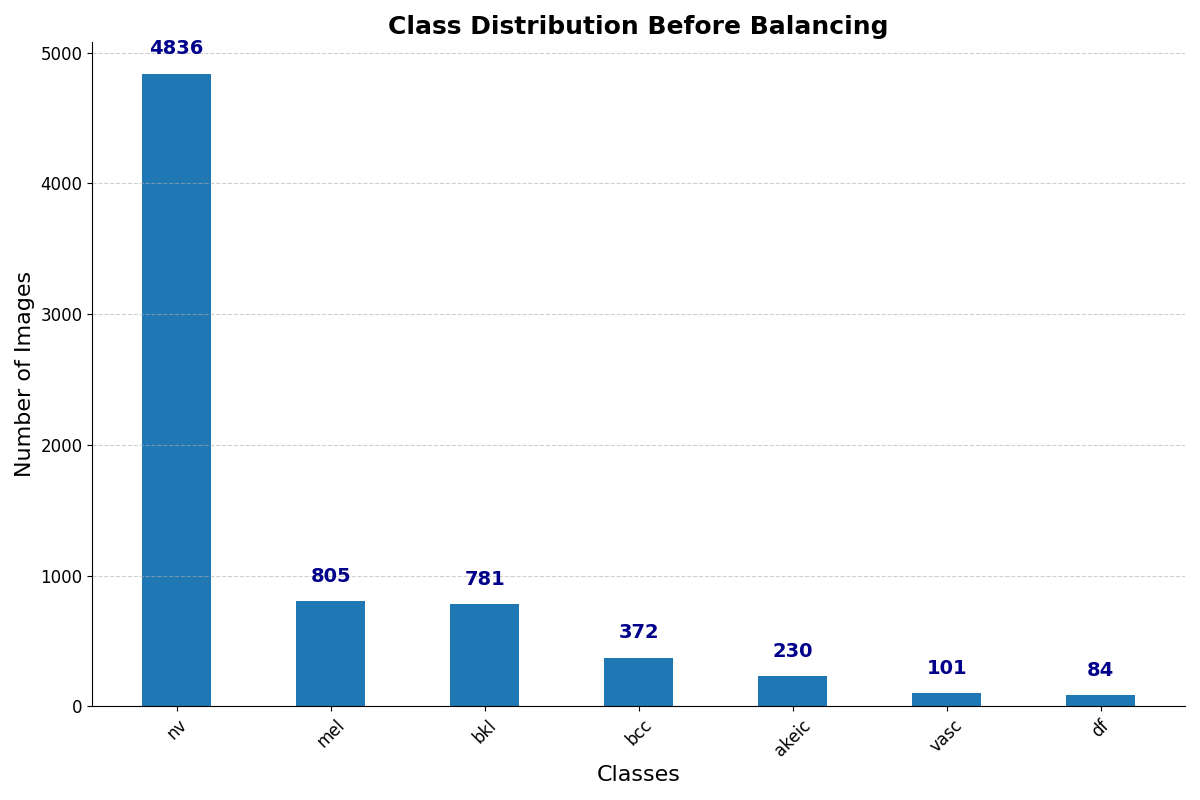

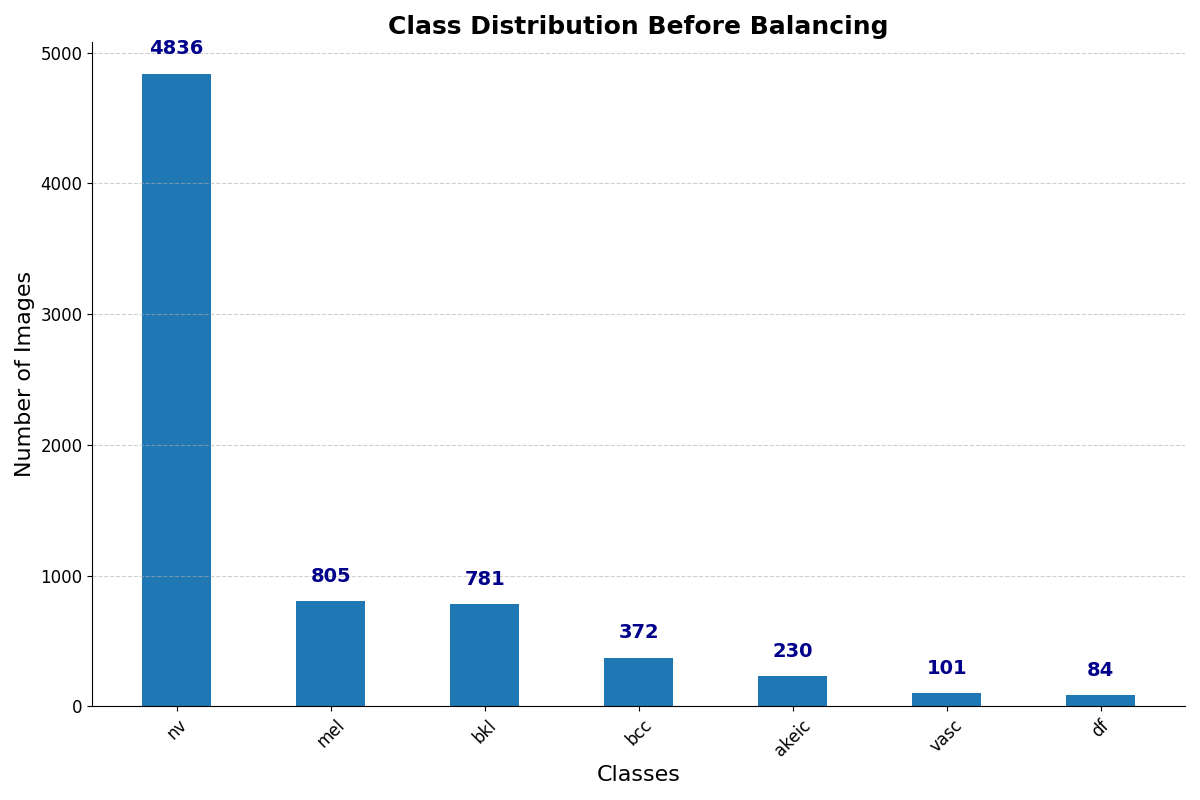

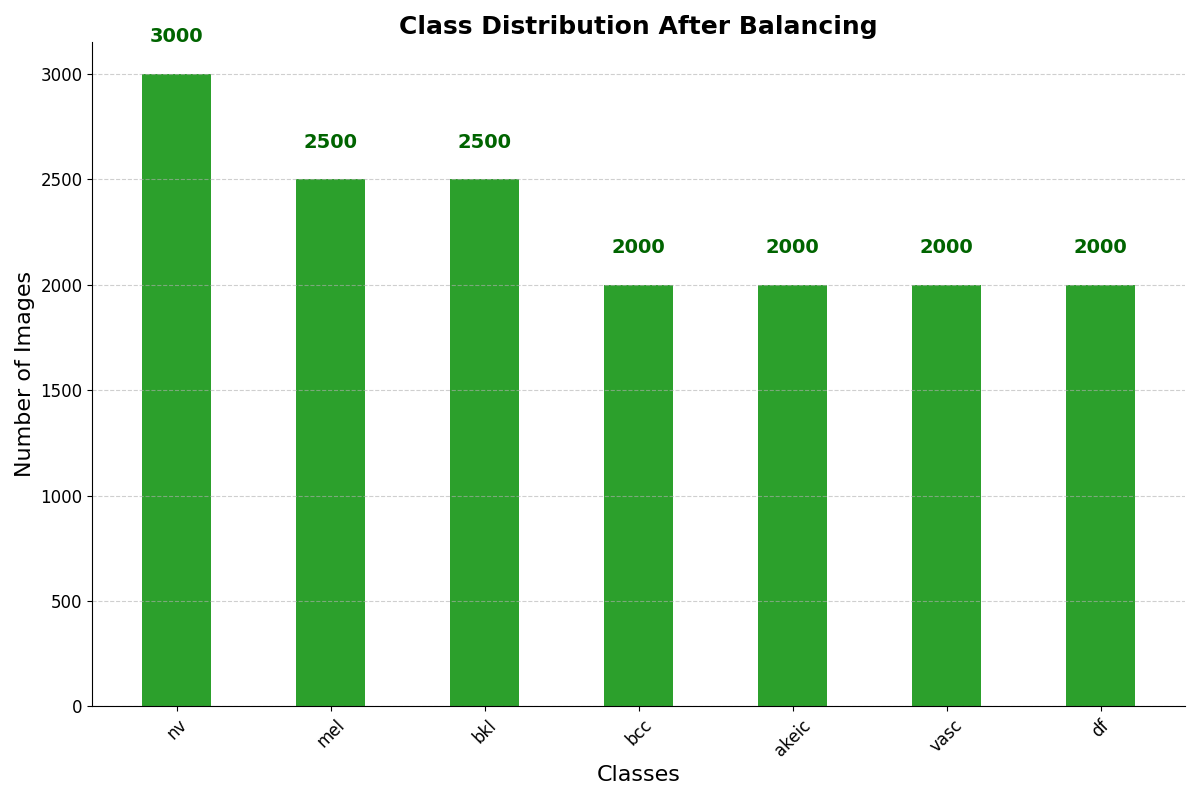

Dataset Balancing and Augmentation

HAM10000 and ISIC 2019 are both class-imbalanced, so the training set is rebalanced and augmented to represent minority classes better. The augmentations include horizontal flips and random rotations. These add variability while all images keep the same normalization.

Figure 2: Class distribution in the training dataset before (left) and after (right) balancing for the HAM10000 dataset.

Balancing reduces the classifier's bias toward majority classes. Augmentation adds variability that helps limit overfitting. Together they support better generalization without compromising the integrity of the original data.
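The summary does not specify the balancing strategy. The sketch below assumes random oversampling: each minority class is topped up to the majority-class count. It does this by drawing existing images and applying a random horizontal flip and rotation. To stay dependency-free, the sketch makes two simplifications. Images are toy 2-D lists. Quarter-turn rotations stand in for the arbitrary-angle random rotations a real image pipeline would apply. Class labels and counts are hypothetical.

```python
import random
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

Image = List[List[float]]  # toy grayscale image: a list of pixel rows


def hflip(img: Image) -> Image:
    """Mirror the image left-right."""
    return [row[::-1] for row in img]


def rot90(img: Image) -> Image:
    """Rotate 90 degrees clockwise (a stand-in for arbitrary-angle rotation)."""
    return [list(row) for row in zip(*img[::-1])]


def random_augment(img: Image, rng: random.Random) -> Image:
    """Apply a random horizontal flip followed by 0 to 3 quarter-turn rotations."""
    if rng.random() < 0.5:
        img = hflip(img)
    for _ in range(rng.randrange(4)):
        img = rot90(img)
    return img


def oversample_to_majority(samples: List[Tuple[Image, str]], seed: int = 0) -> List[Tuple[Image, str]]:
    """Top up every class to the majority-class count with augmented copies."""
    rng = random.Random(seed)
    by_class: Dict[str, List[Image]] = defaultdict(list)
    for img, label in samples:
        by_class[label].append(img)
    target = max(len(imgs) for imgs in by_class.values())
    balanced = list(samples)  # every original image is kept once
    for label, imgs in by_class.items():
        for _ in range(target - len(imgs)):
            balanced.append((random_augment(rng.choice(imgs), rng), label))
    return balanced


if __name__ == "__main__":
    toy = [[0.0, 1.0], [2.0, 3.0]]
    # Hypothetical skewed split using HAM10000-style class codes.
    data = [(toy, "nv")] * 5 + [(toy, "mel")] * 2 + [(toy, "bkl")]
    print(Counter(label for _, label in data))
    print(Counter(label for _, label in oversample_to_majority(data)))
```

The sketch augments only the added copies, which keeps the originals untouched. A real pipeline would typically also apply random augmentation on the fly to every image in each epoch.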

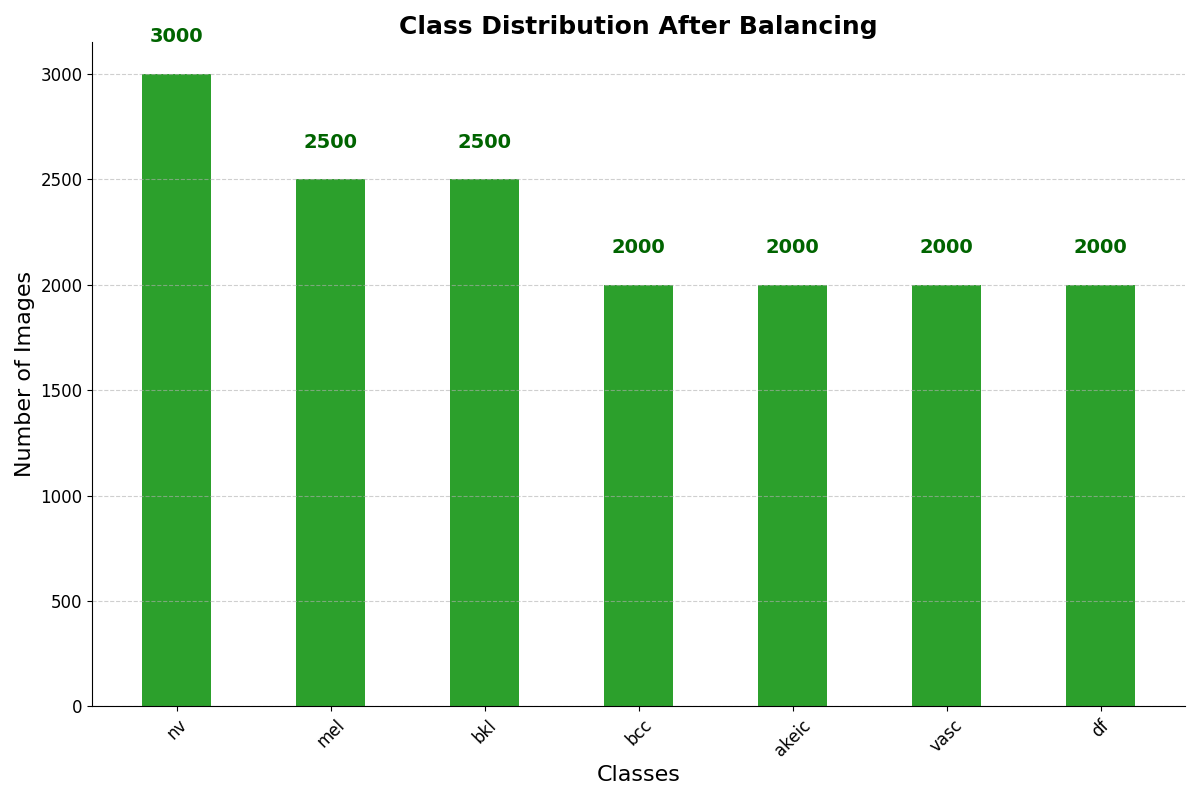

Transformer Dual-Branch Network for Classification

The Transformer Dual-Branch Network (TDBN) has three main components:

- two parallel DenseNet201 encoders, one processing the original image and the other the segmented lesion image;
- a Multi-Head Cross-Attention (MHCA) mechanism, which merges global image features with lesion-specific features;
- a transformer-based module that encodes clinical metadata, whose output is fused with these image features.

Figure 3: Architecture of the proposed Transformer Dual-Branch Network (TDBN), integrating dual DenseNet encoders, multi-head cross-attention, transformer-based metadata embedding, and additive multimodal fusion.
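Cross-attention lets tokens from one branch query the other. With queries Q, keys K, and values V, each output is softmax(QKᵀ/√d)V. The sketch below is a simplified, dependency-free illustration built on several assumptions that do not come from the paper:

- the lesion-branch tokens act as queries over the original-image tokens;
- the learned Q/K/V and output projections are omitted;
- the feature dimension is split across heads;
- the attended tokens are mean-pooled, and a metadata embedding of the same width is added (additive fusion).

All dimensions and values are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]  # rows are tokens, columns are feature dimensions


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(queries[0])
    scores = matmul(queries, transpose(keys))
    weights = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    return matmul(weights, values)


def multi_head_cross_attention(queries: Matrix, context: Matrix, num_heads: int) -> Matrix:
    """Split features into heads, attend per head, then concatenate.
    Simplification: no learned Q/K/V or output projections (identity maps)."""
    d = len(queries[0])
    if d % num_heads:
        raise ValueError("feature width must be divisible by num_heads")
    h = d // num_heads
    heads = []
    for i in range(num_heads):
        cols = slice(i * h, (i + 1) * h)
        q = [row[cols] for row in queries]
        kv = [row[cols] for row in context]
        heads.append(cross_attention(q, kv, kv))
    # Concatenate each query token's per-head outputs back to width d.
    return [sum((head[t] for head in heads), []) for t in range(len(queries))]


def mean_pool(tokens: Matrix) -> List[float]:
    return [sum(col) / len(tokens) for col in zip(*tokens)]


def additive_fusion(image_vec: List[float], meta_vec: List[float]) -> List[float]:
    """Element-wise sum of image and metadata embeddings of equal width."""
    if len(image_vec) != len(meta_vec):
        raise ValueError("embeddings must have the same width")
    return [a + b for a, b in zip(image_vec, meta_vec)]


if __name__ == "__main__":
    lesion_tokens = [[1.0, 0.0, 0.5, 0.5], [0.0, 1.0, 0.2, 0.8]]  # hypothetical
    image_tokens = [[0.9, 0.1, 0.4, 0.6], [0.1, 0.9, 0.7, 0.3], [0.5, 0.5, 0.5, 0.5]]
    meta_embedding = [0.1, -0.2, 0.0, 0.3]  # stand-in for the metadata module's output
    attended = multi_head_cross_attention(lesion_tokens, image_tokens, num_heads=2)
    print(additive_fusion(mean_pool(attended), meta_embedding))
```

Additive fusion requires the metadata embedding to match the image feature width. In a trained model, a learned projection would normally ensure that.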

On HAM10000 and ISIC 2019, the resulting model is reported to outperform conventional architectures such as ResNet50 and a standard DenseNet201. TDBN achieves higher accuracy and area under the ROC curve (AUC) than these baselines. This supports its ability to handle the complex task of skin lesion classification while integrating contextual and clinical data.
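AUC can be computed as the probability that a randomly chosen positive sample scores above a randomly chosen negative one, with ties counting half. This equals the area under the ROC curve. For multiclass lesion classification, a common approach computes a one-vs-rest AUC per class and takes the macro average. The summary does not state which averaging the paper reports, so the sketch below is one plausible reading, shown alongside top-1 accuracy. The probabilities are hypothetical.

```python
from typing import Sequence


def binary_auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """ROC AUC as P(score_pos > score_neg), counting ties as 1/2 (Mann-Whitney form)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def macro_ovr_auc(probs: Sequence[Sequence[float]], labels: Sequence[int], num_classes: int) -> float:
    """Average of one-vs-rest AUCs, one per class."""
    aucs = [
        binary_auc([row[c] for row in probs], [1 if y == c else 0 for y in labels])
        for c in range(num_classes)
    ]
    return sum(aucs) / num_classes


def accuracy(probs: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Top-1 accuracy: fraction of samples whose highest-probability class is correct."""
    preds = [max(range(len(row)), key=row.__getitem__) for row in probs]
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


if __name__ == "__main__":
    probs = [[0.7, 0.2, 0.1], [0.3, 0.4, 0.3], [0.2, 0.5, 0.3], [0.1, 0.3, 0.6]]  # hypothetical
    labels = [0, 1, 2, 2]
    print(accuracy(probs, labels), macro_ovr_auc(probs, labels, num_classes=3))
```

The pairwise loop is quadratic and only meant to make the definition concrete. For real test sets, a sort-based implementation such as scikit-learn's `roc_auc_score` is the practical choice.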

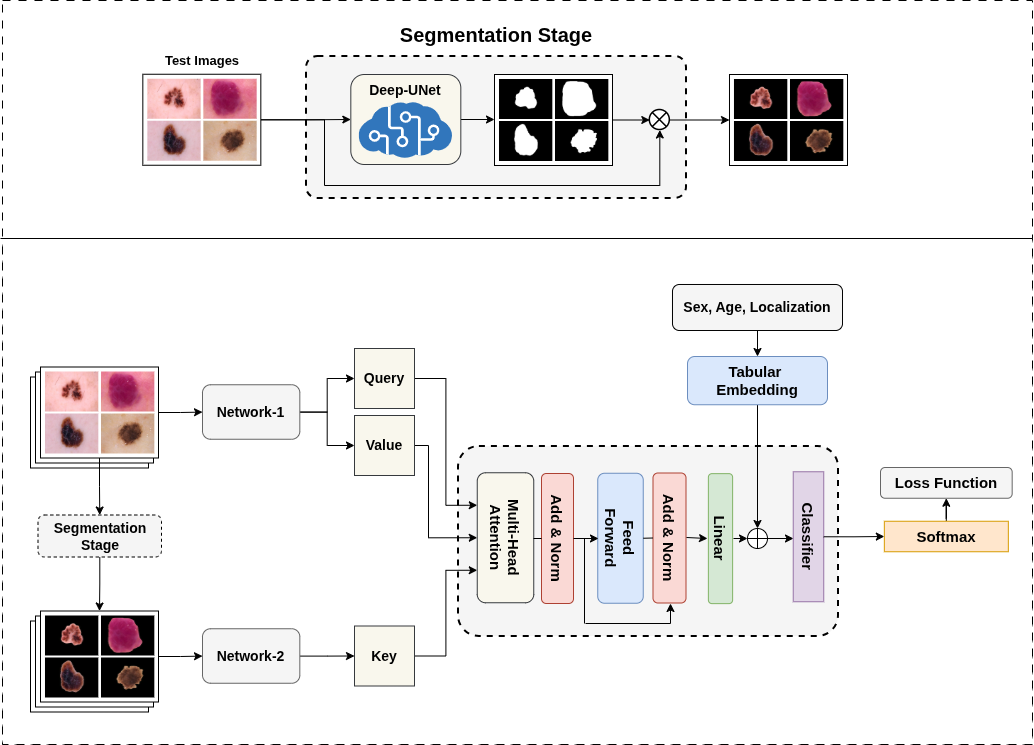

Model Explainability via Grad-CAM

To address the "black-box" nature of deep learning models, the paper uses Grad-CAM to visualize which image regions drive the model's decisions. The resulting heatmaps show that the dual-network model focuses primarily on lesion areas. By contrast, some conventional models attend to background artifacts instead.

Figure 4: Grad-CAM visualizations for four different examples. Each set (a-f) shows (left to right): the Original Image, the heatmap from a baseline DenseNet201, and the heatmap from our Proposed Model. Our model's activations are consistently and precisely focused on the lesion area, unlike the baseline's diffuse or misplaced focus, demonstrating superior explainability and reliability.
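Grad-CAM works on the feature maps Aᵏ of a chosen convolutional layer. It weights each map by the spatially averaged gradient of the class score, αₖ = (1/Z) Σᵢⱼ ∂yᶜ/∂Aᵏᵢⱼ. It then keeps the positive part of the weighted sum, ReLU(Σₖ αₖ Aᵏ). In practice, the activations and gradients come from framework hooks on the last convolutional block. The sketch below takes them as plain nested lists (a toy assumption). It scales the map to [0, 1], the form in which it would then be upsampled and overlaid on the image.

```python
from typing import List

FeatureMaps = List[List[List[float]]]  # [channels][height][width]
Heatmap = List[List[float]]


def grad_cam(activations: FeatureMaps, gradients: FeatureMaps) -> Heatmap:
    """Grad-CAM map from one layer's activations A^k and gradients dy^c/dA^k."""
    height, width = len(activations[0]), len(activations[0][0])
    # alpha_k: gradient of the class score averaged over all spatial positions.
    alphas = [sum(sum(row) for row in g) / (height * width) for g in gradients]
    cam = [[0.0] * width for _ in range(height)]
    for alpha, fmap in zip(alphas, activations):
        for i in range(height):
            for j in range(width):
                cam[i][j] += alpha * fmap[i][j]
    # ReLU keeps only regions whose features increase the class score.
    cam = [[max(0.0, v) for v in row] for row in cam]
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]  # scale to [0, 1]
    return cam


if __name__ == "__main__":
    # Toy 2-channel, 2x2 layer: channel 0 supports the class, channel 1 opposes it.
    acts = [[[0.0, 1.0], [2.0, 4.0]], [[1.0, 1.0], [0.0, 0.0]]]
    grads = [[[1.0, 1.0], [1.0, 1.0]], [[-0.5, -0.5], [-0.5, -0.5]]]
    for row in grad_cam(acts, grads):
        print(row)
```

A map concentrated on the lesion, as in Figure 4, means the high-gradient channels are active mainly inside the lesion region.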

This kind of explainability is important for fostering clinical trust and makes the approach promising for real-world diagnostic applications.

Conclusion

The proposed framework integrates lesion segmentation with clinically relevant metadata in a dual-network system designed for both accuracy and interpretability. The paper's results indicate an advance in explainable AI for healthcare. The model achieves higher performance metrics than the baselines, and Grad-CAM shows that its decisions rest on lesion regions. Future work could extend the system's generalizability to a broader range of clinical data and more diverse datasets, moving toward deployment in clinical environments.