- The paper introduces a modular AI platform integrating multiple sensors to monitor poultry welfare and farm productivity.

- It uses CMA-ES and MAP-Elites for camera placement, a convolutional denoising autoencoder (Conv-DAE) for audio anomaly detection, and EfficientDet-Lite0 for egg counting.

- The system achieves 100% egg-counting accuracy on a Raspberry Pi 5 and under 2% error in 10-day production forecasting, demonstrating the feasibility of low-cost edge deployment.

System Overview and Architecture

The Poultry Farm Intelligence (PoultryFI) platform presents a modular, multi-sensor AI system designed to address the dual objectives of animal welfare and operational efficiency in poultry farming. The architecture integrates six core modules: Camera Placement Optimizer, Audio-Visual Monitoring Module (AVMM), Analytics and Alerting Module (AAM), Real-Time Egg Counting, Production and Profitability Forecasting Module (PPFM), and a Recommendation Module. The system is engineered for deployment on low-cost edge hardware, with a focus on scalability, robustness, and ease of integration into existing farm operations.

Figure 1: PoultryFI modules grouped by their primary impact on Farm Operations, Animal Welfare, or both.

The modular design enables independent development and deployment of each component, facilitating rapid iteration and adaptation to diverse farm environments. The system eschews invasive individual animal tracking in favor of population-level behavioral analytics, leveraging synchronized audio-visual and environmental data streams to generate alerts, forecasts, and management recommendations.

Figure 2: Overview of the PoultryFI System, illustrating data flow between modules and sources.

Camera Placement Optimization

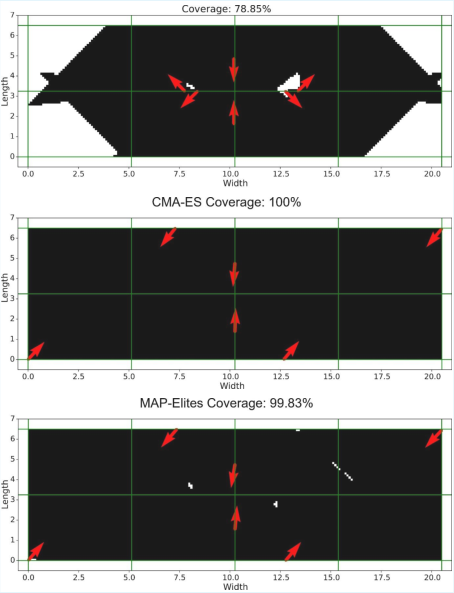

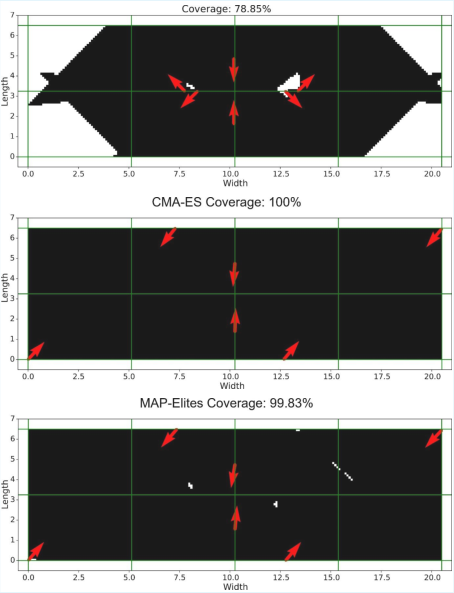

Optimal sensor deployment is critical for comprehensive monitoring. The Camera Placement Optimizer employs the Covariance Matrix Adaptation Evolution Strategy (CMA-ES) and MAP-Elites, a quality-diversity algorithm, to maximize visual coverage with minimal hardware. The optimizer models the poultry house as a 2D grid and encodes each camera by its position, orientation, and field of view. The fitness function is the proportion of the house area covered by at least one camera; CMA-ES searches for a single high-coverage layout, while MAP-Elites maintains an archive of high-coverage layouts that differ in how they use the mounting beams.
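To make the fitness function concrete, here is a minimal Python sketch of grid-based coverage scoring. It assumes each camera sees a 2D wedge defined by its heading and field of view, truncated at a maximum range, and it ignores occlusion; the cell size (`cell`), the range (`max_range`), and the helper names `decode` and `coverage_fitness` are illustrative assumptions, not the paper's implementation. An optimizer such as CMA-ES would maximize this score over the flat parameter vector that `decode` splits into cameras.

```python
import math

def decode(vector):
    """Split a flat parameter vector (what an optimizer such as CMA-ES searches over)
    into (x, y, theta, fov) tuples, one per camera."""
    return [tuple(vector[k:k + 4]) for k in range(0, len(vector), 4)]

def coverage_fitness(cameras, width, height, cell=0.5, max_range=8.0):
    """Fraction of grid cells whose centre lies inside at least one camera's view wedge.
    Each camera is (x, y, theta, fov): position in metres, heading and field of view in radians."""
    nx, ny = math.ceil(width / cell), math.ceil(height / cell)
    covered = 0
    for i in range(nx):
        for j in range(ny):
            px, py = (i + 0.5) * cell, (j + 0.5) * cell
            for x, y, theta, fov in cameras:
                dx, dy = px - x, py - y
                dist = math.hypot(dx, dy)
                if dist > max_range:
                    continue  # beyond the assumed useful range of this camera
                # signed angle between the camera heading and the cell, wrapped to [-pi, pi)
                diff = (math.atan2(dy, dx) - theta + math.pi) % (2 * math.pi) - math.pi
                if dist == 0 or abs(diff) <= fov / 2:
                    covered += 1
                    break  # one camera is enough; move on to the next cell
    return covered / (nx * ny)

# Two hypothetical cameras on opposite long walls of a 20.5 m x 6.5 m house.
cams = decode([5.0, 0.0, math.pi / 2, math.radians(90),
               15.5, 6.5, -math.pi / 2, math.radians(90)])
print(coverage_fitness(cams, 20.5, 6.5))
```

Because each cell counts once however many cameras see it, the score rewards spreading cameras out rather than overlapping them, which is what pushes an optimizer toward full coverage with few cameras.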

Empirical results demonstrate that both CMA-ES and MAP-Elites achieve 100% coverage with six cameras in a 20.5m × 6.5m house, with the deployed solution exhibiting bilateral symmetry and minimal blind spots.

Figure 3: Camera placement solutions: manual, CMA-ES optimized (deployed), and MAP-Elites (diverse).
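The quality-diversity side can be illustrated with a minimal, generic MAP-Elites loop: an archive keeps the best solution found for each discrete behavior descriptor, and new candidates are produced by mutating randomly chosen elites. The toy problem below (assigning 6 cameras to 5 hypothetical mounting beams, with the number of beams used as the descriptor and a made-up balance score as fitness) only demonstrates the mechanics; it is not the paper's encoding or fitness.

```python
import random
from collections import Counter

def map_elites(random_solution, mutate, fitness, descriptor, iterations=2000, n_init=50, seed=0):
    """Minimal MAP-Elites: keep the best-scoring solution found for every behaviour descriptor."""
    rng = random.Random(seed)
    archive = {}  # descriptor -> (fitness, solution)

    def try_insert(solution):
        f, d = fitness(solution), descriptor(solution)
        if d not in archive or f > archive[d][0]:
            archive[d] = (f, solution)

    for _ in range(n_init):
        try_insert(random_solution(rng))
    for _ in range(iterations):
        # select a random elite, perturb it, and keep the child if it wins its niche
        _, parent = rng.choice(list(archive.values()))
        try_insert(mutate(parent, rng))
    return archive

# Toy problem (hypothetical): assign each of 6 cameras to one of 5 mounting beams.
N_CAMERAS, N_BEAMS = 6, 5

def random_assignment(rng):
    return [rng.randrange(N_BEAMS) for _ in range(N_CAMERAS)]

def reassign_one(solution, rng):
    child = list(solution)
    child[rng.randrange(N_CAMERAS)] = rng.randrange(N_BEAMS)
    return child

def balance(solution):
    # toy fitness: prefer spreading cameras evenly over the beams that are used
    return -max(Counter(solution).values())

def beams_used(solution):
    return len(set(solution))

archive = map_elites(random_assignment, reassign_one, balance, beams_used)
for d in sorted(archive):
    print(d, archive[d])
```

Unlike CMA-ES, which converges toward one optimum, the archive returns one elite per descriptor value, so a practitioner can choose among layouts that, for example, use fewer beams.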

Audio-Visual Monitoring and Anomaly Detection

The AVMM is built on distributed Raspberry Pi nodes, each equipped with a camera, microphone, and local storage, coordinated by a central Synchronization and Processing Engine (SPE). The system continuously acquires audio (24/7, 58s windows) and video (20h/day), with all hardware housed in custom enclosures for environmental resilience.

Audio-Based Anomaly Detection

A convolutional denoising autoencoder (Conv-DAE) with a ResNet18 encoder is trained without supervision on Mel-spectrograms of farm audio, learning a manifold of normal flock vocalizations. Clips whose reconstruction error is elevated relative to this learned norm are flagged as anomalies. PCA and k-means clustering further reveal semantic clusters corresponding to resting, normal, and stressed states. Because training requires no labels, the approach avoids reliance on expert annotation, which is difficult to obtain reliably, and is designed to transfer across farms.
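The detection rule, scoring each clip by reconstruction error and flagging scores above a threshold learned from normal audio, can be sketched in Python with NumPy. To keep the example small and runnable, a linear PCA reconstructor stands in for the Conv-DAE and random vectors stand in for flattened Mel-spectrograms; the 99th-percentile threshold and all function names are assumptions.

```python
import numpy as np

def reconstruction_errors(x, reconstruct):
    """Per-sample mean squared error between inputs (rows) and their reconstructions."""
    x = np.asarray(x, dtype=float)
    return np.mean((x - reconstruct(x)) ** 2, axis=1)

def fit_threshold(normal_errors, percentile=99.0):
    """Alert threshold: a high percentile of the errors observed on normal audio."""
    return float(np.percentile(normal_errors, percentile))

def pca_reconstructor(train, k):
    """Linear stand-in for the Conv-DAE: project onto the top-k principal components and back."""
    mean = train.mean(axis=0)
    _, _, vt = np.linalg.svd(train - mean, full_matrices=False)
    basis = vt[:k]
    return lambda x: (x - mean) @ basis.T @ basis + mean

# Synthetic "spectrogram features": normal clips lie near a 2-D subspace of a 16-D space.
rng = np.random.default_rng(0)
mixing = rng.normal(size=(2, 16))
normal = rng.normal(size=(500, 2)) @ mixing + 0.01 * rng.normal(size=(500, 16))
reconstruct = pca_reconstructor(normal, k=2)
threshold = fit_threshold(reconstruction_errors(normal, reconstruct))

anomalous = rng.normal(size=(5, 16))  # far from the learned "normal" manifold
print(reconstruction_errors(anomalous, reconstruct) > threshold)
```

Swapping in a trained Conv-DAE would only change the `reconstruct` callable; the scoring and thresholding logic stays the same.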

Audio-Based Feeding Detection

Feeder activity is detected by a lightweight feedforward classifier with two hidden layers, trained on the frozen encoder embeddings; it achieves an AUC of 0.9944 on the test set. Majority-vote smoothing over sliding windows suppresses isolated misclassifications, improving robustness to noise.
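Majority-vote smoothing is simple to sketch. The version below assumes binary per-window predictions (1 = feeding) and a centered window of odd length; the window size and the rule that ties at the sequence edges resolve to 0 are illustrative choices, not details from the paper.

```python
def majority_smooth(labels, window=5):
    """Replace each binary prediction with the majority vote of a centred window.
    Windows are truncated at the sequence edges; a tie there resolves to 0."""
    if window % 2 == 0:
        raise ValueError("window must be odd")
    half = window // 2
    smoothed = []
    for i in range(len(labels)):
        segment = labels[max(0, i - half): i + half + 1]
        smoothed.append(1 if 2 * sum(segment) > len(segment) else 0)
    return smoothed

window_preds = [0, 0, 1, 0, 0, 1, 1, 1, 0, 1, 1]
print(majority_smooth(window_preds, window=3))
# → [0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1]
```

The isolated positive at index 2 is removed and the isolated negative at index 8 is filled in, which is the kind of flicker the smoothing is meant to absorb.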

Video-Based Motion Detection

Motion is quantified using MOG2 background subtraction, which yields one motion score per recording. This score serves as a proxy for flock activity: abnormally low values can indicate lethargy, and abnormally high values can indicate panic.
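A per-recording motion score could be computed as the average fraction of foreground pixels across frames. This definition is an assumption, since the text does not specify how the MOG2 output is aggregated. The function accepts any object with an `apply(frame)` method, which is the interface of OpenCV's `cv2.createBackgroundSubtractorMOG2()`; by default MOG2 marks shadows with the value 127, so only pixels equal to 255 are counted. A small replay stub (hypothetical) lets the example run without OpenCV or video.

```python
import numpy as np

def motion_score(frames, subtractor, warmup=0, foreground_value=255):
    """Mean fraction of foreground pixels per frame over one recording.
    `subtractor` is any object with apply(frame) -> mask, e.g. an OpenCV MOG2 subtractor."""
    fractions = []
    for idx, frame in enumerate(frames):
        mask = subtractor.apply(frame)  # updates the background model and returns a mask
        if idx < warmup:
            continue  # let the background model settle before scoring
        fractions.append(np.count_nonzero(mask == foreground_value) / mask.size)
    return float(np.mean(fractions)) if fractions else 0.0

class ReplaySubtractor:
    """Stand-in that replays precomputed masks, for trying the function without OpenCV."""
    def __init__(self, masks):
        self._masks = iter(masks)

    def apply(self, frame):
        return next(self._masks)

full = np.full((4, 4), 255, dtype=np.uint8)       # every pixel moving
half = np.zeros((4, 4), dtype=np.uint8)
half[:2], half[2:] = 255, 127                      # half moving, half shadow
still = np.zeros((4, 4), dtype=np.uint8)           # nothing moving
frames = [np.zeros((4, 4), dtype=np.uint8)] * 3

score = motion_score(frames, ReplaySubtractor([full, half, still]))
score_warm = motion_score(frames, ReplaySubtractor([full, half, still]), warmup=1)
print(score, score_warm)
```

In a real deployment `frames` would be the decoded frames of one recording (for example read with `cv2.VideoCapture`), and `warmup` would skip the first frames while the background model settles.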

Figure 4: Daily profile of audio indicator averages, with dynamic thresholds for alerting.

Figure 5: Daily profile of video indicator averages, with dynamic thresholds for alerting.
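The dynamic thresholds in Figures 4 and 5 can be approximated by one band per hour of day, computed from historical values of an indicator. The sketch below assumes a mean ± k·standard-deviation band with a hypothetical k = 2; the paper does not state its exact rule, and `hourly_bounds` and `classify` are illustrative names. The example shows why per-hour bands matter: the same reading can be too high at one hour and too low at another.

```python
import statistics
from collections import defaultdict

def hourly_bounds(history, k=2.0):
    """history: iterable of (hour_of_day, value) pairs from past days.
    Returns {hour: (low, high)} with bounds mean ± k * population std for that hour."""
    by_hour = defaultdict(list)
    for hour, value in history:
        by_hour[hour].append(value)
    bounds = {}
    for hour, values in by_hour.items():
        mu = statistics.fmean(values)
        sd = statistics.pstdev(values)
        bounds[hour] = (mu - k * sd, mu + k * sd)
    return bounds

def classify(hour, value, bounds):
    """Return "low", "high", or None when the value lies within the band for that hour."""
    low, high = bounds[hour]
    if value < low:
        return "low"
    if value > high:
        return "high"
    return None

history = [(3, 1.0), (3, 2.0), (3, 3.0), (14, 10.0), (14, 12.0), (14, 14.0)]
bounds = hourly_bounds(history)
print(classify(3, 8.0, bounds), classify(14, 8.0, bounds), classify(14, 12.0, bounds))
# → high low None
```

For a motion indicator, "low" would correspond to possible lethargy and "high" to possible panic.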

Analytics, Alerting, and Forecasting

The AAM aggregates AVMM outputs and environmental sensor data (temperature, humidity) to generate real-time alerts and daily operational profiles. Short-term forecasting of environmental variables is performed using linear regression models with historical profiles, achieving RMSEs below 1°C for temperature and 2% for humidity over a 3-hour horizon. Dynamic, time-of-day-specific thresholds are set for the behavioral metrics; because normal activity levels vary over the day, this reduces false positives and makes alerting context-aware.
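A minimal version of the short-term forecaster is a least-squares fit that predicts the value H hours ahead from the current reading and the historical mean profile at the target hour. These two features, the synthetic data, and the function names are assumptions for illustration; the text specifies only linear regression with historical profiles and a 3-hour horizon.

```python
import numpy as np

def fit_forecaster(current, profile_at_target, future):
    """Least-squares fit of future ≈ w0 + w1 * current + w2 * profile_at_target."""
    X = np.column_stack([np.ones(len(current)), current, profile_at_target])
    w, *_ = np.linalg.lstsq(X, np.asarray(future, dtype=float), rcond=None)
    return w

def predict(w, current, profile_at_target):
    return (w[0] + w[1] * np.asarray(current, dtype=float)
            + w[2] * np.asarray(profile_at_target, dtype=float))

def rmse(pred, actual):
    return float(np.sqrt(np.mean((np.asarray(pred) - np.asarray(actual)) ** 2)))

# Synthetic hourly temperatures over 30 days: a daily cycle plus noise.
rng = np.random.default_rng(1)
hours = np.arange(24 * 30)
temp = 20 + 5 * np.sin(2 * np.pi * hours / 24) + rng.normal(0, 0.3, hours.size)

H = 3            # forecast horizon in hours
split = 24 * 20  # first 20 days for fitting, the remaining days for testing
# Historical mean profile per hour of day, computed from the training days only.
profile = np.array([temp[:split][hours[:split] % 24 == h].mean() for h in range(24)])

current, future = temp[:-H], temp[H:]
profile_at_target = profile[hours[H:] % 24]
n_train = split - H  # keep training targets inside the training days
w = fit_forecaster(current[:n_train], profile_at_target[:n_train], future[:n_train])
test_rmse = rmse(predict(w, current[split:], profile_at_target[split:]), future[split:])
print(f"{H}-hour-ahead RMSE on held-out days: {test_rmse:.2f} °C")
```

Fitting on earlier days and scoring on later ones mirrors how a held-out RMSE would be measured, though the number printed here reflects only the synthetic data.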

Figure 6: Temperature profile for multiple day intervals, illustrating temporal patterns.

Figure 7: Humidity profile for multiple day intervals, illustrating temporal patterns.

Real-Time Egg Counting

The Real-Time Egg Counting module retrofits existing grading machines with a Raspberry Pi 5 and a Pi Camera V2. An EfficientDet-Lite0 model, trained on a custom dataset, detects and tracks the eggs. Calibration is automated with DBSCAN clustering and the Hough transform, which locate the weight-gate regions of interest (ROIs). On the Raspberry Pi 5, the system achieves 100% counting accuracy at 0.046 s/frame, matching desktop performance and outperforming both the YOLOv8n and Raspberry Pi 4 configurations.
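The text does not describe how detections are turned into counts. One common approach, sketched here under that assumption, is to associate detections across frames by nearest centroid and count each track once when it crosses a counting line. `count_crossings`, `line_y`, and `max_dist` are hypothetical names, and the `frames` list stands in for per-frame EfficientDet-Lite0 detection centroids.

```python
import math

def count_crossings(frames, line_y, max_dist=30.0):
    """Count tracks whose centroid crosses the line y = line_y while moving towards larger y.
    frames: list of per-frame lists of detection centroids (x, y)."""
    tracks = {}      # track id -> last centroid
    counted = set()  # ids already counted, so a track is never counted twice
    next_id = 0
    total = 0
    for detections in frames:
        new_tracks = {}
        unmatched = dict(tracks)
        for cx, cy in detections:
            # greedy nearest-neighbour association with tracks not yet matched in this frame
            best = min(unmatched.items(), key=lambda kv: math.dist(kv[1], (cx, cy)), default=None)
            if best is not None and math.dist(best[1], (cx, cy)) <= max_dist:
                tid, (_, py) = best
                del unmatched[tid]
                if py < line_y <= cy and tid not in counted:
                    counted.add(tid)
                    total += 1
            else:
                tid, next_id = next_id, next_id + 1  # start a new track
            new_tracks[tid] = (cx, cy)
        tracks = new_tracks
    return total

frames = [
    [(10, 0), (50, 5)],
    [(10, 20), (50, 25)],
    [(11, 40), (51, 45), (100, 60)],  # the third detection first appears below the line
]
print(count_crossings(frames, line_y=30))
# → 2
```

Counting each track at most once is what keeps an egg that jitters around the line from being counted twice; a new track that first appears past the line is not counted.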

Figure 8: Egg counting results across devices and models, demonstrating edge deployment feasibility.

Production and Profitability Forecasting

The PPFM integrates multimodal features—historical production, environmental data, AVMM indicators, and flock demographics—into a linear regression model predicting 10-day average egg production. Feature engineering and ablation studies identify the most predictive variables, with the final model achieving a test MAE of 0.01 (less than 2% of average daily production). The model also estimates cost-per-egg by integrating feed consumption and cost data.
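The bookkeeping around the forecasting model can be sketched in plain Python: building the 10-day average target, expressing error relative to average production, and computing feed cost per egg. The alignment of the target window (days t+1 to t+10), the feed-only cost definition, and the example prices are assumptions, not the paper's definitions.

```python
def ten_day_targets(daily_eggs, horizon=10):
    """Target for day t: mean daily eggs over days t+1 .. t+horizon."""
    return [sum(daily_eggs[t + 1:t + 1 + horizon]) / horizon
            for t in range(len(daily_eggs) - horizon)]

def mae(pred, actual):
    return sum(abs(p - a) for p, a in zip(pred, actual)) / len(actual)

def relative_mae(pred, actual):
    """MAE as a fraction of the mean actual value (0.02 means 2%)."""
    return mae(pred, actual) / (sum(actual) / len(actual))

def cost_per_egg(feed_kg, feed_price_per_kg, eggs):
    """Feed cost per egg over a period; only feed cost is counted (an assumption)."""
    if eggs <= 0:
        raise ValueError("eggs must be positive")
    return feed_kg * feed_price_per_kg / eggs

print(ten_day_targets(list(range(20)))[:2])
print(cost_per_egg(90.0, 0.40, 600))
```

With the hypothetical figures above (90 kg of feed at 0.40 per kg over 600 eggs), `cost_per_egg` returns about 0.06 per egg.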

Figure 9: Predictions of the final egg productivity model, with test error below 2% of average daily production.

Recommendation Module

The Recommendation Module synthesizes alerts, forecasts, and external weather data to generate prescriptive, context-aware management guidance. Recommendations are dynamically tailored to current and forecasted conditions, encompassing environmental control, feeding, and operational planning. The system supports phased adoption, allowing farms to incrementally integrate modules according to operational needs.
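One way to structure such guidance is a table of condition and message pairs evaluated against the latest forecasts and alerts. The sketch below is only that: the `Context` fields, the thresholds (30 °C, 80% humidity), and the messages are hypothetical placeholders, not the paper's rule set.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

@dataclass
class Context:
    temp_forecast_c: float         # forecast house temperature (°C)
    humidity_forecast_pct: float   # forecast relative humidity (%)
    motion_alert: Optional[str]    # "low", "high", or None from the behavioural thresholds
    feeding_active: bool           # output of the audio feeding detector

Rule = Tuple[Callable[[Context], bool], str]

# Placeholder rules and thresholds for illustration only.
RULES: List[Rule] = [
    (lambda c: c.temp_forecast_c > 30.0, "Forecast heat: prepare ventilation or cooling."),
    (lambda c: c.humidity_forecast_pct > 80.0, "Forecast high humidity: increase air exchange."),
    (lambda c: c.motion_alert == "low" and not c.feeding_active,
     "Low activity outside feeding: inspect the flock."),
    (lambda c: c.motion_alert == "high", "Unusually high activity: check for disturbances."),
]

def recommend(ctx: Context, rules: List[Rule] = RULES) -> List[str]:
    """Return the message of every rule whose condition holds for the current context."""
    return [message for condition, message in rules if condition(ctx)]

print(recommend(Context(32.0, 60.0, None, True)))
print(recommend(Context(22.0, 60.0, "low", False)))
```

Keeping rules as data makes it straightforward to enable them per module, which fits the phased-adoption model described above.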

All modules were deployed and validated in an open commercial poultry house in Cyprus (capacity 1,200 birds; about 750 birds during the trials). The system demonstrated:

- 100% egg-counting accuracy on Raspberry Pi 5

- Robust anomaly detection in audio and video streams

- Reliable short-term environmental forecasting over a 3-hour horizon (RMSE < 1°C for temperature and < 2% for humidity)

- Forecasting of 10-day average egg production with a test MAE below 2% of average daily production

- Effective, context-aware alerting and recommendations

Implications, Limitations, and Future Directions

PoultryFI demonstrates that multi-modal, edge-deployed AI can deliver continuous, non-invasive welfare monitoring and operational analytics in commercial farm settings. The modular architecture supports scalability and adaptation to diverse environments and species. However, challenges remain in handling rare events, ambient noise variability, and visual occlusions. Generalizability across farm types and climates requires further study.

Future work should explore deeper integration of welfare and forecasting models, reinforcement learning for automated control, and broader field trials. Commercially, integration with farm management software and mobile interfaces will facilitate adoption.

Conclusion

PoultryFI establishes a comprehensive, low-cost AI platform for precision livestock farming, integrating sensor optimization, multi-modal welfare analytics, real-time production tracking, predictive modeling, and prescriptive decision support. The system advances the state of the art in scalable, actionable farm intelligence, with demonstrated efficacy in real-world deployment. Its modular, edge-centric design provides a blueprint for future AI-driven agricultural systems targeting both productivity and animal welfare.