NANO3D: A Training-Free Approach for Efficient 3D Editing Without Masks (2510.15019v1)

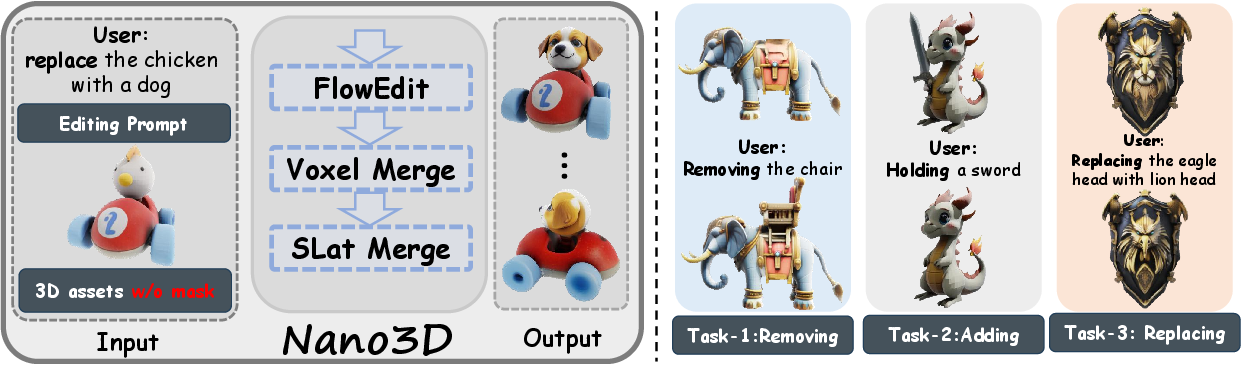

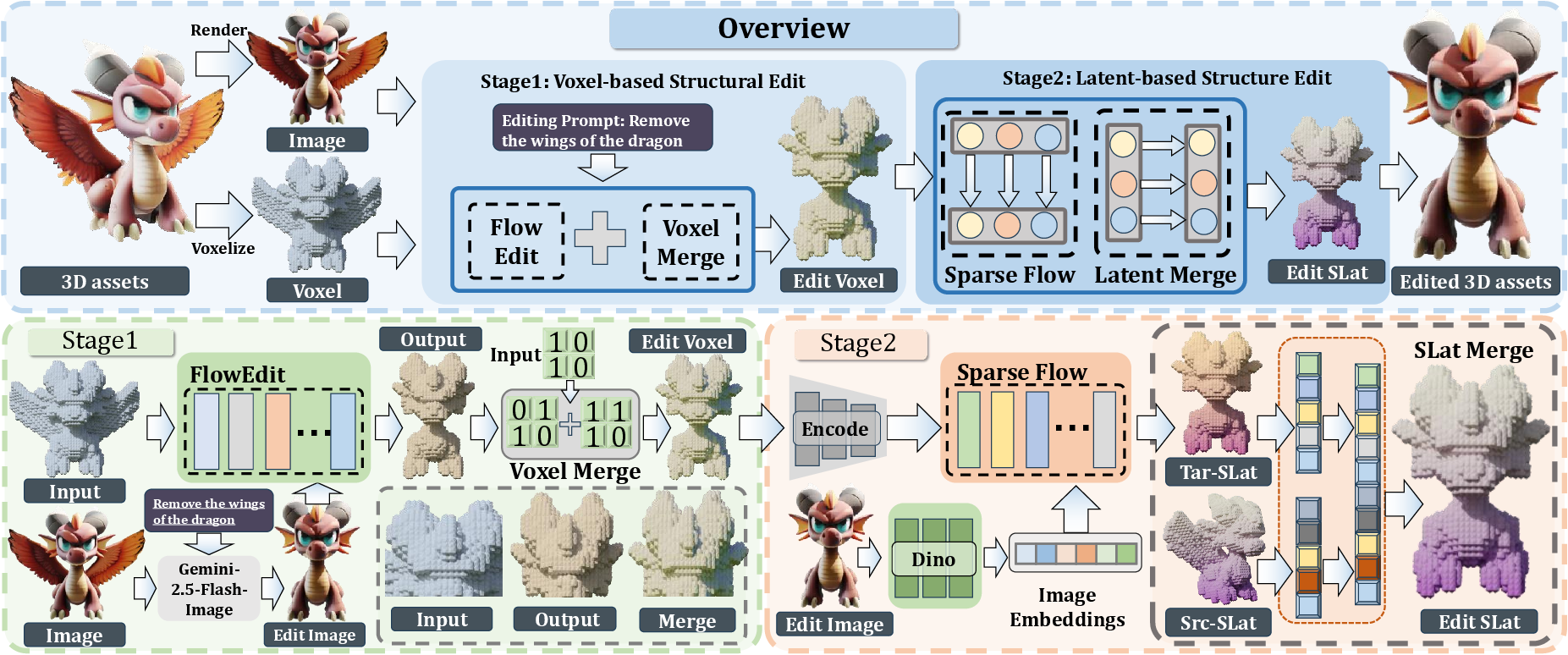

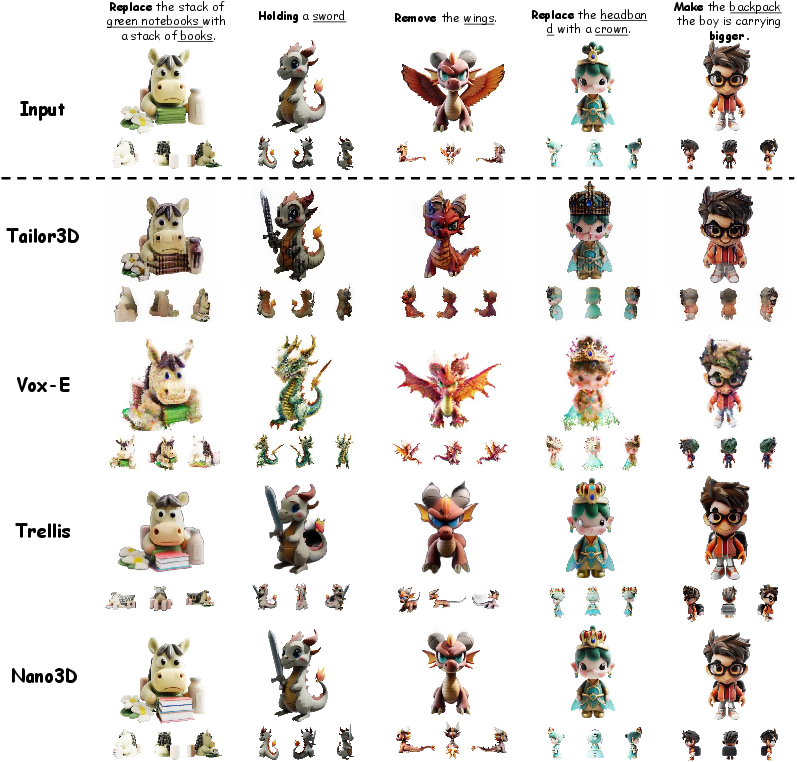

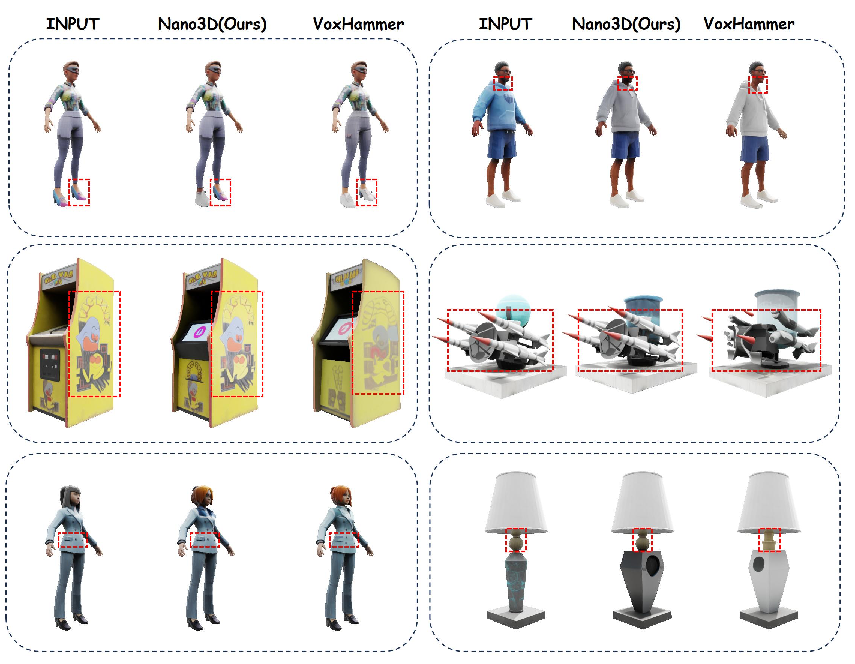

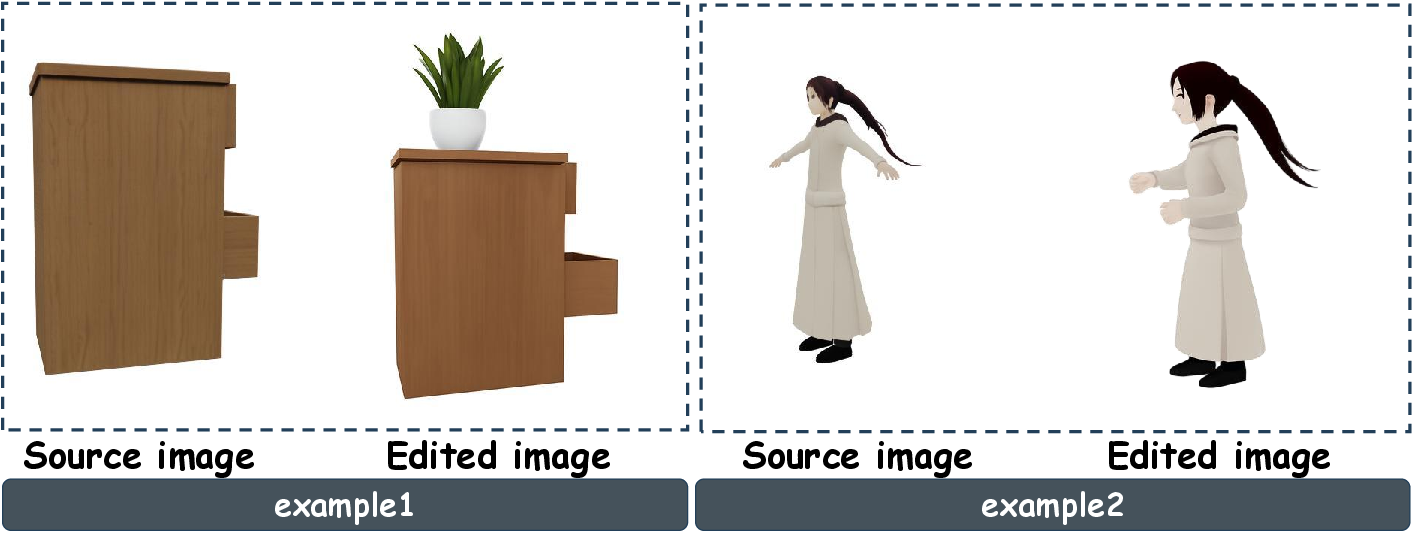

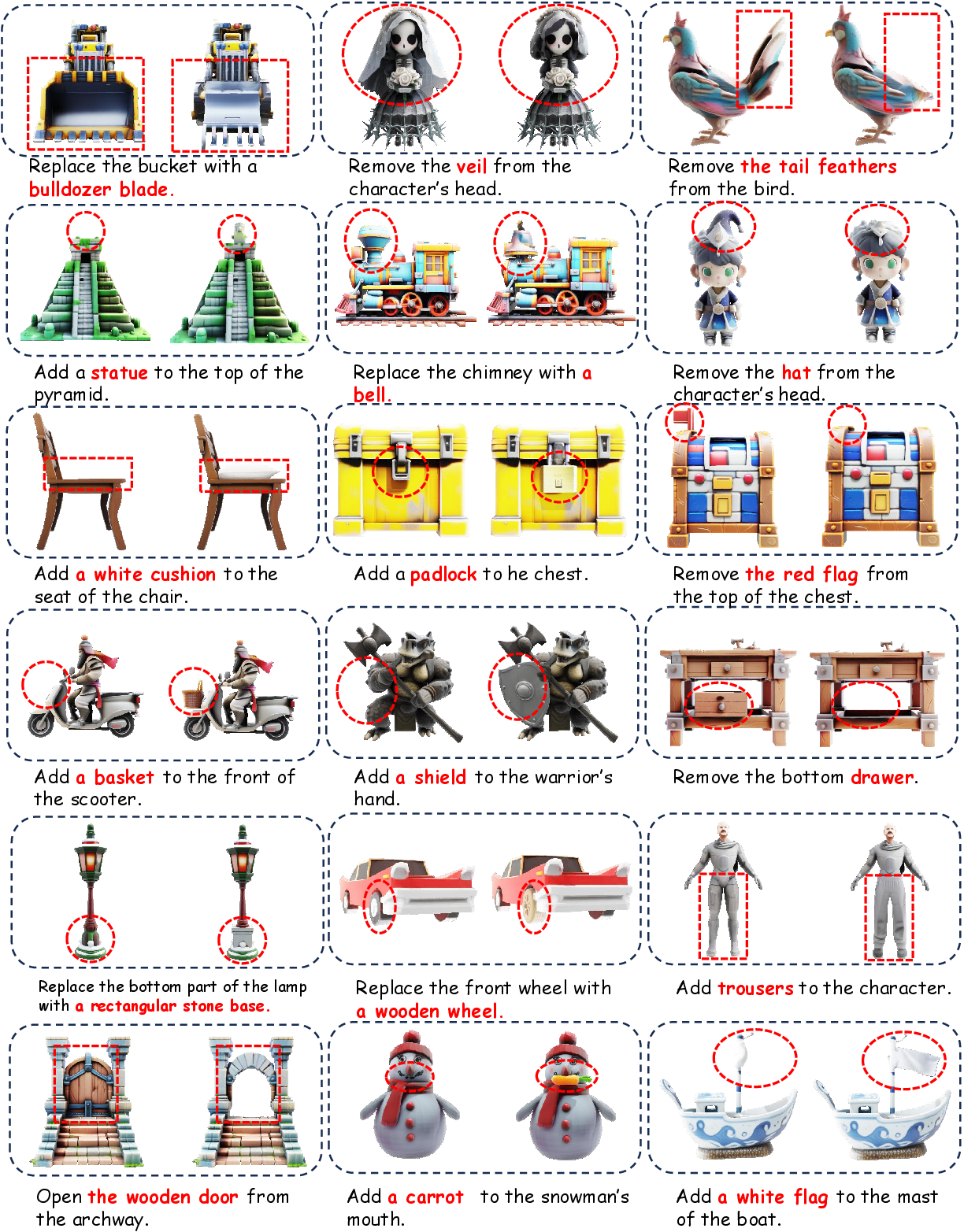

Abstract: 3D object editing is essential for interactive content creation in gaming, animation, and robotics, yet current approaches remain inefficient, inconsistent, and often fail to preserve unedited regions. Most methods rely on editing multi-view renderings followed by reconstruction, which introduces artifacts and limits practicality. To address these challenges, we propose Nano3D, a training-free framework for precise and coherent 3D object editing without masks. Nano3D integrates FlowEdit into TRELLIS to perform localized edits guided by front-view renderings, and further introduces region-aware merging strategies, Voxel/Slat-Merge, which adaptively preserve structural fidelity by ensuring consistency between edited and unedited areas. Experiments demonstrate that Nano3D achieves superior 3D consistency and visual quality compared with existing methods. Based on this framework, we construct the first large-scale 3D editing dataset, Nano3D-Edit-100k, which contains over 100,000 high-quality 3D editing pairs. This work addresses long-standing challenges in both algorithm design and data availability, significantly improving the generality and reliability of 3D editing, and laying the groundwork for the development of feed-forward 3D editing models. Project Page: https://jamesyjl.github.io/Nano3D

Explain it Like I'm 14

What is this paper about?

This paper introduces Nano3D, a new way to edit 3D objects (like game characters or toys) quickly and accurately without extra training or drawing masks. It lets you remove, add, or replace parts of a 3D model while keeping the rest exactly the same. The team also builds a huge dataset (over 100,000 examples) to help future systems learn 3D editing even better.

What questions does it try to answer?

In simple terms, the paper focuses on three questions:

- How can we edit only certain parts of a 3D object (like removing a dragon’s wings) without accidentally changing other parts?

- Can we do these edits fast, without retraining big AI models or painting detailed masks?

- Can we build a large, high-quality 3D editing dataset to train future “one-click” 3D editing tools?

How does Nano3D work?

Think of a 3D object like a sculpture made of tiny Lego bricks (called voxels). Nano3D edits this sculpture in two careful steps—first the shape, then the look (colors and textures)—and uses a “smart merging” trick to keep everything else unchanged.

Here are the main ideas, with everyday analogies:

1) FlowEdit: guiding the change smoothly

- Analogy: Imagine you’re turning one sketch into another by morphing it smoothly, so you don’t lose important structure.

- What it does: FlowEdit helps plan a path from the original object to the edited version using a source image (the object’s front view) and a target image (the edited front view). It avoids heavy “undo-then-redo” steps and instead “slides” the model toward the target in small, controlled moves.

- Why it helps: This keeps the structure stable and avoids messy artifacts.
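To make the "sliding" idea concrete, here is a minimal sketch of a FlowEdit-style update loop, loosely following the inversion-free scheme as it is usually described for FlowEdit: at each step the source is noised, a matching noisy target is formed with the same noise, and the edit state takes a small step along the difference between the target and source velocities. The `toy_velocity` function is a hypothetical stand-in (the exact rectified-flow velocity when the data is a single point); Nano3D instead queries the TRELLIS flow model conditioned on the source and target images. All names and the step count are illustrative assumptions.

```python
import random


def toy_velocity(z, t, x_cond):
    """Hypothetical stand-in for a flow model's velocity prediction.

    This is the exact rectified-flow velocity when the data distribution
    is the single point x_cond; a real system would call a neural network.
    """
    return [(zi - xi) / t for zi, xi in zip(z, x_cond)]


def flowedit_path(x_src, x_tar, num_steps=10, seed=0):
    """Return the list of edit states, from x_src toward x_tar."""
    rng = random.Random(seed)
    z_edit = list(x_src)  # the edit path starts at the source, not at noise
    path = [list(z_edit)]
    for i in range(num_steps, 0, -1):
        t, t_next = i / num_steps, (i - 1) / num_steps
        noise = [rng.gauss(0.0, 1.0) for _ in x_src]
        # Noisy version of the source at time t.
        z_src = [(1 - t) * xs + t * n for xs, n in zip(x_src, noise)]
        # Matching noisy target: same noise, shifted by the edit made so far.
        z_tar = [ze + zs - xs for ze, zs, xs in zip(z_edit, z_src, x_src)]
        v_src = toy_velocity(z_src, t, x_src)
        v_tar = toy_velocity(z_tar, t, x_tar)
        # Euler step along the velocity difference (target minus source).
        z_edit = [ze + (t_next - t) * (vt - vs)
                  for ze, vt, vs in zip(z_edit, v_tar, v_src)]
        path.append(list(z_edit))
    return path


path = flowedit_path([0.0, 1.0], [2.0, 1.0])
print(path[0], "->", path[-1])
```

In this toy setting the path ends at the target, and the coordinate where source and target already agree does not move, which is the intuition behind keeping unrelated structure stable.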

2) TRELLIS: the 3D generator under the hood

- Analogy: TRELLIS is like a two-part builder. First, it lays out which Lego bricks are used (the shape). Then, it attaches rich “blueprints” to those bricks that describe fine details and appearance.

- Two stages:

- Structure (ST): predicts which voxels (3D pixels/Legos) are occupied, forming the object’s basic shape.

- SLAT (Structured Latents): adds compact “instructions” to each occupied voxel to capture fine geometry and textures, which a decoder turns into the final 3D model.
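The two-stage layout can be pictured as a simple data structure: a set of occupied voxel coordinates, plus one small latent vector attached to each occupied voxel. The sketch below is only an illustration of that shape of data. The grid size, latent dimension, sphere-shaped "object", and both `predict_*` functions are made-up placeholders, not TRELLIS's actual models or settings.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple

Voxel = Tuple[int, int, int]


@dataclass
class StructuredLatents:
    """Occupied voxels, each carrying a local latent vector."""
    latents: Dict[Voxel, List[float]] = field(default_factory=dict)

    @property
    def occupied(self) -> Set[Voxel]:
        return set(self.latents)


def predict_structure(grid_size: int = 8) -> Set[Voxel]:
    """Stage 1 placeholder: mark the voxels inside a sphere as occupied."""
    center = (grid_size - 1) / 2
    radius_sq = (grid_size / 3) ** 2
    return {
        (x, y, z)
        for x in range(grid_size)
        for y in range(grid_size)
        for z in range(grid_size)
        if (x - center) ** 2 + (y - center) ** 2 + (z - center) ** 2 <= radius_sq
    }


def predict_latents(occupied: Set[Voxel], latent_dim: int = 4) -> StructuredLatents:
    """Stage 2 placeholder: attach a (here all-zero) latent to each occupied voxel."""
    return StructuredLatents({v: [0.0] * latent_dim for v in sorted(occupied)})


shape = predict_structure()
slat = predict_latents(shape)
print(len(slat.occupied), "occupied voxels out of", 8 ** 3)
```

Keeping latents only for occupied voxels is what makes the representation sparse: empty space costs nothing to store.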

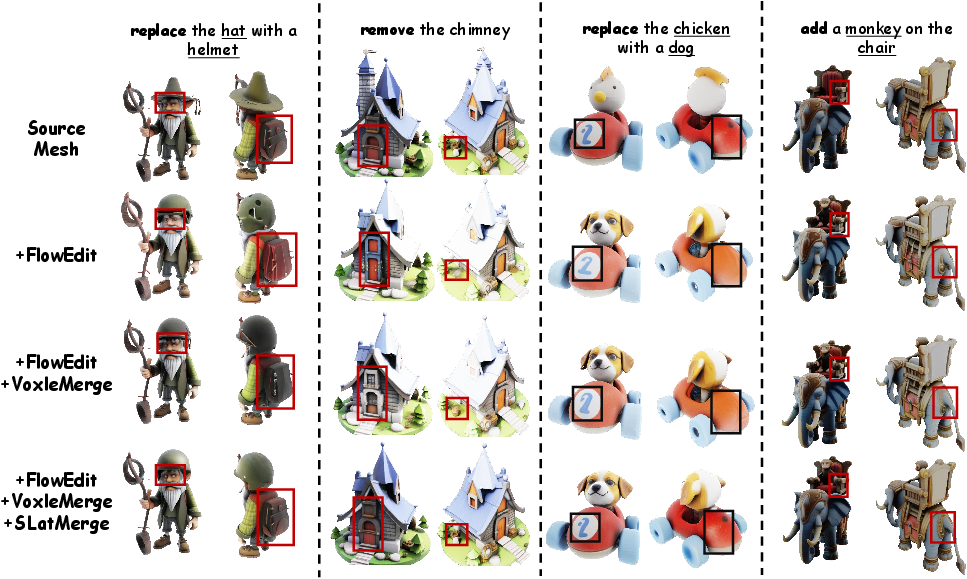

3) Voxel/Slat-Merge: smart cut-and-paste for reliability

- Problem: Even careful edits can accidentally nudge parts you didn’t want to change.

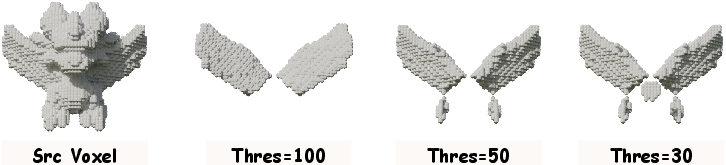

- Solution: After editing, Nano3D compares the new and old shapes to find only the regions that truly changed (like detecting where the wings used to be). It does this with:

- Voxel-Merge: selects only the meaningful changed chunks of voxels (using a “connected groups” check to ignore tiny, noisy changes), then swaps just those parts into the original shape.

- Slat-Merge: does the same kind of targeted merging for the detailed appearance “blueprints” (SLAT), so textures in unedited regions stay identical.

- Analogy: It’s like using a smart stencil: only the edited areas are replaced; everything else is preserved perfectly.
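The merge step described above can be sketched on sparse voxel sets: take the XOR of the old and new occupancy, group the changed voxels into connected components, keep only components that are large enough, and swap just those regions. This is a simplified illustration under stated assumptions, not the authors' implementation: the 6-neighborhood, the `min_size` threshold (standing in for the paper's threshold), and all function names are hypothetical choices.

```python
from collections import deque

# 6-neighborhood: voxels that share a face.
NEIGHBORS_6 = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def connected_components(voxels):
    """Split a set of (x, y, z) voxels into face-connected groups via BFS."""
    remaining = set(voxels)
    components = []
    while remaining:
        seed = remaining.pop()
        component, queue = {seed}, deque([seed])
        while queue:
            x, y, z = queue.popleft()
            for dx, dy, dz in NEIGHBORS_6:
                neighbor = (x + dx, y + dy, z + dz)
                if neighbor in remaining:
                    remaining.remove(neighbor)
                    component.add(neighbor)
                    queue.append(neighbor)
        components.append(component)
    return components


def edit_mask(src_occ, edit_occ, min_size):
    """Changed voxels (XOR), keeping only components with >= min_size voxels."""
    changed = src_occ ^ edit_occ
    mask = set()
    for component in connected_components(changed):
        if len(component) >= min_size:
            mask |= component
    return mask


def voxel_merge(src_occ, edit_occ, mask):
    """Use the edited occupancy inside the mask and the source elsewhere."""
    return (src_occ - mask) | (edit_occ & mask)


def slat_merge(src_latents, edit_latents, merged_occ, mask):
    """Per-voxel latents: edited inside the mask, original everywhere else."""
    return {v: (edit_latents[v] if v in mask else src_latents[v]) for v in merged_occ}


body = {(x, 0, 0) for x in range(5)}
wing = {(2, 1, 0), (2, 2, 0), (2, 3, 0)}
src = body | wing                      # original: body with a wing
edited = body | {(9, 9, 9)}            # edit: wing gone, plus one stray noisy voxel
mask = edit_mask(src, edited, min_size=2)
merged = voxel_merge(src, edited, mask)
print(sorted(merged))                  # the body only; the stray voxel is ignored
```

The hard swap keeps unedited voxels bit-identical to the source, at the cost of having no blending at the boundary of the mask.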

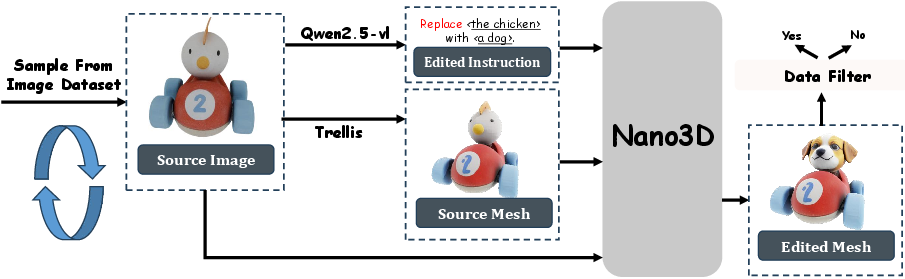

4) A simple pipeline for creating lots of training data

To build their 100k-example dataset (Nano3D-Edit-100k), they follow a practical recipe:

- Pick an image of a 3D object’s front view.

- Ask a vision-language model (VLM) to write a simple edit instruction (like “remove the wings” or “add a handle”).

- Rebuild the 3D object from the image using TRELLIS (so everything stays consistent).

- Create the edited target image using a strong 2D editor (e.g., Nano Banana or Flux-Kontext).

- Run Nano3D to convert the original 3D object into the edited 3D object that matches the edited image.

- Filter low-quality results automatically.
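The recipe above can be written as a small orchestration function. This is a sketch of the control flow only: every stage is passed in as a callable, and the parameter names (`write_instruction`, `reconstruct_3d`, `edit_image`, `edit_3d`, `passes_filter`) are hypothetical placeholders for the VLM, TRELLIS, the 2D editor, Nano3D, and the quality filter. None of them are real APIs of those tools.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass
class EditPair:
    instruction: str
    source_asset: Any
    edited_asset: Any


def build_edit_pair(
    front_view: Any,
    write_instruction: Callable[[Any], str],
    reconstruct_3d: Callable[[Any], Any],
    edit_image: Callable[[Any, str], Any],
    edit_3d: Callable[[Any, Any, Any], Any],
    passes_filter: Callable[[Any, Any, str], bool],
) -> Optional[EditPair]:
    """Run one pass of the data recipe; return None if the sample is filtered out."""
    instruction = write_instruction(front_view)           # VLM writes the edit text
    source_asset = reconstruct_3d(front_view)             # image -> 3D source asset
    target_view = edit_image(front_view, instruction)     # 2D editor makes the target image
    edited_asset = edit_3d(source_asset, front_view, target_view)  # 3D edit step
    if not passes_filter(front_view, target_view, instruction):    # automatic quality check
        return None
    return EditPair(instruction, source_asset, edited_asset)


# Usage with trivial stubs, just to show the data flow.
pair = build_edit_pair(
    "front.png",
    write_instruction=lambda img: "remove the wings",
    reconstruct_3d=lambda img: {"asset": img},
    edit_image=lambda img, text: img + "+edited",
    edit_3d=lambda asset, src, tgt: {"asset": tgt},
    passes_filter=lambda src, tgt, text: True,
)
print(pair)
```

Passing the stages in as callables keeps the sketch independent of any particular model; a production pipeline might also run the filter before the 3D edit to avoid wasted compute.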

What did they find?

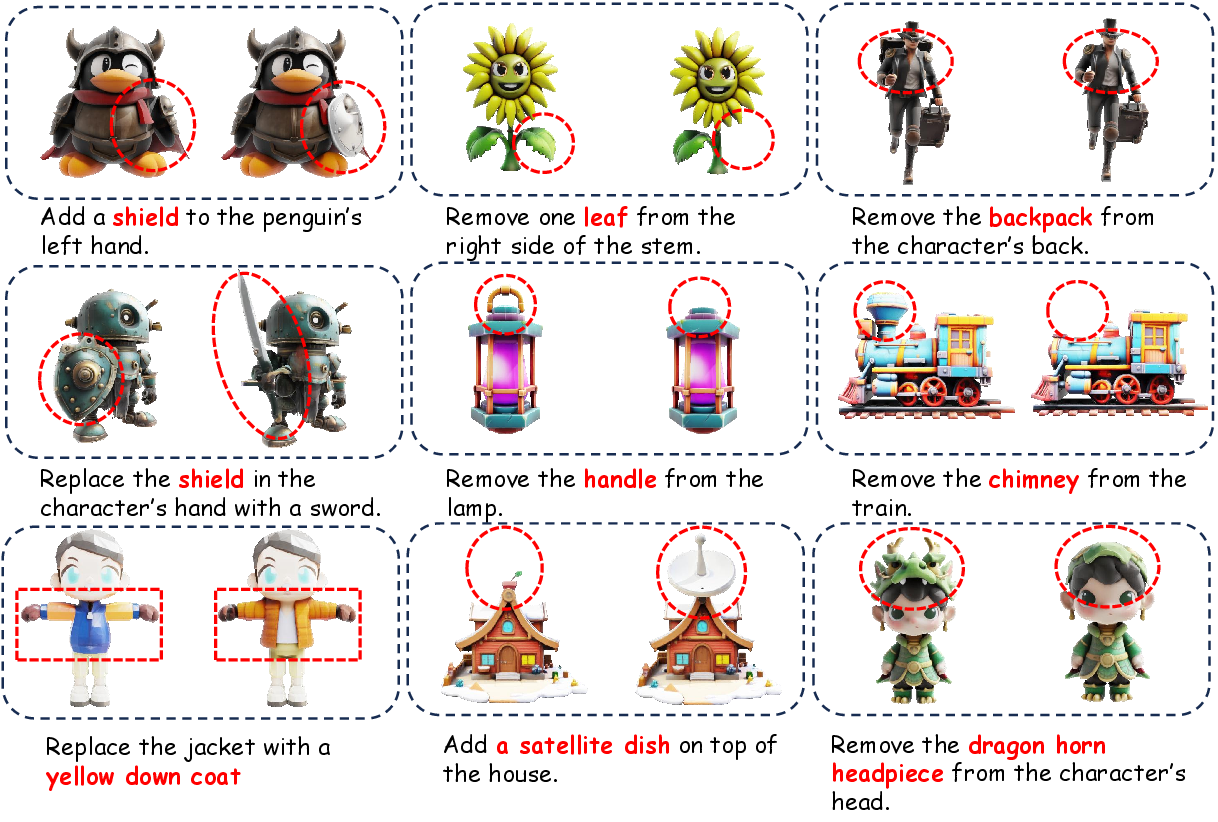

The authors show that Nano3D:

- Keeps unedited parts almost exactly the same across different views. In other words, it edits only what you asked for.

- Matches the edit instruction well (for example, if you say “remove the wings,” the wings are gone without harming the body).

- Produces clean, high-quality shapes and textures.

When compared to other methods:

- Tailor3D and Vox-E often blur shapes, misalign edits, or introduce artifacts.

- A strong 3D generator (TRELLIS) improves appearance edits, but Nano3D goes further by maintaining shape consistency better, especially for local, part-level edits.

The team also reports better scores on standard measures:

- Shape closeness (lower is better): Nano3D shows a smaller difference from the original in unedited areas.

- Instruction alignment (higher is better): Nano3D more closely matches the intended edit.

- Visual quality (lower FID is better): Nano3D renders look cleaner and more realistic.
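The shape-closeness score is Chamfer Distance (CD), computed between point sets. The sketch below shows one common form of it as a rough illustration: conventions differ (squared versus unsquared distances, sums versus means), and the exact variant and sampling used in the paper are not specified here, so treat the function as an assumption rather than the authors' evaluation code.

```python
import math


def chamfer_distance(points_a, points_b):
    """Symmetric Chamfer Distance using mean squared nearest-neighbor distances."""

    def mean_nearest_sq(src, dst):
        # For each point in src, squared distance to its closest point in dst.
        return sum(min(math.dist(p, q) ** 2 for q in dst) for p in src) / len(src)

    return mean_nearest_sq(points_a, points_b) + mean_nearest_sq(points_b, points_a)


original = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
slightly_moved = [(0.0, 0.0, 0.1), (1.0, 0.0, 0.1), (0.0, 1.0, 0.1)]
print(chamfer_distance(original, original))        # identical sets give zero
print(chamfer_distance(original, slightly_moved))  # small shift gives a small value
```

This brute-force version compares every pair of points, which is fine for small examples; real evaluations on dense point clouds typically use a spatial index or GPU batching.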

Finally, they release Nano3D-Edit-100k, the first large 3D editing dataset at this scale, and show it’s higher quality than previous 3D editing datasets.

Why does this matter?

- Faster, training-free editing: You don’t have to retrain giant models or paint masks—edits are quick and user-friendly.

- Reliable local changes: You can add, remove, or replace parts without ruining the rest of the model.

- Better tools ahead: The big dataset they created can help researchers train instant, feed-forward 3D editors (think: “type an instruction, get the result in real time”), similar to how image editing has evolved.

Bottom line

Nano3D is like a careful 3D surgeon: it makes precise edits where you want them and leaves everything else untouched. It combines a smooth editing path (FlowEdit), a strong 3D builder (TRELLIS), and a smart merge strategy (Voxel/Slat-Merge) to deliver clean, consistent results—no masks, no retraining. By also releasing a massive, high-quality dataset, the authors pave the way for the next generation of fast, reliable 3D editing tools used in games, animation, design, and robotics.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a single, focused list of what remains missing, uncertain, or unexplored in the paper, stated concretely to guide future research.

- The method currently supports only localized edits; handling global transformations (re-posing, scaling, large topology restructuring, scene-level edits) is not addressed.

- Editing is guided by a single front-view image; there is no multi-view or 3D-aware guidance, leaving consistency on occluded/back-facing surfaces unverified.

- The 2D image editing stage is the dominant failure source, yet there is no mechanism to detect or correct 2D-to-3D inconsistencies; joint 2D–3D editing or multi-view synthesis remains unexplored.

- Voxel/Slat-Merge relies on heuristic masks derived via XOR plus connected component selection with fixed neighborhood connectivity and threshold τ/k; sensitivity, robustness, and principled or learned mask selection are not studied.

- There is no formal analysis of how adapting FlowEdit to TRELLIS stage-1 voxel/latent space preserves 3D occupancy/topology; conditions under which velocity blending maintains structure remain unproven.

- SLat-Merge uses hard replacement (z_src ⊕ M) without boundary-aware blending or continuity constraints; potential seams or texture discontinuities are neither modeled nor measured.

- Evaluation relies on DINO-I and FID on rendered images; 3D-native metrics for edit correctness (e.g., part-level IoU, boolean operation accuracy), topology/manifoldness, normal/curvature preservation, and self-intersections are missing.

- Preservation of non-edited regions is measured only by global Chamfer Distance; there is no spatially localized metric, per-part analysis, or quantitative measure of edited-region goal achievement (precision/recall of “removed/added/replaced” parts).

- Results are reported on 100 cases; there is no large-scale evaluation across the 100k dataset, no per-category breakdown, nor analysis by edit type or edit magnitude.

- Efficiency claims lack standardized runtime/memory benchmarks for Nano3D itself across asset sizes; comparative latency with baselines is not reported.

- Portability beyond TRELLIS is not validated; whether FlowEdit+Merge generalizes to other 3D priors (e.g., Hunyuan3D, TripoSG, UniLat3D) is an open question.

- The impact of TRELLIS VAE reconstruction loss on fine geometry and texture fidelity (edited vs. unedited regions) is acknowledged but unquantified.

- Robustness is not evaluated on thin structures, high-frequency details, articulated/rigged objects, or edits that induce large topological changes (holes, tunnels).

- Mask-guided editing is shown only qualitatively; there is no quantitative comparison of controllability and accuracy versus mask-based baselines or user studies on interactive control.

- The merging strategy assumes clean XOR differences; failure modes under noisy occupancy, mis-registrations, partial overlaps, or cases requiring soft blending are not analyzed.

- The pipeline edits TRELLIS-reconstructed assets rather than original meshes; efficient and accurate SLAT inference for real-world meshes (without heavy multi-view rendering) remains unaddressed.

- Dataset instructions are constrained to three templates (add/remove/replace); linguistic diversity, compositionality, spatial reasoning, and complex constraints are not explored.

- Quality filtering uses VLM compliance on 2D images only; there is no 3D-aware validator to automatically check structural preservation in non-edited regions or correctness of edited parts.

- Nano3D-Edit-100k stores SLAT and voxel sums but omits explicit meshes and part-level annotations; this limits training/evaluation for methods requiring mesh topology or semantic parts.

- No mechanisms enforce symmetry/global consistency (e.g., bilateral edits) or propagate edits around the 360° view; how to maintain global coherence is open.

- Safety, licensing, and bias issues for internet-sourced assets and auto-generated instructions are not discussed; dataset release details (splits, metadata, seeds, reproducibility) are limited.

- Hyperparameters (n_max, n_min, n_avg, CFG scales, λ, τ, neighborhood connectivity) are fixed; there is no sensitivity analysis or auto-tuning strategy per asset/instruction.

- The effect of using multi-view edited images versus a single view is not ablated; the role of DINOv2 features and alternative backbones in stage-2 conditioning is unstudied.

- Path planning in FlowEdit (source/target velocity weighting) lacks criteria or adaptation to user intent (edit magnitude vs. source preservation); learning/edit-aware weighting remains an open design question.

Practical Applications

Below is an overview of practical, real-world applications enabled by the paper’s contributions—training-free 3D editing without masks (Nano3D), the region-aware Voxel/Slat-Merge strategy, integration with TRELLIS reflow generation, and the Nano3D-Edit-100k dataset. Applications are grouped by deployment timeline and include sector links, emergent tools/workflows, and key assumptions/dependencies.

Immediate Applications

These can be implemented today with available models (TRELLIS, Nano3D, FlowEdit, Nano Banana/Flux-Kontext) and moderate engineering effort.

- Gaming, VFX, and Animation

- Use case: Fast, localized 3D asset touch-ups (remove/add/replace parts), while preserving unedited regions and multi-view consistency; e.g., remove wings from a creature, add clips to a backpack, swap a sword hilt.

- Tools/workflows: Blender/Maya/Unreal/Unity plugins that send the selected mesh + text instruction + front-view to a Nano3D service; batch asset retouching pipelines for studios; QA gates using Chamfer Distance to verify non-edited region integrity.

- Dependencies: Access to TRELLIS and Nano3D; GPU inference; reliable front-view image edits (Nano Banana/Flux-Kontext); primarily localized edits (global re-shaping limited); conversion from SLAT to GLB/mesh (Flexicube).

- E-commerce and Product Visualization

- Use case: Create product variants on the fly (e.g., remove a logo, add a strap, swap handles) for configurators and A/B testing with consistent geometry across views.

- Tools/workflows: Web configurator backend calling Nano3D; asset libraries stored as SLAT for quick edit→decode; automated compliance passes to remove marks or prohibited features.

- Dependencies: High-fidelity appearance consistency in unedited areas; dimension tolerances; SLAT→mesh conversion time; legal clearance for edited variants.

- Robotics Simulation and Training

- Use case: Rapid modification of simulation assets (add graspable handles, remove protrusions, swap attachments) while retaining consistent multi-view geometry for perception pipelines.

- Tools/workflows: ROS/Unity-based sim integration that edits props via text instructions; automated scenario generation using Nano3D-Edit-100k-derived templates; integrity checks using CD/FID.

- Dependencies: Edits are geometric/visual only—material and physics parameters must be updated separately; localized edits favored; accurate mapping from shape change to physics.

- Digital Twins and AEC (Architecture, Engineering, Construction)

- Use case: Minor retrofits to equipment models (add sensor mount, remove bracket, replace cover) without mask authoring, preserving surrounding geometry.

- Tools/workflows: CAD/DCC bridge to SLAT; asset-management systems invoking Nano3D for maintenance updates; versioning with CD-based integrity audits.

- Dependencies: Precision requirements (TRELLIS VAE reconstruction loss may be unacceptable for tight tolerances); limited scope to part-level edits; format conversion to BIM/CAD.

- Content Moderation and Compliance for 3D Asset Libraries

- Use case: Batch removal or replacement of restricted elements (e.g., trademarks, unsafe sharp features) across large libraries with proof of non-edited region preservation.

- Tools/workflows: Automated edit pipelines with instruction templates (Add/Remove/Replace); compliance dashboards reporting CD/DINO-I/FID.

- Dependencies: Accurate instruction-to-image edits (front-view is a bottleneck); human-in-the-loop review; asset licensing constraints.

- Education (3D Modeling Courses and Workshops)

- Use case: Teaching 3D editing via text instructions and mask-free workflows; assignments demonstrating localized edits and validation with CD/DINO-I.

- Tools/workflows: Classroom notebooks/Colab using Nano3D; curated asset packs; lecture demos comparing baselines (SDS, multi-view editing) to Nano3D.

- Dependencies: GPU access; simplified assets; basic understanding of SLAT and voxel representations.

- Academic Dataset Curation and Benchmarking

- Use case: Immediate use of Nano3D-Edit-100k to train and benchmark new feed-forward 3D editing models; quantitative evaluation with CD, DINO-I, FID; ablation studies on Voxel/Slat-Merge and thresholds.

- Tools/workflows: Training loops on SLAT (stored representations reduce compute); benchmarking scripts; instruction generation with Qwen2.5-VL templates (Add/Remove/Replace).

- Dependencies: Dataset licensing and ethical use; compute budgets; reproducibility across alternative reflow models.

- AR/VR Avatar and Filter Personalization

- Use case: Quickly adjust or personalize avatar accessories and props (add a hat, remove a visor, replace jewelry) with consistent multi-view rendering in AR.

- Tools/workflows: Lens Studio/ARKit pipelines calling Nano3D; export to engine-ready meshes; optional user text input as instruction.

- Dependencies: Rigging/skin weights are not edited—manual retargeting still needed; front-view alignment; mobile runtime constraints.

- 3D Printing Prep

- Use case: Small functional edits to printable models (add a tab, remove a notch, replace a clip) with preserved geometry elsewhere; pre-print validation via CD.

- Tools/workflows: Slicer integration; SLAT→watertight mesh pipeline; automated checks for manifoldness post-edit.

- Dependencies: Mesh cleanup steps; tolerances for fitting; Flexicube conversion time and quality.

- Asset QA and Governance

- Use case: Establish an automated QA step ensuring that edits are localized—compare pre/post meshes with CD on non-edited regions; log DINO-I and FID for quality tracking.

- Tools/workflows: CI pipelines for asset builds; dashboards with thresholds (e.g., τ for Voxel/Slat-Merge).

- Dependencies: Threshold tuning by asset class; agreement on acceptance metrics; version control.

Long-Term Applications

These require further research, scaling, integration, or new models trained on Nano3D-Edit-100k.

- Real-Time, Feed-Forward 3D Editing Models

- Use case: Millisecond-level localized edits directly in DCC tools or game engines, trained on Nano3D-Edit-100k; interactive “type-to-edit” experiences.

- Tools/workflows: DiT-based feed-forward 3D editors; on-device models for artists; inference caches for asset libraries.

- Dependencies: Large-scale supervised training; generalization across categories and materials; hardware acceleration.

- Scene-Level Editing and Part-Aware Design

- Use case: Editing multiple objects or semantic parts in complex scenes (e.g., move/replace room fixtures, edit assemblies) with cross-object consistency and no masks.

- Tools/workflows: Integration with part-aware generation (e.g., OmniPart/PartGen); multi-object Voxel/Slat-Merge; spatial constraints and collision awareness.

- Dependencies: Robust part segmentation/semantics; expanded merging strategies; scene physics and layout constraints.

- Consumer-Grade Mobile 3D Editors

- Use case: Smartphone apps for quick 3D customization (toys, figurines, avatars) using text edits; instant previews in AR.

- Tools/workflows: Compressed/quantized reflow models; edge inference; simplified SLAT codecs for mobile storage.

- Dependencies: Model distillation and optimization; energy-efficient hardware; UX for 3D edits.

- Robotics: On-the-Fly Tool/Prop Retargeting

- Use case: Real-time shape adjustments to objects for better grasp/manipulation; generate tool variations and test in simulation.

- Tools/workflows: Closed-loop integration with grasp planners and physics engines; property estimation from edited geometry.

- Dependencies: Mapping shape edits to physical affordances and safety; certification for industrial robots.

- Healthcare and Biomed

- Use case: Editable anatomical models or prosthetic prototypes (add/remove localized features) with multi-view consistency for pre-surgical planning.

- Tools/workflows: Medical imaging→SLAT pipeline; clinical review tools using CD/DINO-I as quality signals; audit logs of edits.

- Dependencies: Regulatory approvals; clinical-grade accuracy beyond VAE constraints; validated workflows for patient safety.

- Manufacturing/CAD Co-Pilot

- Use case: Bridging parametric CAD with free-form mask-free edits (localized modifications that preserve surrounding geometry); rapid design iteration.

- Tools/workflows: CAD plug-ins that round-trip between CAD and SLAT; constraint-repair after free-form edits; tolerance-aware merging.

- Dependencies: Reliable constraint solving; high-precision decoding; integration with PLM systems.

- Cultural Heritage and Restoration

- Use case: Inpainting and restoration of damaged artifacts (localized additions/removals) maintaining consistency across views for archival purposes.

- Tools/workflows: Expert-in-the-loop restoration tools; provenance tracking and edit explainability; museum-grade QA metrics.

- Dependencies: Curatorial oversight; reproducibility standards; ethical restoration policies.

- Policy and Standards for 3D Editing Quality

- Use case: Establish benchmarks and acceptance criteria (CD, DINO-I, FID) for mask-free 3D editing; dataset documentation standards (SLAT format, voxel diffs).

- Tools/workflows: Open consortiums for 3D editing audits; transparent reporting pipelines; licensing schemas for derivative assets.

- Dependencies: Community adoption; IP rights and attribution mechanisms; responsible release of large 3D datasets.

- Licensing-Aware Asset Marketplaces

- Use case: Marketplaces that permit controlled localized edits (e.g., approved variants) with automated compliance checks and provenance.

- Tools/workflows: Policy-compliant Nano3D services; audit trails; automatic rejection of non-local edits exceeding CD thresholds.

- Dependencies: Rights management infrastructure; standardized edit logs; legal frameworks for derivatives.

- Energy/Infrastructure Digital Twin Maintenance

- Use case: Mask-free localized updates to equipment twins (e.g., adding sensor housings, removing obsolete features) with minimal scene disturbance.

- Tools/workflows: Twin platform integrations; SLAT repositories for assets; governance dashboards tracking CD-based integrity.

- Dependencies: High accuracy and alignment with engineering specs; safety validation; integration with BIM/SCADA data.

Assumptions and dependencies common across applications:

- Access to pretrained TRELLIS (or comparable reflow model), Nano3D pipeline, and an image editor (Nano Banana or Flux-Kontext) to produce reliable front-view edits.

- Compute requirements (GPUs) and conversion from SLAT to explicit meshes (Flexicube) with acceptable runtime and quality.

- Stronger performance on localized edits; global structural changes and rigging/animation are out of scope in the current framework.

- Thresholds and connectivity choices in Voxel/Slat-Merge (e.g., τ, 6/18/26-neighborhood) affect edit localization and quality; may need per-category tuning.

- Licensing/IP considerations for dataset use and edited assets; potential need for human oversight in compliance-sensitive workflows.

Glossary

- Autoregressive (AR) models: Generative models that produce outputs in an ordered sequence, enabling structured 3D synthesis. "autoregressive (AR) models~\cite{weng2024pivotmesh,zhao2025deepmeshautoregressiveartistmeshcreation,chen2024meshanythingv2, wei2025octgptoctreebasedmultiscaleautoregressive, chen2025autopartgenautogressive3dgeneration} enable fine-grained conditional control and structured synthesis through ordered generation."

- Chamfer Distance (CD): A metric that measures the distance between two point sets, commonly used to evaluate 3D shape similarity. "we assess non-edited regions against the original 3D object using Chamfer Distance (CD)~\cite{fan2017cd}."

- Classifier-Free Guidance (CFG): A technique to adjust conditional generation strength in diffusion/flow models by scaling guidance terms. "The CFG guidance scales for and are set to 1.5 and 5.5, respectively"

- CLIPScore: A text–image alignment metric based on CLIP embeddings. "evaluate text–image alignment using CLIPScore~\cite{hessel2021clipscore}"

- Connectivities (6/18/26-neighborhoods): Voxel neighborhood definitions used to identify connected components in 3D grids. "Under different connectivities (e.g., 6/18/26-neighborhoods), is decomposed to several components"

- DINO-I: An image–image alignment metric derived from DINO features to quantify semantic adherence. "we employ the DINO-I~\cite{caron2021dinoi} metric to quantify adherence to the target edited image."

- DINOv2: A pretrained vision transformer used to extract robust image features. "DINOv2~\cite{oquab2023dinov2} features"

- FID: Fréchet Inception Distance; evaluates generative image quality and diversity via feature distribution comparisons. "we use FID~\cite{heusel2017fid} on rendered multi-view images to measure fidelity and diversity."

- Flexicube: A post-processing module for converting latent 3D representations to explicit meshes, noted for high computational cost. "the predominant computational cost arose from the Flexicube module, which consumed nearly 4.5 minutes per pair"

- Flow Transformer: The flow-based backbone in TRELLIS Stage 1 that updates geometry via learned velocity fields. "Stage 1 modifies geometry via Flow Transformer with FlowEdit"

- FlowEdit: A training-free, inversion-free image editing algorithm for flow models that constructs an ODE path between source and target. "FlowEdit is a text-guided image editing method tailored for text-to-image flow models."

- GLB: The binary GLTF 3D mesh format for efficient asset storage and exchange. "explicit GLB meshes"

- Large reconstruction models (LRMs): Models that reconstruct 3D assets from images, often used after multi-view editing. "with large reconstruction models (LRMs)~\cite{qi2024tailor3d, chen2024mvedit, barda2025instant3dit, huang2025edit360, erkocc2025preditor3d, bar2025editp23, zheng2025pro3deditor, li2025cmd, gao20243d}"

- Multi-view editing then reconstruction: A pipeline that edits multiple rendered views and reconstructs the 3D object, often causing inconsistencies. "the ``multi-view editing then reconstruction'' paradigm~\cite{qi2024tailor3d}"

- Occupancy: Whether a voxel cell is filled by the object, used to form sparse 3D structures. "a voxel grid is employed to estimate occupancy"

- Ordinary Differential Equation (ODE) trajectory: A continuous path in latent space computed by integrating velocity fields for editing transformations. "FlowEdit constructs an ordinary differential equation (ODE) trajectory in the latent space from the source prompt to the target prompt."

- Rectified flows (reflows): Flow-based generative models that straighten sampling trajectories for fast, high-fidelity synthesis. "rectified flows (reflows)~\cite{liu2022rectifiedflow}"

- RePaint-based method: An inpainting approach used to edit images by conditioning on known regions. "TRELLIS, which leverages a RePaint-based method."

- Score Distillation Sampling (SDS): An optimization technique that distills scores from 2D diffusion models to guide 3D representation updates. "such as those based on Score Distillation Sampling (SDS)~\cite{sella2023voxe}"

- SLat-Merge: A mask-based merging strategy that combines edited and original structured latents to preserve appearance consistency. "we further introduce SLat-Merge."

- SLAT (Structured Latents): A 3D representation comprising activated voxels each with a local latent vector encoding geometry and appearance. "Structured Latents (SLAT) – a set of activated voxels, each associated with a local latent vector"

- SLAT-VAE decoder: The decoder that reconstructs full 3D assets from SLAT representations. "a powerful SLAT-VAE decoder reconstructs the complete 3D asset"

- Sparse Flow Transformer: The flow model in TRELLIS Stage 2 that predicts structured latents for the occupied voxels. "Stage 2 generates structured latents with Sparse Flow Transformer"

- Sparse Latent (SLAT) stage: The second TRELLIS stage that infers local latent vectors for previously detected voxels. "In the Sparse Latent (SLAT) stage, local latent vectors are further inferred"

- Structure Prediction (ST) stage: The first TRELLIS stage that predicts voxel occupancy to form a sparse structure. "In the Structure Prediction (ST) stage, a voxel grid is employed to estimate occupancy"

- TRELLIS: A reflow-based 3D generative framework that disentangles geometry and appearance in a unified latent space. "Trellis represents a 3D asset using Structured Latents (SLAT)"

- Triplanes: A 3D representation that uses three orthogonal feature planes to bridge 2D and 3D modeling. "or directly manipulate triplanes as a bridge between 2D and 3D"

- VAE: Variational Autoencoder; used to encode/decode structured latent representations of 3D assets. "encoded into a structured latent representation via a VAE~\cite{kingma2013vae}"

- Velocity field: The vector field in flow models that defines how latents evolve during generation or editing. "By leveraging a weighted combination of the source and target velocity fields"

- ViLT R-Precision: A retrieval-based metric using ViLT to assess text–image alignment via ranked precision. "ViLT R-Precision~\cite{kim2021vilt}"

- Vision-language model (VLM): A model that jointly understands images and text, used to auto-generate editing instructions. "An editing instruction is automatically generated using the vision-language model Qwen-VL-2.5~\cite{bai2025qwen25vltechnicalreport}"

- Voxel-Merge: A region-aware merging method that transfers edited voxel components while preserving unedited regions. "we introduce a region-aware merging strategy, Voxel-Merge"
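For readers who prefer formulas, a few of the flow-related glossary entries (Classifier-Free Guidance, rectified flows, ODE trajectory, velocity field) have compact standard forms. The notation below is an assumption chosen for illustration ($v_\theta$ for the learned velocity field, $c$ for the condition, $\varnothing$ for the empty condition, $s$ for the guidance scale, $z_0$ for data, $\epsilon$ for noise) and is not taken from the paper.

```latex
% Classifier-free guidance applied to a velocity prediction, with guidance scale s:
\hat{v}_\theta(z_t, t, c) = v_\theta(z_t, t, \varnothing)
  + s\,\bigl(v_\theta(z_t, t, c) - v_\theta(z_t, t, \varnothing)\bigr)

% Rectified-flow interpolation between data z_0 and noise \epsilon,
% and the ODE defined by the learned velocity field:
z_t = (1 - t)\,z_0 + t\,\epsilon, \qquad
\frac{\mathrm{d}z_t}{\mathrm{d}t} = v_\theta(z_t, t, c)

% One Euler step of that ODE from time t_i to an earlier time t_{i-1} < t_i:
z_{t_{i-1}} = z_{t_i} + (t_{i-1} - t_i)\,v_\theta(z_{t_i}, t_i, c)
```

With $s = 1$ the guided velocity reduces to the plain conditional prediction; larger $s$ pushes the result further toward the condition.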
