- The paper presents NetMasquerade, a novel hard-label black-box attack that combines Traffic-BERT pretraining with RL-based adversarial generation.

- The method mimics benign traffic patterns by encoding packet size and IPD sequences, achieving an average success rate above 96% with minimal modifications.

- Experiments show high throughput and low perturbation overhead, and the attack remains effective against traditional feature-space defenses, which calls their adequacy into question.

Hard-Label Black-Box Evasion Attacks on ML-Based Malicious Traffic Detection

Introduction and Threat Model

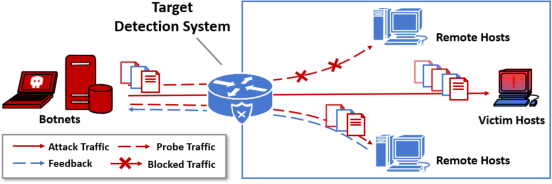

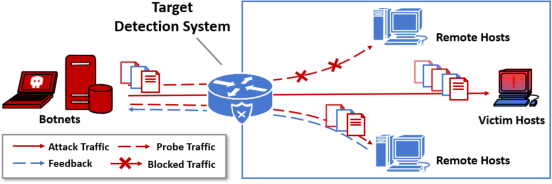

The paper presents NetMasquerade, a hard-label black-box evasion attack targeting ML-based malicious traffic detection systems. The threat model assumes an external adversary who controls botnets and delivers malicious traffic through an in-line ML-based detector at the victim's ingress link. The attacker has no access to model internals, parameters, or training data, and observes only binary pass/fail feedback obtained by probing the system. This setting reflects real-world deployments in which detection systems are closed-source or cloud-hosted and expose only minimal feedback through network responses.

Figure 1: Network topology illustrating the adversary's interaction with the in-line ML-based detection system.

NetMasquerade Architecture

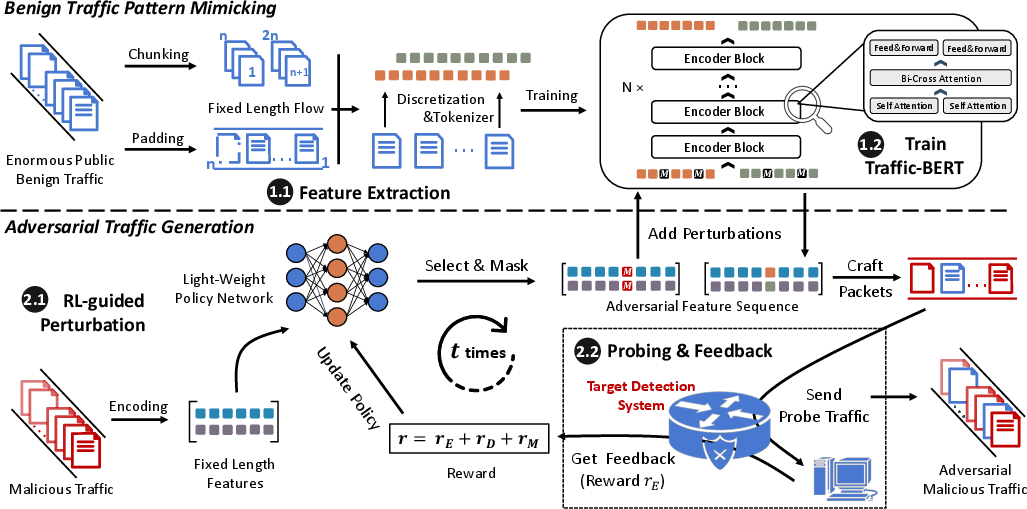

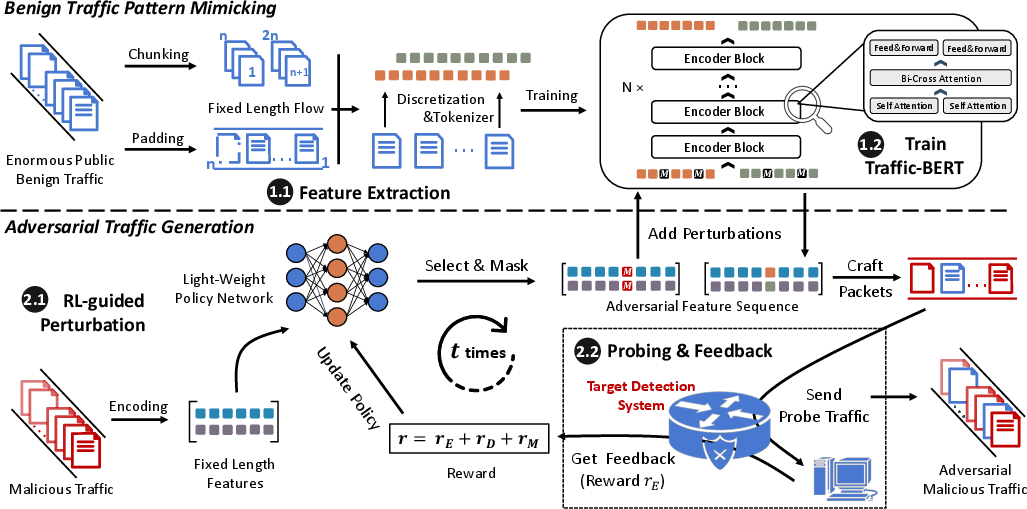

NetMasquerade is a two-stage framework:

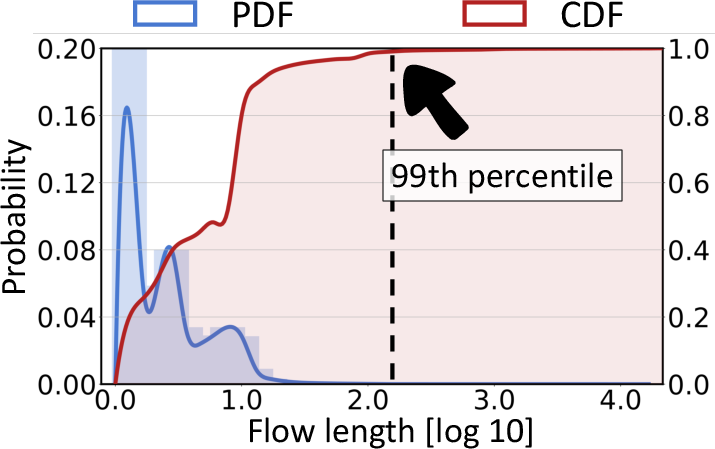

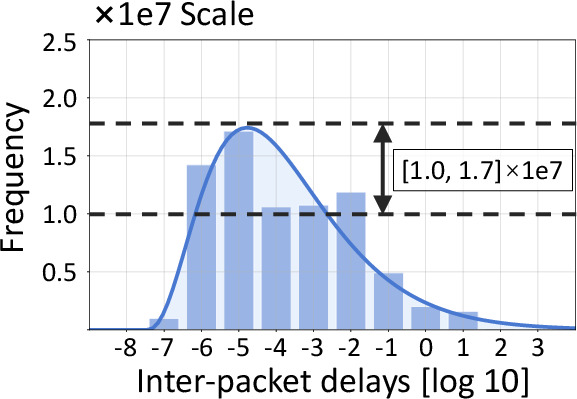

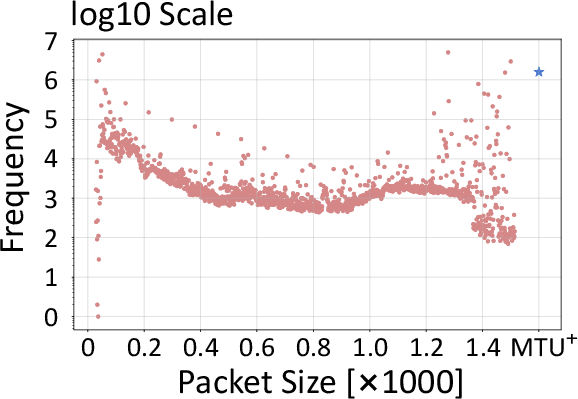

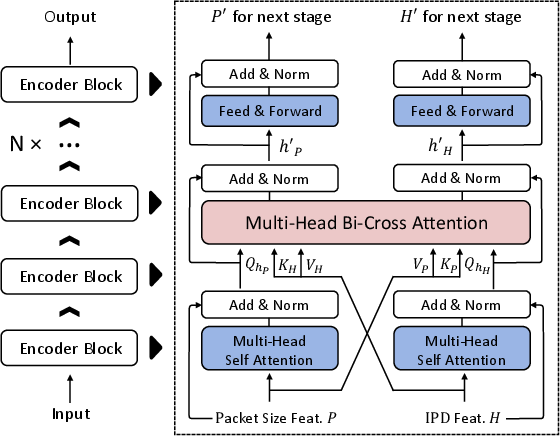

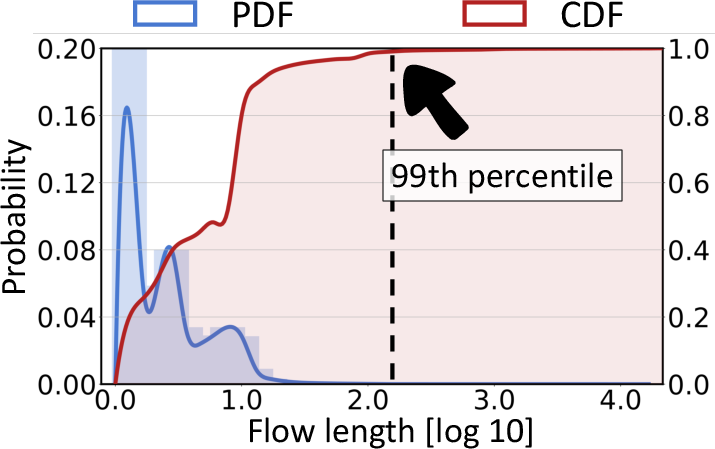

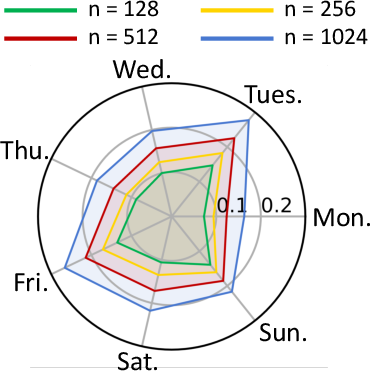

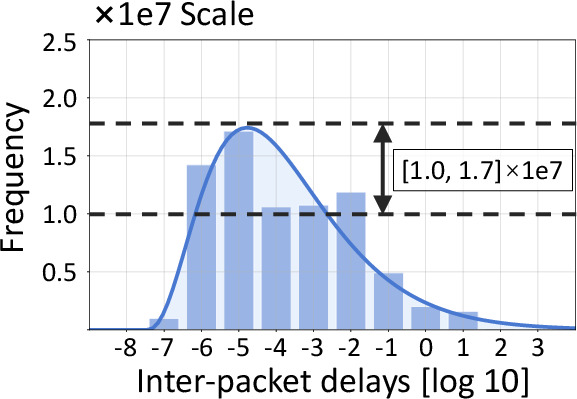

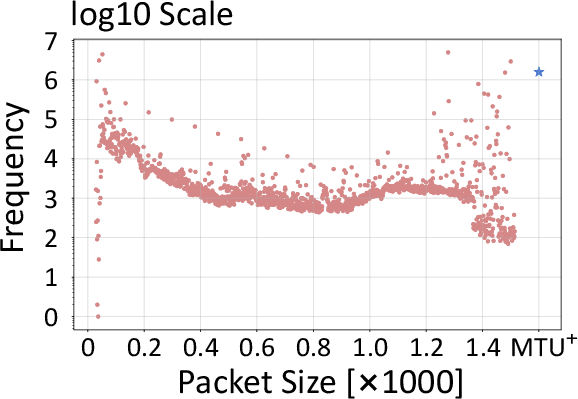

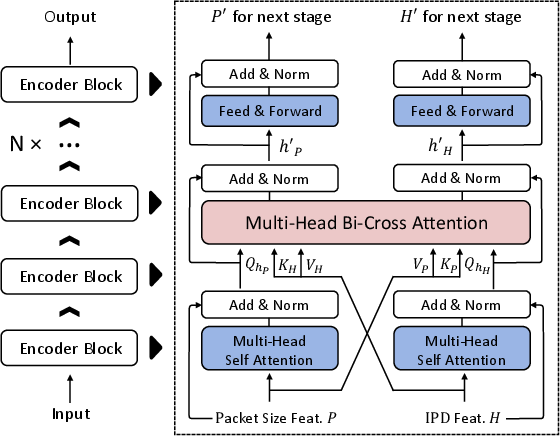

- Benign Traffic Pattern Mimicking: Traffic-BERT, a BERT-based model with a network-specialized tokenizer and bi-cross attention, is pre-trained to capture complex benign traffic patterns from public datasets. It encodes packet size and inter-packet delay (IPD) sequences, using padding and chunking to handle variable-length flows and logarithmic binning for IPDs.

- Adversarial Traffic Generation: An RL agent manipulates malicious traffic to mimic benign patterns with minimal modifications. The RL process is formulated as a finite-horizon MDP, where states are packet feature sequences, actions are packet modifications or chaff insertions, and rewards combine evasion success, dissimilarity penalty (edit distance), and effectiveness penalty (preserving attack semantics).
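The preprocessing described for Traffic-BERT (logarithmic binning of IPDs, plus chunking and padding of variable-length flows) can be sketched as follows. This is a minimal illustration, not the paper's tokenizer: the bin resolution, the IPD clipping range, the chunk length, and the `PAD` id are all assumed values chosen for the example.

```python
import math

PAD = 0  # hypothetical padding token id; real token ids start at 1


def ipd_to_token(ipd_seconds, bins_per_decade=4, min_ipd=1e-6, max_ipd=10.0):
    """Map an inter-packet delay to a logarithmic bin index (assumed scheme)."""
    # Clip to the assumed range so that extreme delays share the edge bins.
    clipped = min(max(ipd_seconds, min_ipd), max_ipd)
    # Each decade of delay is divided into `bins_per_decade` bins.
    return 1 + int(math.log10(clipped / min_ipd) * bins_per_decade)


def chunk_and_pad(tokens, chunk_len):
    """Split a flow into fixed-length chunks and pad the last chunk with PAD."""
    chunks = [tokens[i:i + chunk_len] for i in range(0, len(tokens), chunk_len)]
    if chunks:
        chunks[-1] = chunks[-1] + [PAD] * (chunk_len - len(chunks[-1]))
    return chunks


# Usage: tokenize the IPDs of a short flow, then cut it into chunks of 4.
ipds = [0.0002, 0.003, 0.05, 0.0004, 1.2]
ipd_tokens = [ipd_to_token(d) for d in ipds]
print(chunk_and_pad(ipd_tokens, chunk_len=4))
```

Logarithmic bins give fine resolution to the short delays that dominate most flows while still covering long idle gaps with only a handful of tokens.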

Figure 2: High-level design of NetMasquerade, showing the two-stage pipeline.

Traffic-BERT: Benign Pattern Modeling

Traffic-BERT extends transformer architectures to multi-feature network traffic modeling. It introduces bi-cross attention to fuse packet size and IPD sequences, enabling the model to learn cross-modal dependencies. Training uses a Mask-Fill task, masking 15% of tokens in both sequences and requiring the model to reconstruct them, thus learning to generate realistic benign traffic features.
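As a rough illustration of the Mask-Fill objective, the sketch below masks about 15% of the tokens in each of the two sequences and records the originals as reconstruction targets. The `MASK` id, the independent per-token masking, and the separate masking of the two sequences are assumptions made for the example; the summary does not specify these details.

```python
import random

MASK = -1  # hypothetical mask token id


def mask_tokens(tokens, mask_prob=0.15, rng=None):
    """Return (masked_sequence, labels); labels are None at unmasked positions."""
    rng = rng or random.Random()
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK)   # the model must reconstruct this position
            labels.append(tok)    # ground-truth token used by the loss
        else:
            masked.append(tok)
            labels.append(None)   # position is ignored by the loss
    return masked, labels


# Usage: mask the packet-size and IPD token sequences of one flow.
rng = random.Random(0)
size_tokens = [60, 1500, 1500, 52, 1500, 40, 1500, 1500]
ipd_tokens = [3, 9, 9, 14, 9, 17, 9, 9]
masked_sizes, size_labels = mask_tokens(size_tokens, rng=rng)
masked_ipds, ipd_labels = mask_tokens(ipd_tokens, rng=rng)
```

Because either sequence can be masked at a given position, the model can use the visible feature (for example, the IPD) to help reconstruct the hidden one (the packet size), which is the kind of cross-feature dependency the bi-cross attention is meant to capture.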

Figure 3: Flow length distribution and entropy ratios, motivating the padding/chunking strategy for variable-length flows.

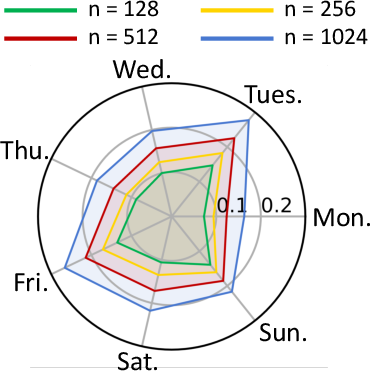

Figure 4: Packet size and IPD distribution in benign traffic, informing the tokenization and embedding design.

Figure 5: Core design of Traffic-BERT, highlighting the bi-cross attention mechanism for multi-feature fusion.

RL-Based Adversarial Traffic Generation

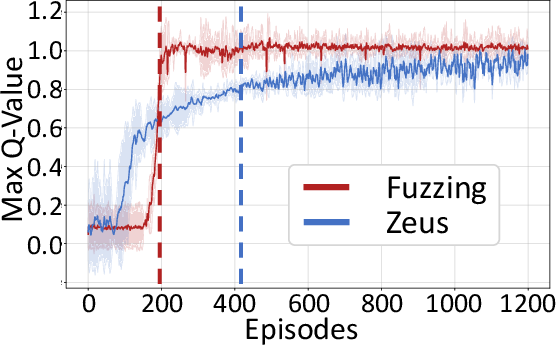

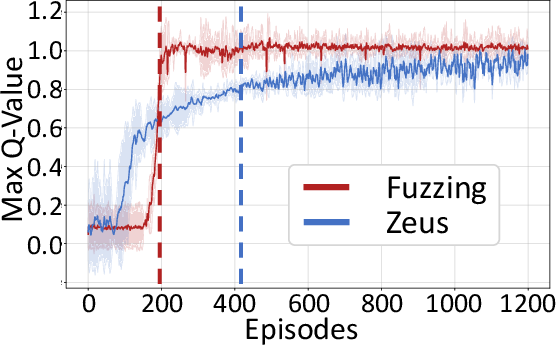

The RL agent uses GRU-based policy and Q-networks, optimized with Soft Actor-Critic (SAC) to balance exploration and exploitation. Actions are restricted to domain-agnostic modifications (packet size changes, IPD changes, and chaff insertion) so that attack functionality is preserved. Invalid action masking ensures that only feasible modifications are considered. The reward function penalizes excessive modifications and loss of attack effectiveness, driving the agent toward minimal, stealthy perturbations.
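Two of the ingredients above, the composite reward and invalid action masking, can be sketched in a few lines. This is an illustrative sketch only: the linear form of the reward, the weights `beta` and `gamma`, and the use of a masked softmax over discrete action logits are assumptions, not details confirmed by the paper.

```python
import math


def reward(evaded, edit_distance, effectiveness_loss, beta=0.1, gamma=1.0):
    """Evasion bonus minus dissimilarity and effectiveness penalties (assumed form)."""
    bonus = 1.0 if evaded else 0.0
    return bonus - beta * edit_distance - gamma * effectiveness_loss


def masked_softmax(logits, valid):
    """Softmax over action logits in which invalid actions get probability 0."""
    masked = [l if ok else -math.inf for l, ok in zip(logits, valid)]
    top = max(masked)
    if top == -math.inf:
        raise ValueError("at least one action must be valid")
    exps = [math.exp(l - top) for l in masked]  # exp(-inf) evaluates to 0.0
    total = sum(exps)
    return [e / total for e in exps]


# Usage: three candidate actions, the second one infeasible for this flow.
probs = masked_softmax([1.0, 2.0, 3.0], [True, False, True])
print(probs)
print(reward(evaded=True, edit_distance=2, effectiveness_loss=0.0))
```

Masking at the logit level means the agent never samples an infeasible modification, so no probes are wasted on actions that could not be applied to the flow.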

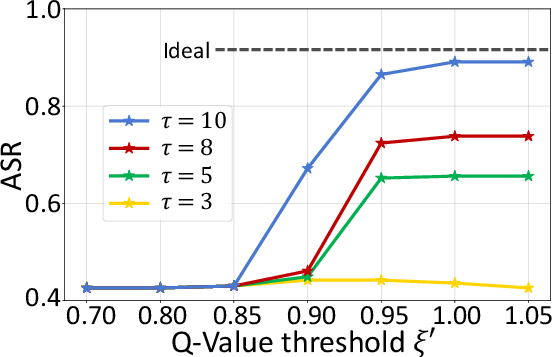

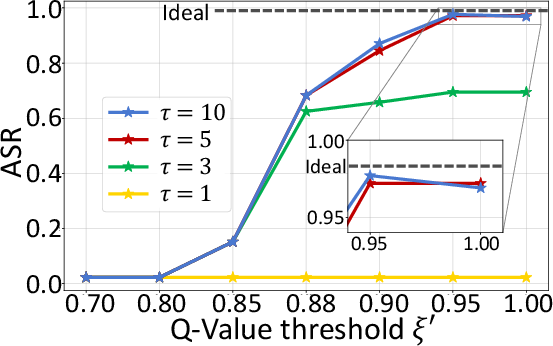

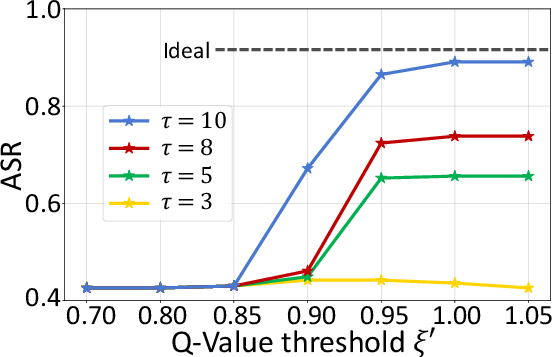

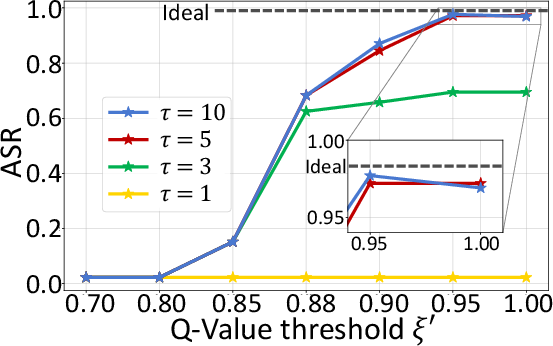

During inference, when feedback is unavailable, the agent uses Q-value thresholds learned during training to terminate adversarial generation, ensuring practical deployment without real-time feedback.
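One way to read this stopping rule is sketched below: the agent keeps applying its highest-valued action until the Q-value of that action reaches a threshold or a step limit is hit. The direction of the comparison, the greedy action choice, and the helper names `q_value` and `apply_action` are assumptions for illustration; the toy state and Q-function stand in for a real flow and a trained Q-network.

```python
def generate_adversarial(state, actions, q_value, apply_action,
                         q_threshold, max_steps=10):
    """Apply greedy actions until the Q-value clears the threshold (assumed rule)."""
    steps = 0
    while steps < max_steps:
        best = max(actions, key=lambda a: q_value(state, a))
        best_q = q_value(state, best)
        state = apply_action(state, best)
        steps += 1
        if best_q >= q_threshold:
            break  # the agent is confident enough; no detector feedback needed
    return state, steps


# Toy stand-ins: the "flow" is an integer and the Q-function prefers the value 5.
def toy_q(state, action):
    return -abs((state + action) - 5)


def toy_apply(state, action):
    return state + action


final_state, used_steps = generate_adversarial(
    2, actions=[+1, -1], q_value=toy_q, apply_action=toy_apply, q_threshold=-0.5
)
print(final_state, used_steps)  # prints "5 3"
```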

Experimental Evaluation

NetMasquerade is implemented with PyTorch and Intel DPDK for high-throughput packet emission. It is evaluated against six state-of-the-art detection systems (Whisper, FlowLens, NetBeacon, Vanilla+RNN, CICFlowMeter+MLP, Kitsune) across 80 attack scenarios, including reconnaissance, DoS, botnet, and encrypted web attacks.

Key results:

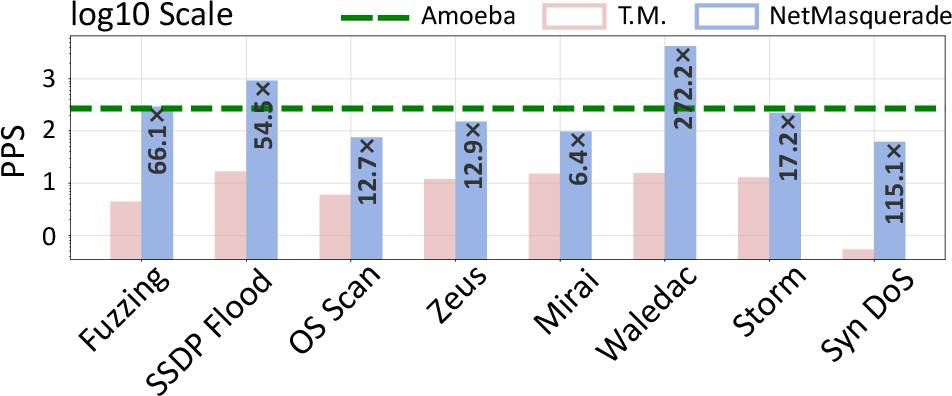

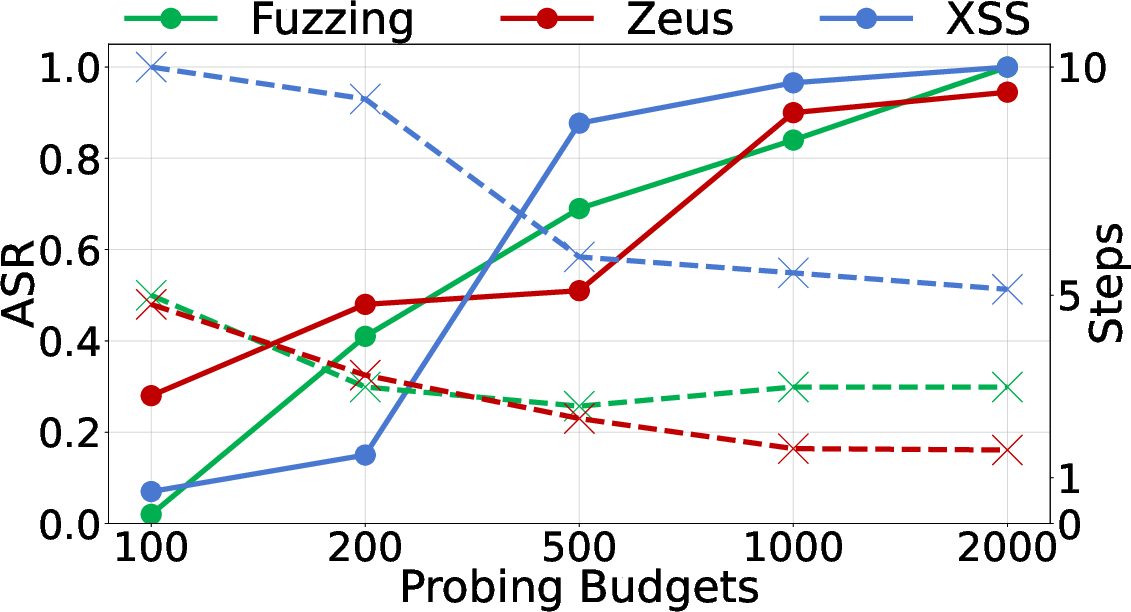

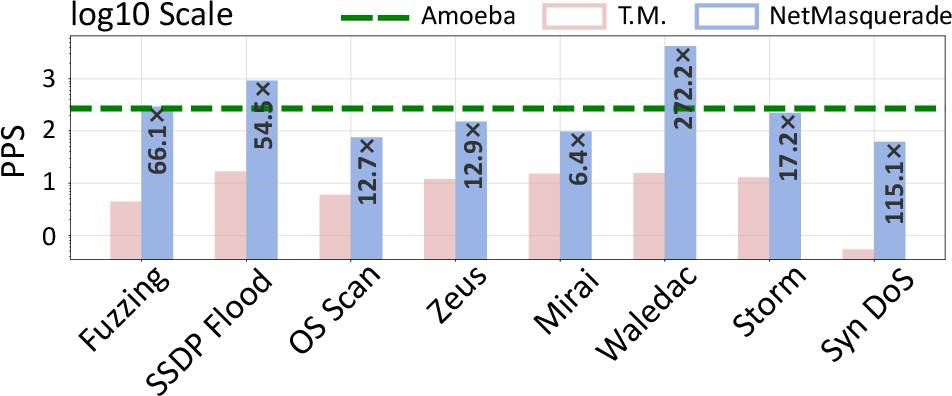

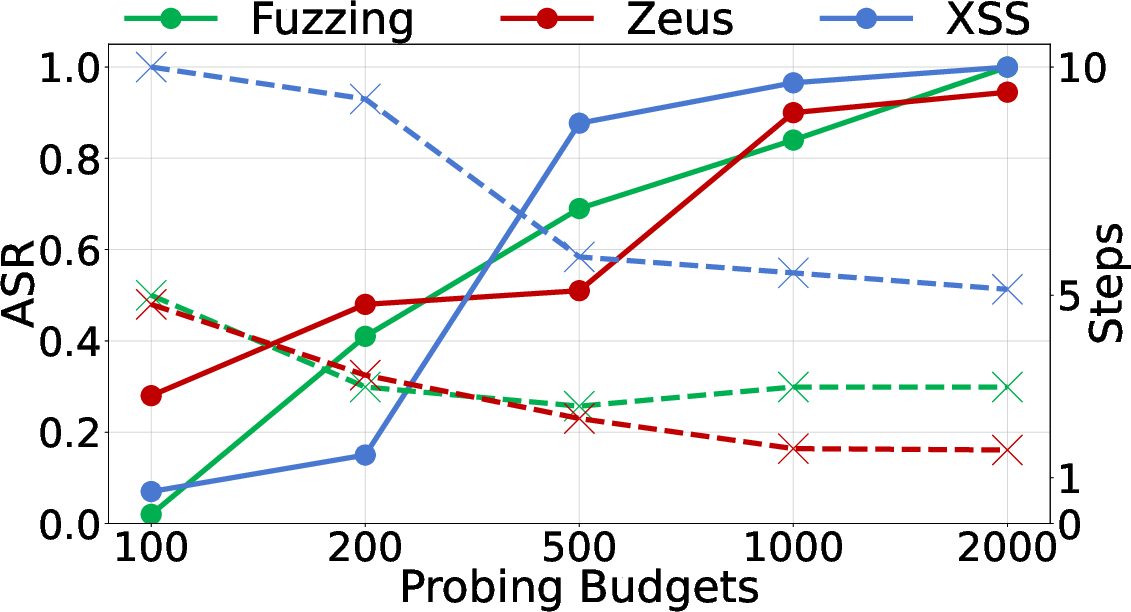

- Attack Success Rate (ASR): NetMasquerade achieves an ASR between 74.75% and 99.9%, averaging over 96.65% across all scenarios, and outperforms the baselines (Random Mutation, Mutate-and-Inject, Traffic Manipulator, Amoeba) by up to 21.88%.

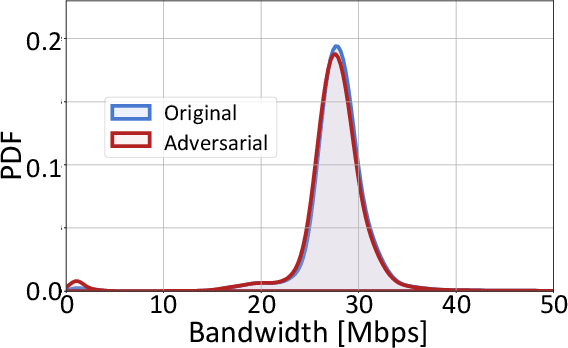

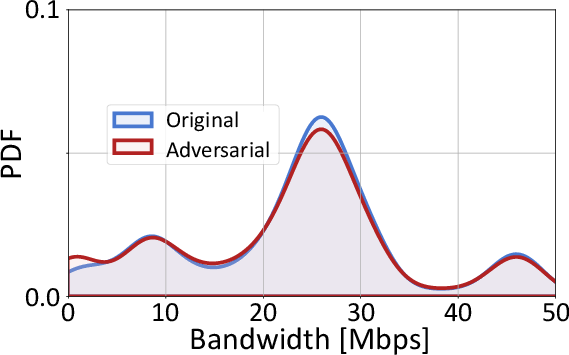

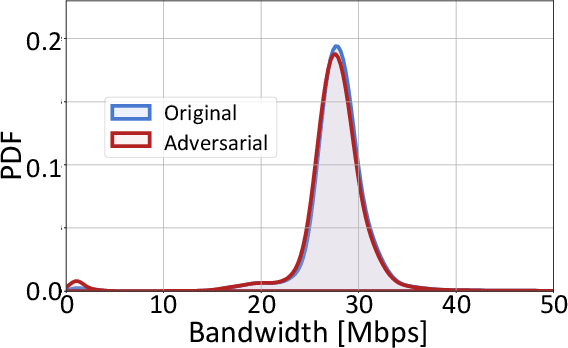

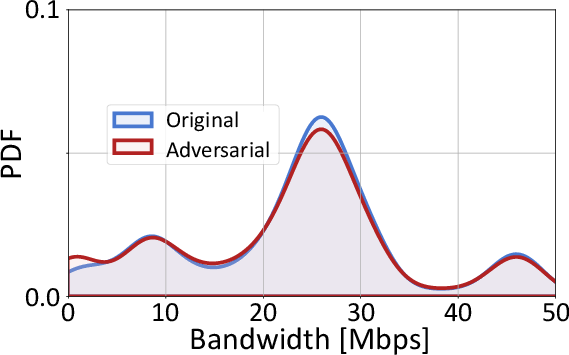

- Minimal Modifications: Each flow requires no more than 10 modification steps, and the KL divergence between the original and adversarial bandwidth distributions is as low as 0.013, indicating negligible impact on traffic characteristics.

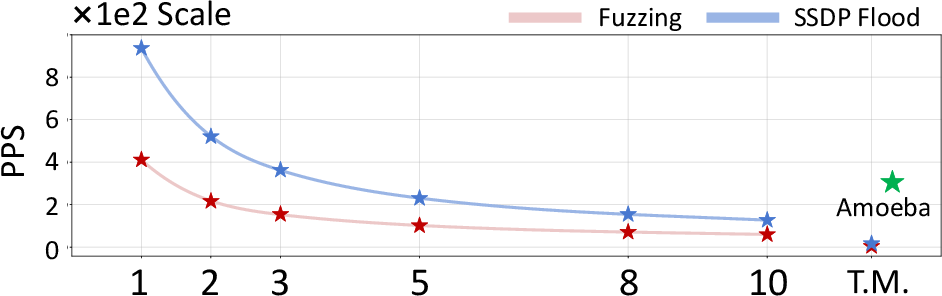

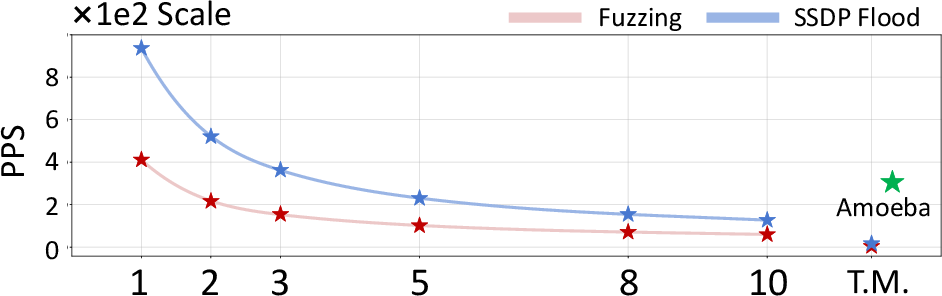

- Efficiency: Throughput reaches 4,239 packets/sec, with RL convergence in under 1 hour and Traffic-BERT pretraining completed offline.
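The KL divergence reported for the bandwidth distributions can be computed from two histograms over the same bins. The sketch below is a generic implementation under stated assumptions: it uses the natural logarithm and a small `eps` floor for empty bins in the second histogram, and the example counts are made up; the summary does not say which binning or log base the authors used.

```python
import math


def kl_divergence(p_counts, q_counts, eps=1e-9):
    """KL(P || Q) in nats for two histograms defined over identical bins."""
    p_total, q_total = sum(p_counts), sum(q_counts)
    kl = 0.0
    for pc, qc in zip(p_counts, q_counts):
        p = pc / p_total
        q = max(qc / q_total, eps)  # floor avoids division by zero
        if p > 0:
            kl += p * math.log(p / q)
    return kl


# Usage: hypothetical bandwidth histograms (packets per rate bin).
original = [50, 50]
adversarial = [40, 60]
print(kl_divergence(original, adversarial))
```

A value close to zero means the adversarial flow's bandwidth profile is nearly indistinguishable from the original, which is how the 0.013 figure above should be read.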

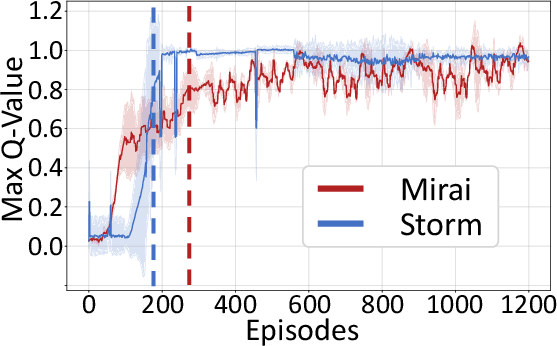

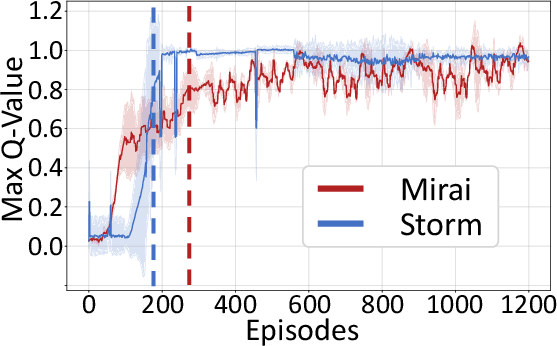

Figure 6: Max Q-Value during RL training, demonstrating rapid convergence and stable learning.

Figure 7: Relationship between ASR and Q-Value threshold under different step limits, showing optimal evasion with minimal modifications.

Figure 8: Bandwidth of DoS attack, confirming preservation of attack effectiveness after adversarial transformation.

Figure 9: Efficiency comparison of NetMasquerade and baselines, highlighting superior throughput and scalability.

Robustness, Limitations, and Deep Dive

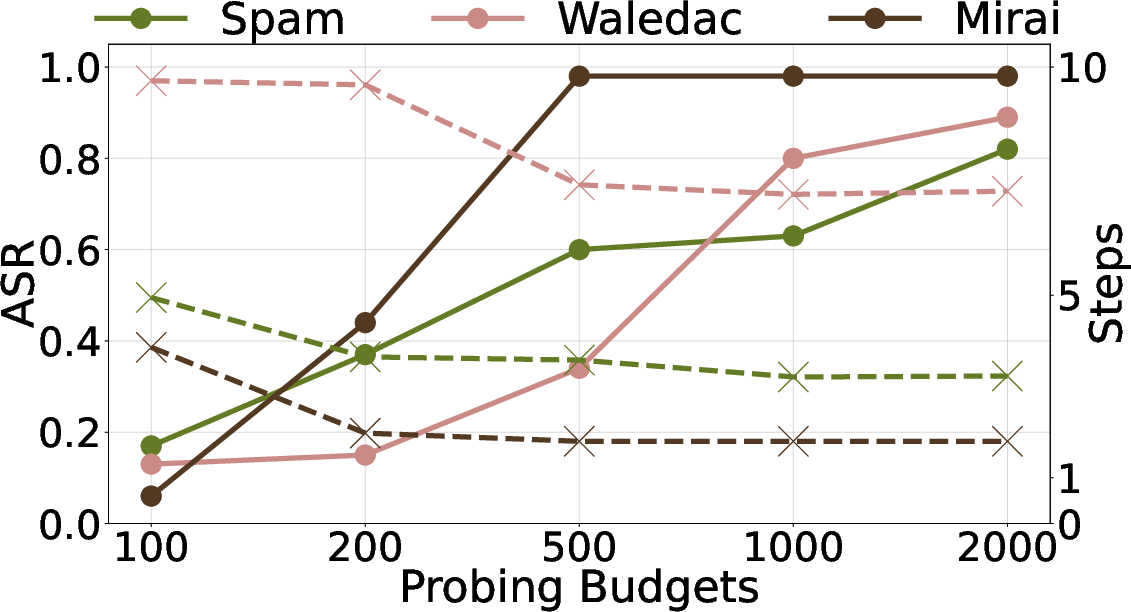

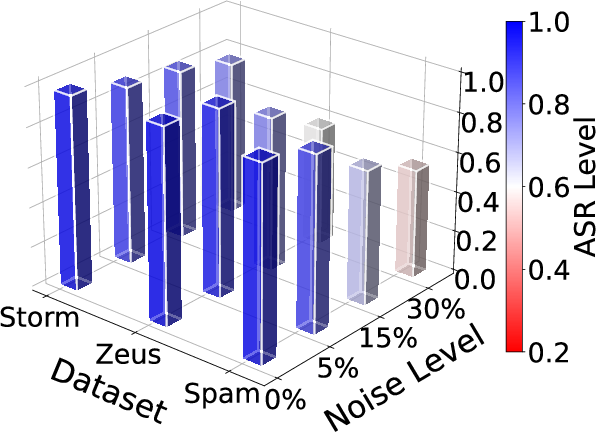

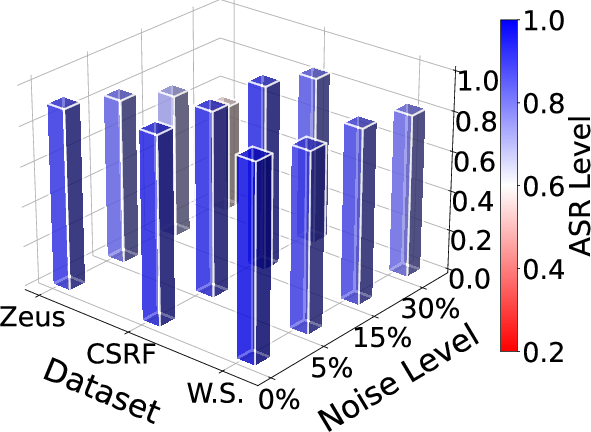

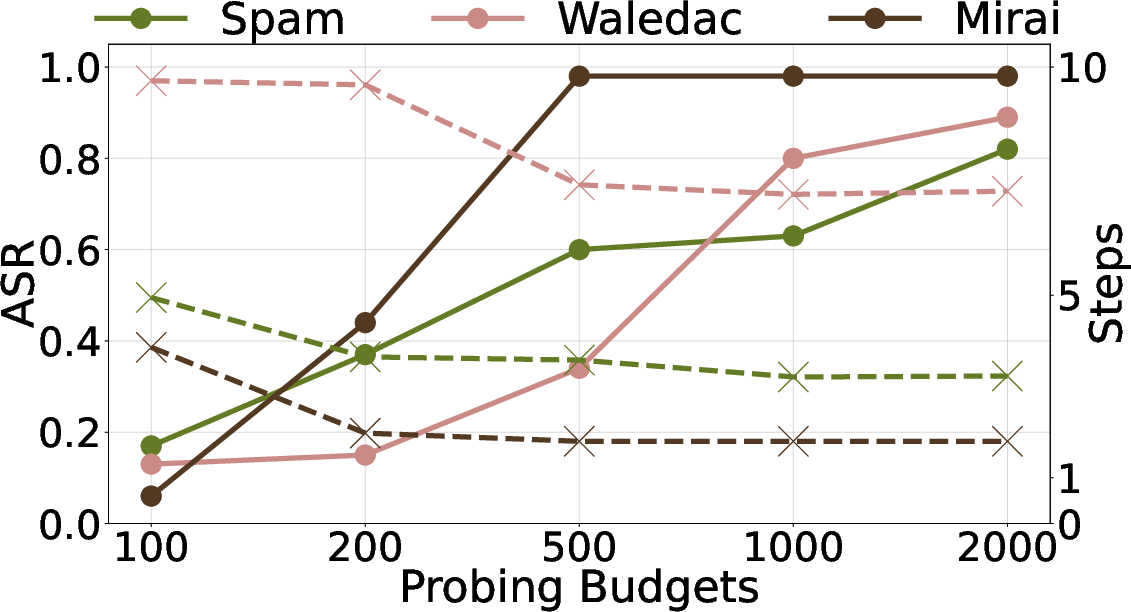

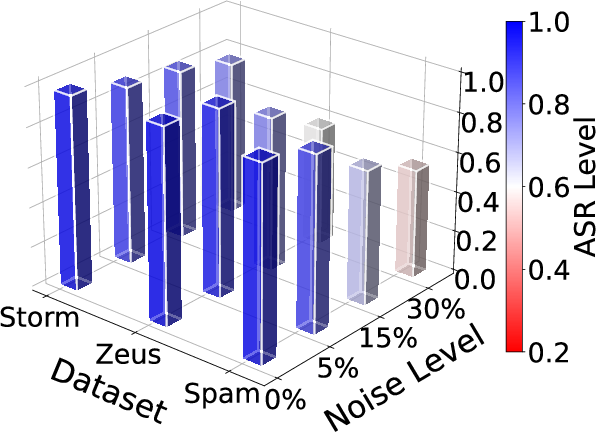

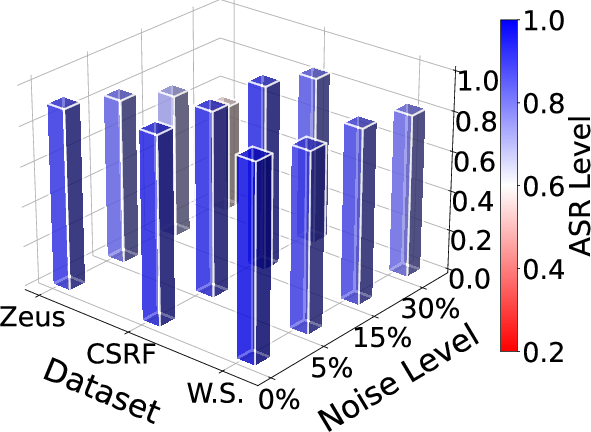

NetMasquerade maintains high ASR under limited probing budgets and moderate feedback noise, with graceful degradation at high noise levels. Ablation studies confirm the necessity of both Traffic-BERT and RL stages; removing either significantly reduces ASR, especially in high-speed or complex scenarios.
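To make the two constraints concrete, the sketch below wraps a detector in an oracle that enforces a probing budget and flips the returned label with some probability. Modeling feedback noise as independent label flips is an assumption made for this example, and the class name, parameters, and toy detector are hypothetical.

```python
import random


class NoisyHardLabelOracle:
    """Hard-label feedback with a probe budget and label-flip noise (assumed model)."""

    def __init__(self, detector, budget, flip_prob=0.0, rng=None):
        self.detector = detector      # callable: flow -> True if flagged
        self.remaining = budget       # number of probes still allowed
        self.flip_prob = flip_prob    # probability that the observed label is wrong
        self.rng = rng or random.Random()

    def probe(self, flow):
        if self.remaining <= 0:
            raise RuntimeError("probing budget exhausted")
        self.remaining -= 1
        label = self.detector(flow)
        if self.rng.random() < self.flip_prob:
            label = not label
        return label


# Usage: a toy detector that flags any flow containing a packet above 1000 bytes.
def toy_detector(flow):
    return max(flow) > 1000


oracle = NoisyHardLabelOracle(toy_detector, budget=3, flip_prob=0.1,
                              rng=random.Random(0))
observed = oracle.probe([60, 1500, 52])
```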

Figure 10: ASR under different probing budgets, showing rapid learning and efficient probe utilization.

Figure 11: ASR under different noise levels, demonstrating robustness to unreliable feedback.

Defenses and Implications

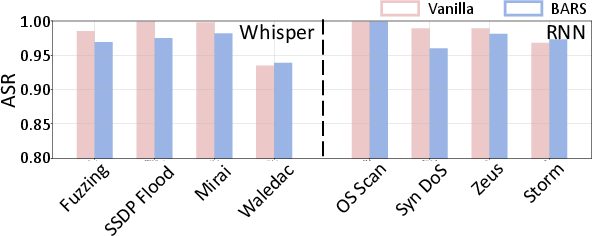

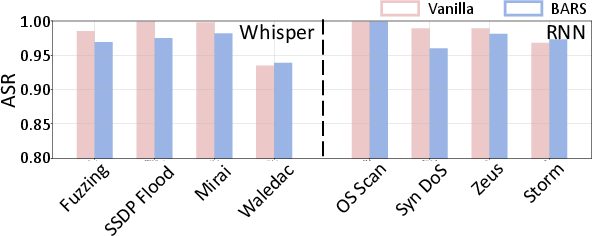

Feature-space defenses (adversarial training, randomized smoothing, BARS) are ineffective against NetMasquerade, as traffic-space manipulations (packet insertions/modifications) induce large feature-space perturbations beyond certified bounds.
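A small numeric example shows why a single traffic-space edit can fall outside a feature-space certificate. The feature set (packet count, mean and standard deviation of packet size, mean IPD), the lack of normalization, and the certified radius of 1.0 are all hypothetical choices for illustration and do not correspond to any particular detector or to BARS itself.

```python
import math
import statistics


def flow_features(sizes, ipds):
    """Hypothetical flow-level feature vector (unnormalized)."""
    return [
        len(sizes),
        statistics.mean(sizes),
        statistics.pstdev(sizes),
        statistics.mean(ipds),
    ]


def l2_distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Original flow: ten full-size packets sent every 10 ms.
sizes = [1500] * 10
ipds = [0.01] * 9

# Traffic-space edit: insert one 60-byte chaff packet, splitting one gap in two.
adv_sizes = sizes + [60]
adv_ipds = [0.01] * 8 + [0.005, 0.005]

shift = l2_distance(flow_features(sizes, ipds), flow_features(adv_sizes, adv_ipds))
certified_radius = 1.0  # hypothetical L2 radius of a feature-space certificate
print(shift, shift > certified_radius)
```

In this toy setting one inserted packet moves the feature vector by several hundred units, far more than the assumed radius, so a guarantee stated in feature space says little about an attacker who edits packets directly.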

Figure 12: ASR against BARS, illustrating the ineffectiveness of feature-space robustness certification.

Guidelines for defense:

- Traffic-space adversarial training (packet-level perturbations) is necessary.

- Certification should bound traffic-space modifications (e.g., number of inserted packets).

- Introducing randomness in model architecture or parameters at inference can increase attack difficulty.

Conclusion

NetMasquerade demonstrates that ML-based malicious traffic detection systems remain vulnerable to hard-label black-box evasion attacks. By combining a pre-trained benign traffic model (Traffic-BERT) with RL-based minimal adversarial generation, NetMasquerade achieves high evasion rates, low modification overhead, and practical efficiency. The results challenge the sufficiency of feature-space robustness and highlight the need for traffic-space-aware defenses. Future work should explore dynamic model randomization and traffic-space certification to enhance robustness against adaptive adversaries.