NoisePrints: Distortion-Free Watermarks for Authorship in Private Diffusion Models (2510.13793v1)

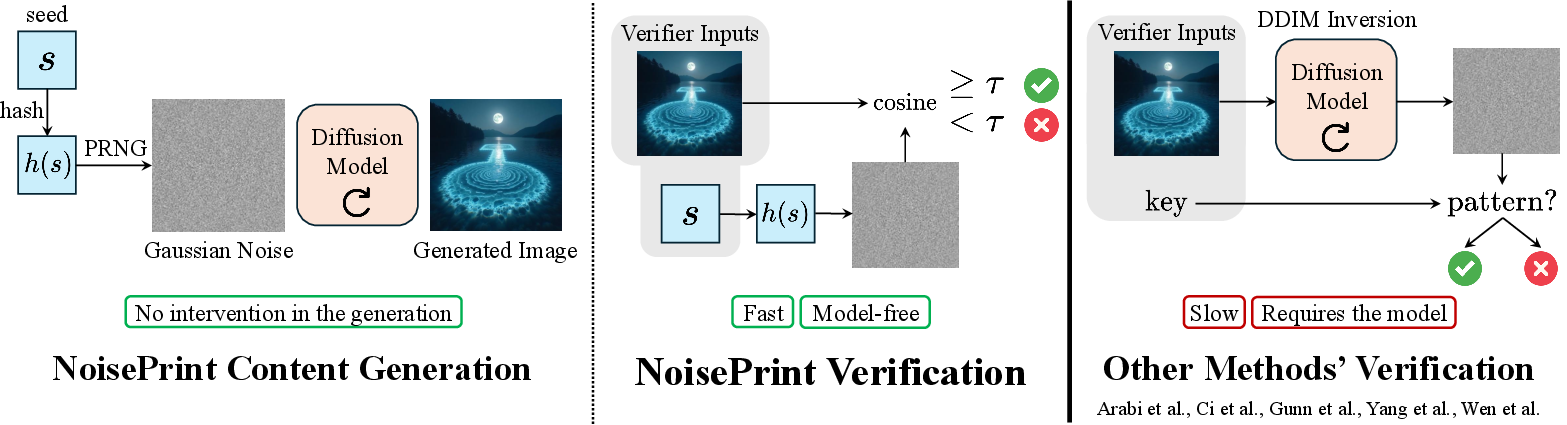

Abstract: With the rapid adoption of diffusion models for visual content generation, proving authorship and protecting copyright have become critical. This challenge is particularly important when model owners keep their models private and may be unwilling or unable to handle authorship issues, making third-party verification essential. A natural solution is to embed watermarks for later verification. However, existing methods require access to model weights and rely on computationally heavy procedures, rendering them impractical and non-scalable. To address these challenges, we propose NoisePrints, a lightweight watermarking scheme that utilizes the random seed used to initialize the diffusion process as a proof of authorship without modifying the generation process. Our key observation is that the initial noise derived from a seed is highly correlated with the generated visual content. By incorporating a hash function into the noise sampling process, we further ensure that recovering a valid seed from the content is infeasible. We also show that sampling an alternative seed that passes verification is infeasible, and demonstrate the robustness of our method under various manipulations. Finally, we show how to use cryptographic zero-knowledge proofs to prove ownership without revealing the seed. By keeping the seed secret, we increase the difficulty of watermark removal. In our experiments, we validate NoisePrints on multiple state-of-the-art diffusion models for images and videos, demonstrating efficient verification using only the seed and output, without requiring access to model weights.

Explain it Like I'm 14

Overview

This paper introduces NoisePrints, a new way to add “watermarks” to AI‑generated images and videos made by diffusion models. Unlike many watermarks, NoisePrints doesn’t change the look of the picture at all. It uses the random starting “noise” (like TV static) that the model begins with as a hidden signature. This helps artists and small organizations prove they made a piece of content, even if the AI model is private and not shared with others.

Key Objectives

The paper sets out to do three main things:

- Make authorship proof possible without touching or accessing the private AI model.

- Keep images and videos looking exactly as they would normally (no visible or hidden distortions added).

- Provide a fast, practical, and secure way to verify ownership that works across different models and even against common edits and attacks.

How It Works (Methods, in simple terms)

Think of a diffusion model as a machine that turns “random noise” into a clear picture. That process starts from a random seed—a number that tells the machine how to generate its first noisy frame.

Here are the key ideas explained with everyday analogies:

- Random seed: Like a unique recipe code. If you start with the same seed, you’ll get the same final image (with the same model and settings).

- Initial noise: Like TV static that the model gradually turns into the final image. Surprisingly, this static leaves a subtle “imprint” on the final picture that you can measure.

- Hashing the seed: Before using the seed, the system runs it through a one-way “hash” function (think of it like shredding a message into confetti that can’t be put back together). Even if an attacker estimates the starting noise from an image, the hash makes it practically infeasible to work backward to a seed that produces that noise.

- VAE encoder: Many models share a standard “encoder” that turns images into a compact representation (like a list of numbers). Because this encoder is usually public, verifiers can use it.

- Cosine similarity: Imagine two arrows. If they point the same way, their cosine similarity is high; if they point differently, it’s low. NoisePrints compares the “arrow” of the final image’s encoded numbers with the “arrow” of the original noise from the seed. If they align enough (above a fixed, carefully calibrated threshold), the claim “I generated this with seed s” is accepted.

Verification steps, simplified:

- The claimant keeps the seed secret while generating the image/video.

- To prove authorship, they share the image and the seed with a verifier.

- The verifier:

  - Encodes the image into numbers using the public encoder.

  - Recreates the initial noise from the seed (after hashing).

  - Computes how aligned these two are (cosine similarity).

  - Accepts the claim if the alignment is above a carefully chosen threshold.
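The verification steps above can be sketched in Python. This is a minimal illustration under stated assumptions, not the paper’s implementation: SHA-256 stands in for the cryptographic hash, NumPy’s `default_rng` stands in for the published PRNG, and the VAE encoder is replaced by a ready-made latent array because the real encoder depends on the model family. The helper names `seed_to_noise`, `noiseprint_score`, and `verify`, and the threshold 0.1, are hypothetical.

```python
import hashlib

import numpy as np


def seed_to_noise(seed: bytes, shape: tuple[int, ...]) -> np.ndarray:
    """Hash the seed, then use the digest to seed a PRNG that draws Gaussian noise.

    Assumption: SHA-256 + NumPy's default generator; a deployment would use the
    hash and PRNG that the platform publishes.
    """
    digest = hashlib.sha256(seed).digest()
    rng = np.random.default_rng(int.from_bytes(digest, "big"))
    return rng.standard_normal(shape)


def noiseprint_score(latent: np.ndarray, noise: np.ndarray) -> float:
    """Cosine similarity between the flattened image latent and the initial noise."""
    a, b = latent.ravel(), noise.ravel()
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def verify(latent: np.ndarray, seed: bytes, tau: float) -> bool:
    """Accept the authorship claim if the score reaches the threshold tau."""
    noise = seed_to_noise(seed, latent.shape)
    return noiseprint_score(latent, noise) >= tau


# Toy stand-in for the encoded image E(x): a latent that retains part of the noise.
shape = (4, 64, 64)
true_seed = b"my-secret-seed"
latent = 0.5 * seed_to_noise(true_seed, shape) + np.random.default_rng(0).standard_normal(shape)

print(verify(latent, true_seed, tau=0.1))          # True
print(verify(latent, b"a-guessed-seed", tau=0.1))  # False
```

In this toy the latent keeps part of the seed’s noise, so the true seed scores about 0.45 while an unrelated seed scores near zero. A real threshold has to come from calibrating the false positive rate for the latent’s dimensionality.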

Handling tricky cases:

- Geometric edits (like rotating or cropping): These can misalign the image and its original noise. The paper adds a “dispute protocol” where the rightful owner can submit the correct transformation (e.g., “rotate by 90°”), so the verifier can re-align and check again.

- Proving ownership without revealing the seed: The paper uses zero‑knowledge proofs—this is like proving you know the password without saying it. You convince the verifier the check would pass, but you don’t reveal the seed itself. This makes it harder for attackers to remove the watermark.
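The dispute idea can be illustrated with a toy Python sketch. Several simplifications here are assumptions, not the paper’s protocol: the agreed transform family is rotations by multiples of 90°, applied directly to latents with `np.rot90`; an “injection” attack is modeled by adding a forged seed’s noise to a stolen latent; and a claim is upheld when its seed passes both a self-check on its own re-aligned content and a cross-check on the rival’s content, which is what happens when the rival’s content was derived from the claimant’s. Because the toy family has only four members, the cross-check simply tries all of them instead of asking the claimant to name the transform. `Claim`, `aligned_score`, `best_score`, `resolve`, and `TAU` are hypothetical names.

```python
import hashlib
from dataclasses import dataclass

import numpy as np

TAU = 0.1  # illustrative threshold; a real one comes from FPR calibration


def seed_to_noise(seed: bytes, shape) -> np.ndarray:
    digest = hashlib.sha256(seed).digest()
    return np.random.default_rng(int.from_bytes(digest, "big")).standard_normal(shape)


def cosine(a: np.ndarray, b: np.ndarray) -> float:
    a, b = a.ravel(), b.ravel()
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


@dataclass
class Claim:
    latent: np.ndarray  # encoder output for the claimed content
    seed: bytes
    k: int  # claimant-declared transform: number of 90-degree rotations applied


def aligned_score(latent: np.ndarray, seed: bytes, k: int) -> float:
    """Undo a k*90-degree rotation over the spatial axes, then score against the seed's noise."""
    realigned = np.rot90(latent, -k, axes=(1, 2))
    return cosine(realigned, seed_to_noise(seed, realigned.shape))


def best_score(latent: np.ndarray, seed: bytes) -> float:
    """Cross-check: try every transform in the (tiny) agreed family and keep the best score."""
    return max(aligned_score(latent, seed, k) for k in range(4))


def resolve(a: Claim, b: Claim) -> str:
    a_ok = aligned_score(a.latent, a.seed, a.k) >= TAU and best_score(b.latent, a.seed) >= TAU
    b_ok = aligned_score(b.latent, b.seed, b.k) >= TAU and best_score(a.latent, b.seed) >= TAU
    if a_ok and not b_ok:
        return "A"
    if b_ok and not a_ok:
        return "B"
    return "unresolved"


shape = (4, 64, 64)
owner_latent = 0.5 * seed_to_noise(b"owner", shape) + np.random.default_rng(0).standard_normal(shape)
# The attacker rotates the owner's content and injects a forged seed's noise on top.
forged = np.rot90(owner_latent, 1, axes=(1, 2)) + 0.5 * seed_to_noise(b"forger", shape)
print(resolve(Claim(owner_latent, b"owner", 0), Claim(forged, b"forger", 0)))  # A
```

The forger’s seed passes its own self-check, but the owner’s original content carries no trace of the forged noise, so only the owner passes the cross-check. When both seeds pass both checks the sketch returns “unresolved”, which corresponds to the tie cases listed among the open questions.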

Main Findings and Why They Matter

- Strong correlation: The initial noise (from the seed) and the final image’s encoded representation are surprisingly well‑aligned. That alignment is consistent enough to serve as a watermark.

- Security against guessing: The chance that a random seed (not the true one) passes verification is astronomically small (the paper targets a false positive rate near 2^-128, so small that guessing your way to a match is practically impossible).

- Model‑free verification: Verifiers don’t need access to the private model. They only need:

  - The public encoder (often shared across models),

  - The claimed seed,

  - The image or video.

- Speed: NoisePrints is much faster to verify than methods that “invert” the diffusion process, which is computationally heavy. Depending on the model, verification is roughly 14–213 times faster.

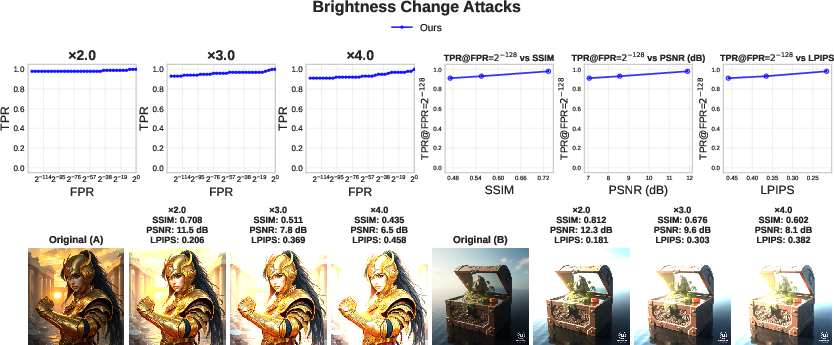

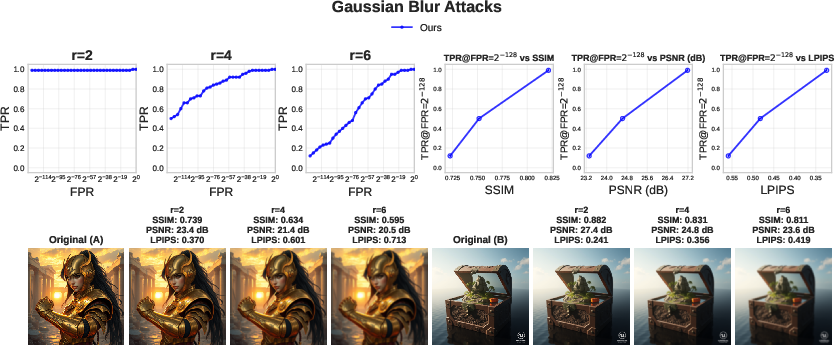

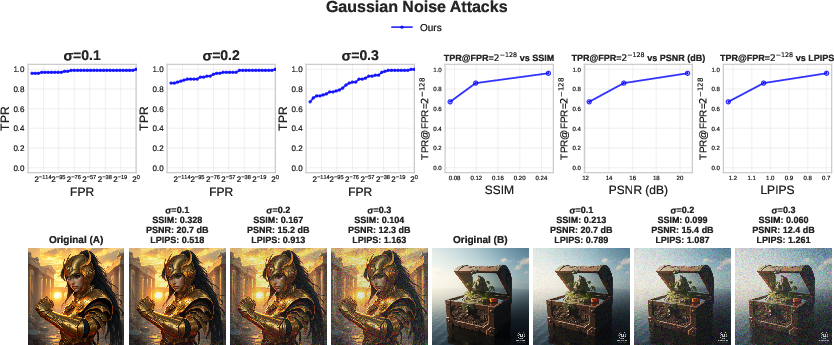

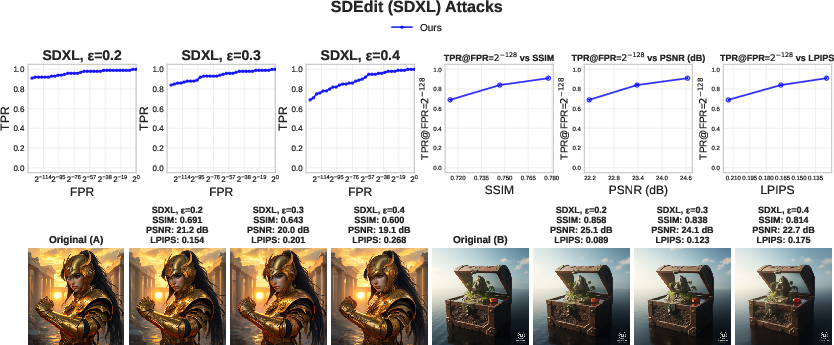

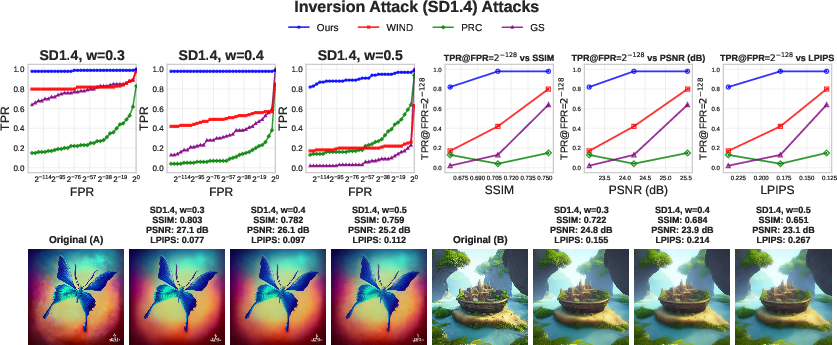

- Robustness: It works well even after common edits like compression, blur, brightness changes, resizing, and regeneration attacks (where an attacker re-runs the image through another model to wash out watermarks). It also holds up better than several existing watermark methods in many tested scenarios.
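The “astronomically small” false positive rate has a closed form if one assumes that a wrong seed yields noise pointing in a uniformly random direction. If u is the cosine between a fixed latent and a uniformly random direction in R^d, then (1 + u)/2 follows Beta((d-1)/2, (d-1)/2), so FPR(τ) = P(u ≥ τ) = I_x((d-1)/2, (d-1)/2) with x = (1 - τ)/2, the regularized incomplete beta function (equivalently, the fraction of the sphere inside a spherical cap). The sketch below evaluates this with only the standard library, using the classic modified-Lentz continued fraction for the incomplete beta function, and bisects for the threshold that reaches a target FPR such as 2^-128. It illustrates the spherical-cap model under that assumption and is not the paper’s calibration code; `log_null_fpr` and `threshold_for_fpr` are hypothetical names.

```python
import math

_TINY = 1e-300


def _nz(v: float) -> float:
    """Keep a Lentz intermediate away from zero to avoid dividing by zero."""
    return v if abs(v) > _TINY else _TINY


def _betacf(a: float, b: float, x: float, max_iter: int = 100_000, eps: float = 1e-14) -> float:
    """Continued fraction for the incomplete beta function (modified Lentz method).

    Converges quickly when x < (a + 1) / (a + b + 2).
    """
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c, d = 1.0, 1.0 / _nz(1.0 - qab * x / qap)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))  # even term
        d = 1.0 / _nz(1.0 + aa * d)
        c = _nz(1.0 + aa / c)
        h *= d * c
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))  # odd term
        d = 1.0 / _nz(1.0 + aa * d)
        c = _nz(1.0 + aa / c)
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            return h
    raise RuntimeError("continued fraction did not converge")


def log_null_fpr(tau: float, d: int) -> float:
    """log P(cos >= tau) for a uniformly random direction in R^d, with 0 < tau < 1.

    Uses (1 + cos) / 2 ~ Beta(a, a) with a = (d - 1) / 2, so the tail equals the
    regularized incomplete beta function I_x(a, a) at x = (1 - tau) / 2.
    """
    a = (d - 1) / 2.0
    x = (1.0 - tau) / 2.0
    log_front = (math.lgamma(2.0 * a) - 2.0 * math.lgamma(a)
                 + a * math.log(x) + a * math.log1p(-x) - math.log(a))
    return log_front + math.log(_betacf(a, a, x))


def threshold_for_fpr(target: float, d: int, iters: int = 100) -> float:
    """Bisect for the smallest tau whose null false positive rate is <= target."""
    log_target = math.log(target)
    lo, hi = 1e-12, 1.0 - 1e-12
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if log_null_fpr(mid, d) > log_target:
            lo = mid  # too many chance matches: raise the threshold
        else:
            hi = mid
    return hi


d = 4 * 64 * 64  # e.g. a 4x64x64 latent
print(f"tau for FPR 2^-128 at d={d}: {threshold_for_fpr(2.0 ** -128, d):.4f}")
```

Working in log space keeps probabilities like 2^-128 well within floating-point range. For a 4×64×64 latent the threshold lands at roughly 0.1, and it shrinks as the latent dimension grows, which is why thresholds must be calibrated per dimensionality. Whether real embeddings match this null model is listed among the open questions.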

Implications and Impact

NoisePrints makes it practical for creators—especially those using private or proprietary models—to prove ownership of their AI‑generated images and videos without changing how those images look. Because it’s:

- Distortion‑free (no added artifacts),

- Fast and lightweight (easy to run at scale),

- Secure against guessing,

- Usable without accessing model weights,

it can become a useful tool in copyright and authorship disputes. It’s not perfect—no watermark system is—but it raises the bar for attackers and helps build trust and accountability in generative media.

Simple limitations to keep in mind:

- It relies on having access to the model’s public encoder (VAE). If the encoder isn’t available, verification is harder.

- It’s not designed to tell if a picture is “real” or “AI‑generated”; it only helps prove authorship for content generated by diffusion models.

- Extremely clever geometric or adversarial edits may still cause issues. The dispute protocol helps, but perfect protection isn’t possible.

Overall, NoisePrints offers a practical, secure, and fast way for creators to link their content to their seeds—like a hidden, built‑in signature that travels with the image or video—without changing how the content looks.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a single, concrete list of what remains missing, uncertain, or unexplored, framed so future researchers can act on each item.

- Formal theory for “noise imprint” persistence: provide rigorous bounds (beyond heuristic optimal-transport intuition) on expected cosine similarity between initial noise and VAE latents across diffusion/flow architectures, schedules, and data domains.

- Deterministic-sampler assumption: evaluate NoisePrints under stochastic samplers (e.g., ancestral sampling, stochastic CFG, dropout/LoRA randomness, temperature scaling); quantify degradation and propose sampler-agnostic verification variants.

- Public VAE requirement: test sensitivity when the verifier’s encoder differs from the model’s training-time VAE (version, weights, pre/post-processing); develop encoder-agnostic or adapter-based verification when the VAE is private or mismatched.

- Threshold calibration under real nulls: empirically validate FPR/TPR on large, diverse datasets to check whether the spherical-cap model fits actual embedding statistics; design per-image or prompt-adaptive thresholds to control false positives for hard cases.

- Seed hashing and PRNG choices: specify recommended cryptographic hash and CSPRNG; quantify risks if platforms use non-cryptographic PRNGs; measure portability across common ML toolchains (PyTorch/NumPy/TF) and OS RNGs.

- Geometric robustness beyond rotation/crop/scale: extend evaluation and dispute protocol to translation, perspective/affine shear, nonrigid warps, content-aware resize; study automated recovery of alignment (inverse transform estimation) at scale.

- Dispute protocol tie cases: analyze scenarios where both claimants pass self and cross checks; propose tie-breakers (e.g., minimal alignment transform, timestamped proofs, cryptographic commitments, provenance metadata).

- Joint injection+removal attacks: benchmark adversaries that simultaneously decorrelate from the true seed and correlate to a forged seed (multi-objective latent optimization) while preserving perceptual similarity; design defenses or detectability criteria.

- Attacker with full model access: characterize how much correlation can be removed (and forged) given access to the original weights; derive bounds on removal at fixed LPIPS/SSIM and propose countermeasures.

- Prompt omission: study whether including prompt, CFG, and pipeline metadata in verification improves security; assess risk that different seeds can produce visually indistinguishable images that complicate ownership claims.

- Video-specific edits: evaluate robustness to frame-rate changes, frame drops/insertions, temporal warps, stabilization, inter-frame interpolation, re-encoding; define temporal aggregation of NoisePrints (per-frame vs. global) and alignment under edits.

- Color/codec pipeline effects: test robustness to color-space conversions (sRGB/linear/HDR), gamma/tone-mapping, chroma subsampling, and modern codecs (HEIC/WebP/AVIF/H.265/H.266) for both images and videos.

- Cross-model regeneration: quantify verification when watermarked content is edited or regenerated by different models/VAEs and under model fine-tuning or LoRA adapters; identify failure modes and mitigation strategies.

- Systematic failure cases: characterize prompts/content types yielding low NoisePrint scores (the observed outlier prompt, textures, large flat regions, heavy compression); build content-aware safeguards or preprocessing to stabilize verification.

- Real/fake detection limitation: explore whether spatially localized correlation profiles or multi-scale latents can help distinguish synthetic from real images without enabling watermark injection into real images.

- ZKP numerical fidelity: quantify fixed-point rounding error and prove completeness/soundness margins; set conservative slack around τ to avoid borderline proof forgery; compare alternative field sizes and integer formats.

- ZKP scalability and costs: report (and optimize) proof size, prover time, and verifier time for image and video latents; explore hardware acceleration, streaming proofs, or polynomial commitments to avoid chunking.

- Binding and non-repudiation: formalize how seeds, public strings, and identities are bound; integrate signatures, time-stamps, and public ledgers to prevent replay or seed reuse; define revocation/update procedures.

- Trust in public parameters: address who publishes PRNG, hash, and encoder definitions; prevent adversarial parameter manipulation (e.g., biased RNG or altered encoder) by standardization and audited registries.

- VAE/encoder reproducibility: study cross-implementation variability (quantization, precision, preprocessing) and its impact on cosine scores; define canonical encoders or normalization steps for consistent verification.

- Steganalysis-style averaging: evaluate whether aggregating many verified outputs leaks exploitable templates despite hashed seeds; propose defenses (seed domain separation, per-user salts, or protocol-level rate limits).

- Evidentiary standards: align verification thresholds and dispute procedures with legal norms; specify confidence levels, reporting formats, and auditor roles for real-world authorship resolution.

- Multi-resolution/aspect ratios: assess how scaling to different sizes or aspect ratios affects latent alignment; design resolution-agnostic embeddings or normalization pipelines.

- Seed reuse and cross-image interference: analyze whether reusing the same seed (or seed prefix) across many generations creates cross-correlations or privacy risks; recommend seed management policies.
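Several items above, notably the PRNG choices and threshold calibration under real nulls, start from measuring how NoisePrint scores behave for wrong seeds. The following is a minimal Monte Carlo sketch of that check, assuming NumPy, SHA-256-hashed seeds feeding NumPy’s default generator, and a fixed random array standing in for one encoded image; `null_scores` is a hypothetical helper. Because the null score depends only on the noise being isotropic, the same check applies to any fixed latent: the empirical mean should sit near 0 and the spread near 1/sqrt(d), and a broken or biased seed-to-noise pipeline would show up as a drifting mean or an inflated spread.

```python
import hashlib

import numpy as np


def seed_to_noise(seed: bytes, shape) -> np.ndarray:
    """Assumed pipeline: SHA-256 of the seed initializes NumPy's default generator."""
    digest = hashlib.sha256(seed).digest()
    return np.random.default_rng(int.from_bytes(digest, "big")).standard_normal(shape)


def null_scores(latent: np.ndarray, n_seeds: int) -> np.ndarray:
    """Cosine scores of one fixed latent against noise from n_seeds wrong seeds."""
    unit = latent.ravel() / np.linalg.norm(latent)
    scores = np.empty(n_seeds)
    for i in range(n_seeds):
        noise = seed_to_noise(f"wrong-seed-{i}".encode(), latent.shape).ravel()
        scores[i] = unit @ noise / np.linalg.norm(noise)
    return scores


latent = np.random.default_rng(1).standard_normal((4, 32, 32))  # stand-in for E(x)
scores = null_scores(latent, 2000)
d = latent.size
# For isotropic noise the null score has mean 0 and standard deviation 1/sqrt(d).
print(f"mean={scores.mean():+.4f}  std={scores.std():.4f}  1/sqrt(d)={1 / np.sqrt(d):.4f}")
```

A few thousand samples only probe the bulk of the null distribution; confirming tail behavior near 2^-128 empirically is out of reach, which is why the analytic spherical-cap model matters.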

Practical Applications

Immediate Applications

The following applications can be deployed today using the paper’s methods and verification protocol (seed + output only, no model access), with optional zero-knowledge proofs (ZKPs) to avoid revealing seeds. Each item notes practical workflows, sectors, and key assumptions/dependencies that affect feasibility.

- Authorship certificates for AI-generated media (media, software, legal)

- What: Bundle a generated asset with a verifiable “authorship pack” that includes the seed (or a ZK proof of threshold pass), the public PRNG spec, the VAE ID/version, and a timestamp.

- Tools/workflows:

- NoisePrints SDK/CLI to compute the cosine-based score and produce a signed JSON/CBOR “certificate”

- Optional ZK prover service that outputs a proof bound to a public string (e.g., “Image by Studio X”)

- Integration with C2PA-like manifests to attach certificate files

- Assumptions/dependencies: Deterministic sampler; the same public VAE at verification time; seed secrecy unless ZKP is used; known PRNG and cryptographic hash.

- Third-party verification services for private models (media platforms, marketplaces, APIs)

- What: Independent verifiers accept (x, seed) or (x, ZK proof) and render a pass/fail with calibrated FPR (e.g., 2^-128). Ideal for platforms hosting content from private diffusion models.

- Tools/workflows:

- REST microservice and browser widget for upload-and-verify

- Batch verification for large catalogs and content moderation triage

- Assumptions/dependencies: Public access to the VAE used by the model; published PRNG spec and hash function.

- Dispute resolution protocol for conflicting claims (marketplaces, agencies, freelancers)

- What: When two parties claim ownership of similar content, use the paper’s self-check + cross-check with claimant-provided geometric transforms to resolve geometric misalignment and injection-only attempts.

- Tools/workflows:

- “Dispute mode” verifier UI: upload two claims (x_i, s_i, g_i), automatic cross-check evaluation

- Lightweight mediation reports for clients/legal teams

- Assumptions/dependencies: Publicly agreed transform family; both parties accept the dispute protocol rules; verifier neutrality.

- Robust, low-cost provenance for video generation (entertainment, advertising, social video)

- What: Provenance verification for high-dimensional video is feasible and cost-effective, avoiding costly inversion—crucial for streaming/short-form video at scale.

- Tools/workflows:

- Pipeline hook in video gen tools to capture seeds and produce authorship proofs

- Batch verifier that ingests clips and returns pass/fail and confidence

- Assumptions/dependencies: Public VAE; consistent latent dimensions; deterministic sampling.

- API feature for hosted diffusion services: “Prove ownership” (software, cloud AI providers)

- What: Offer an endpoint that returns either a seed escrow token or a ZK proof alongside generated outputs; users can verify via third parties.

- Tools/workflows:

- Seed handling and hashing server-side; optional seed escrow with periodic ZK proof minting

- Customer dashboard to retrieve proofs and regenerate certificates

- Assumptions/dependencies: Trust in provider’s seed logging; deterministic sampler; alignment on PRNG/hashing standards.

- Enterprise asset lineage and governance for synthetic data (enterprise AI governance, compliance)

- What: Record NoisePrints for synthetic images/videos used in marketing, product design, or training data to establish provenance in audits.

- Tools/workflows:

- DAM/CMS plugin that stores proofs and allows internal verification

- Audit logs tying assets to owners and project IDs via public strings in ZK proofs

- Assumptions/dependencies: Shared VAE access within the org; consistent seed storage and key management.

- Academic reproducibility and private-model transparency (academia, R&D labs)

- What: Authors share figures with seeds or ZK proofs to demonstrate generated content provenance without releasing model weights.

- Tools/workflows:

- “Artifact” bundles attached to papers (images + per-image proof + VAE ID)

- Reviewer-side CLI for offline verification

- Assumptions/dependencies: Public VAE (or an escrowed encoder); deterministic sampling.

- Stock and UGC marketplaces: upload-time verification and badge (creative platforms)

- What: Require NoisePrint verification upon submission; display “Verified AI-authored” badge; reduce disputes over originality and support takedowns.

- Tools/workflows:

- Upload filter pipeline that runs verification at ingestion

- Visible, standardized badge tied to the proof

- Assumptions/dependencies: VAE matching; FPR calibrated and published; community acceptance of badge semantics.

- Creative-tool plugins (Photoshop/GIMP/Krita/Blender/DaVinci Resolve)

- What: When invoking a diffusion module, automatically capture seeds and create proof files; a “Verify” panel lets users test content prior to delivery.

- Tools/workflows:

- Plugin SDKs that wrap generation calls

- Exporters that embed proofs into metadata sidecars

- Assumptions/dependencies: The tool’s diffusion module uses deterministic samplers and a known VAE.

- Privacy-preserving proof sharing (daily life creators, freelancers)

- What: Share ownership proof without revealing seeds (e.g., via a QR code linking to a ZK proof viewer), protecting against seed re-use or forgery.

- Tools/workflows:

- ZK proof generator + static proof viewer page

- Public-string binding (identity or company name) inside the proof

- Assumptions/dependencies: ZKP computation resources; chunked proof generation for large latents (as in the paper’s implementation).

- Cost-efficient platform moderation triage (trust & safety)

- What: Rapidly screen flagged posts claiming AI authorship; use NoisePrints as a first-pass filter before any heavier forensic analysis.

- Tools/workflows:

- Batch cosine-verification queues

- Policy workflows: “claims verified,” “claims unverified—send to forensics”

- Assumptions/dependencies: Access to correct VAE; calibrated thresholds; known model families in scope.

- Contractual deliverables with verifiable originality (agencies, ads, publishing)

- What: Agencies deliver creatives with embedded proofs; clients independently verify authorship.

- Tools/workflows:

- Contract templates referencing a verification standard (seed/ZK proof, VAE ID)

- Client-side verification portal

- Assumptions/dependencies: Contractual recognition of the verification method; standardized metadata.

- Forensic support in copyright disputes (legal, insurance)

- What: Provide verifiers’ reports backed by cryptographic-level FPR thresholds for evidence packs in DMCA or insurance claims.

- Tools/workflows:

- Exportable verification logs and expert declarations

- Chain-of-custody procedures for assets and proofs

- Assumptions/dependencies: Jurisdictional acceptance of such technical evidence; clear threat-model disclosure.
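As a concrete picture of the “authorship pack” in the first item, here is a minimal Python sketch of a certificate builder. Every field name is hypothetical, HMAC-SHA256 is only a stand-in for a real digital signature (third parties would need public-key signatures to check it), and the values `"example-vae-v1"` and `"sha256+pcg64"` are placeholders. The seed itself is never stored: the certificate carries a SHA-256 commitment that binds the seed to the public string, which can later be opened by revealing the seed and nonce, after which the usual cosine check runs, or be replaced by a ZK proof.

```python
import hashlib
import hmac
import json
import os
import time


def _commit(nonce: bytes, seed: bytes, public_string: str) -> str:
    """SHA-256 commitment; each field is length-prefixed so different splits cannot collide."""
    h = hashlib.sha256()
    for part in (nonce, seed, public_string.encode()):
        h.update(len(part).to_bytes(8, "big"))
        h.update(part)
    return h.hexdigest()


def _mac(body: dict, key: bytes) -> str:
    """HMAC over a canonical JSON encoding (stand-in for a real digital signature)."""
    payload = json.dumps(body, sort_keys=True).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def build_certificate(content: bytes, seed: bytes, public_string: str,
                      vae_id: str, prng_spec: str, key: bytes) -> tuple[dict, bytes]:
    """Return (certificate, nonce); the claimant keeps the seed and nonce private."""
    nonce = os.urandom(16)  # hiding randomness, so the commitment reveals nothing about the seed
    body = {
        "content_sha256": hashlib.sha256(content).hexdigest(),
        "seed_commitment": _commit(nonce, seed, public_string),
        "public_string": public_string,
        "vae_id": vae_id,
        "prng_spec": prng_spec,
        "timestamp": int(time.time()),  # self-asserted; a trusted timestamp would be stronger
    }
    return {**body, "mac": _mac(body, key)}, nonce


def open_commitment(cert: dict, seed: bytes, nonce: bytes) -> bool:
    """Check a revealed seed (and nonce) against the certificate's commitment."""
    expected = _commit(nonce, seed, cert["public_string"])
    return hmac.compare_digest(expected, cert["seed_commitment"])


def check_mac(cert: dict, key: bytes) -> bool:
    """Recompute the MAC over every field except the MAC itself."""
    body = {k: v for k, v in cert.items() if k != "mac"}
    return hmac.compare_digest(_mac(body, key), cert["mac"])


key, seed = os.urandom(32), os.urandom(32)
cert, nonce = build_certificate(b"<image bytes>", seed, "Image by Studio X",
                                vae_id="example-vae-v1", prng_spec="sha256+pcg64", key=key)
print(json.dumps(cert, indent=2))
```

The certificate only records the claim; it does not by itself show that the content correlates with the seed’s noise. That still requires the verification step, either in the clear after opening the commitment or inside a ZK proof.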

Long-Term Applications

The following applications require standardization, further research on robustness/coverage, productization at scale, or ecosystem coordination (e.g., policy, legal, hardware support).

- Provenance standards with NoisePrint integration (policy, standards, C2PA ecosystem)

- What: Extend content provenance standards to include seed-based authorship proofs and ZK proof manifests; enable interoperable, model-agnostic verification.

- Dependencies: Multi-stakeholder agreement on metadata fields (VAE ID/hash, PRNG/hash specs), governance for threshold choices, and dispute protocol schemas.

- Platform-level provenance graphs and chain-of-edits (software platforms, media)

- What: Maintain a graph of transformations and ownership claims; integrate the dispute protocol for geometric misalignments and injection-only attempts at scale.

- Dependencies: Standardized transform families; agreed-upon rules for cross-check; storage of incremental proofs across edits.

- Privacy-preserving royalty and licensing settlement (finance for creative economy)

- What: Use ZK proofs to claim usage and ownership while preserving creator privacy; automate royalty splits based on verified authorship events.

- Dependencies: On-chain/off-chain registries, ZK scalability for large media, licensing smart contracts or trusted clearinghouses.

- Hardware-assisted seed custody and proof generation (devices, secure enclaves)

- What: Store seeds in TPM/TEE/HSM; generate ZK proofs inside secure enclaves; deploy on cameras/phones with generative features to prevent seed leakage.

- Dependencies: Vendor support, enclave attestation, APIs for PRNG/hash in hardware.

- Real-time moderation and streaming verification for video (social platforms, live events)

- What: Low-latency, chunk-wise NoisePrint checks on streaming content; sliding-window verification for long media.

- Dependencies: Efficient VAE encoders for video, chunked thresholds, scalable infrastructure.

- Legal acceptance and evidentiary guidelines (policy, courts)

- What: Establish jurisprudence and guidance on seed-based/ZK-based authorship proofs; align with copyright regimes and DMCA processes.

- Dependencies: Amicus briefs, standards bodies’ endorsement, education of courts and regulators.

- Cross-modal extensions (audio, 3D, text) (multimedia, gaming, publishing)

- What: Adapt correlation-based verification to other modalities with analogous latent/noise structures and public encoders.

- Dependencies: Empirical validation of noise–output correlations; standardized encoders analogous to VAEs; modality-specific robustness studies.

- Robustness to broader transformations and adaptive attacks (security R&D)

- What: Extend the dispute protocol and verification to handle more complex transforms (e.g., nonrigid transforms, heavy crops) and targeted decorrelation attacks.

- Dependencies: Research on alignment-invariant embeddings, synchronization tricks that preserve “no modification to generation” ethos, and calibrated risk disclosures.

- Enterprise governance for synthetic training data lineage (ML governance)

- What: Ensure all synthetic training inputs have verifiable provenance, reducing contamination risk and aiding audits for regulated sectors (healthcare, finance).

- Dependencies: Organization-wide adoption; data catalog integration; policies defining acceptable FPR/TPR thresholds.

- Universal VAE registry and compatibility layers (software infrastructure)

- What: Public registries mapping VAE versions/hashes to model families; compatibility tools to select the correct encoder for verification when the generator is private.

- Dependencies: Community-maintained registry; publisher cooperation; fallbacks when VAEs are proprietary.

- Escrow-backed seed management and delegation (services, enterprises)

- What: Seed escrow with time-limited delegation and revocation, enabling teams/contractors to generate on behalf of a brand while preserving ownership claims.

- Dependencies: Key management UX, access controls, auditable policies, and ZK-bound statements attesting delegation.

- Policy-driven content labeling and consumer transparency (public policy, platforms)

- What: Mandate disclosure badges for AI-generated content with verifiable claims; empower consumers to verify authenticity and authorship without platform trust.

- Dependencies: Regulatory guidance; platform cooperation; consumer-grade verification tools.

- Research on theoretical underpinnings of noise–output correlation (academia)

- What: Formalize and generalize the optimal-transport basis for persistent correlations, especially for few-step samplers and flow models; derive tighter bounds.

- Dependencies: Access to diverse models; shared benchmarks; reproducible protocols.
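For the streaming-video item, one simple way to picture chunk-wise NoisePrint checks is to score a sliding window of latent frames against the matching slice of the seed’s noise. This toy Python sketch assumes a video latent laid out as (frames, channels, height, width), noise generated for the whole clip from a hashed seed, and a per-window threshold chosen by the caller; that threshold should be calibrated for the window’s dimensionality, since a smaller window needs a higher threshold at the same FPR. `window_scores` is a hypothetical helper, and the sketch does not handle frame drops or re-timing, which appear above as open problems.

```python
import hashlib

import numpy as np


def seed_to_noise(seed: bytes, shape) -> np.ndarray:
    digest = hashlib.sha256(seed).digest()
    return np.random.default_rng(int.from_bytes(digest, "big")).standard_normal(shape)


def window_scores(latent: np.ndarray, noise: np.ndarray, window: int, stride: int) -> list[float]:
    """Cosine score for each window of `window` frames, advancing by `stride` frames."""
    scores = []
    for start in range(0, latent.shape[0] - window + 1, stride):
        a = latent[start:start + window].ravel()
        b = noise[start:start + window].ravel()
        scores.append(float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b))))
    return scores


shape = (16, 4, 32, 32)  # (frames, channels, height, width), toy sizes
noise = seed_to_noise(b"clip-seed", shape)
latent = 0.5 * noise + np.random.default_rng(2).standard_normal(shape)
# Splice in eight foreign frames that carry no trace of this seed's noise.
latent[8:] = np.random.default_rng(3).standard_normal(latent[8:].shape)
scores = window_scores(latent, noise, window=4, stride=4)
print([round(s, 2) for s in scores])
```

The first two windows score high and the spliced windows score near zero, so per-window results can localize which segment of a long clip carries the claimed seed.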

Key Assumptions and Dependencies That Cut Across Applications

- Public encoder availability: The verifier needs the same VAE (or functionally equivalent encoder) used in generation. Many models share VAEs, but not all.

- Deterministic sampling: The generation path must be deterministic for a given seed (e.g., DDIM/flow samplers with fixed settings).

- Seed secrecy and binding: Seeds should be kept secret, or replaced by ZK proofs bound to a public string; seeds must be hashed before PRNG init to prevent targeted manipulation.

- Published primitives: The PRNG and cryptographic hash used for seed → noise must be public and secure; thresholds must be clearly documented and calibrated per latent dimensionality.

- Threat model disclosure: NoisePrints are not designed for real/fake detection; perfect robustness is unattainable. Verification claims should include assumed attack bounds (e.g., geometric transforms considered, perceptual similarity constraints).

- Compute constraints for ZKPs: ZK proofs at video scale require chunking/aggregation and careful engineering; proof generation is heavier than cosine verification and may need dedicated services.
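The deterministic-sampling assumption, and the optimal-transport intuition behind the noise imprint, can be illustrated with a toy that involves no real diffusion model. Assume the “data” distribution is a Gaussian with per-coordinate scales σ; the optimal transport map from N(0, I) is then x ↦ σ ⊙ x, and integrating the velocity field of its straight-line path with a deterministic Euler sampler returns the same output every time for the same noise, with a high cosine to that noise. The σ range, step count, and helper names are illustrative assumptions.

```python
import numpy as np


def velocity(x: np.ndarray, t: float, sigma: np.ndarray) -> np.ndarray:
    """Velocity of the straight path x_t = (1 + t * (sigma - 1)) * z, written in terms of x_t."""
    return (sigma - 1.0) * x / (1.0 + t * (sigma - 1.0))


def euler_sample(z: np.ndarray, sigma: np.ndarray, steps: int = 50) -> np.ndarray:
    """Deterministic Euler integration from t = 0 (noise) to t = 1 (data)."""
    x, h = z.copy(), 1.0 / steps
    for i in range(steps):
        x = x + h * velocity(x, i * h, sigma)
    return x


def cosine(a: np.ndarray, b: np.ndarray) -> float:
    return float(a.ravel() @ b.ravel() / (np.linalg.norm(a) * np.linalg.norm(b)))


d = 4096
sigma = np.random.default_rng(0).uniform(0.2, 3.0, size=d)  # toy per-coordinate data scales
z = np.random.default_rng(42).standard_normal(d)  # initial noise for one seed
out1, out2 = euler_sample(z, sigma), euler_sample(z, sigma)
print(np.array_equal(out1, out2), round(cosine(out1, z), 2))
```

Because the path is linear in t, each Euler step multiplies x by (1 + (i+1)h(σ-1)) / (1 + ih(σ-1)), the product telescopes, and the sampler lands exactly on σ ⊙ z up to rounding. With these scales the cosine to the initial noise is close to 0.9, while a stochastic sampler that injected fresh noise at each step would break both the exact repeatability and part of the imprint.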

Glossary

- Beta distribution: A continuous probability distribution defined on [0, 1], often used in Bayesian statistics and shape modeling; here, after an affine rescaling from [-1, 1] to [0, 1], it describes the distribution of one coordinate of a uniformly random point on the sphere. "i.e. the distribution mapped affinely from $[0,1]$ to $[-1,1]$."

- CirC: A compiler and toolchain for zero-knowledge circuits that translates high-level descriptions into constraint systems. "We use the CirC~\citep{ozdemir2022circ} toolchain to write our circuit in a front-end language called Z# and then compile it to an intermediate representation called R1CS."

- Classifier-free guidance (CFG): A technique in diffusion model sampling that adjusts the influence of conditioning (e.g., prompts) without requiring a classifier, improving fidelity to the prompt. "DDIM inversion is performed with an empty prompt, $50$ steps, and no classifier-free guidance (CFG)."

- Collision resistant: A property of cryptographic hash functions meaning it is computationally infeasible to find two distinct inputs with the same output. "We require to be deterministic, efficient, and cryptographically secure (collision resistant, pre-image resistant, and producing uniformly distributed outputs)."

- Concentration of measure phenomenon: A high-dimensional probability principle where random variables become sharply concentrated around their mean or typical value. "In high dimensions, by the concentration of measure phenomenon, such vectors are almost orthogonal, hence their inner product is tightly concentrated around zero."

- Cosine similarity: A normalized inner product measuring the angle between two vectors, used here to correlate image latents with initial noise. "We define the NoisePrint score as the cosine similarity between the embedded image and the noise:"

- DDIM inversion: A procedure that approximately recovers the initial noise of a diffusion process (from an output), enabling analysis or attacks. "DDIM inversion is performed with an empty prompt, $50$ steps, and no classifier-free guidance (CFG)."

- False positive rate (FPR): The probability of incorrectly accepting a claim (e.g., authorship) when it is false, used to calibrate verification thresholds. "We begin by assessing the reliability of verification in the absence of attacks, measuring the true positive rate (TPR) at a fixed false positive rate (FPR)."

- Flow matching: A training framework for generative models that learns velocity fields to transport noise to data via optimal transport-like flows. "Flow matching models are trained with conditional optimal transport velocity fields, and the learned velocity field is often simpler than that of diffusion models and produces straighter paths \citep{lipman2022flowmatching}."

- Gaussian noise: Random noise drawn from a normal distribution, used as the initial state in diffusion/flow generative processes. "The PRNG produces Gaussian noise "

- Geodesic metric: The distance measured along the shortest paths on a manifold (e.g., on a sphere), used in concentration inequalities. "The map is $1$-Lipschitz (with respect to the geodesic or Euclidean metric restricted to the sphere)"

- KL-regularized: Refers to regularization using Kullback–Leibler divergence to encourage distributions (e.g., latent spaces) to follow desired priors. "assuming the target is a KL-regularized high dimensional VAE latent space"

- Latent diffusion models (LDMs): Diffusion models that operate in a compressed latent space rather than pixel space to improve efficiency and quality. "We present our method in the context of latent diffusion models (LDMs)~\citep{rombach2021highresolution, podell2024sdxl, flux2024}, which have become the standard in recent diffusion literature."

- LPIPS: Learned Perceptual Image Patch Similarity, a metric that correlates with human visual perception for comparing images. "To measure the perceptual similarity between the original image and the attacked one, we use SSIM, PSNR, and LPIPS~\citep{zhang2018perceptual}."

- Mirage proof system: A zero-knowledge proof backend used to generate proofs over constraint systems like R1CS. "We then use CirC to produce a ZKP on the R1CS instance using the Mirage~\citep{251580} proof system."

- Optimal transport: A mathematical framework for transforming one probability distribution into another while minimizing a cost. "Optimal transport studies the problem of moving probability mass from one distribution to another while minimizing a transport cost function."

- Pre-image resistant: A property of cryptographic hash functions making it infeasible to find an input that hashes to a given output. "We require to be deterministic, efficient, and cryptographically secure (collision resistant, pre-image resistant, and producing uniformly distributed outputs)."

- Probability flow ODE: An ordinary differential equation describing a deterministic transport from noise to data in diffusion models, equivalent to a flow. "demonstrate that the mapping between noise and data of the probability flow ODE of diffusion models coincides with the optimal transport map"

- PRNG: Pseudorandom number generator; a deterministic algorithm that produces a sequence of numbers approximating randomness. "we first apply a fixed cryptographic hash and use the result to initialize the PRNG."

- PSNR: Peak Signal-to-Noise Ratio; a metric that quantifies the fidelity of a reconstructed or modified image relative to a reference. "To measure the perceptual similarity between the original image and the attacked one, we use SSIM, PSNR, and LPIPS~\citep{zhang2018perceptual}."

- Pseudorandom error-correcting code: A code-based technique for embedding recoverable signals that appear random, used for watermarking noise. "or by sampling the noise with pseudorandom error-correcting code~\citep{gunn2025undetectable,christ2024undetectable}."

- Regularized incomplete beta function: A special function used to express probabilities related to beta distributions and spherical caps. "where is the regularized incomplete beta function"

- Rectified flow: A flow-based generative modeling technique that straightens transport paths and reduces transport cost. "\cite{liu2022flowstraightfastlearning} prove that rectified flow leads to lower transport costs compared to any initial data coupling for any convex transport cost function , and recursive applications can only further reduce them."

- R1CS: Rank-1 Constraint System; an intermediate representation for arithmetic circuits used in zero-knowledge proofs. "then compile it to an intermediate representation called R1CS."

- SDEdit: A regeneration method that adds noise to an image and denoises it via diffusion to produce a perceptually similar image. "We further examine robustness under a wide range of attacks, including post-processing operations, geometric transformations, SDEdit-style regeneration \citep{meng2021sdedit}"

- Spherical cap: A region on a sphere defined by all points within a given angular distance from a center point. "it means that the random noise falls into a spherical cap of angular radius around ."

- SSIM: Structural Similarity Index Measure; a perceptual metric for image quality that models human visual perception. "To measure the perceptual similarity between the original image and the attacked one, we use SSIM, PSNR, and LPIPS~\citep{zhang2018perceptual}."

- True positive rate (TPR): The proportion of correctly accepted valid claims (e.g., genuine watermarks) among all valid cases. "For each attack, we report the empirical true positive rate (TPR) as a function of the false positive rate (FPR)."

- Variational autoencoder (VAE): A generative model that learns a probabilistic latent space via an encoder-decoder with a variational objective. "An LDM consists of (i) a diffusion model that defines the denoising process, and (ii) a VAE , where encodes images into latents and decodes latents back into pixels."

- Zero-knowledge proofs (ZKPs): Cryptographic protocols that prove statements without revealing any information beyond their truth. "Zero-knowledge proofs (ZKPs) allow a prover to convince a verifier that a statement is true without revealing to anything beyond its validity."
