InfiniHuman: Infinite 3D Human Creation with Precise Control (2510.11650v1)

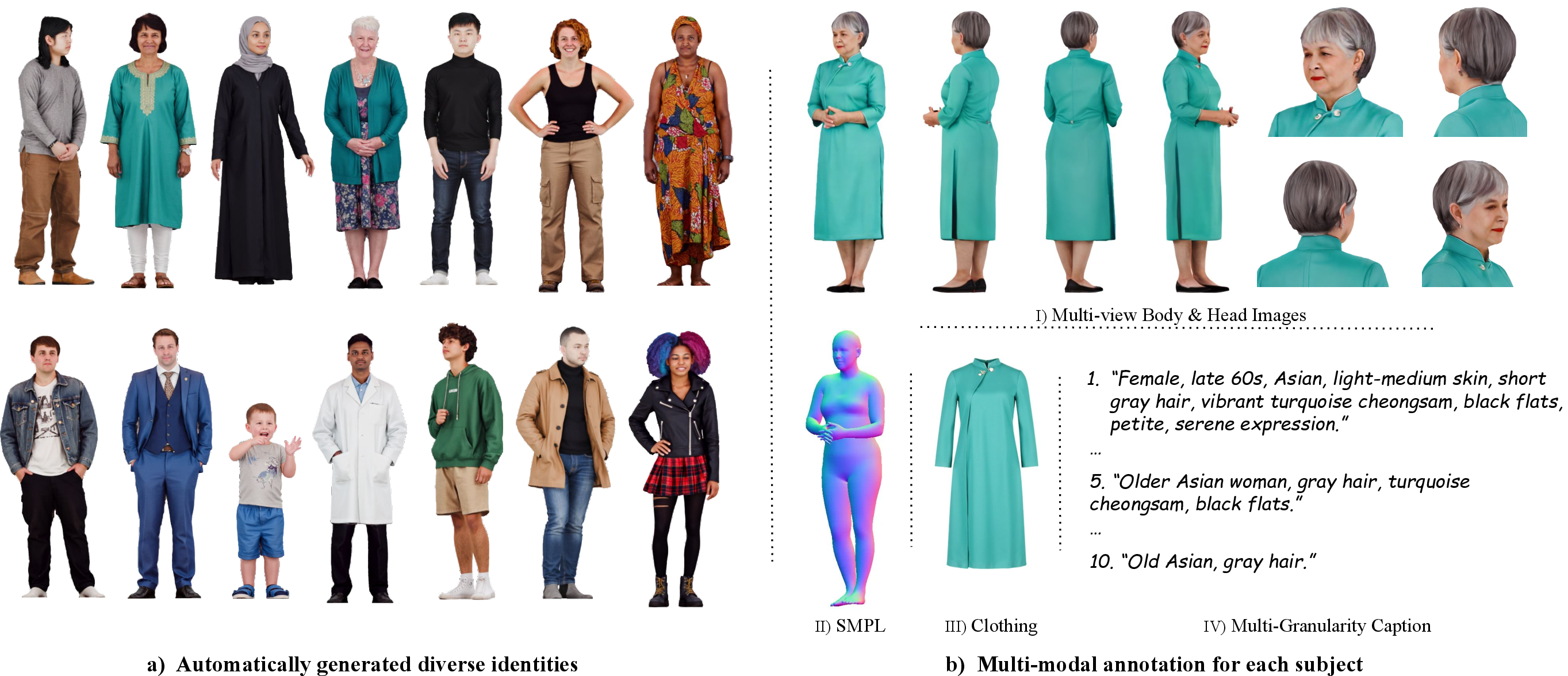

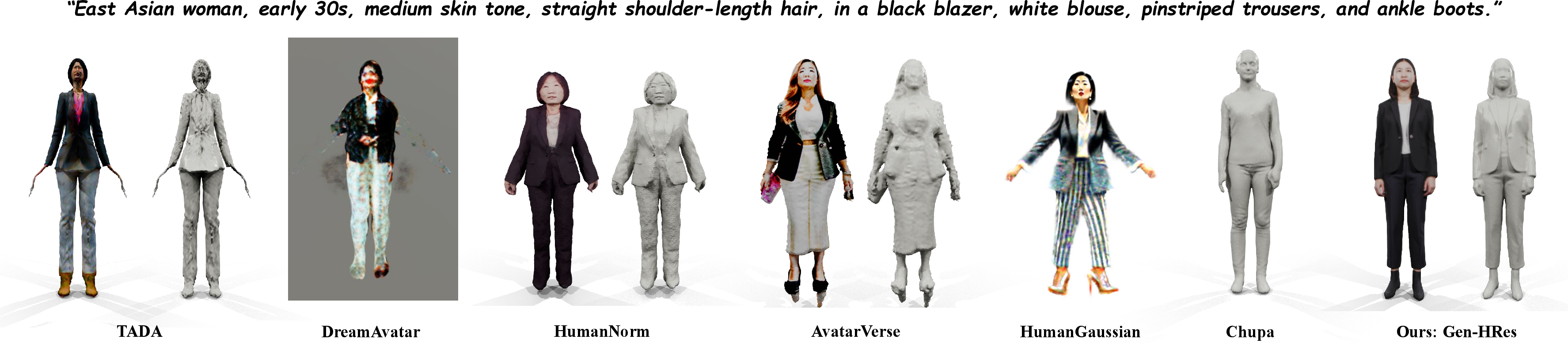

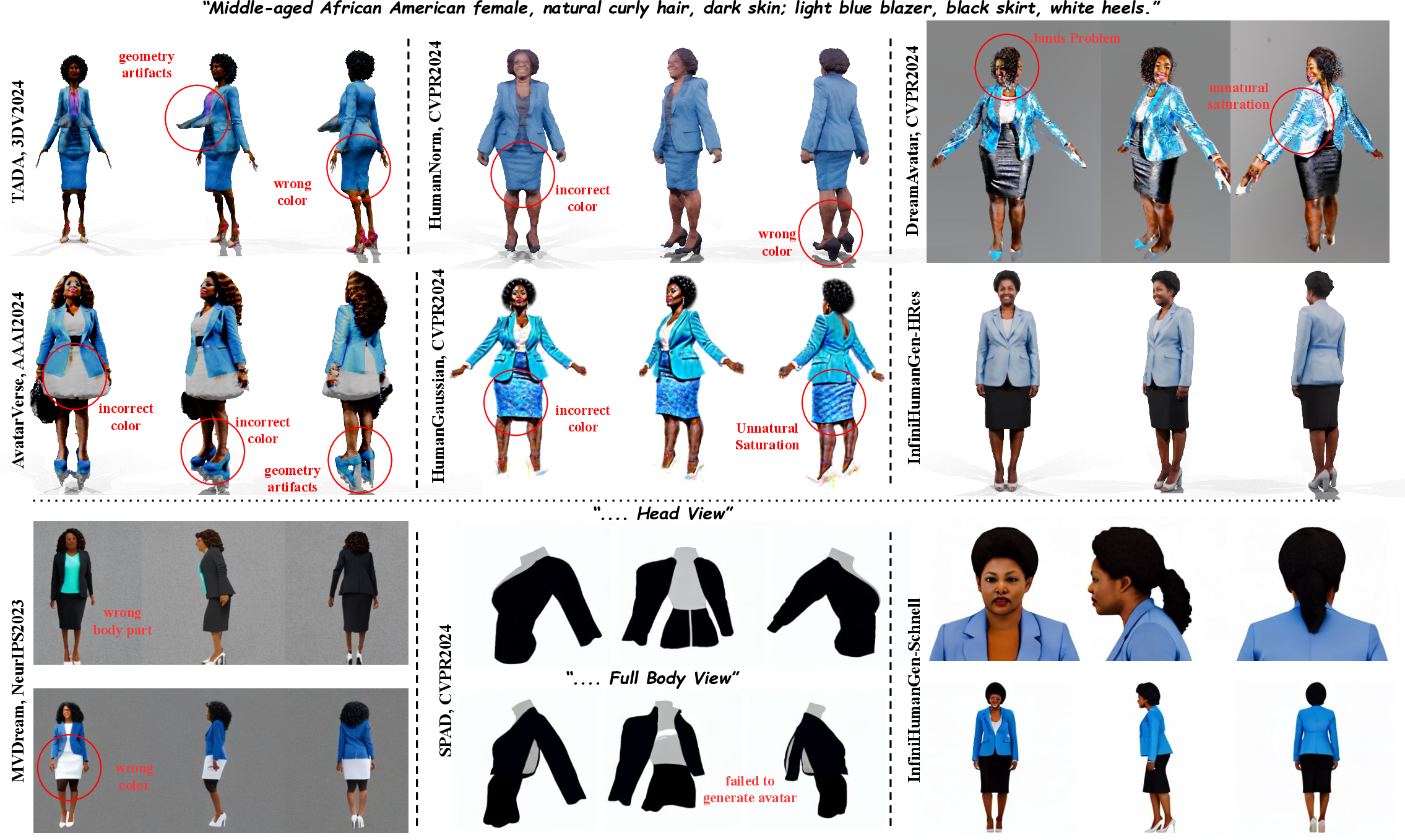

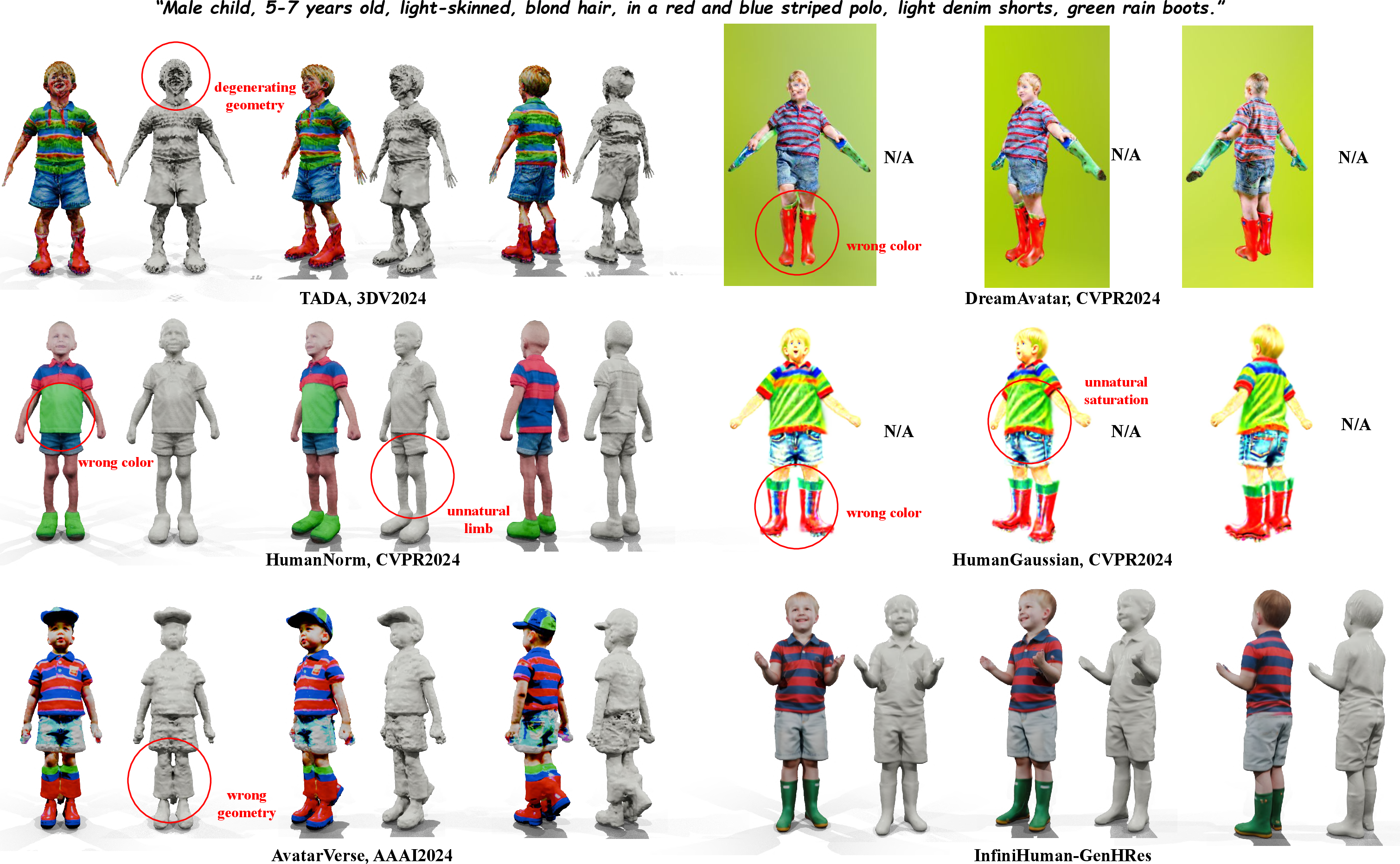

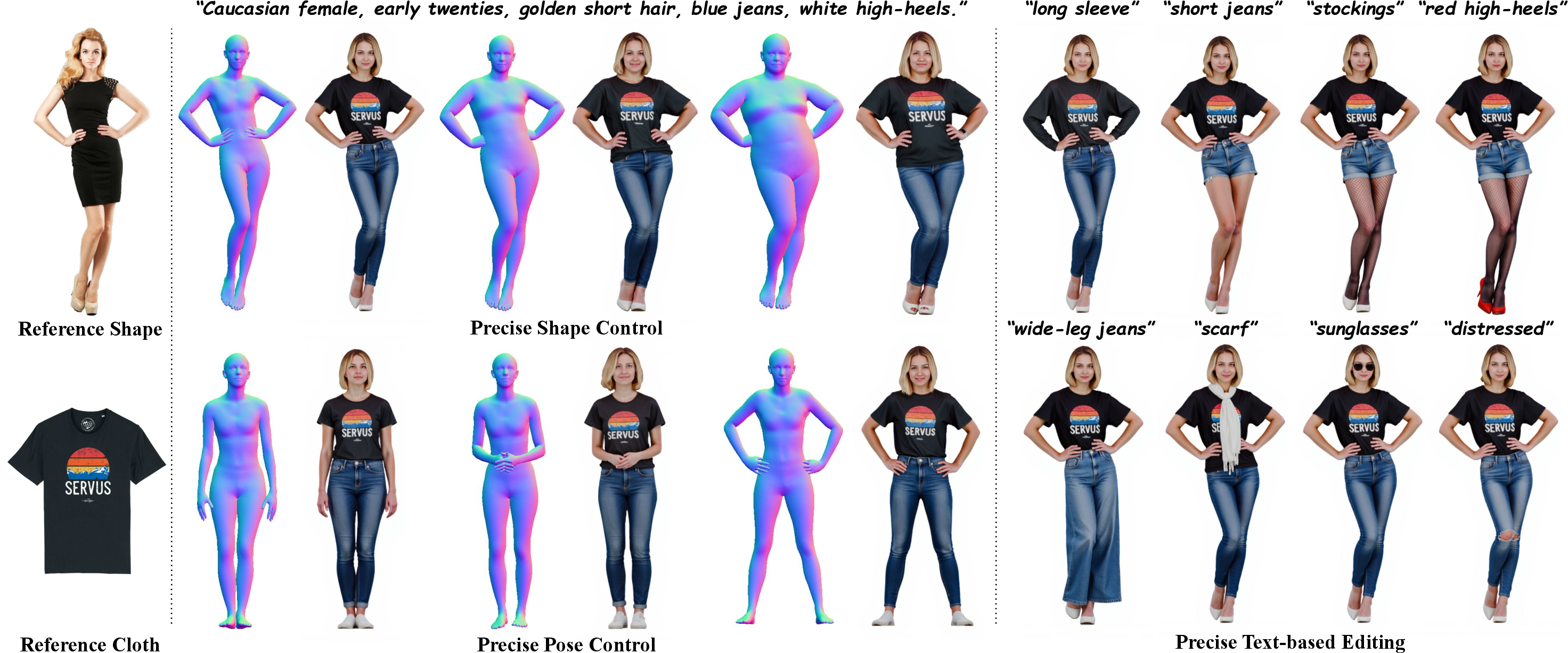

Abstract: Generating realistic and controllable 3D human avatars is a long-standing challenge, particularly when covering broad attribute ranges such as ethnicity, age, clothing styles, and detailed body shapes. Capturing and annotating large-scale human datasets for training generative models is prohibitively expensive and limited in scale and diversity. The central question we address in this paper is: Can existing foundation models be distilled to generate theoretically unbounded, richly annotated 3D human data? We introduce InfiniHuman, a framework that synergistically distills these models to produce richly annotated human data at minimal cost and with theoretically unlimited scalability. We propose InfiniHumanData, a fully automatic pipeline that leverages vision-language and image generation models to create a large-scale multi-modal dataset. User study shows our automatically generated identities are undistinguishable from scan renderings. InfiniHumanData contains 111K identities spanning unprecedented diversity. Each identity is annotated with multi-granularity text descriptions, multi-view RGB images, detailed clothing images, and SMPL body-shape parameters. Building on this dataset, we propose InfiniHumanGen, a diffusion-based generative pipeline conditioned on text, body shape, and clothing assets. InfiniHumanGen enables fast, realistic, and precisely controllable avatar generation. Extensive experiments demonstrate significant improvements over state-of-the-art methods in visual quality, generation speed, and controllability. Our approach enables high-quality avatar generation with fine-grained control at effectively unbounded scale through a practical and affordable solution. We will publicly release the automatic data generation pipeline, the comprehensive InfiniHumanData dataset, and the InfiniHumanGen models at https://yuxuan-xue.com/infini-human.

Paper Prompts

Sign up for free to create and run prompts on this paper using GPT-5.

Top Community Prompts

Explain it Like I'm 14

Overview

This paper introduces InfiniHuman, a system that can create realistic 3D people (avatars) quickly and with very precise control. It lets you describe a person with text, choose a body shape, and even pick exact clothes from an image, then automatically builds a 3D character that matches those choices. The authors also built a huge, automatically generated dataset called InfiniHumanData with 111,000 different “people” to train their models.

What questions does the paper try to answer?

The paper focuses on a simple but powerful idea: Instead of spending lots of money and time scanning real people to get training data, can we use existing AI models to automatically generate unlimited, detailed, high-quality 3D humans—and give users fine-grained control over how they look?

Put simply:

- Can AI create endless realistic 3D people?

- Can users control features like age, ethnicity, body shape, pose, and clothing?

- Can this be done faster and better than previous methods?

How did they do it? (Methods explained simply)

Think of InfiniHuman as an assembly line of smart AI helpers working together to build believable 3D people. There are two main parts:

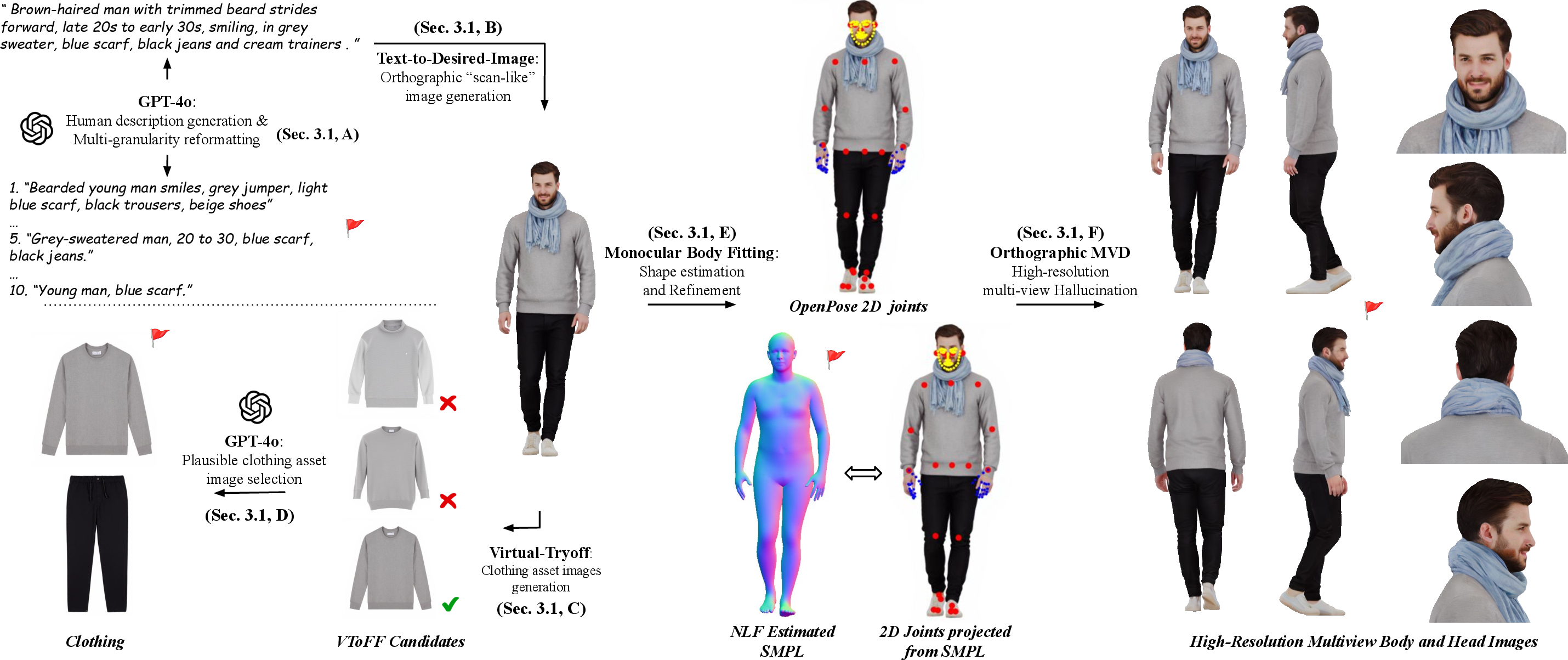

1) InfiniHumanData: Automatically making a giant training dataset

The team created a pipeline that uses several “foundation models” (large pre-trained AIs) to produce training data without manual effort.

- Multi-level text descriptions: They use AI to write detailed captions about each person (like “a middle-aged woman with curly hair wearing a denim jacket”), and also shorter versions. This helps the model understand both big-picture traits and fine details.

- Orthographic images (like blueprints): They fine-tune an image model to produce “scan-like” pictures with flat, even lighting and no camera perspective. Imagine drawings that look like technical blueprints—these make it easier to reconstruct 3D shapes.

- Clothing extraction (Virtual-TryOff): If you give a photo of a person, their system can “extract” the clothing into a clean garment image. Think of a digital tailor who can remove just the jacket from a photo so you can reuse it.

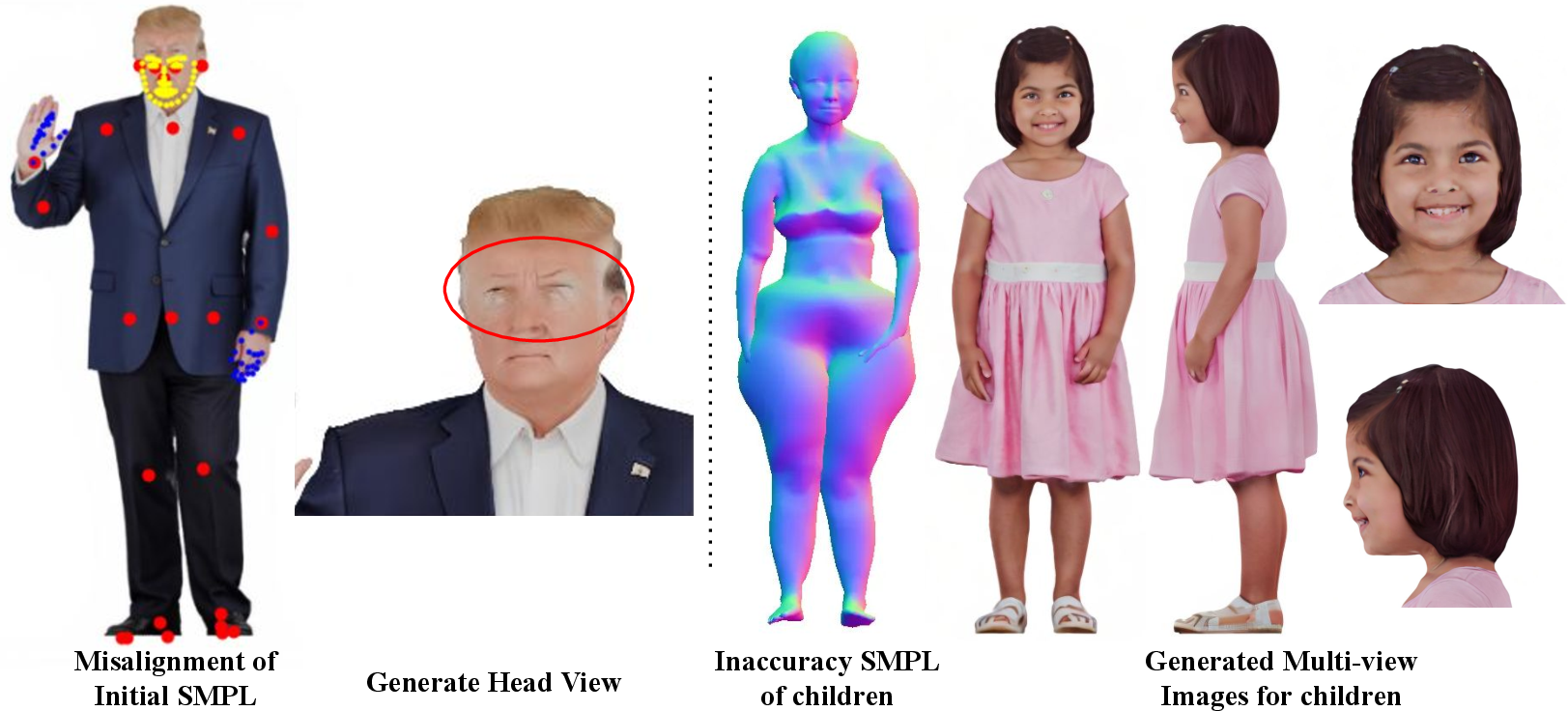

- Body shape and pose fitting: They fit a standard digital mannequin called SMPL (a common 3D human model) to the images. This aligns the 3D skeleton and shape with the pictures so everything matches.

- Multi-view generation: Using diffusion models (AI that “paints” images step by step), they create consistent views of the person from the front, side, and back, which is crucial for building a solid 3D model.

Result: InfiniHumanData contains 111,000 diverse identities with text descriptions, clothing images, multi-view pictures, and exact body shape parameters. In user tests, people often couldn’t tell these AI-generated images apart from real 3D scan renders.

2) InfiniHumanGen: The actual 3D avatar makers

Using that dataset, they train two complementary generators:

- Gen-Schnell (fast): Creates a 3D avatar in about 12 seconds using “Gaussian splatting”. Imagine building a sculpture out of tiny, colored blobs that together form the person. It’s quick and looks good, but very fine details (like small logos or facial textures) can be a bit blurry.

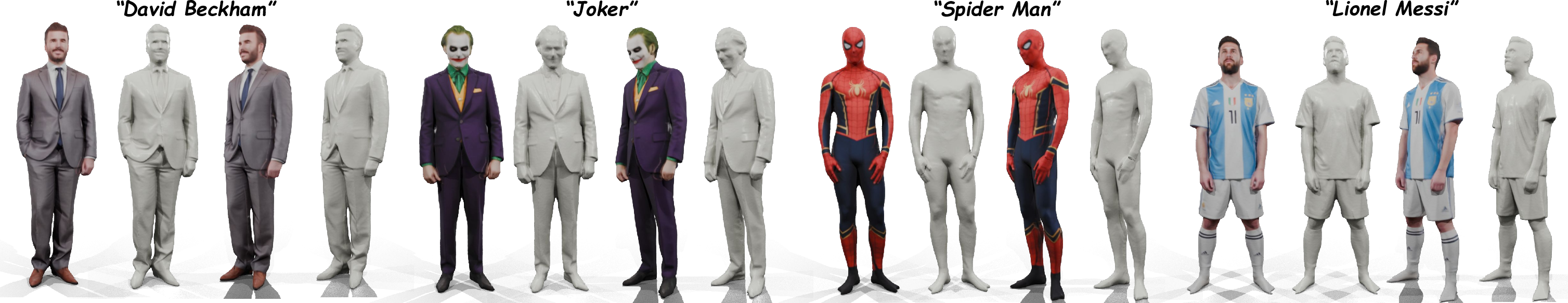

- Gen-HRes (high-resolution): Takes around 4 minutes and produces a clean, detailed 3D mesh with high-quality textures—like a carefully painted action figure. It uses all the inputs (text, body shape, clothes) and delivers very realistic results that match your instructions.

Both models accept:

- Text (“a teen boy in a red hoodie with glasses”),

- Body shape/pose (via SMPL),

- Clothing images (exact garments you want the avatar to wear).

Key ideas and technical terms in everyday language

- Foundation models: Big, general-purpose AI tools trained on huge amounts of data. The authors “distill” or repurpose their abilities for this specific task.

- Diffusion models: A type of AI that starts with noise and gradually paints a realistic image; think of it as “refining a blurry picture step by step.”

- Orthographic views: Images without perspective distortion (like blueprints). They make 3D reconstruction easier because sizes don’t change with distance.

- SMPL: A standard 3D human “mannequin” used by researchers to represent shape and pose.

- Gaussian splatting: Building a 3D scene using many tiny colored blobs—fast to render and good for quick results.

- Multi-view consistency: Making sure the front, side, and back views all depict the same person with matching details.

What did they find, and why does it matter?

Here are the main results, explained simply:

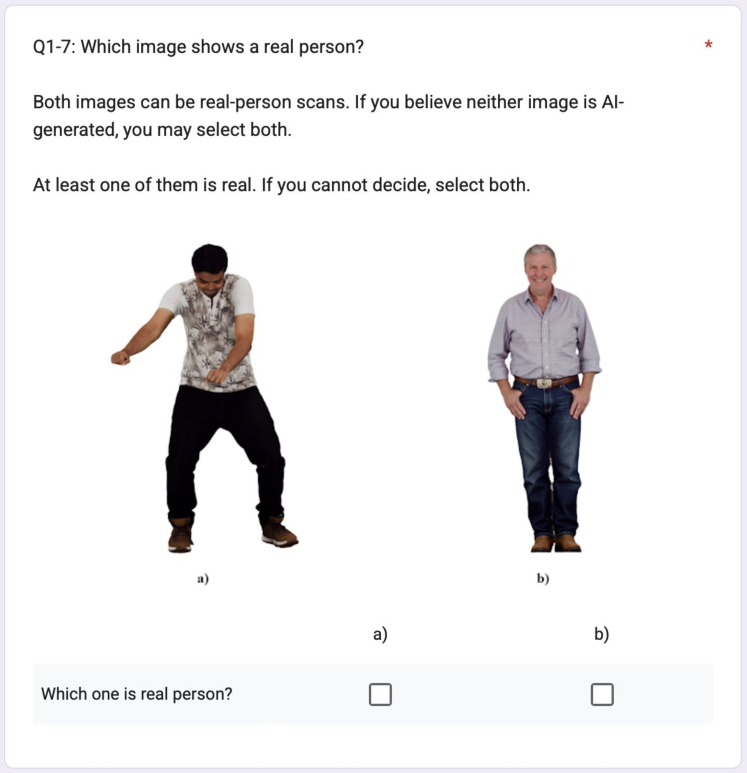

- Realism: In tests where people chose which images looked more realistic, InfiniHuman’s results often matched or beat real scan-based renders. That’s a big deal—it means AI-generated people can look just as real.

- Control: The system follows instructions very well. You can control high-level traits (age, ethnicity, gender) and precise details (specific jacket, color of glasses, accessories).

- Speed: Gen-Schnell is fast (seconds), and Gen-HRes is much faster than common high-quality methods (minutes instead of hours).

- Scale: The dataset has 111,000 different identities with rich labels. That’s huge, and it was built automatically, which saves cost and effort.

- Applications: It supports clothing try-on (change clothes while keeping the person’s identity), re-animation (move the avatar with motion data), and even 3D printing of figurines.

In short, they outperform previous state-of-the-art methods in visual quality, speed, and how precisely you can control the avatar.

Why is this important? (Implications and impact)

- Democratization: Anyone—from indie game developers to fashion startups—can create high-quality 3D people without expensive scanning or long manual work.

- New creative tools: Designers can test clothing digitally, gamers can personalize avatars, and VR apps can build diverse digital crowds and characters quickly.

- Research boost: The authors plan to release the dataset, models, and pipeline, so others can build on this work and expand it further.

- Future improvements: They suggest training faster high-resolution models and better handling of hidden body parts (like areas covered by clothes) to improve texture quality even more.

Overall, InfiniHuman shows that with smart use of existing AI models, we can generate unlimited realistic 3D humans—quickly, cheaply, and with fine-grained control over every detail.

Knowledge Gaps

Below is a concise, actionable list of the knowledge gaps, limitations, and open questions that remain unresolved by the paper. These are framed to guide follow-up research and reproducible experimentation.

- Quantitative shape/pose fidelity: The paper lacks metrics quantifying how closely generated avatars follow input SMPL pose and shape. Future work should report MPJPE/PA-MPJPE for joints, surface-to-surface distances, and silhouette IoU against SMPL guidance across poses and body shapes.

- Occluded texture reconstruction: Mesh carving from orthographic views produces artifacts in self-occluded regions (e.g., back of garments, under arms). A learned 3D generative prior (e.g., neural radiance fields or diffusion priors in UV space) for inpainting occlusions is not explored.

- High-resolution end-to-end 3D-GS: Gen-Schnell is limited by 256×256 pretraining and yields blurry facial/clothing details. An explicit path to scale multi-view diffusion and GS decoding to ≥768×768 (memory, sampling strategy, architecture) is not presented.

- Orthographic assumption and lighting: The system depends on orthographic camera and uniform lighting. Generalization to perspective cameras, complex lighting/appearance effects, and in-the-wild conditions is untested and methodologically unsupported.

- Identity control mechanism: Identity preservation relies on fixing the diffusion noise seed, which is brittle. There is no explicit identity embedding or encoder for locking and editing identity across garments/poses and sessions.

- Modular clothing representation: Outputs are fused body+clothes meshes; garments cannot be swapped post-generation. A method to produce layered, detachable garment meshes with consistent body–cloth separation is not provided.

- Back-side garment details: Clothing control uses a single 2D garment image; back/side patterns and structural details are ambiguous. The paper does not address multi-view garment asset acquisition or 3D garment reconstruction.

- Generalization of “Try-Off”: The Instruct-Virtual-TryOff module is not quantitatively evaluated on challenging real photos (transparent/reflective fabrics, layered outfits, strong occlusions, logos/patterns). Robustness benchmarks and failure analyses are missing.

- Hand and facial fidelity: There is no systematic evaluation of fine-grained hands (fingers) and facial details (pores, hairstyles, accessories). Failure cases on thin structures (glasses, jewelry, lace) and hair volumes are not analyzed.

- Animation quality and deformation: Re-animation uses SMPL skinning weight transfer, but artifacts (cloth-body interpenetrations, deformation of loose garments, hand/finger deformation) are not quantified or mitigated.

- No facial expression rigging: The approach does not generate rigs/blendshapes for expressions or speech; only body pose/shape is controlled. Methods for creating expressive face rigs from the generated assets remain unexplored.

- Dataset coverage and fairness: Demographic distribution statistics (age, gender, ethnicity, body size/shape) for the 111K identities are not reported, and no fairness audit is provided. Bias measurement and mitigation strategies are absent.

- Children and non-standard bodies: SMPL is adult-focused; fidelity on children, elderly, disabled bodies (prostheses, wheelchairs), pregnancy, and extreme BMI is not systematically evaluated, despite qualitative claims of “tolerance.”

- Identity uniqueness and deduplication: Procedures and metrics for identity deduplication (near-duplicates, mode collapse) are not described. Perceptual hashing or embedding-based dedup and coverage analysis are needed.

- Caption accuracy and label quality: GPT-generated multi-granularity captions may hallucinate or stereotype; there is no validation set, inter-annotator checks, or error rate reporting for text-label correctness.

- Evaluation metrics suitability: FID/CLIP (2D-centric) may not reflect 3D quality, geometry fidelity, or multi-view consistency. The paper omits 3D-specific metrics (e.g., normal consistency, view-consistency scores, mesh quality measures).

- Realism beyond “scan-like” renders: The user paper compares to orthographic scan renderings under uniform lighting, not to real photographs. Real-world realism and domain gap to natural images remain unassessed.

- Content safety and rights: The system can generate famous people by name; privacy/IP, consent, and likeness-rights safeguards are not established. There is no discussion of watermarking, provenance, or NSFW filtering.

- Licensing and data provenance: Use of commercial scans (e.g., Twindom) to fine-tune components lacks a clear licensing and redistribution statement. The legal status of derived assets/tuning checkpoints is not clarified.

- Proprietary dependency and reproducibility: The pipeline depends on GPT-4o for captioning and sample rejection. Open-source replacements, quality trade-offs, and reproducibility without proprietary APIs are not examined.

- Environmental and economic cost: The “theoretically unlimited” claim is not balanced with compute/energy costs per identity, end-to-end throughput, or scaling curves. Cost–quality trade-off analysis is missing.

- Perspective multi-view diffusion: Row-wise attention assumes orthographic epipolar geometry; a perspective-aware multi-view attention or 3D camera conditioning is not explored.

- Temporal consistency under long animations: The stability of textures and geometry under extended motion sequences (flicker, drift) is not evaluated quantitatively.

- Material and relighting: Outputs are baked textures; no material decomposition (albedo/normal/roughness) or relightable avatar generation is provided. Relighting and physically based rendering consistency remain open.

- Mesh from Gaussians: Gen-Schnell outputs 3D-GS without a robust meshing pipeline. Techniques to extract watertight, animation-ready meshes from Gaussians with detail retention are not addressed.

- Train/val/test splits and benchmarks: Public dataset splits, standardized prompts, and evaluation protocols for controllability (text, shape, clothing) are not defined, hindering fair comparison and progress tracking.

- Robust pose controllability: Extreme poses, self-contact, crouching/sitting, and fast motion poses are not stress-tested; failure modes of SMPL fitting and generation under such conditions are unknown.

- Security and misuse detection: No mechanisms are proposed for detecting or preventing impersonation, deepfake misuse, or harmful content generation within the pipeline.

- Ablations on multigranular text: The benefit of hierarchical caption granularity is not quantified (e.g., alignment improvements vs. single-level captions). Controlled ablations are missing.

- Open-vocabulary accessories: While some examples exist, systematic coverage and accuracy of rare accessories (cultural garments, protective gear, uniforms) are not measured.

- Integration into downstream toolchains: Interoperability (rig standards, UV layout conventions, PBR texture sets) and pipeline readiness for production (game engines, DCC tools) are not evaluated.

Practical Applications

Immediate Applications

The items below summarize practical, deployable use cases enabled by InfiniHuman’s dataset (InfiniHumanData) and generative pipeline (Gen-Schnell and Gen-HRes), with sectors, workflows, and feasibility notes.

- Rapid avatar creation for games and XR

- Sectors: gaming, AR/VR, software

- What: Generate playable characters and diverse NPCs on demand with text, body shape (SMPL), and clothing image controls. Use Gen-Schnell for rapid iteration and Gen-HRes for hero assets.

- Workflow: Prompt → Gen-Schnell (12s 3D-GS) for prototyping → Gen-HRes (~4 min textured mesh) → import via FBX/glTF/VRM into Unity/Unreal; rig via SMPL skinning weights; animate with mocap libraries.

- Tools: Gen-Schnell, Gen-HRes, SMPL, PSHuman, Gaussian Splatting; engine importers.

- Assumptions/Dependencies: License permits commercial use; available GPU (consumer or cloud); texture artifacts possible in self-occluded areas; adherence to IP when generating likenesses.

- Fashion e-commerce: scalable “model-on-garment” imagery

- Sectors: fashion, retail, advertising

- What: Automate product pages with diverse demographics wearing catalog items; generate multi-view images and 3D assets.

- Workflow: Catalog photo → Instruct-Virtual-TryOff (garment extraction) → Gen-HRes multi-view pipeline → batch renders for product pages; optional 3D viewer (USDZ/GLB).

- Tools: OminiControl-based TryOff, Gen-HRes, orthographic multi-view diffusion, PSHuman volumetric carving.

- Assumptions/Dependencies: Accurate garment extraction from mixed lighting; brand IP clearance; orthographic style required for best reconstruction; human review for color/fit edge cases.

- Digital fashion design prototyping

- Sectors: fashion design, CAD, PLM

- What: Iterate garment silhouettes and materials on many body shapes rapidly, before physically sampling.

- Workflow: Design concept → text + cloth reference → Gen-HRes multi-view → review/feedback → update prompt or cloth image → re-generate.

- Tools: Gen-HRes, SMPL conditioning; integration with CLO3D/Browzwear pipelines.

- Assumptions/Dependencies: Visual fidelity is high; physical drape/fit not simulated; pair with cloth simulation tools for production decisions.

- Film/VFX/advertising crowd generation

- Sectors: media/entertainment

- What: Create controlled, diverse extras and stand-ins; localize demographics and costumes quickly.

- Workflow: Scene brief → prompt-controlled cohort generation (Gen-Schnell for throughput) → hero extras with Gen-HRes → render passes for compositing.

- Tools: Gen-Schnell, Gen-HRes; SMPL-based re-animation; USD/FBX export.

- Assumptions/Dependencies: Legal review for likeness; integrate with studio lighting/matching pipelines; QC for consistency.

- Social telepresence and avatars for communication platforms

- Sectors: consumer apps, XR

- What: Personalized avatars aligned to user’s preferences, with fine-grained control over identity, clothing, and accessories.

- Workflow: Onboarding prompt + reference photo/garment → Gen-HRes → export to VRM/GLB → integrate with video conferencing or social VR.

- Tools: Gen-HRes; VRM pipeline; SMPL retargeting to live motion.

- Assumptions/Dependencies: Privacy-safe handling of user inputs; device rendering constraints; moderation for inappropriate content.

- Virtual try-on from real photos (TryOff → TryOn)

- Sectors: retail, influencer marketing

- What: Extract garments from photos and render on diverse models; preserve identity while swapping outfits.

- Workflow: Photo ingestion → TryOff → avatar generation across demographics → multi-view renders → A/B test conversions.

- Tools: Instruct-Virtual-TryOff (OminiControl finetune), Gen-HRes.

- Assumptions/Dependencies: Robustness to messy inputs; occlusions/complex garments; careful disclosure (synthetic imagery).

- Custom figurine fabrication

- Sectors: consumer 3D printing, collectibles

- What: Generate watertight meshes for 3D-printed figurines from prompts and wardrobe images.

- Workflow: Prompt/clothing → Gen-HRes → STL export → print → finishing.

- Tools: Gen-HRes; mesh watertightness checks; slicing software.

- Assumptions/Dependencies: Legal rights to likeness; ensure printability and structural integrity.

- Academic benchmarking and data augmentation

- Sectors: academia, ML research

- What: Use InfiniHumanData (111K identities) for training/benchmarking controllable text-to-3D, multi-view diffusion, and pose-conditioned generation; paper identity-level controllability and cross-modal alignment.

- Workflow: Download dataset → train models with multi-granularity text, SMPL, clothing images → evaluate on alignment (CLIP/FID), user studies, controllability.

- Tools: InfiniHumanData, Gen-Schnell/Gen-HRes code; SMPL; OpenPose; PSHuman.

- Assumptions/Dependencies: Synthetic-to-real domain gap; dataset license; ethical review for demographic balancing.

- Privacy-preserving synthetic data for vision/graphics

- Sectors: software, policy, compliance

- What: Replace sensitive real scans with synthetic avatars for model development and demos; reduce PII exposure.

- Workflow: Internal datasets → synthetic replacements via InfiniHumanData/Gen-HRes → internal QA → deployment.

- Tools: Dataset + generation stack.

- Assumptions/Dependencies: Policy acceptance of synthetic substitutes; transparency requirements.

- Ergonomics and UI testing with diverse body shapes

- Sectors: product design, automotive, workspace design

- What: Visual evaluations of fit/visibility across ages and body morphologies without recruiting participants.

- Workflow: Define target cohorts → generate avatars with SMPL shapes → test in virtual mockups → iterate.

- Tools: SMPL parameter control; Gen-HRes; CAD visualization.

- Assumptions/Dependencies: Visual-only; not biomechanically accurate; pair with human factors expertise.

Long-Term Applications

These use cases require further research, scaling, or integration (e.g., higher-resolution end-to-end models, physics, real-time performance, policy frameworks).

- Real-time, high-resolution end-to-end 3D generation

- Sectors: gaming, XR, live streaming

- What: Achieve Gen-Schnell-quality speed with Gen-HRes-level detail for on-the-fly avatar updates on consumer devices.

- Path to deployment: Train higher-resolution 3D-GS models; optimize inference; edge/on-device acceleration.

- Dependencies: Model scaling; hardware acceleration; memory-efficient multi-view pipelines.

- Physically accurate virtual try-on and digital fitting

- Sectors: fashion, retail, manufacturing

- What: Combine precise garment extraction with cloth simulation to predict drape, fit, and comfort across body morphologies.

- Path to deployment: Integrate with cloth simulators; calibrate materials; validate against real fittings.

- Dependencies: Material models; physics validation; standardized garment parameterization.

- Healthcare/assistive device pre-fitting and planning

- Sectors: healthcare, medtech

- What: Use controllable body shapes for preliminary fitting of orthotics, exoskeletons, and wearables; reduce clinic visits.

- Path to deployment: Calibrate with patient scans; validate anthropometrics; regulatory approvals.

- Dependencies: Medical accuracy; data governance; clinician-in-the-loop workflows.

- Crowd simulation for safety and robotics

- Sectors: robotics, urban planning, safety

- What: Populate simulation environments with photorealistic humans to train navigation, perception, and evacuation planning.

- Path to deployment: Pair avatars with realistic motion, behavior models, and sensor simulation; domain adaptation for real-world transfer.

- Dependencies: Motion realism; multi-agent behavior; sim-to-real calibration.

- Embodied AI tutors and therapists

- Sectors: education, healthcare

- What: Personalized digital humans delivering contextual instruction or therapy with cultural and age appropriateness.

- Path to deployment: Integrate with dialog models; ensure safety/ethics; longitudinal efficacy studies.

- Dependencies: Alignment, trust, accessibility; regulatory/ethical guidelines.

- Smart retail mirrors and on-device AR dressing

- Sectors: retail tech, mobile

- What: Real-time avatar generation and garment visualization on smart mirrors or smartphones.

- Path to deployment: On-device inference; camera calibration; privacy-preserving pipelines.

- Dependencies: Edge compute; UX; legal compliance.

- Synthetic demographic balancing and bias auditing at scale

- Sectors: ML governance, policy

- What: Generate matched cohorts to probe and correct model biases in vision and graphics.

- Path to deployment: Protocols for synthetic data auditing; cross-validation against real-world performance.

- Dependencies: Domain gap understanding; standardized fairness metrics; governance buy-in.

- Standards and watermarking for synthetic identities

- Sectors: policy, cybersecurity

- What: Develop asset-format standards (VRM/glTF/USD) with provenance/watermarking for synthetic humans to mitigate deepfake risks.

- Path to deployment: Industry consortia; infrastructure for provenance tracking; regulatory alignment.

- Dependencies: Cross-platform support; legal frameworks; watermark robustness.

- Industrial training simulations with controllable avatars

- Sectors: enterprise training, safety

- What: Scenario-based training with diverse avatars and scripted accessories/equipment.

- Path to deployment: Integrate with LMS; low-latency generation; analytics on performance.

- Dependencies: Real-time pipelines; content moderation; scenario authoring tools.

- Cultural heritage and large-scale historical reconstructions

- Sectors: museums, edutainment

- What: Populate time-period accurate crowds with text-controlled costumes and demographics.

- Path to deployment: Curate historically grounded prompts; expert review; public dissemination.

- Dependencies: Expert curation; IP on costume references; accuracy standards.

Cross-cutting assumptions and dependencies affecting feasibility

- Licensing and release: The paper commits to public release of code/data; actual license terms (commercial use, attribution) will determine many applications.

- Compute: Gen-HRes (~4 minutes/subject) and Gen-Schnell (~12 seconds) currently presume GPU access; scaling to enterprise or on-device use needs optimization.

- Foundation models: Reliance on FLUX, GPT-4o, OminiControl variants, OpenPose, SMPL, PSHuman; version changes and model licenses may affect reproducibility and commercial viability.

- Visual vs physical fidelity: High visual realism does not imply physical accuracy (e.g., fabric drape, biomechanics); applications needing physics require integration with simulators and validation.

- Legal/ethical constraints: Generating “famous people” or real likenesses carries IP, consent, and privacy risks; moderated workflows and filters may be necessary.

- Demographic coverage and bias: Although the dataset targets diversity, ongoing auditing and curation are required to avoid representational gaps and stereotype reinforcement.

- Orthographic constraints: Best multi-view consistency currently depends on orthographic views and uniform lighting; generalization to unconstrained camera/lighting needs further work.

- Self-occlusion artifacts: Mesh carving can produce texture artifacts in self-occluded regions; data-driven reconstruction may reduce these issues over time.

Glossary

- Barycentric interpolation: A technique to interpolate values (e.g., positions or weights) inside a triangle using its vertices’ barycentric coordinates; used to transfer motion or skinning weights to mesh surfaces. "barycentric interpolation of SMPL skinning weights"

- CLIP Score: A metric that measures semantic alignment between images and text using CLIP embeddings. "CLIP Score"

- Epipolar attention: An attention mechanism that leverages epipolar geometry to relate corresponding pixels across views, improving multi-view consistency. "simplified epipolar attention"

- Epipoles: Points where the baseline between cameras intersects the image planes; in orthographic setups they align horizontally, structuring cross-view correspondence. "horizontal epipoles"

- FID (Fréchet Inception Distance): A metric that evaluates image generation quality by comparing distributions of features from real and generated images. "We also report quantitative numbers in FID between generated results and rendered images from human scans."

- Field of View (FoV): The angular extent of the observable scene; setting a small FoV approximates orthographic viewing for pose estimation. "by setting FoV to 0.1"

- Flow matching: A generative modeling objective that learns a vector field to transport noise to data samples, enabling fast, stable synthesis. "flow matching objective:"

- Foundation models: Large, pretrained models (e.g., vision-language or diffusion) whose broad capabilities can be adapted (“distilled”) for specific tasks. "foundation models"

- Gaussian noise: Random noise with a normal distribution used in diffusion processes; fixing it can control identity or variation in generation. "initial Gaussian noise"

- Gaussian splatting: A 3D representation that renders scenes by projecting collections of 3D Gaussians, enabling fast, differentiable rendering. "Gaussian splatting output"

- Instruct-Virtual-TryOff: An instruction-driven image-to-image task that extracts clean garment images from dressed-person photos, reversing virtual try-on. "Instruct-Virtual-TryOff"

- Janus problem: A multi-view inconsistency artifact where a model produces two or more conflicting faces or appearances across views. "Janus problem"

- Latent space: The learned, compressed representation space of a model (e.g., a VAE) where images or conditions are encoded for generation. "latent space"

- LoRA adapter: A low-rank adaptation technique for fine-tuning large models efficiently by injecting small trainable matrices. "LoRA adapter"

- Multi-view diffusion (MVD): A diffusion framework that generates multiple consistent views of an object or scene, often with geometry-aware conditioning. "multi-view diffusion (MVD) model"

- Neighbor-only attention mechanisms: Attention restricted to local temporal or spatial neighborhoods (e.g., in video diffusion), which can limit global consistency. "neighbor-only attention mechanisms"

- OpenPose: A 2D keypoint detection framework for human pose estimation, used to align or refine 3D parametric body fits. "OpenPose 2D joint alignment"

- Orthographic multi-view attention: Cross-view attention structured by orthographic geometry (e.g., row-wise alignment) to enforce multi-view consistency. "orthographic multi-view attention"

- Orthographic projection: A projection model without perspective foreshortening, ideal for multi-view consistency and geometric alignment. "orthographic projections"

- Reprojection error: The discrepancy between projected 3D points and detected 2D keypoints; minimized to refine pose/shape estimation. "reprojection error"

- Skinning weights: Per-vertex weights that determine how mesh vertices move with an underlying skeleton in character animation. "SMPL skinning weights"

- Splatting decoder: A decoder that converts multi-view or 2D features into a 3D Gaussian splat representation to enforce cross-view consistency. "splatting decoder"

- SMPL: A parametric human body model (Skinned Multi-Person Linear) that represents shape and pose via learned blend shapes and joint parameters. "SMPL parameters"

- SMPL normal maps: Surface-normal renderings of a SMPL mesh that provide geometric guidance to image or diffusion models. "SMPL normal maps"

- UNet denoiser: The UNet-based neural network used in diffusion models to predict and remove noise at each step of the generative process. "UNet denoiser"

- VAE (Variational Autoencoder): A generative model that learns a probabilistic latent space, enabling encoding/decoding of images with regularization. "pretrained VAE"

- Virtual Try-On: Techniques that overlay or synthesize garments onto people in images to visualize apparel on different bodies. "Virtual-TryOn datasets"

- Volumetric carving: A reconstruction method that carves a volumetric representation using multi-view silhouettes or constraints to obtain a 3D shape. "volumetric carving"

- Watertight mesh: A 3D mesh without holes or gaps, suitable for operations like 3D printing or simulation. "watertight 3D meshes"

Collections

Sign up for free to add this paper to one or more collections.