- The paper introduces a per-scene personalized colorizer that propagates color from a key view to ensure cross-view and cross-time consistency.

- The method integrates single-view augmentation and Lab Gaussian splatting, producing more vibrant colors and better FID and matching-error scores in 3D scene colorization.

- The approach demonstrates better color realism and controllability than previous baselines, validated by extensive experiments and ablation studies.

Color3D: Controllable and Consistent 3D Colorization with Personalized Colorizer

Introduction and Motivation

Color3D addresses the challenge of reconstructing colorful 3D scenes from monochromatic multi-view images or videos, a problem of significant importance for digital art, cultural heritage restoration, and immersive content creation. Existing 3D colorization methods either average color predictions across views, which leads to desaturated, inconsistent, and uncontrollable results, or are limited to static scenes. Color3D introduces a unified, controllable framework for both static and dynamic 3D scene colorization. It uses a per-scene personalized colorizer to propagate color from a single key view to all other views and time steps, ensuring cross-view and cross-time consistency without sacrificing chromatic richness or user control.

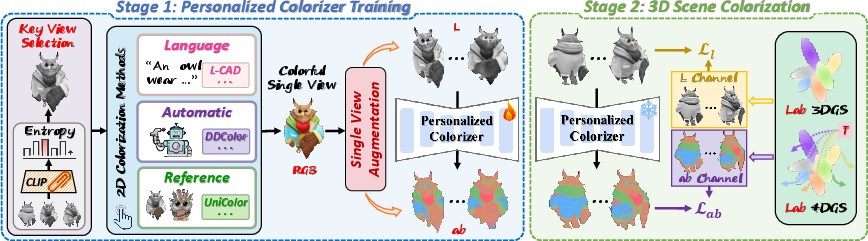

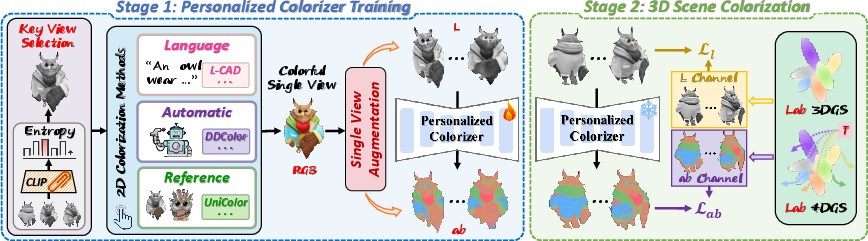

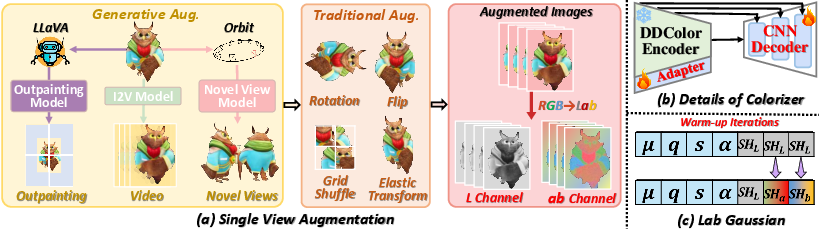

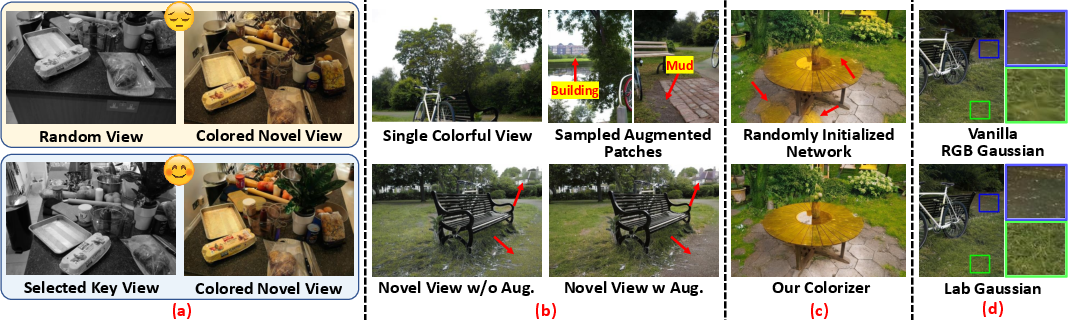

Figure 1: The overall pipeline of Color3D, illustrating key view selection, single-view colorization and augmentation, personalized colorizer fine-tuning, and consistent 3D scene reconstruction via Lab color space Gaussian splatting.

Methodology

Pipeline Overview

Color3D operates in two main stages:

- Personalized Colorizer Training:

  - Key View Selection: The most informative view is selected from the input set using a feature entropy-based criterion, maximizing the diversity of scene content captured.

  - Single-View Colorization: An off-the-shelf 2D colorization model (supporting language, reference, or automatic control) is applied to the key view.

  - Single-View Augmentation: Both generative (outpainting, image-to-video, novel view synthesis) and traditional (rotation, flip, grid shuffle, elastic transform) augmentations are applied to the colorized key view, expanding the training set while maintaining color consistency.

  - Personalized Colorizer Fine-Tuning: A scene-specific colorizer is fine-tuned on the augmented set, learning a deterministic, variation-agnostic color mapping.

- 3D Scene Colorization and Reconstruction:

  - The personalized colorizer infers consistent chromatic content for all other views/frames.

  - 3D scene reconstruction is performed using 3D Gaussian Splatting (3DGS), or 4DGS for dynamic scenes, with a dedicated Lab color space representation to decouple luminance and chrominance during optimization.
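The summary does not spell out the key-view criterion beyond "feature entropy". The sketch below is one plausible reading, offered as an assumption rather than the paper's formula: each view is represented by per-location feature vectors from some image encoder (hypothetical here), channel activations are pooled into a probability distribution, and the view whose distribution has the highest Shannon entropy (activations spread over the most channels, i.e. the most varied content) is chosen. `feature_entropy` and `select_key_view` are illustrative names.

```python
import math
from typing import List, Sequence


def feature_entropy(features: Sequence[Sequence[float]]) -> float:
    """Shannon entropy of one view's pooled channel activations.

    `features` holds per-location feature vectors from a (hypothetical) encoder.
    Activations are clipped at zero, summed per channel, normalized into a
    probability distribution, and the entropy of that distribution is returned.
    """
    num_channels = len(features[0])
    totals = [0.0] * num_channels
    for vec in features:
        for c, value in enumerate(vec):
            totals[c] += max(value, 0.0)
    mass = sum(totals)
    if mass == 0.0:
        return 0.0
    entropy = 0.0
    for t in totals:
        p = t / mass
        if p > 0.0:
            entropy -= p * math.log(p)
    return entropy


def select_key_view(view_features: List[Sequence[Sequence[float]]]) -> int:
    """Index of the view with the highest feature entropy (first one on ties)."""
    scores = [feature_entropy(f) for f in view_features]
    return max(range(len(scores)), key=scores.__getitem__)
```

For three toy views whose features activate one, three, and two channels respectively, `select_key_view` picks the second view, since a uniform spread over three channels has the largest entropy (ln 3).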

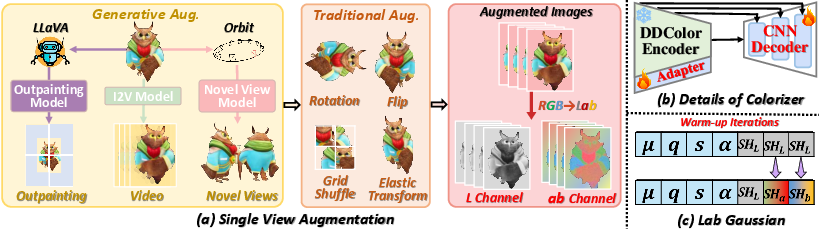

Figure 2: (a) Single view augmentation scheme combining generative and traditional augmentations. (b) Colorizer architecture with frozen DDColor encoder, trainable adapters, and CNN decoder. (c) Lab Gaussian representation with luminance warm-up and subsequent full Lab optimization.
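For the traditional branch of the augmentation scheme, a training pair consists of the grayscale key view (colorizer input) and its colorized version (target). Any geometric transform must therefore be applied identically to both images, or the pairing breaks. The sketch below shows this for grid shuffle and horizontal flip on plain nested lists; rotation and elastic transforms are omitted, and the grid size, flip probability, and function names are hypothetical. Geometric transforms only move pixels, so they never alter the colors the personalized colorizer is meant to learn.

```python
import random
from typing import List, Tuple

Image = List[list]  # H x W grid; entries may be gray values or color tuples


def hflip(img: Image) -> Image:
    """Mirror each row left to right."""
    return [row[::-1] for row in img]


def grid_shuffle(img: Image, grid: int, order: List[int]) -> Image:
    """Split img into grid x grid tiles and reassemble them in `order`.

    Assumes the height and width are divisible by `grid`.
    """
    h, w = len(img), len(img[0])
    th, tw = h // grid, w // grid
    tiles = [
        [row[c * tw:(c + 1) * tw] for row in img[r * th:(r + 1) * th]]
        for r in range(grid)
        for c in range(grid)
    ]
    out: Image = []
    for r in range(grid):
        for y in range(th):
            out_row: list = []
            for c in range(grid):
                out_row.extend(tiles[order[r * grid + c]][y])
            out.append(out_row)
    return out


def augment_pair(gray: Image, color: Image, grid: int = 2,
                 seed: int = 0) -> Tuple[Image, Image]:
    """Apply one random grid shuffle (and maybe a flip) identically to both images."""
    rng = random.Random(seed)
    order = list(range(grid * grid))
    rng.shuffle(order)          # one shared tile permutation for the pair
    flip = rng.random() < 0.5   # one shared flip decision for the pair
    outs = []
    for img in (gray, color):
        img = grid_shuffle(img, grid, order)
        if flip:
            img = hflip(img)
        outs.append(img)
    return outs[0], outs[1]
```

Sampling the random parameters once per pair, rather than once per image, is the design choice that keeps each grayscale pixel aligned with its color target.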

Personalized Colorizer Design

The colorizer architecture consists of a frozen DDColor encoder, lightweight trainable adapters, and a CNN-based decoder. Only the adapters and decoder are updated during fine-tuning, which keeps per-scene adaptation efficient while retaining the encoder's high-level semantic features. Training minimizes an L1 loss on the ab (chrominance) channels of the Lab color space, promoting robust, semantically consistent color propagation.
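To make the training target concrete, the sketch below converts sRGB pixels to CIE Lab (D65 white point, standard sRGB matrix) and computes an L1 loss over the a and b channels only. Because the colorizer receives luminance as input, only chroma is predicted and supervised. Averaging over both channels and all pixels is an assumption; the exact normalization used by Color3D is not stated, and the function names are illustrative.

```python
from typing import Sequence, Tuple

# CIE XYZ of the D65 reference white.
XN, YN, ZN = 0.95047, 1.0, 1.08883


def _linearize(c: float) -> float:
    """Undo the sRGB transfer curve for a channel value in [0, 1]."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def _f(t: float) -> float:
    """CIE Lab companding function."""
    delta = 6.0 / 29.0
    return t ** (1.0 / 3.0) if t > delta ** 3 else t / (3.0 * delta ** 2) + 4.0 / 29.0


def srgb_to_lab(rgb: Tuple[float, float, float]) -> Tuple[float, float, float]:
    """Convert one sRGB pixel (components in [0, 1]) to (L, a, b)."""
    r, g, b = (_linearize(c) for c in rgb)
    x = 0.4124564 * r + 0.3575761 * g + 0.1804375 * b
    y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b
    z = 0.0193339 * r + 0.1191920 * g + 0.9503041 * b
    fx, fy, fz = _f(x / XN), _f(y / YN), _f(z / ZN)
    return 116.0 * fy - 16.0, 500.0 * (fx - fy), 200.0 * (fy - fz)


def ab_l1_loss(pred_ab: Sequence[Tuple[float, float]],
               target_ab: Sequence[Tuple[float, float]]) -> float:
    """Mean absolute error over the a and b channels; L is the input, not a target."""
    total = sum(abs(pa - ta) + abs(pb - tb)
                for (pa, pb), (ta, tb) in zip(pred_ab, target_ab))
    return total / (2 * len(pred_ab))
```

Neutral grays map to a and b close to 0, so a grayscale input carries no chroma information; everything the loss measures must come from the learned color mapping.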

Lab Gaussian Splatting

Color3D reformulates the standard 3DGS/4DGS pipeline to operate in the Lab color space, with separate spherical harmonic (SH) coefficients for the L, a, and b channels. This decoupling allows luminance (structural fidelity) and chrominance (color) to be optimized independently, improving convergence and reducing artifacts. A warm-up phase optimizes only the L channel before color is introduced, stabilizing geometry and motion learning.
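The warm-up schedule can be pictured as a rendering loss that ignores chroma for the first training steps. The sketch below assumes an L1 photometric loss per Lab channel, a hypothetical warm-up length of 3000 steps, and hypothetical coefficient-group names `sh_L`, `sh_a`, `sh_b`; none of these values come from the paper. During warm-up only the luminance term contributes, so the a and b SH coefficients receive no gradient, and geometry (or motion, for 4DGS) is fit to luminance alone.

```python
from typing import Sequence, Tuple

Lab = Tuple[float, float, float]

WARMUP_STEPS = 3000  # hypothetical; the warm-up length is not given in the text


def trainable_sh_groups(step: int, warmup_steps: int = WARMUP_STEPS) -> Tuple[str, ...]:
    """Per-Gaussian SH coefficient groups that are optimized at this step."""
    if step < warmup_steps:
        return ("sh_L",)
    return ("sh_L", "sh_a", "sh_b")


def lab_render_loss(pred: Sequence[Lab], target: Sequence[Lab], step: int,
                    warmup_steps: int = WARMUP_STEPS, ab_weight: float = 1.0) -> float:
    """L1 loss on L during warm-up, then L1 on L plus weighted L1 on a and b."""
    n = len(pred)
    l_term = sum(abs(p[0] - t[0]) for p, t in zip(pred, target)) / n
    if step < warmup_steps:
        return l_term  # luminance-only warm-up
    ab_term = sum(abs(p[1] - t[1]) + abs(p[2] - t[2])
                  for p, t in zip(pred, target)) / (2 * n)
    return l_term + ab_weight * ab_term
```

The same rendered pixel therefore costs 10 during warm-up and 20 afterwards in a toy case where it is off by 10 in every channel, showing that chroma errors only start to matter once the L channel has had time to settle.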

Experimental Results

Static 3D Scene Colorization

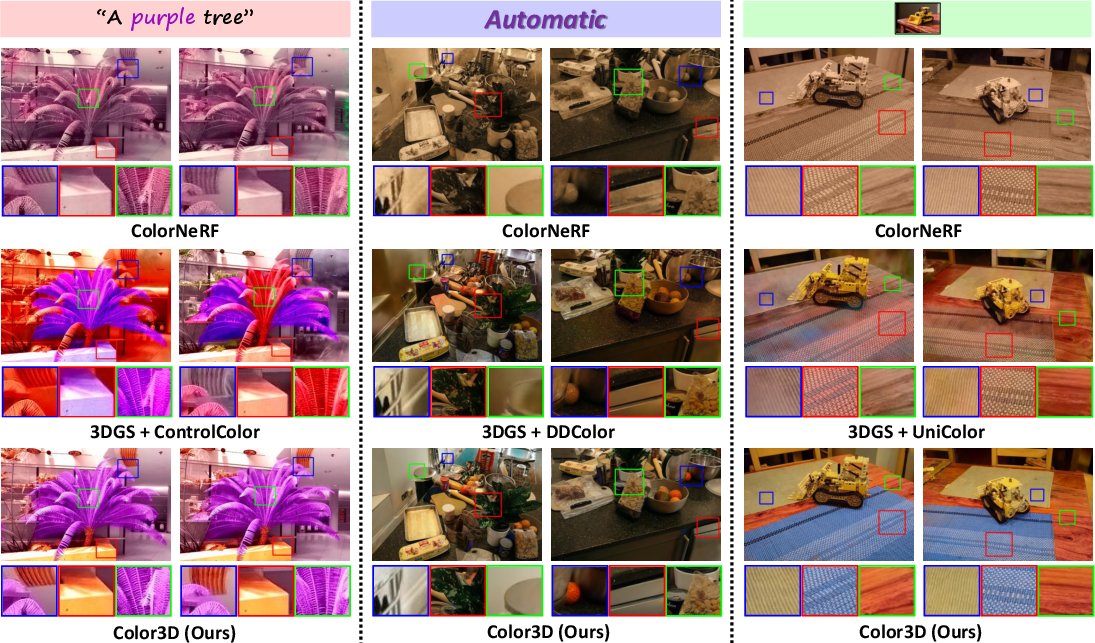

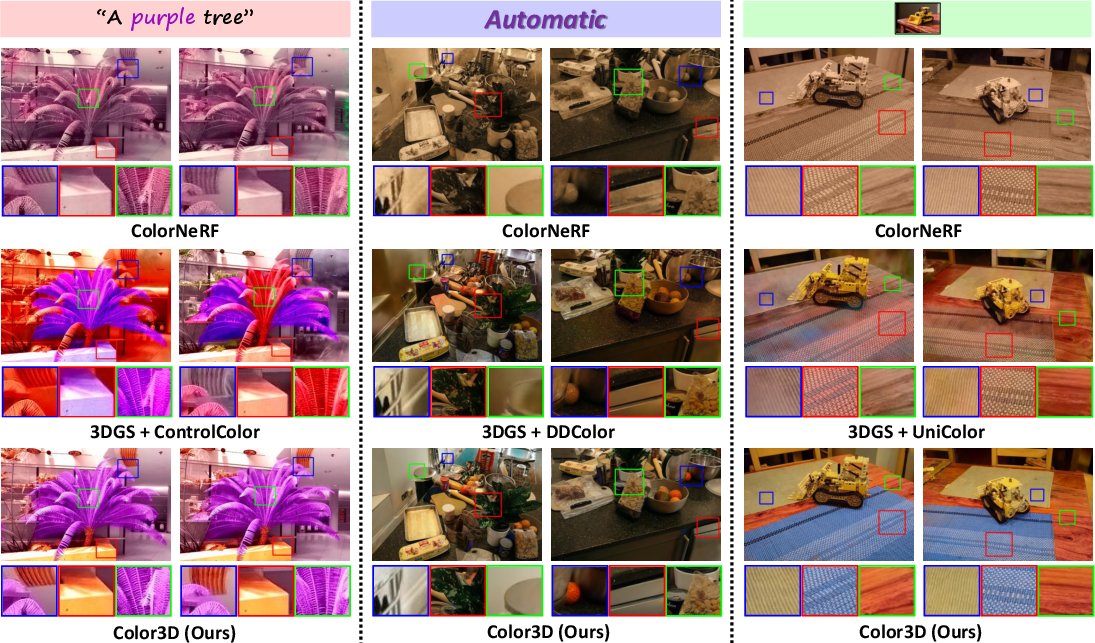

Color3D demonstrates substantial improvements over prior methods on LLFF and Mip-NeRF 360 datasets. Quantitatively, it achieves lower FID and Matching Error (ME), and higher CLIP and Colorful scores, indicating superior color realism, consistency, and user intent alignment. Qualitatively, Color3D produces more vivid, color-accurate, and multi-view consistent results compared to both direct 2D colorizer application (which suffers from severe inconsistency) and color-averaging approaches (which yield desaturated, uniform outputs).

Figure 3: Qualitative comparisons on static 3D scene colorization benchmarks. Color3D yields more color-accurate and color-rich results with strong multi-view consistency.
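The summary does not define the Colorful score. Colorization work commonly reports the colorfulness metric of Hasler and Süsstrunk, and the sketch below assumes that definition: with opponent channels rg = R - G and yb = (R + G)/2 - B, the score is the combined standard deviation plus 0.3 times the combined mean magnitude. Pixels are RGB values in 0 to 255 given as a flat list; the function name is illustrative.

```python
import math
import statistics
from typing import Sequence, Tuple


def colorfulness(pixels: Sequence[Tuple[float, float, float]]) -> float:
    """Hasler-Suesstrunk colorfulness of an RGB image given as a flat pixel list."""
    rg = [r - g for r, g, _ in pixels]
    yb = [0.5 * (r + g) - b for r, g, b in pixels]
    sigma = math.sqrt(statistics.pstdev(rg) ** 2 + statistics.pstdev(yb) ** 2)
    mu = math.sqrt(statistics.mean(rg) ** 2 + statistics.mean(yb) ** 2)
    return sigma + 0.3 * mu
```

Any neutral gray image scores exactly 0, which is why color-averaging baselines with washed-out outputs tend to score low on this kind of metric.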

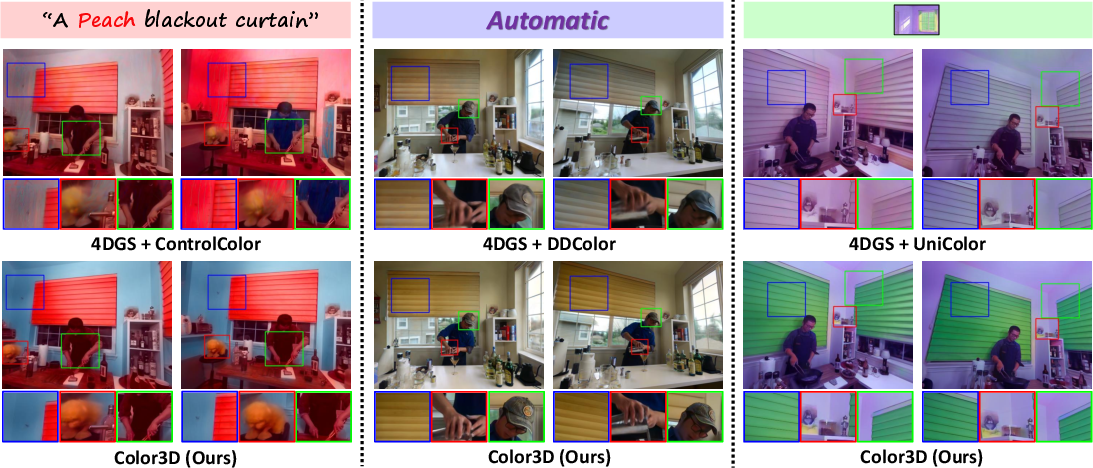

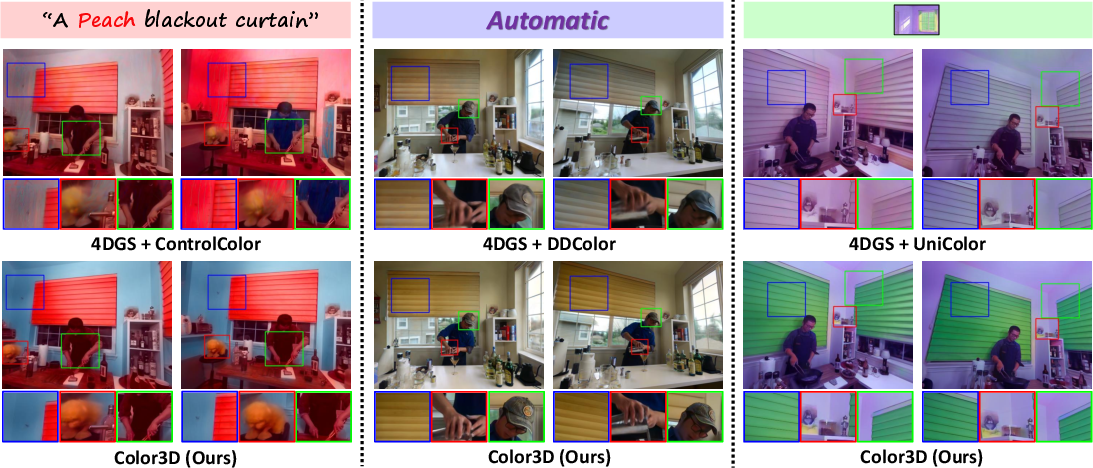

Dynamic 3D Scene Colorization

On the DyNeRF dataset, Color3D outperforms 4DGS+2D colorizer and other baselines, achieving lower FID and ME and higher CLIP scores. The method maintains spatial-temporal coherence and vivid colorization across frames, a setting that prior 3D colorization methods, limited to static scenes, do not address.

Figure 4: Qualitative comparisons on dynamic 3D scene colorization benchmarks. Color3D achieves spatial-temporal coherence and perceptually realistic color.

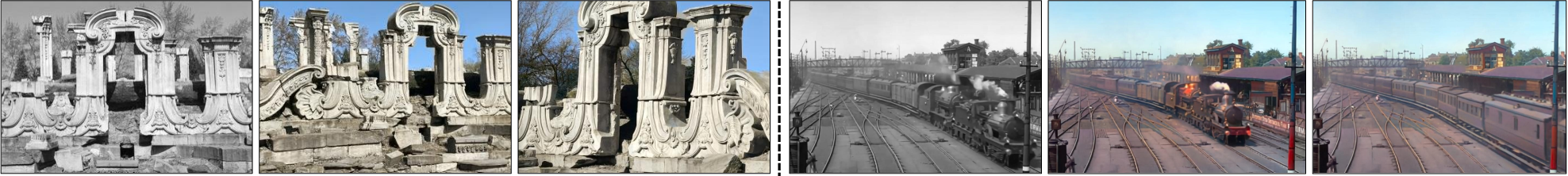

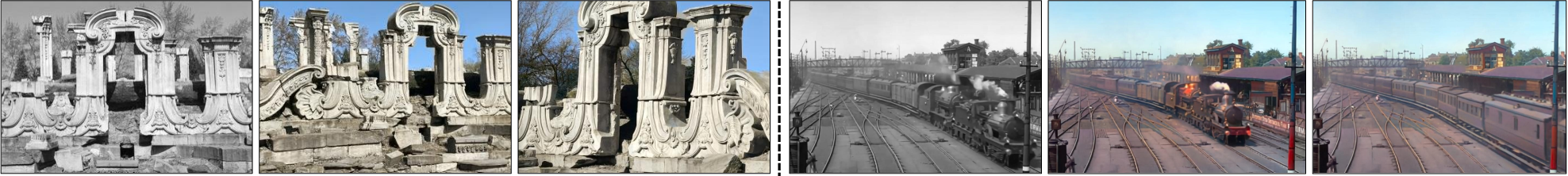

Real-World and In-the-Wild Applications

Color3D generalizes to in-the-wild multi-view images and historical monochrome videos, producing realistic, consistent colorizations suitable for legacy content restoration.

Figure 5: Left: Static 3D scene colorization from in-the-wild monochrome images. Right: Dynamic 3D scene colorization from historical monochrome video.

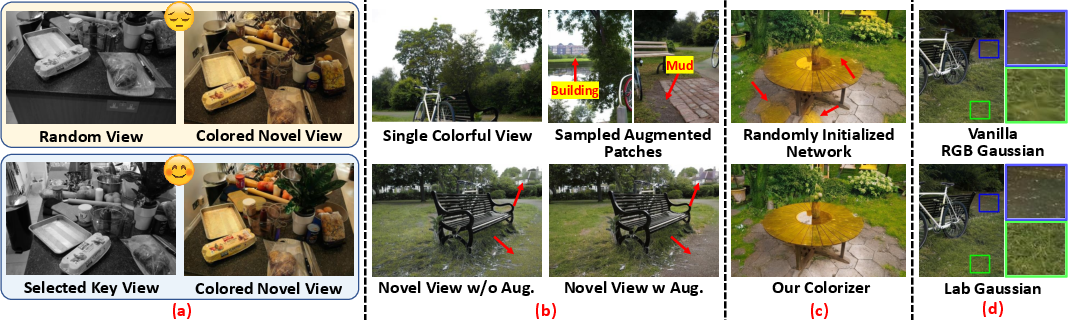

Ablation Studies

Ablation experiments confirm the contribution of each component:

- Key View Selection: Increases color richness and generalization.

- Single View Augmentation: Expands sample diversity, improving robustness to unseen content.

- Personalized Colorizer: Ensures semantically consistent, variation-agnostic color mapping.

- Lab Gaussian Representation: Reduces blurring and ghosting, enhancing structural fidelity.

Figure 6: Visual ablation study showing the impact of (a) key view selection, (b) single view augmentation, (c) personalized colorizer, and (d) Lab Gaussian representation.

Analysis and Discussion

Trade-offs and Implementation Considerations

- Personalization Overhead: The per-scene colorizer fine-tuning adds approximately eight minutes per scene, a reasonable trade-off for the achieved consistency and controllability.

- Generalization: The method is robust to moderate viewpoint and content variation but may struggle with highly out-of-domain or unobserved regions, a limitation shared by single-view-based approaches.

- Integration: Color3D is compatible with any 2D colorization model, supporting language, reference, or automatic control, and can be adapted to various 3D reconstruction backbones (e.g., NeRF, 3DGS).

- Resource Requirements: The pipeline is implemented in PyTorch and tested on RTX A6000 GPUs, with memory and compute demands comparable to standard 3DGS/4DGS pipelines.
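The integration claim follows from the pipeline's structure: the off-the-shelf 2D model is called exactly once, on the key view, and every other view goes through the per-scene colorizer. The sketch below expresses that as a structural interface. `Colorizer2D`, `FineTune`, and `colorize_scene` are hypothetical names, images are placeholder nested lists rather than tensors, and the fine-tuning step (augmentation plus adapter/decoder training) is abstracted into a callable.

```python
from typing import Callable, List, Optional, Protocol, Sequence

Image = List[List[float]]  # placeholder pixel grid; real code would use tensors


class Colorizer2D(Protocol):
    """Hypothetical interface for any 2D colorizer (language, reference, or automatic)."""

    def colorize(self, gray: Image, condition: Optional[str] = None) -> Image: ...


# Hypothetical: fine-tunes a per-scene colorizer on the (gray, color) key view
# and returns a function that colorizes any other view of the same scene.
FineTune = Callable[[Image, Image], Callable[[Image], Image]]


def colorize_scene(views: Sequence[Image], key_index: int, colorizer: Colorizer2D,
                   fine_tune: FineTune, condition: Optional[str] = None) -> List[Image]:
    """Colorize the key view with the 2D model, then propagate via the personalized colorizer."""
    key_color = colorizer.colorize(views[key_index], condition)  # only call to the 2D model
    personalized = fine_tune(views[key_index], key_color)
    return [key_color if i == key_index else personalized(v)
            for i, v in enumerate(views)]
```

Because `Protocol` matches on structure, any object with a compatible `colorize` method can be passed in without subclassing, which mirrors the "any 2D colorization model" point above.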

Comparison to Editing and Stylization Methods

3D editing (e.g., GaussianEditor) and stylization (e.g., Ref-NPR) methods are suboptimal for colorization, as they prioritize semantic or global appearance transfer over local chromatic fidelity and consistency. Color3D, by contrast, is specifically designed for faithful, controllable, and consistent colorization.

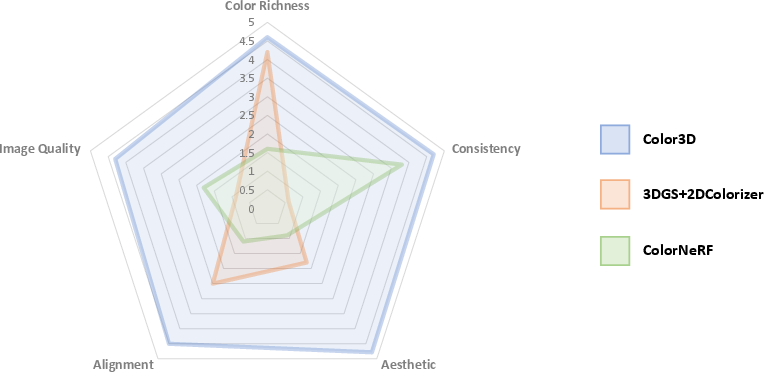

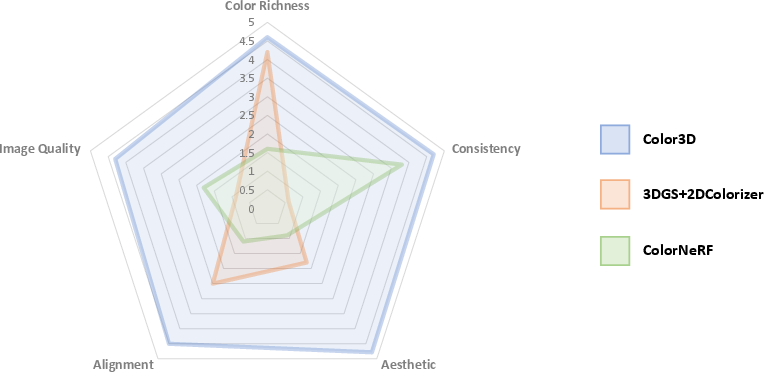

User Study

A user study shows that participants preferred Color3D over both direct 2D colorization and color-averaging baselines in all evaluated aspects: color richness, consistency, aesthetic quality, and alignment with user intent.

Figure 7: User study results showing Color3D's superior performance across all evaluated aspects.

Extensions and Future Directions

- 3D Recoloring: The framework naturally extends to 3D scene recoloring by editing the key view and propagating changes via the personalized colorizer.

- Universal Scene Manipulation: The per-scene personalization paradigm can be generalized to other scene attributes and edits, such as illumination, white balance, and style transfer.

- Generative Priors: Future work may integrate stronger generative priors to handle highly unobserved or out-of-domain content, further enhancing color diversity and robustness.
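As one illustration of a key-view edit for 3D recoloring, the sketch below rotates hue in the Lab ab plane: luminance is untouched and chroma (the length of the (a, b) vector) is preserved, so only the hue angle changes. This particular edit is an assumption chosen for illustration; the source only says the key view is edited and the change is propagated by the personalized colorizer, which presumably means re-running augmentation and fine-tuning on the edited key view. `rotate_hue` is a hypothetical helper.

```python
import math
from typing import List, Tuple

Lab = Tuple[float, float, float]


def rotate_hue(pixels: List[Lab], degrees: float) -> List[Lab]:
    """Rotate each pixel's (a, b) vector by `degrees`; L and chroma are unchanged."""
    theta = math.radians(degrees)
    c, s = math.cos(theta), math.sin(theta)
    return [(L, a * c - b * s, a * s + b * c) for L, a, b in pixels]
```

Working in Lab makes this edit a plain 2D rotation, which is harder to express cleanly in RGB, where hue, saturation, and brightness are entangled.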

Conclusion

Color3D introduces a principled, unified approach to controllable and consistent 3D colorization for both static and dynamic scenes. By recasting 3D colorization as a single-view propagation problem and leveraging a per-scene personalized colorizer, the method achieves strong cross-view and cross-time consistency, vivid chromaticity, and flexible user control. The Lab Gaussian representation further enhances structural fidelity and optimization stability. Extensive experiments and user studies validate the method's superiority over existing baselines. The per-scene personalization strategy opens new avenues for scene-level editing and manipulation in 3D vision and graphics.