The Attacker Moves Second: Stronger Adaptive Attacks Bypass Defenses Against Llm Jailbreaks and Prompt Injections (2510.09023v1)

Abstract: How should we evaluate the robustness of LLM defenses? Current defenses against jailbreaks and prompt injections (which aim to prevent an attacker from eliciting harmful knowledge or remotely triggering malicious actions, respectively) are typically evaluated either against a static set of harmful attack strings, or against computationally weak optimization methods that were not designed with the defense in mind. We argue that this evaluation process is flawed. Instead, we should evaluate defenses against adaptive attackers who explicitly modify their attack strategy to counter a defense's design while spending considerable resources to optimize their objective. By systematically tuning and scaling general optimization techniques-gradient descent, reinforcement learning, random search, and human-guided exploration-we bypass 12 recent defenses (based on a diverse set of techniques) with attack success rate above 90% for most; importantly, the majority of defenses originally reported near-zero attack success rates. We believe that future defense work must consider stronger attacks, such as the ones we describe, in order to make reliable and convincing claims of robustness.

Paper Prompts

Sign up for free to create and run prompts on this paper using GPT-5.

Top Community Prompts

Explain it Like I'm 14

Overview

This paper looks at how to properly test “defenses” that try to keep LLMs—like chatbots and AI assistants—safe. Two big problems they aim to stop are:

- Jailbreaks: tricks that make an AI break its safety rules.

- Prompt injections: hidden instructions slipped into text or tools that make the AI do something the user didn’t intend.

The main message: many current tests make these defenses look strong, but when you try smarter, more flexible attacks, most defenses fail. The authors show how to evaluate defenses using stronger “adaptive attackers” who change their strategy to beat the defense.

Key Questions

The paper asks simple but important questions:

- Are we testing AI safety defenses in a fair and realistic way?

- What happens if we use stronger, smarter attacks that adapt to the defense?

- Do popular defenses still work under those tougher tests?

- How should researchers and companies evaluate defenses so they can trust the results?

Methods and Approach

Think of defending an AI like locking your house. If you only test the lock with a weak push, it might look great. But a real burglar will try many different ways—picking, prying, sneaking—to get in. The authors test AI defenses using attackers that try hard and adapt.

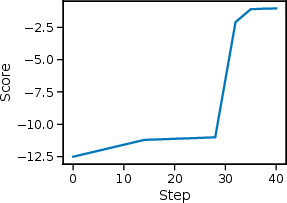

They describe a simple “attack loop” that repeats:

- Propose: come up with possible trick prompts.

- Score: try them and see how well they work.

- Select: keep the best attempts.

- Update: change the strategy based on what worked, then try again.

They tried four kinds of attackers:

- Gradient-based: like gently turning many tiny knobs to see which word changes push the AI toward dangerous behavior. This is math-heavy, and not always reliable for text.

- Reinforcement learning (RL): like teaching an AI to be a better attacker by trial and error with “rewards” for success.

- Search-based: like exploring a huge maze by trying many variations, keeping the best ones, and mixing them to get even better tricks.

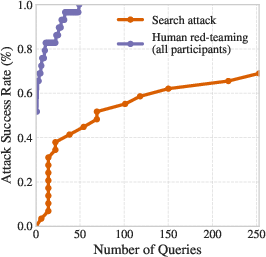

- Human red-teaming: real people creatively crafting prompts—often the most effective.

They also tested under different “access” levels to the model:

- White-box: seeing inside the model’s “engine” (full details).

- Black-box with scores: only seeing the model’s confidence for each word or token.

- Black-box generation only: just seeing the final output text.

Importantly, they don’t limit the attacker’s computing power. The goal is to see if the defense is truly strong, not just hard to break with a small budget.

Main Findings and Why They Matter

Across 12 well-known defenses, the stronger adaptive attacks worked most of the time—often above 90% success—even when the original papers reported near-zero success using weaker tests. The defenses they broke spanned several strategies:

- Prompting defenses (e.g., Spotlighting, Prompt Sandwiching, RPO): defenses that rely on carefully written instructions. These were bypassed by search and RL attacks.

- Training on attacks (e.g., Circuit Breakers, StruQ, MetaSecAlign): defenses that fine-tune models on known attack data. These didn’t generalize to new, smarter attacks.

- Detectors/filters (e.g., Protect AI, PromptGuard, PIGuard, Model Armor): separate models that try to flag dangerous prompts. Adaptive attackers still got past them, and humans were especially good at slipping through.

- “Secret knowledge” defenses (e.g., Data Sentinel, MELON): methods that hide a secret check or run a clever second pass. Attackers learned to avoid the hidden checks or make the model behave differently between runs.

Other key lessons:

- Static test sets (re-using old attack prompts) are misleading. Defenses may overfit and look strong but fail on new attacks.

- Automated safety raters (models that judge if outputs are safe) can be tricked too, so they’re helpful but not fully reliable.

- Human red-teaming remains very powerful and often outperforms automated attacks.

Why this matters: If we rely on weak evaluations, we get a false sense of security. Systems may look safe but aren’t, which could allow harmful outputs or unintended actions in the real world.

Implications and Potential Impact

This paper raises the bar for how we should test AI safety defenses:

- Treat evaluation like computer security: assume smart, adaptive attackers with time and resources.

- Don’t rely on fixed datasets of old attacks; include adaptive, evolving strategies and people.

- Use multiple methods (RL, search, humans) and stronger threat models (white-box, black-box).

- Make defenses easy to test openly (share code, allow human testing) so weaknesses are found early.

- See filters and detectors as useful—but limited—parts of a bigger safety strategy.

In short, if we want truly robust AI defenses, we must challenge them with the strongest attacks we can build. Only then can we trust that an AI will stay safe when people try to trick it.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a concise list of what remains missing, uncertain, or unexplored based on the paper. Each item is phrased to be actionable for future research.

- Lack of a unified evaluation protocol: no standardized budgets (queries, tokens, wall-clock), success criteria, or threat-model tiers to enable apples-to-apples comparison across defenses.

- Cross-defense comparability is limited: evaluations follow each defense’s original setup, preventing controlled, consistent comparisons under identical tasks, models, and metrics.

- Compute/efficiency of attacks is under-characterized: no cost–success curves, minimal-query analyses, or marginal gains per additional compute for RL, search, and gradient attacks.

- Realistic attacker constraints are not studied: results assume large compute; missing evaluations under API rate limits, cost caps, latency limits, and partial information (e.g., proxy access, intermittent feedback).

- Threat-model specification per experiment is incomplete: unclear mapping of each result to white-box, black-box-with-logits, or generation-only; repeat studies under stricter black-box conditions are needed.

- Reliability and robustness of auto-raters remain uncertain: limited auditing of evaluator susceptibility to adversarial examples; need evaluator stress tests, adversarial training, and human–model agreement analyses.

- Human red-teaming methodology lacks rigor and reproducibility: no controlled attacker-knowledge tiers, inter-annotator agreement, sample size justification, or longitudinal repeatability checks.

- Missing cost–benefit analysis of defenses: no systematic measurement of false positive rates, helpfulness degradation, task success, and user utility under adaptive attacks.

- Limited model coverage: evaluations span a small set of base models (and at least one proprietary model); need broader sweeps across architectures, sizes, instruction-tuning styles, and providers.

- Generalization across modalities is untested: no paper on multimodal models or agents with vision/audio inputs where jailbreaks and injections may differ.

- Transferability of attacks is not characterized: unknown whether learned triggers transfer across models, tasks, languages, or defense mechanisms; need universal trigger benchmarks.

- Mechanistic understanding is shallow: no causal or interpretability analyses explaining why defenses fail (e.g., how models privilege adversarial “system-like” text vs trusted context).

- Defense composability is not systematically evaluated: stacking multiple defenses is anecdotal; need controlled studies of interaction effects and correlated failure modes.

- Randomization-based defenses are not examined: no tests of randomized prompts, instruction shuffling, response randomization, or secret rotation against expectation-over-transforms–aware attackers.

- Long-context and memory vulnerabilities are underexplored: missing evaluations for multi-turn, persistent memory, and very long context windows where injections can persist or amplify.

- Multilingual and encoding-based attacks are not evaluated: no analysis of cross-lingual attacks, Unicode/whitespace obfuscations, or encoding smuggling robustness.

- Agent/tooling ecosystem security is not comprehensively tested: limited to a few agent benchmarks; need live tools, OS-level permissions, capability-based sandboxes, and end-to-end audit trails.

- Canary/secret-based defenses need principled limits: no information-theoretic or game-theoretic analysis of what secrecy can guarantee under adaptive attackers with partial knowledge.

- Robust optimization for LLM safety is not developed: open whether inner-loop adversarial training (à la PGD) is feasible for text/agents; need scalable formulations, stability analyses, and compute estimates.

- Certified robustness is absent: no formal definitions or certifiable guarantees for jailbreak/prompt-injection resistance under well-scoped perturbation models.

- Detection of ongoing adaptive attacks is not studied: lack of meta-detectors for attack patterns, query anomaly detection, and cost-aware throttling strategies validated against adaptive evasion.

- Economic realism is missing: no attacker/defender cost modeling (compute, API spend, time-to-first-breach), making practical risk unclear for different adversary profiles.

- Severity-aware evaluation is missing: beyond attack success rate, no standardized metrics for harm severity, tool-call criticality, or downstream impact scoring.

- Defensive retraining dynamics are unknown: not shown whether incorporating these adaptive attacks into training yields durable gains vs rapid overfitting and subsequent bypass.

- Post-processing/repair pipelines are not evaluated: no analysis of iterative refuse–revise loops, multi-pass sanitization, or constrained decoding as defense components.

- System-level mitigations are underexplored: little on policy engines, privilege separation, constrained interpreters, or verified tool interfaces as complementary guardrails.

- Reproducibility and openness are unclear: code, prompts, attack logs, and red-team data availability are not specified; open artifacts are needed for community verification.

- Longitudinal robustness is untested: no rolling or time-evolving evaluations to see if defenses remain effective as attackers adapt over weeks or months.

- Cross-domain transfer is not measured: unknown whether attacks crafted for classification transfer to coding, retrieval-augmented tasks, or autonomous agents.

- Ethics and dual-use governance need structure: concrete protocols for responsible release of strong adaptive attacks, safe challenge platforms, and controlled access to high-risk artifacts.

Practical Applications

Immediate Applications

Below are actionable, deployable use cases that organizations can adopt now, grounded in the paper’s findings that stronger adaptive attacks (RL, search-based, gradient-based, and human red-teaming) readily bypass many current LLM defenses.

- Upgrade pre-deployment security testing with adaptive attacks

- Sectors: software, cloud platforms, finance, healthcare, education, public-sector digital services

- Tools/workflows: integrate a PSSU-style attacker harness (Propose–Score–Select–Update) using RL (e.g., GRPO-style), LLM-guided genetic search, gradient-based methods, plus human red-teaming; test under black-box (generation/logits) and white-box access when available

- Assumptions/dependencies: sufficient compute; access to model APIs/logits; permissioned testing environments; safety review for handling harmful prompts

- Harden agentic applications (tool-use, automation) before launch

- Sectors: robotics/RPA, enterprise SaaS, customer support automation, DevOps copilots

- Tools/workflows: run adaptive prompt-injection tests on dynamic agent benchmarks (e.g., AgentDojo); enforce least-privilege tools, allowlists, explicit approvals, sandboxing, audit trails for tool calls

- Assumptions/dependencies: mature tool governance (capability scoping, isolation); QA environments mirroring production integrations

- Establish continuous human red-teaming programs

- Sectors: model providers, platform companies, regulated enterprises

- Tools/workflows: periodic red-teaming events/bug bounties; expert panels; attack library curation; use structured scorecards for attack success rate (ASR) under adaptive settings

- Assumptions/dependencies: budget for incentives; clear scopes and rules; secure logging; legal/ethical oversight

- Revise safety metrics and reporting to reflect adaptive adversaries

- Sectors: enterprise AI governance, model evaluation teams

- Tools/workflows: report ASR against adaptive RL/search/human attacks (not just static prompts); include threat-model details (white/black-box), compute budgets, and evaluation reproducibility

- Assumptions/dependencies: management buy-in; standardized documentation templates; reproducible test harnesses

- Vendor/procurement due diligence for LLM components

- Sectors: all enterprises integrating third-party LLMs or “AI firewalls”

- Tools/workflows: require vendors to produce adaptive attack evaluations; mandate red-team attestations; include minimum robustness criteria in contracts

- Assumptions/dependencies: contractual leverage; independent verification capability or trusted third-party auditors

- Treat detectors as guardrails, not guarantees

- Sectors: content moderation, agent platforms, productivity software

- Tools/workflows: combine detectors with process controls (approval steps, tool sandboxing, provenance tagging) rather than stacking detectors alone; document detector false/true positive rates under adaptive attacks

- Assumptions/dependencies: operations readiness; monitoring for failure modes; willingness to accept some friction for safety-critical actions

- Improve training pipelines beyond static adversarial datasets

- Sectors: model development teams, research labs

- Tools/workflows: incorporate on-the-fly adversarial generation (adaptive RL/search inside the training/eval loop); avoid overfitting to fixed jailbreak sets

- Assumptions/dependencies: compute scaling; data governance; careful objective design to avoid reward hacking

- Robust content moderation and evaluation for safety-critical outputs

- Sectors: social platforms, education technology, health advice assistants

- Tools/workflows: blend automated raters with targeted human review for high-risk tasks; rotate/adapt raters and prompts to reduce adversarial overfitting

- Assumptions/dependencies: human review capacity; triage policies; escalation paths

- Organizational policy updates for AI deployment

- Sectors: enterprise governance, public agencies

- Tools/workflows: require adaptive evaluation (including human red-teaming) before production; set minimum compute budgets and threat-model baselines; define rollback procedures when ASR exceeds thresholds

- Assumptions/dependencies: leadership support; policy enforcement mechanisms; clear risk tolerance

- End-user hygiene with LLM agents (daily life)

- Sectors: consumers, small businesses, educators

- Tools/workflows: avoid pasting untrusted text into agents with tool access; enable logs; prefer “constrained” modes; review tool actions before execution

- Assumptions/dependencies: agent UI/UX support for approvals and logs; user education resources

Long-Term Applications

Below are opportunities that require further research, scaling, standardization, or architectural redesign to realize robust defenses in the face of adaptive attacks.

- Standardized adaptive evaluation and certification

- Sectors: policy/regulation, standards bodies (e.g., NIST-like), industry consortia

- Tools/workflows: formal evaluation suites with defined threat models (white/black-box), compute budgets, attacker families (RL/search/gradient/human), and reporting schemas; certification programs for safety-critical deployments

- Assumptions/dependencies: multi-stakeholder coordination; public benchmarks; accredited testing labs

- More efficient automated adaptive attack algorithms

- Sectors: academia, model providers

- Tools/workflows: improved gradient estimation in discrete spaces; curriculum RL for attack discovery; scalable mutators; open-source attacker frameworks

- Assumptions/dependencies: research funding; shared datasets; access to target models for reproducibility

- Robust optimization for LLM safety (adversarial training at scale)

- Sectors: frontier model labs, defense research

- Tools/workflows: integrate adaptive attacker loops inside training; formalize attack spaces and objectives; balance safety with capability retention

- Assumptions/dependencies: large compute budgets; careful measurement to avoid reward hacking; privacy/security of training data

- Agent architecture redesign with capability-based security

- Sectors: agent platforms, robotics, enterprise automation

- Tools/workflows: strict separation of trusted/untrusted inputs; content provenance; transactional tool calls; ephemeral sandboxes; typed interfaces with policy-as-code checks; “conditional execution under audit”

- Assumptions/dependencies: platform-level changes; developer tooling; performance trade-offs

- Real-time monitoring for task drift and injection

- Sectors: operations, safety engineering

- Tools/workflows: instrumentation for activation deltas and intent drift; anomaly detection on tool-call sequences; run shadow evaluations (dual-run strategies) without leakage to attackers

- Assumptions/dependencies: telemetry access; privacy-preserving logging; robust baselines to minimize false alarms

- Formal safety properties and verification for LLM tool use

- Sectors: formal methods, safety-critical industries (healthcare, finance, energy, transportation)

- Tools/workflows: type systems and contracts for tools; provable isolation boundaries; bounded-adversary models; policy proofs for certain classes of tasks

- Assumptions/dependencies: theoretical advances; standardized tool schemas; acceptance by regulators and practitioners

- Security Ops platforms for LLMs (new product category)

- Sectors: cybersecurity, MLOps

- Tools/workflows: “LLM SecOps” suites offering attack simulation as-a-service, adaptive evaluation pipelines, incident response for agentic failures, compliance reporting dashboards

- Assumptions/dependencies: market maturation; integration with CI/CD and model registries; reliable ROI models

- Education and workforce development in LLM security

- Sectors: academia, professional certification bodies

- Tools/workflows: curricula on adaptive attack design, agent safety engineering, responsible red-teaming; certifications for evaluators/red-teamers

- Assumptions/dependencies: funding; industry partnerships; practical lab infrastructure

- Sector-specific resilient AI deployments

- Sectors: healthcare (clinical decision support triage agents), finance (research/trading assistants), education (tutors with restricted tools), energy (grid ops agents), robotics (physical-world task agents)

- Tools/workflows: tailored capability scoping, human-in-the-loop checkpoints, adaptive pre-deployment audits, continuous safety monitoring

- Assumptions/dependencies: domain regulations; integration with legacy systems; safety case development and validation

- Policy and regulatory frameworks mandating adaptive evaluations

- Sectors: government, public-sector agencies

- Tools/workflows: minimum safety requirements (adaptive red-teaming, independent audits), capability risk labeling, compute budget thresholds for evaluations, public transparency reports

- Assumptions/dependencies: legislative processes; alignment with international standards; enforcement mechanisms

- Infrastructure and API support for safer evaluations

- Sectors: model providers, cloud platforms

- Tools/workflows: secure test modes with logits/telemetry; sandboxed tool invocations for evaluation; synthetic environments mirroring production integrations

- Assumptions/dependencies: provider willingness to expose diagnostic signals; privacy-by-design; secure isolation from production data

These applications reflect the paper’s core insight: defenses that appear strong under static or weak evaluations often fail under adaptive attacks. Practical safety requires stronger evaluation regimes, defense-in-depth workflows, and architectural changes that assume capable adversaries with significant compute and ingenuity.

Glossary

- Adaptive attacks: Attacks that explicitly tailor their strategy to the design of a specific defense, often using significant compute to optimize success. "None of the 12 defenses across four common techniques is robust to strong adaptive attacks."

- Adversarial examples: Inputs intentionally perturbed at test time to cause a model to err, typically without obvious changes to humans. "adversarial examples~{szegedy2014intriguing, biggio2013evasion} (inputs modified at test time to cause a misclassification)"

- Adversarial machine learning: The paper of how learning systems behave under intentional attacks and how to make them robust. "Evaluating the robustness of defenses in adversarial machine learning has proven to be extremely difficult."

- Adversarial training: Training a model on adversarially generated inputs to improve robustness against similar attacks. "Only adversarial training that performs robust optimization--where perturbations are optimized inside the training loop--has been shown to yield meaningful robustness"

- AgentDojo: A benchmark environment for evaluating attacks and defenses in LLM agent settings. "For prompt injection, we use both an agentic benchmark like AgentDojo~\citep{Debenedetti2024AgentDojo}"

- Agentic: Referring to LLMs acting as agents with tools or actions, often requiring specialized robustness evaluation. "MetaSecAlign targets agentic robustness"

- Attack success rate (ASR): The fraction of attack attempts that achieve their objective against a defense or model. "attack success rates (ASR) as low as ."

- Backdoor defenses: Methods designed to detect or mitigate hidden triggers implanted during training that cause targeted misbehavior. "backdoor defenses~\citep{zhu2024breaking, qi2023revisiting}"

- Beam search: A heuristic search algorithm that explores a set of the best candidates at each step to generate sequences. "leveraging heuristic perturbations, beam search, genetic operators, or LLM-guided tree search"

- BERT-based classifier: A classifier built by fine-tuning the BERT LLM architecture for detection or classification tasks. "a fine-tuned BERT-based~\citep{devlin2019bert} classifier"

- Blackbox (generation only): A threat model where the attacker only sees the final generated outputs, not internal states or scores. "(III) Blackbox (generation only):"

- Blackbox (with logits): A threat model where the attacker can query the model and observe output scores (e.g., logits), but not internal parameters or gradients. "(II) Blackbox (with logits):"

- Canary signal: A hidden, secret token or phrase used to detect whether a model followed untrusted instructions or leaked protected information. "hide a secret ``canary'' signal inside the evaluation process"

- Circuit Breakers: A defense that trains models against curated jailbreak attacks to prevent harmful generations. "Circuit Breakers primarily target jailbreak attacks"

- Data Sentinel: A defense that uses a honeypot-style prompt to test whether inputs cause task redirection or injection, flagging unsafe behavior. "Data Sentinel uses a honeypot prompt to test whether the input data is trustworthy."

- Embedding space: The continuous vector space where tokens are represented for neural processing, enabling gradient-based manipulations. "by estimating gradients in embedding space"

- GCG: A gradient-based attack that optimizes adversarial token suffixes against LLMs. "For example, GCG takes 500 steps with 512 queries to the target model at each step to optimize a short suffix of only 20 tokens~\citep{zou2023universal}."

- Genetic algorithm: An evolutionary search method that uses mutation and selection to iteratively improve candidate prompts or triggers. "our version of the search attack uses a genetic algorithm with LLM-suggested mutation."

- GRPO: A reinforcement-learning algorithm variant used to update LLM policies during adversarial prompt optimization. "The weights of the LLM is also updated by the GRPO algorithm~\citep{shao2024deepseekmath}."

- HarmBench: A benchmark designed to evaluate jailbreak defenses using harmful or restricted content prompts. "For jailbreaks, we use HarmBench~\citep{mazeika2024harmbench}."

- Honeypot prompt: A planted instruction designed to reveal whether the model is following malicious or untrusted inputs. "uses a honeypot prompt"

- Human red-teaming: Expert humans crafting adversarial inputs to probe and break defenses through creativity and iteration. "human red-teaming succeeds on all of the scenarios"

- Jailbreaks: Attacks that coerce a model into producing restricted or harmful outputs contrary to its safety policies. "defenses against jailbreaks and prompt injections"

- Logits: The raw, pre-softmax scores output by a model for each token or class, often exposed in some black-box settings. "the model's output scores (e.g., logits or probabilities)"

- MELON: A defense that detects prompt injections by comparing tool-use behavior across a normal and a dummy summarization run. "MELON adopts a different but related strategy."

- Membership inference: Attacks or analyses that test whether specific data points were part of a model’s training set. "membership inference defenses~{aerni2024evaluations, choquette2021label}"

- MetaSecAlign: A defense method targeting agent robustness, evaluated on agentic benchmarks. "MetaSecAlign targets agentic robustness"

- Minimax objective: An optimization setup aiming to minimize the worst-case loss, often used to heighten sensitivity to attacks. "The detector is fine-tuned with a minimax objective"

- Model Armor: A proprietary detector/guardrail system used to filter unsafe prompts or outputs. "Protect AI, PromptGuard, and Model Armor (with Gemini-2.5 Pro as the base model)."

- PIGuard: A detector trained to classify prompts as benign or injected/jailbreaking, used as a guardrail. "PIGuard~\citep{li2025piguard}"

- Policy-gradient algorithms: RL methods that update a policy directly by estimating gradients of expected reward. "policy-gradient algorithms to progressively improve attack success."

- Poisoning defenses: Methods that prevent or mitigate training-time manipulation of data intended to subvert the learned model. "poisoning defenses~{fang2020local, wen2023styx}"

- Projected gradient descent: An iterative attack/optimization method that takes gradient steps and projects back into a valid constraint set. "simple and computationally inexpensive algorithms (e.g., projected gradient descent)"

- Prompt injections: Inputs that try to override or redirect a model’s instructions, often causing unintended actions or data exfiltration. "prompt injections (which aim to prevent an attacker from eliciting harmful knowledge or remotely triggering malicious actions, respectively)"

- Prompt Sandwiching: A defense that repeats the trusted prompt after untrusted input to keep the model focused on the intended task. "Prompt Sandwiching repeats the trusted user prompt after the untrusted input so the model does not âforgetââ it."

- PromptGuard: A detector/guardrail system trained to flag and block malicious prompt patterns. "PromptGuard~\citep{chennabasappa2025llamafirewall}"

- Protect AI Detector: A classifier-based detector for prompt injection/jailbreak content, used to filter unsafe inputs. "Protect AI Detector~\citep{deberta-v3-base-prompt-injection-v2}"

- Reinforcement learning (RL): A learning paradigm where policies are optimized via rewards from interacting with an environment, here used to generate adversarial prompts. "Reinforcement-learning methods view prompt generation as an interactive environment"

- Reward-hacking: Behaviors where a model exploits flaws in an automated scoring or reward signal to appear successful without truly meeting the objective. "susceptible to adversarial examples and reward-hacking behaviors"

- Robust optimization: Training that explicitly optimizes for worst-case perturbations within a loop to improve true robustness. "robust optimization--where perturbations are optimized inside the training loop--"

- Spotlighting: A defense that tags trusted text segments and instructs the model to prioritize them to resist injections. "Spotlighting marks trusted text with special delimiter tokens and instructs the model to pay extra attention to those segments"

- StruQ: A defense/evaluation that checks if injections can redirect generation to a fixed target phrase rather than the intended task. "StruQ specifically evaluates whether an adversary can change the modelâs generations away from the intended task toward a fixed target phrase"

- Tool calls: Actions by an LLM agent invoking external tools/APIs (e.g., file operations, emails) as part of task execution. "records all tool calls"

- Whitebox: A threat model where the attacker has full knowledge of model architecture, parameters, internal states, and gradients. "(I) Whitebox:"

Collections

Sign up for free to add this paper to one or more collections.