- The paper introduces the UWE metric to bridge the gap between offline evaluation and online driving performance.

- It shows that traditional metrics like QCE and TRE have low correlation with online safety outcomes in complex urban scenarios.

- Experimental validation shows a 13% improvement in correlation with driving score over prior offline metrics in simulation, and strong correlation with online metrics in real-world settings.

Scalable Offline Metrics for Autonomous Driving: A Technical Analysis

Introduction

The paper "Scalable Offline Metrics for Autonomous Driving" (2510.08571) addresses a critical challenge in autonomous vehicle (AV) research: the disconnect between offline evaluation metrics and actual online driving performance. Offline evaluation, which relies on pre-collected datasets and ground-truth annotations, is widely used because it is safe and scalable. However, the paper demonstrates that traditional offline metrics (e.g., L2 loss, QCE, TRE) correlate poorly with online metrics such as collision rates and driving scores, especially in complex urban scenarios. The authors propose an uncertainty-weighted error (UWE) metric, which weights offline errors by the policy's epistemic uncertainty as estimated with Monte Carlo Dropout (MC Dropout), to better predict online performance from offline data.

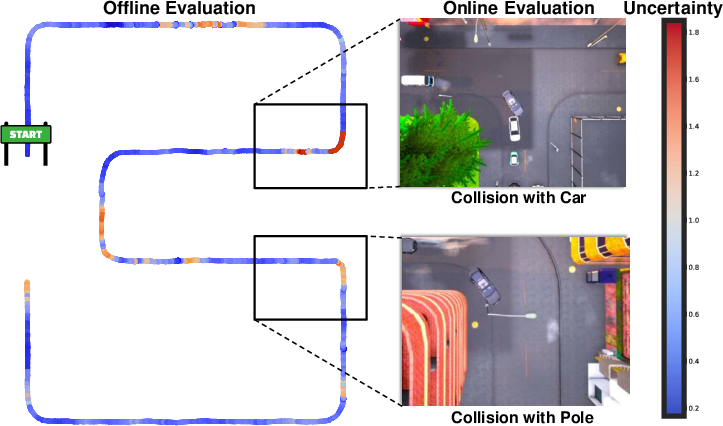

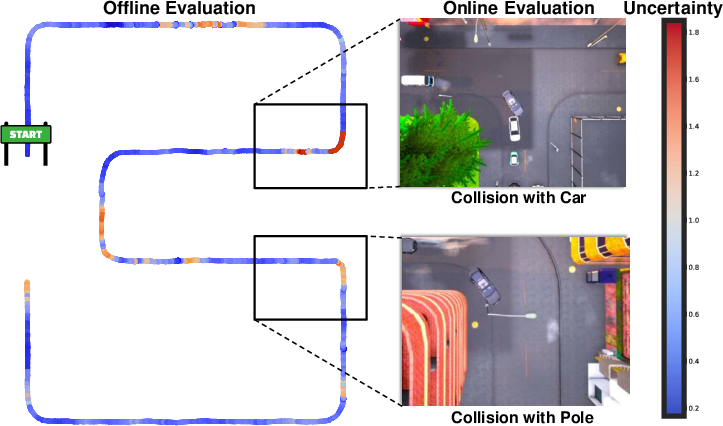

Figure 1: Model uncertainty-weighted errors computed offline can estimate online errors such as collisions and infractions, enabling scalable evaluation without privileged perception or agent prediction models.

The paper formalizes the evaluation task as estimating online performance (e.g., collision rates, infractions, route completion) using offline metrics computed over a dataset $\mathcal{D} = \{(o_i, a_i)\}_{i=1}^{N}$, where $o_i$ includes sensor observations and $a_i$ is the ground-truth action. The policy $\pi$ maps observations to actions. Offline metrics are typically expectations of a loss function $\mathcal{L}$, possibly weighted by a per-sample scalar $\alpha_i$:

$$\mathbb{E}_{(o_i, a_i) \sim \mathcal{D}}\left[\alpha_i \, \mathcal{L}\big(a_i, \pi(o_i)\big)\right]$$
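As a minimal sketch of this definition, the Python snippet below estimates the weighted expectation by averaging over a finite dataset. The function names and the choice of a per-sample L1 loss are illustrative assumptions; setting every weight to 1 recovers a plain, unweighted metric such as MAE.

```python
import numpy as np


def weighted_offline_metric(gt_actions, pred_actions, alphas, loss_fn):
    """Empirical estimate of E_{(o_i, a_i) ~ D}[alpha_i * L(a_i, pi(o_i))].

    gt_actions, pred_actions: arrays of shape (N, action_dim)
    alphas: per-sample scalar weights, shape (N,)
    loss_fn: maps (gt, pred) to per-sample losses of shape (N,)
    """
    per_sample = loss_fn(gt_actions, pred_actions)  # L(a_i, pi(o_i)) for each sample
    return float(np.mean(alphas * per_sample))      # average over the dataset


def l1_loss(gt, pred):
    # Hypothetical per-sample loss: mean absolute error over action dimensions.
    return np.mean(np.abs(gt - pred), axis=1)


gt = np.array([[0.0, 1.0], [0.5, 0.5], [1.0, 0.0]])
pred = np.array([[0.1, 1.0], [0.5, 0.3], [1.0, 0.0]])
print(weighted_offline_metric(gt, pred, np.ones(3), l1_loss))  # unweighted: plain MAE
```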

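Traditional offline metrics include mean absolute error (MAE), mean squared error (MSE), quantized classification error (QCE), thresholded relative error (TRE), and final displacement error (FDE). Online metrics, as defined in the CARLA Leaderboard, include driving score (DS), success rate (SR), collisions, infractions, and route completion.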
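For reference, the snippet below sketches common formulations of these offline metrics with NumPy. MAE, MSE, and FDE follow their standard definitions; the TRE threshold `theta` and the QCE bin edges are hypothetical values, and the exact variants used in the paper may differ.

```python
import numpy as np


def mae(gt, pred):
    return float(np.mean(np.abs(gt - pred)))


def mse(gt, pred):
    return float(np.mean((gt - pred) ** 2))


def fde(gt_traj, pred_traj):
    # Trajectories of shape (N, T, 2): Euclidean distance between final waypoints, averaged over N.
    return float(np.mean(np.linalg.norm(gt_traj[:, -1] - pred_traj[:, -1], axis=-1)))


def tre(gt, pred, theta=0.1, eps=1e-6):
    # Assumed formulation: fraction of samples whose relative error |a - a_hat| / |a| exceeds theta.
    rel = np.abs(gt - pred) / (np.abs(gt) + eps)
    return float(np.mean(rel > theta))


def qce(gt, pred, edges=(-0.1, 0.1)):
    # Assumed formulation: quantize actions into bins (here left / straight / right)
    # and report the fraction of samples whose predicted bin differs from the true bin.
    return float(np.mean(np.digitize(gt, edges) != np.digitize(pred, edges)))


steer_gt = np.array([0.0, 0.5, -0.3])
steer_pred = np.array([0.15, 0.4, -0.3])
print(mae(steer_gt, steer_pred), mse(steer_gt, steer_pred), tre(steer_gt, steer_pred), qce(steer_gt, steer_pred))
```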

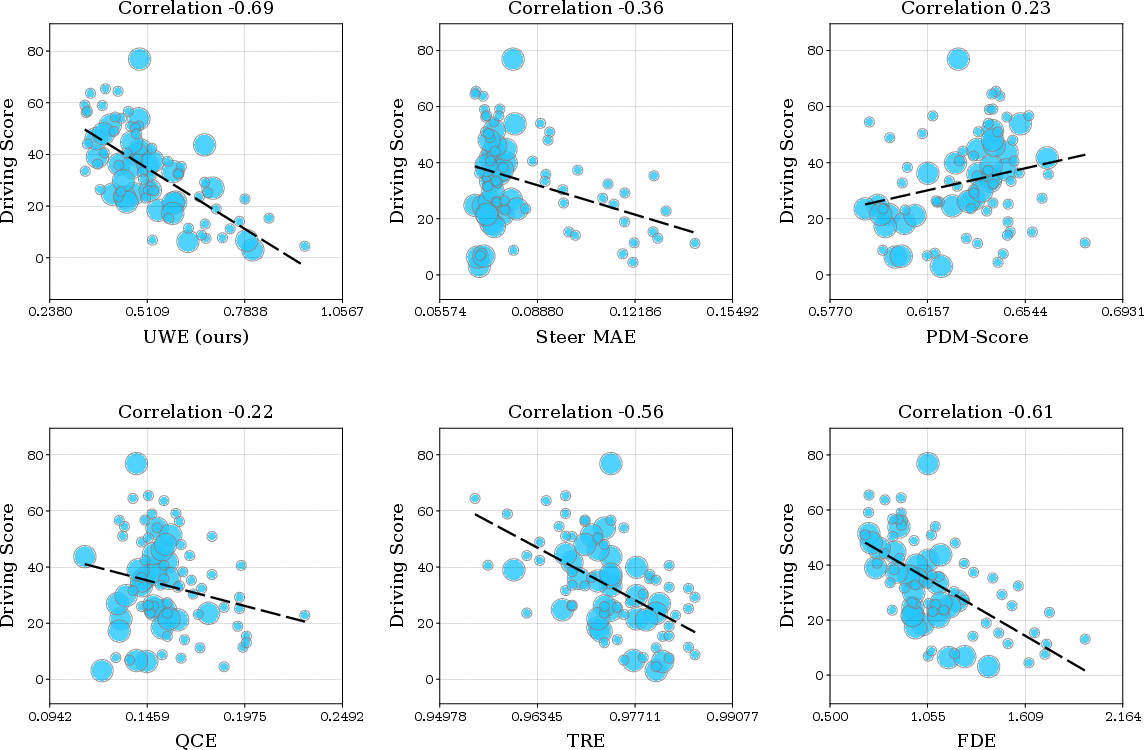

Limitations of Existing Offline Metrics

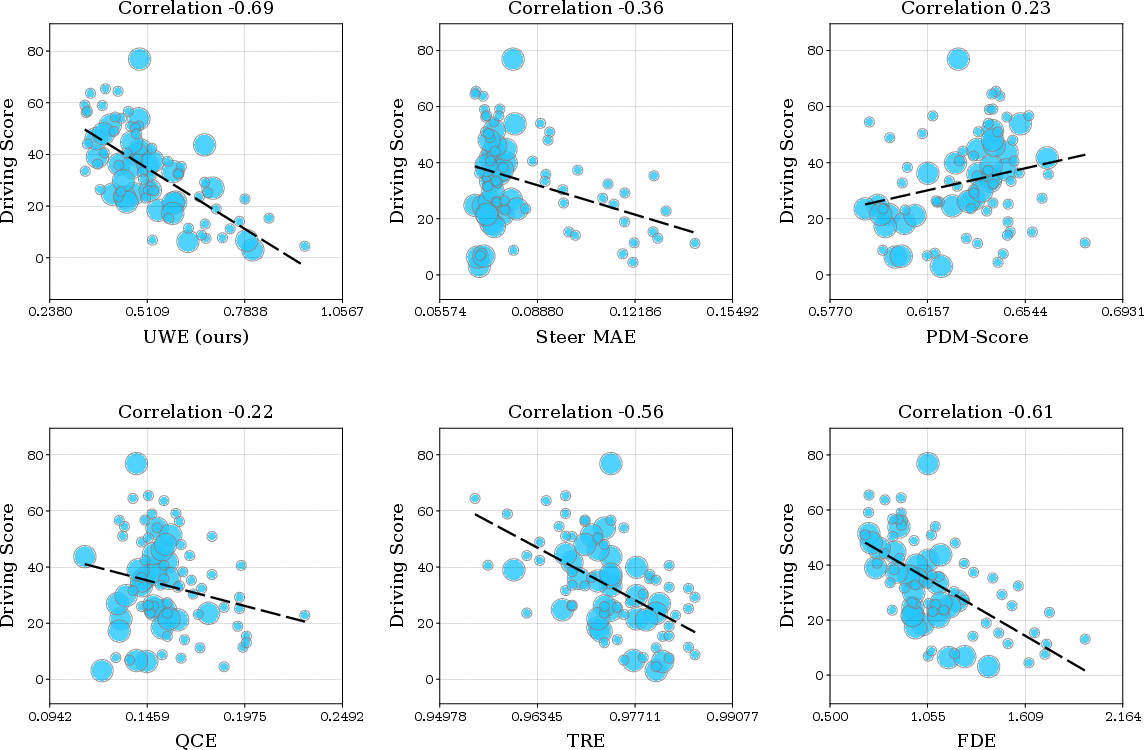

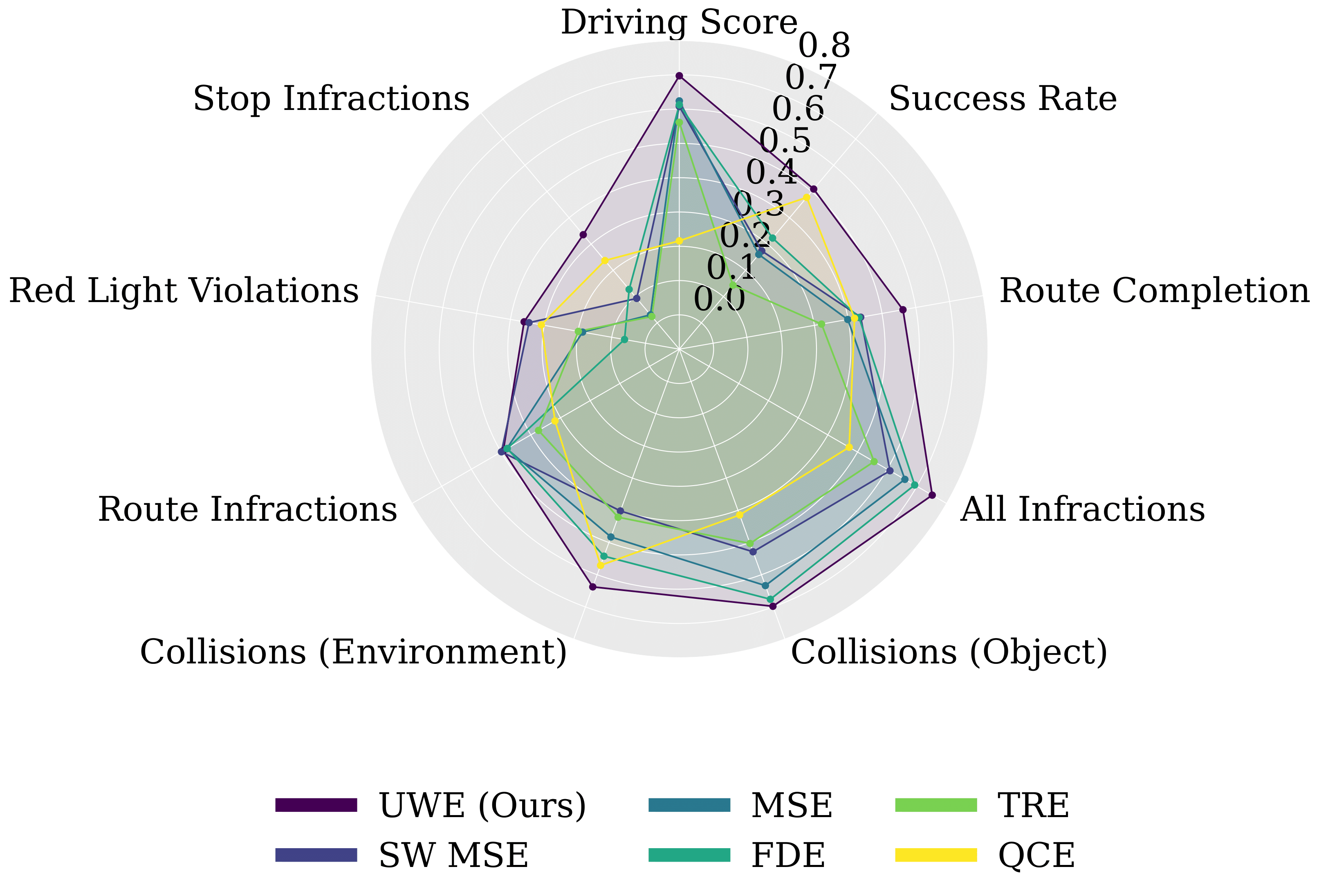

Through extensive simulation experiments in CARLA (Longest6 benchmark, 36 routes, diverse weather/town settings), the paper demonstrates that standard offline metrics (QCE, TRE, FDE) have low correlation with online driving score and infractions. For example, QCE and TRE, previously reported as effective, show Pearson correlations of 0.22 and 0.56, respectively, with driving score in realistic settings. This is attributed to:

- Data imbalance: Real-world datasets are dominated by uneventful driving, obscuring performance in safety-critical scenarios.

- Metric insensitivity: L2/MAE metrics cannot distinguish between maneuvers with identical errors but different safety outcomes.

- Closed-loop compounding: Minor errors in online deployment can propagate, leading to catastrophic failures not captured offline.
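The metric-insensitivity point can be made concrete with a toy one-dimensional example. All numbers below are hypothetical: the ground truth keeps the lane center, negative lateral offsets point left, and an obstacle occupies lateral positions from -1.2 m to -0.4 m. Two predictions with identical L2 error lead to very different safety outcomes.

```python
# Toy 1-D illustration with hypothetical values (meters, negative = left).
gt_offset = 0.0          # expert keeps the lane center
obstacle = (-1.2, -0.4)  # lateral interval occupied by an adjacent vehicle


def l2_error(pred):
    # For a scalar action, the L2 error is the absolute deviation from ground truth.
    return abs(pred - gt_offset)


def collides(pred):
    # Collision if the predicted lateral position falls inside the obstacle interval.
    return obstacle[0] <= pred <= obstacle[1]


for name, pred in [("drift right", 0.5), ("drift left", -0.5)]:
    print(f"{name}: L2 = {l2_error(pred):.2f}, collision = {collides(pred)}")
```

Both predictions score the same offline, yet only the left drift causes a collision, which is the kind of distinction an unweighted L2 or MAE metric cannot capture.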

Figure 2: Correlation analysis of offline metrics (TRE, QCE, PDM, FDE) with online driving score in simulation; most metrics show poor correlation except for the proposed UWE.

Uncertainty-Weighted Error (UWE) Metric

The core contribution is the UWE metric, which weights offline errors by the model's epistemic uncertainty. Uncertainty is estimated via MC Dropout: for each input, $K$ stochastic forward passes are performed, and the variance $u_i$ of the predictions is computed. The UWE is then:
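The MC Dropout procedure can be sketched in NumPy as follows. The two-layer policy, its random weights, the dropout rate, and the reduction of the per-dimension variance to a single scalar u_i are all assumptions made for illustration; in practice the same loop would run over a trained driving policy.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy policy: one ReLU hidden layer with dropout, mapping an 8-D
# observation feature vector to a 2-D action. Random weights stand in for a trained model.
W1 = rng.normal(size=(8, 32))
W2 = rng.normal(size=(32, 2))


def policy_forward(obs, drop_p, dropout_rng):
    hidden = np.maximum(obs @ W1, 0.0)
    # Dropout stays active at inference: zero each unit with probability drop_p and
    # rescale the survivors (inverted dropout) so the expected activation is unchanged.
    keep = dropout_rng.random(hidden.shape) >= drop_p
    hidden = hidden * keep / (1.0 - drop_p)
    return hidden @ W2


def mc_dropout_uncertainty(obs, k=50, drop_p=0.2, seed=0):
    """Return the mean prediction (N, 2) and a scalar uncertainty u_i per input (N,).

    u_i is the predictive variance over K stochastic passes, averaged over action
    dimensions; this reduction to a scalar is an assumption.
    """
    dropout_rng = np.random.default_rng(seed)
    samples = np.stack([policy_forward(obs, drop_p, dropout_rng) for _ in range(k)])  # (K, N, 2)
    return samples.mean(axis=0), samples.var(axis=0).mean(axis=-1)


obs = rng.normal(size=(4, 8))
mean_action, u = mc_dropout_uncertainty(obs)
print(mean_action.shape, u.shape)  # (4, 2) (4,)
```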

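$$\mathrm{UWE} = \sum_{t=1}^{n} \beta_t \, \mathbb{E}_{(o_i, a_i) \sim \mathcal{D}}\left[u_i^{\gamma} \, \mathcal{L}_t\big(a_i, \pi(o_i)\big)\right]$$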

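where $\mathcal{L}_t$ are different offline metrics, $\beta_t$ are optimized weights, and the exponent $\gamma$ controls how strongly uncertainty reweights the errors. Each term is an instance of the weighted offline metric defined earlier with $\alpha_i = u_i^{\gamma}$, and $\gamma = 0$ recovers the unweighted metrics. This approach prioritizes errors in uncertain (rare or challenging) scenarios, which are more likely to cause online failures.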
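A minimal sketch of the UWE formula, assuming per-sample losses for each metric have already been computed and the uncertainties come from a procedure such as MC Dropout. How the paper fits the weights β_t is not reproduced here; `betas` is simply passed in.

```python
import numpy as np


def uwe(uncertainty, per_metric_losses, betas, gamma=1.0):
    """Uncertainty-weighted error: sum_t beta_t * mean_i[u_i ** gamma * L_t(i)].

    uncertainty: (N,) epistemic uncertainty u_i per sample
    per_metric_losses: (T, N) per-sample losses for T offline metrics
    betas: (T,) metric weights, assumed to be given
    """
    weights = np.asarray(uncertainty, dtype=float) ** gamma   # alpha_i = u_i ** gamma
    losses = np.asarray(per_metric_losses, dtype=float)
    per_metric = np.mean(weights[None, :] * losses, axis=1)  # one weighted expectation per metric
    return float(np.dot(np.asarray(betas, dtype=float), per_metric))


u = np.array([0.1, 0.9])               # hypothetical uncertainties for two samples
losses = np.array([[1.0, 1.0],         # metric 1 (e.g. MAE) per sample
                   [0.0, 2.0]])        # metric 2 (e.g. MSE) per sample
print(uwe(u, losses, betas=[0.5, 0.5], gamma=1.0))
```

With `gamma=0` every weight becomes 1 and the function reduces to a β-weighted sum of the plain metrics; larger `gamma` shifts the emphasis toward the most uncertain samples.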

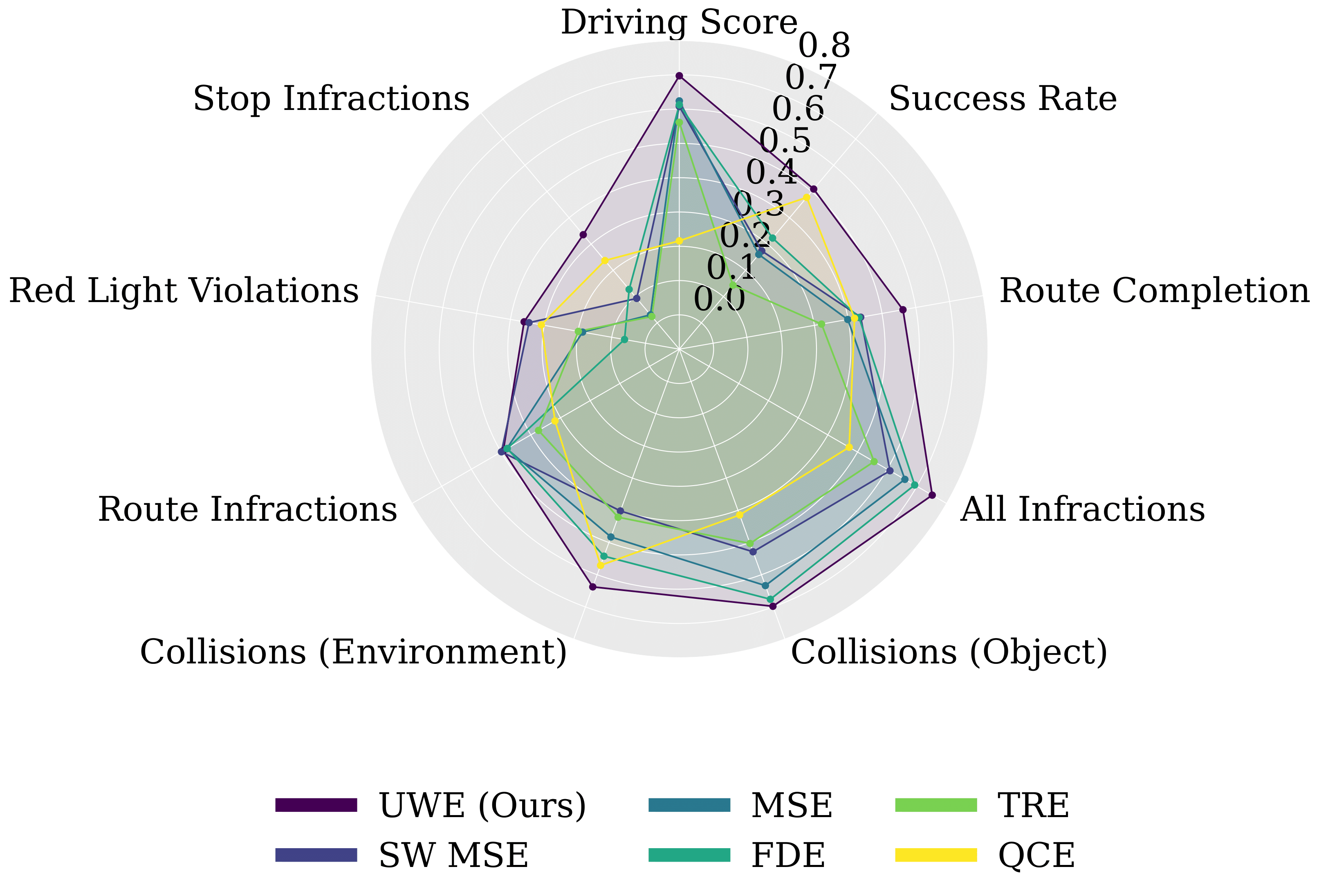

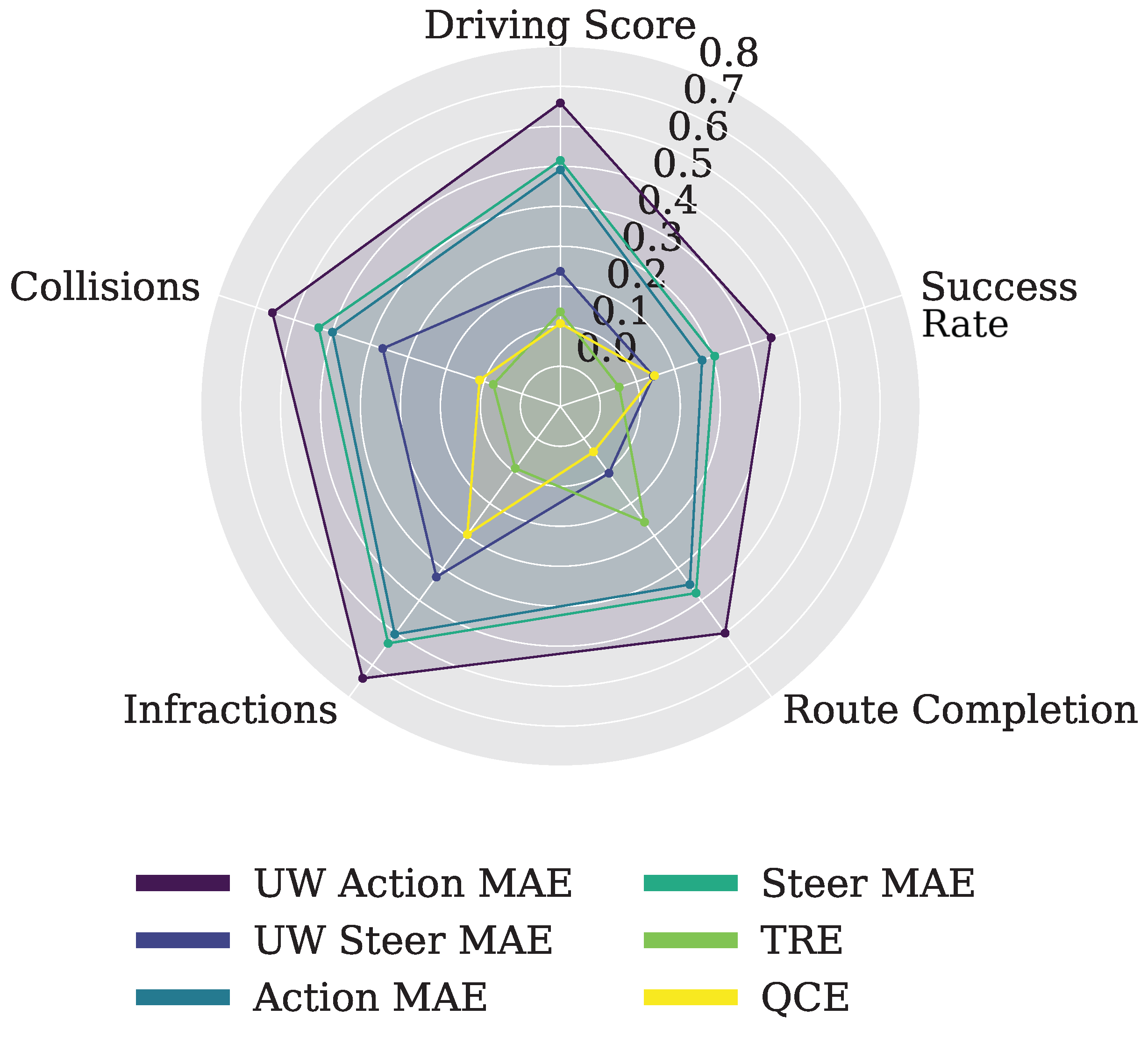

Figure 3: UWE achieves the highest correlation with online driving score and infractions in simulation, outperforming all baselines.

Experimental Validation

Simulation

- 82 models evaluated (varied backbones, inputs, epochs, dropout rates).

- UWE achieves a Pearson correlation of 0.69 with driving score, a 13% improvement over prior metrics.

- UWE outperforms NAVSIM's PDM score in reactive, complex scenarios, as PDM relies on non-reactive assumptions and privileged information.
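The correlations reported above are computed across evaluated models: each model contributes one offline score and one online driving score. A minimal sketch with hypothetical per-model values follows. Because lower error should mean a higher driving score, a useful error metric yields a strongly negative Pearson r; whether the paper reports r or |r| is an assumption here.

```python
import numpy as np


def metric_online_correlation(offline_scores, driving_scores):
    """Pearson r between one offline metric and online driving score across models."""
    return float(np.corrcoef(offline_scores, driving_scores)[0, 1])


# Hypothetical per-model values (one entry per evaluated model), not the paper's data.
offline_error = np.array([0.12, 0.30, 0.25, 0.40, 0.18])
driving_score = np.array([71.0, 42.0, 55.0, 30.0, 64.0])

r = metric_online_correlation(offline_error, driving_score)
print(f"r = {r:.2f}, |r| = {abs(r):.2f}")
```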

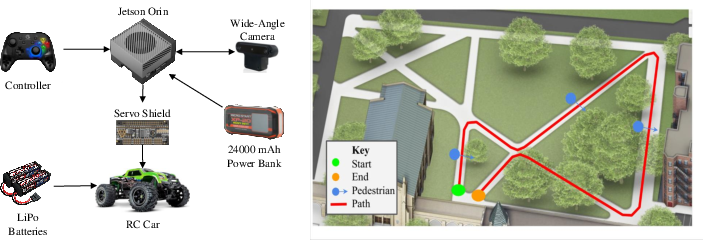

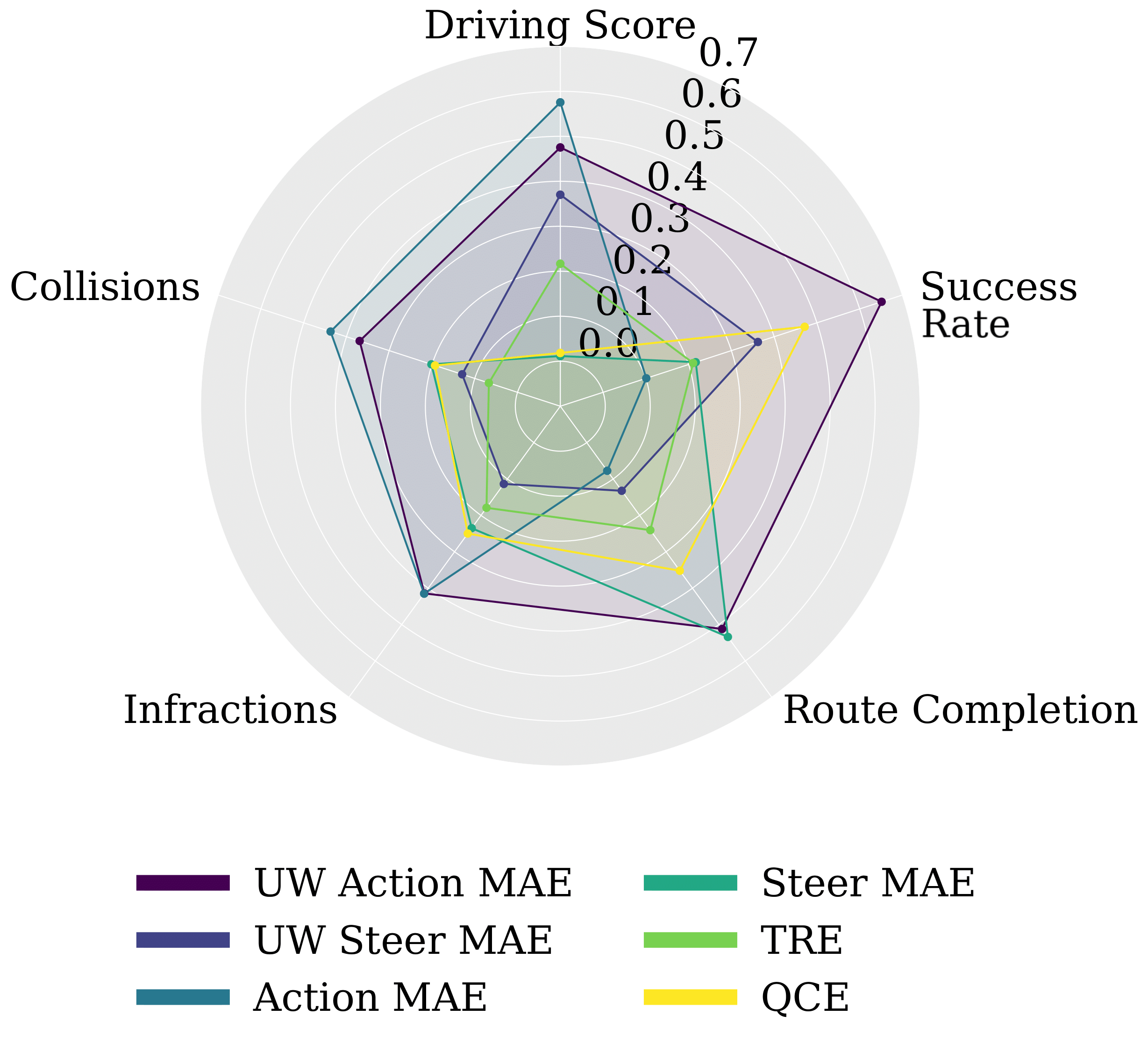

Real-World

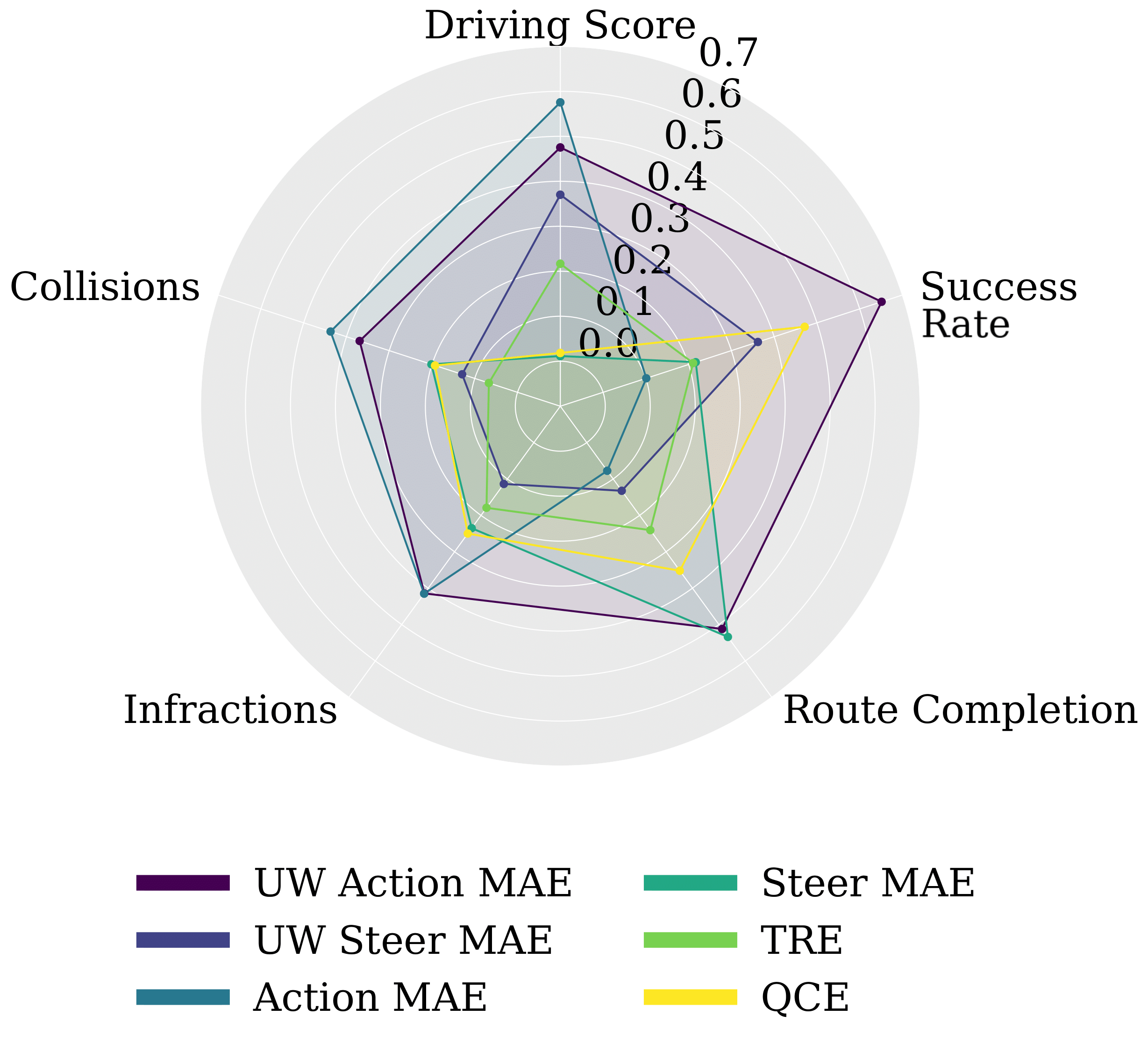

Figure 5: UWE shows consistent, superior correlation with online metrics in both targeted and naturalistic real-world driving scenarios.

Ablation and Trade-offs

- Ensemble-based uncertainty estimation further increases correlation (0.80), but MC Dropout is more computationally efficient for large models.

- UWE is robust to the choice of model for uncertainty estimation; correlation remains high when using fixed weights from different epochs.

- UWE does not require HD maps or privileged simulation design, unlike NAVSIM, making it broadly applicable.
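To illustrate ensemble-based uncertainty, the sketch below uses five cubic least-squares regressors fitted on bootstrap resamples of a toy dataset as a stand-in for independently trained driving networks (a swapped-in, simplified setting, not the paper's models). The uncertainty is the variance of member predictions, and it grows on inputs outside the training range; training and querying M separate members is what makes MC Dropout the cheaper option for large models.

```python
import numpy as np

data_rng = np.random.default_rng(0)
# Toy 1-D task: action = 2 * observation + noise, observed only for obs in [-1, 1].
x_train = data_rng.uniform(-1.0, 1.0, size=200)
y_train = 2.0 * x_train + data_rng.normal(scale=0.1, size=200)


def fit_member(seed, degree=3):
    # Cubic least-squares fit on a bootstrap resample; a cheap stand-in for an
    # independently trained network.
    member_rng = np.random.default_rng(seed)
    idx = member_rng.integers(0, len(x_train), size=len(x_train))
    return np.polyfit(x_train[idx], y_train[idx], degree)


# M = 5 members: training and inference cost scale linearly with M.
members = [fit_member(seed) for seed in range(5)]


def ensemble_uncertainty(x):
    preds = np.stack([np.polyval(coeffs, x) for coeffs in members])  # (M, N)
    return preds.mean(axis=0), preds.var(axis=0)


_, u_ens = ensemble_uncertainty(np.array([0.0, 5.0]))
print(u_ens)  # member disagreement is far larger at obs = 5.0, outside the training range
```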

Implementation Considerations

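- MC Dropout can be implemented by keeping dropout layers active during inference, running $K$ stochastic forward passes per input, and averaging the predictions while using their variance as the uncertainty $u_i$.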

- UWE can be computed for any offline metric; combining multiple metrics (e.g., MAE, MSE) further improves coverage of edge cases.

- For real-world deployment, lightweight architectures (e.g., 5-layer CNN, RegNetY variants) suffice for uncertainty estimation.

- UWE is scalable to large datasets and diverse policy architectures, requiring only model predictions and uncertainty estimates.
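Because UWE is an average over samples, it can be accumulated over mini-batches without holding the whole dataset in memory. The `StreamingUWE` class below is a hypothetical helper, assuming each batch provides per-sample uncertainties and per-metric losses; the usage lines check that batched accumulation matches a single pass over the full data.

```python
import numpy as np


class StreamingUWE:
    """Accumulate UWE over mini-batches (hypothetical helper, not from the paper).

    Each update takes per-sample uncertainties (B,) and per-metric losses (T, B);
    result() equals sum_t beta_t * mean_i[u_i ** gamma * L_t(i)] over all samples seen.
    """

    def __init__(self, betas, gamma=1.0):
        self.betas = np.asarray(betas, dtype=float)
        self.gamma = gamma
        self.weighted_sums = np.zeros_like(self.betas)  # running sum per metric
        self.count = 0                                  # number of samples seen

    def update(self, uncertainty, per_metric_losses):
        w = np.asarray(uncertainty, dtype=float) ** self.gamma
        losses = np.asarray(per_metric_losses, dtype=float)
        self.weighted_sums += (losses * w[None, :]).sum(axis=1)
        self.count += w.shape[0]

    def result(self):
        return float(np.dot(self.betas, self.weighted_sums / self.count))


rng = np.random.default_rng(1)
u_all, losses_all = rng.random(10), rng.random((2, 10))  # synthetic placeholder data
acc = StreamingUWE(betas=[0.3, 0.7], gamma=2.0)
for start in range(0, 10, 3):  # batches of 3, 3, 3, 1
    acc.update(u_all[start:start + 3], losses_all[:, start:start + 3])
full = float(np.dot([0.3, 0.7], np.mean(u_all ** 2.0 * losses_all, axis=1)))
print(acc.result(), full)  # equal up to floating-point rounding
```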

Implications and Future Directions

The findings have significant implications for AV policy evaluation:

- Offline metrics must account for epistemic uncertainty to reliably predict online performance, especially in safety-critical scenarios.

- UWE enables scalable evaluation that needs only logged observations and expert actions, without HD maps or privileged perception, facilitating rapid iteration and deployment of AV models.

- Future work should extend UWE validation to full-scale vehicles, more complex urban environments, and finer-grained online metrics (e.g., subtle infractions).

- Integration with off-policy evaluation (OPE) and offline reinforcement learning (RL) frameworks may further improve policy evaluation and training efficiency.

Conclusion

The paper provides a rigorous analysis of the limitations of traditional offline metrics for autonomous driving and introduces the uncertainty-weighted error metric as a scalable, effective alternative. UWE demonstrates superior correlation with online driving performance in both simulation and real-world settings, without requiring privileged information or complex simulation design. This work advances the state of the art in AV policy evaluation and lays the groundwork for safer, more reliable offline assessment methodologies.