- The paper presents a hybrid AC-BiFPN and Transformer framework that significantly improves multi-scale feature extraction and long-range dependency modeling for generating coherent TBI radiology reports.

- The model outperforms traditional CNN-based approaches on standard NLG metrics (BLEU, METEOR, ROUGE, CIDEr) and achieves strong clinical classification scores on the RSNA Intracranial Hemorrhage Detection dataset.

- The system offers rapid, contextually relevant report generation that supports emergency radiology decision-making and enhances educational feedback through an interactive chatbot interface.

AI-Driven Radiology Report Generation for Traumatic Brain Injuries: An Expert Analysis

Introduction

This paper presents a hybrid deep learning framework for automated radiology report generation in the context of traumatic brain injuries (TBI), integrating an Augmented Convolutional Bi-directional Feature Pyramid Network (AC-BiFPN) for multi-scale image feature extraction with a Transformer-based decoder for natural language report generation. The system is evaluated on the RSNA Intracranial Hemorrhage Detection dataset, demonstrating superior performance over conventional CNN-based approaches in both diagnostic accuracy and report coherence. The work addresses critical challenges in emergency radiology, including the need for rapid, accurate, and contextually relevant reporting, and provides a foundation for both clinical support and medical education.

Automated radiology report generation has evolved from early CNN-based image captioning models to sophisticated multimodal architectures that fuse visual and textual information. Prior work has established the efficacy of memory-augmented transformers, multimodal fusion, and attention mechanisms for improving report quality and clinical relevance. However, challenges persist in handling missing modalities, capturing long-range dependencies, and extracting multi-scale features critical for detecting subtle and complex anomalies in neuroimaging.

The AC-BiFPN architecture, originally developed for efficient multi-scale feature fusion, has demonstrated improved performance in medical image analysis tasks, particularly in scenarios requiring the detection of both fine-grained and global patterns. Transformers, with their self-attention mechanisms, have become the de facto standard for sequence modeling in NLP and are increasingly adopted for medical report generation due to their ability to model complex dependencies and integrate heterogeneous data sources.
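The weighted fusion step at the core of a BiFPN can be sketched on plain nested lists. This minimal sketch assumes the "fast normalized fusion" rule from the original BiFPN (EfficientDet), O = sum_i w_i * I_i / (eps + sum_j w_j) with every w_i kept non-negative. How the augmented-convolution variant changes this rule is not described here, so `upsample2x` and `fast_normalized_fusion` are hypothetical helpers, not the paper's code.

```python
from typing import List

# A single-channel feature map stored as rows of floats (a stand-in for a tensor).
Grid = List[List[float]]


def upsample2x(fmap: Grid) -> Grid:
    """Nearest-neighbour 2x upsampling so a coarser level matches a finer one."""
    out: Grid = []
    for row in fmap:
        wide = [v for v in row for _ in range(2)]  # repeat each column twice
        out.append(wide)
        out.append(list(wide))  # repeat each row twice
    return out


def fast_normalized_fusion(inputs: List[Grid], weights: List[float], eps: float = 1e-4) -> Grid:
    """Fuse same-sized maps as sum_i w_i * I_i / (eps + sum_j w_j), clipping w_i at zero."""
    if len(inputs) != len(weights):
        raise ValueError("expected one weight per input feature map")
    w = [max(0.0, x) for x in weights]  # ReLU keeps every fusion weight non-negative
    norm = eps + sum(w)
    rows, cols = len(inputs[0]), len(inputs[0][0])
    return [
        [sum(wi * fmap[r][c] for wi, fmap in zip(w, inputs)) / norm for c in range(cols)]
        for r in range(rows)
    ]


# Example: fuse a fine 2x2 level with an upsampled coarse 1x1 level (top-down path).
fine = [[1.0, 2.0], [3.0, 4.0]]
coarse = [[10.0]]
fused = fast_normalized_fusion([fine, upsample2x(coarse)], weights=[1.0, 1.0])
```

In a full BiFPN the inputs are multi-channel tensors and each fusion node is followed by a convolution; the learnable weights let the network decide how much each resolution contributes at every node.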

Model Architecture

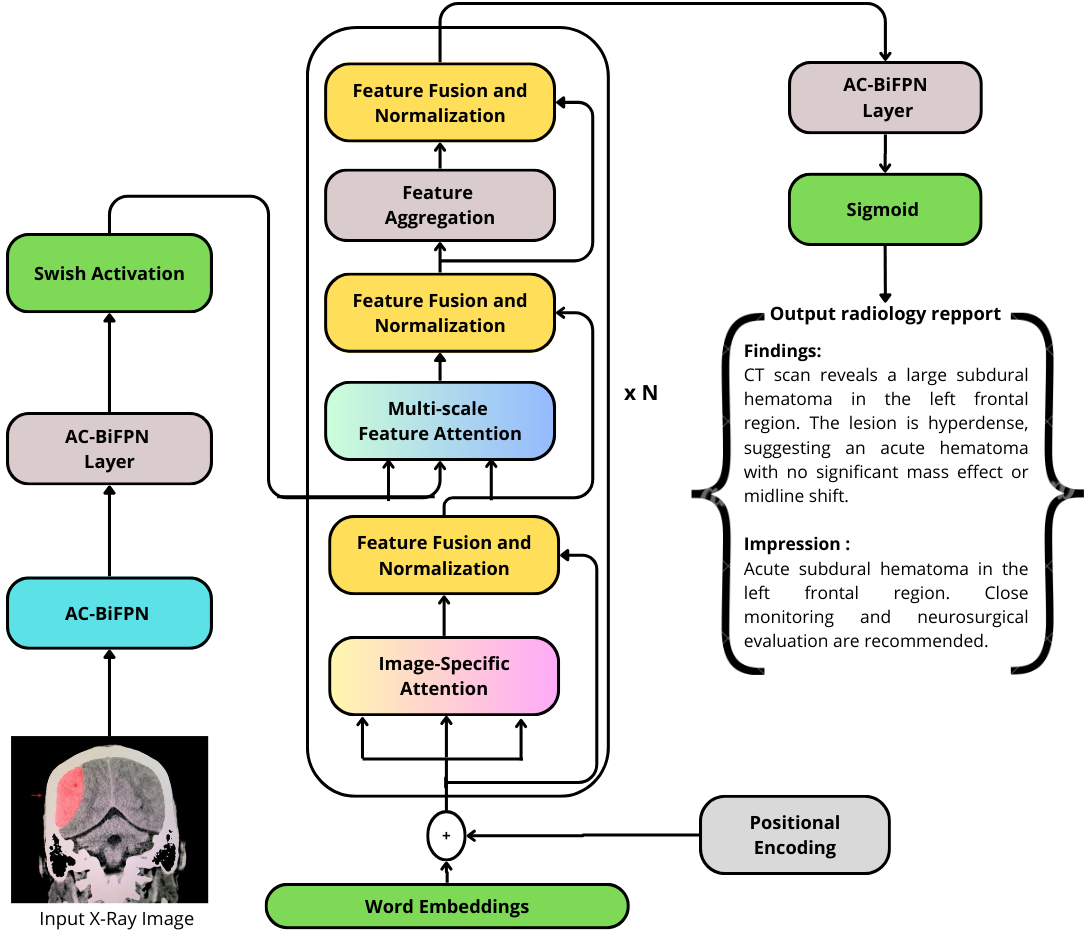

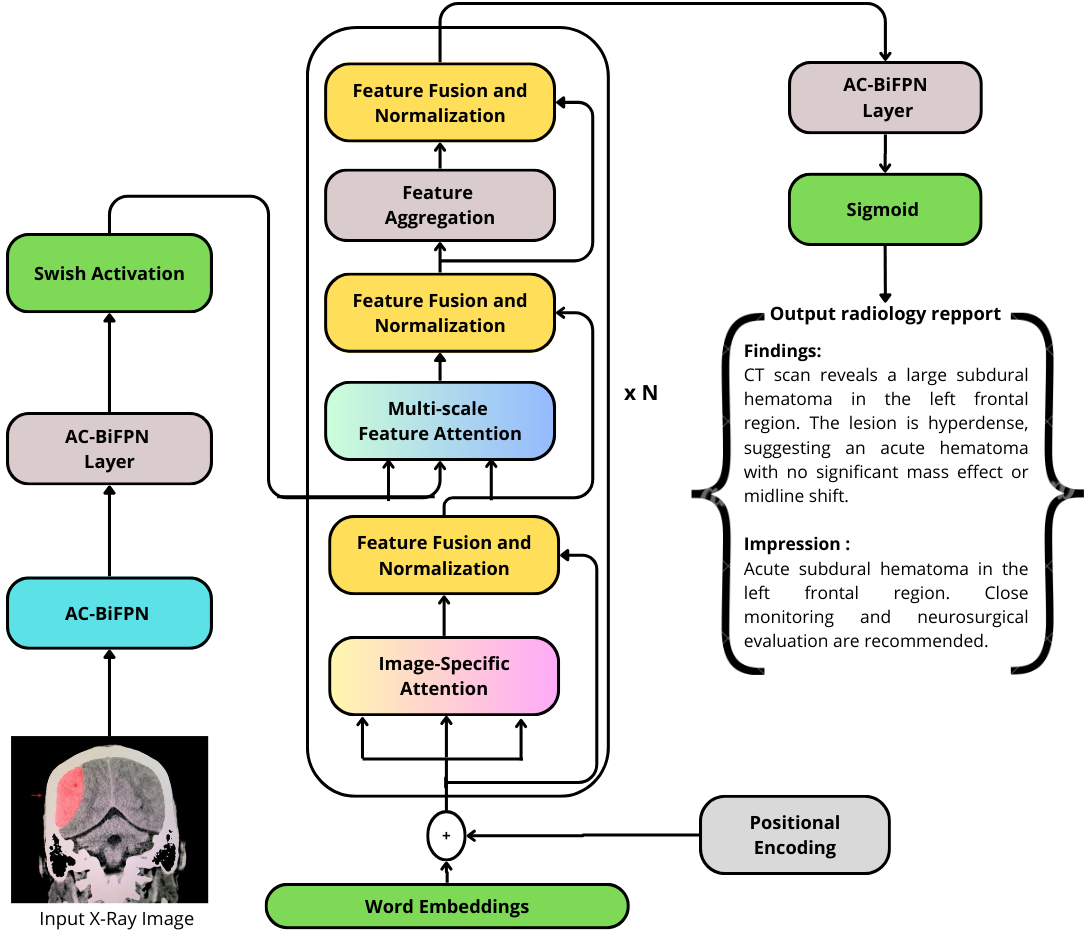

The proposed system consists of two primary components: an AC-BiFPN-based image encoder and a Transformer-based report generator. The AC-BiFPN processes CT and MRI images at multiple resolutions, aggregating features across scales so that both local and global image characteristics are represented. The fused features are then passed to the Transformer decoder, which generates the radiology report token by token, using multi-head attention to integrate the visual features with the linguistic context of the tokens generated so far.
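How the decoder draws on the fused image features can be illustrated with scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, where queries come from report tokens and keys and values come from image-region features. This sketch assumes a single head with no learned projection matrices; a multi-head layer runs several such heads over learned projections and concatenates their outputs. The function name `cross_attention` is hypothetical.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: Matrix, keys: Matrix, values: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head softmax(Q K^T / sqrt(d_k)) V with no learned projections."""
    d_k = len(keys[0])
    outputs: Matrix = []
    weights: Matrix = []
    for q in queries:  # one query per report token
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        attn = softmax(scores)  # how strongly this token attends to each image region
        weights.append(attn)
        outputs.append([sum(a * v[j] for a, v in zip(attn, values)) for j in range(len(values[0]))])
    return outputs, weights


# Example: three image-region features; the query resembles region 0.
regions = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
out, attn = cross_attention([[4.0, 0.0]], keys=regions, values=regions)
```

The returned weights are what attention-map visualizations display: each row sums to 1 and shows how much one token draws on each image region.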

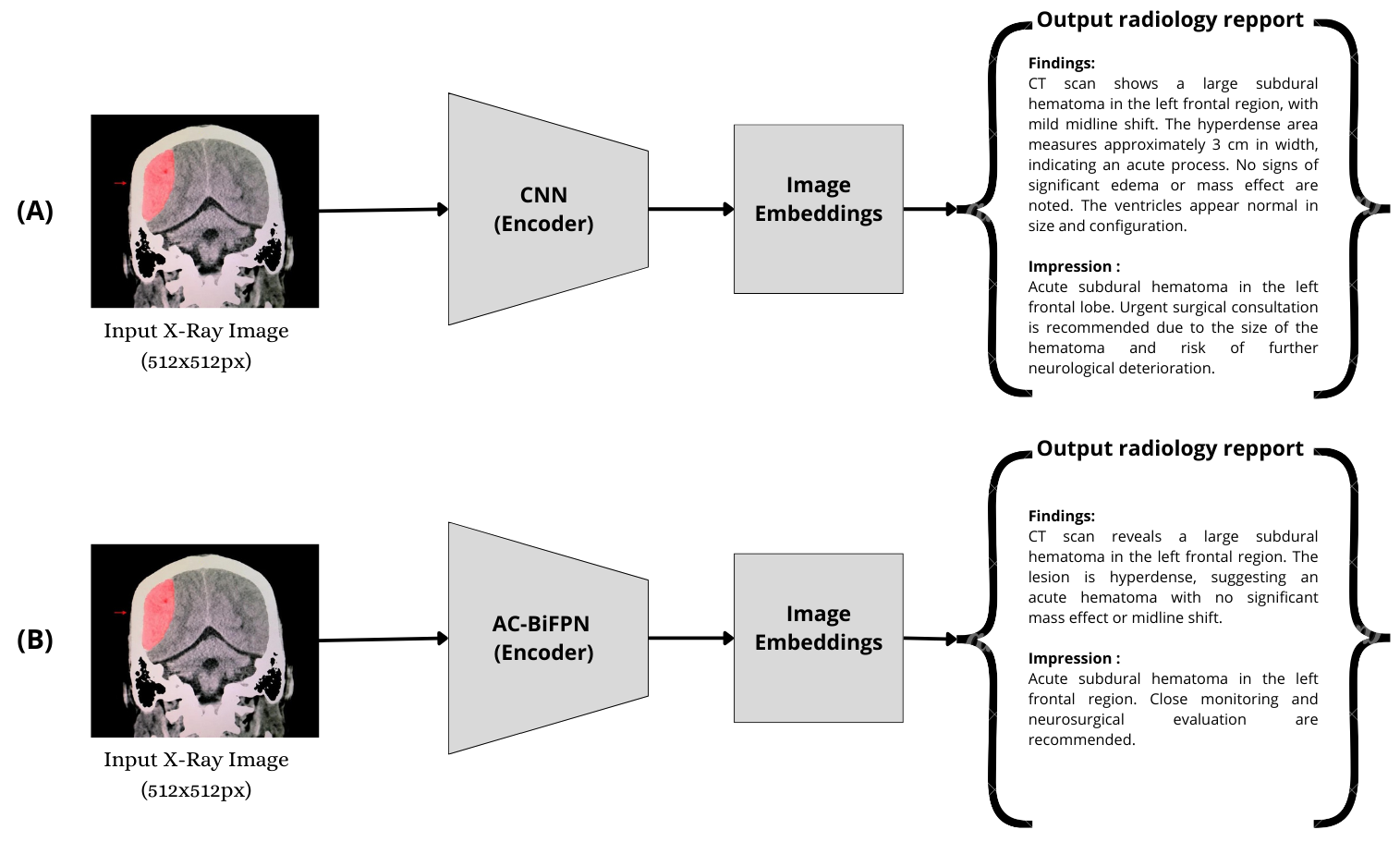

Figure 1: The architecture of the proposed AC-BiFPN + Transformer model for intracranial hemorrhage radiology report generation.

The architecture is designed to address the limitations of traditional CNN+LSTM pipelines by enabling more effective feature fusion and long-range dependency modeling. Because attention by itself is insensitive to token order, the Transformer adds positional encodings to the token embeddings so that the sequential structure of the report is preserved, while the multi-head attention mechanism allows the model to focus on clinically salient regions of the image during report generation.
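The source does not state which positional encoding the decoder uses. The sketch below assumes the fixed sinusoidal scheme from the original Transformer, PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)); learned position embeddings are a common alternative. Both function names are hypothetical.

```python
import math
from typing import List


def sinusoidal_positional_encoding(seq_len: int, d_model: int) -> List[List[float]]:
    """PE[pos][2i] = sin(pos / 10000**(2i/d_model)); PE[pos][2i+1] = cos of the same angle."""
    pe = [[0.0] * d_model for _ in range(seq_len)]
    for pos in range(seq_len):
        for i in range(0, d_model, 2):  # i plays the role of 2i in the formula
            angle = pos / (10000 ** (i / d_model))
            pe[pos][i] = math.sin(angle)
            if i + 1 < d_model:  # guard for odd d_model
                pe[pos][i + 1] = math.cos(angle)
    return pe


def add_positions(embeddings: List[List[float]], pe: List[List[float]]) -> List[List[float]]:
    """Element-wise sum of each token embedding with the encoding for its position."""
    return [[e + p for e, p in zip(tok, pos)] for tok, pos in zip(embeddings, pe)]


# Example: the same word embedding at positions 0 and 1 becomes two distinct vectors.
pe = sinusoidal_positional_encoding(seq_len=4, d_model=6)
same_word = [0.5] * 6
encoded = add_positions([same_word, same_word], pe)
```

Adding these vectors gives identical words at different positions distinct representations, which is what lets order-insensitive attention recover word order.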

Comparative Analysis and Ablation

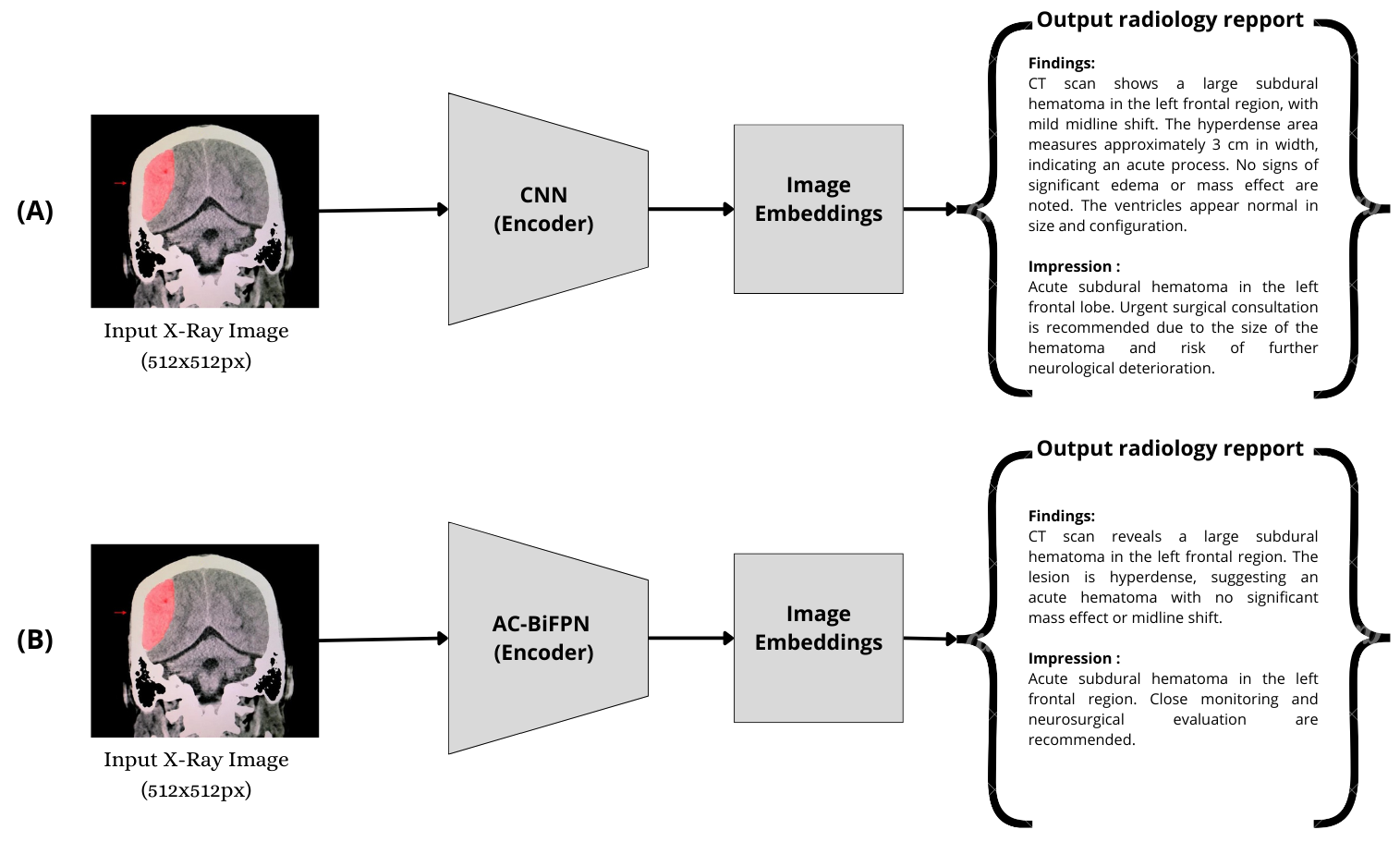

A direct comparison between the CNN+Transformer and AC-BiFPN+Transformer pipelines highlights the impact of multi-scale feature extraction on report quality and diagnostic accuracy.

Figure 2: Comparative analysis of CNN+Transformer (A) and AC-BiFPN+Transformer (B) for automated radiology report generation.

Empirical results indicate that the AC-BiFPN+Transformer model consistently outperforms CNN-based baselines across standard NLG metrics (BLEU, METEOR, ROUGE, CIDEr) and clinical classification metrics (precision, recall, F1-score). The improvement is most pronounced in cases involving subtle or complex hemorrhagic patterns, where multi-scale feature fusion is critical.
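For the clinical classification metrics, one common setup compares per-image multi-hot vectors over the five hemorrhage subtypes. The sketch below assumes that setup and reports per-subtype and micro-averaged precision, recall, and F1. The source does not say which averaging the paper used or how labels are derived from generated reports, so `multilabel_scores` is a hypothetical helper.

```python
from typing import Dict, List, Tuple

SUBTYPES = ["epidural", "subdural", "subarachnoid", "intraparenchymal", "intraventricular"]


def prf(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall and F1, defined as 0.0 when a denominator is zero."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def multilabel_scores(y_true: List[List[int]], y_pred: List[List[int]]) -> Dict[str, Tuple[float, float, float]]:
    """Per-subtype scores plus micro-averaged scores pooled over all subtypes."""
    scores: Dict[str, Tuple[float, float, float]] = {}
    tp_all = fp_all = fn_all = 0
    for j, name in enumerate(SUBTYPES):
        pairs = [(t[j], p[j]) for t, p in zip(y_true, y_pred)]
        tp = sum(1 for t, p in pairs if t and p)
        fp = sum(1 for t, p in pairs if not t and p)
        fn = sum(1 for t, p in pairs if t and not p)
        scores[name] = prf(tp, fp, fn)
        tp_all, fp_all, fn_all = tp_all + tp, fp_all + fp, fn_all + fn
    scores["micro"] = prf(tp_all, fp_all, fn_all)
    return scores


# Example: three studies; one missed intraventricular bleed, one spurious subarachnoid call.
y_true = [[1, 0, 0, 0, 0], [0, 1, 0, 0, 1], [0, 0, 0, 0, 0]]
y_pred = [[1, 0, 0, 0, 0], [0, 1, 1, 0, 0], [0, 0, 0, 0, 0]]
scores = multilabel_scores(y_true, y_pred)
```

Micro-averaging pools counts across subtypes, so common subtypes dominate; macro-averaging (the mean of per-subtype scores) weights rare subtypes such as epidural hemorrhage equally.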

Dataset and Experimental Setup

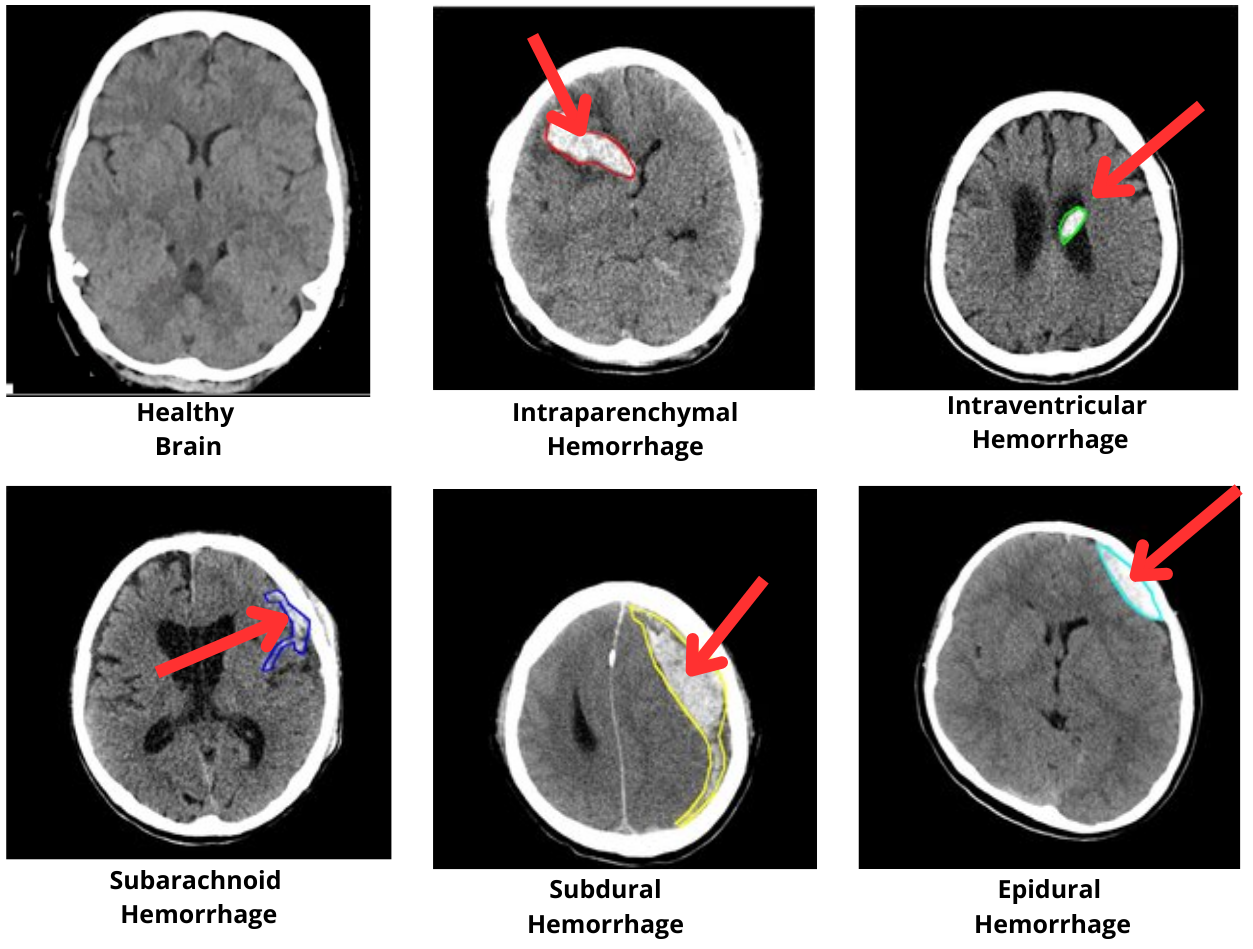

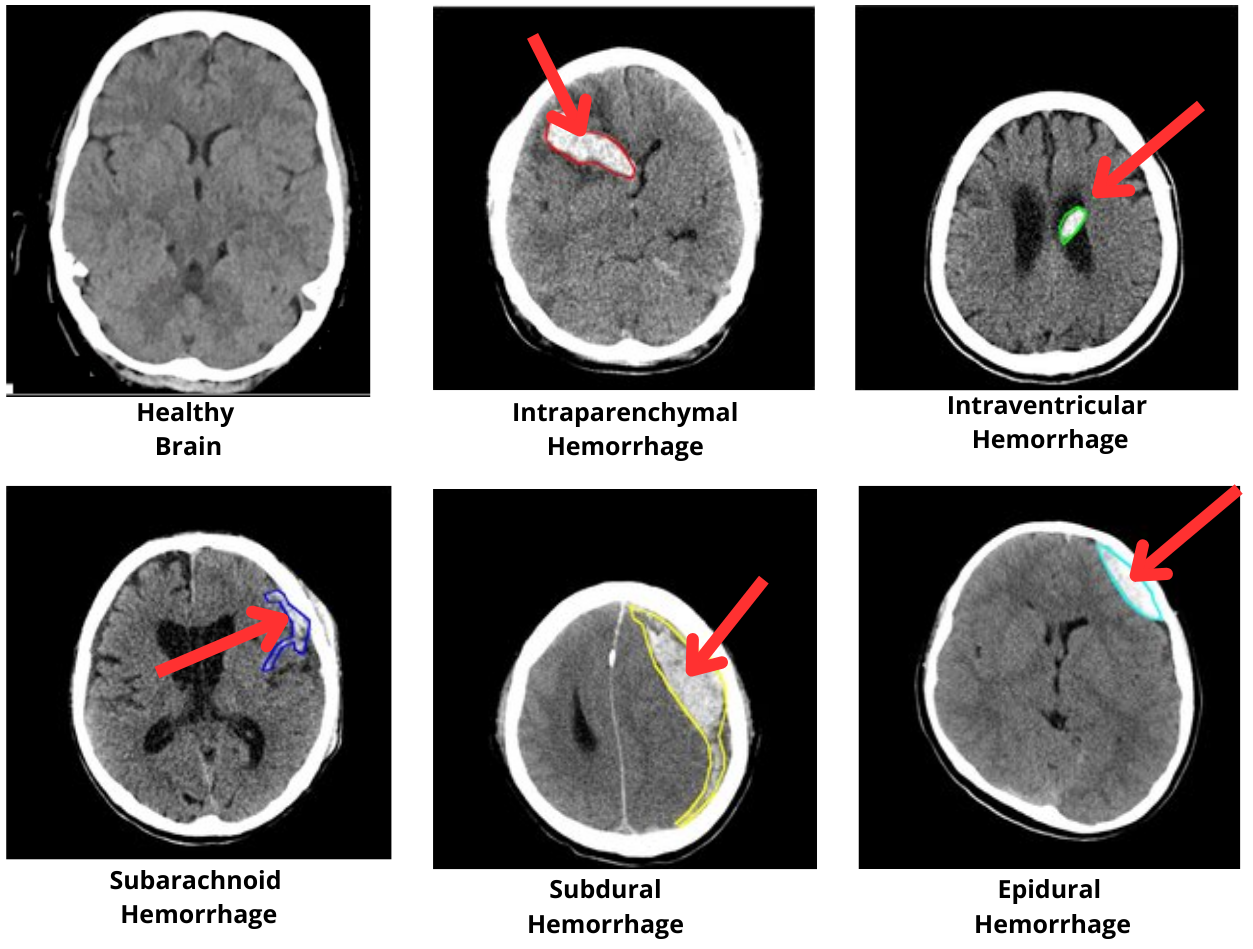

The RSNA Intracranial Hemorrhage Detection dataset, comprising over 670,000 annotated brain CT images, serves as the primary benchmark. The dataset includes five major hemorrhage types: epidural, subdural, subarachnoid, intraparenchymal, and intraventricular, providing a comprehensive testbed for evaluating the model's ability to detect and describe diverse TBI presentations.
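Turning the RSNA annotations into multi-label targets is a typical first preprocessing step. This sketch assumes the competition's long-format label file, in which each row pairs an ID of the form `ID_<image>_<subtype>` with a 0/1 `Label`, plus an aggregate `any` row per image; check this against the actual CSV before relying on it. `load_multi_hot` is a hypothetical name, and the paper's own data loader is not described.

```python
import csv
import io
from typing import Dict, List

SUBTYPES = ["epidural", "subdural", "subarachnoid", "intraparenchymal", "intraventricular"]


def load_multi_hot(csv_text: str) -> Dict[str, List[int]]:
    """Collapse long-format (ID_<image>_<subtype>, Label) rows into one 5-dim vector per image."""
    targets: Dict[str, List[int]] = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        image_id, subtype = row["ID"].rsplit("_", 1)  # split off the trailing subtype name
        if subtype not in SUBTYPES:  # skip the aggregate "any" label
            continue
        vector = targets.setdefault(image_id, [0] * len(SUBTYPES))
        vector[SUBTYPES.index(subtype)] = int(row["Label"])
    return targets


# Example with made-up IDs; a real run would read the competition CSV from disk instead.
sample = (
    "ID,Label\n"
    "ID_abc123_epidural,0\n"
    "ID_abc123_subdural,1\n"
    "ID_abc123_any,1\n"
    "ID_def456_intraventricular,1\n"
)
targets = load_multi_hot(sample)
```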

Figure 3: Examples of brain CT images showing different types of intracranial hemorrhages: epidural, subdural, subarachnoid, intraparenchymal, and intraventricular hemorrhages from the RSNA Intracranial Hemorrhage Detection Dataset.

The model is implemented in PyTorch, with hyperparameters optimized via grid search. Training leverages the Adam optimizer, cross-entropy loss, and a ReduceLROnPlateau scheduler. Beam search is employed during inference to enhance report fluency and clinical relevance.
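Beam search keeps the `beam_width` highest-scoring partial reports at each step instead of committing to the single most likely next token. The sketch below is a generic version over summed log-probabilities. It assumes a hypothetical `next_log_probs` callback in place of the trained decoder and uses a toy probability table; it applies no length normalization, which practical decoders often add.

```python
import math
from typing import Callable, Dict, List, Tuple

# Hypothetical decoder interface: given the tokens so far, return log-probs of the next token.
NextLogProbs = Callable[[List[str]], Dict[str, float]]


def beam_search(next_log_probs: NextLogProbs, beam_width: int = 3,
                max_len: int = 20, eos: str = "<eos>") -> List[str]:
    """Keep the beam_width best partial sequences by summed log-probability."""
    beams: List[Tuple[float, List[str]]] = [(0.0, [])]
    finished: List[Tuple[float, List[str]]] = []
    for _ in range(max_len):
        candidates = [
            (score + lp, seq + [tok])
            for score, seq in beams
            for tok, lp in next_log_probs(seq).items()
        ]
        candidates.sort(key=lambda c: c[0], reverse=True)
        beams = []
        for score, seq in candidates[:beam_width]:
            if seq[-1] == eos:
                finished.append((score, seq))  # completed report leaves the beam
            else:
                beams.append((score, seq))  # still open, extend on the next step
        if not beams:
            break
    finished.extend(beams)  # fall back to unfinished beams if max_len was reached
    return max(finished, key=lambda c: c[0])[1]


def toy_model(seq: List[str]) -> Dict[str, float]:
    """Toy next-token table standing in for a trained decoder."""
    table = {
        (): {"no": 0.6, "acute": 0.4},
        ("no",): {"bleed": 0.5, "mass": 0.5},
        ("acute",): {"bleed": 0.95, "mass": 0.05},
    }
    probs = table.get(tuple(seq), {"<eos>": 1.0})
    return {tok: math.log(p) for tok, p in probs.items()}


greedy = beam_search(toy_model, beam_width=1)
beam = beam_search(toy_model, beam_width=2)
```

With `beam_width=1` the search reduces to greedy decoding: it commits to "no" (probability 0.6) and ends at a total probability of 0.30, whereas a width of 2 keeps "acute" alive and finds "acute bleed" at 0.4 × 0.95 = 0.38.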

Results

The AC-BiFPN+Transformer model achieves a BLEU-1 score of 38.2, METEOR of 17.0, ROUGE of 31.0, and CIDEr of 45.8, outperforming all CNN-based baselines. Notably, increasing the number of hidden units in the model further improves performance, with the best results observed at 1024 units. The model demonstrates robust generalization, maintaining high precision and recall in multi-label clinical classification tasks.
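As a reference point for the BLEU-1 figure: BLEU-1 is the clipped unigram precision of a generated report against a reference, scaled by a brevity penalty BP = 1 if c > r else exp(1 - r/c), where c and r are the candidate and reference lengths. This is a minimal sentence-level, single-reference sketch. Reported scores such as 38.2 are typically corpus-level values multiplied by 100 and computed with a standard evaluation toolkit, which the source does not name.

```python
import math
from collections import Counter
from typing import List


def bleu1(candidate: List[str], reference: List[str]) -> float:
    """Sentence-level BLEU-1 against a single reference (no smoothing)."""
    if not candidate:
        return 0.0
    ref_counts = Counter(reference)
    # Clip each candidate word's count by how often it appears in the reference.
    clipped = sum(min(n, ref_counts[tok]) for tok, n in Counter(candidate).items())
    precision = clipped / len(candidate)
    c, r = len(candidate), len(reference)
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * precision


reference = "no acute intracranial hemorrhage".split()
score = bleu1("no no hemorrhage".split(), reference)
```

Clipping stops a report from scoring well by repeating a common word ("no" counts once, not twice), and the brevity penalty stops it from scoring well by being short: here precision is 2/3 and the penalty is exp(-1/3), giving roughly 0.48.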

The ablation studies confirm that both multi-scale feature extraction and attention-based decoding are essential for optimal performance. The Transformer decoder, in particular, provides significant gains over LSTM-based alternatives, especially in generating coherent and contextually accurate reports for complex TBI cases.

Implementation Considerations

- Computational Requirements: Training the AC-BiFPN+Transformer model requires substantial GPU resources (e.g., RTX 3070 or higher) and at least 32GB RAM for efficient data loading and model execution.

- Scalability: The architecture is modular, allowing for extension to other imaging modalities (e.g., MRI) and pathologies with minimal modification.

- Deployment: For clinical integration, inference latency is acceptable for emergency settings, and the model can be deployed as a cloud-based or on-premise service. Integration with PACS/RIS systems is feasible via standard DICOM and HL7 interfaces.

- Limitations: The absence of longitudinal data restricts the model's ability to assess temporal progression, and de-identification in the dataset may obscure clinically relevant context. Overfitting is a risk with increased model complexity, necessitating regularization and larger, more diverse training datasets.

Clinical and Educational Implications

The system provides real-time, automated report generation, supporting radiologists in high-pressure environments and offering immediate feedback to trainees. The interactive chatbot interface enhances educational value by explaining diagnostic reasoning and contextualizing findings. The model's robustness to missing modalities and its ability to generate clinically relevant reports position it as a valuable tool for both clinical decision support and medical education.

Future Directions

- Longitudinal Modeling: Incorporating temporal data (e.g., serial imaging) would enable assessment of disease progression and response to therapy.

- Multimodal Fusion: Integration of clinical metadata, laboratory results, and prior reports could further enhance report accuracy and contextual relevance.

- Explainability: Developing interpretable attention maps and saliency visualizations would facilitate clinical trust and regulatory acceptance.

- Generalization: Expanding to other pathologies and imaging modalities, and validating in diverse clinical environments, will be critical for widespread adoption.

Conclusion

The integration of AC-BiFPN for multi-scale feature extraction with a Transformer-based decoder establishes a new benchmark for automated radiology report generation in traumatic brain injury. The model demonstrates superior performance over traditional CNN-based approaches, particularly in complex cases requiring nuanced image interpretation and coherent report generation. While challenges remain—most notably the incorporation of longitudinal data and further improvements in model interpretability—the proposed framework represents a significant advance in AI-driven clinical decision support and medical education for neurotrauma.