- The paper introduces a novel tactile sensor system using bio-inspired whiskers, enabling vision-independent flight in environments where conventional sensors fail.

- It employs advanced signal processing with the TDORC algorithm and an MLP+Kalman filter fusion model, achieving sub-6 mm depth estimation errors and 0% false positives in free-flight.

- The system supports autonomous wall-following and active exploration on a lightweight 44.1 g drone, operating in real time on resource-constrained microcontrollers.

Whisker-Based Tactile Flight for Tiny Drones: A Technical Analysis

Introduction

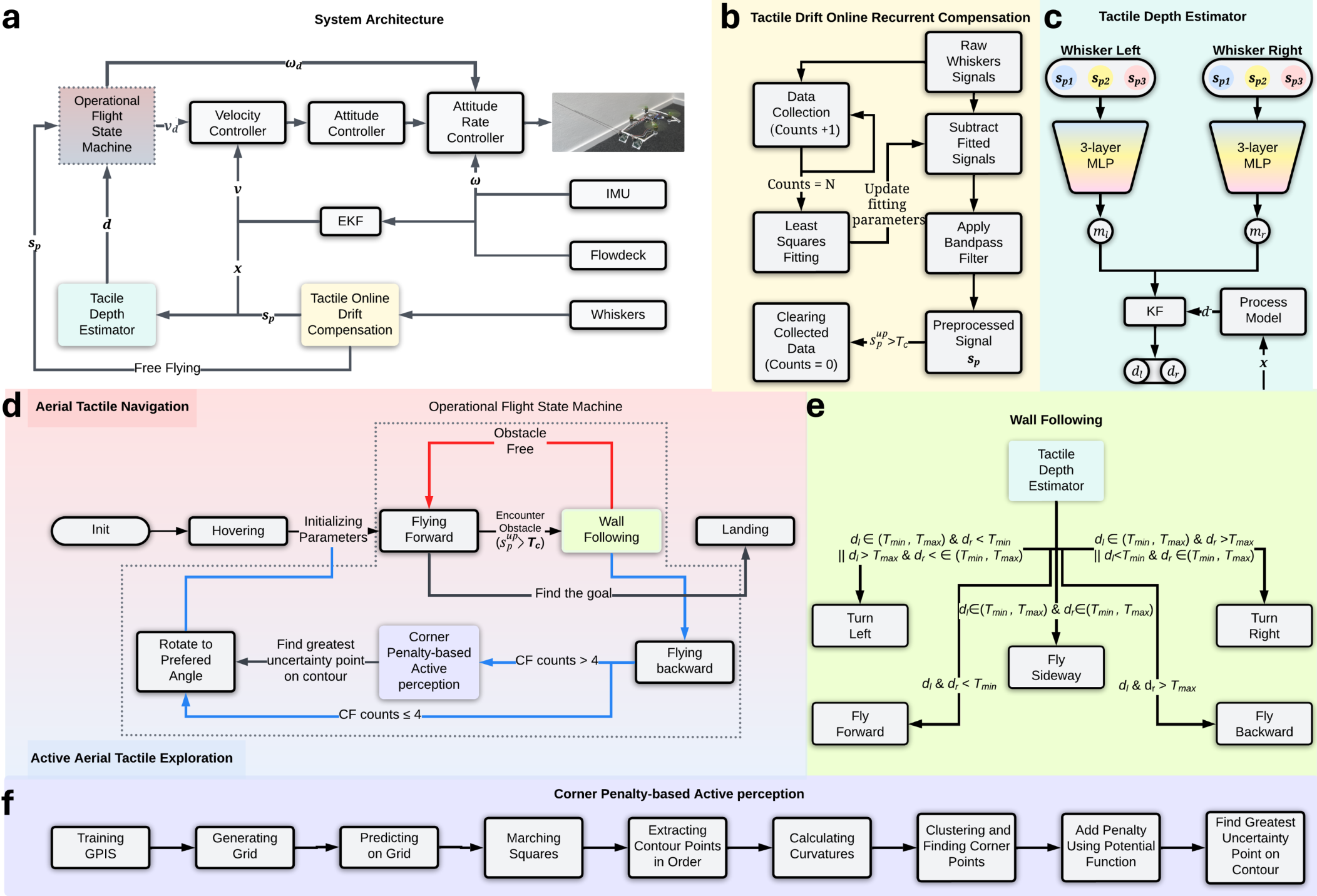

This paper presents a comprehensive framework for tactile perception and navigation in micro aerial vehicles (MAVs) using bio-inspired whisker sensors. The system enables vision-independent flight in environments where conventional sensors fail, such as darkness, smoke, or in the presence of transparent/reflective obstacles. The approach integrates lightweight hardware, advanced signal processing, and robust control algorithms, all running fully onboard resource-constrained platforms. The following analysis details the system architecture, sensor design, signal processing pipeline, tactile depth estimation, navigation strategies, and experimental validation.

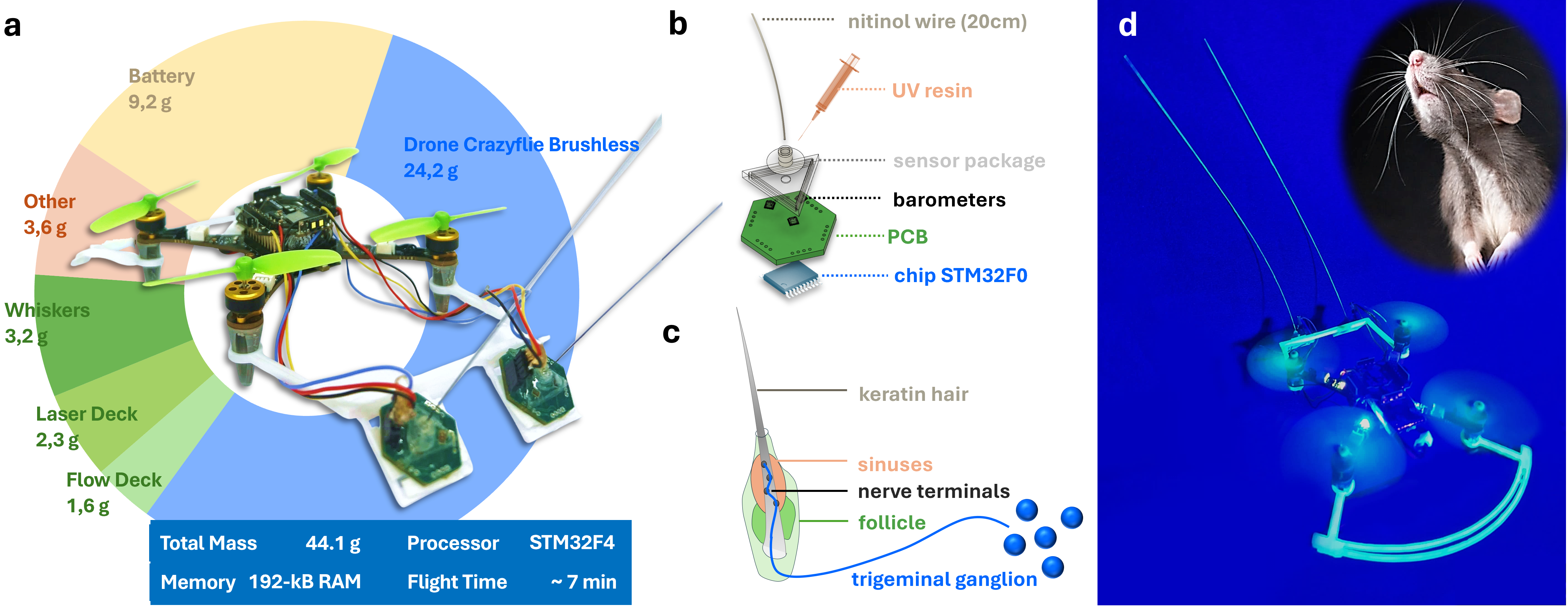

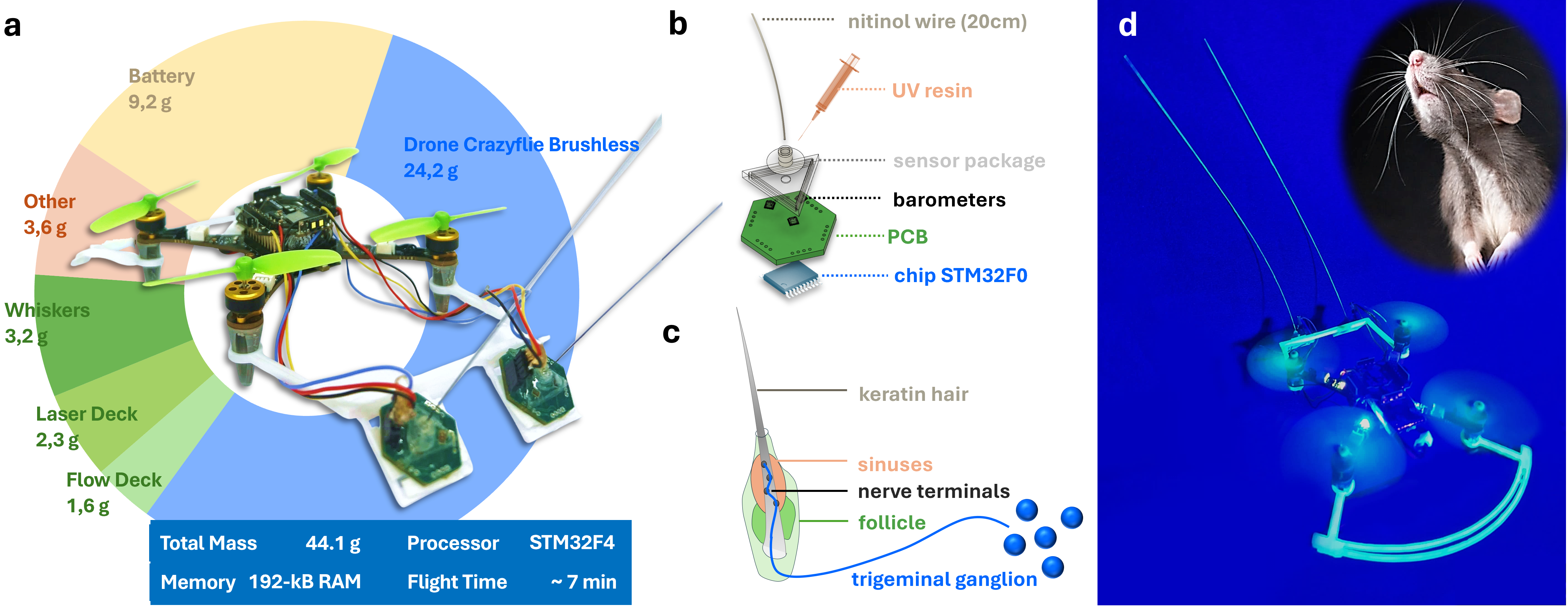

The platform is based on a 44.1 g Crazyflie Brushless drone equipped with two artificial whiskers. Each whisker comprises a 0.4 mm diameter, 200 mm Nitinol wire, a 3D-printed follicle-sinus complex (FSC) filled with UV resin, and three MEMS barometers (BMP390) for pressure sensing. The sensor design is directly inspired by rodent vibrissae, enabling high-sensitivity detection of contact forces with minimal destabilization.

Figure 1: Design and biological inspiration of the whiskered drone, showing the hardware platform, sensor structure, and biological analog.

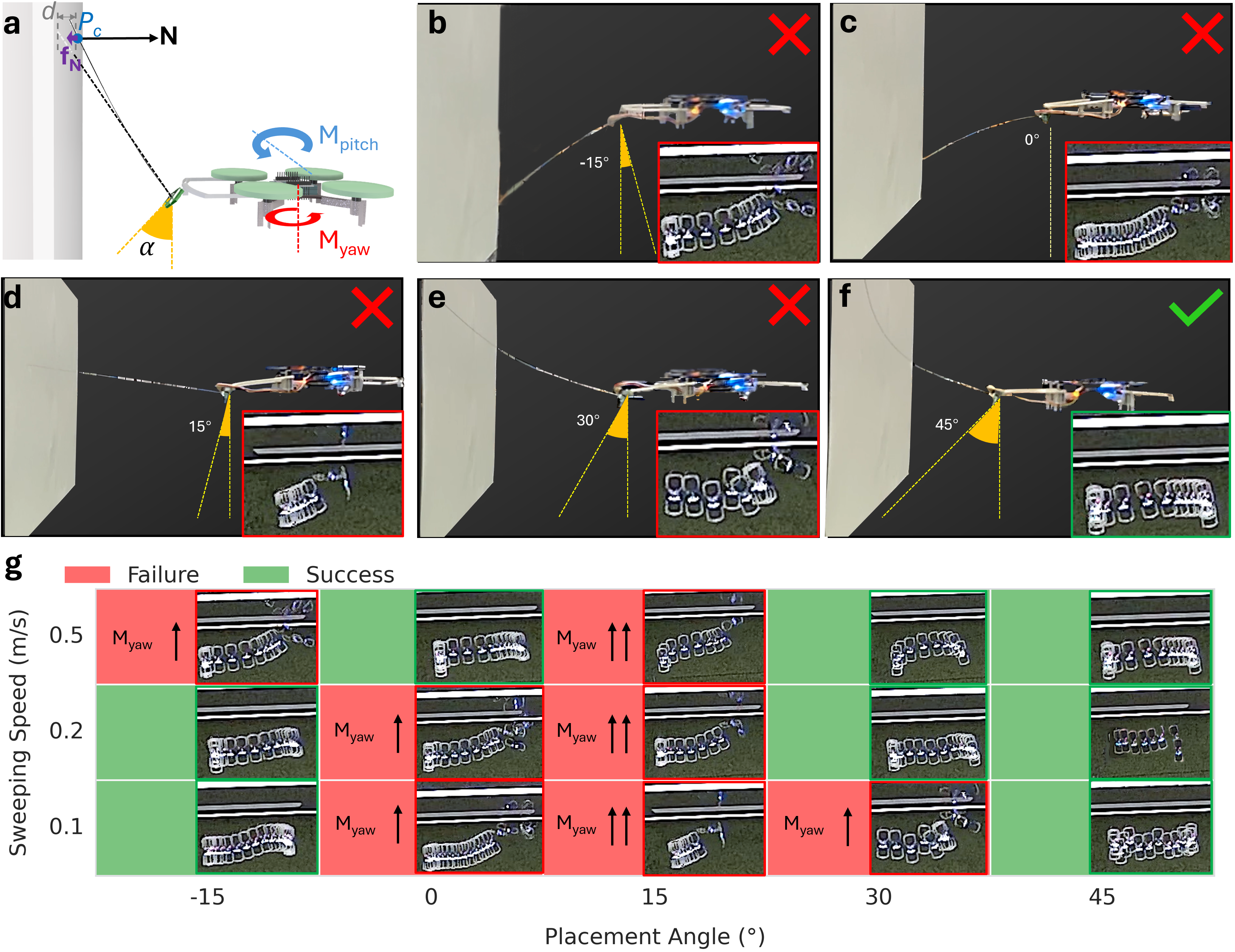

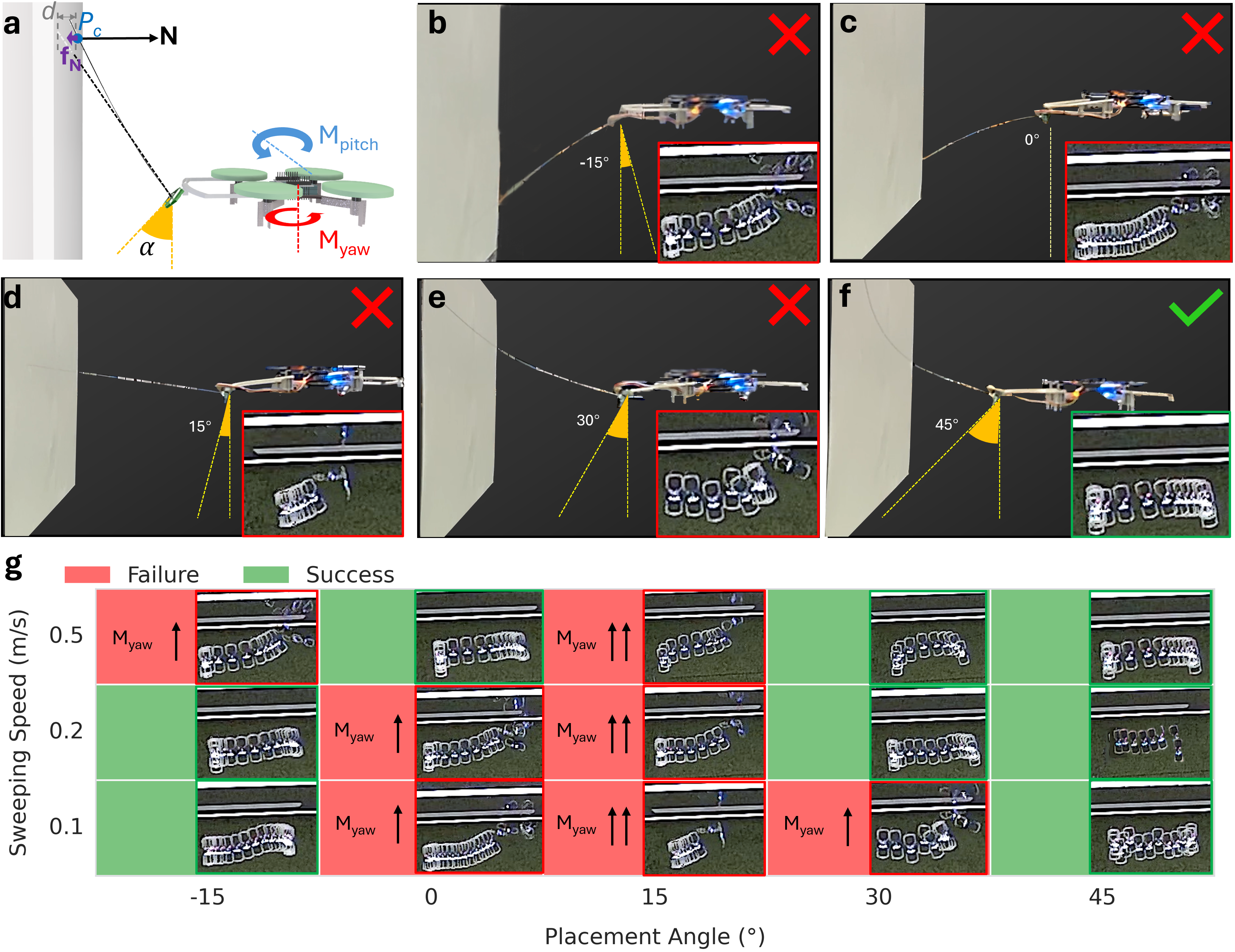

Whisker placement is critical for flight stability. Theoretical and experimental analyses using Pseudo Rigid-Body Modeling (PRBM) demonstrate that a 45° placement relative to the yaw plane minimizes destabilizing yaw moments and normal contact forces, ensuring robust wall-sweeping interactions across a range of velocities.

Figure 2: Effect of whisker placement angle on drone stability, with 45° placement yielding optimal performance.

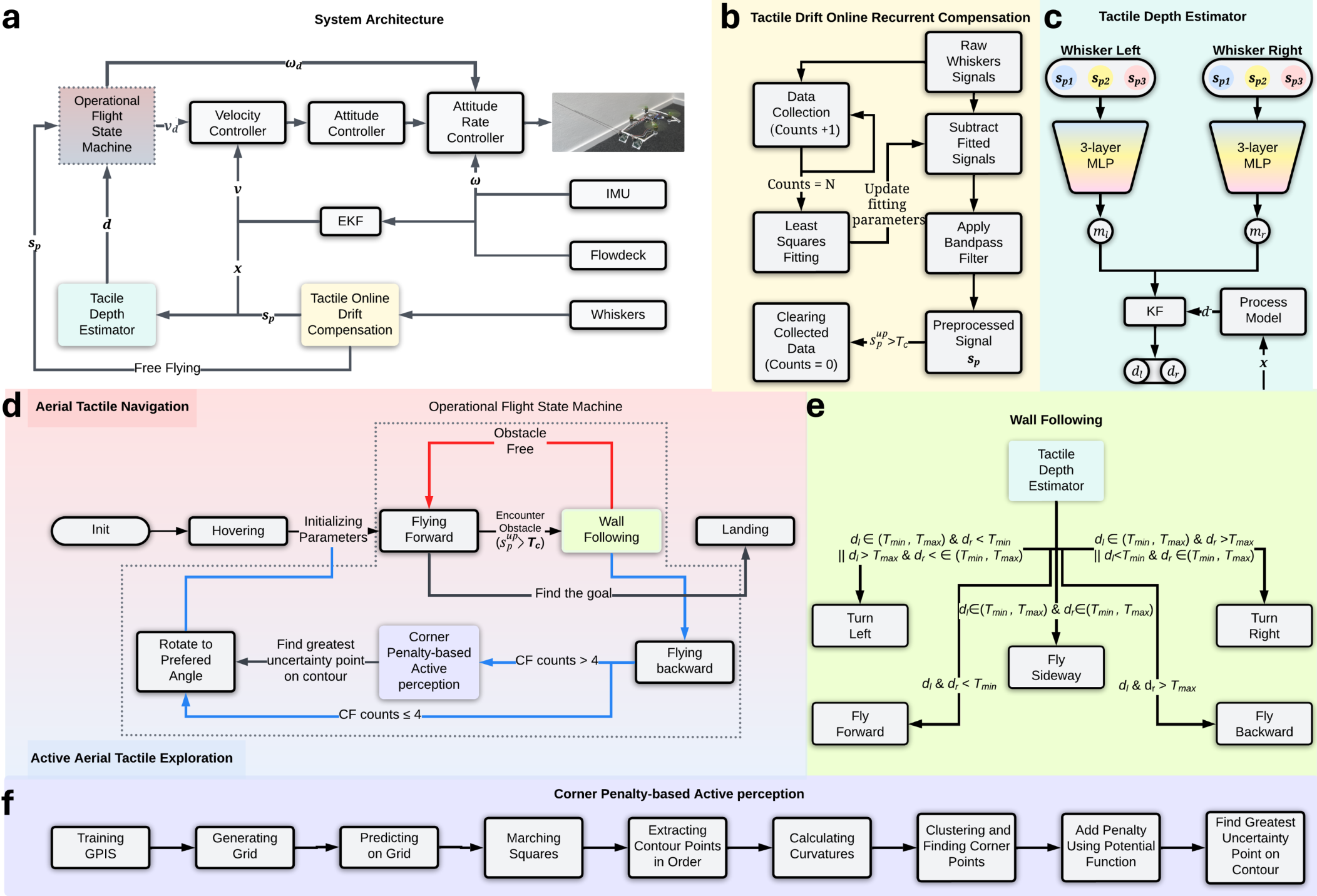

Signal Processing: Tactile Drift Compensation

Barometer-based whisker sensors are susceptible to drift from temperature fluctuations, mechanical hysteresis, and vibration-induced ringing. The Tactile Drift Online Recurrent Compensation (TDORC) algorithm is introduced to address these issues. TDORC employs a sliding-window first-order least squares regression to estimate and subtract temperature drift in real time, followed by a Butterworth bandpass filter to suppress hysteresis and vibration. Calibration updates are performed only during free-flight (no contact), preventing contamination of the drift model.
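The drift-estimation step described above can be sketched in a few lines. This is a minimal illustration, not the paper's implementation: the class name `DriftCompensator`, the window length, and the `in_contact` flag are assumptions, and the Butterworth bandpass stage is omitted. It fits a straight line to recent contact-free samples and subtracts the predicted drift from each new reading.

```python
from collections import deque


class DriftCompensator:
    """Sliding-window first-order least-squares drift estimator (illustrative)."""

    def __init__(self, window=50):
        # Only contact-free samples are stored, so contact never biases the fit.
        self.samples = deque(maxlen=window)
        self.slope = 0.0
        self.intercept = 0.0

    def _refit(self):
        n = len(self.samples)
        if n == 0:
            return
        mean_t = sum(t for t, _ in self.samples) / n
        mean_p = sum(p for _, p in self.samples) / n
        var_t = sum((t - mean_t) ** 2 for t, _ in self.samples)
        if var_t == 0.0:
            # Not enough time spread for a slope: fall back to a constant offset.
            self.slope, self.intercept = 0.0, mean_p
            return
        cov = sum((t - mean_t) * (p - mean_p) for t, p in self.samples)
        self.slope = cov / var_t
        self.intercept = mean_p - self.slope * mean_t

    def update(self, t, pressure, in_contact):
        """Return the drift-compensated pressure for one sample."""
        if not in_contact:
            # Calibration updates happen only in free flight.
            self.samples.append((t, pressure))
            self._refit()
        return pressure - (self.slope * t + self.intercept)
```

Freezing the fit while `in_contact` is true mirrors the rule that calibration runs only in free flight: the last drift line is extrapolated through the contact, so the contact signal itself is not absorbed into the drift estimate.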

TDORC achieves a false positive rate (FPR) of 0% in free-flight, outperforming both bandpass filtering (38.24% FPR) and single-shot calibration (TDOC, 12.23% FPR).
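For reference, a free-flight false positive rate can be computed as the fraction of contact-free samples that are flagged as contact. The sketch below assumes a simple magnitude threshold on the compensated signal; the source does not state the detection rule, so the function name and threshold are hypothetical.

```python
def false_positive_rate(residuals, threshold):
    """Fraction of contact-free samples whose magnitude exceeds the threshold.

    All samples are assumed to come from free flight, so any detection
    counts as a false positive.
    """
    residuals = list(residuals)
    if not residuals:
        raise ValueError("need at least one sample")
    flagged = sum(1 for r in residuals if abs(r) > threshold)
    return flagged / len(residuals)
```

For example, with four hypothetical residuals `[0.1, -0.2, 0.05, 1.5]` and a threshold of `1.0`, one sample in four is flagged, giving a rate of 0.25.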

Tactile Depth Estimation: Sensor and Process Model Fusion

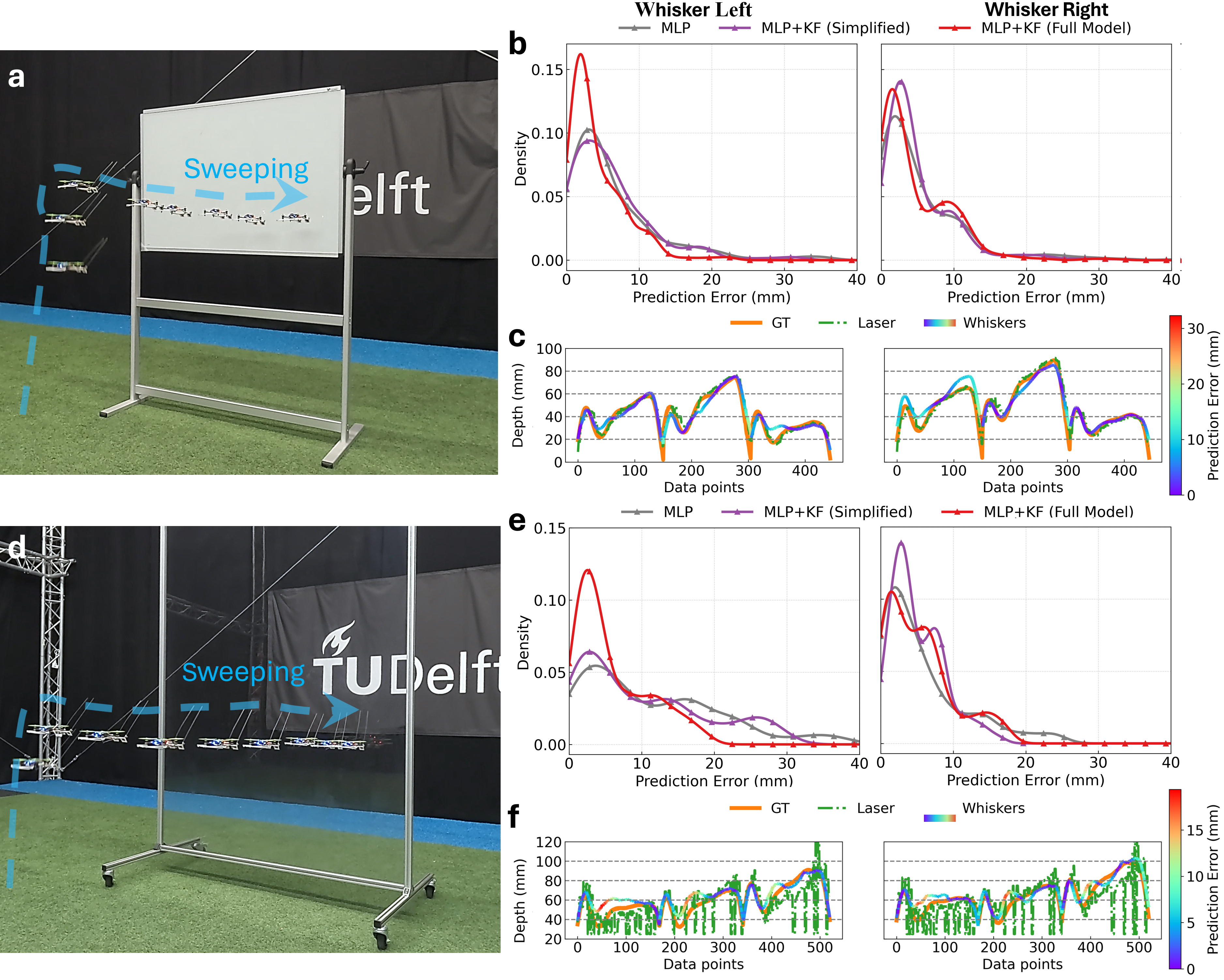

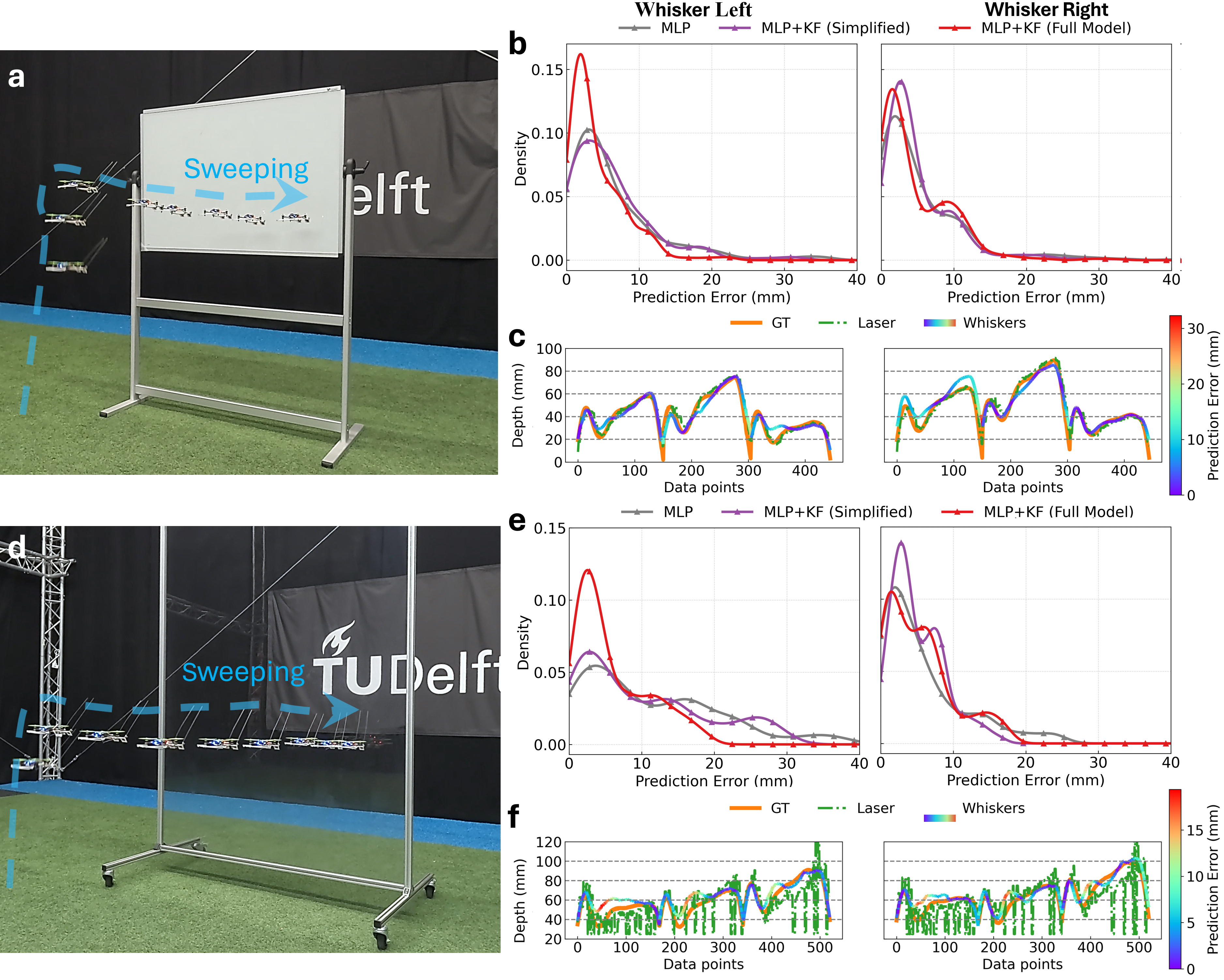

Tactile depth estimation is performed by fusing a neural network-based sensor model (MLP) with a process model via a Kalman filter. The MLP is trained on multi-channel barometer data collected during wall-sweeping maneuvers, capturing dynamic effects such as aerodynamics, vibration, and friction. The process model incorporates geometric relationships between the drone and contacted surfaces, assuming static, locally planar obstacles and single-point contact per whisker.

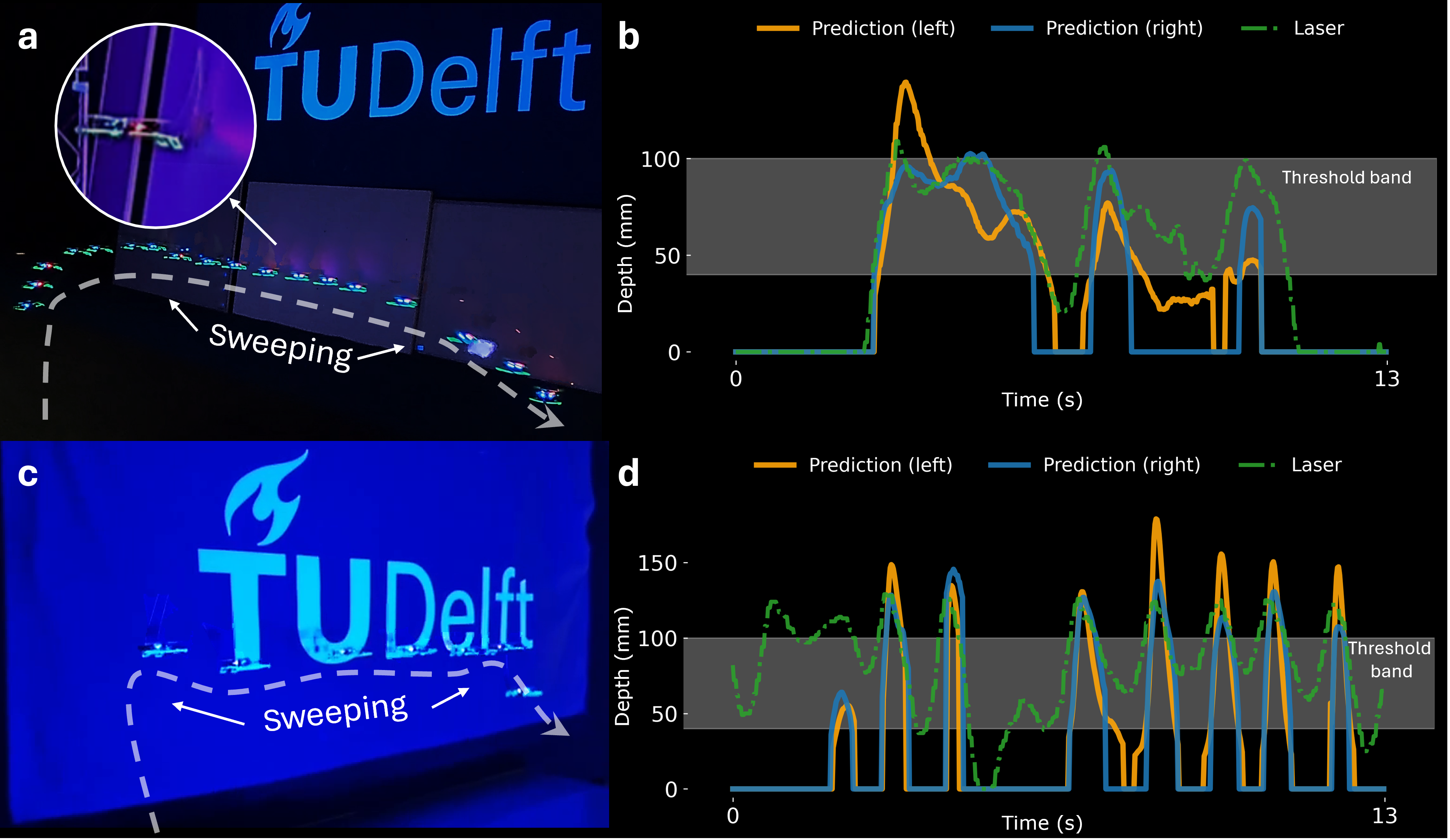

The Kalman filter recursively fuses sensor predictions and process model outputs, yielding temporally consistent and robust depth estimates. Experimental results on two datasets (whiteboard and glass wall) show that the full fusion model achieves sub-6 mm MAE and RMSE, outperforming both the MLP alone and simplified fusion models. Notably, whisker-based depth estimation is more robust than ToF laser sensors on transparent surfaces.
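The recursive fusion can be illustrated with a scalar Kalman filter. This is a simplified sketch under stated assumptions rather than the paper's model: the state is a single depth value, the process model only integrates the drone's approach speed toward a static planar wall, and the MLP output is treated as a noisy direct measurement. The class name and the noise parameters `q` and `r` are hypothetical.

```python
class DepthKalmanFilter:
    """Scalar Kalman filter fusing a motion prediction with an MLP depth output."""

    def __init__(self, d0, p0, q, r):
        self.d = d0  # depth estimate (m)
        self.p = p0  # variance of the estimate
        self.q = q   # process noise variance (assumed constant)
        self.r = r   # measurement noise variance of the MLP output (assumed)

    def predict(self, approach_speed, dt):
        # Static planar wall: depth shrinks as the drone moves toward it.
        self.d -= approach_speed * dt
        self.p += self.q

    def update(self, z):
        # Standard scalar Kalman update with measurement z from the sensor model.
        k = self.p / (self.p + self.r)
        self.d += k * (z - self.d)
        self.p *= 1.0 - k
        return self.d
```

A larger `r` makes the filter lean on the process model and smooths out noisy MLP predictions; a larger `q` does the opposite.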

Figure 3: Whisker-based tactile depth estimation, including data collection, error density, and comparison with ground truth and laser measurements.

Autonomous Tactile Navigation and Exploration

Wall-Following Navigation

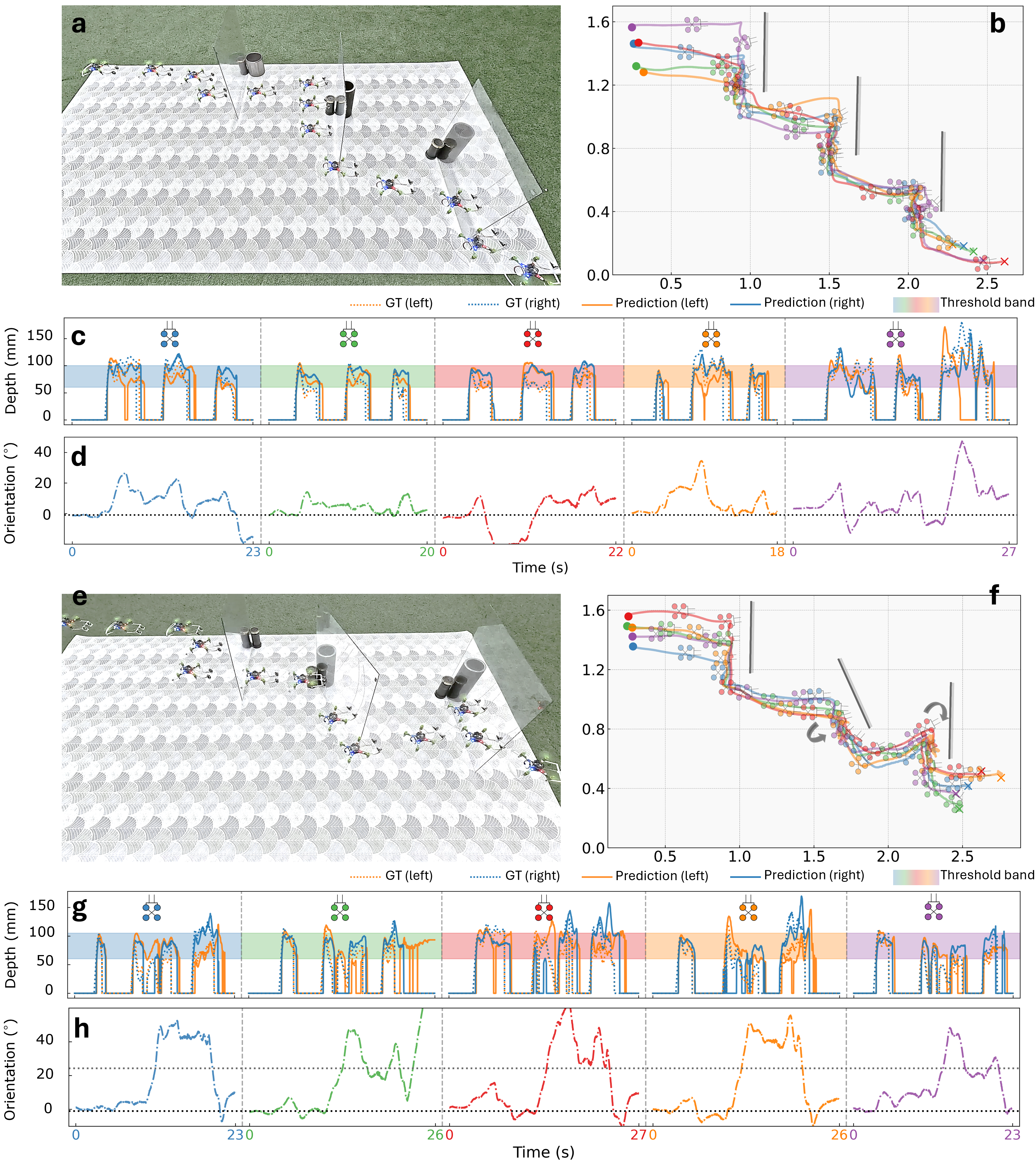

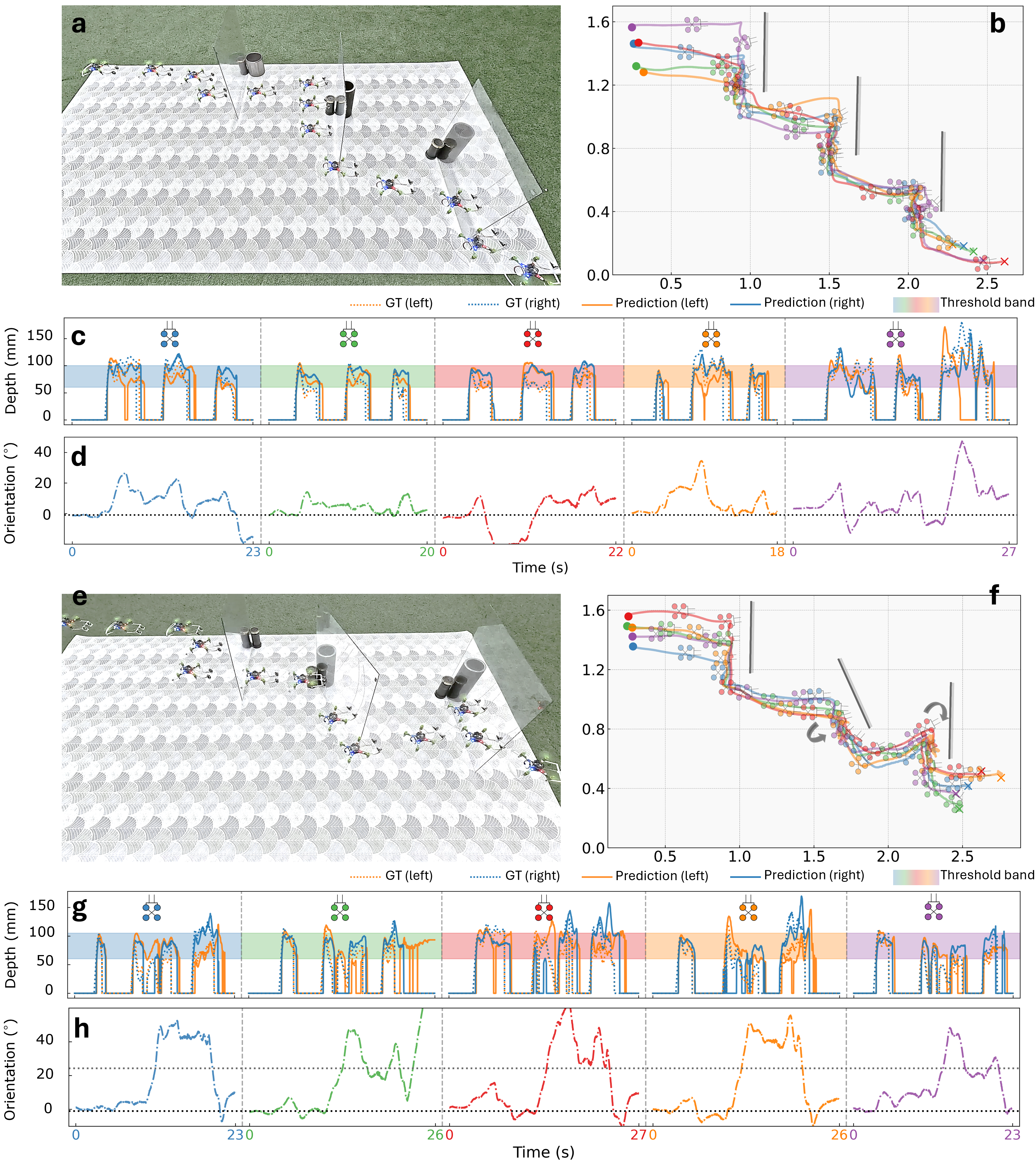

A finite-state machine (FSM) governs wall-following behavior, using whisker depth estimates to maintain a controlled standoff distance and regulate orientation. The system demonstrates reliable navigation through multiple unknown glass baffles, maintaining position and orientation within empirically determined thresholds across five independent trials.
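A wall-following FSM of this kind might be structured as below. The state names, gains, thresholds, sign conventions, and the use of the difference between the two whisker depths as an orientation error are all illustrative assumptions; the source does not list the actual states or control laws.

```python
from enum import Enum, auto


class State(Enum):
    APPROACH = auto()  # no contact yet: creep toward the wall
    ALIGN = auto()     # in contact: rotate until both whiskers read alike
    FOLLOW = auto()    # aligned: hold the standoff distance


def step(state, in_contact, depth_a, depth_b, target=0.10, tol=0.02):
    """Return (next_state, lateral_cmd, yaw_cmd) for one control cycle."""
    if not in_contact:
        return State.APPROACH, 0.05, 0.0
    if state is State.APPROACH:
        # First contact: stop translating and start aligning.
        return State.ALIGN, 0.0, 0.0
    yaw_err = depth_a - depth_b
    if abs(yaw_err) > tol:
        return State.ALIGN, 0.0, -1.0 * yaw_err
    dist_err = 0.5 * (depth_a + depth_b) - target
    return State.FOLLOW, 0.5 * dist_err, 0.0
```

Keeping the transition logic in one pure function makes the behavior easy to test off-board before porting it to the microcontroller.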

Figure 4: Experimental results of aerial tactile navigation through three glass walls, showing trajectories, depth estimation, and orientation control.

Active Tactile Exploration

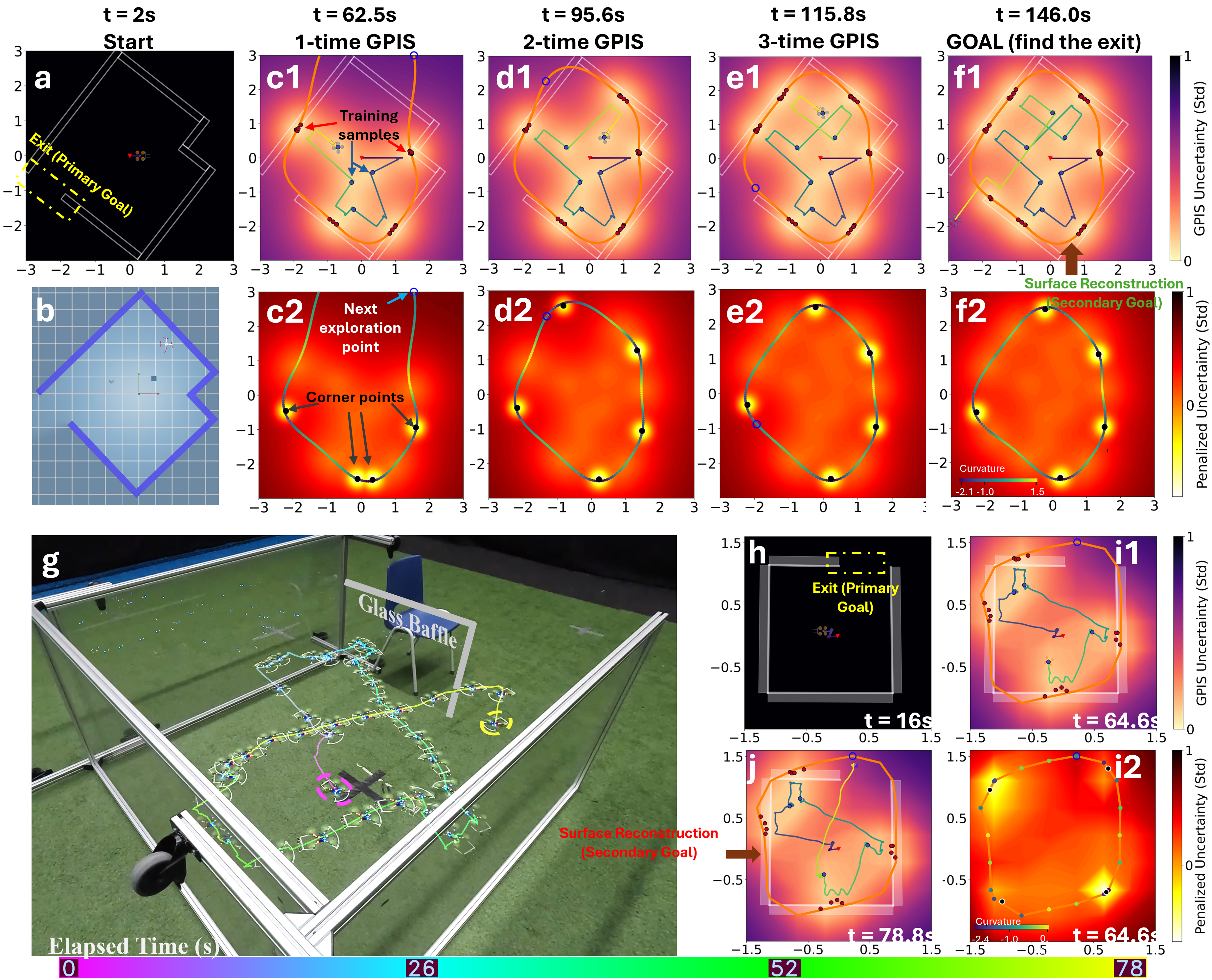

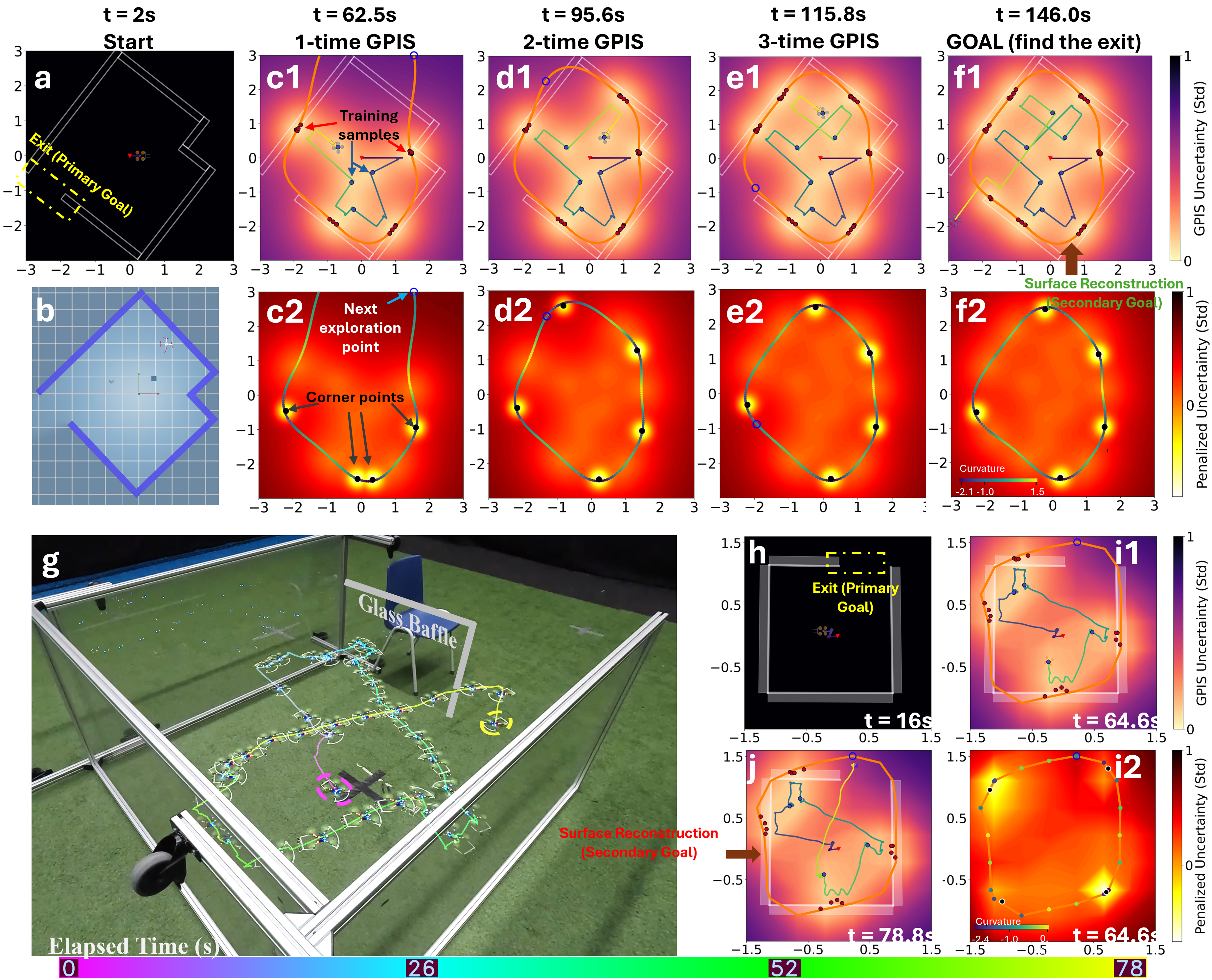

The framework extends to active exploration in unknown confined environments by integrating wall-following with Gaussian Process Implicit Surfaces (GPIS)-based mapping. The drone alternates between contour following and uncertainty-driven exploration, penalizing high-curvature (concave) corners to avoid collision risks. The exploration policy combines GPIS predictive variance with a repulsive potential function centered at detected corners.
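One way to combine the two terms is to score each candidate location by its predictive variance minus a weighted corner penalty. The sketch below assumes the GPIS variances are already computed and uses a classic inverse-distance repulsive potential; the function names, influence radius, gain, and weight are hypothetical, and the paper's exact potential may differ.

```python
import math


def repulsive_potential(point, corner, influence_radius=0.5, gain=1.0):
    """Potential that grows near a corner and vanishes beyond the radius."""
    d = math.dist(point, corner)
    if d >= influence_radius:
        return 0.0
    d = max(d, 1e-6)  # avoid division by zero exactly at the corner
    return 0.5 * gain * (1.0 / d - 1.0 / influence_radius) ** 2


def select_target(candidates, variances, corners, weight=1.0):
    """Pick the candidate with the highest variance-minus-penalty score."""
    best, best_score = None, -math.inf
    for point, var in zip(candidates, variances):
        penalty = sum(repulsive_potential(point, c) for c in corners)
        score = var - weight * penalty
        if score > best_score:
            best, best_score = point, score
    return best
```

With no detected corners the policy reduces to pure uncertainty-driven exploration; as corners are detected, nearby high-variance candidates are pushed down the ranking.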

Simulation and real-world experiments validate the approach, with the drone successfully reconstructing room layouts and locating exits. Mean reconstruction error is 0.09±0.01 m, and the system efficiently balances coverage and safety.

Figure 5: Simulation and real-world results of active aerial tactile exploration, including GPIS-based mapping, curvature analysis, and trajectory visualization.

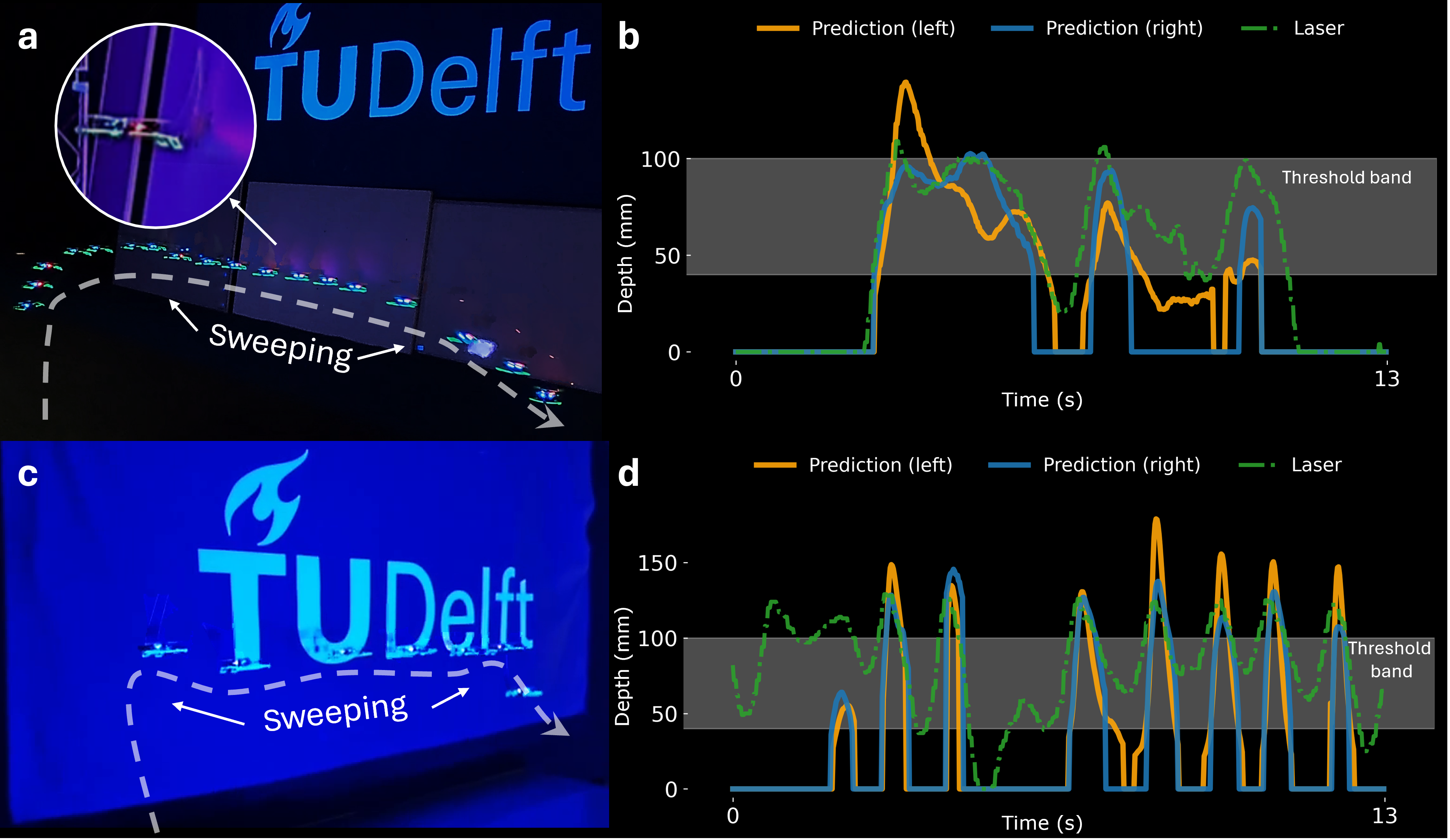

Navigation in Complete Darkness

The system is further validated in total darkness, performing wall-following along both rigid and soft surfaces using only onboard IMU and ToF sensors for state estimation. Whisker-based depth predictions closely track laser measurements, enabling stable closed-loop control even under significant IMU drift and challenging surface conditions.

Figure 6: Wall-following-based aerial tactile navigation in complete darkness, with depth estimation on rigid and soft surfaces.

System Pipeline and Resource Utilization

The entire perception, navigation, and control pipeline operates onboard an STM32F405 microcontroller with 192 kB RAM, of which only 34 kB are used by the tactile algorithms. The system runs at 50 Hz, supporting real-time autonomous flight without external computation or localization infrastructure.
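The figures above translate into a memory share and a per-cycle time budget with simple arithmetic. The variable names are illustrative; the inputs are the values quoted in the text.

```python
ram_total_kb = 192    # STM32F405 RAM quoted above
ram_tactile_kb = 34   # RAM used by the tactile algorithms
rate_hz = 50          # pipeline update rate

ram_fraction = ram_tactile_kb / ram_total_kb  # share of RAM used
cycle_budget_ms = 1000.0 / rate_hz            # time available per cycle

print(f"RAM share: {ram_fraction:.1%}, cycle budget: {cycle_budget_ms:.0f} ms")
```

The tactile algorithms therefore occupy roughly 18% of the available RAM, and each perception-and-control cycle must complete within 20 ms.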

Figure 7: Overview of the whiskered drone system pipeline, including architecture, drift compensation, depth estimation, navigation, and active perception modules.

Implementation Considerations

- Hardware Integration: The whisker sensor design is optimized for minimal weight and force disturbance, with modular 3D-printed components and UV resin encapsulation for robustness.

- Signal Processing: TDORC is critical for maintaining sensitivity and suppressing drift, especially in dynamic flight conditions.

- Depth Estimation: The MLP+KF fusion model should be trained on representative flight data, with careful calibration of barometer channels and process model parameters.

- Control Architecture: The FSM for wall-following and the active exploration policy are implemented as lightweight onboard modules, suitable for microcontroller deployment.

- Memory Management: The system is designed to operate within strict memory constraints, with downsampling and grid-based mapping for efficient data storage.

Key Results

- Depth Estimation: Sub-6 mm MAE/RMSE on rigid and transparent surfaces.

- Navigation Robustness: 100% success rate in multi-baffle navigation and exit-finding tasks.

- Resource Usage: 34 kB RAM for tactile algorithms, 50 Hz real-time operation.

Limitations and Future Directions

- Drift Accumulation: Extended tactile sweeping can lead to drift in depth estimation; time-series modeling may mitigate this.

- Scalability: Increasing whisker count improves coverage but adds weight; scalable fabrication and calibration are ongoing challenges.

- Transferability: Barometric inconsistencies require labor-intensive calibration; transfer learning approaches may reduce this burden.

- Complex Environments: Future work will address multi-object exploration, mapping in complete darkness, and integration of drone dynamics for state estimation without external sensors.

Conclusion

This work establishes a robust, bio-inspired tactile perception and navigation framework for micro aerial vehicles, enabling autonomous flight in environments where vision and conventional sensors are unreliable. The integration of lightweight whisker sensors, advanced signal processing, and efficient onboard algorithms demonstrates the feasibility of vision-free navigation and mapping on highly resource-constrained platforms. The approach has significant implications for search-and-rescue, inspection, and exploration tasks in extreme or unknown environments, challenging the dominance of vision-based paradigms in aerial robotics.