- The paper introduces a novel framework that integrates LLM tool calling with multiple retrieval methods for conversational music recommendation.

- The methodology leverages components such as boolean filters, BM25, dense retrieval, and Semantic IDs to interpret user queries and enhance recommendation accuracy.

- Experiments on the TalkPlayData-2 dataset show improved Hit@K metrics, especially Hit@1, indicating that the system more often ranks the target track first.

Introduction

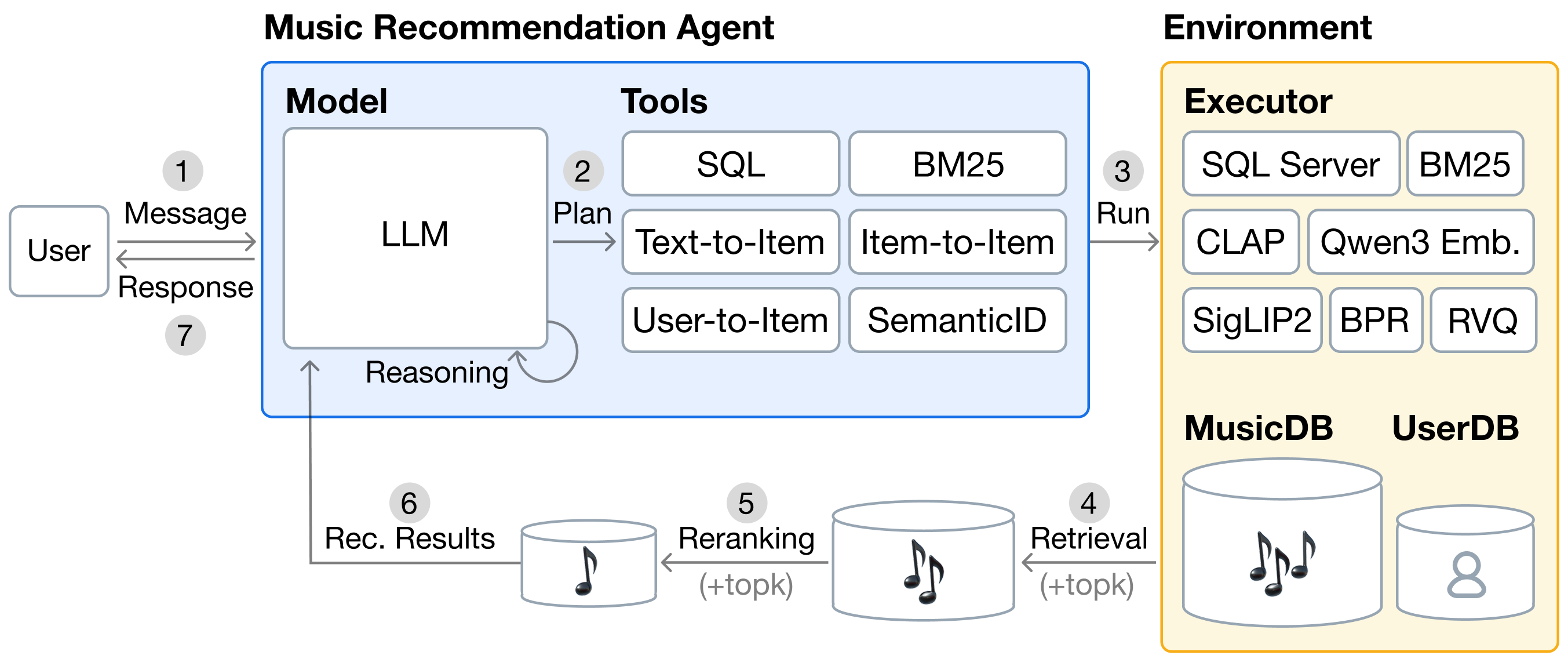

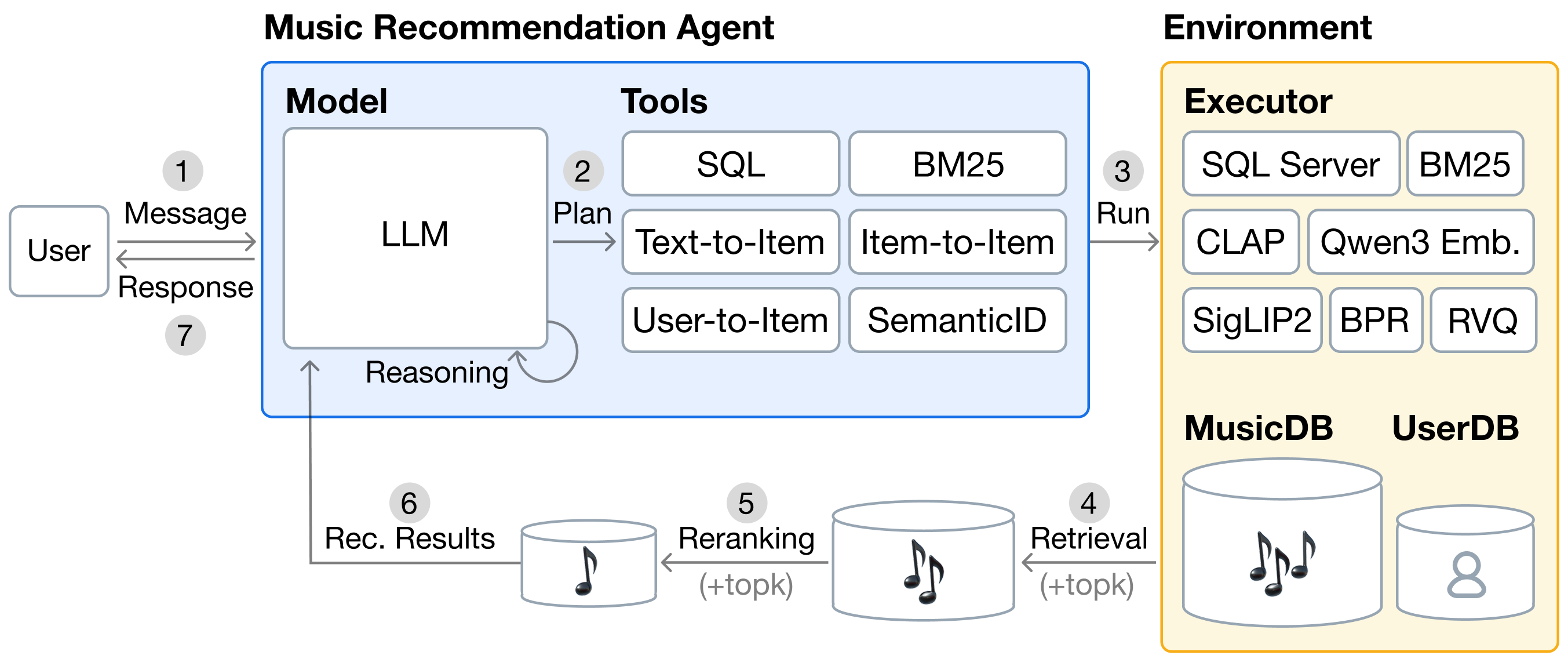

The paper "TalkPlay-Tools: Conversational Music Recommendation with LLM Tool Calling" introduces a music recommendation framework in which an LLM integrates multiple retrieval methods through tool calling. The LLM sits at the core of the recommendation system: it interprets user intent, orchestrates tool invocations, and manages the retrieval pipeline. The approach aims to address limitations of traditional systems by making recommendation more flexible and effective.

Music Recommendation Framework

The core of the proposed system is its ability to invoke specialized components for music retrieval: boolean filters, sparse retrieval (BM25), dense retrieval (embedding similarity), and generative retrieval (Semantic IDs). For each user query, the LLM predicts which retrieval tools to use, the order in which to execute them, and the arguments to pass, in order to identify music that matches the user's preferences. Together, these tools cover structured database filtering as well as matching across multiple modalities.
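The prediction step described above (which tools, in what order, with what arguments) can be pictured as the LLM emitting a small structured plan that is validated before execution. The following is a minimal sketch under stated assumptions: the JSON layout, the tool names, the required-argument sets in `TOOL_SCHEMAS`, and the `parse_plan` helper are all hypothetical and are not taken from the paper.

```python
import json

# Hypothetical tool names and required arguments (assumptions for illustration).
TOOL_SCHEMAS = {
    "sql": {"query"},
    "bm25": {"query", "top_k"},
    "dense": {"query", "top_k"},
    "semantic_id": {"query", "top_k"},
}


def parse_plan(llm_output):
    """Parse an ordered list of tool calls and check names and arguments."""
    plan = json.loads(llm_output)
    for step in plan:
        name = step["name"]
        if name not in TOOL_SCHEMAS:
            raise ValueError(f"unknown tool: {name}")
        missing = TOOL_SCHEMAS[name] - set(step["arguments"])
        if missing:
            raise ValueError(f"{name} is missing arguments: {sorted(missing)}")
    return plan


# A plan as the LLM might emit it: filter first, then rank lexically.
raw = json.dumps([
    {"name": "sql", "arguments": {"query": "SELECT id FROM tracks WHERE year >= 2010"}},
    {"name": "bm25", "arguments": {"query": "sad piano", "top_k": 10}},
])
plan = parse_plan(raw)
tool_order = [step["name"] for step in plan]
```

Validating the plan before running it keeps malformed calls from reaching the retrieval backends, which is one plausible place to trigger a retry.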

Figure 1: Overview of Music Recommendation Agents with Tool Calling.

The paper formalizes conversational music recommendation as the sequential application of retrieval tools within an execution environment called ToolEnv. Because the execution order significantly influences recommendation quality, the system must choose the order as well as the tools, drawing on user preferences, historical data, and the dialogue so far.
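To illustrate why execution order matters, here is a minimal sketch of a sequential tool environment. Only the name ToolEnv comes from the paper; the class layout, the two toy tools, and the three-track catalog are assumptions made for this example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Track = Dict[str, object]


@dataclass
class ToolEnv:
    """Hypothetical environment that applies tool calls in order to a candidate pool."""
    tools: Dict[str, Callable[..., List[Track]]] = field(default_factory=dict)

    def register(self, name, fn):
        self.tools[name] = fn

    def run(self, calls, candidates):
        # Each tool receives the output of the previous one.
        for call in calls:
            tool = self.tools[call["name"]]
            candidates = tool(candidates, **call["arguments"])
        return candidates


def filter_by_year(candidates, min_year):
    return [t for t in candidates if t["year"] >= min_year]


def top_k_by_popularity(candidates, k):
    return sorted(candidates, key=lambda t: t["popularity"], reverse=True)[:k]


# Toy catalog (invented data, for illustration only).
CATALOG = [
    {"id": "a", "year": 1995, "popularity": 90},
    {"id": "b", "year": 2015, "popularity": 70},
    {"id": "c", "year": 2020, "popularity": 50},
]

env = ToolEnv()
env.register("filter_by_year", filter_by_year)
env.register("top_k_by_popularity", top_k_by_popularity)

filter_then_rank = env.run([
    {"name": "filter_by_year", "arguments": {"min_year": 2010}},
    {"name": "top_k_by_popularity", "arguments": {"k": 1}},
], CATALOG)

rank_then_filter = env.run([
    {"name": "top_k_by_popularity", "arguments": {"k": 1}},
    {"name": "filter_by_year", "arguments": {"min_year": 2010}},
], CATALOG)
```

With the same two tools and arguments, filtering first returns track `b`, while ranking first keeps only the 1995 track and the filter then removes it, leaving an empty result.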

The framework implements several tools for recommendation tasks: SQL for structured numeric filtering, BM25 for lexical matching on predefined corpora, and dense retrieval models for semantic matching across text and multimedia data. In addition, Semantic IDs enable multimodal content matching via quantized vectors, which broadens the range of queries the retrieval process can handle.
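As a concrete reference for the lexical tool, the sketch below implements the standard Okapi BM25 scoring formula in plain Python. The whitespace tokenizer, the parameter defaults `k1=1.5` and `b=0.75`, and the three toy track descriptions are assumptions; the paper's actual corpus and BM25 configuration are not specified in this summary.

```python
import math
from collections import Counter


def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Score each document against the query with Okapi BM25."""
    tokenized = [d.lower().split() for d in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(d) for d in tokenized) / n_docs
    # Document frequency: in how many documents each term appears.
    df = Counter(term for d in tokenized for term in set(d))
    scores = []
    for doc in tokenized:
        tf = Counter(doc)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            norm = tf[term] + k1 * (1 - b + b * len(doc) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


# Toy track descriptions (invented for illustration).
docs = [
    "upbeat summer pop anthem",
    "slow sad piano ballad",
    "sad acoustic summer song",
]
scores = bm25_scores("sad piano", docs)
best = max(range(len(docs)), key=scores.__getitem__)
```

The second description matches both query terms and scores highest; the first matches none and scores zero. Dense retrieval would instead compare embedding vectors, so it can match descriptions that share no words with the query.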

Experimental Setup

The framework was benchmarked on the TalkPlayData-2 dataset, leveraging its multifaceted user profiles and comprehensive multimodal music representations. In these experiments, the system achieved higher Hit@K scores than the baseline models, supporting the use of diverse retrieval methods and tool-calling strategies for conversational recommendation.
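For readers unfamiliar with the metric, Hit@K is commonly defined as the fraction of test cases in which the target item appears among the top K recommendations. The sketch below follows that common definition; the function names and the toy rankings are assumptions and do not reproduce the paper's evaluation code or results.

```python
def hit_at_k(ranked_ids, target_id, k):
    """Return 1.0 if the target is among the top-k ranked items, else 0.0."""
    return 1.0 if target_id in ranked_ids[:k] else 0.0


def mean_hit_at_k(predictions, targets, k):
    """Average Hit@K over all (ranking, target) pairs."""
    hits = [hit_at_k(r, t, k) for r, t in zip(predictions, targets)]
    return sum(hits) / len(hits)


# Toy rankings for three conversation turns (invented data).
predictions = [
    ["t1", "t2", "t3"],  # target t1 is ranked first
    ["t9", "t4", "t5"],  # target t4 is ranked second
    ["t7", "t8", "t6"],  # target t0 is missing
]
targets = ["t1", "t4", "t0"]

hit1 = mean_hit_at_k(predictions, targets, k=1)
hit3 = mean_hit_at_k(predictions, targets, k=3)
```

In this toy case Hit@1 is 1/3 and Hit@3 is 2/3. Hit@1 only rewards placing the target first, which is why it is sensitive to the quality of the final reranking.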

Results and Analysis

The results indicate that the proposed tool-calling framework outperforms traditional approaches by combining LLM-driven retrieval and reranking. The advantage is most marked at Hit@1, which points to effective reranking through LLM tool integration. In addition, the system can retry tool calls, which makes recommendation more robust.
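The retry behavior can be sketched as a bounded loop that feeds the error message back to the model before the next attempt. This is a hypothetical illustration: the summary does not describe how the paper implements retries, and `call_with_retries`, `fake_llm`, and `execute` are stand-ins invented for this example.

```python
def call_with_retries(propose_call, execute, max_attempts=3):
    """Ask for a tool call, run it, and retry with error feedback on failure."""
    feedback = None
    for attempt in range(1, max_attempts + 1):
        call = propose_call(feedback)
        try:
            return execute(call), attempt
        except ValueError as err:
            # Pass the error text to the next proposal (assumed mechanism).
            feedback = str(err)
    return None, max_attempts


def fake_llm(feedback):
    # Stand-in for the LLM: omits an argument first, fixes it after seeing the error.
    if feedback is None:
        return {"name": "bm25", "arguments": {}}
    return {"name": "bm25", "arguments": {"query": "sad piano"}}


def execute(call):
    # Stand-in for a tool backend that rejects malformed calls.
    if "query" not in call["arguments"]:
        raise ValueError("missing required argument: query")
    return ["track_42"]


result, attempts = call_with_retries(fake_llm, execute)
```

Here the first call fails validation and the second succeeds, so the loop returns after two attempts. Capping the number of attempts bounds latency when a tool call cannot be repaired.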

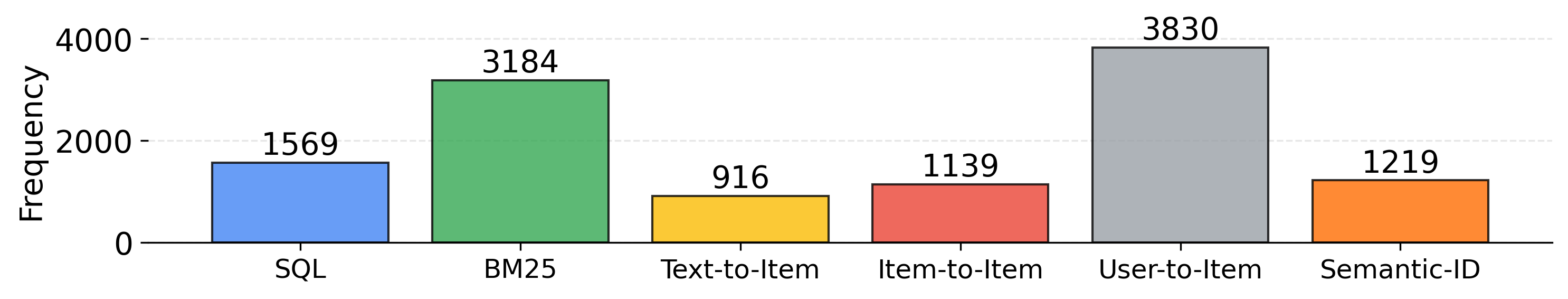

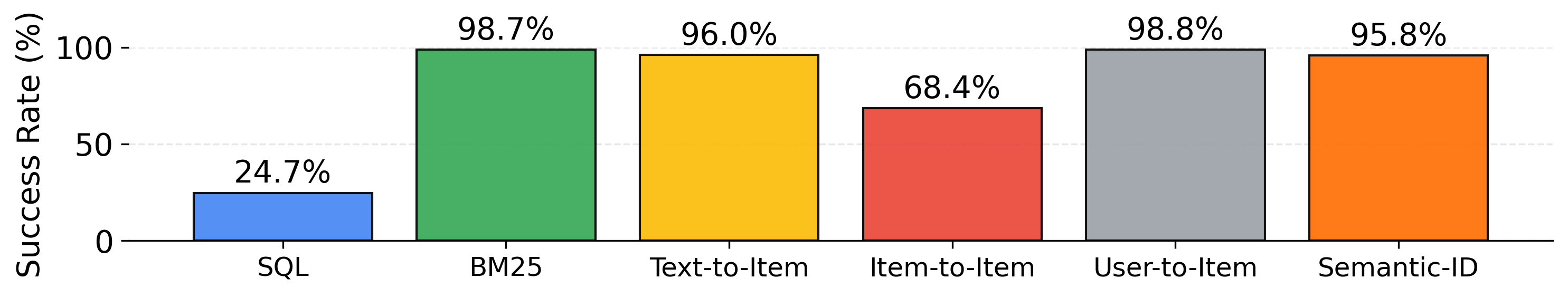

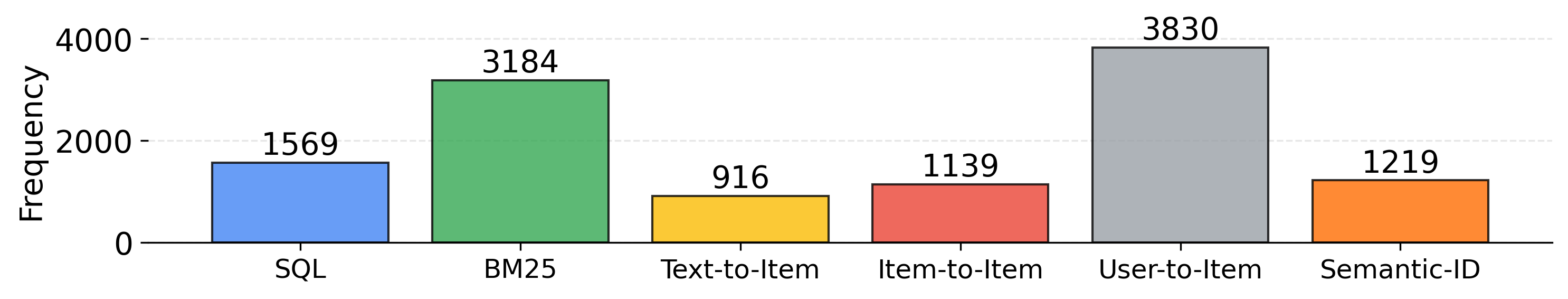

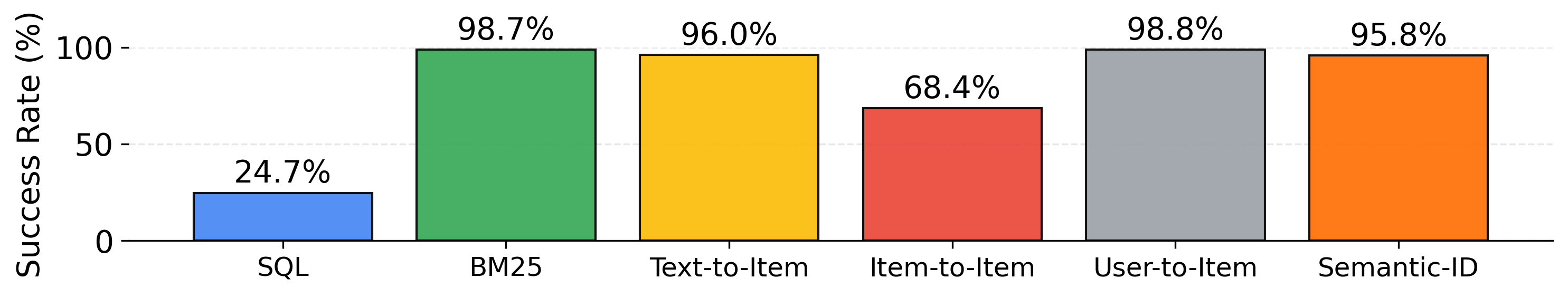

Figure 2: Tool Calling Frequency at First Attempt.

Analysis of tool calling frequency and success rates (Figure 2) shows that the LLM adapts well to natural-language-friendly tools but struggles with syntax-intensive tools such as SQL. The variation in frequency and success across tools suggests that tool invocation needs further refinement to become reliable.
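Frequency and success rate per tool can be computed from a log of first-attempt calls, as in the sketch below. The log format, the `tool_stats` helper, and the four log entries are assumptions made for illustration; the numbers are toy values, not results from the paper.

```python
from collections import Counter


def tool_stats(log):
    """Compute call frequency and success rate for each tool in a call log."""
    calls = Counter(entry["tool"] for entry in log)
    successes = Counter(entry["tool"] for entry in log if entry["ok"])
    total = sum(calls.values())
    return {
        tool: {"frequency": n / total, "success_rate": successes[tool] / n}
        for tool, n in calls.items()
    }


# Toy first-attempt log (invented entries).
log = [
    {"tool": "sql", "ok": False},
    {"tool": "sql", "ok": True},
    {"tool": "bm25", "ok": True},
    {"tool": "dense", "ok": True},
]
stats = tool_stats(log)
```

Separating frequency from success rate matters because a tool can be called often yet fail often, which is the pattern the analysis reports for syntax-heavy tools.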

Conclusion

Introducing tool calling into conversational music recommendation opens new avenues for interactive engagement with users. By integrating diverse retrieval methods under LLM control, the system is positioned to provide more contextually accurate recommendations. Future research directions may include improving tool-calling precision, reducing the number of retries, and applying reinforcement learning to further optimize tool orchestration. The work may also inform similar AI-based decision-making systems in other domains.