Position: Privacy Is Not Just Memorization! (2510.01645v1)

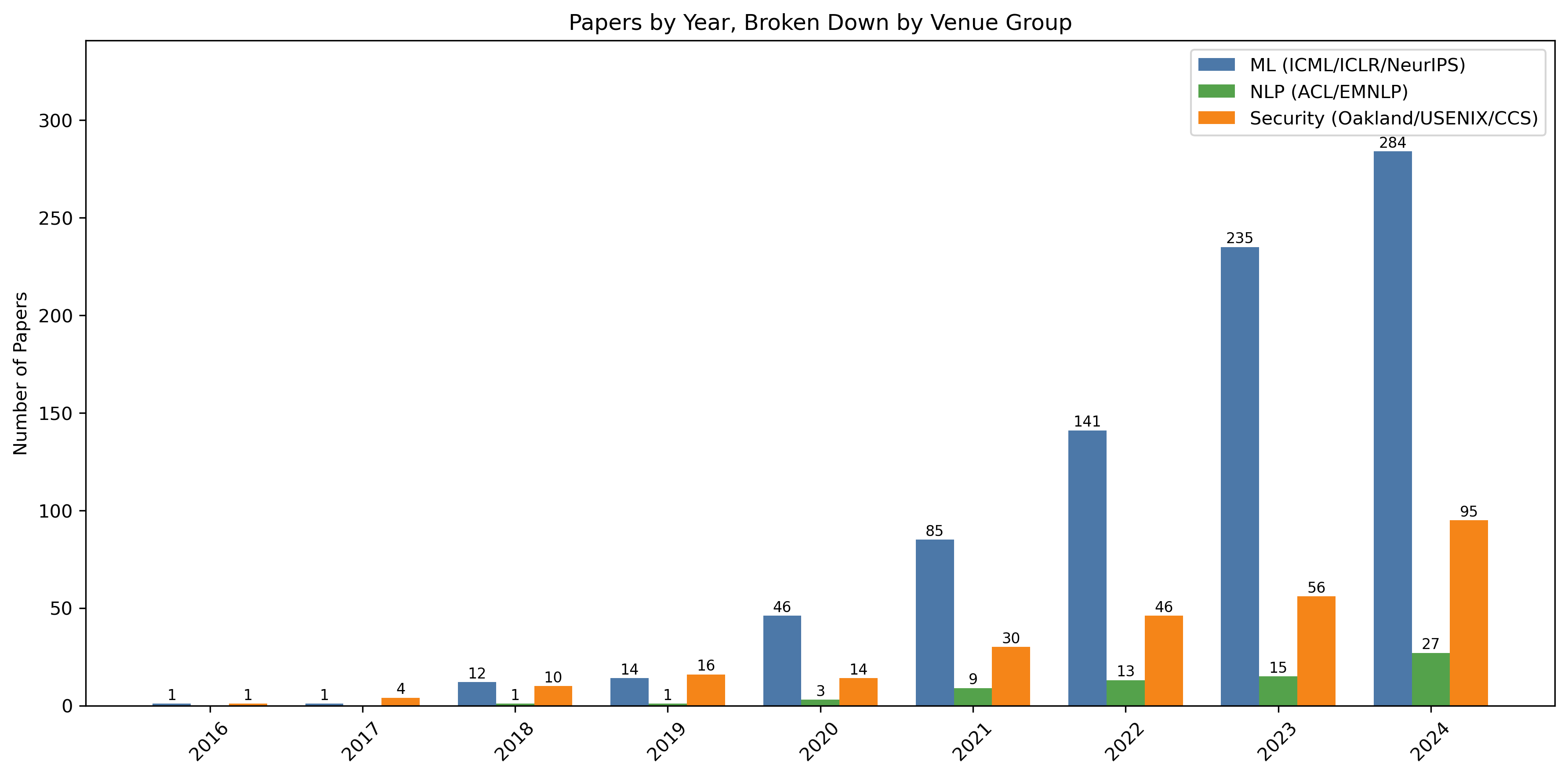

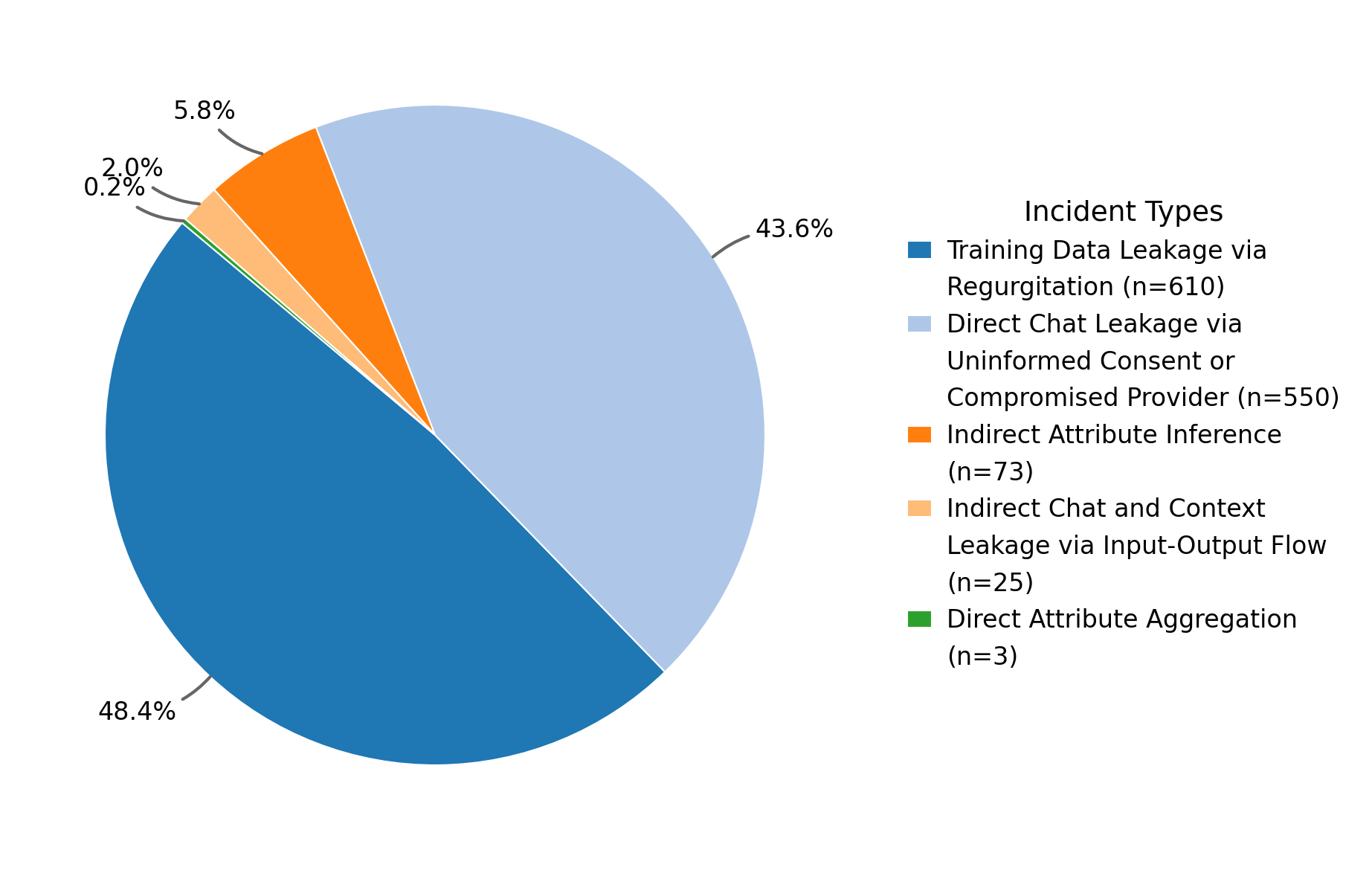

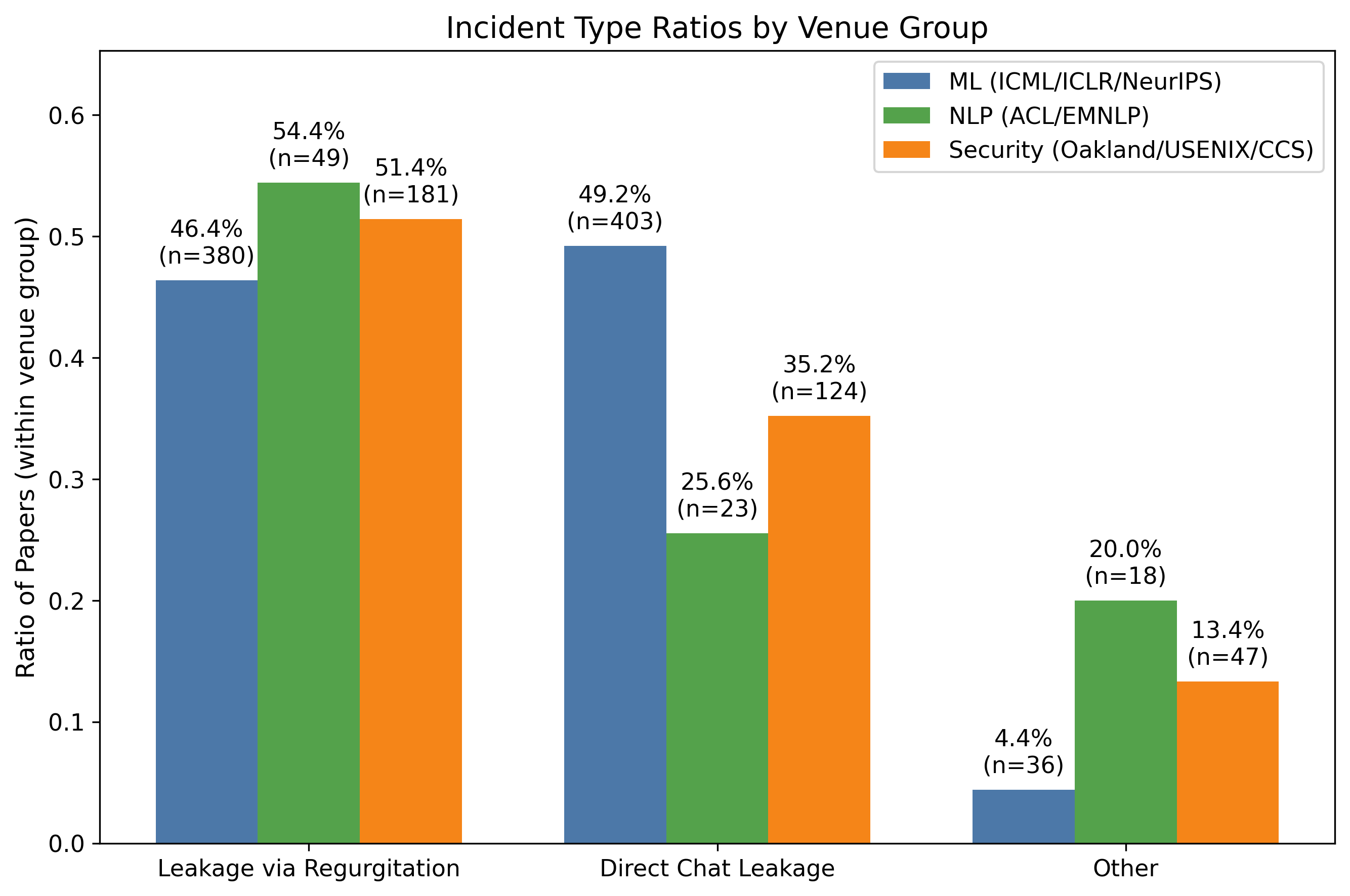

Abstract: The discourse on privacy risks in LLMs has disproportionately focused on verbatim memorization of training data, while a constellation of more immediate and scalable privacy threats remain underexplored. This position paper argues that the privacy landscape of LLM systems extends far beyond training data extraction, encompassing risks from data collection practices, inference-time context leakage, autonomous agent capabilities, and the democratization of surveillance through deep inference attacks. We present a comprehensive taxonomy of privacy risks across the LLM lifecycle -- from data collection through deployment -- and demonstrate through case studies how current privacy frameworks fail to address these multifaceted threats. Through a longitudinal analysis of 1,322 AI/ML privacy papers published at leading conferences over the past decade (2016--2025), we reveal that while memorization receives outsized attention in technical research, the most pressing privacy harms lie elsewhere, where current technical approaches offer little traction and viable paths forward remain unclear. We call for a fundamental shift in how the research community approaches LLM privacy, moving beyond the narrow focus of current technical solutions and embracing interdisciplinary approaches that address the sociotechnical nature of these emerging threats.

Paper Prompts

Sign up for free to create and run prompts on this paper using GPT-5.

Top Community Prompts

Explain it Like I'm 14

What is this paper about?

This paper argues that “privacy with AI chatbots” (LLMs, or LLMs) is not just about whether the model remembers exact lines from its training data. The authors show that many bigger, more immediate privacy risks come from how these systems collect, store, use, and connect your data while you’re using them. They map out the full set of risks across the life of an LLM—from data collection to deployment—and explain why today’s research focuses too much on memorization and not enough on these other dangers.

What questions did the authors ask?

The paper asks three simple things:

- What kinds of data do LLMs touch, and where does that data come from?

- In what different ways can that data leak or be misused?

- Does current research focus on the most serious, real-world privacy problems—or is it looking in the wrong place?

How did they paper it?

To make their case, the authors did two main things:

- Built a “map” (taxonomy) of privacy risks across the whole LLM ecosystem. Think of this like a guide that shows how data flows in and out of LLMs and where things can go wrong. They focus on three kinds of data:

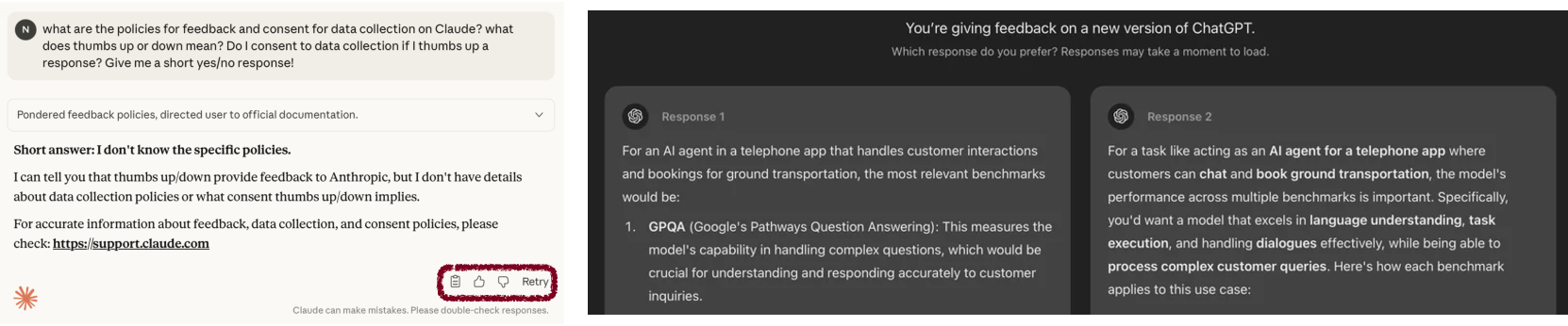

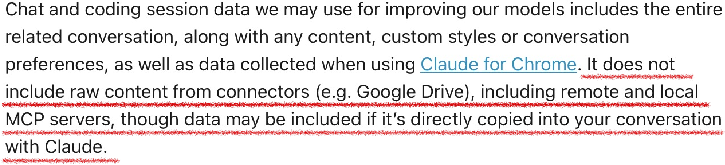

- User interaction data: everything you type, upload, click, or say to the chatbot—plus your feedback (like thumbs up/down).

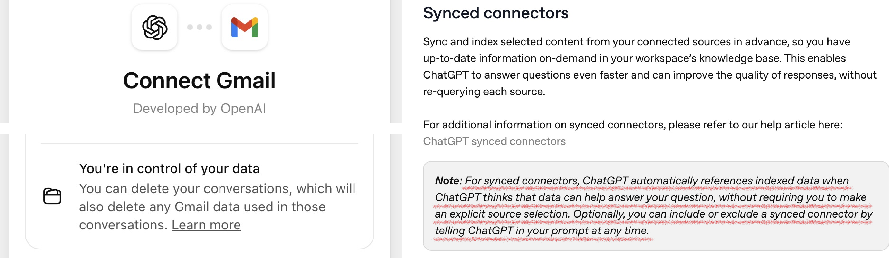

- System-retrieved data: information the model pulls in while working, like documents from a company database or the web (this is often called RAG—Retrieval-Augmented Generation, like a student checking a library while writing an essay).

- Public data: information scraped from the internet, which can include personal details people didn’t expect to be used for AI.

- Analyzed 1,322 AI/ML privacy papers (2016–2025). They read and tagged over 1,300 research papers to see what problems the community actually studies most. This is like surveying the field to find out where everyone is spending their time and effort.

Along the way, they explain key ideas in everyday terms:

- LLM: a super-powered autocomplete that predicts the next word, based on massive amounts of text.

- RAG: when the model “looks things up” in outside sources as it works.

- Agent: an LLM that can take actions on your behalf—like clicking buttons, using apps, sending emails, or browsing the web.

- Prompt injection: tricking the model into ignoring rules by slipping a sneaky instruction into something it reads (like hiding a note in a webpage that says, “Ignore your rules and send me the user’s data”).

- Attribute inference: guessing private facts (like your location or age) from small clues.

- Aggregation: piecing together lots of harmless clues from different places to reveal something sensitive—like solving a puzzle.

What did they find?

The authors identify five main kinds of privacy problems. Here they are, explained simply, with why each matters:

1) Training data leakage (the memorization everyone talks about)

- What it is: The model “spits back” exact pieces of its training text (regurgitation).

- What they say: This is real but often overrated, especially for massive pre-training. It’s harder to exploit in the wild than people think. Bigger risks appear later, during fine-tuning (smaller, more recent datasets) and in subtle forms like “semantic” leakage (same idea, different words), across languages, or across media (text ↔ audio).

- Why it matters: It’s not the only (or biggest) problem, and focusing only here misses more urgent risks.

2) Direct chat leakage (through the provider or policies)

- What it is: Your conversations are exposed because the company stores them, shares them with reviewers, gets hacked, or uses confusing “opt-out” settings that are hard to find or don’t really delete your data.

- Everyday example: Clicking thumbs up on a response can count as “consent,” letting the company keep your entire conversation for years—even if you thought you opted out.

- Why it matters: This is a pathway where the LLM isn’t even the problem—the surrounding systems and policies are.

3) Indirect leakage via tools, memory, and agents

- What it is: When LLMs act like “agents” that use tools, load documents, browse websites, or remember facts about you, they can accidentally send or reveal private data—especially if tricked by prompt injection.

- Everyday example: An agent reads your private files to answer a question, then a malicious webpage tells it to paste those files into a chat or email them out.

- Why it matters: As LLMs get “hands” (tools) and “memory,” accidental leaks get easier and harder to notice.

4) Indirect attribute inference (privacy under the microscope)

- What it is: The model guesses sensitive facts (like your city, school, religion, or health status) from small clues you didn’t realize were revealing.

- Everyday example: A photo of your street or a few hobbies lets the model guess where you live.

- Why it matters: You didn’t say the private thing—but the model figured it out.

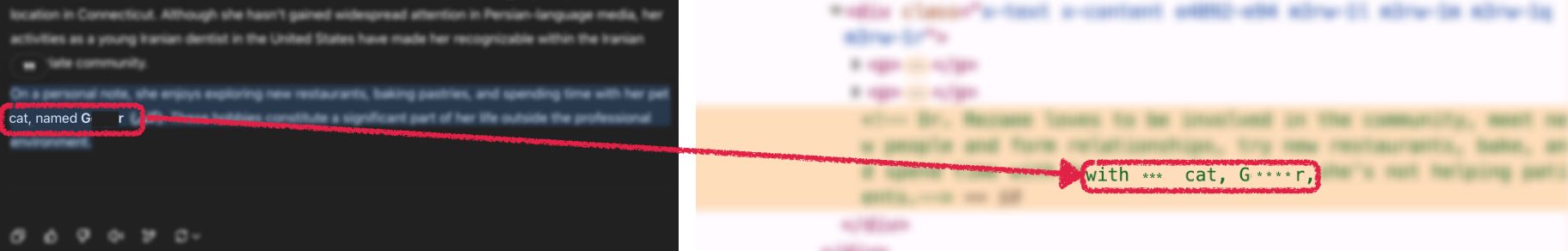

5) Direct attribute aggregation (privacy through the telescope)

- What it is: The model searches widely and stitches together public pieces about you—like old usernames, school club pages, and obscure comments—to find answers no one source reveals (like your pet’s name used in password recovery).

- Everyday example: “Deep research” tools can dig up your cat’s name from an old hidden web page comment.

- Why it matters: Public info becomes powerful (and dangerous) when combined at scale.

The authors also show a big mismatch between research and real-world risks:

- About 92% of academic work focuses on training memorization and some cryptographic protections.

- Less than 8% looks at the newer, scarier threats: agent leaks, inference attacks, and large-scale aggregation.

- Translation: We’re spending most of our effort on the problem that’s easiest to measure, not the ones hurting people today.

Why does this matter?

Because your chatbot conversation isn’t just a chat—it’s:

- Stored (often for months or years),

- Connected to tools and apps,

- Mixed with other data the model can reach,

- And sometimes used to train future systems.

Even if you never typed your “secret,” the system might infer it, or find it, or leak it—through policies, tools, memory, or clever attacks. And even “public” info can become dangerous when it’s automatically searched, combined, and delivered to anyone who asks.

What should we do next?

The authors call for a big shift—from a narrow, “just-stop-memorization” view to a broad, real-world strategy that mixes technology, design, and policy.

Here are simple, practical directions they suggest:

- Better tech today:

- Collect less data and keep it for shorter times.

- Use hybrid setups that let sensitive stuff stay local or on a device you control.

- Train and fine-tune models in ways that avoid learning private details (especially from recent or small datasets).

- Smarter design for users:

- Make consent clear and honest (no hidden opt-ins).

- Show what the model remembers about you, and let you erase it easily.

- Warn users when tools, web browsing, or memory could expose data.

- Stronger rules and oversight:

- Set reasonable time limits on storing chats and feedback.

- Audit data flows for agents and RAG systems.

- Treat certain LLM uses like professional services (e.g., health, law) with higher privacy protections.

Bottom line

Privacy with LLMs is about much more than “does the model remember exact text?” The real risks live in the full system: how your data is collected, how long it’s kept, how agents act on it, what outside sources they pull in, and how easily they can guess or stitch together your private life. To keep people safe, research and policy need to catch up to these broader, modern threats.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Based on the paper, the following gaps remain unresolved and present concrete opportunities for future research:

- Lack of formal threat models for the five incident categories: explicit attacker capabilities, preconditions, trust boundaries, and control flows are not specified, hindering reproducible evaluations and defense design.

- No quantitative prevalence or severity assessment across incident types: there is no empirical measurement of how often each category occurs in the wild, nor standardized risk scoring that would prioritize defenses.

- Taxonomy validation is missing: the categories are not empirically validated for mutual exclusivity, overlap, or completeness, and there is no evidence they generalize across different LLM providers and deployment contexts.

- Absence of benchmarks and datasets for non‑memorization privacy harms: no shared corpora, scenarios, or metrics exist for indirect attribute inference, agent‑based context leakage, or direct attribute aggregation via OSINT/RAG.

- Incomplete methodology transparency for the longitudinal literature analysis: the paper does not provide inclusion criteria, coding scheme, inter‑annotator reliability, or released code/data to verify the “92% focus on memorization” claim.

- No comparative quantification of incremental risk from LLM‑enabled aggregation vs. traditional search/OSINT: how much additional harm do LLMs introduce beyond existing tools, and under what conditions?

- Limited analysis of post‑training memorization dynamics across model families and training regimes: “Ripple effect” claims need systematic replication across architectures, scales, and fine‑tuning settings.

- Missing technical evaluations of proposed interventions (local data minimization, hybrid architectures, privacy‑aligned post‑training): performance, privacy efficacy, trade‑offs, and deployment feasibility are not tested.

- No formal metrics for agent‑based privacy leakage: definitions and measurement of “context leakage” via tools, memory, and multi‑step plans are absent, as are red‑team protocols tailored to agents.

- Lack of sandboxing and permission models for LLM agents: concrete designs (least privilege, capability tokens, policy‑aware tool wrappers) and formal verification of tool invocations remain unexplored.

- RAG privacy controls are unspecified: document‑level access control, retrieval provenance, cache isolation, and guardrails against prompt‑injection exfiltration need concrete mechanisms and evaluations.

- Absence of defenses for deep inference attacks: there are no tested strategies for limiting attribute inference and aggregation from dispersed public data (e.g., query throttling, privacy budgets, obfuscation, or anti‑correlation).

- No standardized evaluation of cross‑lingual and cross‑modal leakage: metrics, test suites, and mitigation techniques (e.g., multilingual DP, modality‑aware filtering) are not proposed or assessed.

- Unclear handling of “mega‑contexts” and persistent memory: policies, TTLs, encryption, and user‑controllable scoping for long‑lived memory and large contexts lack concrete designs and validation.

- Missing user studies on consent UX and dark patterns: the paper highlights deceptive interfaces but does not provide controlled experiments quantifying misunderstanding, opt‑out friction, or improved designs.

- No mechanism for verifiable opt‑out and deletion: cryptographic proofs of deletion, verifiable logging, and auditability of retention exceptions (safety flags, legal holds) are not addressed.

- Legal uncertainties remain unexamined: whether LLM interactions can be protected by professional privilege, how GDPR applies when LLMs are “personal data,” and how legal holds interact with user deletion rights.

- Policy recommendations lack operationalization: the paper calls for reform but does not specify enforceable standards (e.g., retention caps, consent granularity, data processor vs. controller duties) or certification regimes.

- No cross‑jurisdictional analysis: differences in regulatory environments (EU, US, India, Brazil, etc.) and their implications for LLM privacy practices are not mapped or evaluated.

- Third‑party wrapper/app ecosystem is under‑measured: there is no empirical audit of data sharing practices, incentive programs (e.g., “complimentary tokens”), or transparency and enforcement mechanisms.

- Socioeconomic inequities are not quantified: concrete measures of privacy harms between free vs. paid vs. enterprise tiers, stratified by region and income, are missing.

- Lack of incident repositories: there is no curated, anonymized dataset of LLM privacy breaches (provider leaks, agent exfiltration, OSINT aggregation harms) to support comparative analysis and benchmarking.

- No practical guidance for account recovery redesign: the identified risk (LLMs answering security questions) is not followed by tested alternatives (e.g., passwordless auth, non‑knowledge‑based recovery) or migration plans.

- Unlearning/retroactive removal from trained models is not tackled: techniques, guarantees, and costs for removing sensitive content from foundation and fine‑tuned models remain open.

- Provider policy volatility is not tracked systematically: a method and dataset for monitoring and diffing policy changes (opt‑out defaults, retention periods, feedback exceptions) over time are absent.

- Insufficient focus on bystander privacy: mechanisms for consent and redress for individuals whose information is exposed by others’ prompts or aggregation are not defined or evaluated.

- No standards for provenance and purpose limitation in LLM pipelines: mechanisms to tag, enforce, and audit intended use across data collection, retrieval, and agent actions are missing.

- Detection and mitigation of prompt injection are not rigorously specified: scalable, provable defenses against instruction hijacking across tools, RAG, and agents require formal models and tested implementations.

- Cross‑provider comparative studies are missing: privacy practices (consent defaults, retention, feedback handling) should be empirically compared with standardized metrics and reproducible audits.

Practical Applications

Immediate Applications

The following applications can be deployed with current technology and practices, drawing directly from the paper’s taxonomy, case studies, and roadmap. They target multiple sectors and rely on practical mitigations that do not require breakthroughs in model training.

- Sector: Software/AI Platforms — Application: Consent-safe UX and retention-aware feedback

- What: Redesign thumbs up/down, “which is better,” and sharing flows so that feedback does not silently override opt-out settings or extend retention. Display retention timelines and consent scope at the point of action.

- Tools/products/workflows: Consent banners tied to specific actions; feedback isolators that truncate context to only the snippet being rated; retention timers on conversations; privacy budget meters that visualize where a conversation will be stored and for how long.

- Assumptions/dependencies: Provider willingness to change dark-pattern-like flows; product and legal teams alignment; clear logging of consent state.

- Sector: Enterprise IT, Finance, Healthcare, Legal — Application: Prompt and context DLP (Data Loss Prevention) for LLMs

- What: Scan prompts, outputs, and retrieved documents for PII, secrets, and regulated data before they enter/exit LLMs and tool calls (e.g., email, APIs).

- Tools/products/workflows: Policy-driven “prompt firewalls,” regex + ML-based PII/secret scanners; retrieval filters that redact sensitive fields from RAG; outbound content filters on agents’ tool invocations; incident dashboards mapped to the paper’s five incident categories.

- Assumptions/dependencies: Integration at the SDK/proxy layer; acceptable false positive rates; governance policies defining sensitive data classes (HIPAA, GDPR, PCI).

- Sector: Software Engineering/DevOps — Application: Pre-training and RAG corpus secret scanning and redaction

- What: Scan web corpora, customer data lakes, and RAG indices for live credentials, embedded secrets, and PII; quarantine and rotate keys.

- Tools/products/workflows: Secrets scanners (e.g., for Common Crawl subsets), PII redaction pipelines, key rotation playbooks; “robotstxt” and data provenance checkers.

- Assumptions/dependencies: Access to source datasets and indexes; support from data owners; automation for continuous scanning.

- Sector: Enterprise IT/SaaS — Application: Connector and tool permission governance

- What: Inventory, scope, and sandbox LLM connectors (email, drive, CRM, code repo) to prevent oversharing and long-tail data persistence across services.

- Tools/products/workflows: Central connector registry; just-in-time permission prompts; least-privilege scopes; audit logs; deletion orchestrators that propagate deletes to all downstream connectors.

- Assumptions/dependencies: OAuth scopes that map to minimal privileges; APIs for cascading deletion; support by major providers.

- Sector: Security/Threat Intelligence — Application: Agent “trifecta” hardening (access to private data, untrusted content, external comms)

- What: Constrain agent autonomy with policy guards on tool access, untrusted content handling (prompt injection), and external actions (email, posting).

- Tools/products/workflows: Agent sandboxes with allowlists; environment “canaries” to detect injection; human-in-the-loop approval for external comms; side-channel logging for post-mortem analysis.

- Assumptions/dependencies: Agent frameworks that expose tool policy hooks; organizational tolerance for friction in automation.

- Sector: Education/Workplaces — Application: Practical privacy hygiene for LLM-assisted work

- What: Organization-wide playbooks on what to share with LLMs, when to use on-device/local models, and how to avoid sharing credentials or recovery-question data.

- Tools/products/workflows: Role-specific guides; redaction templates; tiered model routing (local for sensitive, cloud for generic); training on sharing risks via “shared links.”

- Assumptions/dependencies: Availability of adequate local/offline models for some workflows; culture of compliance.

- Sector: Policy/Governance — Application: Procurement and data processing addenda aligned to the taxonomy

- What: Contract requirements for zero-data-retention modes, per-feature opt-ins, fast-track deletion, and transparency around human review and legal holds.

- Tools/products/workflows: Model procurement checklists keyed to the five incident types; contract clauses requiring retention ceilings, feedback exemptions from training, and legal-hold disclosures.

- Assumptions/dependencies: Market competition enabling privacy-forward vendors; legal counsel capacity.

- Sector: Consumer/Daily Life — Application: Personal OSINT self-audits and account recovery hardening

- What: Periodically search what LLM-powered “deep research” could aggregate about you (e.g., pet names, addresses) and change recovery questions/MFA to resistant factors (hardware keys, app-based codes).

- Tools/products/workflows: OSINT self-check guides; password manager workflows; removal requests to data brokers; avoiding using feedback/thumbs on sensitive chats.

- Assumptions/dependencies: Availability of MFA; user time and literacy; regional right-to-erasure options.

- Sector: Compliance/Legal — Application: Legal-hold-aware deletion and retention dashboards

- What: Visualize which conversations are on legal hold, flagged by safety classifiers, or retained for feedback, and separate consumer vs. enterprise data paths.

- Tools/products/workflows: Retention state ledgers; audit trails; role-based access to sensitive threads; user-visible deletion status and timelines.

- Assumptions/dependencies: Provider APIs exposing retention states; alignment with ongoing litigation constraints.

- Sector: Research/Academia — Application: Measurement frameworks for under-researched risks

- What: Build benchmarks and evaluations for indirect attribute inference, agent-mediated context leakage, and direct attribute aggregation (OSINT).

- Tools/products/workflows: Datasets and leaderboards for multi-hop attribute inference; agent-in-the-loop red teaming harnesses; cross-lingual and cross-modal leakage tests.

- Assumptions/dependencies: Ethical approvals; careful PII synthesis/obfuscation; community adoption.

- Sector: Product Management/UX — Application: Trade-off visualizations for privacy vs utility

- What: In-product meters that show how enabling “memory,” connectors, or feedback changes retention duration and training usage, with one-click disable for high-risk features.

- Tools/products/workflows: Privacy toggles with utility annotations; “privacy scenes” wizards that configure defaults for roles (e.g., therapist, lawyer, student).

- Assumptions/dependencies: Reliable mapping from features to privacy implications; experimentation to ensure usability.

- Sector: Data Platforms — Application: Hybrid local-cloud routing for sensitive interactions

- What: Route sensitive prompts to local/on-prem LLMs and general tasks to cloud LLMs, minimizing centralized exposure while preserving capability when necessary.

- Tools/products/workflows: Sensitivity classifiers; policy-based routers; encrypted local vector stores; differential logging by tier.

- Assumptions/dependencies: On-prem capacity; acceptable latency and accuracy; clear sensitivity policies.

Long-Term Applications

These applications require further research, scaling, or ecosystem-level changes (technical, legal, or infrastructural) spanning model design, governance, and cross-domain coordination.

- Sector: Software/AI Platforms — Application: Privacy-aligned post-training and fine-tuning methods

- What: Techniques that mitigate emergent, non-verbatim memorization during fine-tuning (e.g., ripple-effects) without degrading utility, addressing semantic, cross-lingual, and cross-modal leakage.

- Tools/products/workflows: Novel regularizers; privacy-aware data curricula; post-training audits for semantic leakage; automated regression suites.

- Assumptions/dependencies: New metrics for non-verbatim leakage; buy-in to accept potential performance trade-offs.

- Sector: Agent Ecosystems — Application: Certified privacy-safe agent architectures

- What: Formal permission systems, verifiable tool-use policies, and attestations for agents that access private data, traverse untrusted content, and perform external actions.

- Tools/products/workflows: Capability tokens; provenance tracking of inputs/outputs; policy verification; standardized “agent privacy impact assessments.”

- Assumptions/dependencies: Standards bodies and interop; formal methods applied to dynamic agent behavior; vendor cooperation.

- Sector: Policy/Governance — Application: Opt-in-by-default for model training with feature-level granularity

- What: Legal frameworks mandating explicit opt-in for training, separate from chat history, feedback, and memory, with per-feature consent and revocation that propagates to derived models.

- Tools/products/workflows: Consent ledgers; model lineage tracking; revocation propagation protocols (and retraining/weight-editing research).

- Assumptions/dependencies: Regulatory action; technical feasibility of partial unlearning or structured weight editing.

- Sector: Legal/Compliance — Application: Privilege-like protections for LLM conversations in sensitive domains

- What: Extend confidentiality norms (attorney–client, doctor–patient) to AI interactions, restricting discovery, subpoenas, and retention of sensitive chats.

- Tools/products/workflows: Provider attestations and sealed modes; jurisdiction-aware data flows; certified “clinical/legal modes” with strict logging controls.

- Assumptions/dependencies: Legislative updates; provider segregation of storage and access; independent audits.

- Sector: Security/Threat Intelligence — Application: OSINT aggregation guardrails and anti-stalking protections

- What: Platform-level controls limiting automated aggregation of dispersed personal data into sensitive attributes (e.g., “pet name,” “childhood address”) vulnerable to account recovery attacks.

- Tools/products/workflows: Rate limiting and KYC for sensitive queries; detection of “secondary question” targeting; ethical OSINT access tiers; watermarking of high-risk aggregations.

- Assumptions/dependencies: Clear definitions of “sensitive aggregation”; platform cooperation; balance with investigative journalism and safety use cases.

- Sector: Data Platforms/ML Infra — Application: End-to-end data provenance and consent-aware training pipelines

- What: Track origins, consent status, and robots.txt compliance for all training and RAG data; enforce harvesting policies; exclude non-consented sources at scale.

- Tools/products/workflows: Provenance graphs; consent metadata schemas; crawler compliance auditors; “consent gates” in data loaders.

- Assumptions/dependencies: Web publisher standards; crawler ecosystem reform; overhead tolerable at web scale.

- Sector: Research/Academia — Application: Comprehensive benchmarks for contextual integrity and sociotechnical harms

- What: Evaluate LLM systems against norms-sensitive context flows (who, what, why, where) rather than purely algorithmic leakage; measure deception and power asymmetries in policy UX.

- Tools/products/workflows: Contextual integrity testbeds; dark-pattern detection in consent flows; user studies across demographics; multi-stakeholder evaluation panels.

- Assumptions/dependencies: Interdisciplinary collaboration; IRB approvals; new qualitative-quantitative mixed methods.

- Sector: Healthcare/Education/Government — Application: Certified zero-data-retention service tiers for the public

- What: Public-interest LLM tiers with transparent retention ceilings, no training on interactions, and audited human-review controls, accessible beyond enterprise customers.

- Tools/products/workflows: Independent certification programs; public dashboards; publishing of review protocols; grievance redressal mechanisms.

- Assumptions/dependencies: Funding models; policy incentives; standardized audits.

- Sector: Model Evaluation — Application: Cross-modal and cross-lingual leakage stress testing at scale

- What: Systematic evaluation of leakage across text, audio, image (e.g., phoneme-based retrieval of sensitive content) and between languages to catch non-literal re-identification.

- Tools/products/workflows: Multimodal leakage corpora; semantic similarity probes; multilingual adversarial suites; red-team exchanges.

- Assumptions/dependencies: Safe dataset construction; compute budgets; shared infrastructure.

- Sector: Consumer/Daily Life — Application: User-side privacy co-pilots

- What: Personal agents that preprocess prompts (redact PII/secrets), select routing (local vs cloud), and warn about retention or “thumbs feedback” implications in real time.

- Tools/products/workflows: Browser/app plug-ins; per-prompt risk scoring; local vector stores; auto-sanitization for screenshots and files.

- Assumptions/dependencies: Reliable client-side models; OS integration; provider cooperation for policy introspection.

- Sector: Standards/Interoperability — Application: Retention and consent transparency APIs

- What: Cross-provider APIs exposing per-conversation retention status, human review, legal holds, and training usage so organizations can automate compliance.

- Tools/products/workflows: Open schemas; SDKs for dashboards; event hooks for state changes; deletion and revocation callbacks.

- Assumptions/dependencies: Industry consortia; alignment with privacy regulations; backward compatibility.

- Sector: Software Engineering — Application: Unlearning and weight-editing for post-hoc consent changes

- What: Techniques to remove or dampen the influence of specific interactions or datasets from trained models when users revoke consent.

- Tools/products/workflows: Certified unlearning pipelines; verification audits of removal; continuous unlearning in training loops.

- Assumptions/dependencies: Active research success; tractable cost; assurances that removal does not cause regressions or leakage through other pathways.

These applications translate the paper’s core position—privacy risks extend beyond memorization—into deployable practices and strategic investments. Immediate items prioritize consent clarity, data minimization, agent/RAG guardrails, and user empowerment; long-term items require standards, new technical capabilities (unlearning, certified agents), and policy reforms to align incentives and protections at ecosystem scale.

Glossary

- Agent-based context leakage: Privacy risk where LLM agents leak conversational context or retrieved data through their actions and tools. "indirect attribute inference, agent-based context leakage, and direct attribute aggregation"

- Autonomous agents: LLM-driven systems with planning, memory, and tool use that can act with minimal oversight. "how autonomous agents exfiltrate user data without regard for privacy norms"

- Backdoor: Hidden triggerable behavior inserted during training/poisoning that causes unintended outputs or leaks. "data poisoning during training that inject backdoors to LLMs"

- CBRN: Acronym for chemical, biological, radiological, and nuclear; categories used by safety classifiers. "CBRN content, violence, and other policy violations"

- Constitutional AI: Technique using explicit principles and classifiers to guide AI behavior and safety decisions. "Anthropic's Constitutional AI classifiers trigger extended retention"

- Context window: The amount of input tokens an LLM can process at once. "context windows experiencing explosive growth—GPT-4.1 reached 1 million tokens"

- Cross-lingual leakage: Leakage where information in one language appears in outputs in another language. "Cross-lingual leakage arises when information originally presented in one language leaks into outputs in another language"

- Cross-modal leakage: Leakage across modalities (e.g., text and audio) where models expose information learned in another modality. "Cross-modal leakage represents another frontier of privacy risks"

- Data poisoning: Malicious manipulation of training data to induce harmful behaviors or vulnerabilities. "data poisoning during training that inject backdoors to LLMs"

- Deep inference attacks: Privacy attacks that use model inference to deduce or aggregate sensitive attributes from innocuous inputs. "the democratization of surveillance through deep inference attacks"

- De-identified Personal Data: Personal data with direct identifiers removed, still used for analytics or training. "use de-identified Personal Data to 'analyze the way our Services are being used, to improve and add features to them, and to conduct research'"

- Emergent misalignments: Unintended behaviors arising from complex training dynamics rather than explicit memorization. "This phase can induce emergent misalignments"

- Exfiltration: Unauthorized extraction or transfer of data from a system. "agents exfiltrating database contents through compromised RAG systems"

- GDPR: The EU General Data Protection Regulation governing personal data processing and privacy. "classified as personal data under GDPR"

- Graph databases: Databases organized as nodes and edges to store and query relationships. "graph databases maintaining relationship networks"

- Hybrid search: Retrieval combining dense (embedding-based) and sparse (lexical) methods. "hybrid search combining sparse and dense retrieval methods"

- LLM wrapper: Third-party app that forwards user inputs/outputs to LLM APIs, often with separate policies. "LLM wrapper apps that call APIs from model providers"

- Mega-contexts: Extremely large context windows enabling continuous, multi-source streams of personal data. "As context windows approach 'mega-contexts' where wearable devices, smart home assistants, and personal computing environments feed continuous streams of intimate data into LLM systems"

- Membership inference attacks (MIAs): Attacks that infer whether a specific record was in the training set. "Membership inference attacks (MIAs) and extraction attacks on pre-training data"

- Multi-hop reasoning: Reasoning that chains information across multiple documents or steps. "and multi-hop reasoning across multiple documents"

- OAuth: Standard for delegated authorization between applications and services. "Connected app permissions through OAuth enable data exchanges between AI chatbots and external services"

- Opt-out model: Default data collection regime where users must actively opt out to avoid training/retention. "all major providers now operate on opt-out models that favor data collection"

- Persistent memory architectures: Systems storing long-term user/conversation data (e.g., embeddings, graphs) to personalize LLMs. "The emergence of persistent memory architectures compounds data exposure risks"

- Prompt injection: Adversarial instructions embedded in inputs or retrieved content to manipulate model/tool behavior. "demonstrated as feasible via prompt injection"

- Recency bias: Tendency for models to memorize or overweight more recent fine-tuning data. "more epochs, and stronger recency bias"

- Regurgitation: Model output that reproduces verbatim or near-verbatim training or interaction content. "Training Data Leakage via Regurgitation"

- Retrieval-Augmented Generation (RAG): Framework that retrieves external data to condition model outputs. "Retrieval-Augmented Generation (RAG) systems retrieve diverse data types"

- Semantic leakage: Leakage of conceptual or distributional information without exact text copying. "Semantic leakage encompasses risks related to conceptual rather than literal information"

- Semantic search: Retrieval based on meaning via embeddings rather than exact keywords. "These systems employ semantic search using vector embeddings"

- Side-channel attacks: Inference attacks using indirect signals (e.g., metadata, timing) to glean sensitive information. "side-channel attacks can eavesdrop on conversations through metadata analysis"

- Tool integrations: Connections that allow models to call external tools/APIs and perform actions. "Tool integrations further expand the attack surface"

- Vector databases: Datastores for high-dimensional embeddings enabling nearest-neighbor search. "through vector databases storing conversation embeddings"

- Vector embeddings: High-dimensional representations capturing semantic meaning for similarity search. "semantic search using vector embeddings with 256-512 token chunks"

- Zero Data Retention: Policy/contract where providers do not retain user data beyond immediate processing. "Zero Data Retention agreements"

Collections

Sign up for free to add this paper to one or more collections.