MPMAvatar: Learning 3D Gaussian Avatars with Accurate and Robust Physics-Based Dynamics (2510.01619v1)

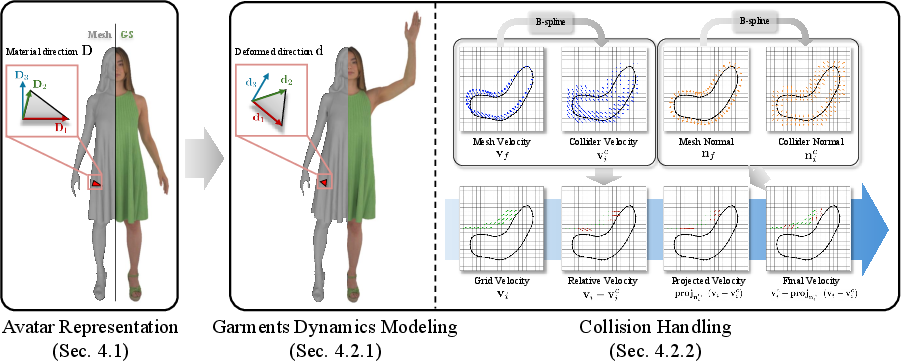

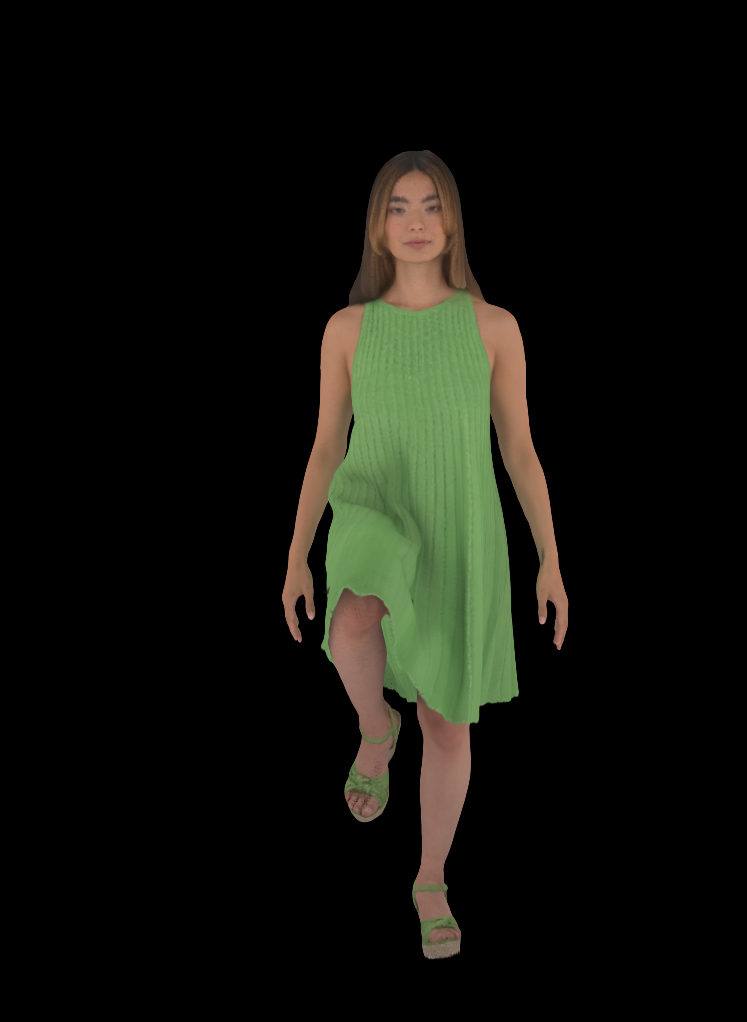

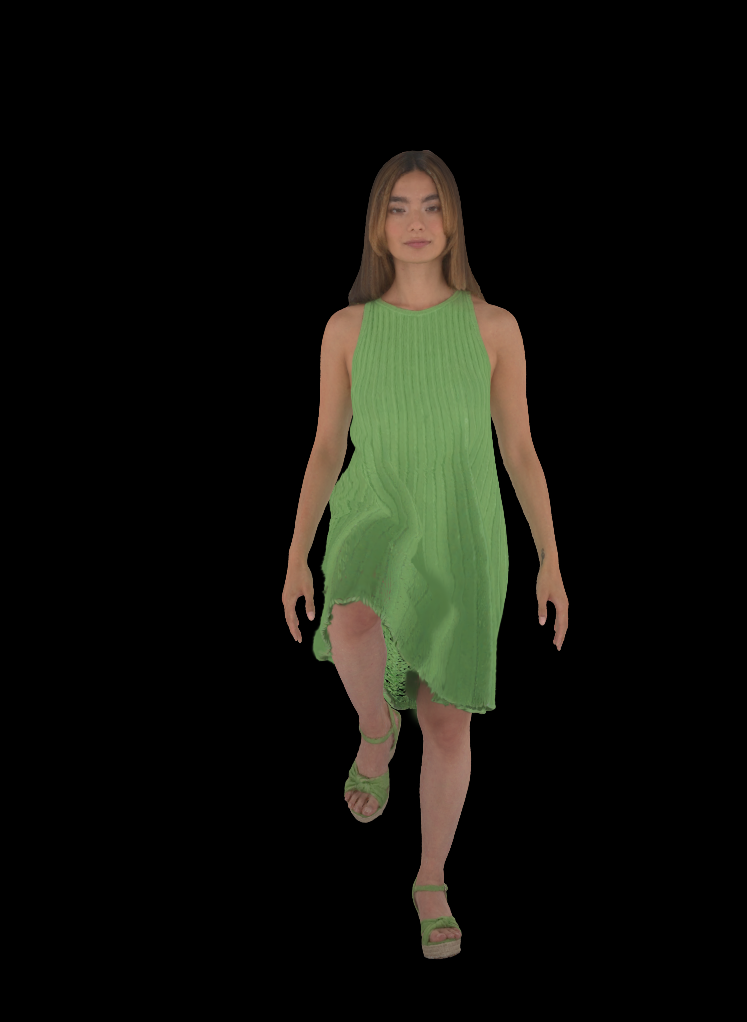

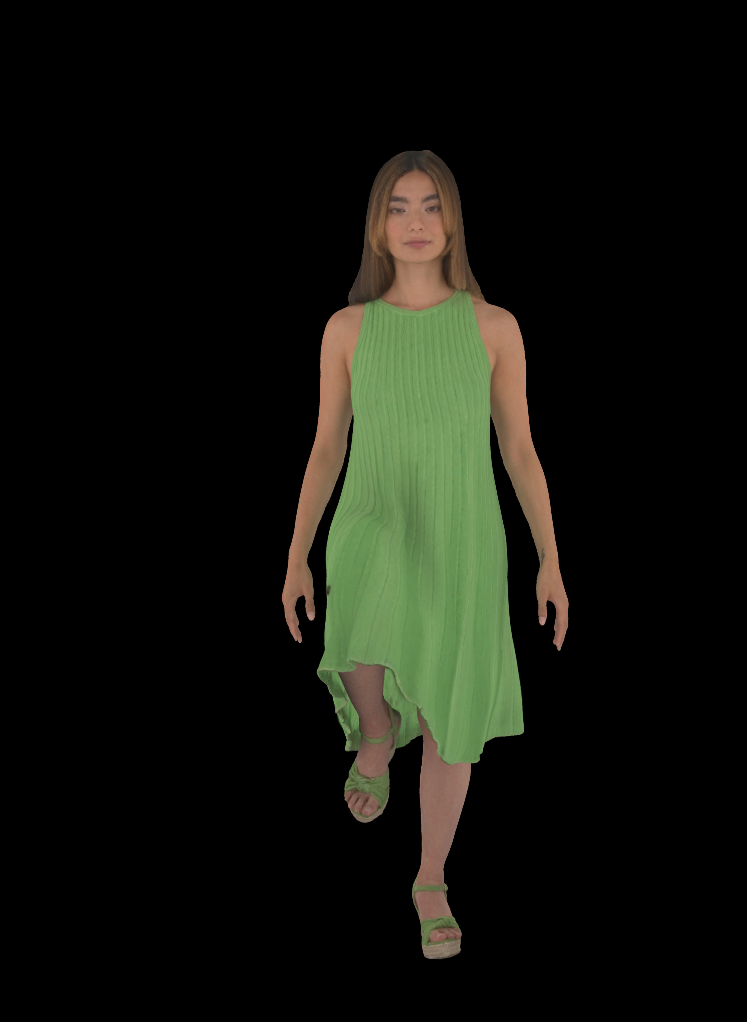

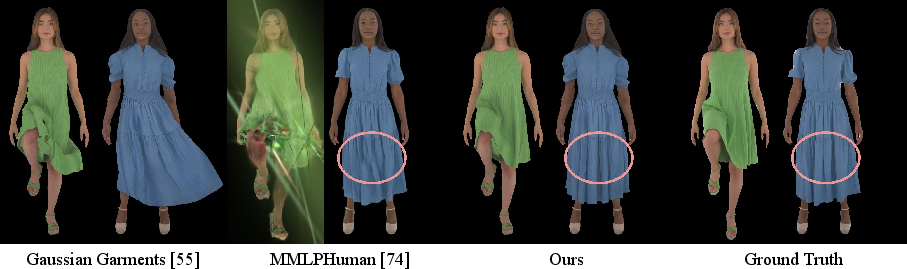

Abstract: While there has been significant progress in the field of 3D avatar creation from visual observations, modeling physically plausible dynamics of humans with loose garments remains a challenging problem. Although a few existing works address this problem by leveraging physical simulation, they suffer from limited accuracy or robustness to novel animation inputs. In this work, we present MPMAvatar, a framework for creating 3D human avatars from multi-view videos that supports highly realistic, robust animation, as well as photorealistic rendering from free viewpoints. For accurate and robust dynamics modeling, our key idea is to use a Material Point Method-based simulator, which we carefully tailor to model garments with complex deformations and contact with the underlying body by incorporating an anisotropic constitutive model and a novel collision handling algorithm. We combine this dynamics modeling scheme with our canonical avatar that can be rendered using 3D Gaussian Splatting with quasi-shadowing, enabling high-fidelity rendering for physically realistic animations. In our experiments, we demonstrate that MPMAvatar significantly outperforms the existing state-of-the-art physics-based avatar in terms of (1) dynamics modeling accuracy, (2) rendering accuracy, and (3) robustness and efficiency. Additionally, we present a novel application in which our avatar generalizes to unseen interactions in a zero-shot manner, which was not achievable with previous learning-based methods due to their limited simulation generalizability. Our project page is at: https://KAISTChangmin.github.io/MPMAvatar/

Explain it Like I'm 14

What this paper is about

This paper introduces MPMAvatar, a way to build lifelike 3D people (avatars) from videos shot by several cameras. These avatars don’t just look real from any viewpoint; their clothes also move in a realistic, physics-based way, especially loose garments like skirts, dresses, and jackets.

What problems the paper tries to solve

In simple terms, the authors want to:

- Make 3D avatars that look sharp and detailed when rendered from any angle.

- Animate avatars so their clothes move like real fabric under gravity and motion, not like stiff or rubbery shapes.

- Keep the animation stable and reliable, even with tricky motions or noisy inputs.

- Generalize to new situations, like interacting with new objects the system hasn’t seen before.

How the method works (explained with everyday ideas)

The system combines two big ideas: a physics engine for motion and a fast, realistic way to render appearance.

1) Physics for realistic clothes

- Think of cloth like a thin sheet that stretches more easily along the fabric than through its thickness. The paper uses a physics simulator called the Material Point Method (MPM) to capture this.

- MPM, in kid terms: Imagine sprinkling lots of tiny “material dots” (like flour) over a body and also laying a grid over the space. The system moves mass and momentum back and forth between the dots and the grid each step, so it can simulate soft stuff that bends, stretches, and collides.

- Anisotropic behavior: “Anisotropic” just means “different in different directions.” Cloth is easier to stretch across the surface than to push through its thickness. The method uses a special cloth model that respects this, so skirts flow and fold more naturally.

- Collision handling: Clothes must not pass through the body. Standard MPM expects simple colliders (like spheres with formulas). But a human body is a complex triangle mesh. The authors create a new way to make cloth slide along the body surface by “spreading” the body’s surface directions and speeds to nearby grid points, then nudging the cloth’s motion so it slides instead of poking through. This makes collisions fast and robust.
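The "nudge so it slides" idea in the collision bullet can be sketched as a velocity projection at a single grid node. This is a minimal illustration, not the paper's algorithm: the function name `project_velocity`, the frictionless slip rule, and the assumption that a unit body normal and body velocity are already available at the node are all hypothetical simplifications.

```python
def project_velocity(v_cloth, v_body, normal):
    """Remove the part of the cloth velocity that moves into the body.

    v_cloth, v_body: 3-component velocities of cloth and body at one grid node.
    normal: unit outward normal of the body surface at that node (assumed given).
    """
    # Velocity of the cloth as seen from the moving body surface.
    rel = [c - b for c, b in zip(v_cloth, v_body)]
    # Normal component; a negative value means the cloth approaches the body.
    vn = sum(r * n for r, n in zip(rel, normal))
    if vn >= 0.0:
        return tuple(v_cloth)  # already separating, leave unchanged
    # Drop only the inward normal part so the tangential (sliding) part survives.
    rel_tangent = [r - vn * n for r, n in zip(rel, normal)]
    # Convert back from the body's frame to world velocity.
    return tuple(t + b for t, b in zip(rel_tangent, v_body))


# Cloth moving sideways and down onto a static, upward-facing surface.
sliding = project_velocity((1.0, 0.0, -2.0), (0.0, 0.0, 0.0), (0.0, 0.0, 1.0))
```

Here the downward component is removed while the sideways motion is kept, which is the sliding behaviour described above. Friction and the way normals and velocities are spread from the mesh to the grid are left out of this sketch.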

2) Rendering that looks great from any angle

- 3D Gaussian Splatting: Instead of a traditional polygon surface, the avatar’s appearance is made from lots of small, fuzzy 3D blobs (Gaussians). You can imagine painting the avatar with many tiny, semi-transparent dots that together look detailed and smooth when viewed from any camera.

- Quasi-shadowing: The system uses an extra shading trick to approximate self-shadowing, so folds and wrinkles look more 3D and realistic.
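To make the "many semi-transparent dots" picture concrete, the sketch below composites a few depth-sorted samples for a single pixel using standard front-to-back alpha blending. It is an assumption-laden toy: the function name `composite` is hypothetical, the per-sample opacities are taken as given, and projecting 3D Gaussians to the image and the quasi-shadowing term are not modelled.

```python
def composite(colors, alphas):
    """Front-to-back alpha blending of depth-sorted samples for one pixel.

    colors: list of (r, g, b) tuples, nearest sample first.
    alphas: list of opacities in [0, 1], same order as colors.
    Returns the blended pixel and the remaining transmittance.
    """
    pixel = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet blocked by nearer samples
    for color, alpha in zip(colors, alphas):
        weight = alpha * transmittance  # contribution of this sample
        for k in range(3):
            pixel[k] += weight * color[k]
        transmittance *= 1.0 - alpha
    return pixel, transmittance


# A half-transparent red blob in front of a half-transparent blue blob.
pixel, remaining = composite([(1.0, 0.0, 0.0), (0.0, 0.0, 1.0)], [0.5, 0.5])
```

The nearer red sample contributes more than the blue one behind it, and a quarter of the background would still show through.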

3) Learning from multi-view videos

- Mesh tracking: First, they track a 3D mesh of the person through time using the videos. This gives them a “ground truth” of how the clothes actually moved.

- Inverse physics: They run their simulator with guessed fabric properties (like stiffness and density), compare the simulated cloth to the tracked cloth, and tweak the properties until the simulation matches reality.

- Rest shape correction: The first video frame already shows the clothes sagging under gravity. The method learns a simple “un-sag” factor (α) that estimates how the clothes would look without gravity. This helps the physics start from a proper, not-already-stretched shape.

- Appearance learning: While the physics handles motion, the color and detail come from optimizing those 3D blobs so the rendered images match the real video frames across all cameras and times.
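The inverse-physics loop above ("simulate with a guess, compare, tweak") can be illustrated on a toy problem. Everything in this sketch is an assumption made for illustration: a one-dimensional mass on a spring stands in for the cloth simulator, `simulate` and `fit_stiffness` are hypothetical names, and the update is a Gauss-Newton step built from finite-difference sensitivities, which may differ from the optimizer the authors actually use.

```python
def simulate(stiffness, steps=50, dt=0.01, mass=1.0, gravity=-9.8):
    """Toy stand-in for the cloth simulator: a mass on a spring under gravity."""
    x, v, trajectory = 0.0, 0.0, []
    for _ in range(steps):
        v += dt * (gravity - stiffness * x / mass)  # symplectic Euler update
        x += dt * v
        trajectory.append(x)
    return trajectory


def fit_stiffness(observed, guess, iterations=20, eps=1e-4):
    """Adjust stiffness until the simulated trajectory matches the observed one."""
    k = guess
    for _ in range(iterations):
        residual = [s - o for s, o in zip(simulate(k), observed)]
        # Central finite difference: how each simulated position changes with k.
        plus, minus = simulate(k + eps), simulate(k - eps)
        sens = [(p - m) / (2.0 * eps) for p, m in zip(plus, minus)]
        # Gauss-Newton step: squared-error gradient scaled by sens . sens.
        grad = sum(j * r for j, r in zip(sens, residual))
        k -= grad / sum(j * j for j in sens)
    return k


observed = simulate(20.0)  # pretend this trajectory came from mesh tracking
fitted = fit_stiffness(observed, guess=10.0)
```

Starting from a stiffness of 10, the fit moves toward the value of 20 that generated the "observed" trajectory. A real cloth simulation has several parameters and a far more expensive forward pass, so each finite-difference evaluation costs extra full simulations.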

What they found and why it matters

The authors test on public datasets and show:

- More accurate cloth motion: Their method better matches real folds, wrinkles, and swinging of loose clothes than previous works.

- Better rendering quality: The avatars look sharper and more detailed, especially in patterned or textured clothing.

- More robust and efficient simulation: It avoids common failures when the driving body model is a bit messy (for example, if parts of the body mesh slightly intersect). Their collision method is also computationally efficient.

- Zero-shot generalization: Because the motion comes from real physics, the avatar can handle new scene interactions it wasn’t trained on (like touching or brushing against a new object), which learned-only methods struggle with.

Why this matters:

- Games, movies, VR/AR, and digital fashion need believable digital humans. Realistic cloth is one of the hardest parts. This system moves closer to “it just looks right” without tons of manual tweaking.

- Robustness means fewer crashes and less manual cleanup, saving production time.

- Generalization means the avatars can behave plausibly in new situations, making them more useful in practice.

What this could lead to next

- Better virtual try-on and digital fashion shows where garments drape convincingly on different body shapes and motions.

- More lifelike characters in games and films without hand-animating every fold.

- Interactive VR experiences where your avatar’s jacket or skirt reacts correctly if you sit, run, or bump into objects.

- Research tools for studying cloth materials from video, since the method can “reverse engineer” fabric properties from real footage.

In short, MPMAvatar blends a strong physics engine with a modern rendering technique to produce 3D people whose clothes both look and move like the real thing, and it does so reliably, even in new, unseen situations.

Knowledge Gaps, Limitations, and Open Questions

Below is a concise, actionable list of what remains missing, uncertain, or unexplored based on the paper.

- Dependence on multi-view mesh tracking: the pipeline requires accurate precomputed mesh tracking and canonical mesh initialization; sensitivity to tracking errors, occlusions, and sparse-view settings is not quantified or mitigated (no end-to-end training from images to dynamics).

- Limited generalization to in-the-wild capture: evaluations are confined to controlled, studio-like datasets (ActorsHQ, 4D-DRESS); robustness to outdoor lighting, cluttered backgrounds, handheld cameras, rolling shutter, and calibration drift is untested.

- Real-time performance is unclear: per-frame simulation and training runtimes are not reported comprehensively (table is truncated); feasibility for interactive or real-time applications on commodity GPUs remains open.

- One-way coupling to colliders: the collider (SMPL-X body or external objects) appears purely kinematic; two-way coupled cloth-body or cloth-object dynamics (mutual force exchange and motion feedback) is not supported or evaluated.

- Collision modeling scope: the new mesh-to-grid collision handling targets garment–body contact; treatment of garment–garment collisions (multi-layer clothing) and robust garment self-collision with finite cloth thickness is not specified or evaluated.

- Friction and contact parameters: the contact model focuses on penetration prevention via velocity projection; identification and use of Coulomb friction (static/dynamic), frictional anisotropy, and spatially varying friction across body regions are not modeled or learned.

- Material parameter identifiability: only Young’s modulus E, density ρ, and a scalar rest-shape parameter α are learned; Poisson’s ratio ν, anisotropy parameters (γ, κ), damping, and bending stiffness are fixed, leaving parameter identifiability, realism, and generalization underdetermined.

- Bending and thin-shell behavior: explicit cloth bending resistance (critical for wrinkling) is not modeled or validated; suitability of volumetric MPM and the chosen constitutive model for thin-shell cloth at practical grid resolutions remains untested.

- Spatially varying materials and thickness: garments are treated as having globally uniform parameters; learning spatially varying material properties, thickness, and fabric weave directions per garment region is not explored.

- Rest-shape recovery is coarse: the single scalar α for gravity-induced prestretch cannot capture spatially heterogeneous prestrain; learning a spatially varying rest configuration (e.g., per-edge/per-region) is needed for realistic calibration.

- Differentiability and efficiency of inverse physics: parameter learning uses finite differences, which is slow and noisy; a differentiable, stable MPM with mesh-based collision handling is not provided, limiting scalability and joint learning with appearance.

- Validation against physical ground truth: geometry metrics are computed against tracked meshes (not physical ground truth); evaluation with measured fabric properties and high-fidelity motion capture (e.g., force plates, high-speed capture, known materials) is missing.

- Robustness to body tracking errors and self-penetrations: while the method claims robustness, systematic stress-testing across a spectrum of self-penetration magnitudes, pose noise, and mesh artifacts is not presented.

- High-speed and extreme motions: performance under rapid, highly non-linear cloth dynamics, large accelerations, or acrobatics is not quantified; stability limits and time-step constraints are unspecified.

- Aerodynamics and environment forces: wind/air drag, buoyancy, and other environmental forces are absent; generalization to scenes with significant aerodynamic effects remains open.

- Multi-garment layering: interactions between multiple garments (e.g., jacket over dress, skirt over tights) with friction, stacking, and sliding are not analyzed; pipeline for simultaneous simulation with correct layering order is unspecified.

- Body soft-tissue dynamics: the body is animated via LBS; coupling to soft-tissue or muscle dynamics (jiggle, secondary motion) is not modeled, limiting realism for tight garments.

- Coupling MPM state to render mesh: details on how the surface mesh is updated from particles/grid (and whether high-frequency wrinkles survive transfer) are limited; potential loss of small-scale detail and lag are not analyzed.

- Lighting and shading limitations: rendering uses 3DGS with quasi-shadowing driven by ambient occlusion; dynamic lighting, cast shadows, specularities, and global illumination are not modeled or estimated from data.

- Temporal consistency in appearance: strategies to suppress temporal flicker or shading inconsistencies in 3DGS under fast motion or thin structures are not discussed.

- External object generalization: zero-shot interaction claims lack breadth; diversity of object shapes, materials, motions, contact regimes (sticking/sliding), and complex contact topologies is limited and not systematically benchmarked.

- Editing and control: user-controllable editing (e.g., change fabric type, add layers, alter garment pattern) and its impact on learned parameters and stability are not supported.

- Data efficiency: the method uses tens to hundreds of frames per subject; ablations on the minimal data needed for accurate dynamics and appearance learning are missing.

- Failure cases and diagnostics: comprehensive reporting of when and why simulations fail (e.g., grid resolution limits, poor tracking, parameter extremes) and practical guidelines for avoiding them are absent.

- Memory and scalability: the computational/memory footprint for large domains, high grid resolutions, long sequences, and multiple garments is not reported; strategies for adaptive meshing/grids or multi-resolution simulation remain unexplored.

- Automatic garment segmentation and rigging: assumptions and automation for separating garment vs. body regions and setting up material directions and anchors are not detailed; robustness to segmentation errors is untested.

- Cross-subject generalization: whether learned material parameters transfer across sequences of the same subject/garment or across subjects with similar garments is unknown; a protocol for reusing or sharing parameters is missing.

Practical Applications

Overview

MPMAvatar introduces a hybrid avatar framework that combines 3D Gaussian Splatting for photorealistic rendering with a tailored Material Point Method (MPM) simulator for physically accurate and robust garment dynamics. Key innovations include:

- An anisotropic constitutive model for cloth-like, codimensional dynamics

- A mesh-based collision handling algorithm that is robust to SMPL-X body self-penetration and more efficient than level-set approaches

- Inverse physics to estimate material parameters (e.g., Young’s modulus, density) and a simple rest-geometry parameter to correct gravity-induced deformations

- Zero-shot generalization to novel scene interactions

The following lists summarize practical applications, grouped by their deployment readiness. Each item notes sector relevance, possible tools/workflows, and assumptions or dependencies that affect feasibility.

Immediate Applications

These applications can be deployed now using the paper’s methods and code with standard multi-view capture setups and offline processing.

- Media and Entertainment (Film/VFX/Games): asset creation pipeline from multi-view video

- What: Convert multi-view actor captures into animatable avatars with robust, physics-based garment dynamics; precompute sequences for film shots, cutscenes, and game cinematics.

- Tools/Workflows: An “MPMAvatar Capture-to-Asset” pipeline integrated with Blender/Unreal/Unity; offline simulation of garments; 3DGS-based rendering with quasi-shadowing for lookdev and final frames.

- Dependencies/Assumptions: Multi-view rig; SMPL-X tracking; GPU compute; offline simulation time acceptable; licensing and integration with DCC tools.

- AR/VR Content Production (Precomputed Avatars)

- What: Photorealistic, free-view avatars of performers for immersive experiences, events, and installations with physically consistent cloth motion.

- Tools/Workflows: Pre-recorded avatar sequences; 3DGS playback engines; “MPMAvatar Player” plugin for VR platforms.

- Dependencies/Assumptions: Offline precomputation; sufficient memory/graphics capability; multi-view input.

- Fashion and Apparel (Digital catalog and runway generation)

- What: Generate photorealistic product showcases with realistic cloth dynamics from studio captures; support skirts, dresses, loose tops.

- Tools/Workflows: “Virtual Runway Generator” that ingests multi-view videos of models wearing new garments; 3DGS rendering for marketing visuals.

- Dependencies/Assumptions: Professional capture pipeline; per-garment calibration; offline processing; material parameter estimation tuned to fabric class.

- Apparel CAD and Material Calibration (Design)

- What: Estimate key physical parameters (e.g., Young’s modulus E, density ρ, rest-geometry parameter α) from video to calibrate cloth behavior in CAD tools (e.g., CLO3D, Marvelous Designer).

- Tools/Workflows: “MPMAvatar Material Estimator” exporting parameters to CAD simulators; validation via side-by-side simulation.

- Dependencies/Assumptions: Assumes anisotropic model suffices; finite-difference optimization; consistent lighting/capture; CAD tool import formats.

- Robotics Research (Dataset Generation for Cloth Manipulation)

- What: Generate diverse, physically plausible cloth interactions and synthetic datasets to train perception/manipulation models (e.g., towel folding).

- Tools/Workflows: “Cloth Interaction Synthesizer” leveraging mesh-based collider handling and zero-shot scene interaction; automated annotation from meshes.

- Dependencies/Assumptions: Offline generation; fidelity good enough for sim-to-real transfer; accurate SMPL-X or proxy colliders for human interaction scenarios.

- Computer Vision Research (Benchmarking and Method Development)

- What: Use MPMAvatar as a reproducible baseline to study physics-integrated avatar reconstruction, multi-view tracking, and rendering under cloth motion.

- Tools/Workflows: Open-source code; protocol to compare dynamics accuracy (Chamfer/F-Score) and appearance metrics (LPIPS/PSNR/SSIM).

- Dependencies/Assumptions: Access to ActorsHQ/4D-DRESS or similar datasets; training resources.

- Education (Graphics/Simulation Coursework)

- What: Teach anisotropic constitutive modeling, mesh-based collision handling, and hybrid explicit/implicit representations through hands-on notebooks.

- Tools/Workflows: “MPMAvatar Classroom Notebooks” in Python/Warp/PyTorch; visualizations of deformation gradients and grid-based collision resolution.

- Dependencies/Assumptions: Moderate GPU; curated sample data.

- Software Libraries and Plugins (Simulation)

- What: Integrate the mesh-based collider algorithm (O(N_f)) into existing MPM engines to improve stability and performance on mesh colliders.

- Tools/Workflows: “Mesh-Collider MPM Plugin” for Warp/PyTorch or C++ simulators; unit tests and API examples for cloth/soft-body setups.

- Dependencies/Assumptions: Maintenance and compatibility with host simulators; licensing and documentation.

- Creator Economy (Photoreal Avatars for Social Media/Advertising)

- What: Produce high-fidelity avatars with realistic garments for ad spots and influencer content; leverage free-view visuals for campaigns.

- Tools/Workflows: Studio capture sessions; semi-automated pipeline with presets for common fabrics; 3DGS render passes.

- Dependencies/Assumptions: Multi-view setups; post-production expertise; brand approvals and IP management.

Long-Term Applications

These applications require further research, scaling, or development (e.g., real-time constraints, single-view capture, differentiability, deployment infrastructure).

- Real-Time Telepresence with Physically Accurate Garments (AR/VR/Communication)

- What: Live avatars on consumer devices with robust cloth dynamics during calls or virtual meetings.

- Tools/Workflows: “Realtime MPM+3DGS Engine” with hardware acceleration; incremental physics updates; motion retargeting from body tracking.

- Dependencies/Assumptions: Significant optimization; reduced latency; efficient collision handling; edge GPU or specialized hardware.

- Monocular/Smartphone Capture (Consumer Onboarding)

- What: Turn single-camera videos into animatable, photoreal avatars; democratize capture beyond studio rigs.

- Tools/Workflows: “Monocular MPMAvatar” combining stronger priors, learned reconstruction, and physics post-fitting; SLAM-based multi-pass capture.

- Dependencies/Assumptions: Improved mesh tracking under sparse views; robust estimation of SMPL-X; occlusion handling; domain adaptation.

- Virtual Try-On and Fit Assessment (E-commerce/Retail)

- What: Personalized avatars with accurate garment dynamics for try-on, comfort prediction, and visual fit evaluation.

- Tools/Workflows: “Try-On Service” that maps retailer garment assets to user avatars; parameterized fabrics; body-shape estimation; physics retargeting.

- Dependencies/Assumptions: Standardized material parameter libraries; ease of garment digitization; privacy/security for user body data.

- Differentiable Physics for Closed-Loop Control (Robotics)

- What: Use differentiable MPM-like models for planning and control of cloth manipulation (e.g., folding, dressing assistance).

- Tools/Workflows: “Differentiable Cloth Controller” integrating gradient-based policy updates; simulation-to-real calibration.

- Dependencies/Assumptions: Differentiable solvers with stable gradients; efficient collision differentiability; accurate contact/friction modeling.

- Healthcare and Biomechanics (Cloth-Aware Motion Analysis)

- What: Improve gait and posture analysis by accounting for garment-induced occlusions and dynamics; model patient-specific interactions.

- Tools/Workflows: “Cloth-Aware Motion Lab” where patient captures produce avatars; quantitative metrics separated from garment artifacts.

- Dependencies/Assumptions: Clinical validation; robust estimation under varied clothing; regulatory compliance (data privacy).

- Live Performance and Interactive Installations (Events/Arts)

- What: Real-time interactive digital doubles with realistic cloth on stage or in galleries.

- Tools/Workflows: “Interactive Avatar Stage” with sensor fusion (IMUs/cameras); GPU clusters; on-the-fly rendering pipelines.

- Dependencies/Assumptions: Real-time constraints; robust tracking; content moderation and fail-safes for stability.

- Smart Retail and Mirrors (In-Store Experiences)

- What: Interactive mirrors that simulate realistic garment drape on customers’ avatars.

- Tools/Workflows: “Smart Mirror Physics” combining rapid body scanning with material libraries; local or edge compute.

- Dependencies/Assumptions: Fast capture and calibration; lighting variability; bandwidth constraints; user privacy.

- Industry Standards and Policy (Digital Garment Parameters and Avatar Ethics)

- What: Standardize fabric material parameters (E, ρ, anisotropy) and avatar provenance/watermarking to prevent misuse and ensure interoperability.

- Tools/Workflows: “Material Parameter Registry” linked to garment SKUs; “Avatar Provenance Toolkit” for traceable renders.

- Dependencies/Assumptions: Industry consensus; standard bodies engagement; compliance frameworks for IP/privacy.

- Media Preservation and Talent IP (Digital Doubles)

- What: Archive actors’ avatars and garments for future productions and licensing.

- Tools/Workflows: “Digital Double Archive” with parameterized garments and motion libraries; legal/IP frameworks.

- Dependencies/Assumptions: Long-term storage; rights management; evolving legal guidelines for posthumous use.

- Advanced Training Simulations (Safety/Industrial Training)

- What: Physics-faithful avatars improve realism in training scenarios where garments affect task performance (e.g., PPE, firefighters).

- Tools/Workflows: “Cloth-Aware Training Sim” integrating avatars with environment physics and equipment models.

- Dependencies/Assumptions: Real-time feasibility; validated transfer of training effectiveness; sector-specific certification.

Cross-Cutting Assumptions and Dependencies

- Input quality: Multi-view recordings and accurate SMPL-X estimation are critical; single-view and in-the-wild scenarios need further research.

- Compute budgets: MPM simulation and 3DGS rendering are GPU-intensive; many applications will be offline initially.

- Material modeling: Anisotropic cloth parameters must be calibrated per fabric; inverse physics may underperform on highly nonlinear textiles without richer models.

- Collision handling: Mesh-based collider algorithm improves robustness but may still need tuning in extreme self-contact or fast motions.

- Generalization: Zero-shot interaction is promising but must be validated across broader object types and interaction speeds; learned components (quasi-shadowing) depend on training data domain.

- Integration: Pipelines must bridge DCC tools, engines, and simulators; licensing (SMPL-X) and data governance (avatars of people) must be addressed.

Glossary

- 3D Gaussian Splatting: A point-based rendering technique that uses anisotropic 3D Gaussians for fast, high-fidelity novel view synthesis. "3D Gaussian Splatting with quasi-shadowing"

- 3D Gaussian Splats: The explicit set of Gaussian primitives (positions, covariances, colors) used to model and render scenes/avatars. "3D Gaussian Splats~\cite{kerbl20233d}"

- alpha-blending: An image compositing method that accumulates colors using opacity along a ray. "via α-blending:"

- ambient occlusion: A shading approximation that darkens regions with limited exposure to ambient light to convey contact and concavities. "ambient occlusion features"

- anisotropic constitutive model: A material model whose stress–strain response depends on direction, enabling different stiffness in-plane vs. normal to cloth. "anisotropic constitutive model~\cite{jiang2017anisotropic}"

- barrier terms: Optimization penalties that prevent interpenetration by making configurations near contact energetically prohibitive. "barrier terms"

- B-Spline weights: Basis-function weights from B-splines used for smooth interpolation/transfer of quantities to grid nodes. "B-Spline weights"

- C-IPC: A cloth-specific extension of the Incremental Potential Contact method for robust, energy-based collision handling. "C-IPC~\cite{li2020codimensional}"

- Cauchy stress tensor: A continuum mechanics tensor representing internal forces per unit area within a deforming material. "cauchy stress tensor"

- Chamfer Distance (CD): A bidirectional distance between point sets used to evaluate geometric accuracy. "Chamfer Distance (CD)"

- codimensional manifold: A lower-dimensional surface embedded in a higher-dimensional space (e.g., 2D cloth in 3D). "codimensional manifold structure"

- Continuous Collision Detection (CCD): A method that detects collisions over a time interval to avoid tunneling, crucial for thin, fast-moving geometry. "Continuous Collision Detection (CCD)"

- deformation gradient: A local linear map describing how material neighborhoods deform from rest to current configuration. "deformation gradient"

- Eulerian grid: A spatially fixed grid on which continuum quantities (e.g., momentum) are updated in grid-based simulation. "Eulerian grid"

- F-Score: The harmonic mean of precision and recall at a chosen distance threshold to assess surface reconstruction quality. "F-Score"

- feedforward velocity projection: A collision-resolution step that projects velocities onto a tangent space to prevent interpenetration. "via feedforward velocity projection."

- finite-difference approach: A numerical method that approximates derivatives by sampling function values at small perturbations. "finite-difference approach."

- Graph Neural Network (GNN): A neural architecture operating on graph-structured data, used to learn dynamics from mesh or particle graphs. "Graph Neural Network (GNN)-based simulators"

- IPC (Incremental Potential Contact): A variational, energy-based collision handling framework ensuring non-penetration via contact potentials. "IPC~\cite{li2020incremental}"

- Jacobian (of the projective transformation): The matrix of partial derivatives relating changes in 3D to 2D under projection, used for covariance projection. "the Jacobian of the projective transformation"

- Lagrangian mesh: A mesh that moves with the material to track intrinsic directions and deformations. "Lagrangian mesh"

- Lagrangian particles: Material-following particles that carry mass and state in hybrid particle–grid methods like MPM. "Lagrangian particles"

- Learned Perceptual Image Patch Similarity (LPIPS): A deep feature–based metric for perceptual image similarity. "Learned Perceptual Image Patch Similarity (LPIPS)"

- level set: An implicit surface representation where a function’s zero level defines geometry; useful for analytic colliders. "dynamic level set"

- Linear Blend Skinning: A skeletal animation technique that blends bone transformations to deform meshes. "Linear Blend Skinning"

- material derivative: The time derivative following the motion of a material point in a flow. "material derivative"

- Material Point Method (MPM): A hybrid particle–grid continuum simulation method well-suited for large deformations and robust collisions. "Material Point Method (MPM)"

- mesh-to-grid transfer: The process of transferring mesh-defined quantities (e.g., normals/velocities) to grid nodes for simulation. "mesh-to-grid transfer"

- Peak Signal-to-Noise Ratio (PSNR): A pixel-wise fidelity metric in decibels comparing rendered and reference images. "Peak Signal-to-Noise Ratio (PSNR)"

- Poisson's ratio: A material parameter relating lateral contraction to axial extension (spelled "Possion's ratio" in the source text). "Possion's ratio"

- Position-Based Dynamics (PBD): A real-time simulation framework that enforces constraints directly on positions for stability. "Position-Based Dynamics (PBD)"

- QR-decomposition: A matrix factorization into an orthonormal matrix and an upper triangular matrix, used to separate rotation. "QR-decomposition"

- quasi-shadowing: A learned shading mechanism that approximates self-shadowing effects to enhance realism. "quasi-shadowing"

- quaternion: A 4D rotation representation used to parameterize 3D orientations robustly. "a quaternion "

- rest geometry: The undeformed reference configuration relative to which strains and stresses are computed. "rest geometry"

- SMPL-X: A parametric human body model with articulated body, hands, and face used to drive animations. "SMPL-X~\cite{SMPL-X:2019}"

- spherical harmonics: Orthogonal basis functions on the sphere used to represent view-dependent color/lighting. "spherical harmonics~\cite{kerbl20233d}"

- strain-energy density function: The stored elastic energy per unit volume as a function of deformation. "strain-energy density function"

- Structural Similarity Index Measure (SSIM): An image metric assessing structural and contrast similarity between images. "Structural Similarity Index Measure (SSIM)"

- Variational integrators: Time-stepping schemes derived from discrete variational principles, preserving physical invariants. "Variational integrators"

- XPBD: Extended Position-Based Dynamics introducing compliance for more physically plausible constraint behavior. "XPBD~\cite{xpbd, sasaki2024pbdyg}"

- Young's modulus: A stiffness parameter defining the linear elastic response along a material direction. "Young's modulus "

- zero-shot: Generalization to unseen conditions without additional training or tuning. "zero-shot generalizable"
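Two of the geometry metrics defined in this glossary, Chamfer Distance and F-Score, can be computed on small point sets as sketched below. This is a brute-force illustration under stated assumptions: the function names are hypothetical, distances are unsquared and averaged per direction, and the threshold is arbitrary; published evaluations often use squared distances or different normalizations.

```python
import math


def nearest_distances(source, target):
    """Distance from each source point to its closest target point."""
    return [min(math.dist(p, q) for q in target) for p in source]


def chamfer_distance(a, b):
    """Mean nearest-neighbour distance from a to b plus the same from b to a."""
    d_ab = nearest_distances(a, b)
    d_ba = nearest_distances(b, a)
    return sum(d_ab) / len(d_ab) + sum(d_ba) / len(d_ba)


def f_score(predicted, reference, threshold):
    """Harmonic mean of precision and recall at a distance threshold."""
    d_pred = nearest_distances(predicted, reference)
    d_ref = nearest_distances(reference, predicted)
    precision = sum(d <= threshold for d in d_pred) / len(d_pred)
    recall = sum(d <= threshold for d in d_ref) / len(d_ref)
    if precision + recall == 0.0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


# Two tiny point sets: one point matches exactly, the other is off by 0.5.
predicted = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.5)]
cd = chamfer_distance(predicted, reference)
fs_tight = f_score(predicted, reference, threshold=0.1)
fs_loose = f_score(predicted, reference, threshold=0.5)
```

Lower Chamfer Distance and higher F-Score both indicate a closer match. The F-Score depends strongly on the chosen threshold, as the two calls above illustrate.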