Triangle Splatting+: Differentiable Rendering with Opaque Triangles (2509.25122v1)

Abstract: Reconstructing 3D scenes and synthesizing novel views has seen rapid progress in recent years. Neural Radiance Fields demonstrated that continuous volumetric radiance fields can achieve high-quality image synthesis, but their long training and rendering times limit practicality. 3D Gaussian Splatting (3DGS) addressed these issues by representing scenes with millions of Gaussians, enabling real-time rendering and fast optimization. However, Gaussian primitives are not natively compatible with the mesh-based pipelines used in VR headsets and real-time graphics applications. Existing solutions attempt to convert Gaussians into meshes through post-processing or two-stage pipelines, which increases complexity and degrades visual quality. In this work, we introduce Triangle Splatting+, which directly optimizes triangles, the fundamental primitive of computer graphics, within a differentiable splatting framework. We formulate a triangle parametrization that enables connectivity through shared vertices, and we design a training strategy that enforces opaque triangles. The final output is immediately usable in standard graphics engines without post-processing. Experiments on the Mip-NeRF360 and Tanks & Temples datasets show that Triangle Splatting+ achieves state-of-the-art performance in mesh-based novel view synthesis. Our method surpasses prior splatting approaches in visual fidelity while remaining efficient and fast to train. Moreover, the resulting semi-connected meshes support downstream applications such as physics-based simulation or interactive walkthroughs. The project page is https://trianglesplatting2.github.io/trianglesplatting2/.

Explain it Like I'm 14

Overview

This paper is about a new way to turn a bunch of photos of a real scene into a 3D model that looks good and runs fast in game engines and VR. The method is called Triangle Splatting+, and it builds the scene out of solid, colored triangles—the same kind of shapes games and graphics already use. The big idea is to train these triangles directly from images so that, at the end, you get a ready-to-use 3D mesh without extra steps.

Key Objectives

Here are the main questions the paper tries to answer:

- How can we learn a triangle-based 3D model straight from photos so it looks great from new camera views?

- How do we make triangles solid (opaque) and well-connected, so the mesh works naturally in game engines?

- Can we keep training smooth and stable while turning soft, semi-transparent triangles into sharp, solid ones?

- Does this beat other methods on quality and speed, and does it work for things like physics and editing in games?

Methods and Approach

Think of building a 3D scene like making a sculpture out of many tiny triangle “tiles.” The paper explains how to start with rough tiles and gradually refine them until they closely match the scene.

Step 1: Start from photos and a rough 3D sketch

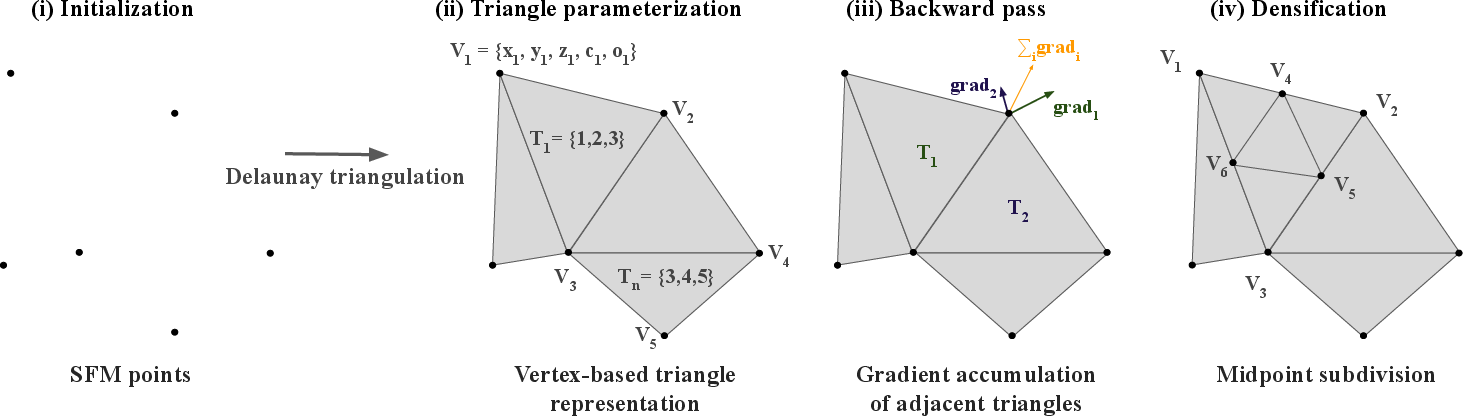

- The authors use Structure-from-Motion (SfM): a standard tool that takes many photos and figures out where the cameras were and where key 3D points are.

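- They then run a 3D Delaunay triangulation on those points. In 3D this fills the space between the points with tetrahedra (little triangular pyramids), and every unique triangle face of those tetrahedra becomes part of the starting mesh, like a rough net over the scene.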
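The "tetrahedra to triangles" part of this initialization can be sketched in a few lines of Python. This is an illustration under assumptions, not the authors' code: it takes tetrahedra as 4-tuples of vertex indices (the form a 3D Delaunay routine typically returns) and keeps each face once, even when two tetrahedra share it. The helper name `unique_triangles` is hypothetical.

```python
from itertools import combinations


def unique_triangles(tetrahedra):
    """Collect every triangular face of a tetrahedralization exactly once.

    Each tetrahedron (4 vertex indices) has 4 faces. A face shared by two
    neighboring tetrahedra is deduplicated by sorting its vertex indices.
    """
    faces = set()
    for tet in tetrahedra:
        for face in combinations(tet, 3):  # the 4 faces of this tetrahedron
            faces.add(tuple(sorted(face)))
    return sorted(faces)


# Two tetrahedra glued along the face (1, 2, 3): 4 + 4 - 1 = 7 unique faces.
tets = [(0, 1, 2, 3), (1, 2, 3, 4)]
print(len(unique_triangles(tets)))  # 7
```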

Step 2: Represent triangles with shared corners

- Each corner (vertex) stores:

  - Position (where it is in 3D)

  - Color (what it looks like, stored as spherical-harmonic coefficients so it can change with viewing direction)

  - Opacity (how solid it is; eventually fully solid)

- Each triangle points to three shared vertices. This makes triangles connect naturally, like Lego pieces that click together at the same studs.
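A minimal sketch of this shared-vertex (indexed) layout follows. It assumes plain RGB colors instead of the spherical-harmonic coefficients the paper stores, and the class and method names are hypothetical. The triangle-opacity rule, the minimum of the three vertex opacities, is the one noted in the knowledge-gaps list (`min(o_i, o_j, o_k)`).

```python
from dataclasses import dataclass


@dataclass
class SharedVertexMesh:
    """Indexed triangle mesh: attributes live on vertices, triangles store indices.

    Hypothetical sketch; colors are RGB here rather than SH coefficients.
    """
    positions: list  # one (x, y, z) tuple per vertex
    colors: list     # one (r, g, b) tuple per vertex
    opacities: list  # one opacity in [0, 1] per vertex
    triangles: list  # one (i, j, k) vertex-index triple per triangle

    def triangle_opacity(self, t):
        # Triangle opacity is the minimum of its three vertex opacities.
        i, j, k = self.triangles[t]
        return min(self.opacities[i], self.opacities[j], self.opacities[k])

    def corners(self, t):
        # Positions are looked up through shared indices, so editing one
        # vertex moves every triangle that references it.
        return [self.positions[v] for v in self.triangles[t]]


# Two triangles sharing the edge (1, 2).
mesh = SharedVertexMesh(
    positions=[(0, 0, 0), (1, 0, 0), (0, 1, 0), (1, 1, 0)],
    colors=[(1, 0, 0)] * 4,
    opacities=[0.9, 0.5, 0.8, 1.0],
    triangles=[(0, 1, 2), (1, 3, 2)],
)
print(mesh.triangle_opacity(0), mesh.triangle_opacity(1))  # 0.5 0.5
mesh.positions[1] = (1, 0, 0.2)  # move the shared vertex once...
print(mesh.corners(0)[1] == mesh.corners(1)[0])  # ...both triangles see it: True
```

Because a triangle only stores indices, a gradient step on one vertex position updates all triangles around it, which is what keeps neighbors attached during training.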

Step 3: “Differentiable rendering” (learning by comparing images)

- They render the triangles into the training images and compare the result to the real photos.

- Differentiable rendering means the computer can measure the difference and “nudge” the triangle positions and colors to make the render look more like the real photos. Think of it as getting feedback signals and adjusting the triangles bit by bit.
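To make this step concrete, here is the forward pass of front-to-back alpha blending for a single pixel, using the "volume rendering weight" (transmittance times opacity) described in the glossary. It is a plain-Python sketch under assumptions: the fragment format `(depth, alpha, color)` is hypothetical, and there is no autodiff or GPU tiling. A real differentiable renderer would record these same operations in an autodiff framework so that gradients reach vertex positions and colors.

```python
def composite_front_to_back(fragments):
    """Alpha-composite (depth, alpha, rgb) fragments that cover one pixel.

    Returns the pixel color and the blending weight T * alpha of each
    fragment (in depth order), where T is the light not yet absorbed.
    """
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0
    weights = []
    for _, alpha, rgb in sorted(fragments, key=lambda f: f[0]):  # nearest first
        w = transmittance * alpha
        weights.append(w)
        for c in range(3):
            color[c] += w * rgb[c]
        transmittance *= 1.0 - alpha
    return color, weights


# Two half-transparent fragments: red in front, blue behind.
print(composite_front_to_back([(2.0, 0.5, (0, 0, 1)), (1.0, 0.5, (1, 0, 0))]))
# ([0.5, 0.0, 0.25], [0.5, 0.25])
```

With fully opaque fragments (alpha = 1), the nearest fragment receives weight 1 and everything behind it receives weight 0. This is why, at the end of training, each pixel comes from exactly one triangle.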

Step 4: Smooth-to-sharp training

- Early in training, triangles are soft and a bit see-through. This helps learning because small changes affect the image smoothly—like using a soft brush.

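- Over time, they “anneal” (gradually change) the settings: a smoothness parameter σ shrinks so triangle edges become sharp, and a minimum opacity (the “opacity floor”) rises until triangles are fully opaque. By the end, they are solid surfaces, ready for game engines.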
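One way to picture the soft-to-sharp transition is a window function built from the triangle's 2D signed distance field. The sketch below assumes the form I(p) = ReLU(φ(p) / φ(s))^σ. Here φ is the image-space SDF (negative inside), s is the incenter, and σ > 0. This form is consistent with the glossary entries (SDF, incenter, window function) but the exact equation is not reproduced in this text. The geometric σ decay and the opacity-floor helper are hypothetical schedules, not the paper's values.

```python
import math


def edge_lines(tri):
    """Return (nx, ny, d) per edge so that nx*x + ny*y + d is the signed
    distance to that edge's line, negative on the triangle's inner side."""
    (x0, y0), (x1, y1), (x2, y2) = tri
    # Flip normals for clockwise input so they always point outward.
    s = 1.0 if (x1 - x0) * (y2 - y0) - (x2 - x0) * (y1 - y0) > 0 else -1.0
    lines = []
    for (ax, ay), (bx, by) in ((tri[0], tri[1]), (tri[1], tri[2]), (tri[2], tri[0])):
        length = math.hypot(bx - ax, by - ay)
        nx, ny = s * (by - ay) / length, -s * (bx - ax) / length
        lines.append((nx, ny, -(nx * ax + ny * ay)))
    return lines


def triangle_sdf(p, lines):
    # Max over edge distances: negative inside, zero on the boundary, positive outside.
    return max(nx * p[0] + ny * p[1] + d for nx, ny, d in lines)


def incenter(tri):
    # Vertices weighted by the lengths of their opposite sides.
    pa, pb, pc = tri
    a, b, c = math.dist(pb, pc), math.dist(pc, pa), math.dist(pa, pb)
    return tuple((a * u + b * v + c * w) / (a + b + c) for u, v, w in zip(pa, pb, pc))


def window(p, tri, sigma):
    """Assumed window ReLU(phi(p) / phi(incenter)) ** sigma, with sigma > 0:
    1 at the incenter, 0 on and outside the edges; small sigma -> sharp edges."""
    lines = edge_lines(tri)
    ratio = triangle_sdf(p, lines) / triangle_sdf(incenter(tri), lines)
    return max(0.0, ratio) ** sigma


def sigma_at(step, total_steps, sigma_start=1.0, sigma_end=1e-3):
    # Hypothetical geometric decay of sigma over training.
    t = min(step / total_steps, 1.0)
    return sigma_start * (sigma_end / sigma_start) ** t


def floored_opacity(opacity, floor):
    # Opacity floor: the learned opacity is never allowed below the scheduled floor.
    return max(opacity, floor)


tri = [(0, 0), (4, 0), (0, 3)]  # 3-4-5 triangle, incenter (1, 1)
for sigma in (1.0, 0.01):
    print(sigma, round(window((2, 0.5), tri, sigma), 4))  # 1.0 0.5, then 0.01 0.9931
```

At σ = 1 the window fades linearly from the incenter toward the edges (soft brush). At σ = 0.01 almost every interior point is close to 1 (a sharp, solid triangle).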

Step 5: Pruning and densification

- Pruning: remove triangles that don’t help the image much or that are hidden behind others. This keeps the mesh clean and avoids artifacts when everything becomes opaque.

- Densification: add detail by splitting triangles into smaller ones where more precision is needed. Picture cutting a big triangle into four smaller ones to capture fine edges or textures.
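Both operations can be sketched on an indexed mesh. In this Python sketch, midpoint subdivision caches one new vertex per split edge so that neighboring splits stay connected. Candidates are chosen with one Bernoulli draw per triangle, using its opacity as the success probability. That probability rule is an assumption; the knowledge-gaps list only says sampling is "from opacity". Pruning keeps triangles whose maximum blending weight reaches a threshold. All function names and the threshold value are hypothetical.

```python
import random


def midpoint_subdivide(positions, triangles, selected):
    """Split every triangle index in `selected` into four via its edge midpoints.

    Midpoints are cached per undirected edge, so two selected neighbors share
    the new vertex and stay connected. An unselected neighbor keeps its old
    edge, which leaves a T-junction (one source of "semi-connected" meshes).
    """
    positions = list(positions)
    midpoint_of = {}

    def midpoint(a, b):
        key = (min(a, b), max(a, b))
        if key not in midpoint_of:
            pa, pb = positions[a], positions[b]
            positions.append(tuple((ca + cb) / 2 for ca, cb in zip(pa, pb)))
            midpoint_of[key] = len(positions) - 1
        return midpoint_of[key]

    new_triangles = []
    for t, (i, j, k) in enumerate(triangles):
        if t not in selected:
            new_triangles.append((i, j, k))
            continue
        ij, jk, ki = midpoint(i, j), midpoint(j, k), midpoint(k, i)
        # Three corner triangles plus the central one, same winding as the parent.
        new_triangles += [(i, ij, ki), (ij, j, jk), (ki, jk, k), (ij, jk, ki)]
    return positions, new_triangles


def sample_for_densification(opacities, rng):
    # One Bernoulli draw per triangle, success probability = its opacity (assumed rule).
    return {t for t, o in enumerate(opacities) if rng.random() < o}


def prune_by_weight(triangles, max_weights, tau):
    # Keep triangles whose largest blending weight over all views reaches tau.
    return [tri for tri, w in zip(triangles, max_weights) if w >= tau]


rng = random.Random(0)
positions = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 1.0, 0.0)]
triangles = [(0, 1, 2), (1, 3, 2)]
selected = sample_for_densification([1.0, 1.0], rng)  # opacity 1 -> always selected
positions, triangles = midpoint_subdivide(positions, triangles, selected)
print(len(positions), len(triangles))  # 9 8 (the shared edge gets one midpoint)
```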

Step 6: Losses and quality tricks

- They use image-based losses (like measuring pixel differences and structural similarity) to guide learning.

- They supervise surface normals (which way a surface faces) to keep geometry realistic.

- They use anti-aliasing by rendering a bit bigger and downsampling, which reduces jagged edges.
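For an integer scale factor, area interpolation reduces to averaging each non-overlapping block of pixels. The sketch below shows that block average on a grayscale image stored as a list of rows. The supersampling factor a renderer would use is an assumption here, not a value from the paper.

```python
def area_downsample(image, factor):
    """Average non-overlapping factor x factor blocks (area interpolation for an
    integer factor). `image` is a list of rows of floats; its height and width
    must be divisible by `factor`."""
    h, w = len(image), len(image[0])
    if h % factor or w % factor:
        raise ValueError("image size must be divisible by factor")
    out = []
    for y in range(0, h, factor):
        row = []
        for x in range(0, w, factor):
            block = [image[y + dy][x + dx] for dy in range(factor) for dx in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


# A hard, jagged edge rendered at 2x becomes a fractional (smoothed) value.
print(area_downsample([[0, 0, 1, 1], [0, 1, 1, 1]], 2))  # [[0.25, 1.0]]
```

The averaged value 0.25 acts like partial pixel coverage. Opaque triangles need this because, without it, their edges would flip between fully on and fully off from pixel to pixel.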

Main Findings and Why They Matter

Here are the main results:

- Better mesh-based quality: Triangle Splatting+ makes sharper, more faithful 3D meshes than other methods that convert fuzzy blobs (“Gaussians”) into meshes later. It also improves over older triangle methods that relied on semi-transparent triangles.

- Fast training and fast running: It trains quickly (tens of minutes on a high-end GPU) and produces meshes that render extremely fast (hundreds of frames per second on a laptop), because the final triangles are solid and don’t need complicated blending.

- Game-engine ready: The output is a standard triangle mesh with colors—no extra “post-processing” is needed. You can drop it into Unity or other engines and it just works.

- Supports physics and editing:

  - Physics: Because triangles are solid, you can use them for collisions and walkable environments (like in video games).

  - Object editing: It’s easy to remove or extract objects. Each pixel comes from exactly one triangle, so you can map a 2D object mask back to the triangles that form that object and delete or move them.

- Ablation studies (tests of the design choices): They show that carefully controlling the transition from soft to hard triangles and pruning at the right time are crucial to the final quality.

- Limitations: Backgrounds with few photos (sparse data) can be less accurate, and transparent objects (like glass) are hard to handle with only opaque triangles.
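Because each pixel of the final opaque render comes from exactly one triangle, a renderer can output a triangle-ID buffer alongside the image. The Python sketch below maps 2D object masks to triangles under that assumption. It expects one ID buffer per view (with -1 for background) and a boolean mask for the same view, for example from SAM2. The majority-vote threshold `min_fraction` is a hypothetical safeguard against mask errors, not something the paper specifies.

```python
from collections import Counter


def triangles_in_mask(id_buffers, masks, min_fraction=0.5):
    """Select triangles whose visible pixels fall mostly inside 2D object masks.

    id_buffers[v][y][x] is the index of the single triangle seen at pixel (x, y)
    in view v (or -1 for background); masks[v][y][x] is True on the object.
    """
    inside, total = Counter(), Counter()
    for ids, mask in zip(id_buffers, masks):
        for id_row, mask_row in zip(ids, mask):
            for tri, on_object in zip(id_row, mask_row):
                if tri < 0:
                    continue  # background pixel: no triangle to vote for
                total[tri] += 1
                if on_object:
                    inside[tri] += 1
    return {t for t in total if inside[t] / total[t] >= min_fraction}


# One 2x3 view: triangles 0 and 2 lie inside the mask, triangle 1 does not.
ids = [[[0, 0, 1], [2, 1, -1]]]
masks = [[[True, True, False], [True, False, True]]]
print(triangles_in_mask(ids, masks))  # {0, 2}
```

Deleting the returned indices removes the object. Writing them to a separate mesh extracts it. Triangles never seen in any view receive no votes, which is one way holes can appear behind removed objects.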

Implications and Impact

Triangle Splatting+ bridges the gap between modern “learned” 3D methods and the practical world of graphics and games:

- It delivers meshes that are both high-quality and immediately usable, removing extra conversion steps.

- It enables fast, interactive uses like VR, AR, and video game scenes with real physics.

- It makes scene editing simpler, since the model is a standard mesh with clear triangle ownership of pixels.

- Overall, it moves 3D scene reconstruction toward real-world, plug-and-play applications—combining the smarts of AI training with the reliability of traditional computer graphics.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

The paper makes strong progress toward mesh-compatible differentiable rendering with opaque triangles, but it leaves several important questions unresolved. Future research could address the following concrete gaps:

- Formal analysis of the differentiable window function: No theoretical characterization of the gradients, bias, and stability introduced by the signed-distance-based indicator and the σ-annealing schedule; compare against alternative differentiable rasterization kernels (e.g., SoftRasterizer variants) and quantify convergence behavior.

- Sensitivity of training schedules: The hard-coded σ decay, opacity floor schedule, and pruning thresholds (e.g., 5k-iteration hard prune, To≈0.2, τ_prune) lack sensitivity studies and automated selection; provide robust, scene-agnostic schedules or adaptive controllers with principled stopping/threshold criteria.

- Opacity aggregation choice: Triangle opacity is set to min(o_i, o_j, o_k) without justification; evaluate alternative aggregations (e.g., product, average, learned aggregation) and their effects on gradient flow, occlusion correctness, and final image quality.

- Full connectivity and watertightness: The method yields a “semi-connected” mesh and does not guarantee manifoldness, watertightness, or topological consistency; develop edge/vertex stitching, merging, and topology-repair procedures to produce manifold, watertight surfaces compatible with physics and PBR pipelines.

- Geometric quality evaluation: No quantitative geometry assessment (e.g., Chamfer distance, normal consistency, self-intersection rate) against ground-truth scans; add geometry-centric metrics to complement image-based metrics.

- Densification strategy: Midpoint subdivision driven by simple Bernoulli sampling from opacity is likely suboptimal; design error-driven, anisotropic, curvature-aware refinement (e.g., longest-edge split, edge-collapse/flip operations), topological change handling, and termination criteria tied to photometric residuals and geometric error.

- Decimation and simplification: The pipeline prunes triangles but does not perform structured mesh simplification (e.g., QEM-based decimation) to reduce redundancy while preserving fidelity and connectivity.

- Pose robustness and joint optimization: The approach assumes reliable SfM calibration; quantify robustness to pose/intrinsic errors and investigate joint optimization of camera parameters to mitigate reconstruction artifacts.

- Memory footprint and scalability: With 51 parameters per vertex and ~2M vertices, memory requirements are substantial; profile memory and runtime scaling, compare to 3DGS at similar quality, and explore compression (quantization, learned codes), streaming, and multi-resolution LODs.

- BRDF/material capture and relighting: Colors are per-vertex (with SH) and not physically based; provide a pathway to PBR-compatible albedo/normal/roughness/metalness estimation, UV parametrization, and consistent shading under novel lighting, especially since the paper claims support for relighting.

- View-dependent effects and specularity: Degree-3 spherical harmonics per vertex may not capture sharp specularities and complex view-dependent appearance; evaluate higher-order basis, neural reflectance fields on the mesh, or microfacet BRDFs.

- Transparent and refractive objects: The method explicitly struggles with transparent media (glass, liquids); propose hybrid representations (e.g., volumetric components, layered microgeometry, transmissive triangles) and differentiable handling of refraction/transmission.

- Generalization outside training orbit: Quality degrades outside the training-view orbit; investigate priors (geometry regularization, visibility-aware losses), broader sampling strategies, and consistency constraints to improve extrapolation.

- Anti-aliasing and edge quality: Downsampling-based anti-aliasing may be insufficient for sharp, opaque triangle edges; study analytic coverage masks, MSAA-like differentiable techniques, and edge-aware losses to reduce shimmering and jaggies.

- Z-ordering and rasterization details: Training still requires depth sorting and accumulation; provide a detailed complexity analysis, GPU implementation specifics, and failure cases (z-fighting, coplanar primitives) to guide practical deployment.

- Physics robustness: While Unity colliders can be attached, no quantitative evaluation of collision reliability (penetrations, tunneling), stability, or performance under interaction; test against standard physics benchmarks and analyze non-watertight impacts.

- Object extraction evaluation: The SAM2-based 2D-to-3D triangle mapping is demonstrated qualitatively; quantify precision/recall under occlusion, thin structures, and multi-view consistency, and compare to Gaussian-based segmentation methods.

- Ray tracing integration: The paper claims natural support for ray tracing with triangles but provides no performance or correctness evaluation; benchmark BVH build time, ray traversal cost, and rendering artifacts vs. standard mesh pipelines.

- Comparisons to mesh-based differentiable renderers: No direct comparison to SoftRasterizer, DMesh/DMesh++, or modern differentiable mesh methods on real scenes; evaluate image quality, geometry accuracy, runtime, and ease of engine integration.

- Normal supervision source: Normals are supervised using a learned predictor (Hu, 2024); assess how normal estimation quality affects geometry, and consider self-consistent normal derivation from the mesh to avoid bias.

- Robustness to foliage/high-frequency textures: Triangulated opaque surfaces may struggle with thin, semi-transparent structures (foliage, hair, fences); measure failure modes and explore layered meshes or alpha-masked textures for these cases.

- UV parametrization and texturing: The output uses vertex colors, which limits compatibility with standard game asset pipelines; develop UV unwrapping and texture baking directly from the learned representation to enable downstream editing and PBR shading.

- Threshold-induced over-pruning: The blending-weight-based pruning may remove thin, partially occluded surfaces needed for completeness; analyze false positives and introduce multi-view occlusion-aware safeguards.

- Trade-off quantification (opaque vs. semi-transparent): Ablations show free opacity yields better visual metrics, but the paper chooses full opacity for game-engine compatibility and speed; quantify the quality–efficiency trade-off across scenes, resolutions, and hardware, and include user studies.

- Large-scale outdoor scenes and skies: The method suggests a triangulated sky dome as future work; design and evaluate sky/environment representations (HDR domes, distant geometry) and camera far-field handling for outdoor scenes.

- Runtime claims and reproducibility: The stated 400 FPS on a “consumer laptop” lacks resolution, scene complexity, and hardware details; publish standardized runtime benchmarks (training/inference) with reproducible configurations across GPUs/CPUs.

- Self-intersections and degenerate triangles: Midpoint subdivision and pruning may introduce geometric degeneracies; detect and repair self-intersections, flipped normals, and sliver triangles, and report their frequency and impact.

- Topology control: No mechanism to explicitly preserve or enforce topology (e.g., prevent holes or ensure closed surfaces where appropriate); add topology-aware losses or constraints during training/densification.

- Data diversity and dynamic content: Only static scenes from Mip-NeRF360 and T&T are used; extend to dynamic scenes, varied lighting/exposure, and handheld/VR capture to stress-test robustness and generality.
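Several of these gaps (watertightness, topology control, degenerate geometry) start from the same measurement: how many triangles use each edge. Below is a hedged diagnostic sketch, not part of the paper. In a closed 2-manifold mesh every edge is shared by exactly two triangles. Edges used once are open boundaries (holes). Edges used three or more times are non-manifold. Passing this check is necessary but not sufficient for watertightness, since it ignores orientation, non-manifold vertices, and self-intersections.

```python
from collections import Counter


def edge_report(triangles):
    """Count undirected edges by how many triangles use them."""
    counts = Counter()
    for i, j, k in triangles:
        for a, b in ((i, j), (j, k), (k, i)):
            counts[(min(a, b), max(a, b))] += 1
    return {
        "edges": len(counts),
        "boundary": sum(1 for c in counts.values() if c == 1),      # holes / open borders
        "non_manifold": sum(1 for c in counts.values() if c > 2),   # 3+ triangles on one edge
    }


# The closed surface of a tetrahedron passes; a lone triangle is all boundary.
print(edge_report([(0, 1, 2), (0, 1, 3), (0, 2, 3), (1, 2, 3)]))
# {'edges': 6, 'boundary': 0, 'non_manifold': 0}
print(edge_report([(0, 1, 2)]))  # {'edges': 3, 'boundary': 3, 'non_manifold': 0}
```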

Practical Applications

Immediate Applications

Below are actionable use cases that can be deployed now, given the paper’s output of opaque, colored, semi-connected triangle meshes that import directly into standard game/VR engines and render at real-time rates.

- Game development and XR content pipelines (software, entertainment)

- Use Triangle Splatting+ to convert multi-view photos into game-ready meshes that import directly into Unity/Unreal for real-time rendering (~400 FPS), collisions, ray tracing, and walkable scene interactions.

- Product/workflow: “Photogrammetry-to-Game Mesh” plugin (Unity/Unreal) that wraps training + export; automatic collider assignment; instant scene editing (object removal/addition).

- Assumptions/dependencies: Requires posed multi-view images and reliable SfM calibration; scenes are predominantly opaque; semi-connected mesh is sufficient for gameplay but not watertight; quality degrades outside training orbit; transparent/reflective materials remain challenging.

- VR/AR walkthroughs and interior visualization (real estate, AEC, retail, education)

- Generate interactive VR/AR walkthroughs of homes, retail floors, museum exhibits, or classrooms from smartphone captures; enable scene editing (e.g., remove clutter, add furniture).

- Product/workflow: “AR Room Capturer” mobile workflow + server training + mesh export to headset; lightweight editor for object add/remove.

- Assumptions/dependencies: Accurate camera poses and adequate view coverage; meshes are visually faithful but not certification-grade for measurements; sparse backgrounds may underperform.

- Robotics simulation environments (robotics, autonomous systems)

- Import meshes into simulators (Unity, Gazebo, Isaac Sim) to create physics-ready environments for navigation, collision testing, and manipulation planning (non-convex mesh colliders supported).

- Product/workflow: “Robotics Sim Importer” that bundles training + physics collider configuration; batch conversion pipeline for asset libraries.

- Assumptions/dependencies: Semi-connected, non-watertight meshes are sufficient for many tasks; surface semantics not provided; transparent objects (glass) and highly reflective materials are not faithfully represented.

- Rapid digital twin creation for training and demonstration (industry training, facilities ops)

- Convert workshops, labs, stores, or factory areas into interactive digital twins to train staff in safe navigation and basic procedures, with real-time performance on consumer hardware.

- Product/workflow: “Physics-ready Digital Twin” pipeline that captures, trains, and deploys to a desktop VR app; in-app ray tracing for material previews.

- Assumptions/dependencies: Not engineering-grade; safety-critical simulations may require watertightness and material properties beyond opaque color (e.g., friction, compliance).

- Object removal and extraction with mask-driven workflows (software, media, privacy)

- Use the property that each pixel is determined by a single triangle to map 2D masks (from Segment Anything 2) to sets of triangles and remove/extract objects directly in 3D without retraining.

- Product/workflow: “Mask-to-Triangle Selector” plugin integrating SAM2 to extract objects across all views; export as separate mesh assets or delete from scene.

- Assumptions/dependencies: Mask accuracy depends on segmentation model and coverage; occlusions and incomplete views can leave holes; transparent objects are difficult.

- Film/VFX set capture and previsualization (media, entertainment)

- Fast set digitization into a mesh for previz, camera blocking, and lighting plan previews; supports real-time editing and ray tracing in standard engines.

- Product/workflow: “Previz Mesh Capture” kit with training scripts + engine template; on-set object clean-up via mask-based extraction.

- Assumptions/dependencies: Requires adequate multi-view coverage; appearance is stored as per-vertex colors (SH), not as textures or full PBR materials.

- Cultural heritage and museum digitization (culture, education)

- Capture galleries and exhibits for interactive tours and in-museum AR; enable safe virtual exploration with physics-based colliders.

- Product/workflow: “Heritage Walkthrough Builder” pipeline with curator-friendly object removal/addition tools.

- Assumptions/dependencies: Not suited for transparent artifacts; background sparsity or limited viewpoints may reduce reconstruction fidelity.

- E-commerce showrooms and retail demos (retail, marketing)

- Build interactive virtual showrooms from photos; quickly remove/display seasonal items; render in real-time on consumer devices.

- Product/workflow: “Retail Scene Builder” that ties into product CMS; object extraction yields standalone product meshes for web 3D.

- Assumptions/dependencies: Requires controlled capture; lighting/material realism limited to opaque color; scale accuracy may require additional calibration.

- Education in graphics and vision (academia, edtech)

- Teach differentiable rendering, mesh-based novel view synthesis, and splatting techniques with a practical pipeline that runs fast and integrates into game engines.

- Product/workflow: Course labs based on provided PLY assets; benchmark assignments comparing mesh-based view synthesis metrics (SSIM/PSNR/LPIPS).

- Assumptions/dependencies: Access to GPUs for training; datasets with known camera poses; integration into standard teaching environments.

- Insurance and property documentation (finance, legal)

- Create fast visual records with interactive walkthroughs; remove sensitive objects (privacy) before sharing; use physics for simple hazard simulations (e.g., fall paths).

- Product/workflow: “Claim VR Kit” with capture-to-mesh export and privacy filter (mask-based removal).

- Assumptions/dependencies: Not measurement-certified; legal compliance requires transparency about limitations and removal logs.

- Privacy and compliance workflows (policy, governance)

- Redact faces or personal items by selecting and deleting their triangles; share sanitized meshes for audits or public disclosure.

- Product/workflow: “Privacy Mesh Redactor” integrating SAM2; versioning tools tracking removed asset IDs.

- Assumptions/dependencies: Masking accuracy and auditability; policies must recognize non-watertight, visual-accuracy-first outputs.

- Energy-efficient visual pipelines (energy, sustainability)

- Replace longer training/render pipelines with fast training (25–39 minutes on A100 reported) and real-time rendering on commodity devices to reduce compute cost/carbon.

- Product/workflow: “Green Photogrammetry Pipeline” metrics dashboard (time-to-train, FPS, energy).

- Assumptions/dependencies: Actual energy savings depend on resolution, scene complexity, and hardware specifics.

Long-Term Applications

These use cases require further research or development—e.g., improved connectivity/watertightness, handling of non-opaque materials, larger-scale capture, or on-device training.

- Watertight, semantically annotated meshes for engineering-grade simulations (AEC, industrial simulation)

- Deliver fully connected, watertight outputs with material/semantic labels suitable for CFD/FEA or safety-critical physics.

- Dependencies: Robust connectivity enforcement; material models beyond opaque color; better handling of sparse backgrounds.

- Transparent and reflective materials modeling (graphics, retail, automotive)

- Extend the framework to handle glass, water, mirrors with accurate appearance and interactions.

- Dependencies: New primitives/shaders or hybrid representations; revised training to avoid artifacts with non-opaque surfaces.

- Large-scale outdoor capture and urban digital twins (smart cities, infrastructure)

- City blocks reconstructed from drones/vehicles for planning, crowd flow simulations, and emergency response training.

- Dependencies: Scalable training, robust SfM in wide areas, handling variable lighting/weather, streaming-based densification.

- Dynamic and time-varying scenes (robotics, entertainment)

- Support moving objects and temporal consistency for interactive, dynamic simulations or live telepresence.

- Dependencies: Temporally coherent optimization, streaming updates, occlusion-aware segmentation/extraction.

- On-device and mobile training (consumer AR, field ops)

- Perform capture-to-mesh on smartphones/AR headsets within minutes, enabling instant scene use.

- Dependencies: Mobile GPU acceleration, energy constraints, incremental training with limited memory.

- Autonomous driving and advanced robotics simulation (mobility, safety)

- Convert real roads and facilities into physics-ready simulators for planning and perception testing at scale.

- Dependencies: Generalization beyond training orbit; improved contact and friction modeling; semantic labeling; support for sensor simulation.

- Standardization and policy frameworks for interoperable mesh outputs (policy, industry consortia)

- Define interchange formats, provenance, and privacy redaction standards for photogrammetry-to-mesh pipelines.

- Dependencies: Multi-stakeholder collaboration; conformance tests; audit trails for mask-driven edits.

- Healthcare training and clinical environment rehearsal (healthcare, edtech)

- VR training in patient wards/ORs; quick edits to enforce privacy; physics for movement/ergonomics.

- Dependencies: Regulatory approvals; measurement fidelity; integration with hospital IT and safety protocols.

- Advanced relighting and material editing in engines (media, design)

- Extend beyond vertex color (SH) to PBR materials and controllable relighting with physically-based shading.

- Dependencies: Learned material properties, consistent UVs/texturing, engine-side tooling.

- Real-time capture for collaborative design (AEC, product design)

- Multi-user capture/edit sessions with immediate mesh updates and shared object-level operations.

- Dependencies: Low-latency distributed training, conflict resolution, consistent segmentation across users.

- Cloud services and marketplaces around capture-to-mesh (software platforms)

- Managed services offering training, edit tools, and engine plugins with SLAs and cost control.

- Dependencies: Secure data handling, predictable training performance across varied scenes, pricing models.

- Environmental impact modeling (energy, resilience)

- Use physics-ready digital twins to simulate hazards, evacuation paths, and energy flows in facilities.

- Dependencies: Integration of physical properties, validation against ground truth, scenario authoring tools.

Glossary

- 3D Delaunay triangulation: A tetrahedralization of a point set in which no input point lies inside the circumsphere of any tetrahedron (the empty-circumsphere property); used to initialize meshes from sparse points. "We start from sparse SfM points and apply 3D Delaunay triangulation to obtain an initial mesh."

- 3D Gaussian Splatting: An explicit radiance-field representation that models scenes with millions of Gaussians and renders them via splatting for fast, high-fidelity training and inference. "Kerbl \etal \cite{Kerbl20233DGaussian} introduced 3D Gaussian Splatting, representing scenes as large sets of Gaussian primitives"

- Anti-aliasing: Techniques (e.g., supersampling and filtering) to reduce jagged artifacts from sampling discrete pixels. "Anti-aliasing. To mitigate aliasing artifacts, we render at the target resolution and then downsample to the final resolution using area interpolation, which averages over input pixel regions and acts as an anti-aliasing filter."

- Anisotropic Gaussians: Gaussian primitives with direction-dependent covariance (elliptical in 2D, ellipsoidal in 3D), allowing oriented, elongated blurs. "showed that it is possible to fit millions of anisotropic Gaussians in just minutes, enabling real-time rendering with high fidelity."

- Barycentric coordinates: Weights associated with a triangle’s vertices that linearly interpolate attributes (e.g., color) across the triangle. "by interpolating the vertex colors using barycentric coordinates"

- Bernoulli sampling: Random selection where each candidate is independently chosen with some probability; used here to decide densification candidates. "using Bernoulli sampling."

- Densification: The process of adding more primitives (triangles/vertices) to increase detail where needed during training. "Densification is performed by midpoint subdivision, introducing new vertices and triangles while preserving connectivity."

- Differentiable rendering: Rendering formulations that allow gradients to flow from image-space losses back to scene parameters for end-to-end optimization. "Differentiable rendering enables end-to-end optimization by propagating image-based losses back to scene parameters"

- Extrinsic parameters: Camera pose parameters (rotation and translation) that map world coordinates to the camera coordinate frame. "the intrinsic camera matrix and the extrinsic parameters, i.e., rotation and translation "

- Incenter: The point inside a triangle equidistant from all edges; used as a reference for the window function. "the incenter of the projected triangle (\ie, the point inside the triangle with minimum signed distance)."

- Intrinsic camera matrix: The matrix encoding camera internal parameters (focal lengths, principal point) used in pinhole projection. "The projection is defined by the intrinsic camera matrix and the extrinsic parameters"

- LPIPS: A learned perceptual image similarity metric correlating with human judgments, used to evaluate rendering quality. "We evaluate the visual quality using standard metrics: SSIM, PSNR, and LPIPS."

- Marching Tetrahedra: An isosurface extraction algorithm operating on tetrahedral grids to produce meshes from implicit fields. "and applying Marching Tetrahedra on Gaussian-induced tetrahedral grids"

- Markov chain Monte Carlo (MCMC): A family of sampling methods using Markov chains to draw proposals according to a target distribution; used to guide densification. "we adopt a probabilistic MCMC-based framework~\cite{Kheradmand20243DGaussian} to progressively introduce additional triangles."

- Mesh-Based Novel View Synthesis: The task of synthesizing new views directly from a mesh representation, emphasizing mesh fidelity. "we follow MiLo \cite{Guedon2025MILo-arxiv} and adopt the task of Mesh-Based Novel View Synthesis, which assesses how well reconstructed meshes represent complete scenes."

- Midpoint subdivision: A mesh refinement step that splits a triangle into four by connecting edge midpoints, preserving connectivity. "midpoint subdivision: the midpoints of the three edges of a selected triangle are connected, splitting it into four smaller triangles."

- Non-convex mesh collider: A physics collider that uses the full (possibly concave) mesh surface for collision detection in game engines. "an off-the-shelf non-convex mesh collider implementation, specifically the one provided in the Unity game engine."

- Opacity floor: A lower bound on opacity enforced during training to gradually push primitives toward full opacity. "where denotes the opacity floor."

- Pinhole camera model: A projection model mapping 3D points to 2D via an idealized aperture, ignoring lens distortion. "using a standard pinhole camera model."

- Poisson reconstruction: A surface reconstruction technique that solves a Poisson equation from oriented points to produce a watertight mesh. "followed by Poisson reconstruction"

- PSNR: Peak Signal-to-Noise Ratio, an image fidelity metric (in dB) comparing reconstructed and ground-truth images. "achieves a 4–10 dB higher PSNR."

- Rasterization: The process of converting geometric primitives into pixel fragments for rendering images. "The rasterization process begins by projecting each 3D vertex"

- Ray tracing: Rendering by simulating light transport along rays through the scene to compute visibility and shading. "and (d) ray tracing"

- Signed distance field (SDF): A scalar field giving signed distances to a surface (negative inside, positive outside, zero on the surface). "The signed distance field (SDF) of the 2D triangle in image space is given by:"

- Spherical harmonics: A set of orthonormal basis functions on the sphere used to compactly represent view-dependent color/lighting. "For all experiments, we set the spherical harmonics to degree 3"

- SSIM: Structural Similarity Index, a perceptual image quality metric focusing on luminance, contrast, and structure. "We evaluate the visual quality using standard metrics: SSIM, PSNR, and LPIPS."

- Structure-from-Motion (SfM): A pipeline that estimates camera poses and sparse 3D points from multiple images. "calibrated via SfM~\cite{Schonberger2016Structure}, which also provides a sparse point cloud."

- Tetrahedralization: Decomposition of a 3D volume or point cloud into tetrahedra, often used for mesh initialization. "a tetrahedralization, from which we extract all unique triangles."

- Transmittance: The cumulative fraction of light not yet absorbed along a ray; used in compositing weights. "with denoting transmittance"

- Triangle soup: An unstructured collection of triangles lacking explicit connectivity information. "When its triangle soup is rendered in a game engine, a noticeable drop in visual quality occurs"

- Truncated Signed Distance Fields (TSDF): SDFs clamped to a finite band around the surface, commonly used for robust surface extraction. "extracting surfaces via truncated signed distance fields (TSDF)"

- Volume rendering weight: The compositing weight of a primitive in front-to-back rendering, typically transmittance times opacity. "the maximum volume rendering weight (with denoting transmittance and opacity)"

- Watertight mesh: A closed mesh without holes, suitable for robust rendering and simulation. "produce watertight and fully connected meshes"

- Window function: A smooth indicator used to weight pixel contributions within a primitive’s footprint. "The window function is then defined as:"

- Zero-level set: The locus where an implicit function equals zero; often used to define surfaces. "the triangle is given by the zero-level set of the function ."
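Several glossary entries (pinhole camera model, intrinsic and extrinsic parameters, barycentric coordinates) describe the first steps of rasterization. Here is a small worked sketch of them. It assumes the extrinsics map world to camera as X_cam = R X_world + t, ignores lens distortion, and uses plain RGB vertex colors. The function names are hypothetical.

```python
def project(point, fx, fy, cx, cy, rotation, translation):
    """Pinhole projection of a 3D world point to pixel coordinates and depth."""
    # Extrinsics: X_cam = R @ X_world + t, with R given as a 3x3 list of rows.
    x, y, z = (sum(rotation[r][c] * point[c] for c in range(3)) + translation[r]
               for r in range(3))
    # Intrinsics: focal lengths (fx, fy) and principal point (cx, cy).
    return (fx * x / z + cx, fy * y / z + cy), z


def barycentric(p, a, b, c):
    """Weights (w_a, w_b, w_c) of 2D point p with respect to triangle (a, b, c)."""
    (px, py), (xa, ya), (xb, yb), (xc, yc) = p, a, b, c
    det = (yb - yc) * (xa - xc) + (xc - xb) * (ya - yc)
    w_a = ((yb - yc) * (px - xc) + (xc - xb) * (py - yc)) / det
    w_b = ((yc - ya) * (px - xc) + (xa - xc) * (py - yc)) / det
    return w_a, w_b, 1.0 - w_a - w_b


def interpolate_color(p, tri2d, colors):
    # Blend the three vertex colors with the barycentric weights of p.
    w = barycentric(p, *tri2d)
    return tuple(sum(wi * col[ch] for wi, col in zip(w, colors)) for ch in range(3))


identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
print(project((1, 2, 4), 100, 100, 50, 50, identity, [0, 0, 0]))  # ((75.0, 100.0), 4)
print(interpolate_color((0.5, 0.5), [(0, 0), (2, 0), (0, 2)],
                        [(1, 0, 0), (0, 1, 0), (0, 0, 1)]))      # (0.5, 0.25, 0.25)
```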
