- The paper introduces a novel framework that employs compositional prototypes to facilitate cross-embodiment skill transfer using unpaired human and robot demonstrations.

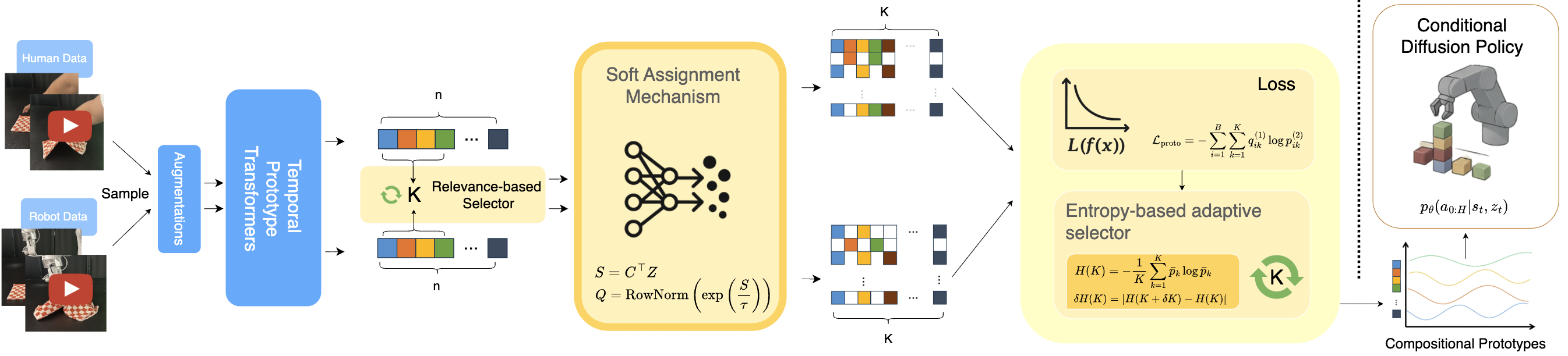

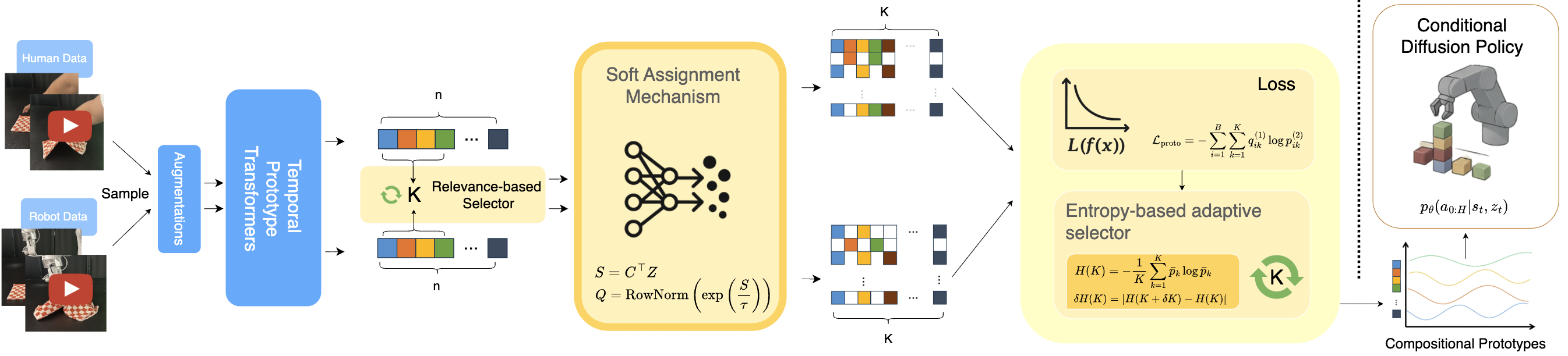

- It uses a soft assignment mechanism and adaptive prototype selection to dynamically align skill primitives, ensuring scalable and efficient learning.

- Experimental evaluations in simulation and real-world scenarios demonstrate improved manipulation performance and generalization compared to existing methods.

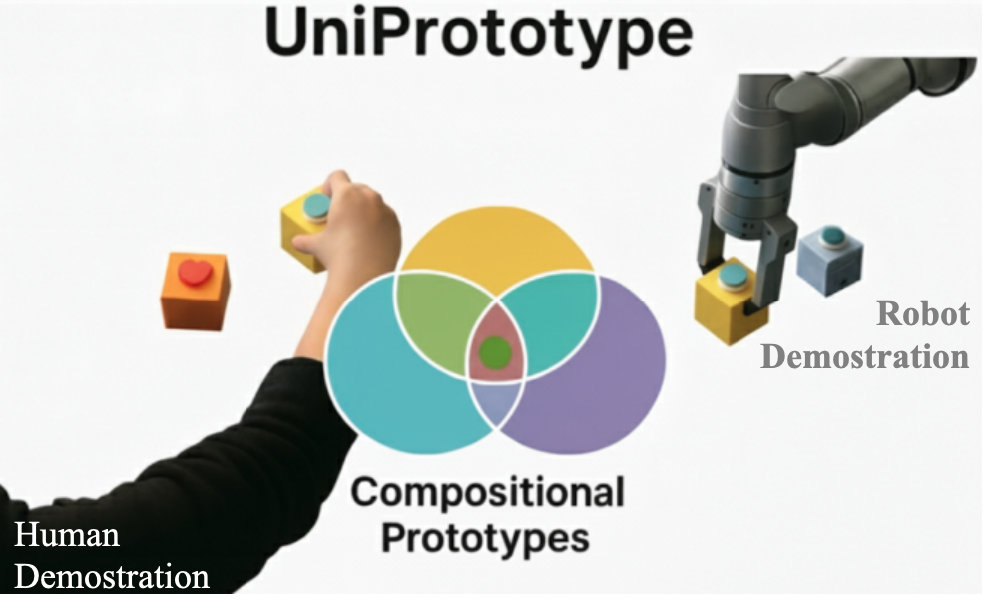

UniPrototype: A Framework for Human-Robot Skill Transfer

Introduction

UniPrototype is a framework for human-robot skill learning that uses compositional prototypes to bridge the embodiment gap between humans and robots in manipulation skill acquisition. It addresses data scarcity in robotics by drawing on abundant human demonstration data, while mitigating the problems caused by morphological differences between humans and robots. Its core contribution is the discovery and use of shared skill prototypes, which enables cross-embodiment transfer of manipulation skills.

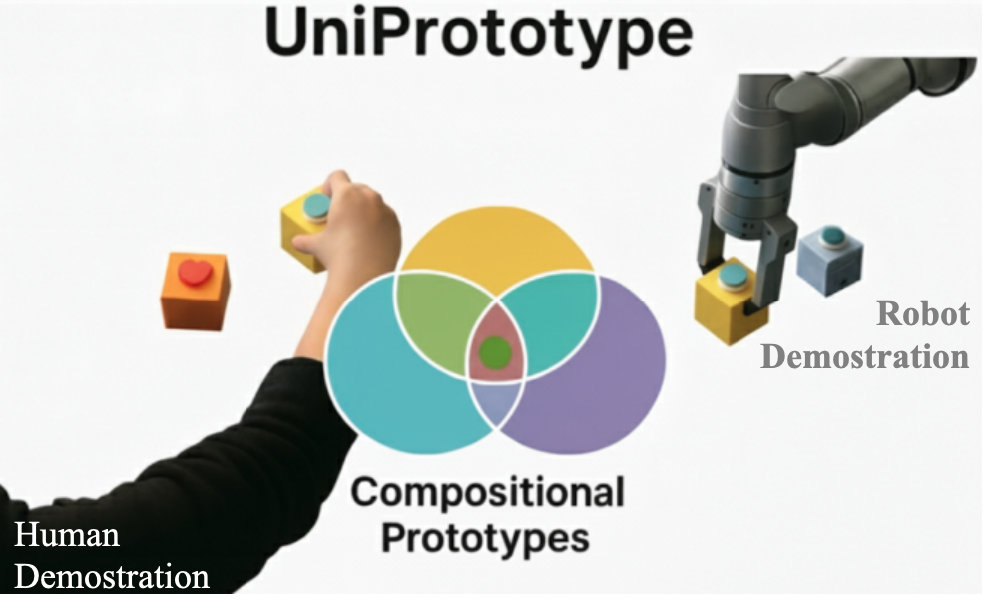

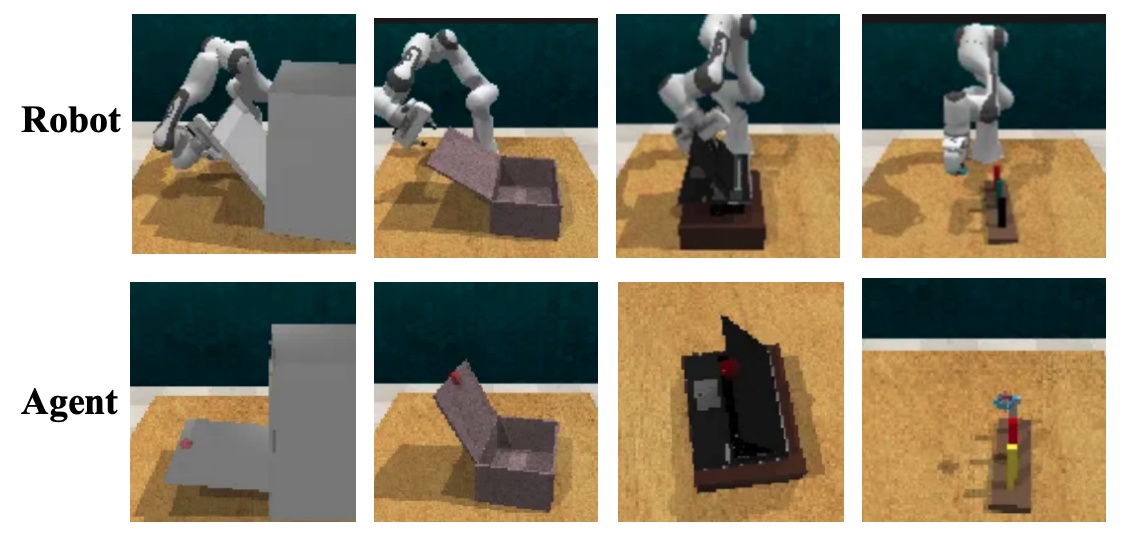

Figure 1: UniPrototype learns compositional skill prototypes from human and robot demonstrations. The framework discovers compositional primitive representations that bridge the embodiment gap between human manipulation and robot execution, enabling effective cross-embodiment transfer.

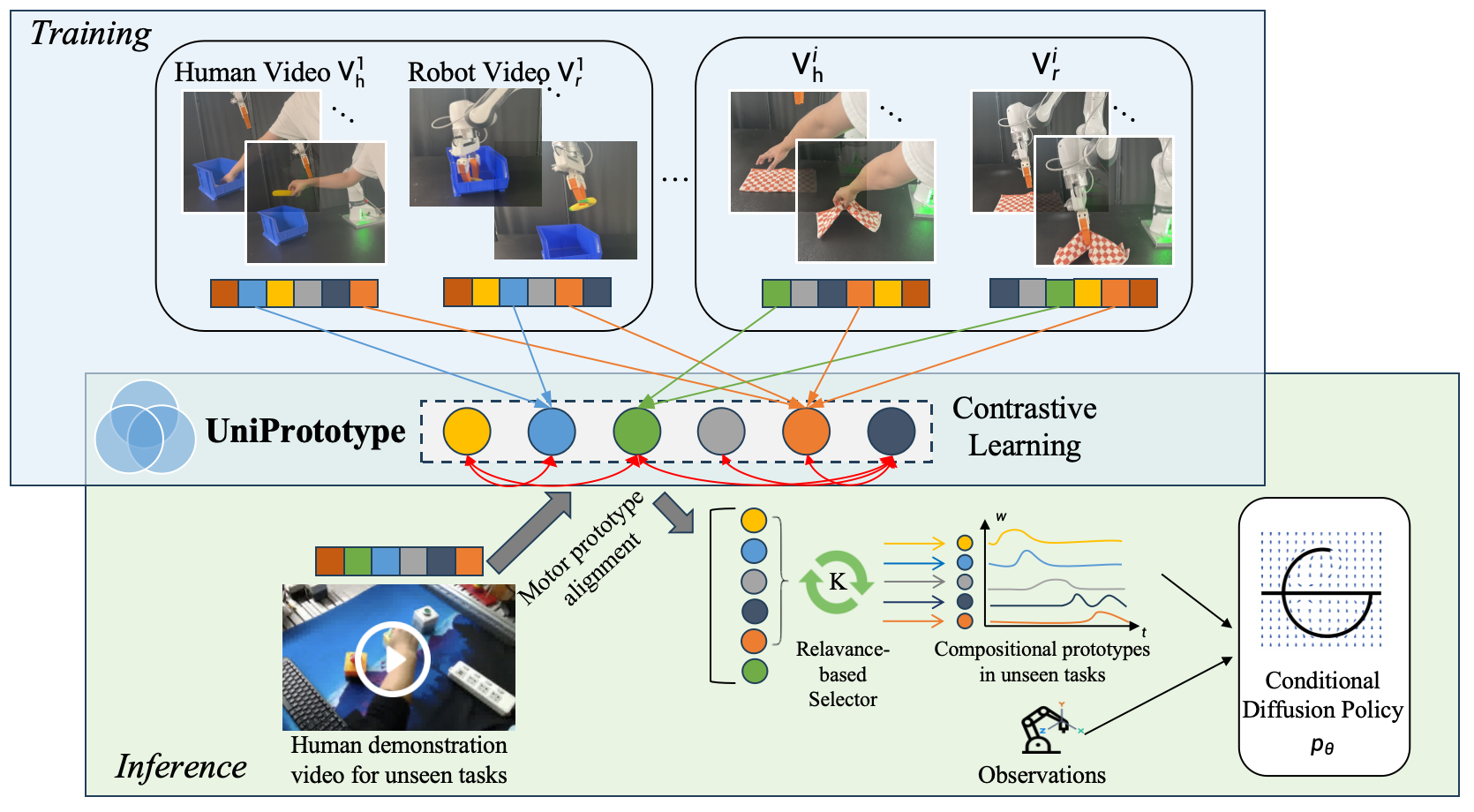

UniPrototype Framework

Compositional Prototype Discovery

The UniPrototype framework operates by discovering compositional prototypes from both human and robot demonstrations. This involves three key mechanisms:

- Soft Assignment Mechanism: UniPrototype departs from traditional clustering by allowing multiple prototypes to be activated simultaneously, capturing the inherent compositionality of manipulation skills. This is crucial for accurately representing blended skills like pouring, which involve simultaneous execution of lifting, rotating, and holding actions.

- Adaptive Prototype Selection: Utilizing an entropy-based criterion, UniPrototype automatically adjusts the number of prototypes to match the complexity of the task at hand. This ensures scalable representation without manual hyperparameter tuning, accommodating both simple and complex tasks.

- Shared Representational Space: By aligning prototypes from human and robot demonstrations into a shared space, UniPrototype facilitates the transfer of skills without the need for paired demonstrations, making the process both efficient and flexible.
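The soft assignment and shared-space ideas above can be illustrated with a minimal sketch. It assumes, purely for illustration, that assignments are a temperature-scaled softmax over cosine similarities between an encoded feature and each prototype; the summary does not state the exact formulation, and the 2-D prototypes, feature values, and `temperature` parameter below are hypothetical.

```python
import math

def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def soft_assign(feature, prototypes, temperature=0.1):
    """Return one weight per prototype; the weights sum to 1.

    Assumed form: softmax over cosine similarity / temperature.
    """
    scores = [cosine(feature, p) / temperature for p in prototypes]
    return softmax(scores)

# Hypothetical prototypes shared by both embodiments.
prototypes = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]

# A blended skill that is equally similar to the first two prototypes:
# both stay active instead of one being forced to win.
blended_weights = soft_assign([1.0, 1.0], prototypes)

# Unpaired human and robot features are scored against the SAME prototypes,
# so similar motions land on the same primitive without paired data.
human_weights = soft_assign([0.9, 0.1], prototypes)
robot_weights = soft_assign([2.0, 0.3], prototypes)
print(blended_weights, human_weights, robot_weights)
```

A hard clustering would have to pick a single prototype for the blended feature; the soft weights keep both active, which is the property the pouring example relies on.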

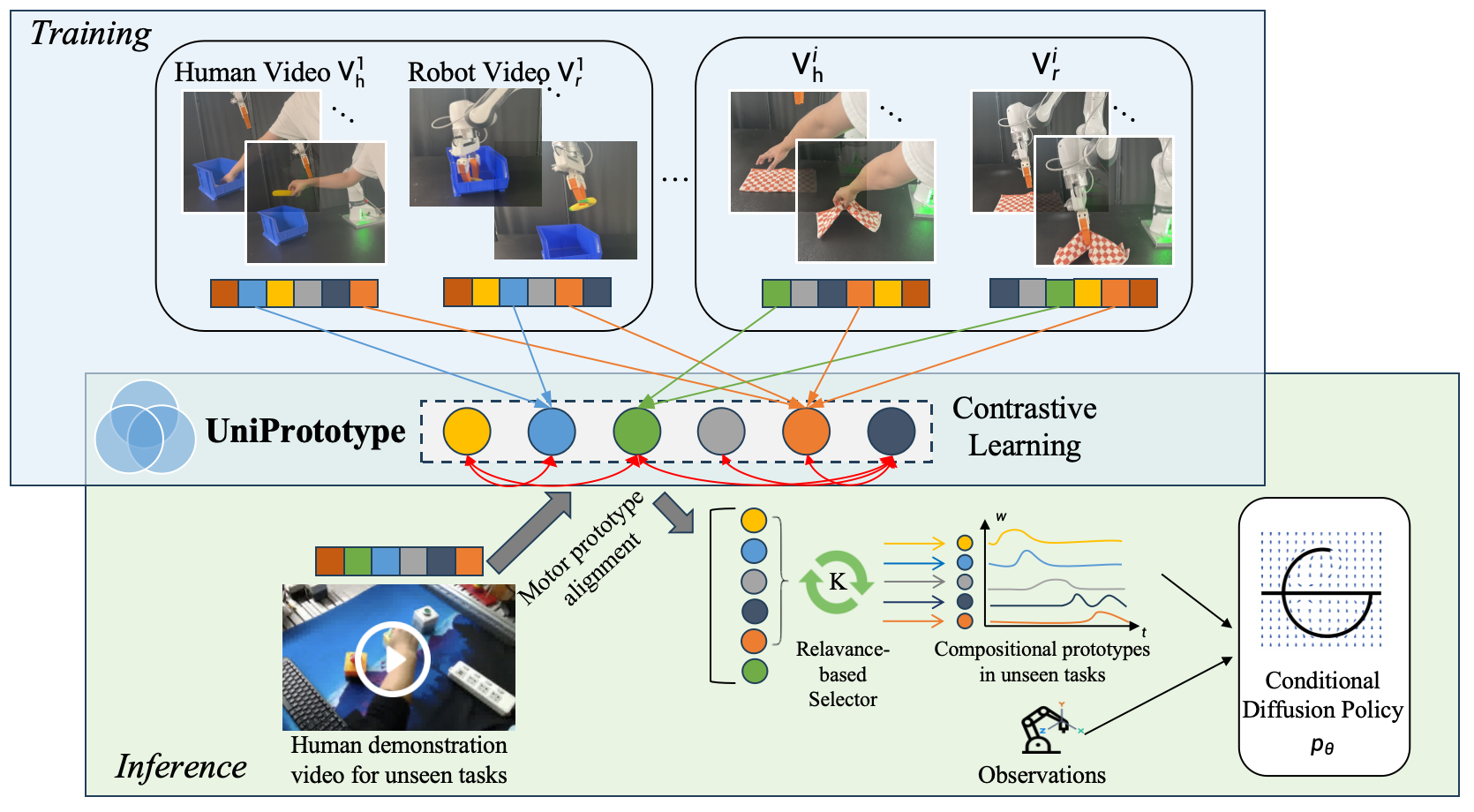

Figure 2: Overview of the UniPrototype framework. Given unpaired human and robot demonstrations, UniPrototype learns compositional prototype sequences that capture shared skill primitives across embodiments. A temporal skill encoder extracts sequence features, which are then clustered through prototype discovery with a soft assignment mechanism.

Learning Compositional Policies

To execute the learned prototypes, UniPrototype utilizes a diffusion-based policy architecture that leverages the compositional representation to generate robot actions. This architecture enables the generation of smooth transitions and novel behaviors by recomposing known prototypes in new sequences. The policy architecture handles the multimodal action distributions that arise from the compositional nature of skills.
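One way to picture how a compositional representation could condition a policy is to blend prototype embeddings by their soft weights at each step. This is only a sketch of the conditioning signal under that assumption, not the diffusion policy itself; the embedding values and the `blend` helper are hypothetical, and the primitive names are borrowed from the pouring example.

```python
def blend(weights, embeddings):
    """Weighted sum of prototype embeddings (one weight per prototype)."""
    dim = len(embeddings[0])
    return [sum(w * e[i] for w, e in zip(weights, embeddings)) for i in range(dim)]

# Hypothetical 2-D embeddings for three primitives: lift, rotate, hold.
embeddings = [[0.0, 1.0], [1.0, 0.0], [0.5, 0.5]]

# A new sequence that recomposes the known primitives:
# pure lift, then lift blended with rotate, then a three-way blend.
weight_sequence = [
    [1.0, 0.0, 0.0],
    [0.5, 0.5, 0.0],
    [0.2, 0.5, 0.3],
]

# One conditioning vector per step; a policy would consume these in order.
conditioning = [blend(w, embeddings) for w in weight_sequence]
print(conditioning)
```

Because the weights change gradually from step to step, the conditioning vectors do too, which is one plausible source of the smooth transitions described above.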

Flexible Task Execution

In the inference phase, UniPrototype performs novel tasks through a Skill Alignment Module (SAM) that dynamically aligns the robot's current state with the extracted prototype sequence from human demonstrations. This ensures robust execution despite variations in speed, embodiment, and environmental conditions, thus supporting effective one-shot imitation.
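The alignment step can be sketched as a progress tracker over the demonstration's prototype sequence. The summary does not describe SAM's internals, so the rule below is an assumption: the robot's current state is encoded as prototype weights, compared by dot product with the current and next demonstration steps, and the pointer only ever moves forward. The function name `align_step`, the `lookahead` parameter, and all values are hypothetical.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def align_step(current_weights, demo_sequence, pointer, lookahead=1):
    """Return the demo index that best matches the current state.

    Searches from `pointer` up to `pointer + lookahead`; never moves backward.
    """
    last = min(pointer + lookahead, len(demo_sequence) - 1)
    best = pointer
    for i in range(pointer + 1, last + 1):
        if dot(current_weights, demo_sequence[i]) > dot(current_weights, demo_sequence[best]):
            best = i
    return best

# Prototype sequence extracted from a (hypothetical) human demonstration.
demo_sequence = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

# The robot moves more slowly than the human, so it lingers on each step.
robot_states = [
    [0.9, 0.1, 0.0],
    [0.8, 0.2, 0.0],
    [0.3, 0.7, 0.0],
    [0.1, 0.8, 0.1],
    [0.0, 0.2, 0.8],
]

pointer = 0
trace = []
for state in robot_states:
    pointer = align_step(state, demo_sequence, pointer)
    trace.append(pointer)
print(trace)  # [0, 0, 1, 1, 2]
```

Tracking progress by matching state rather than by elapsed time is what lets the same three-step demonstration guide a five-step robot execution.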

Figure 3: Training flow of UniPrototype. The framework augments raw data and encodes it using temporal prototype transformers, allowing multiple primitives to activate simultaneously through a soft assignment mechanism.

Experimental Evaluation

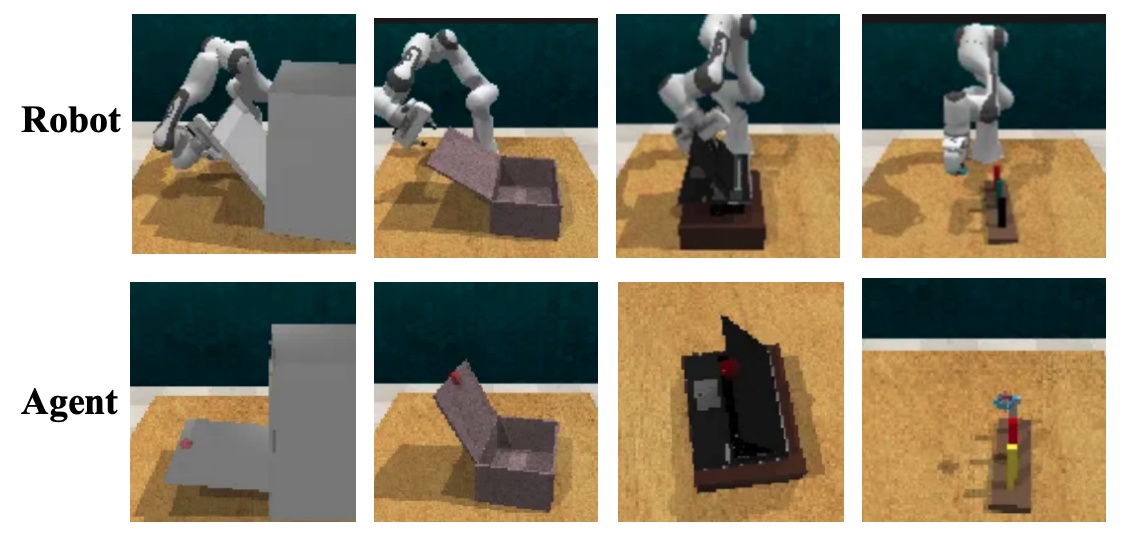

Simulation and Real-World Experiments

UniPrototype is evaluated in both simulated environments (using RLBench) and real-world scenarios. In both settings, the framework is reported to outperform existing methods on cross-embodiment transfer.

Figure 4: Cross-embodiment transfer in simulation across diverse manipulation tasks: UniPrototype demonstrates robust skill transfer between robot and humanoid agent embodiments.

Prototype Utilization

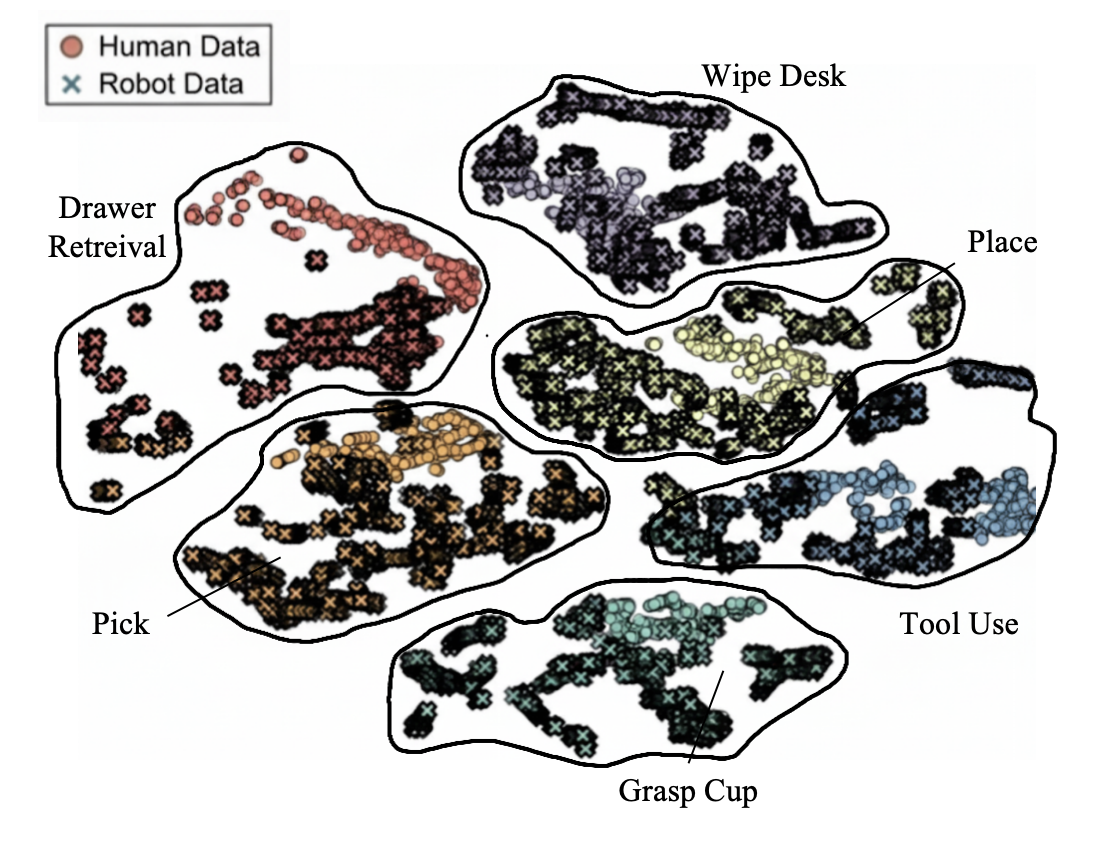

Empirical results indicate that adaptive prototype selection effectively matches task complexity, with the number of prototypes scaling with the complexity of the manipulation task. This adaptive mechanism enhances the capability of robots to generalize beyond the training distribution.
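To make the link between entropy and prototype count concrete, the sketch below computes an "effective" number of prototypes as the exponential of the entropy of average prototype usage. This is a common way to turn an entropy into a count and is used here as an assumption; the summary says only that the criterion is entropy-based. The function names and toy assignments are hypothetical.

```python
import math

def mean_usage(assignments):
    """Average the per-frame soft assignments into one usage distribution."""
    n = len(assignments)
    k = len(assignments[0])
    return [sum(a[j] for a in assignments) / n for j in range(k)]

def entropy(p):
    # Zero-probability entries contribute nothing to the entropy.
    return -sum(x * math.log(x) for x in p if x > 0)

def effective_count(assignments):
    """exp(entropy) of usage: 1 if one prototype dominates, K if all K are used equally."""
    return math.exp(entropy(mean_usage(assignments)))

# Simple task: every frame uses the same single prototype.
simple_task = [[1.0, 0.0, 0.0, 0.0]] * 8

# Complex task: frames cycle through four different prototypes.
complex_task = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
] * 2

simple_k = effective_count(simple_task)
complex_k = effective_count(complex_task)
print(simple_k, complex_k)
```

Under this assumption, a pool of candidate prototypes could be trimmed toward the effective count, so simple tasks keep few prototypes and complex tasks keep more.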

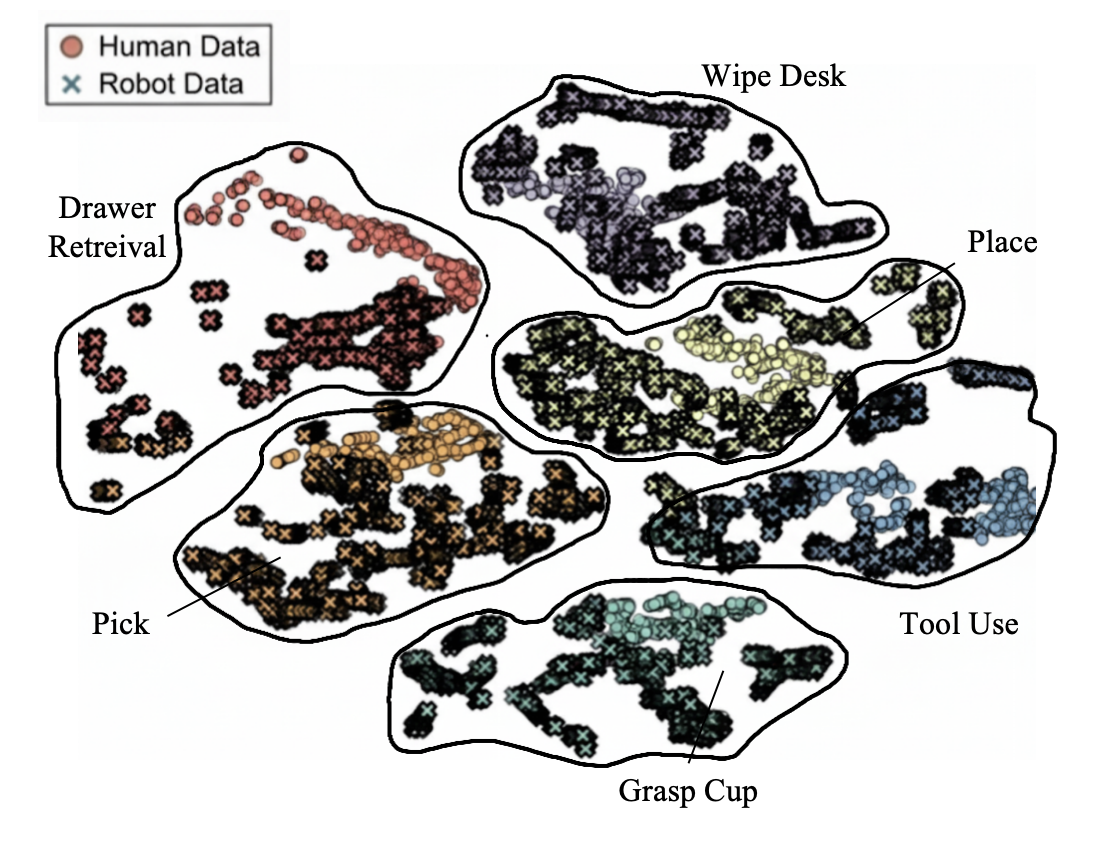

Figure 5: t-SNE visualization of features extracted from human demonstrations and robot executions. The projection reveals six distinct clusters corresponding to manipulation tasks.

Conclusion

UniPrototype approaches human-robot skill transfer through compositional prototypes. By discovering transferable prototypes and aligning them across embodiments, it lets robot learning draw on abundant human demonstration data. Its results in both simulation and real-world tasks suggest a scalable method for skill acquisition and generalization across diverse manipulation tasks. Future research will explore applying UniPrototype to more unstructured environments and integrating online refinement mechanisms to enhance adaptability.