RLBFF: Binary Flexible Feedback to bridge between Human Feedback & Verifiable Rewards (2509.21319v1)

Abstract: Reinforcement Learning with Human Feedback (RLHF) and Reinforcement Learning with Verifiable Rewards (RLVR) are the main RL paradigms used in LLM post-training, each offering distinct advantages. However, RLHF struggles with interpretability and reward hacking because it relies on human judgments that usually lack explicit criteria, whereas RLVR is limited in scope by its focus on correctness-based verifiers. We propose Reinforcement Learning with Binary Flexible Feedback (RLBFF), which combines the versatility of human-driven preferences with the precision of rule-based verification, enabling reward models to capture nuanced aspects of response quality beyond mere correctness. RLBFF extracts principles that can be answered in a binary fashion (e.g. accuracy of information: yes, or code readability: no) from natural language feedback. Such principles can then be used to ground Reward Model training as an entailment task (response satisfies or does not satisfy an arbitrary principle). We show that Reward Models trained in this manner can outperform Bradley-Terry models when matched for data and achieve top performance on RM-Bench (86.2%) and JudgeBench (81.4%, #1 on leaderboard as of September 24, 2025). Additionally, users can specify principles of interest at inference time to customize the focus of our reward models, in contrast to Bradley-Terry models. Finally, we present a fully open source recipe (including data) to align Qwen3-32B using RLBFF and our Reward Model, to match or exceed the performance of o3-mini and DeepSeek R1 on general alignment benchmarks of MT-Bench, WildBench, and Arena Hard v2 (at <5% of the inference cost).

Explain it Like I'm 14

1) What this paper is about

After pretraining, LLMs such as chatbots go through extra training (post-training) so that they behave helpfully and safely. Two popular ways to do that are:

- RLHF: Reinforcement Learning with Human Feedback, where people judge which answers are better.

- RLVR: Reinforcement Learning with Verifiable Rewards, where a program checks if an answer is correct (for things like math or code tests).

Each has limits. Reward models trained on human judgments are hard to interpret and can be “tricked” (reward hacking), partly because the judgments rarely state explicit criteria. Verifiers are clear but only cover tasks where “correctness” is easy to check.
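To see why verifiers are limited, here is a toy binary verifier of the kind RLVR relies on: it gives full reward only when the normalized answer string matches a reference. This is a hypothetical, deliberately naive sketch (the names `normalize` and `exact_match_reward` are illustrative, not from the paper); the "3 hours vs. 180 minutes" case is the paper's own example of equivalent answers that a rigid check can miss.

```python
def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting differences are ignored."""
    return " ".join(text.lower().split())


def exact_match_reward(response: str, reference: str) -> float:
    """Binary RLVR-style reward: 1.0 if the normalized strings match, else 0.0."""
    return 1.0 if normalize(response) == normalize(reference) else 0.0


reference = "3 hours"
print(exact_match_reward("3  Hours", reference))     # 1.0
print(exact_match_reward("180 minutes", reference))  # 0.0, although the answer is equivalent
```

RLBFF instead asks a reward model whether a response satisfies a stated principle, a judgment that does not hinge on one exact surface form.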

This paper proposes a new middle ground called RLBFF: Reinforcement Learning with Binary Flexible Feedback. Instead of just “correct or not,” it uses simple yes/no checks for many different principles people care about (like accuracy, clarity, following instructions, no repetition, code readability). That makes training clearer, more precise, and user-controllable, while still staying flexible beyond pure correctness.

2) The big questions the paper asks

- Can we turn human feedback into clear, yes/no principles (like checklist items) and train reward models to check if an answer meets each principle?

- Will this “binary principles” approach be more accurate, more interpretable, and harder to game than usual human-preference models?

- Can users pick which principle matters at inference time (for example, judge answers mainly by “clarity” or “accuracy”)?

- Will models trained with this method reach top scores on standard reward-model benchmarks?

- Can this approach help align an open-source LLM to match or beat well-known proprietary models, while being much cheaper to run?

3) How they did it (methods, in plain language)

Think of grading with a checklist. Instead of saying “Response A is better than Response B,” the paper suggests asking questions like:

- “Does the answer contain accurate information?” Yes/No

- “Is the answer clear?” Yes/No

- “Does the answer follow the user’s instructions?” Yes/No

- “Does the answer avoid repetition?” Yes/No

Here’s the approach:

- Start with a big human feedback dataset (HelpSteer3-Feedback). Each example has a prompt, two answers, and human-written comments about those answers.

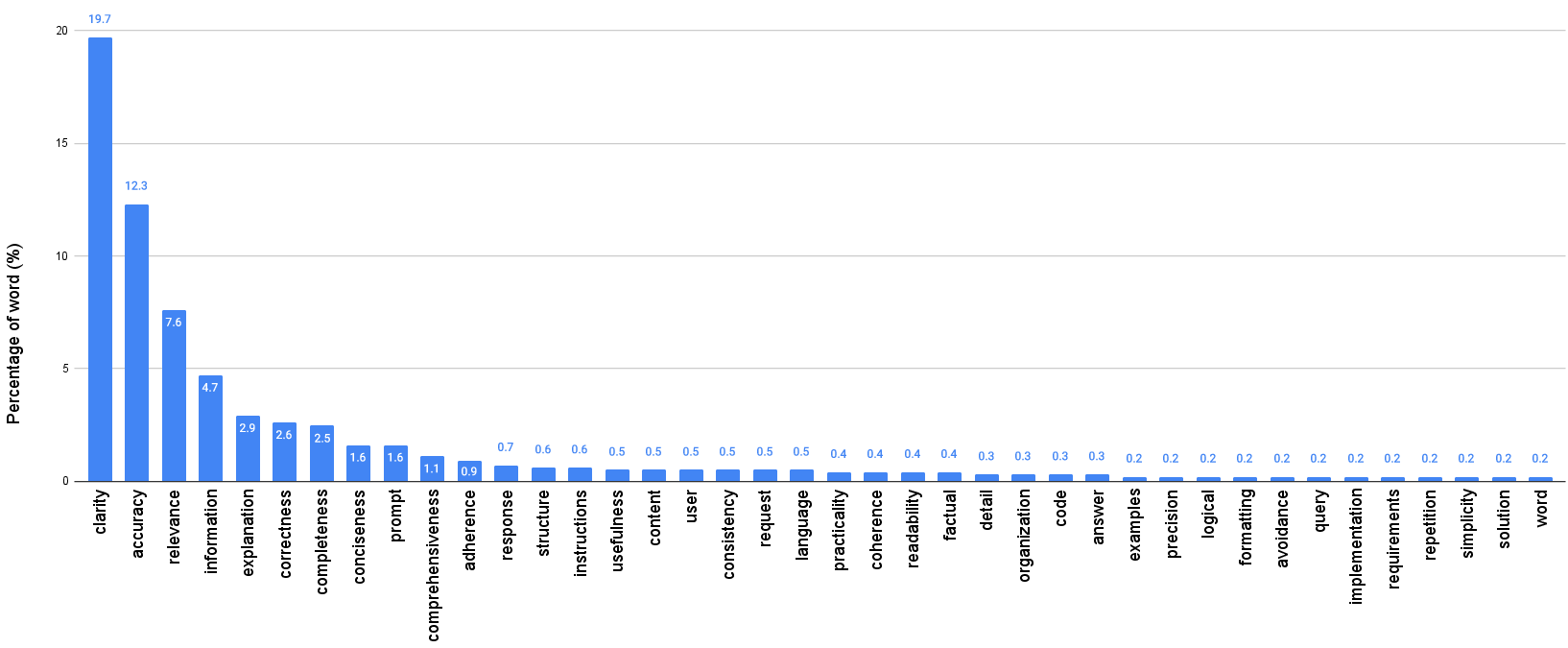

- Use an LLM to read the human comments and extract concrete principles as short phrases (like “accuracy,” “clarity,” “includes inline comments”), and also pull out exact quotes from the comments as evidence. If the evidence doesn’t match the comment text well enough, throw out that principle to avoid hallucinations.

- Remove “partially” labels (principles judged only partly satisfied) and keep only clear yes/no. Also remove generic “helpfulness” because it mixes many factors.

- Find consensus principles across multiple annotators by measuring similarity (like checking if “accuracy” and “correctness” mean the same thing). Keep only high-confidence, widely agreed principles.

- Verify quality with a small human review (126 samples, each checked by 3 annotators): most extracted principles matched the original comments.
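The filtering steps above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the record fields (`principle`, `fulfilled`, `evidence`) and the example feedback are hypothetical, and `partial_ratio` below is a stdlib `difflib` approximation standing in for RapidFuzz's `fuzz.partial_ratio`, so its scores will not match RapidFuzz exactly. Only the cut-off of 60 comes from the paper.

```python
from difflib import SequenceMatcher

EVIDENCE_THRESHOLD = 60  # partial_ratio cut-off reported in the paper


def partial_ratio(a: str, b: str) -> float:
    """Approximate stand-in for rapidfuzz.fuzz.partial_ratio: best 0-100 similarity
    between the shorter string and any equal-length window of the longer one."""
    short, long_ = (a, b) if len(a) <= len(b) else (b, a)
    if not short:
        return 0.0
    best = 0.0
    for start in range(len(long_) - len(short) + 1):
        window = long_[start:start + len(short)]
        best = max(best, SequenceMatcher(None, short, window).ratio())
    return 100.0 * best


def filter_principles(feedback: str, extracted: list[dict]) -> list[dict]:
    """Keep binary, specific principles whose cited evidence appears in the feedback."""
    kept = []
    for item in extracted:
        if item["fulfilled"] not in ("yes", "no"):
            continue  # drop "partially" and any other non-binary label
        if item["principle"].strip().lower() == "helpfulness":
            continue  # too generic: mixes many factors
        if partial_ratio(feedback, item["evidence"]) <= EVIDENCE_THRESHOLD:
            continue  # evidence not found in the feedback: likely hallucinated
        kept.append(item)
    return kept


feedback = ("The response is accurate, but it repeats the same sentence twice "
            "and lacks inline comments.")
extracted = [  # hypothetical LLM extraction output
    {"principle": "accuracy", "fulfilled": "yes", "evidence": "The response is accurate"},
    {"principle": "no repetition", "fulfilled": "no", "evidence": "repeats the same sentence twice"},
    {"principle": "clarity", "fulfilled": "partially", "evidence": "lacks inline comments"},
    {"principle": "helpfulness", "fulfilled": "yes", "evidence": "The response is accurate"},
    {"principle": "code style", "fulfilled": "no", "evidence": "PEP 8 VIOLATIONS"},
]
print([k["principle"] for k in filter_principles(feedback, extracted)])
# ['accuracy', 'no repetition']
```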

Then they trained two types of reward models:

- A fast “Scalar” reward model: it reads the prompt, the answer, and the chosen principle, then outputs a yes/no decision with a confidence score. It only needs about one token to decide, so it’s very fast.

- A “Generative” reward model (GenRM): it reasons step-by-step before making a yes/no decision. This can be more powerful for tasks needing reasoning but is much slower (hundreds to thousands of tokens).
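For the Scalar RM, the paper scores a judgment as log p(Yes) − log p(No) at the single decision position. The sketch below computes that from a toy dictionary of next-token logits; the prompt template, function names, and logit values are hypothetical. Because both log-probabilities share the same softmax normalizer, the score reduces to the difference of the two logits, and its sigmoid is P(Yes) renormalized over {Yes, No}.

```python
import math


def build_judge_prompt(prompt: str, response: str, principle: str) -> str:
    # Hypothetical template; the paper's exact wording is not reproduced here.
    return (
        f"Prompt: {prompt}\n"
        f"Response: {response}\n"
        f"Does the response satisfy this principle: {principle}? Answer Yes or No."
    )


def log_softmax(logits: dict[str, float]) -> dict[str, float]:
    """Numerically stable log-softmax over a (toy) vocabulary."""
    m = max(logits.values())
    log_z = m + math.log(sum(math.exp(v - m) for v in logits.values()))
    return {tok: v - log_z for tok, v in logits.items()}


def principle_score(next_token_logits: dict[str, float]) -> float:
    """Scalar RM score: log p(Yes) - log p(No) for the first generated token."""
    lp = log_softmax(next_token_logits)
    return lp["Yes"] - lp["No"]


def p_yes(score: float) -> float:
    """P(Yes) renormalized over the two answer tokens (stable sigmoid)."""
    if score >= 0:
        return 1.0 / (1.0 + math.exp(-score))
    z = math.exp(score)
    return z / (1.0 + z)


print(build_judge_prompt("Sort a list in Python.", "Use sorted(xs).", "clarity"))
score = principle_score({"Yes": 2.0, "No": 0.5, "Maybe": -1.0})
print(round(score, 6))  # 1.5
```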

They evaluated on standard benchmarks:

- RM-Bench and JudgeBench (popular tests for reward models).

- PrincipleBench (a new benchmark they created) to check if reward models obey specific principles like clarity, accuracy, no repetition, instruction-following, and language alignment across many domains and languages.

They also used their best reward model to align an open-source LLM (Qwen3-32B) using a training method called GRPO. In simple terms, the LLM proposes several answers, and the reward model judges them based on the chosen principle. The LLM learns to produce answers that satisfy those principles more often.
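The core of GRPO's "compare several answers" step is a group-relative advantage: each sampled answer's reward is compared with the mean and spread of its own group, so no separate value network is needed. The sketch below shows only that step, with made-up rewards standing in for reward-model scores; a full GRPO update also involves a clipped policy-ratio objective and usually a KL penalty, which are omitted here.

```python
import statistics


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """Normalize rewards within one group of samples drawn for the same prompt."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    # eps avoids division by zero when every sample gets the same reward
    return [(r - mean) / (std + eps) for r in rewards]


# Hypothetical reward-model scores for four answers sampled for one prompt
rewards = [1.5, -0.5, 0.5, 2.5]
print([round(a, 3) for a in group_relative_advantages(rewards)])
# [0.447, -1.342, -0.447, 1.342]
```

Answers scored above their group's average get positive advantages and are reinforced; when all answers in a group score the same, every advantage is zero and that group adds no learning signal.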

Small glossary to help:

- LLM: A large language model, like a very advanced auto-complete for text.

- Reward model: A “judge” that scores how good an answer is.

- Binary feedback: Yes/No grading, like checking boxes on a rubric.

- Bradley–Terry model: A system that ranks answers by comparing pairs (A vs. B).

- Reward hacking: When a model finds shortcuts to get high scores without truly improving quality.

- Precision/Recall: Precision = how often the judge is right when it says “good.” Recall = what fraction of the truly good answers the judge recognizes as good.

- Verifier: A rule-based checker, often used in math/coding to test correctness.

- Embeddings: A way to measure how similar two phrases are in meaning.

- GRPO: A training technique that compares multiple answers and boosts the better ones according to the judge’s scores.
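The difference between a Bradley–Terry reward model and the binary, principle-based framing shows up in the training loss. The sketch below writes both as log-sigmoid losses on scalar scores. It is a simplified illustration: the function names are hypothetical, and treating the binary case as a logistic loss on log p(Yes) − log p(No) is an assumption, not the paper's exact objective.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def bradley_terry_loss(score_chosen: float, score_rejected: float) -> float:
    """Pairwise loss: needs two responses and only says which one is better."""
    return -log_sigmoid(score_chosen - score_rejected)


def binary_principle_loss(score: float, satisfied: bool) -> float:
    """Single-response loss: score = log p(Yes) - log p(No) for one principle."""
    return -log_sigmoid(score) if satisfied else -log_sigmoid(-score)


print(round(bradley_terry_loss(2.0, 0.0), 4))       # small: chosen already ranked higher
print(round(binary_principle_loss(2.0, False), 4))  # large: model says Yes, label says No
```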

4) What they found and why it matters

Main results:

- Their “Flexible Principles” reward models hit top scores:

  - Scalar (fast) model: RM-Bench 83.6%, JudgeBench 76.3%, PrincipleBench 91.6%.

  - Generative (reasoning) model: RM-Bench 86.2%, JudgeBench 81.4% (ranked #1 on the JudgeBench leaderboard as of Sept 24, 2025).

- You can choose the principle at inference time. For example, you can ask the reward model: “Judge mainly by clarity,” or “Judge mainly by instruction-following.” Traditional preference models don’t let you do that.

- The fast Scalar model is extremely quick (often under 0.1 seconds per judgment) and still very strong. The reasoning GenRM is slower but pushes peak scores higher on correctness-heavy benchmarks.

- Their single-answer judging avoids a big problem in pairwise judges: position bias (models liking the first or second answer more just because of order). Many existing generative reward models struggled on JudgeBench because of this.

- On PrincipleBench, Scalar models generally beat reasoning models. This is likely because reasoning models focus too much on “correctness,” while PrincipleBench tests broader qualities (clarity, repetition, formatting, following instructions, multilingual alignment).

- Aligning Qwen3-32B with this method improved it across major benchmarks:

  - MT-Bench: 9.50

  - Arena Hard v2: 55.6

  - WildBench overall: 70.33

- These results are comparable to or better than o3-mini and DeepSeek R1, but at less than 5% of their inference cost.
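Position bias can be measured by asking a pairwise judge the same question twice with the order swapped and counting how often its pick stays the same. The sketch below uses two toy judges, both hypothetical: one that always prefers whichever answer is shown first, and one that compares independent per-response scores, which is order-invariant by construction.

```python
from typing import Callable

Judge = Callable[[str, str], int]  # returns 0 if it prefers the first answer, 1 for the second


def position_consistency(pairs: list[tuple[str, str]], judge: Judge) -> float:
    """Fraction of pairs whose winner is unchanged when the presentation order is swapped."""
    consistent = 0
    for a, b in pairs:
        winner_ab = a if judge(a, b) == 0 else b
        winner_ba = b if judge(b, a) == 0 else a
        consistent += winner_ab == winner_ba
    return consistent / len(pairs)


def always_first(first: str, second: str) -> int:
    return 0  # a maximally position-biased judge


# Hypothetical per-response scores, e.g. log p(Yes) - log p(No) for one principle
SCORES = {"answer A": 1.2, "answer B": -0.4, "answer C": 0.3}


def per_response(first: str, second: str) -> int:
    return 0 if SCORES[first] >= SCORES[second] else 1


pairs = [("answer A", "answer B"), ("answer C", "answer A")]
print(position_consistency(pairs, always_first))  # 0.0
print(position_consistency(pairs, per_response))  # 1.0
```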

Why that’s important:

- The approach combines the strengths of human feedback (rich, flexible) with the clarity of verifiers (yes/no).

- It aims to reduce reward hacking by making each judgment about a specific principle, not a vague “helpfulness.”

- It expands beyond pure correctness, so models can be trained and judged on things people actually care about: clarity, relevance, completeness, style, language, etc.

- It gives users control: different tasks and audiences can set different priorities.

5) What this could change going forward

- More interpretable AI training: Clear yes/no principles make it easier to understand why an answer scored well or poorly.

- Safer, higher-quality outputs: By checking many dimensions (not just correctness), models can become clearer, more honest, and better at following instructions.

- User customization: Teachers, developers, or companies can choose the principle mix that matters to them.

- Lower costs: Strong alignment can be achieved with open models and open data, at a fraction of the price of proprietary systems.

- Better benchmarks: PrincipleBench highlights qualities that matter in real-world use, not only whether an answer is “correct.”

In short, RLBFF turns human feedback into a simple, flexible checklist that models can learn from. It helps train better “judges,” which then train better “writers.” That leads to chatbots that are not only correct, but also clear, relevant, and aligned with what users actually need—without breaking the bank.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a consolidated list of specific gaps and unresolved questions that future work could address:

- Principle extraction reliability: The pipeline relies on a single LLM (DeepSeek V3-0324) with zero-shot prompts and a fuzzy string-match threshold (partial_ratio > 60) to validate cited evidence; there is no systematic ablation of this threshold, nor a larger-scale human audit across domains to quantify false positives/negatives and hallucination rates.

- Limited human verification: Only 126 samples with 3 annotators (Fleiss’ κ = 0.447) were checked; broader, stratified validation across General/STEM/Code/Multilingual subsets, longer contexts, and more nuanced principles is missing.

- Aggressive low-recall consensus filtering: The 0.8 cosine-similarity consensus threshold (Qwen3-8B Embedding) keeps ~100k/1.2M raw principles and only ~33k “unique meanings,” likely discarding valid but less common principles; the impact on coverage, domain balance, and downstream generalization is not quantified beyond a single threshold ablation.

- Embedding-based grouping fidelity: Using cosine similarity for principle clustering risks conflating antonyms/negations or collapsing semantically distinct aspects; no targeted tests for failure modes (negation, polysemy, compositional principles) are reported.

- Removal of “partial” labels: Discarding “partially” fulfilled principles (13.8%) oversimplifies graded qualities; the utility of ternary/ordinal labeling or calibrated thresholds for partial satisfaction remains unexplored.

- Negation robustness: The dataset contains negated principles (e.g., “avoidance of repetition”), but model sensitivity to negation (and double negation) is not evaluated via minimal-pair tests.

- Out-of-distribution principle generalization: The Flexible Principles models are trained on 1,414 unique principles; their ability to handle user-specified, novel, compositional, or longer principle phrases at inference time is not tested.

- Multi-principle aggregation: The paper does not propose or validate principled methods for combining multiple user-selected principles (e.g., weighting, Pareto optimization) or resolving conflicts (conciseness vs. completeness) during scoring or RL training.

- Actor conditioning on principles: The alignment RL trains an actor not conditioned on principles, optimizing rewards over varying principles it never sees; whether principle-aware conditioning would yield controllable, principle-specific generation is untested.

- Reward hacking and spurious correlations: While RLBFF aims to reduce reward hacking, there is no targeted adversarial evaluation (e.g., length, verbosity, hedging, sycophancy, style artifacts) to quantify residual vulnerabilities in ScalarRM/GenRM or the aligned policy.

- Calibration and comparability: The use of log p(Yes) − log p(No) as a scalar score is not calibrated across different principles; how to normalize across heterogeneous principles and set robust decision thresholds remains open.

- PrincipleBench scope: PrincipleBench is small (487 samples), covers a limited set of binary aspects, and draws from one source (HelpSteer3 validation); expansion to more principles, harder compositional cases, more languages, and out-of-domain prompts is needed.

- Data leakage and benchmark overlap: There is no explicit de-duplication or overlap analysis between training data and RM-Bench/JudgeBench/PrincipleBench prompts/responses; potential leakage may inflate reported gains.

- Baseline parity and templates: Some baselines are reported from papers or HF defaults; strict parity on prompt templates, tokenization, decoding settings, and parameter counts is not ensured, which may confound comparisons.

- GenRM correctness bias: The GenRM initialized from a reasoning model appears to over-emphasize correctness (strong on RM-Bench/JudgeBench, weaker on PrincipleBench aspects like clarity and repetition); alternative initializations or joint objectives to balance non-correctness principles are untested.

- Multilingual generalization: Feedback (and extracted principles) are in English, while tasks include 13 natural languages; whether principles can be specified and reliably judged in non-English (or mixed-language) settings is not evaluated.

- Code-specific validity: For code-related principles (e.g., readability, commenting), there are no functional checks (execution/tests) to disambiguate correctness vs. style; the interaction between style principles and functional correctness remains unmeasured.

- Verifier-equivalence claim: The claim that RLBFF reduces failures to recognize equivalent correct answers (e.g., 3 hours vs. 180 minutes) is not substantiated by dedicated equivalence tests or ablations vs. RLVR baselines.

- Evidence-citation quality: Beyond fuzzy matching, there is no analysis of whether highlighted evidence reliably supports the principle decision (e.g., entailment consistency checks, human audits of evidence sufficiency).

- Sensitivity to hyperparameters: The extraction prompt, evidence thresholds, and token choices for Yes/No are not systematically ablated; impacts on stability, calibration, and performance are unknown.

- Principle distribution bias: The high-precision filtering and removal of “helpfulness” shift the principle distribution; effects on long-tail principles, fairness across domains, and subgroup preferences (e.g., regional/cultural variation) are not examined.

- Position and order biases beyond pairwise: While pairwise position bias is analyzed for GenRMs, potential biases in single-response settings (e.g., order of multi-turn context, placement or phrasing of the principle) are not measured.

- Online/continual learning: The feasibility of continuously extracting principles from fresh, noisy human feedback streams and updating RLBFF/RMs without catastrophic forgetting or drift is not explored.

- Practical scalability: The compute and latency costs of the full principle-extraction-and-filtering pipeline (1.2M → ~100k) are not reported; guidance for scaling to web-scale feedback or enterprise deployments is absent.

- Safety-specific principles: The Safety results are reported, but the method’s ability to enforce nuanced policy constraints (multi-rule, hierarchical, context-sensitive safety principles) or to interoperate with programmatic/verifiable safety checkers is not evaluated.

- Personalized alignment: RLBFF suggests user-controllable principles, but there is no study of personalization across demographics or tasks, nor safeguards against encoding harmful or biased user-specified principles.

- Process-level feedback: The method evaluates final answers; integration with process reward models (step-level checks) or detection of reasoning-process errors is not investigated.

- Release and reproducibility details: PrincipleBench depends on additional labels “obtained from the authors”; clarity on full public release, licensing, and exact construction scripts is needed to guarantee third-party reproducibility.

- Robustness to adversarial principles: The system is not tested against misleading, inconsistent, or adversarially phrased principles (e.g., ambiguous scope, overlapping constraints), nor on principle injection attacks in multi-turn settings.

- Cross-principle consistency: No analysis ensures that logically related principles (e.g., “no repetition” vs. “avoid redundancy”) yield consistent judgments; consistency checks across a principle ontology/taxonomy are missing.
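Two of the gaps above, the 0.8 cosine-similarity consensus threshold and the risk of merging negations, can be illustrated together. The sketch below greedily groups principle phrases whose embeddings have cosine similarity of at least 0.8 with a group's first member. The three-dimensional vectors are invented for illustration (real embeddings would come from a model such as the Qwen3-8B Embedding model the paper uses), and the greedy grouping rule is an assumption, not the paper's exact consensus procedure.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def group_principles(
    embeddings: dict[str, list[float]], threshold: float = 0.8
) -> list[tuple[str, list[str]]]:
    """Greedy grouping: join the first group whose representative is similar enough."""
    groups: list[tuple[str, list[str]]] = []
    for name, vec in embeddings.items():
        for representative, members in groups:
            if cosine(embeddings[representative], vec) >= threshold:
                members.append(name)
                break
        else:  # no existing group is similar enough: start a new one
            groups.append((name, [name]))
    return groups


# Invented 3-d "embeddings" (illustrative only)
EMBEDDINGS = {
    "accuracy": [1.0, 0.1, 0.0],
    "correctness": [0.9, 0.2, 0.0],
    "clarity": [0.0, 1.0, 0.1],
    "repetition": [0.1, 0.0, 1.0],
    "avoidance of repetition": [0.15, 0.05, 0.95],
}

for representative, members in group_principles(EMBEDDINGS):
    print(representative, "->", members)
```

With these toy vectors, “repetition” and “avoidance of repetition” land in one group even though a “Yes” means opposite things for the two phrases, which is the kind of negation failure the paper does not test for.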

Practical Applications

Immediate Applications

Below is a concise set of actionable, real-world uses that can be deployed now, organized across sectors and roles. Each item notes the core tool/workflow and key feasibility dependencies.

- Principle-controllable LLM evaluation and response gating (Software, Content Platforms)

- What: Use the Flexible Principles Scalar Reward Model (Scalar RM) to score N candidate responses against user-specified binary principles (e.g., accuracy, relevance, clarity), then route or select the best.

- How: “Principle-Gated Inference” wrapper that generates candidates, scores each with a single-token “Yes/No” judgment per principle, and picks/ensembles the output.

- Dependencies/assumptions: Clear principle phrasing; stable prompt templates; tasks evaluable as binary criteria; base LLM quality for equivalence recognition.

- Safety and policy compliance auditing with evidence (Policy, Trust & Safety; Healthcare; Finance; Legal)

- What: Apply RLBFF-based checks (e.g., “no harmful content,” “includes disclaimers,” “respects privacy”) and log cited evidence spans for audit trails.

- How: “Safety Verifier++” employing binary principle checks with explainable evidence spans; integrate with existing moderator pipelines.

- Dependencies/assumptions: Curated, domain-aligned principle inventories; governance for logs; accurate evidence extraction from feedback.

- Code assistant quality gate in IDEs and CI/CD (Software Engineering)

- What: Enforce code principles such as “includes inline comments,” “readable,” “tests included,” “security-safe patterns.”

- How: IDE plugin and CI stage that runs Scalar RM checks on generated diffs/snippets, blocking merges that fail selected principles.

- Dependencies/assumptions: Language-aware formatting; mapping code style guides to binary principles; reliable scoring for multiple programming languages.

- Multilingual prompt compliance and localization QA (Customer Support; Education; Global Ops)

- What: Check “response follows prompt language,” “no repetition,” “relevance” across 10+ languages at low latency.

- How: Use Scalar RM in chat routing to enforce language alignment and core quality principles; translate or regenerate on failure.

- Dependencies/assumptions: Multilingual principle prompts and test fixtures; robust handling of mixed-language contexts.

- Vendor/model benchmarking beyond correctness (Procurement; Academia; R&D)

- What: Adopt PrincipleBench to evaluate adherence to non-correctness aspects (clarity, absence of repetition, language alignment) in addition to RM-Bench/JudgeBench.

- How: Standardize internal evaluations and vendor trials with PrincipleBench; weight principles per deployment needs.

- Dependencies/assumptions: Benchmark availability and reproducibility; principle coverage aligns with operational priorities.

- Cost-efficient alignment of internal LLMs (Startups; Enterprises; Academia)

- What: Use the open RLBFF recipe (GRPO + Flexible Principles GenRM) to align Qwen3-32B-like models to near-SOTA performance at <5% inference cost vs. proprietary baselines.

- How: “RLBFF Alignment Kit” to train on open data with principle-conditioned rewards; deploy in existing serving stacks.

- Dependencies/assumptions: Access to compute for training; compatibility with serving infra; alignment goals overlap with benchmark domains.

- Reduce reward hacking in RLHF pipelines (ML Platform/Training Teams)

- What: Replace or augment pairwise preference signals with explicit binary principles to cut over-optimization on spurious features (e.g., length, belief-matching).

- How: Convert human feedback to principle labels using the described extraction+filtering pipeline; retrain reward models on principled signals.

- Dependencies/assumptions: Sufficient human feedback; high-precision filtering (embedding similarity threshold, evidence checks); operational integration.

- Position bias mitigation in evaluation and ranking (Product QA; A/B Testing; Academia)

- What: Score single responses per principle to avoid pairwise ordering bias identified in many GenRMs.

- How: Switch gating from pairwise to per-response Scalar RM judgments; require agreement across multiple principle checks.

- Dependencies/assumptions: Well-defined principle sets; acceptance of single-response scoring in QA processes.

- Human-in-the-loop feedback transformation for training data ops (Product Operations; Community Platforms)

- What: Convert natural language user feedback into binary principle labels with evidence spans, creating high-precision reward training datasets.

- How: Deploy the extraction pipeline (LLM + RapidFuzz + embedding consensus filter) to produce principled labels from reviews/support transcripts.

- Dependencies/assumptions: Data access and consent; pipeline calibration (similarity threshold ~0.8); ongoing spot checks to prevent drift.

- Personalized assistants with principle toggles (Consumer Apps; Productivity Tools)

- What: User-configurable sliders/toggles (e.g., “concise,” “formal,” “no repetition,” “include steps”) to steer response selection.

- How: UI + Scalar RM scoring per selected principle; fast reranking to maintain sub-100ms latencies.

- Dependencies/assumptions: Mapping UX controls to principle prompts; clear defaults/weights; user education on trade-offs.
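The principle-gated selection described above can be sketched as a small rule over per-principle scores: keep only candidates that pass every selected principle (score above 0, i.e. P(Yes) > P(No)), then prefer the candidate whose weakest principle has the largest margin. The scores are made up, and both the zero threshold and the max-min rule are illustrative assumptions rather than a prescribed recipe.

```python
from typing import Optional


def gate_and_select(
    candidate_scores: list[dict[str, float]], principles: list[str]
) -> Optional[int]:
    """Return the index of the best candidate that passes every principle, or None."""
    passing = [
        i for i, scores in enumerate(candidate_scores)
        if all(scores[p] > 0 for p in principles)
    ]
    if not passing:
        return None  # caller could regenerate, translate, or fall back
    # Prefer the candidate whose weakest principle is still the most clearly satisfied
    return max(passing, key=lambda i: min(candidate_scores[i][p] for p in principles))


# Hypothetical log p(Yes) - log p(No) scores for three candidate responses
candidates = [
    {"accuracy": 2.1, "clarity": -0.3},
    {"accuracy": 1.2, "clarity": 0.8},
    {"accuracy": 3.0, "clarity": 0.4},
]
print(gate_and_select(candidates, ["accuracy", "clarity"]))  # 1
print(gate_and_select(candidates, ["accuracy"]))             # 2
```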

Long-Term Applications

These opportunities require further research, scaling, standardization, or domain integration before broad deployment.

- Certified principle-driven LLMs for regulated domains (Healthcare; Finance; Legal; Public Sector)

- What: Standardized, auditable principle sets (e.g., “medical disclaimer present,” “no unverified diagnosis,” “risk disclosure intact”) with evidence-based compliance logs.

- How: Regulator-endorsed rubrics and test suites; formal reporting; third-party audits using RLBFF-style verifiers.

- Dependencies/assumptions: Standards (NIST/ISO-like) and legal acceptance; coverage of edge cases; domain-specific correctness verifiers layered on RLBFF.

- Continual principle mining and adaptive alignment from live feedback streams (Large Platforms; Enterprise Knowledge Ops)

- What: Real-time ingestion of user feedback to discover new principles, update inventories, and retrain reward models continuously.

- How: Streaming principle extraction, consensus filtering, drift detection, and safe deployment pipelines.

- Dependencies/assumptions: Privacy, consent, and governance; robust anti-hallucination measures; ops maturity for continuous retraining.

- Robust Generative Reward Models for non-correctness dimensions (AI Research; Model Providers)

- What: Close the performance gap seen in PrincipleBench by teaching GenRMs to reason about clarity, tone, formatting, repetition—not just correctness.

- How: New objectives, curricula, and datasets emphasizing non-STEM aspects; process reward models that “think” about style and structure.

- Dependencies/assumptions: Improved training recipes; better reasoning prompts; balancing latency vs. multi-step deliberation.

- Multi-principle optimization during generation (Enterprise Product; Personalization)

- What: Optimize jointly for multiple principles with tunable weights (e.g., 40% accuracy, 30% clarity, 30% brevity), exposing trade-offs to users and teams.

- How: Multi-objective RL or inference-time reranking blending several binary judgments into a composite score; interactive weight controls.

- Dependencies/assumptions: Stable aggregation strategy; explainability for trade-offs; avoidance of pathological behaviors across combined principles.

- Agent ecosystems negotiating principles with users and other agents (Enterprise Workflows; Multi-agent Systems)

- What: Agents propose, negotiate, and adhere to principle profiles appropriate for tasks (e.g., legal vs. marketing), with logs and accountability.

- How: Protocols for principle discovery, agreement, and enforcement; cross-agent auditing via RLBFF verifiers.

- Dependencies/assumptions: Standards for inter-agent coordination; user preference modeling; safeguards against gaming.

- Cross-domain, multilingual, and code-stack expansion (Global Products; Developer Tools)

- What: Principle libraries spanning 100+ natural languages and 20+ programming ecosystems, with localized evidence and QA.

- How: Large-scale data collection; domain experts to curate and validate principle sets; continuous benchmark expansion.

- Dependencies/assumptions: Data diversity and quality; consistent binary semantics across cultures/languages; localization workflows.

- Safety-critical monitoring in robotics and autonomous systems (Robotics; Automotive; Industrial IoT)

- What: Real-time principle monitors for instruction clarity, ambiguity avoidance, and procedural safety in language-driven control.

- How: On-device Scalar RM gating and alerts; layered verification with procedural checklists; incident logging.

- Dependencies/assumptions: Ultra-low latency; certification; mapping between language principles and physical behaviors.

- Education policy, accreditation, and assessment modernization (Education; EdTech)

- What: Principle-based evaluation of AI educational assistants (accuracy, step-by-step clarity, language level), integrated into accreditation rubrics.

- How: District or institution adoption; dashboards reporting per-principle performance; accommodations for diverse learners.

- Dependencies/assumptions: Fairness validation; bias audits; stakeholder acceptance of binary rubric framing.

- Energy and industrial maintenance assistants (Energy; Manufacturing; Field Service)

- What: Principles like “specific actionable steps,” “procedural safety acknowledged,” “no irrelevant details” for troubleshooting assistants.

- How: Domain-tuned RLBFF models; integration with EHS (environment, health, safety) policies; incident reviews with evidence citations.

- Dependencies/assumptions: Domain data and SMEs; principle mapping to safety standards; evaluation in field conditions.

- Marketplace/model cards with Principle Profiles (AI Platforms; Model Hubs)

- What: Standardized reporting of per-principle performance (e.g., accuracy, clarity, repetition avoidance) for model selection and procurement.

- How: Extend model cards with PrincipleBench metrics; platform-level filters and comparisons by principle.

- Dependencies/assumptions: Broad platform adoption; stable, accepted benchmarks; incentives for transparent reporting.
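The weighted multi-principle idea (e.g. 40% accuracy, 30% clarity, 30% brevity) can be prototyped as a weighted average of per-principle P(Yes) values, obtained by passing each log-odds score through a sigmoid. This is only one possible aggregation, assumed here for illustration; the paper does not propose one, and because the scores are not calibrated across principles, the weights would need empirical tuning.

```python
import math


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def composite_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of per-principle P(Yes) from log p(Yes) - log p(No) scores."""
    total = sum(weights.values())
    return sum(w * sigmoid(scores[p]) for p, w in weights.items()) / total


# Hypothetical scores for two responses
a = {"accuracy": 2.0, "clarity": 0.0, "brevity": -2.0}
b = {"accuracy": 0.0, "clarity": 2.0, "brevity": 2.0}

balanced = {"accuracy": 0.4, "clarity": 0.3, "brevity": 0.3}
accuracy_first = {"accuracy": 0.8, "clarity": 0.1, "brevity": 0.1}
print(composite_score(a, balanced) < composite_score(b, balanced))              # True
print(composite_score(a, accuracy_first) > composite_score(b, accuracy_first))  # True
```

Shifting weight toward accuracy flips which response wins, which is the kind of trade-off such controls would expose to users.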

Glossary

- Arena Hard v2: A challenging benchmark of hard, real-user prompts for general alignment of LLMs, scored by an LLM judge; it replaces the earlier, saturated Arena Hard v0.1. "Arena Hard v2 that was recently released to replace Arena Hard v0.1"

- binary rewards: A reward scheme that assigns either full reward or zero based on a verifier’s decision. "using binary rewards:"

- Bradley-Terry model: A pairwise preference model that estimates latent scores from comparative judgments. "a trained Bradley-Terry model produces scores"

- cosine similarity: A measure of angular similarity between embedding vectors, often used to cluster or match textual concepts. "cosine similarity 0.8"

- entailment task: An evaluation framing where the model judges whether a response satisfies a stated principle. "an entailment task (response satisfies or does not satisfy an arbitrary principle)"

- Fleiss' κ: A statistical coefficient for measuring inter-rater agreement among multiple annotators on categorical labels. "Fleiss' κ"

- Generative RMs (GenRMs): Reward models that generate reasoning before issuing a judgment, typically producing longer outputs. "Generative RMs (GenRMs)"

- generative verifiers: Models that judge correctness by generating verification logic instead of using fixed checks. "trains generative verifiers to judge whether an answer is correct."

- Group Relative Policy Optimization (GRPO): A reinforcement learning algorithm that optimizes policies using group-relative baselines. "GRPO (Group Relative Policy Optimization)"

- inter-rater agreement: The degree to which different human annotators agree on labels or judgments. "inter-rater agreement"

- JudgeBench: A benchmark evaluating reward models’ judging ability across knowledge, reasoning, math, and coding. "JudgeBench"

- KTO (Kahneman-Tversky Optimization): A binary-feedback formulation where general-domain responses are labeled good or bad without explicit criteria. "KTO"

- Likert scoring: An ordinal annotation scheme (e.g., 5-point scale) that can be hard to calibrate across annotators. "Likert scoring (e.g. 5-point scale)"

- Macro-avg: An averaging method that weights categories equally rather than by sample count. "Macro-avg across domains"

- micro-average: An averaging method computed over individual samples rather than per-category aggregates. "sample-level micro-average"

- MTEB: A standardized benchmark suite for evaluating text embedding models across many tasks. "MTEB"

- open-weight: Refers to models whose parameters are publicly available for use and fine-tuning. "Recent open-weight general-purpose LLMs"

- pairwise GenRM: A generative reward modeling setup that judges two responses side by side; because the responses are presented in a fixed order, it is exposed to position bias. "pairwise GenRM"

- position bias: Systematic preference caused by the order in which options are presented. "position bias"

- PrincipleBench: A benchmark that tests reward models on adherence to explicit, binary principles beyond correctness. "PrincipleBench"

- RapidFuzz: A Python library for fast approximate string matching used to validate evidence spans. "RapidFuzz string matching library"

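- rapidfuzz.partial_ratio: The RapidFuzz fuzzy-matching function (exposed as `rapidfuzz.fuzz.partial_ratio`) that scores, on a 0-100 scale, how well the shorter string matches the best-aligned substring of the longer one. "rapidfuzz.partial_ratio(feedback, text_span) "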

- RM-Bench: A benchmark focused on reward model performance across chat, math, code, and safety. "RM-Bench"

- Reinforcement Learning with Binary Flexible Feedback (RLBFF): The proposed approach combining human-like flexibility with verifiable, binary principles. "Reinforcement Learning with Binary Flexible Feedback (RLBFF)"

- Reinforcement Learning with Human Feedback (RLHF): An RL paradigm where human preference judgments guide model training. "Reinforcement Learning with Human Feedback (RLHF)"

- Reinforcement Learning with Verifiable Rewards (RLVR): An RL paradigm using rule-based correctness checks as rewards. "Reinforcement Learning with Verifiable Rewards (RLVR)"

- Reward Hacking: When models exploit spurious features to obtain high reward without truly improving quality. "Reward Hacking"

- Reward Models: Models that estimate the quality or preference score of responses to guide RL training. "Reward Models"

- Scalar RMs: Reward models that output compact scalar judgments (e.g., log p(Yes) − log p(No)) without lengthy reasoning. "Scalar RMs"

- verifiable instruction following: Tasks where compliance can be checked automatically against precise requirements. "verifiable instruction following"

- verifier: A rule- or model-based component that decides if a response is correct and awards reward accordingly. "a verifier will either give the response full reward"

- zero-shot prompt template: A prompting setup without examples, used to extract principles directly from feedback. "zero-shot prompt template"
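The difference between the macro- and micro-averages defined above matters when benchmark domains have very different sizes. The counts below are invented for illustration: micro-averaging is pulled toward the large domain, while macro-averaging gives the small domain an equal say.

```python
def micro_macro(results: dict[str, tuple[int, int]]) -> tuple[float, float]:
    """results maps domain -> (correct, total); returns (micro, macro) accuracy."""
    correct = sum(c for c, _ in results.values())
    total = sum(t for _, t in results.values())
    micro = correct / total  # every sample counts equally
    macro = sum(c / t for c, t in results.values()) / len(results)  # every domain counts equally
    return micro, macro


# Hypothetical results: a large, easy domain and a small, hard one
micro, macro = micro_macro({"chat": (90, 100), "safety": (5, 10)})
print(round(micro, 3), round(macro, 3))  # 0.864 0.7
```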
