- The paper introduces a Variance-Aware Sampling (VAS) method that leverages reward variance and trajectory diversity to stabilize RL for multimodal reasoning.

- It demonstrates that VAS improves both final performance and convergence speed, with the resulting MMR1 models achieving state-of-the-art results among reasoning-oriented multimodal models of comparable size on challenging reasoning benchmarks.

- The release of large-scale long chain-of-thought and RL QA datasets provides essential open resources for reproducible research and future RL advancements.

MMR1: Variance-Aware Sampling and Open Resources for Multimodal Reasoning

Introduction and Motivation

The MMR1 framework addresses two central challenges in the development of large multimodal reasoning models: (1) the scarcity of open, high-quality, long chain-of-thought (CoT) datasets for multimodal tasks, and (2) the instability of reinforcement learning (RL) post-training, particularly the gradient vanishing problem in Group Relative Policy Optimization (GRPO). The paper introduces Variance-Aware Sampling (VAS), a theoretically grounded data selection strategy that leverages reward variance and trajectory diversity to stabilize RL optimization. In addition, the authors release large-scale, curated datasets and open-source models, establishing new baselines for the community.

Variance-Aware Sampling: Theory and Implementation

The core methodological contribution is the VAS framework, which dynamically prioritizes training prompts that are most likely to induce high reward variance and diverse reasoning trajectories. Its theoretical underpinning is the Variance–Progress Theorem, which shows that the expected improvement in policy optimization is lower-bounded by the reward variance of the sampled prompts, and which holds for both REINFORCE and GRPO-style RL algorithms.
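For binary correctness rewards, the reward variance that such a bound depends on has a simple closed form. The identity below is the standard variance of a Bernoulli reward, not a restatement of the paper's theorem; $p(x)$, the policy's probability of answering prompt $x$ correctly, is notation assumed here:

```latex
\begin{aligned}
r &\in \{0, 1\}, \qquad \Pr[r = 1 \mid x] = p(x), \\
\operatorname{Var}[r \mid x] &= \mathbb{E}[r^2 \mid x] - \mathbb{E}[r \mid x]^2
  = p(x) - p(x)^2 = p(x)\bigl(1 - p(x)\bigr) \le \tfrac{1}{4}.
\end{aligned}
```

The bound is attained at $p(x) = 1/2$, and the variance is zero when $p(x) \in \{0, 1\}$, i.e. for prompts the policy always solves or always fails. A variance-based lower bound on progress therefore treats those prompts as uninformative.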

VAS operates by computing a Variance Promotion Score (VPS) for each prompt, defined as a weighted sum of:

- Outcome Variance Score (OVS): The empirical Bernoulli variance of correctness across multiple rollouts for a prompt, maximized when the model is equally likely to be correct or incorrect.

- Trajectory Diversity Score (TDS): A diversity metric (e.g., inverse self-BLEU or distinct-n) over the generated reasoning chains, capturing the variability in solution paths.
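The score definitions above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: it assumes OVS is the plug-in estimate $\hat p(1-\hat p)$ from $k$ rollouts, picks distinct-n over whitespace tokens as the TDS (one of the options the text names), and uses hypothetical weights `alpha` and `beta`.

```python
from typing import List


def outcome_variance_score(correct: List[bool]) -> float:
    """Empirical Bernoulli variance p_hat * (1 - p_hat) of rollout correctness."""
    if not correct:
        return 0.0
    p_hat = sum(correct) / len(correct)
    return p_hat * (1.0 - p_hat)


def distinct_n(texts: List[str], n: int = 2) -> float:
    """Unique n-grams divided by total n-grams, pooled over all rollouts (whitespace tokens)."""
    ngrams = []
    for text in texts:
        tokens = text.split()
        ngrams.extend(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    if not ngrams:
        return 0.0
    return len(set(ngrams)) / len(ngrams)


def variance_promotion_score(
    correct: List[bool],
    rollouts: List[str],
    alpha: float = 0.5,  # hypothetical OVS weight
    beta: float = 0.5,   # hypothetical TDS weight
    n: int = 2,
) -> float:
    """Hypothetical VPS: weighted sum of OVS and a distinct-n trajectory diversity score."""
    return alpha * outcome_variance_score(correct) + beta * distinct_n(rollouts, n)


if __name__ == "__main__":
    correct = [True, False, True, False]           # p_hat = 0.5, so OVS = 0.25
    rollouts = ["a b c", "a b d", "x y z", "a b c"]  # 5 unique of 8 bigrams, so TDS = 0.625
    print(variance_promotion_score(correct, rollouts))  # 0.4375
```

Note that OVS lies in $[0, 1/4]$ while distinct-n lies in $[0, 1]$, so in practice the weights (or a rescaling of OVS) determine how much each term actually influences the ranking.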

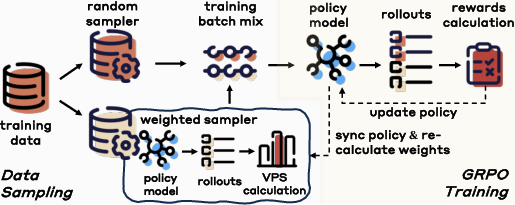

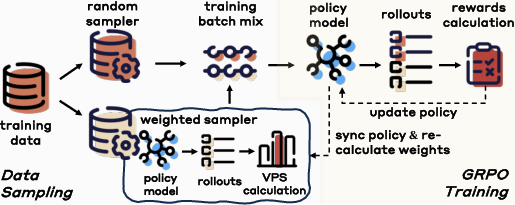

At each training step, the batch is constructed by sampling a fraction λ of prompts according to their VPS (favoring high-variance, high-diversity items) and the remainder uniformly at random to ensure broad coverage. VPS is periodically updated to reflect the evolving policy.

Figure 1: Overview of the Variance-Aware Sampling (VAS) framework.
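The mixed batch construction described above (a fraction λ drawn according to VPS, the rest uniformly) might look like the following sketch. Assumptions not taken from the source: prompts are drawn with replacement via `random.choices`, VPS-weighted draws are proportional to the raw score, and `build_batch` is a hypothetical helper name.

```python
import random
from typing import Dict, List


def build_batch(vps: Dict[str, float], batch_size: int, lam: float, rng: random.Random) -> List[str]:
    """Hypothetical mixed sampler: round(lam * batch_size) prompts drawn in proportion
    to VPS, the remainder drawn uniformly at random (both with replacement)."""
    if not vps:
        raise ValueError("no prompts to sample from")
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must lie in [0, 1]")
    prompts = list(vps)
    n_vas = round(lam * batch_size)
    weights = [max(vps[p], 0.0) for p in prompts]
    if sum(weights) > 0:
        vas_part = rng.choices(prompts, weights=weights, k=n_vas)
    else:
        # All scores are zero: fall back to uniform sampling for this part too.
        vas_part = rng.choices(prompts, k=n_vas)
    uniform_part = rng.choices(prompts, k=batch_size - n_vas)
    return vas_part + uniform_part


if __name__ == "__main__":
    rng = random.Random(0)
    scores = {"p1": 0.05, "p2": 0.40, "p3": 0.30}  # VPS values, refreshed periodically in training
    print(build_batch(scores, batch_size=8, lam=0.5, rng=rng))
```

The uniform share keeps every prompt reachable even when its current VPS is low, which matters because VPS is only refreshed periodically and a prompt's informativeness can change as the policy improves.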

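This approach directly mitigates the gradient vanishing problem in GRPO. Because GRPO normalizes each rollout's reward against the other rollouts sampled for the same prompt, a prompt whose rollouts all receive the same reward yields zero advantages and contributes no policy gradient, and prompts with low reward variance yield only weak gradients that impede learning progress. By maintaining a frontier of challenging and diverse prompts, VAS keeps informative gradient signals available throughout training.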
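The vanishing-gradient effect can be seen directly in the group-relative advantage. The sketch below assumes the common form $A_i = (r_i - \bar r)/(\sigma + \epsilon)$ with population standard deviation; implementations differ in the exact normalizer and in any KL term, which are not modeled here.

```python
import statistics
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """GRPO-style advantages: center each reward on the group mean, scale by the group std."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std (an assumption; some code uses sample std)
    return [(r - mean) / (std + eps) for r in rewards]


if __name__ == "__main__":
    # All rollouts correct: zero variance, so every advantage (and the policy gradient) is zero.
    print(group_relative_advantages([1.0, 1.0, 1.0, 1.0]))
    # Mixed outcomes: maximal Bernoulli variance, advantages close to +1 / -1.
    print(group_relative_advantages([1.0, 0.0, 1.0, 0.0]))
```

Since each rollout's contribution to the update is its advantage times the gradient of its log-probability, a zero-variance group adds nothing to the step, which is exactly the case VAS tries to avoid sampling.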

Empirical Results and Analysis

MMR1 is evaluated on a suite of challenging mathematical and logical reasoning benchmarks, including MathVerse, MathVista, MathVision, LogicVista, and ChartQA. The 7B-parameter MMR1 model achieves an average score of 58.4, outperforming all other reasoning-oriented multimodal LLMs (MLLMs) of comparable size. Notably, the 3B variant matches or exceeds the performance of several 7B baselines, demonstrating the efficiency of the VAS-driven training pipeline.

The ablation studies reveal that:

- Cold-start supervised fine-tuning on curated long CoT data provides a strong initialization, especially for complex reasoning tasks.

- RL post-training with GRPO further improves performance, but the addition of VAS yields the most substantial and consistent gains across all benchmarks.

- Both OVS and TDS are necessary: OVS increases expected reward variance, while TDS provides a lower bound on variance when correctness signals are sparse.

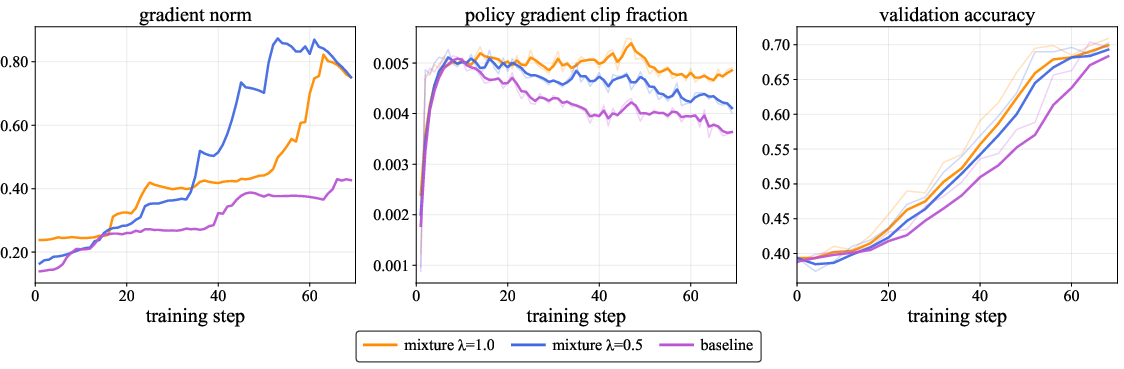

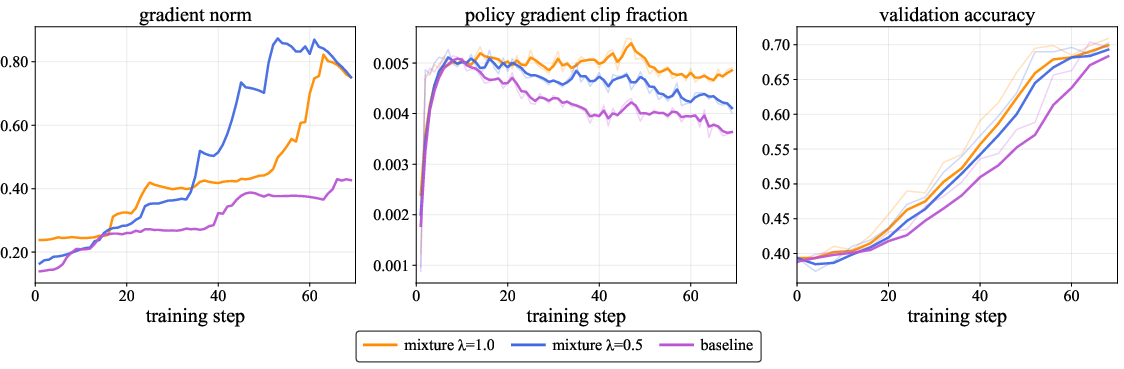

The training dynamics under VAS are characterized by higher gradient norms, increased policy-gradient clipping fractions, and faster convergence compared to uniform sampling.
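For readers unfamiliar with these diagnostics, the sketch below shows one common way they are computed: the clip fraction as the share of tokens whose importance ratio $\pi_\text{new}/\pi_\text{old}$ falls outside $[1-\epsilon, 1+\epsilon]$, and the gradient norm as the global L2 norm over all parameter gradients. These are generic PPO-style definitions assumed for illustration; the paper's exact logging code is not described here, and `clip_eps = 0.2` is a hypothetical value.

```python
import math
from typing import Sequence


def clip_fraction(logp_new: Sequence[float], logp_old: Sequence[float], clip_eps: float = 0.2) -> float:
    """Fraction of tokens whose ratio exp(logp_new - logp_old) lies outside [1 - eps, 1 + eps]."""
    ratios = [math.exp(n - o) for n, o in zip(logp_new, logp_old)]
    clipped = sum(1 for r in ratios if abs(r - 1.0) > clip_eps)
    return clipped / len(ratios)


def global_grad_norm(grads: Sequence[Sequence[float]]) -> float:
    """L2 norm over the concatenation of all parameter gradients."""
    return math.sqrt(sum(g * g for tensor in grads for g in tensor))


if __name__ == "__main__":
    old = [0.0, 0.0, 0.0, 0.0]
    new = [0.0, 0.0, math.log(1.5), math.log(0.5)]  # ratios 1.0, 1.0, 1.5, 0.5
    print(clip_fraction(new, old))                  # 0.5
    print(global_grad_norm([[3.0], [4.0]]))         # 5.0
```

Under this reading, a higher gradient norm and clip fraction indicate that each update moves the policy further, which is consistent with the more informative gradient signal VAS aims to provide.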

Figure 2: Training efficiency of Variance-Aware Sampling (VAS) compared to mixed and uniform sampling, showing improvements in gradient norm, clip fraction, and validation accuracy.

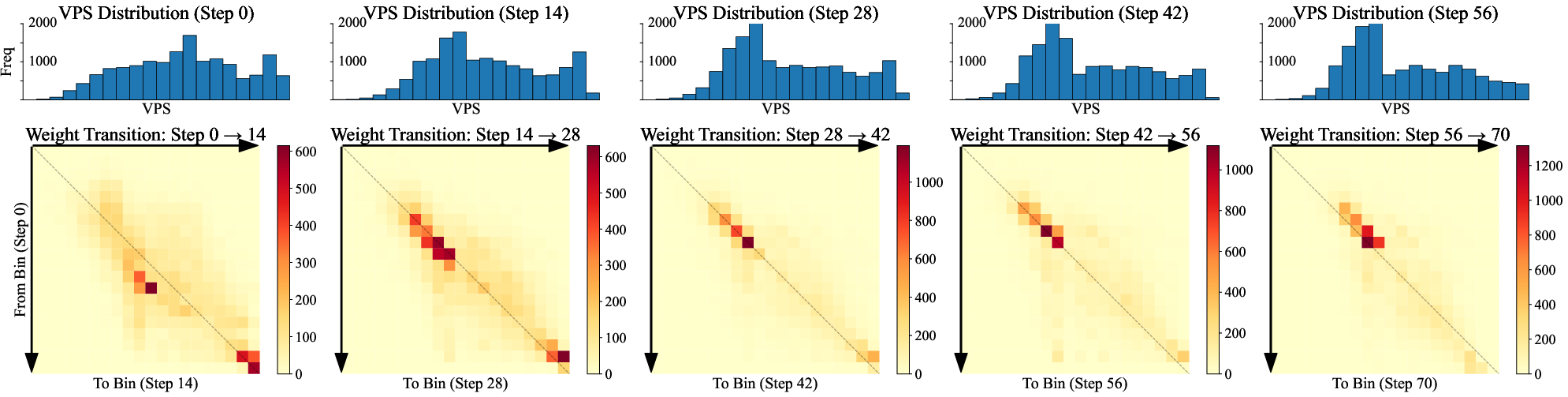

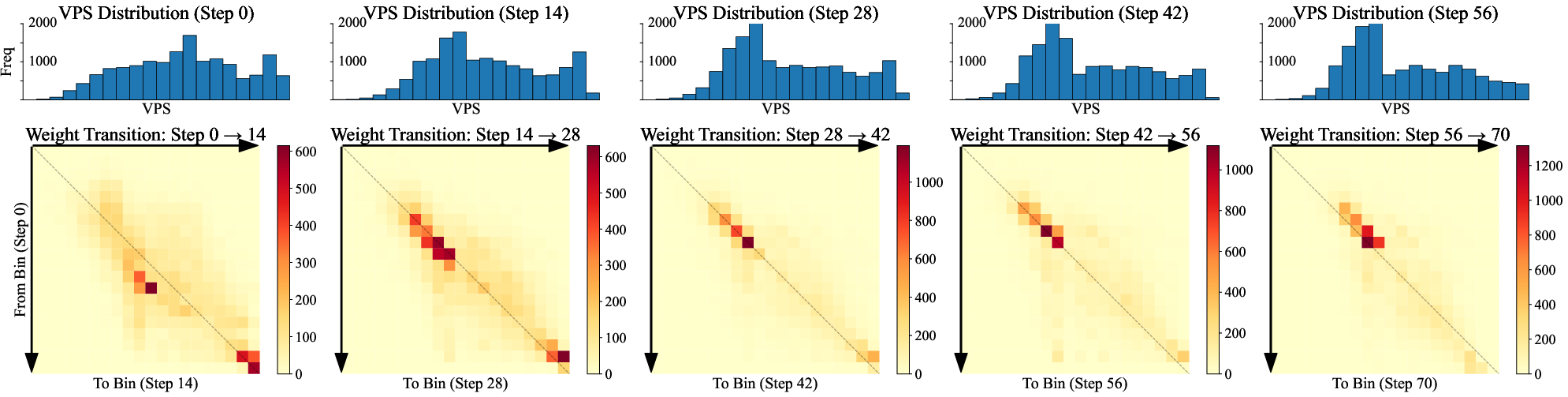

The evolution of VPS during training shows a convergence toward a stable set of frontier prompts, with adaptive reallocation as the model's competence improves. The distribution of VPS shifts from a broad, high-variance regime to a more compact, mid-variance regime as learning progresses.

Figure 3: Dynamics of Variance Promotion Score (VPS) during training, illustrating the stabilization and adaptation of prompt informativeness.

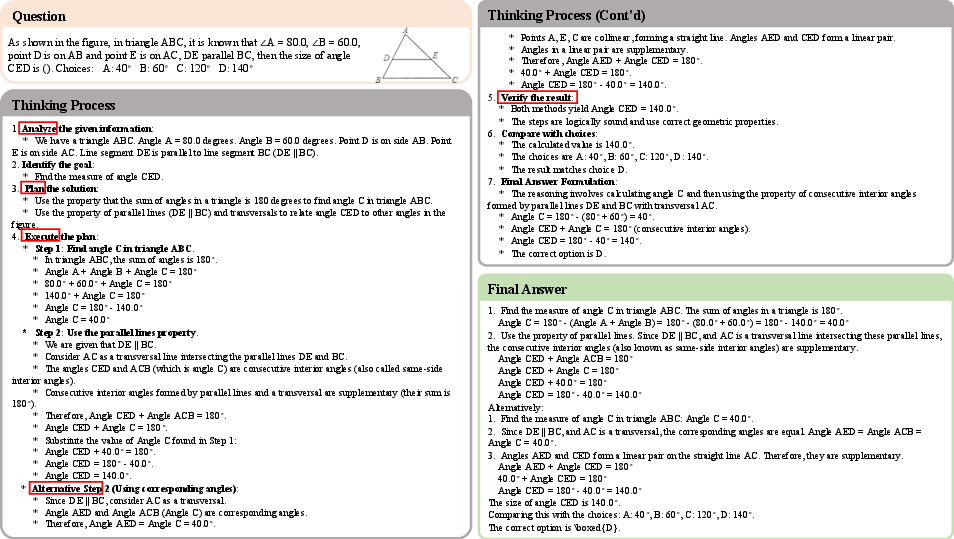

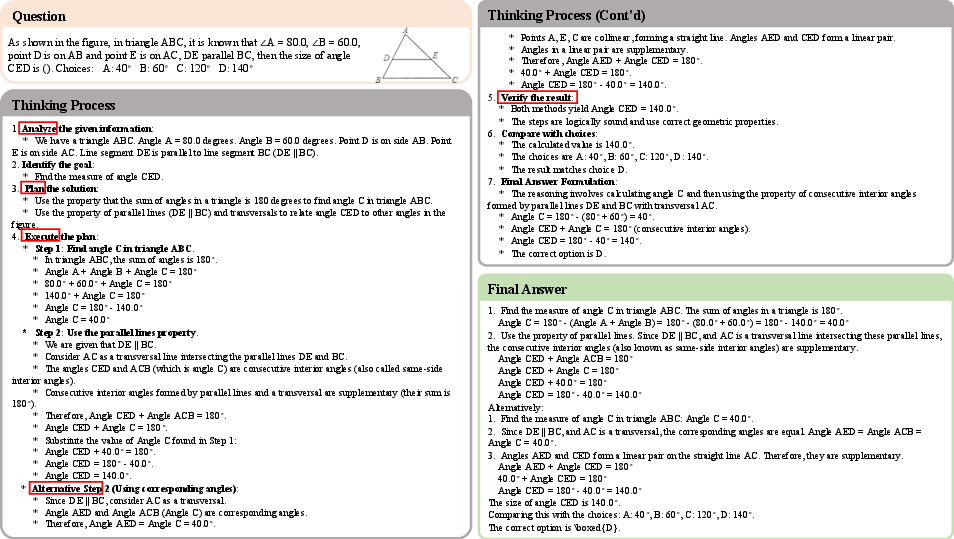

Qualitative analysis of MMR1's reasoning on MathVerse problems demonstrates logically structured, multi-step solutions, including verification and alternative approaches, indicative of robust and reflective reasoning capabilities.

Figure 4: Qualitative demonstration of MMR1’s reasoning process on a MathVerse problem, showing stepwise analysis, execution, verification, and alternative solution paths.

Data Curation and Open Resources

A significant practical contribution is the release of two large-scale, high-quality datasets:

- Cold-start CoT Dataset (~1.6M samples): Emphasizes long, verified chain-of-thought rationales across math, science, chart, document, and general domains. Data is filtered for correctness and difficulty, with hard and medium cases prioritized.

- RL QA Dataset (~15k samples): Focuses on challenging, diverse, and verifiable short-answer prompts, spanning fine-grained mathematical and logical reasoning categories.
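The correctness and difficulty filtering described for the cold-start data could be approximated as follows. This is a hypothetical sketch, not the authors' pipeline: it assumes each sample has a final answer extracted from its rationale, a reference answer, and a `pass_rate` from some reference model's rollouts, and the thresholds `hard_max = 0.3` and `easy_min = 0.7` are illustrative only.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Sample:
    question: str
    rationale_answer: str  # final answer extracted from the long CoT rationale
    reference_answer: str  # ground-truth answer
    pass_rate: float       # hypothetical: fraction of reference-model rollouts that solve it


def difficulty(pass_rate: float, hard_max: float = 0.3, easy_min: float = 0.7) -> str:
    """Hypothetical difficulty bucket from a pass rate."""
    if pass_rate < hard_max:
        return "hard"
    if pass_rate < easy_min:
        return "medium"
    return "easy"


def curate(samples: List[Sample]) -> List[Sample]:
    """Drop samples whose rationale answer is wrong, then order hard < medium < easy."""
    priority = {"hard": 0, "medium": 1, "easy": 2}
    correct = [s for s in samples if s.rationale_answer.strip() == s.reference_answer.strip()]
    return sorted(correct, key=lambda s: priority[difficulty(s.pass_rate)])


if __name__ == "__main__":
    data = [
        Sample("q1", "4", "4", 0.9),
        Sample("q2", "5", "6", 0.1),  # wrong rationale answer: filtered out
        Sample("q3", "7", "7", 0.1),
        Sample("q4", "2", "2", 0.5),
    ]
    print([s.question for s in curate(data)])  # ['q3', 'q4', 'q1']
```

Exact string matching is the simplest possible verifier; real math and chart answers typically need normalization or symbolic comparison before they can be judged correct.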

The authors also provide a fully reproducible codebase and open-source checkpoints for models at multiple scales, facilitating standardized benchmarking and further research.

Implications and Future Directions

The VAS framework provides a principled, data-centric approach to stabilizing RL post-training for multimodal reasoning models. By directly targeting reward variance and trajectory diversity, it complements algorithmic advances in RL and can be integrated with other techniques such as reward rescaling, entropy regularization, or curriculum learning. The empirical results suggest that VAS is robust to hyperparameter choices and scales effectively across the model sizes evaluated (3B and 7B).

The open release of high-quality, long CoT datasets and strong baselines addresses a critical bottleneck in the field, enabling reproducibility and fair comparison across future work.

Potential future directions include:

- Extending VAS to other domains (e.g., scientific reasoning, open-domain QA) and reward designs.

- Systematic integration with advanced RL algorithms and process-based reward models.

- Further analysis of the interplay between data-centric and algorithmic approaches to RL stability and sample efficiency.

Conclusion

MMR1 advances the state of multimodal reasoning by introducing a theoretically justified, empirically validated variance-aware sampling strategy and by releasing comprehensive open resources. The framework demonstrates that targeted data selection, grounded in reward variance and trajectory diversity, is essential for stable and effective RL post-training in complex reasoning tasks. The open datasets and models are poised to serve as a foundation for future research in multimodal reasoning and RL-based model alignment.